Introduction

There is little question that the forensic sciences are dynamic and that their dramatic growth parallels current advances in technology and system operation. DNA analysis and the computerization of fingerprints are but two examples. Forensic phonetics is also developing. It is defined as a professional specialty based upon the utilization of knowledge about human communicative processes and the application of the specialized techniques which result. As with the forensic sciences in general, the focus here is on law enforcement and the courts.

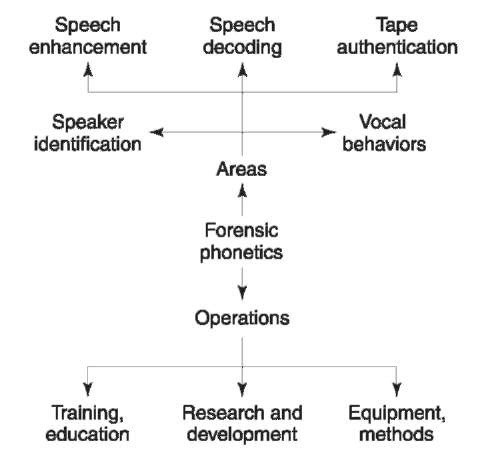

Forensic phonetics consists of electroacoustical analysis of speech signals that have been transmitted or stored, and the analysis of the communicative acts themselves. The first involves the proper transmission and storage of spoken exchanges, the authentication of tape recordings, the enhancement of speech on tape recordings, speech decoding and the like. The second area involves issues such as speaker identification and the process of analyzing speaking behaviors to obtain information relative to the emotional, physiological or psychological states of a talker. Figure 1 provides a visualization of the field. Note that the signal versus human analysis dichotomy has been dropped and five basic areas have been identified. Also listed are the specialized operations that lead to progress in the cited fields. Not included are several secondary areas; in those cases, forensic phonetics is usually interfaced with forensic linguistics (for language analysis) or audioengineering (for nonhuman signal analysis). They were not included in the figure, as they will not be discussed in this brief review. What is covered here will be problems with: (1) the integrity of captured utterances (and related), (2) the accuracy/completeness of messages, (3) the identification of the human producing the utterances, and (4) determination of the various physical states experienced by a person. These areas will be defined and the professional response discussed.

Figure 1 The nature and scope of forensic phonetics. The five content areas most basic to the specialty are listed in the upper third of the figure; the three major activities at the bottom. Not shown are any of the secondary or interface areas discussed in the text.

Authentication of Tape Recordings

The integrity of tape and/or video recordings is a serious matter, since any challenge to their validity suggests that someone has tampered with evidence or has falsified information. Yet tape recordings are quite vulnerable and changes to them can be either intentional or accidental. The purpose of authentication, then, is to determine if the target recordings can be considered valid and reliable or whether they distort what actually happened.

But, what is authenticity? To be authentic a tape recording must include the complete set of events that occurred and nothing must have been added, changed or deleted during the recording period or subsequently.

For purposes of this discussion, the focus will be on assessing analog tape recordings. Analysis of digital or video recordings is also based upon these procedures; however, specialized tests must be added. Evaluations are divided into two parts: the physical examination and signal assessment.

Physical examination

Five tasks are carried out prior to initiation of the physical examination:

1. A log should be structured.

2. A high-quality copy should be made.

3. The tape should be listened to for familiarization purposes.

4. The evidence pouch should be examined for openings.

5. The tape housing should be checked for identification marks.

The first assessment is to determine if the correct amount of tape is on the reel. Usually the manufacturer’s estimates are a little casual (closeness to the reel edge, the number of ‘minutes’ the reel or cassette contains). While small variations are of little importance, large ones suggest manipulation. The second examination is focused on the cassettes or reel. The housing is examined for pry marks, damage to the screw heads, etc. Negative ‘evidence’ here is cause for concern. Third, the tape must be examined for splices – either adhesive or heat. If any are found, it must be assumed that editing has taken place and the area around the splice must be carefully examined. Fourth, the recorder on which the tape purportedly was made must be examined and tested. The procedures here involve making a series of test recordings under conditions of quiet, noise and speech, and repeating them while serially operating all switches associated with the system. Later, evaluations will determine if the tape is actually the original. For example, the electronic signatures found on the tape must be the same as those produced by the equipment (that is, if the tape is to be judged authentic). They also permit determination of stop-and-start activity within the tape, over-recordings and so on. Finally, some of these issues can be assessed by additional methods. An example is where the ‘tracks’ made by the tape recorder drive mechanism are examined; another involves the magnetic recording patterns themselves (as seen by application of powders or solutions).

Laboratory examination

Once copies of the ‘originals’ are observed to be good duplicates, they may be taken to the laboratory for further tests. First, the recording is listened to critically and all questionable events are logged. This process is repeated 5-10 times. Questionable events include clicks, pulses, thumps, clangs, rings, crackles, etc. They also may involve loss of signal, abrupt shifts in ambient noise, shifts in amplitude, inappropriate noises, and so on. Apparent breaks in, or difficulty with, context, word boundaries or coarticulation must also be identified and recorded. Indeed, a tape recording must be rigorously examined for any event that could suggest it has been modified.

Once all of the questionable events have been located and logged, it is necessary to systematically identify and explain each of them. Most will be innocuous – for example, a telephone hang-up or a door closing; dropouts could result from change in microphone position or operation of interface equipment. On the other hand, any of these ‘events’ could signal a malicious modification.

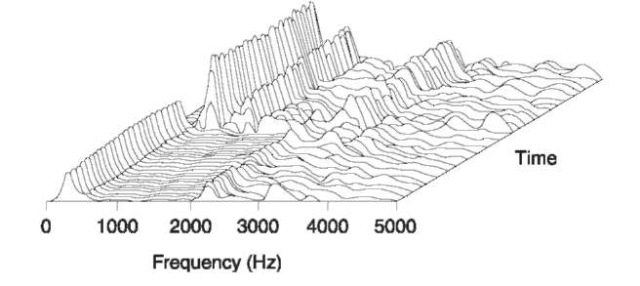

The procedures by which the questionable events can be explained vary from careful listening to complex signal analysis. Any number of procedures can be applied: time-amplitude, wave and/or spectral analyses are examples. In Fig. 2 an abrupt change in a waterfall-type spectrum signals that the tape has probably had a section removed. Even noise processing and flutter analysis can be useful. The goal is to identify and/or explain every questionable event that can be found on the tape. It is a long and tortuous process and, while most of the suspicious events will be found to be ‘innocent’, it is important not to miss evidence of manipulation. Finally, it must be remembered that even if it is demonstrated that the tape recording has been altered, the reason for such modification cannot be specified. It could have been either accidental or intentional; the examiner has no way of knowing.
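The idea of looking for an abrupt break in serial spectra can be illustrated with a minimal Python sketch. It is not the procedure used by any particular laboratory: the frame length, the distance measure, the median-based threshold and the function names `magnitude_spectrum` and `spectral_jumps` are all assumptions made for illustration. A flagged frame is only a questionable event to be logged and explained, not proof of editing.

```python
import cmath
import math
from statistics import median


def magnitude_spectrum(frame):
    """Naive DFT magnitudes (bins 0..N/2) of one Hann-windowed frame."""
    n = len(frame)
    windowed = [x * (0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)))
                for i, x in enumerate(frame)]
    return [abs(sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(windowed)))
            for k in range(n // 2 + 1)]


def spectral_jumps(signal, frame_len=64, threshold=5.0):
    """Return indices of frames whose spectrum differs sharply from the previous frame.

    Each spectrum is normalized to unit sum, and the Euclidean distance between
    consecutive spectra is compared with `threshold` times the median distance.
    Both `frame_len` and `threshold` are illustrative values, not recommendations.
    """
    frames = [signal[i:i + frame_len]
              for i in range(0, len(signal) - frame_len + 1, frame_len)]
    spectra = []
    for frame in frames:
        mags = magnitude_spectrum(frame)
        total = sum(mags) or 1.0
        spectra.append([m / total for m in mags])
    if len(spectra) < 2:
        return []
    dists = [math.dist(a, b) for a, b in zip(spectra, spectra[1:])]
    floor = max(median(dists), 1e-9)  # guard against an all-identical recording
    return [i + 1 for i, d in enumerate(dists) if d > threshold * floor]
```

Calling `spectral_jumps(samples)` on a list of audio samples returns the frame numbers at which the spectrum changes abruptly; each would then be examined by listening and by further signal analysis.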

Special analyses may be required; video tapes may have to be authenticated; the tape may be digital (DAT) or may have been ‘minimized’ (presumably to prevent the invasion of privacy). These problems are met by special techniques.

Finally, there are three reasons for testing the integrity of a tape recording: (1) to challenge its authenticity; (2) to defend it; or (3) simply to determine what happened. However, it must be stressed that the evaluations must be totally independent of the needs or desires of the agency that requests them. Indeed, all examinations must be conducted thoroughly and ethically.

Difficult Tapes and Transcripts

Tape recordings used for legal and law enforcement purposes are rarely of studio quality; indeed, they often are of severely limited fidelity. The two main sources of difficulty here are noise and distortion. In turn, these can be caused by the use of poor quality equipment, poorly trained operators and/or hostile environments. Equipment inadequacies include: limited frequency bandwidth, harmonic distortion, internal noise, amplitude limits, and so on. The most common sources are inadequate pickup transducers (microphones, ‘bugs’, telephones), poor quality recording equipment, very slow recording speeds, very thin tapes and/or inadequate transmission links. The second factor, operator error, is self-explanatory.

Figure 2 A waterfall plot of a rerecorded splice. Note the very abrupt break in the serial spectra.

Third, masked or degraded speech can result from environmental factors; they include: external ‘hum’, wind, automobile movement, fans/blowers, clothing friction. In other instances, speech is blocked by other talkers, music or ‘forensic’ noise. Whatever the cause or causes, the events must be analyzed and compensatory actions taken; that is, if reasonably good transcripts are to accrue.

Enhancing speech

Basically, the two remedies are speech enhancement and decoding, but they are preceded by the log, tape duplication and listening to the tape for familiarization purposes.

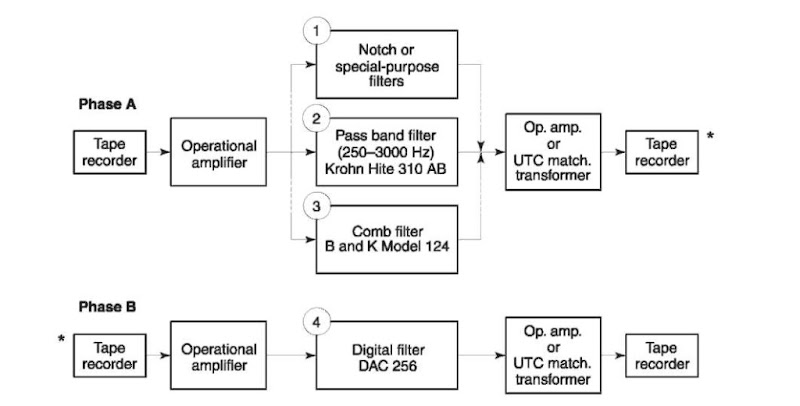

The first process involves filtering. If there is a substantial amount of noise at either (or both) the extreme lows and highs, speech may be enhanced by subjecting the tape recording to a band-pass filter with an appropriate frequency range (i.e. 300-3500 Hz). Second, if a spectrum analysis shows that a noise source is producing a relatively narrow band of high energy at a specific frequency, a notch filter may be employed. Third, comb filters can help when noise exists within the frequency range for speech. They can be used to modify continuously the spectrum of a signal by selectively attenuating relatively narrow frequency bands throughout its range. Of course, not very much filtering can be carried out here as speech elements can be removed along with the unwanted sounds. Finally, it must be stressed that these procedures are best carried out with digital filters or with systems that have been developed to compensate for multiple noise problems (Fig. 3). In any event, digital filters (either hardware or software) are advantageous, as distortion is reduced to a minimum and rebound cannot occur.
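As a rough illustration of the band-pass and notch filtering described above, the following Python sketch builds both from second-order digital sections. The coefficient formulas are the widely circulated ‘Audio EQ Cookbook’ biquad designs, chosen here for brevity; they are an assumption of this example and not the filters of the system shown in Fig. 3. The function names, the default Q values and the cascade of one high-pass and one low-pass section are likewise illustrative.

```python
import math


def biquad(b, a, samples):
    """Apply one second-order IIR section (direct form I); a[0] is assumed to be 1."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b[0] * x + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


def design(kind, freq_hz, fs_hz, q=math.sqrt(0.5)):
    """Return normalized (b, a) coefficients for a low-pass, high-pass or notch section."""
    w0 = 2 * math.pi * freq_hz / fs_hz
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / (2 * q)
    if kind == "lowpass":
        b = [(1 - cos_w0) / 2, 1 - cos_w0, (1 - cos_w0) / 2]
    elif kind == "highpass":
        b = [(1 + cos_w0) / 2, -(1 + cos_w0), (1 + cos_w0) / 2]
    elif kind == "notch":
        b = [1.0, -2 * cos_w0, 1.0]
    else:
        raise ValueError(f"unknown filter kind: {kind}")
    a0 = 1 + alpha
    a = [1.0, -2 * cos_w0 / a0, (1 - alpha) / a0]
    return [c / a0 for c in b], a


def band_pass(samples, fs_hz, low_hz=300.0, high_hz=3500.0):
    """Band-pass built as a high-pass at low_hz followed by a low-pass at high_hz."""
    samples = biquad(*design("highpass", low_hz, fs_hz), samples)
    return biquad(*design("lowpass", high_hz, fs_hz), samples)


def notch(samples, fs_hz, center_hz, q=10.0):
    """Attenuate a narrow band around center_hz, e.g. a steady hum."""
    return biquad(*design("notch", center_hz, fs_hz, q), samples)
```

For example, `notch(band_pass(samples, 8000), 8000, 60.0)` would first limit a recording sampled at 8000 Hz to roughly the 300-3500 Hz band and then suppress a 60 Hz component. The slopes of such second-order sections are gentle, which is in keeping with the caution that heavy filtering removes speech along with noise.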

Since binaural listening is helpful, the equipment should be organized to permit stereo. Further, variable-speed tape recorders also can be useful. There are two kinds of systems here; each is used to produce a different effect. With the first type, a manual increase or decrease of tape recorder speed is possible. These units are especially useful in situations where the speed of the original recording was varied for some reason. The second type of recorder has compensatory circuits that allow the perceived speech to appear undistorted. Finally, there are yet other techniques that can be useful. Filling short dropouts with thermal noise is one. Yet others (sophisticated techniques such as linear predictive coefficients, deconvolution, bandwidth compression, etc.) can be helpful. However, at present, they are of limited value in the ordinary forensic situation as they are quite complex, time-consuming and expensive.

Figure 3 A block diagram demonstrating how analog and/or digital filtering can be accomplished. The three analog filters (1-3) can be used singly or cascaded; however, they would not be employed in parallel (as shown). A typical configuration would be to place a comb filter and a digital filter (both isolated) in a series. *For single phase use, remove these tape recorders and one operational amplifier.

Speech decoding and transcripts

Speech enhancement, of course, is only the preliminary step in the development of optimum transcripts. Very often specialists must be employed to make them. These individuals are professionals who can deal effectively with factors such as voice disguise, dialects (and/or foreign languages), very fast speech, multiple speakers, stress, psychosis, fear, fatigue, intoxication, drugs and health states. These, and other problems, can make the decoding task quite demanding. But what approaches are useful here?

As would be expected, intense listening procedures are required, as is the use of the best tapes possible and equipment with good fidelity (including binaural headphones). Initial concentration on relatively easy-to-decode portions of the tape can aid in developing perceptual cues for problem areas. For example, it is well known that words have an effect upon one another, that context aids decoding and that determination of one of the words in a two-word series often can aid in the correct identification of the other.

Decoding can sometimes be aided by the use of graphic displays of the speech signal. Particularly useful to this approach are sound spectrograms of the time-frequency-amplitude class. For example, displays of this type can be useful in estimating the vowel used (from the formants), the consonant used from among several possible, and the consonant spoken by comparison of its energy distributions to identifiable productions. As would be expected, a variety of techniques are available to assist the specialist with the decoding process.

Finally, basic knowledge about, and skills in applying, many of the phonetic and linguistic realities relative to language and speech are also quite helpful. For example, a basic understanding of the nature and structure of vowels, consonants and other speech elements is necessary. So too is a good understanding of word boundaries, word structure, dialect, linguistic stress patterns and paralinguistic elements of speech (fundamental frequency, vocal intensity, phoneme/ word duration, and so on). Especially useful is a thorough understanding of coarticulation and its effects on words and phrases. In short, speech/language systems can be differently employed for purposes of decoding.

Decoding mechanics

Just as the approaches to the decoding process must be highly organized, so too must the reporting structure. That is, it is not good enough to just write down the words and phrases heard (and verified); it also is necessary to explain what went on during the entire recording period and what is missing. Codes are useful here but they must be used consistently and systematically. A couple of examples should suffice. If sections are found where the speech is audible but not intelligible, more information must be provided than simply ‘inaudible’. Rather, it might look like this ‘(10 seconds of inaudible speech by talker A)’ or ‘(6-8 words of unintelligible response)’. Another requisite is to include all the events: ‘Talker A: “Help me.” (footsteps, door closing, two gunshots, loud thump)’.

Once the decoding process has been completed and confusions resolved, the transcript can be structured in final form and carefully evaluated for errors. As many prosecutors and defense attorneys have discovered (sometimes to their distress), it is only those transcripts that are accurate and reliable that can be effectively utilized in the courtroom.
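One way to keep transcript codes consistent is to generate them from structured entries rather than typing them freehand. The Python sketch below is only an illustration of that idea; the class names (`Utterance`, `Unintelligible`, `Events`), the `render` function and the exact wording of the codes are hypothetical and would be adapted to whatever conventions a given laboratory has adopted.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Utterance:
    talker: str
    text: str


@dataclass
class Unintelligible:
    talker: str
    amount: str  # free-text extent, e.g. "10 seconds" or "6-8 words"


@dataclass
class Events:
    sounds: List[str]  # non-speech events in the order they were heard


def render(entry):
    """Format one transcript entry with a fixed, repeatable wording."""
    if isinstance(entry, Utterance):
        return f'Talker {entry.talker}: "{entry.text}"'
    if isinstance(entry, Unintelligible):
        return f"({entry.amount} of unintelligible speech by talker {entry.talker})"
    if isinstance(entry, Events):
        return "(" + ", ".join(entry.sounds) + ")"
    raise TypeError(f"unsupported transcript entry: {entry!r}")


def render_transcript(entries):
    """Join rendered entries, one per line."""
    return "\n".join(render(e) for e in entries)
```

Because every code passes through `render`, a given kind of event is always described with the same wording throughout the transcript.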

Speaker Identification

Speaker identification should not be confused with speaker verification. The second of these two processes (verification) results when a known and cooperative person wishes to be recognized by means of an analysis of a sample of his or her speech. In this case, exemplars have already been made available and ‘reference sets’ have been developed for the talker. Speaker verification techniques are most useful to industry, government and the military; they are only occasionally of use to law enforcement agencies. Moreover, since speaker identification is, by far, the more difficult task of the two, any technique that will work for identification purposes should work even better for verification. In any case, speaker identification is where attempts are made to identify an individual from his or her speech when he or she is unknown and when anyone within a relatively open-ended population could have been the talker. Considerable research has been carried out on this issue; it has focused on three areas: (1) aural/perceptual approaches, (2) ‘voiceprints’ and (3) machine/computer approaches. Only the first and third of these will be reviewed, as ‘voiceprints’ have been shown to be inadequate to the task (and in the extreme) and have been pretty much discarded (even in the United States). Hence, it would not appear useful to outline an invalid technique in this very short review.

At least primitive efforts in voice recognition probably antedate recorded history; they have continued down through the millennia. But what is happening these days? Courtroom testimony is common but most of it is by some type of listener. Some courts permit witnesses to testify (about the identity of a speaker) only if they are able to satisfy the jurist that they ‘really know’ that person. Many courts permit qualified specialists to render opinions. Here, a sample of the unknown talker’s speech (usually an evidence tape) must be available, as must an exemplar tape recording of the suspect. The professional carries out an examination and then decides if the two tapes contain, or do not contain, the voice of a single person. Finally, a third type, here, involves earwitness line-ups or ‘voice parades’. They will be considered first.

Earwitness identification

An earwitness line-up (or voice parade) is ordinarily conducted by the police. They ask the witness to listen to the suspect’s exemplar incorporated in a group of 5-6 recorded speech samples obtained from other people. Ordinarily, the witness is required to listen to all the samples and make a choice as to which one is the perpetrator. However, this approach has come under fire and research is currently being carried out to assess its validity. Moreover, guidelines for auditory line-ups are being developed. Basically, an earwitness line-up is defined as a process where a person who has heard, but not seen, a perpetrator attempts to pick his or her voice from a field of voices. As a procedure, it must be conducted in a scrupulously fair manner. Detailed criteria relative to the procedures involved are now available.

Aural-perceptual approaches

As would be expected, a great deal of research has been carried out in an attempt to discover the nature and dimensions of speaker identification when conducted by listeners. A substantial number of the relationships here are now reasonably well understood. Briefly, correct speaker identification is enhanced if:

• the listener knows the speaker.

• listeners’ familiarity with the talker’s speech is reinforced occasionally.

• high-fidelity samples are available.

• the speech samples are large and varied.

• noise masking is minimal.

• those listeners who are naturally quite good at the task are used.

• listeners use the ‘natural’ characteristics found within speech/voice.

• examiners are phoneticians cross-trained in forensics.

Naturally, negatives here will tend to degrade identification accuracy.

Use of specialists

It is clear that forensic phoneticians are able to assist attorneys and law enforcement personnel with speaker identification tasks. Indeed, there now are a number of them who can demonstrate valid analysis techniques, superior identification rates and extensive graduate training in the phonetic sciences and especially in forensic phonetics.

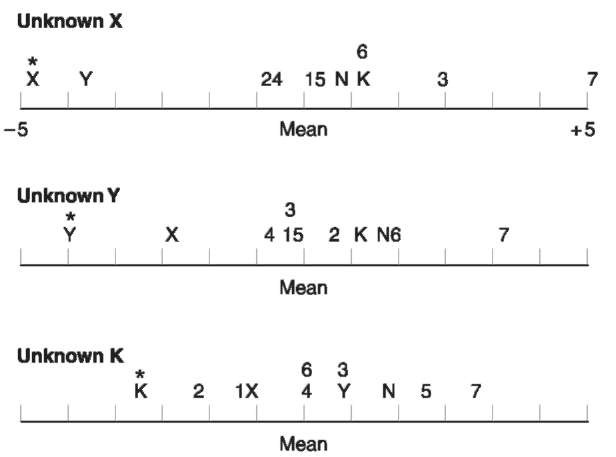

Many phoneticians attempt to determine identity by assessing speech segmentals (i.e. speech sounds). Such procedures are usually successful if the practitioner establishes a robust set of criteria to follow, is reasonably well trained and is experienced in this type of analysis. But an even more powerful technique is one that is rigorously structured and uses both supra-segmentals (assessment of voice, prosody, frequency patterns, vocal intensity, quality and so on) plus the segmentals. This approach is illustrated in Fig. 4.

The method cited involves obtaining multiple speech samples of the unknown (evidence tape) and known (exemplar) speakers and placing them in pairs on an evaluation tape. The pairs are played repeatedly and comparisons are made of one speech parameter at a time (Fig. 4). Each characteristic (pitch patterns, for example) is evaluated continually until the judgment is made. The next parameter is then assessed and the process replicated until all possible comparisons have been completed. At that time, an overall judgment is made and a confidence level generated. Ordinarily, the entire process is independently repeated several times over a period of several days.

If the overall range of scores falls between 0 and 3, a match cannot be made and the samples were probably produced by two different people. If the scores fall between 7 and 10, a reasonably robust match is made. Scores between 4 and 6 are generally neutral but are actually a little on the positive side. Incidentally, if foils are used in the process, the confidence level is substantially enhanced, that is, if the mean scores polarize (i.e. 0-3 or 7-10).

Score sheet (0 = U-K least likely; 10 = U-K most likely)

| Parameter | Score range |
|---|---|
| 1. Pitch | |
| (a) Level | 0 …. 5 …. 10 |
| (b) Variability | 0 …. 5 …. 10 |
| (c) Patterns | 0 …. 5 …. 10 |
| 2. Voice quality | |
| (a) General | 0 …. 5 …. 10 |
| (b) Vocal fry | 0 …. 5 …. 10 |
| (c) Other | 0 …. 5 …. 10 |
| 3. Intensity | |
| (a) Variability | 0 …. 5 …. 10 |
| 4. Dialect | |
| (a) Regional | 0 …. 5 …. 10 |
| (b) Foreign | 0 …. 5 …. 10 |
| (c) Idiolect | 0 …. 5 …. 10 |
| 5. Articulation | |
| (a) Vowels | 0 …. 5 …. 10 |
| (b) Consonants | 0 …. 5 …. 10 |
| (c) Misarticulations | 0 …. 5 …. 10 |
| (d) Nasality | 0 …. 5 …. 10 |
| (e) | 0 …. 5 …. 10 |
| 6. Prosody | |
| (a) Rate | 0 …. 5 …. 10 |
| (b) Speech bursts | 0 …. 5 …. 10 |
| (c) | 0 …. 5 …. 10 |
| 7. Other | |
| (a) Speech disorders | 0 …. 5 …. 10 |
| (b) | 0 …. 5 …. 10 |
| Mean | |

Figure 4 A form useful in the recording of scores resulting from aural-perceptual speaker identification evaluations. Each parameter is rated on a continuum ranging from 0 (definitely two individuals) to 10 (the samples are produced by a single person). The resulting scores are summed and converted to a percentage which, in turn, can be viewed as a confidence level estimate. U-K, unknown-known.
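The arithmetic behind the score sheet can be sketched in a few lines of Python. The example below averages the 0-10 ratings, converts their sum to a percentage of the maximum possible, and applies the bands given in the text (0-3, 4-6 and 7-10). The function name `summarize_scores`, the band labels and the treatment of means that fall between the stated bands (for instance 3.5) are assumptions of this sketch; the text does not say how such values are handled.

```python
from statistics import mean


def summarize_scores(ratings):
    """Summarize a score sheet.

    ratings: dict mapping a parameter name (e.g. "pitch level") to a 0-10 score.
    Returns (mean score, percentage of maximum, interpretation band).
    """
    values = list(ratings.values())
    if not values or any(not 0 <= v <= 10 for v in values):
        raise ValueError("each rating must lie between 0 and 10")
    avg = mean(values)
    percent = 100.0 * sum(values) / (10 * len(values))
    if avg <= 3:
        band = "probably different speakers"
    elif 4 <= avg <= 6:
        band = "neutral (slightly positive)"
    elif avg >= 7:
        band = "reasonably robust match"
    else:
        # Means between 3 and 4, or between 6 and 7, are not covered by the text.
        band = "between bands (not defined)"
    return avg, percent, band
```

In practice the whole evaluation is repeated independently several times, so a function of this kind would be applied to each session and the resulting range of scores examined.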

Reasonable amounts of data now are available about people’s ability to make aural-perceptual speaker identifications. Even though it has some limitations, fairly good results can be expected if the task is highly structured and if the auditors are well trained professionals who are able to demonstrate good competency.

Machine/computer approaches

The speaker recognition issue changes radically when efforts are made to apply modern technology to the problem. Indeed, with the seemingly limitless power of electronic hardware and computers, it would appear that solutions are but a step away. Yet such may not be the case. While the problem is simple, attempts to solve it are complex and messy. Most of the effort here has gone into speaker verification (described above), which is neat, clean and much simpler. None the less, it is necessary to address the identification problem.

A good way to develop a machine-based system is to establish a structure and test it. For example, a group of vectors or relationships could be chosen and then researched, as shown in Fig. 5. The process proceeds step-by-step to assessments of multiple vectors, distorted speech, field assessment, etc. While there may be no (single) vector which is robust enough to permit efficient identification under any and all conditions, a profile approach usually is effective. A number of potentially useful programs in the speaker identification area have been initiated; however, even though some were promising, virtually none of them was sustained. An exception is the SAUSI (semiautomatic speaker identification system) program being carried out at the University of Florida.

The first step was to identify and evaluate a number of speech parameters which might make effective identification cues. It was discovered early that traditional approaches to signal processing appeared lacking; hence, natural speech features were adopted. This decision was supported by results from early experiments, the aural-perceptual literature and the realization that people routinely process heard sounds using these very features. They included speaking fundamental frequency (SFF), voice quality (LTS), vowel quality (VFT) and temporal patterning or prosody (TED); several others were tried but proved lacking.

Figure 5 A structured research approach for the development of computer-based speaker identification systems. a, Plus others; b, illustrative only, as each vector will be studied in all possible combinations.

A second perspective also emerged: it was noted that no single vector seemed able to provide high levels of correct identification for all the degraded speech encountered. Hence, those being studied were normalized, combined into a single unit and then organized as a two-dimensional continuum or profile. This rather harsh procedure addressed the severe limitations imposed upon the identification task by the forensic model (i.e. one referent; one test sample within a field of competing samples), as the research design forced matches (or nonmatches) from a fairly large collection of voices (6-25 in number). After the profile is generated, the process is replicated several times. The final continuum usually consists of the data from 3-5 rotations and includes a summation of all vectors. Hence, any decision made about identity is based on several million individual comparisons (factors, parameters, vectors, rotations).
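The general idea of normalizing several vectors and summing them into one profile can be shown with a toy Python sketch. This is not the SAUSI algorithm, whose internals are not described here: the min-max normalization, the simple unweighted sum, the function name `profile` and the input layout are all assumptions chosen to make the principle concrete.

```python
def profile(distances):
    """Rank candidates by their summed, normalized distance to the unknown sample.

    distances: {vector_name: {candidate: raw distance to the unknown}}.
    Each vector is min-max normalized to the range 0..1 across candidates, so
    that no single vector dominates, and the normalized values are summed.
    Returns a list of (candidate, total) pairs, closest candidate first.
    """
    totals = {}
    for per_candidate in distances.values():
        lo = min(per_candidate.values())
        hi = max(per_candidate.values())
        span = (hi - lo) or 1.0  # avoid division by zero if all values are equal
        for candidate, d in per_candidate.items():
            totals[candidate] = totals.get(candidate, 0.0) + (d - lo) / span
    return sorted(totals.items(), key=lambda item: item[1])
```

With one referent and a field of competing samples, the candidate at the head of the returned list is the one lying closest to the unknown on the combined continuum; repeating the procedure with a different referent corresponds loosely to the rotations described above.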

Figure 6 is a practical illustration of how this procedure works. The evaluation here (a real-life case) involved two unknowns (X,Y), two knowns (K,N) and a number of foils. The unknown X was selected as himself (as necessary for validity), with the data for unknown Y placing him very close. The knowns and foils were in a different part of the continuum. Additional rotations compared both unknown Y and known K to the rest. What is suggested here is that the two unknowns were probably the same speaker but that none of the knowns or foils were either X or Y. Case outcome provided (nonscientific) support for this judgment.

A summary of the more recent experiments which support this approach is given in Table 1. The first of these (1988) involved a large number of subjects but only laboratory samples (high fidelity). Note that none of the individual vectors provided 100% correct identification. The second part of this project (not shown) was designed to test the proposition that SAUSI would eliminate a known speaker if he was not also the unknown; it did so and at a level of 100% correct elimination. The second set of experiments (1993) involved a large number of subjects, plus separate replications for high fidelity, noise and telephone passband. Here, the upgrading of the vectors resulted in marked improvement for all conditions. Finally, the SAUSI vectors were further upgraded and replications of the 1993 experiments were run. As can be seen, correct identifications were strikingly higher for all conditions and the correct identification level reached 100% for all summed rotations. In short, it appears that machine-based approaches to speaker identification are feasible.

Figure 6 An example taken from a real-life investigation but one where the unknowns (X and Y) may or may not be the same person and where K and N are the suspects. Note that, in each of the three rotations, the target talker (starred) was found to best match himself.

Table 1 Summary data from three major experiments evaluating the SAUSI vectors under three conditions

| Conditions and study | Vectors | | | | |
|---|---|---|---|---|---|
| | TED | LTS | SFF | VFT | Sum |
| High fidelity | | | | | |
| Hollien (1988) | 62 | 88 | 68 | 85 | 90 |
| Hollien et al. (1993) | 63 | 100 | 100 | 100 | 100 |
| Jiang (1995) | 82 | 100 | 80 | 100 | 100 |
| Noise | | | | | |
| Hollien et al. (1993) | 64 | 90 | 77 | 92 | 100 |
| Jiang (1995) | 76 | 94 | 76 | 96 | 100 |
| Telephone passband | | | | | |
| Hollien et al. (1993) | 55 | 92 | 90 | 88 | 98 |
| Jiang (1995) | 58 | 100 | 90 | 96 | 100 |

Vocal Behaviors

A number of psychological or physiological states can be deduced (sometimes) by speech and voice analysis. Of these, two have been selected for brief review. They are psychological stress and intoxication.

While determining how a person is feeling just from hearing his or her voice is not something which is very easy to do, there are times when there is little else to go on; hence, this area is quite important.

Stress

First, it should be noted that the term ‘stress’ denotes a negative psychological state, but is it fear, anger or anxiety? A reasonable definition of stress would appear to be that it is a ‘psychological state which results as a response to a perceived threat and is accompanied by the specific emotions of fear and anxiety’.

It has long been accepted that listeners can identify some emotions (including stress) from speech samples alone, and do so very well. If this is true, what are some of the vocal correlates of this psychological state? First, increases in pitch or speaking fundamental frequency appear to correlate with stress increments. However, if this relationship is to be functional, the subject’s baseline data should ordinarily be available, as the behavior actually appears as a shift from the norm. Second, while frequency variability is often cited as a correlate of stress, it actually is a poor predictor. Third, vocal intensity is another acoustic parameter that may correlate with psychological stress; however, the data here are a little ‘mixed’. Nevertheless, the best evidence is that vocal intensity tends to increase with stress. Fourth, while identification of the prosodic speaking characteristics related to stress is a fairly complex process, the temporal pattern of fewer speech bursts appears to correlate with it. Finally, an important recent finding is that speaker nonfluencies appear to increase sharply with stress.

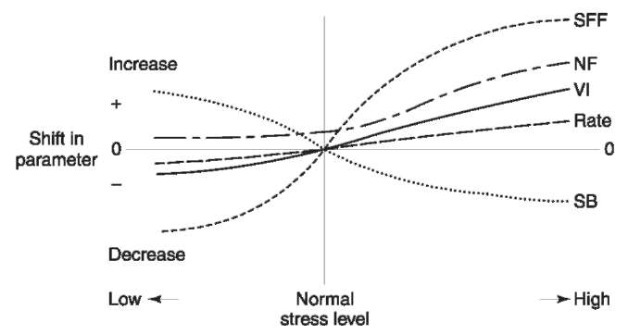

A predictive model of the vocal correlates of stress has been developed (Fig. 7). As may be seen, changes occur in speaking fundamental frequency, nonfluencies, vocal intensity, speech rate and the number of speech bursts; however, it should be remembered that information of this type will be of greatest value when it can be contrasted with reference profiles for that person’s normal speech.
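Because the model is most useful when contrasted with a reference profile for the same speaker, the comparison can be sketched as a set of relative shifts from baseline. The Python example below is a hedged illustration only: the expected directions follow the correlates listed in the text (SFF, nonfluencies and vocal intensity rising; speech bursts falling), while the function name `stress_shifts`, the input layout and the use of a simple relative difference are assumptions. It describes shifts; it does not decide whether a talker is stressed.

```python
# Direction of change the model associates with stress: +1 = increase, -1 = decrease.
EXPECTED_DIRECTION = {
    "SFF": +1,  # speaking fundamental frequency
    "NF": +1,   # nonfluencies
    "VI": +1,   # vocal intensity
    "SB": -1,   # number of speech bursts per unit of time
}


def stress_shifts(baseline, sample):
    """Compare a speech sample with the same speaker's baseline measures.

    baseline, sample: dicts keyed by "SFF", "NF", "VI", "SB" with positive values.
    Returns {measure: (relative shift, True if the shift is in the expected direction)}.
    """
    report = {}
    for name, direction in EXPECTED_DIRECTION.items():
        shift = (sample[name] - baseline[name]) / baseline[name]
        report[name] = (shift, shift * direction > 0)
    return report
```

A result in which all four measures move in the expected direction is consistent with the model, but without baseline data for the talker the same absolute values would be uninterpretable.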

The psychological stress evalvator It would be a mistake to leave this area without some reference to

Figure 7 Model of the most common shifts in the voice and speech of a person who is experiencing psychological stress.SFF, speaking fundamental frequency; NF, nonfluencies; VI, vocal intensity; SB, number of speech bursts per unit of time.

‘voice stress analyzers’. These devices, it is claimed, can be used to detect both stress and lying. It is without question but that the legal, law enforcement, intelligence and related agencies would benefit greatly from the availability of an effective method for the detection of stress and, especially, deception. Taking deception first: can lies be detected by any means at all? is there any such thing as a lie response? Perhaps Lykken has articulated the key concept here. He argues that, if lies are to be detected, there must be some sort of a ‘lie response’, a measurable physiological or psychological event that always occurs. He correctly suggests that, until a lie response has been identified and its validity and reliability have been established, no one can claim to be able to measure, detect and/or identify falsehoods on anything remotely approaching an absolute level. But has such a lie response been isolated? Simple logic can be used to test this possibility. For example, consider what would happen if it were possible to determine the beliefs and intent of politicians simply from hearing them speak. There would be no need for trials by jury as the guilt or innocence of anyone accused of a crime could be determined simply by asking them: ‘Did you do it?’ Consider also the impact an infallible lie detection system would have on family relationships! The answer seems clear.

Yet the voice analysts claim they can detect falsehoods and do it with certainty. They market a number of ‘systems’ for that purpose. How do these devices work? Unfortunately, it is almost impossible to answer this question as the claims are quite vague. One explanation is that the systems utilize the microtremors of a human’s muscles. Such microtremors do exist in the long muscles of the body; however, there is very little chance that they either exist in (or can affect) the antagonistic actions of the numerous and complexly interacting respiratory, laryngeal and vocal tract muscles. Indeed, there is substantial evidence that they do not. And is the presence of stress equivalent to lying in the first place? A myriad of such questions can be asked but, at present, there are virtually no valid data to support the claims of the voice stress evaluators; rather, the great preponderance of the evidence demonstrates that they are quite invalid. Indeed, it appears that the psychological stress evaluator (PSE) is an even greater fraud than are voiceprints.

Alcohol-speech relationships

Almost anyone who is asked to do so probably will describe the speech of an inebriated talker as ‘slurred’, ‘misarticulated’ or ‘confused’. But do commonly held stereotypes of this type square with reality and with the results of research? More importantly, are there data which suggest that it is possible to determine a person’s sobriety solely from analysis of his or her speech? This is an important question, yet only limited research has been reported.

The rationale for an intoxication-speech link is clear-cut. Since both cognitive function and sensori-motor performance can be impaired, so too can the speech act, which results from operation of a number of high-level integrated systems (sensory, cognitive, motor). Moreover, it can be argued (from research) that articulation is degraded, speech rate slowed and perception of impairment raised as intoxication increases. Degradations in morphology and/or syntax have also been reported, as have articulatory problems. Perhaps more importantly, it has been found that:

• speaking fundamental frequency level is changed and its variability increased;

• speaking rate often is slowed;

• the number and length of pauses are often increased;

• amplitude or intensity levels are sometimes reduced;

• nonfluencies are markedly increased.

The basic problem with virtually all of the relationships cited is that they are variable and the reasons for this are not clear. Moreover, any number of other behavioral states – stress, fatigue, depression, effort, emotions and speech/voice disorders – can complicate attempts to determine intoxication level from speech analysis. And such determinations might not be possible in the first place unless the target utterances can be compared to that person’s speech when sober.

Having recognized the confusions and contradictions associated with the intoxication-speech dilemma, a team at the University of Florida developed a research program focused on resolution of these conflicts. New approaches designed to induce acute alcohol intoxication were employed; here, subjects received doses of 80-proof rum or vodka mixed with both a soft drink (orange juice, cola) and Gatorade. The subjects drank at their own pace but breath alcohol concentration levels (BrAC) were measured at 10-15 min intervals. The approach was efficient, with nausea and discomfort sharply reduced, serial measurements permitted and intoxication level highly controlled. Moreover, large groups could be (and were) studied, with subjects participating in all procedures related to their experiment. Data were taken at ‘windows’ or intoxication levels (ascending or descending), including (among others) BrAC 0.00 (sober), BrAC 0.04-0.05 (mild), BrAC 0.08-0.09 (legal) and BrAC 0.12-0.13 (severe). Subjects were carefully selected on the basis of 27 behavioral and medical criteria. After training, they were required to produce four types of speech at each intoxication level. Included were: (1) a standard 98-word oral reading passage, (2) articulation test sentences, (3) a set of diadochokinetic gestures, and (4) extemporaneous speech. As may be deduced from these descriptions, very careful and precise procedures were carried out for all conditions and levels. Analyses included auditory processing by listeners (drunk-sober, intoxication level, etc.), acoustic analysis of the signal, and various classification/sorting (behavioral) tests.
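The ‘window’ idea can be illustrated with a short sketch that maps a serial BrAC reading to one of the four windows named above. The function name, the tolerance and the decision to return nothing for readings between windows are assumptions made for illustration; the text notes that other windows were also used, and they are not modeled here.

```python
# Windows named in the text: (label, lower BrAC bound, upper BrAC bound).
BRAC_WINDOWS = [
    ("sober", 0.00, 0.00),
    ("mild", 0.04, 0.05),
    ("legal", 0.08, 0.09),
    ("severe", 0.12, 0.13),
]


def brac_window(reading, tol=1e-9):
    """Return the label of the window containing `reading`, or None if it
    falls between windows (i.e. no speech sample would be taken yet).

    `tol` is a hypothetical allowance for floating-point rounding only.
    """
    for label, low, high in BRAC_WINDOWS:
        if low - tol <= reading <= high + tol:
            return label
    return None


def readings_in_windows(serial_readings):
    """Keep only the (reading, label) pairs that land inside a window."""
    labelled = ((r, brac_window(r)) for r in serial_readings)
    return [(r, label) for r, label in labelled if label is not None]
```

With readings taken every 10-15 min, a helper of this kind would flag the moments at which the four speech tasks are to be recorded, on either the ascending or the descending limb.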

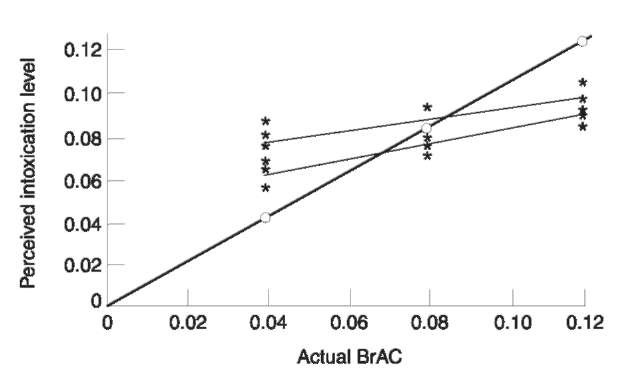

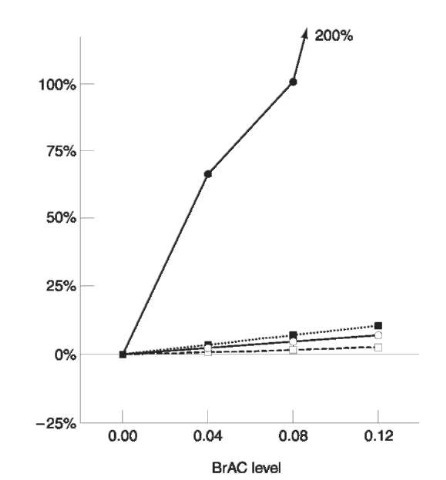

A number of relationships have already emerged. First, it appears that auditors tend to overestimate speaker impairment for individuals who are only mildly (to moderately) intoxicated. On the other hand, they tend to underestimate the level of involvement for subjects who are severely intoxicated (Fig. 8). Second, it appears possible to simulate accurately rather severe levels of intoxication, and even reduce the percept of intoxication if an inebriated individual attempts to sound sober. Moreover (and surprisingly), there seem to be only minor gender differences and few-to-none for drinking level (light, moderate, heavy). Perhaps the most powerful data so far are those observed for large groups of subjects in the ‘primary’ experiments (Fig. 9). As can be seen, they show shifts for all of the speaking characteristics measured except vocal intensity. Note also that speaking fundamental frequency (heard pitch) is raised with increases in intoxication level – a relationship which was noted by clinicians but not by previous researchers. Perhaps the most striking relationship of all is that between nonfluencies and intoxication level. The correlation here is a very high one and the pattern seen in Fig. 9 has been confirmed. While some variability exists, the predictable relationships are that speech is slowed down as intoxication increases and the number of nonfluencies rises sharply for the same conditions.

Figure 8 Perceived intoxication level contrasted to the physiologically measured levels (45° line with circles) from sober to severely intoxicated (BrAC 0.12-0.13). Four studies are combined for the top set (35 talkers, 85 listeners) and two for the lower (36 talkers, 52 listeners). Note the overreaction to the speech of people who are mildly intoxicated and underrating of those who are seriously inebriated.

Figure 9 The shifts in several speech parameters as a function of increasing intoxication. The increase in F0 and reduction in speaking rate (increased duration) are actually statistically significant. However, they are dwarfed by the dramatic shift in nonfluencies. O, F0 (SFF); ■, duration; •, nonfluencies; □, vocal intensity.
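The listener pattern summarized in Fig. 8 amounts to a signed difference between perceived and measured intoxication. The sketch below shows one way such a bias could be tabulated. It assumes that listener ratings have already been converted to the same scale as BrAC, which the text does not specify; the function names and the tolerance are likewise hypothetical.

```python
def estimation_bias(measured_brac, perceived_level, tolerance=0.005):
    """Classify one listener judgement against the measured level.

    Both values are assumed to be on the same (BrAC-like) scale.
    """
    diff = perceived_level - measured_brac
    if diff > tolerance:
        return "overestimate"
    if diff < -tolerance:
        return "underestimate"
    return "match"


def mean_bias_by_window(judgements):
    """Average the signed bias (perceived minus measured) per window.

    judgements: iterable of (window_label, measured, perceived) tuples.
    Returns a dict mapping window_label -> mean signed bias.
    """
    diffs = {}
    for label, measured, perceived in judgements:
        diffs.setdefault(label, []).append(perceived - measured)
    return {label: sum(values) / len(values) for label, values in diffs.items()}
```

A positive mean for the mild window together with a negative mean for the severe window would correspond to the overestimation and underestimation described above.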

In Summary

The new forensic sciences subspecialty of forensic phonetics can be seen as a dynamic and growing area. Some of its elements are quite well established, while others need refinement. Of course, it must be stressed that, just as with all relatively new fields, much remains to be learned about what can and cannot be accomplished by application of the methods and procedures proposed or in use. Fortunately, appropriate baseline materials have been, or are being, developed by relevant practitioners and scientists in both America and Europe.