MacAdam Ellipses and MPCDs

One other graphical depiction of the non-uniformity of the xy diagram can be seen via MacAdam ellipses, which are experimentally derived groupings or small areas describing indistinguishable colors. In other words, the average viewer sees all colors within the area as the same color. The ellipses, then, define the distance corresponding to a “just-noticeable difference” (JND) in that particular area of the chart. (That they are referred to as “ellipses” is due to their elongated appearance only; they are not mathematically defined.) The size and shape of these areas when drawn on the xy diagram (shown in Figure 3-12, with the ellipses at roughly 10x their actual size, for visibility) dramatically demonstrate the perceptual nonuniformity of this space. The concept of a just-noticeable difference, or as it is sometimes called, a minimum-perceivable color difference (MPCD), is also used to define an incremental change or distance on a chromaticity diagram. For example, it is common to see the standard D65 illuminant referred to as “6500 K + 8 MPCD”, as its location does not lie exactly on the 6500 K point of the black-body locus.

Figure 3-12 MacAdam ellipses, also known as “color ovals”, sketched on the 1931 CIE xy diagram. Each ellipse (shown here at 10x actual size) encloses colors that will be perceived by the average viewer as the same; i.e., the boundary of each ellipse represents the locus of colors that are just barely noticeable as being different from the center color. Note that this shows the xy chart to be perceptually non-uniform, as the ellipses are not the same size in all parts of this space.

The Kelly Chart

One additional variation on the standard chromaticity diagrams should be mentioned before we move off this subject. In 1955, K. L. Kelly, of the US National Bureau of Standards (now NIST, the National Institute of Standards and Technology), defined a set of standard names for regions of the chromaticity diagram. The Kelly chart, while not itself a new color space definition, does permit the approximate location of colors within the existing standard spaces to be quickly identified. Originally, of course, the Kelly chart was a variation of the standard CIE xy diagram, but since the u v diagram is simply a rescaling of the xy space, the same regions can be mapped to that chart as well. The name “Kelly chart” is often misinterpreted as applying to the basic xy or u v diagrams themselves, rather than simply the versions of these with the named regions overlaid.
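As a rough illustration of how a point (or the corners of a named region) on the xy diagram can be carried over to the other chart, the sketch below assumes that the "u v" diagram in question is the CIE 1976 u′v′ diagram and uses the usual projective formulas for that conversion. The function name and the D65 coordinates used in the example are illustrative choices, not something taken from the text above.

```python
def xy_to_uv_prime(x, y):
    """Map CIE 1931 (x, y) chromaticity to CIE 1976 (u', v').

    Assumption: the target chart is the 1976 u'v' diagram. The older
    1960 uv diagram uses 6*y in place of 9*y for the second coordinate.
    """
    denom = -2.0 * x + 12.0 * y + 3.0
    return 4.0 * x / denom, 9.0 * y / denom


# Commonly quoted xy coordinates for the D65 white point (2-degree observer).
D65_XY = (0.3127, 0.3290)

u_prime, v_prime = xy_to_uv_prime(*D65_XY)
print(f"D65: u' = {u_prime:.4f}, v' = {v_prime:.4f}")
```

Because every point maps one-to-one, a region boundary drawn on the xy chart can be redrawn on the second chart by converting its vertices in this way.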

Encoding Color

From the standpoint of one concerned with the supposed subject of this topic – display interfaces – we still have not made it to the key question, which is how color information may be conveyed between physically or logically separate devices. As long as the image samples (pixels) were themselves simply one-dimensional values within a two- or three-dimensional sampling space, the interface could be expected to be relatively straightforward. Pixel data would be transmitted sequentially, in whatever scanning order was defined by the sampling process used, and as long as some means was provided for distinguishing the samples, the original sampled image could easily be reconstructed. This is, of course, precisely how monochrome (“single-color”, but generally meaning “luminance only”) systems operate.

Introducing color into the picture (no pun intended) clearly complicates the situation. As has been shown in the above discussion, color is most commonly considered, when objectively quantified, as itself three-dimensional. The labels assigned to these three dimensions, and what each describes, can vary considerably (hue, saturation, value; red, green, and blue; X, Y, Z; and so forth), but at the very least we can expect to now require three separate (or at least separable) information channels where before there was but one. In the most obvious approaches, this will generally mean a tripling of the physical channels between image source and display, and along with this a tripling of the required – and available – total channel capacity. In many applications, this increase in required capacity or “bandwidth” is intolerable; some means must be developed for adding the color information without significantly increasing the demands on the physical channel, and/or without breaking compatibility with the simpler luminance-only representation.

In general, such systems are based on recognizing that a considerable amount of the information required for a full-color image is already present in a luminance-only sample. Therefore, the original luminance-only signal of a monochrome scheme is retained, and to this are added two additional signals that provide the information required to derive the full-color image.

These added signals are viewed as providing information representing the difference between the full-color and luminance-only representations, and so are generically referred to as “color-difference” signals. This term is also used to refer to signals that represent the difference between the luminance channel and the information obtained from sampling the image in any one of the three primaries in use in a given system. Another common term used to distinguish these additional channels is chrominance, which is somewhat inaccurate as a description but which leads to such encoding systems being referred to as “luminance-chrominance” (or “Y/C”, since “Y” is the standard symbol for the luminance channel) types. Thus, we can generally separate color interface standards into two broad categories – those which maintain the information in the form of three primary colors throughout the system (as in the common “red-green-blue,” or “RGB,” systems), and those which employ some form of luminance/chrominance encoding. It is important to note, however, that practically all real-world display and image-sampling devices (video cameras, scanners, etc.) operate in a “three-primary” space. Use of a luminance/chrominance interface system almost always involves conversions between that system and a primary-based representation (RGB or CMY/CMYK) at either end.
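The conversion at either end of a luminance/color-difference link can be sketched as follows. This is a minimal illustration only: the luminance weights are an assumption (the values used by ITU-R BT.601, chosen here because they are widely quoted), the function names are hypothetical, and real systems also scale, offset, and gamma-correct these signals in ways not shown.

```python
# Assumed luminance weights for red, green, and blue (ITU-R BT.601 values).
# The text does not name a particular standard; these are illustrative.
KR, KG, KB = 0.299, 0.587, 0.114


def rgb_to_luma_diff(r, g, b):
    """Encode RGB as luminance Y plus two color-difference signals."""
    y = KR * r + KG * g + KB * b
    return y, r - y, b - y


def luma_diff_to_rgb(y, r_minus_y, b_minus_y):
    """Recover RGB at the display end from Y, (R - Y), and (B - Y)."""
    r = y + r_minus_y
    b = y + b_minus_y
    # Green is not transmitted; solve the luminance equation for it.
    g = (y - KR * r - KB * b) / KG
    return r, g, b


# A monochrome receiver would use only the first value of this triple.
encoded = rgb_to_luma_diff(1.0, 0.0, 0.0)   # 100% red
decoded = luma_diff_to_rgb(*encoded)
print(encoded, decoded)
```

Only two difference signals are needed because the third primary can always be recovered from the luminance equation, which is why such schemes add two channels rather than three to the original monochrome signal.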

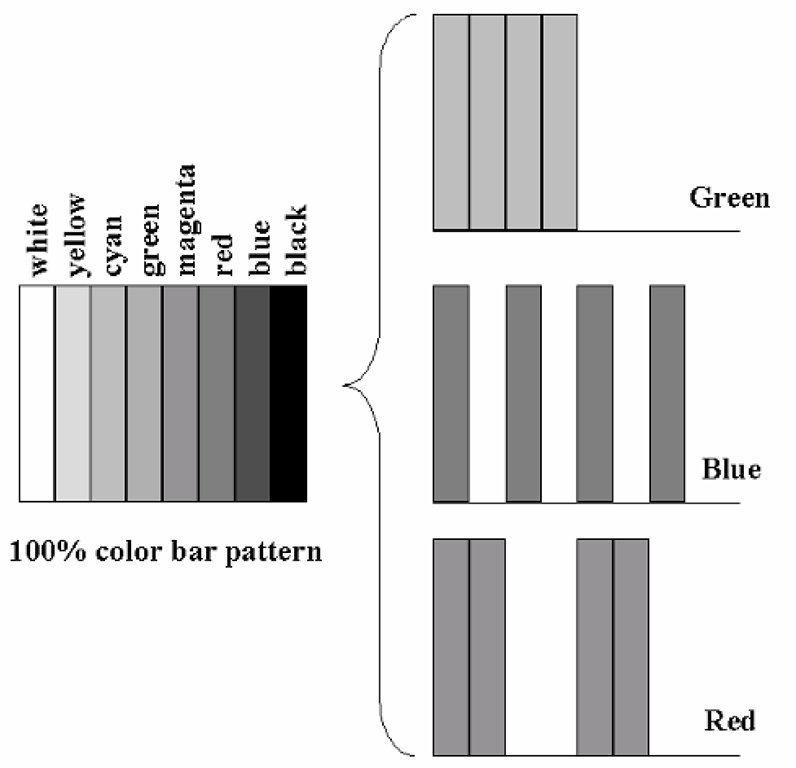

One further division of possible color-encoding systems has to do with how the three signals are transmitted in time. If we consider the operation of an image-sampling device – a camera, for instance – which is intended to provide full-color information, we might view each sample point or pixel as being a composite of three separate samples. In the most obvious example, a camera is actually producing independent red, green, and blue values for each pixel. (In practice, this is most often achieved through what is in effect three cameras in one, each viewing the scene through a primary-color filter.) We can say that the complete image is made up of pixels with three values each (RGB pixels), or we could equally well view this as generating three separate images – one in red, one in green, and one in blue – which must be combined to recover the image of the full-color original (Figure 3-13). This seems to be a trivial distinction, but in fact there are display technologies, as well as image-capture devices, which use each of these. Many display types provide physically distinct pixels which are, in fact, composed of three separate primary-color elements. Other displays generate completely separate red, green, and blue images, and then present these to the viewer such that the desired full-color image is perceived. This leads to two very distinct methods for transmitting color data.

Figure 3-13 The separation of a color image into its primary components. Here, the standard color bar pattern (commonly used in television), consisting of various combinations of the three primaries (each at 100% value when used), is broken down into three single-color images or fields. This sort of color separation process is common in image capture, display, and printing systems, as these often deal with imagery through separate primary channels, sensors, and display devices. Recombining the three fields, in proper alignment, reproduces the original full-color image.
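The two views of the same data described above (one image of three-valued pixels, or three single-color images) can be illustrated with a short sketch. The data layout (a list of rows of `(r, g, b)` tuples) and the function names are assumptions made for this example; the single row used is the familiar 100% color bar sequence.

```python
def split_fields(image):
    """Separate an image of (r, g, b) pixels into red, green, and blue fields."""
    red = [[px[0] for px in row] for row in image]
    green = [[px[1] for px in row] for row in image]
    blue = [[px[2] for px in row] for row in image]
    return red, green, blue


def combine_fields(red, green, blue):
    """Recombine three aligned single-color fields into (r, g, b) pixels."""
    return [
        list(zip(r_row, g_row, b_row))
        for r_row, g_row, b_row in zip(red, green, blue)
    ]


# One row of color bars: white, yellow, cyan, green, magenta, red, blue, black.
BARS = [[(1, 1, 1), (1, 1, 0), (0, 1, 1), (0, 1, 0),
         (1, 0, 1), (1, 0, 0), (0, 0, 1), (0, 0, 0)]]

red, green, blue = split_fields(BARS)
print("red  :", red[0])
print("green:", green[0])
print("blue :", blue[0])
print("round trip ok:", combine_fields(red, green, blue) == BARS)
```

Recombination only reproduces the original when the three fields are aligned sample for sample, which is the software analogue of the "proper alignment" requirement noted in the caption.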

If the display is of the former type, presenting the red, green, and blue information for each pixel simultaneously but through elements that are physically separated, it is said to be of the spatial color type. The three primary-color images are presented such that the eye sees them simultaneously, occupying the same image plane and location, and so the appearance of a full-color image results. However, it is also possible to present the three primary-color images in the same location but separated in time. For example, the red image might be seen first, then the green, and then the blue. If this sequence is repeated rapidly enough, the eye cannot distinguish the separate color images (called fields), and again perceives a single full-color image. This is referred to as a sequential color display system, or sometimes as field-sequential color.

Obviously, the interface requirements for the two are considerably different. In the spatial-color types, the information for the red, green, and blue values for each pixel must be transmitted essentially simultaneously, or at least sufficiently close in time such that the display can recover all three and present them together. This is commonly achieved through the use of separate, dedicated channels for each primary color (Figure 3-14a). With a sequential-color display, however, all of the information for each single-color field must be transmitted in the proper order, and only then will the information for the next color be sent. This can be implemented through any number of physical channels, but the fields themselves will be serialized in time (Figure 3-14b).

Figure 3-14 Transmission of the color fields. Many display systems employ spatial color techniques, or other types in which the three primary-color fields may be transmitted and processed simultaneously, in parallel (a). Some, however, employ a field-sequential method (b), in which the separate primary fields are transmitted in a sequence, often over a single physical channel.
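The difference between the two transmission orders can be made concrete with a small sketch. It treats each field as a flat list of samples in scan order; the function names and the labeled-sample format are assumptions for illustration and do not correspond to any particular interface standard.

```python
def parallel_stream(red, green, blue):
    """Spatial color: one (r, g, b) triple per pixel time, as if carried
    on three dedicated channels at once."""
    for triple in zip(red, green, blue):
        yield triple


def field_sequential_stream(red, green, blue):
    """Field-sequential color: the whole red field, then the whole green
    field, then the whole blue field, serialized on one channel."""
    for label, field in (("R", red), ("G", green), ("B", blue)):
        for sample in field:
            yield label, sample


# Three tiny fields of two samples each (values are arbitrary).
red, green, blue = [1, 2], [3, 4], [5, 6]

print(list(parallel_stream(red, green, blue)))
print(list(field_sequential_stream(red, green, blue)))
```

In the sequential case the receiver sees three times as many sample periods per full-color image on its single channel, and it cannot present a complete pixel until the last field has arrived.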

In the next topic, the last of the “fundamentals” section of this topic, an overview of the most popular current display technologies, along with some promising future possibilities, is presented. With that review completed, we will have examined each of the separate components of the overall display system, and be ready to move to the main topic – the interfaces between these components. Of particular interest, however, will be how each display technology, and each of the various display-interface systems, handles the encoding of color, and the limitations on color reproduction that these methods create.