(k1, k2)-design:

An unbalanced design in which two measurements are made on a sample of k2 individuals and only a single measurement is made on a further k1 individuals.

Kalman filter:

A recursive procedure that provides an estimate of a signal when only the ‘noisy signal’ can be observed. The estimate is effectively constructed by putting exponentially declining weights on the past observations with the rate of decline being calculated from various variance terms. Used as an estimation technique in the analysis of time series data.
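A minimal sketch, assuming the simplest scalar case (a "local level" model): the observed value is the signal plus noise with variance r, and the signal itself follows a random walk whose steps have variance q. The names `kalman_local_level`, `q`, `r`, `m0` and `p0` are illustrative, not part of the entry.

```python
def kalman_local_level(ys, q, r, m0=0.0, p0=1e6):
    """Filter a noisy series under a random-walk-plus-noise model.

    ys     : observations, y_t = signal_t + noise with noise variance r
    q      : variance of the random-walk step in the signal
    m0, p0 : initial signal estimate and its (large, i.e. vague) variance
    Returns the filtered estimates and the Kalman gains used.
    """
    m, p = m0, p0
    estimates, gains = [], []
    for y in ys:
        p = p + q              # predict: the signal may have drifted by a step of variance q
        k = p / (p + r)        # gain: weight given to the new observation
        m = m + k * (y - m)    # update: move the estimate toward the observation
        p = (1.0 - k) * p      # variance of the updated estimate
        estimates.append(m)
        gains.append(k)
    return estimates, gains


est, gains = kalman_local_level([5.0, 5.2, 4.9, 5.1, 5.0], q=0.01, r=1.0)
print([round(e, 3) for e in est])
print([round(g, 3) for g in gains])
```

Once the gain settles to a constant k, the update m ← m + k(y − m) is exponential smoothing: past observations receive weights that decline by a factor (1 − k) per step, and k is fixed by the ratio of q to r, which is the sense in which the rate of decline comes from the variance terms.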

Kaiser’s rule:

A rule often used in principal components analysis for selecting the appropriate number of components. When the components are derived from the correlation matrix of the observed variables, the rule suggests retaining only those components with eigenvalues (variances) greater than one. See also scree plot.
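Once the eigenvalues are available the rule is a one-line filter. The sketch assumes the eigenvalues of the correlation matrix have already been computed elsewhere, for example with a linear algebra library; `kaiser_retain` is a hypothetical name. Because a p × p correlation matrix has trace p, its eigenvalues average exactly one, so the rule keeps the components that account for more variance than a single standardized variable.

```python
def kaiser_retain(eigenvalues):
    """Return the (1-based) indices of components whose eigenvalue exceeds one.

    Assumes the eigenvalues come from a correlation (not covariance) matrix,
    the setting in which Kaiser's rule is usually applied.
    """
    return [i + 1 for i, lam in enumerate(eigenvalues) if lam > 1.0]


# Two variables with correlation r: the matrix [[1, r], [r, 1]] has
# eigenvalues 1 + r and 1 - r, so only the first is retained when r > 0.
r = 0.6
print(kaiser_retain([1 + r, 1 - r]))          # [1]
print(kaiser_retain([2.3, 1.1, 0.4, 0.2]))    # [1, 2]
```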

Kaplan-Meier estimator:

Synonym for product limit estimator.

Kappa coefficient:

A chance-corrected index of the agreement between, for example, judgements and diagnoses made by two raters. Calculated as the ratio of the observed excess over chance agreement to the maximum possible excess over chance, the coefficient takes the value one when there is perfect agreement and zero when observed agreement is equal to chance agreement. See also Aickin’s measure of agreement.
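In symbols, with p_o the observed proportion of agreement and p_e the proportion expected by chance from the raters' marginal totals, the verbal definition gives κ = (p_o − p_e)/(1 − p_e). A minimal sketch for two raters, assuming the ratings are summarized in a square cross-classification table; the function name and the counts are illustrative only.

```python
def cohens_kappa(table):
    """Kappa for a square table: table[i][j] counts subjects placed in
    category i by rater 1 and in category j by rater 2."""
    n = sum(sum(row) for row in table)
    k = len(table)
    observed = sum(table[i][i] for i in range(k)) / n              # proportion agreeing
    row_tot = [sum(table[i]) for i in range(k)]
    col_tot = [sum(table[i][j] for i in range(k)) for j in range(k)]
    chance = sum(row_tot[i] * col_tot[i] for i in range(k)) / n**2  # agreement expected by chance
    return (observed - chance) / (1 - chance)


ratings = [[20, 5],
           [10, 15]]
print(round(cohens_kappa(ratings), 3))   # 0.4
```

Here the raters agree on 70% of subjects, but their margins alone would produce 50% agreement, so κ = (0.7 − 0.5)/(1 − 0.5) = 0.4.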

KDD:

Abbreviation for knowledge discovery in data bases.

Kellerer, Hans (1902-1976):

Born in a small Bavarian village, Kellerer graduated from the University of Munich in 1927. In 1956 he became Professor of Statistics at the University of Munich. Kellerer played an important role in getting modern statistical methods accepted in Germany.

Kempthorne, Oscar (1919-2000):

Born in Cornwall, England, Kempthorne studied at Cambridge University, where he received both B.A. and M.A. degrees. In 1947 he joined the Iowa State College statistics faculty, where he remained an active member until his retirement in 1989. In 1960 Kempthorne was awarded an honorary Doctor of Science degree from Cambridge in recognition of his contributions to statistics, in particular to experimental design and the analysis of variance. He died on 15 November 2000 in Annapolis, Maryland.

Kempton, Rob (1946-2003):

Born in Isleworth, Middlesex, Kempton read mathematics at Wadham College, Oxford. His first job was as a statistician at Rothamsted Experimental Station, and in 1976 he was appointed Head of Statistics at the Plant Breeding Institute in Cambridge, where he made contributions to the design and analysis of experiments with spatial trends and treatment carryover effects. In 1986 Kempton became the founding director of the Scottish Agricultural Statistics Service, where he carried out work on the statistical analysis of health risks from food and from genetically modified organisms. He died on 11 May 2003.

Kendall’s coefficient of concordance:

Synonym for coefficient of concordance.

Kendall, Sir Maurice George (1907-1983):

Born in Kettering, Northamptonshire, Kendall studied mathematics at St John’s College, Cambridge, before joining the Administrative Class of the Civil Service in 1930. In 1940 he left the Civil Service to become Statistician to the British Chamber of Shipping. Despite the obvious pressures of such a post in wartime, Kendall managed to continue work on The Advanced Theory of Statistics, which appeared in two volumes in 1943 and 1946. In 1949 he became Professor of Statistics at the London School of Economics, where he remained until 1961. Kendall’s main work in statistics involved the theory of k-statistics, time series and rank correlation methods. He also helped organize a number of large sample survey projects in collaboration with governmental and commercial agencies. Later in his career, Kendall became Managing Director and Chairman of the computer consultancy SCICON. In the 1960s he completed the rewriting of his major book into three volumes, which were published in 1966. In 1972 he became Director of the World Fertility Survey. Kendall was awarded the Royal Statistical Society’s Guy Medal in gold and, in 1974, a knighthood for his services to the theory of statistics. He died on 29 March 1983 in Redhill, UK.

Kendall’s tau statistics:

Measures of the correlation between two sets of rankings. Kendall’s tau itself (t) is a rank correlation coefficient based on the number of inversions in one ranking as compared with another, i.e. on S given by

where P is the number of concordant pairs of observations, that is pairs of observations such that their rankings on the two variables are in the same direction, and Q is the number of discordant pairs for which rankings on the two variables are in the reverse direction. The coefficient t is calculated as

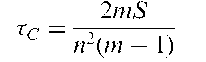

A number of other versions of t have been developed that are suitable for measuring association in an r x c contingency table with both row and column variables having ordered categories. (Tau itself is not suitable since it assumes no tied observations.) One example is the coefficient, tc given by

where m = min(r, c). See also phi-coefficient, Cramer’s V and contingency coefficient.

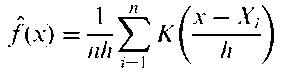
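Counting concordant and discordant pairs is easy to express directly. The sketch below (function names are illustrative) computes τ = 2S/(n(n − 1)) for two untied rankings by examining all n(n − 1)/2 pairs. It also computes Stuart's τ_c = 2mS/(n²(m − 1)) from a table of counts, where a pair of individuals in cells that are both lower and further right is concordant, a pair lower and further left is discordant, and pairs tied on either variable count towards neither P nor Q.

```python
from itertools import combinations


def kendall_tau(x, y):
    """Kendall's tau for two rankings without ties: tau = 2S / (n(n - 1))."""
    n = len(x)
    p = q = 0
    for i, j in combinations(range(n), 2):
        direction = (x[i] - x[j]) * (y[i] - y[j])
        if direction > 0:
            p += 1          # concordant pair
        elif direction < 0:
            q += 1          # discordant pair
    return 2 * (p - q) / (n * (n - 1))


def tau_c(table):
    """Stuart's tau_c for an r x c table whose rows and columns are ordered."""
    r, c = len(table), len(table[0])
    n = sum(sum(row) for row in table)
    p = q = 0
    for i in range(r):
        for j in range(c):
            # cells strictly below and to the right are concordant with (i, j);
            # cells strictly below and to the left are discordant with it
            below_right = sum(table[a][b] for a in range(i + 1, r) for b in range(j + 1, c))
            below_left = sum(table[a][b] for a in range(i + 1, r) for b in range(j))
            p += table[i][j] * below_right
            q += table[i][j] * below_left
    m = min(r, c)
    return 2 * m * (p - q) / (n**2 * (m - 1))


print(round(kendall_tau([1, 2, 3, 4], [1, 3, 2, 4]), 4))   # 0.6667  (P = 5, Q = 1)
print(tau_c([[2, 0], [0, 2]]))                             # 1.0
```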

Kernel density estimators: Methods of estimating a probability distribution using estimators of the form

where h is known as window width or bandwidth and K is the kernel function which is such that

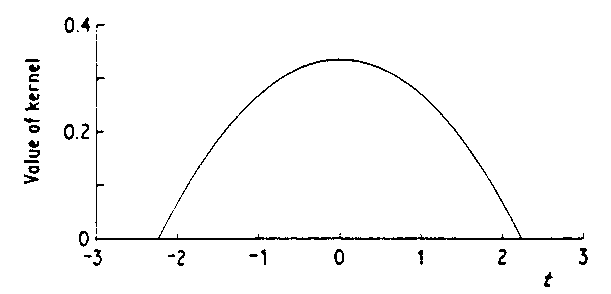

Essentially such kernel estimators sum a series of ‘bumps’ placed at each of the observations. The kernel function determines the shape of the bumps while h determines their width. A useful kernel function is the Epanechnikov kernel defined as

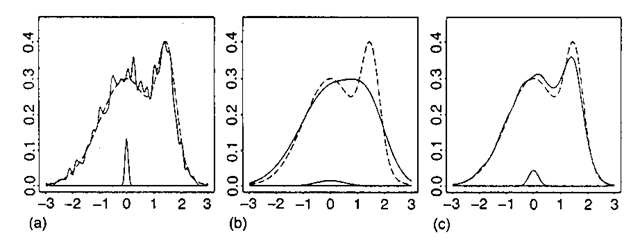

A graph of this kernel is shown in Fig. 79. In fact, the choice of the shape of the kernel function is not usually of great importance. In contrast, the choice of bandwidth can often be critical. The three diagrams in Fig. 80, for example, show this type of density estimate derived from a sample of 1000 observations from a mixture of two normal distributions, with h being 0.06 for the first figure, 0.54 for the second and 0.18 for the third. The first is too ‘rough’ to be of much use, the second is oversmoothed so that the multimodality is concealed, and the third represents an acceptable compromise. There are situations in which it is satisfactory to choose the bandwidth subjectively by eye; more formal methods are, however, available. [Density Estimation in Statistics and Data Analysis, 1986, B. Silverman, Chapman and Hall/CRC Press, London.]
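The estimator translates directly into code. A minimal sketch, assuming the Epanechnikov kernel in Silverman's scaling, K(u) = 3/(4√5)(1 − u²/5) for |u| < √5 and zero otherwise, and a small made-up sample (the data and bandwidths are illustrative, not those behind Fig. 80). Rerunning with different `h` shows the rough-versus-oversmoothed trade-off.

```python
import math


def epanechnikov(u):
    """Epanechnikov kernel with support |u| < sqrt(5); integrates to one."""
    if abs(u) < math.sqrt(5):
        return 3 / (4 * math.sqrt(5)) * (1 - u * u / 5)
    return 0.0


def kde(x, data, h, kernel=epanechnikov):
    """Kernel density estimate f_hat(x) = (1 / (n h)) * sum K((x - X_i) / h)."""
    n = len(data)
    return sum(kernel((x - xi) / h) for xi in data) / (n * h)


sample = [-1.2, -0.8, -0.5, 0.9, 1.1, 1.4]    # hypothetical data with two clumps
grid = [-3 + 0.5 * k for k in range(13)]
for h in (0.2, 0.6, 1.5):                     # small h: rough; large h: oversmoothed
    print(h, [round(kde(g, sample, h), 3) for g in grid])
```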

Kernel regression smoothing:

A distribution free method for smoothing data. In a single dimension, the method consists of the estimation of $f(x_i)$ in the relation

$$y_i = f(x_i) + \epsilon_i$$

where $\epsilon_i$, $i = 1, \ldots, n$, are assumed to be symmetric errors with zero means. There are several methods for estimating the regression function, f, for example, averaging the $y_i$ values that have $x_i$ close to x. See also regression modelling.
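One common way of averaging the y values whose x values are close to a given point is the Nadaraya-Watson estimator, which weights each y_i by a kernel function of (x − x_i)/h. The sketch uses a Gaussian kernel, a made-up dataset and a bandwidth of h = 0.5; all three are assumptions for illustration rather than part of the entry.

```python
import math


def nadaraya_watson(x0, xs, ys, h):
    """Kernel-weighted average of the y_i, with Gaussian weights on (x0 - x_i) / h."""
    weights = [math.exp(-0.5 * ((x0 - xi) / h) ** 2) for xi in xs]
    total = sum(weights)
    return sum(w * yi for w, yi in zip(weights, ys)) / total


xs = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
ys = [0.1, 0.4, 0.9, 1.0, 0.8, 0.5, 0.1]      # hypothetical noisy responses
smooth = [round(nadaraya_watson(x, xs, ys, h=0.5), 3) for x in xs]
print(smooth)
```

As with kernel density estimation, the bandwidth h matters far more than the kernel shape: larger h averages over more neighbours and gives a smoother curve.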

Fig. 79 Epanechnikov kernel.

Fig. 80 Kernel estimates for different bandwidths.

Khinchin theorem:

If $x_1, \ldots, x_n$ is a sample from a probability distribution with expected value $\mu$, then the sample mean converges in probability to $\mu$ as $n \to \infty$.
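The theorem can be illustrated, though of course not proved, by simulation. The sketch assumes an exponential distribution with rate 2 (so μ = 0.5) and a fixed random seed, both arbitrary choices. The running sample mean wanders for small n and settles near μ as n grows.

```python
import random


def running_means(draw, n, seed=1):
    """Sample means of the first k draws, for k = 1, ..., n."""
    rng = random.Random(seed)
    total = 0.0
    means = []
    for k in range(1, n + 1):
        total += draw(rng)
        means.append(total / k)
    return means


# Exponential distribution with rate 2, whose expected value is mu = 0.5.
means = running_means(lambda rng: rng.expovariate(2.0), 100_000)
for k in (10, 1_000, 100_000):
    print(k, round(means[k - 1], 4))
```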

Kish, Leslie (1910-2000):

Born in Poprad at the time it was part of the Austro-Hungarian Empire (it is now in Slovakia), Kish received a degree in mathematics from the City College of New York in 1939. He first worked at the Bureau of the Census in Washington and later at the Department of Agriculture. After serving in the war, Kish moved to the University of Michigan in 1947 and helped found the Institute for Social Research. During this period he received both M.A. and Ph.D. degrees. Kish made important and far-reaching contributions to the theory of sampling, much of it published in his pioneering book Survey Sampling. Kish received many awards for his contributions to statistics, including the Samuel Wilks Medal and an Honorary Fellowship of the ISI.

Kleiner-Hartigan trees:

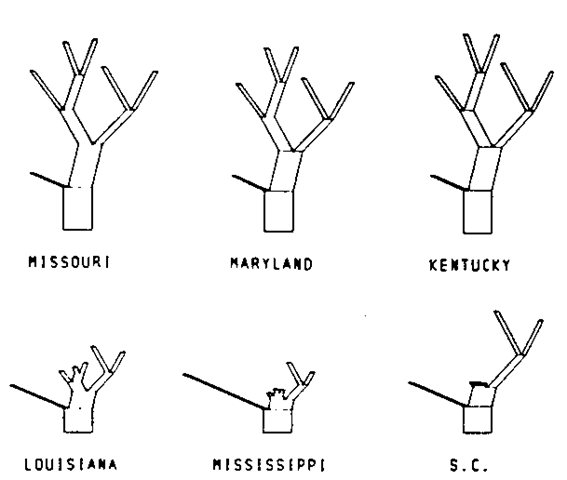

A method for displaying multivariate data graphically as ‘trees’ in which the values of the variables are coded into the lengths of the terminal branches, and the non-terminal branches have lengths that depend on the sums of the terminal branches they support. One important feature of these trees that distinguishes them from most other compound characters, for example, Chernoff’s faces, is that an attempt is made to remove the arbitrariness of assignment of the variables by performing an agglomerative hierarchical cluster analysis of the variables and using the resultant dendrogram as the basis tree. Some examples are shown in Fig. 81. [Journal of the American Statistical Association, 1981, 76, 260-9.]

Klotz test:

A distribution free method for testing the equality of the variances of two populations having the same median. More efficient than the Ansari-Bradley test. See also Conover test.
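The entry does not give the statistic. Klotz's test is usually described as a normal-scores test: the ranks R of the combined sample of size N are replaced by normal quantiles Φ⁻¹(R/(N + 1)), and the squared scores are summed over one sample, so large values suggest that sample is the more dispersed. The sketch computes only that statistic and assumes no ties; the function name and data are illustrative, and critical values would come from tables or a permutation distribution.

```python
from statistics import NormalDist


def klotz_statistic(x, y):
    """Sum over the x sample of squared normal scores Phi^{-1}(R / (N + 1)),
    where R is each value's rank in the combined sample (no ties assumed)."""
    combined = sorted(x + y)
    n_total = len(combined)
    rank = {v: i + 1 for i, v in enumerate(combined)}
    inv = NormalDist().inv_cdf
    return sum(inv(rank[v] / (n_total + 1)) ** 2 for v in x)


spread = klotz_statistic([-5.0, 5.0], [-1.0, 0.0, 1.0])     # x occupies the extremes
central = klotz_statistic([-0.1, 0.1], [-3.0, 2.0, 4.0])    # x sits in the middle
print(round(spread, 3), round(central, 3))
```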

K-means cluster analysis:

A method of cluster analysis in which, from an initial partition of the observations into K clusters, each observation in turn is examined and reassigned, if appropriate, to a different cluster in an attempt to optimize some predefined numerical criterion that measures in some sense the ‘quality’ of the cluster solution. Many such clustering criteria have been suggested, but the most commonly used arise from considering features of the within-groups, between-groups and total matrices of sums of squares and cross products (W, B, T) that can be defined for every partition of the observations into a particular number of groups. The two most common of the clustering criteria arising from these matrices are minimization of trace(W) and minimization of determinant(W).

Fig. 81 Kleiner-Hartigan trees for Republican vote data in six Southern states.

The first of these has the tendency to produce ‘spherical’ clusters, the second to produce clusters that all have the same shape, although this will not necessarily be spherical. See also agglomerative hierarchical clustering methods, divisive methods and hill-climbing algorithm.
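A deliberately simple sketch of the reassignment idea, assuming the trace(W) criterion and observations given as tuples of coordinates: each observation in turn is tentatively moved to every other cluster, the move is kept only if trace(W) falls, and the procedure stops when a full pass changes nothing. Recomputing trace(W) from scratch after each trial move is wasteful but keeps the logic transparent; practical implementations update it incrementally, and the popular batch variant (Lloyd's algorithm) instead reassigns every point to its nearest centroid at once. Function names are illustrative.

```python
def trace_w(points, labels, k):
    """trace(W): total within-cluster sum of squared deviations from cluster means."""
    total = 0.0
    for c in range(k):
        members = [p for p, lab in zip(points, labels) if lab == c]
        if not members:
            continue
        dim = len(members[0])
        centre = [sum(p[d] for p in members) / len(members) for d in range(dim)]
        total += sum((p[d] - centre[d]) ** 2 for p in members for d in range(dim))
    return total


def k_means_reassign(points, labels, k, max_passes=100):
    """Move single observations between clusters while trace(W) decreases."""
    labels = list(labels)
    best = trace_w(points, labels, k)
    for _ in range(max_passes):
        improved = False
        for i in range(len(points)):
            current = labels[i]
            if labels.count(current) == 1:
                continue                          # never empty a cluster
            for c in range(k):
                if c == current:
                    continue
                labels[i] = c                     # trial move
                value = trace_w(points, labels, k)
                if value < best - 1e-12:
                    best, current, improved = value, c, True
                else:
                    labels[i] = current           # undo the trial move
        if not improved:
            break
    return labels, best


pts = [(0, 0), (0, 1), (10, 10), (10, 11)]
print(k_means_reassign(pts, [0, 1, 0, 1], k=2))   # ([1, 1, 0, 0], 1.0)
```

Like any hill-climbing procedure, this finds a local optimum that can depend on the initial partition.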

Knowledge discovery in data bases (KDD):

A form of data mining which is interactive and iterative, requiring many decisions by the researcher.

Knox’s tests:

Tests designed to detect any tendency for patients with a particular disease to form a disease cluster in time and space. The tests are based on a two-by-two contingency table formed from considering every pair of patients and classifying them as to whether the members of the pair were or were not closer than a critical distance apart in space and as to whether the times at which they contracted the disease were closer than a chosen critical period.
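The two-by-two table is built by looping over all n(n − 1)/2 pairs of patients. The sketch assumes planar coordinates with Euclidean distance, onset times on one numeric scale, and illustrative critical values and cases. The count in the close-in-space, close-in-time cell is the quantity of interest: an excess there, relative to what the margins would lead one to expect, suggests space-time clustering.

```python
import math
from itertools import combinations


def knox_table(cases, space_crit, time_crit):
    """2 x 2 table of all case pairs, classified by closeness in space and in time.

    cases: list of (x, y, t), giving location coordinates and time of onset.
    Returns [[close space & close time, close space & far time],
             [far space & close time,   far space & far time]].
    """
    table = [[0, 0], [0, 0]]
    for (x1, y1, t1), (x2, y2, t2) in combinations(cases, 2):
        near_space = math.hypot(x1 - x2, y1 - y2) < space_crit
        near_time = abs(t1 - t2) < time_crit
        table[0 if near_space else 1][0 if near_time else 1] += 1
    return table


cases = [(0, 0, 0), (0, 1, 1), (10, 10, 50), (10, 11, 51), (0, 0, 60)]
print(knox_table(cases, space_crit=2, time_crit=5))   # [[2, 2], [0, 6]]
```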

Kolmogorov, Andrei Nikolaevich (1903-1987):

Born in Tambov, Russia, Kolmogorov first studied Russian history at Moscow University, but turned to mathematics in 1922. During his career he held important administrative posts in the Moscow State University and the USSR Academy of Sciences. He made major contributions to probability theory and mathematical statistics including laying the foundations of the modern theory of Markov processes. Kolmogorov died on 20 October 1987 in Moscow.

Kolmogorov-Smirnov two-sample method:

A distribution free method that tests for any difference between two population probability distributions. The test is based on the maximum absolute difference between the cumulative distribution functions of the samples from each population. Critical values are available in many statistical tables.
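Each empirical cumulative distribution function changes only at an observed value, so the maximum absolute difference can be found by evaluating both functions at every point of the pooled sample. A minimal sketch (the function name is illustrative) that returns the statistic only; the critical value would still be looked up in tables.

```python
from bisect import bisect_right


def ks_two_sample(x, y):
    """Maximum absolute difference between the two empirical CDFs."""
    xs, ys = sorted(x), sorted(y)
    d = 0.0
    for t in xs + ys:                        # the maximum occurs at an observed value
        fx = bisect_right(xs, t) / len(xs)   # proportion of x values <= t
        fy = bisect_right(ys, t) / len(ys)   # proportion of y values <= t
        d = max(d, abs(fx - fy))
    return d


print(ks_two_sample([1, 2, 3, 4], [3, 4, 5, 6]))   # 0.5
print(ks_two_sample([1, 2, 3], [4, 5, 6]))         # 1.0
```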

Korozy, Jozsef (1844-1906):

Born in Pest, Korozy worked first as an insurance clerk and then as a journalist, writing a column on economics. Largely self-taught, he was appointed director of a municipal statistical office in Pest in 1869. Korozy made enormous contributions to the statistical and demographic literature of his age, in particular developing the first fertility tables. He joined the University of Budapest in 1883 and received many honours and awards both at home and abroad.

Kovacsics, Jozsef (1919-2003):

Jozsef Kovacsics was one of the most respected statisticians in Hungary. Starting his career in the Hungarian Statistical Office, he was appointed Director of the Public Library of the Statistical Office in 1954 and in 1965 became a full professor of statistics at the Eotvos Lorand University where he stayed until his retirement in 1989. Kovacsics carried out important work in several areas of statistics and demography. He died on 26 December 2003.

Kriging:

A method for predicting the value of a random process at a specified location in a region, given a dataset consisting of measurements of the process at a variety of locations in the region. For example, from the observed values $y_1, y_2, \ldots, y_n$ of the concentration of a pollutant at n sites, $t_1, t_2, \ldots, t_n$, it may be required to estimate the concentration at a new nearby site, $t_0$. Named after D.G. Krige, who first introduced the technique to estimate the amount of gold in rock.

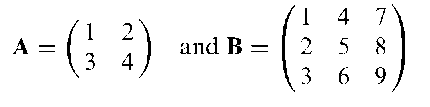

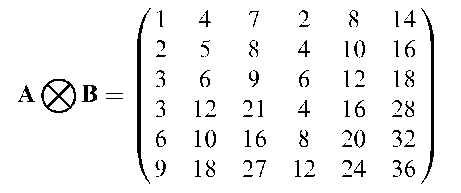
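A minimal sketch of ordinary kriging, one common form of the technique, under explicitly assumed ingredients: sites in the plane, a constant but unknown mean, and an exponential covariance function with illustrative sill and range parameters (in practice these are estimated, usually from a fitted variogram). The prediction at t0 is a weighted sum of the observed values, with weights that minimize the prediction variance subject to summing to one. The site coordinates and concentrations are invented.

```python
import math


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def ordinary_kriging(sites, values, t0, sill=1.0, range_=1.0):
    """Predict the process at t0 from observations at `sites` (2-D points).

    Assumes an exponential covariance C(d) = sill * exp(-d / range_).
    """
    def cov(p, q):
        return sill * math.exp(-math.dist(p, q) / range_)

    n = len(sites)
    # Kriging system: sum_j w_j C(t_i, t_j) + lam = C(t_i, t0) for each i, sum_j w_j = 1
    a = [[cov(sites[i], sites[j]) for j in range(n)] + [1.0] for i in range(n)]
    a.append([1.0] * n + [0.0])
    b = [cov(s, t0) for s in sites] + [1.0]
    weights = solve(a, b)[:n]
    return sum(w * v for w, v in zip(weights, values)), weights


sites = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
conc = [2.0, 3.0, 2.5, 4.0]                     # hypothetical pollutant concentrations
pred, w = ordinary_kriging(sites, conc, (0.4, 0.6))
print(round(pred, 3), [round(x, 3) for x in w])
```

With no nugget (measurement-error) term in the covariance, the predictor reproduces the observed value exactly when t0 coincides with a data site.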

Kronecker product of matrices:

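The matrix obtained by multiplying each element of an m × m matrix A by the whole of an n × n matrix B. The result is an mn × mn matrix. For example, if A is a 2 × 2 matrix with elements $a_{ij}$, then

$$A \otimes B = \begin{pmatrix} a_{11}B & a_{12}B \\ a_{21}B & a_{22}B \end{pmatrix}$$

$A \otimes B$ is not in general equal to $B \otimes A$.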

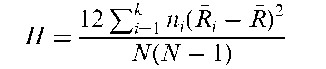
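A short sketch of the block construction for matrices stored as lists of rows; the function name and example matrices are illustrative, and in practice a library routine such as NumPy's `numpy.kron` would normally be used. The same code also handles rectangular matrices, for which an m × n matrix and a p × q matrix give an mp × nq result.

```python
def kronecker(a, b):
    """Kronecker product of matrices given as lists of rows.

    Block (i, j) of the result is a[i][j] * b.
    """
    return [
        [a_ij * b_kl for a_ij in a_row for b_kl in b_row]
        for a_row in a
        for b_row in b
    ]


A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]
print(kronecker(A, B))   # [[0, 1, 0, 2], [1, 0, 2, 0], [0, 3, 0, 4], [3, 0, 4, 0]]
print(kronecker(B, A))   # [[0, 0, 1, 2], [0, 0, 3, 4], [1, 2, 0, 0], [3, 4, 0, 0]]
```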

Kruskal-Wallis test:

A distribution free method that is the analogue of the analysis of variance of a one-way design. It tests whether the k groups to be compared have the same population median. The test statistic is derived by ranking all the N observations from 1 to N regardless of which group they are in, and then calculating

$$H = \frac{12}{N(N+1)} \sum_{i=1}^{k} n_i \left(\bar{R}_i - \bar{R}\right)^2$$

where $n_i$ is the number of observations in group i, $\bar{R}_i$ is the mean of their ranks and $\bar{R}$ is the average of all the ranks, given explicitly by (N + 1)/2. When the null hypothesis is true the test statistic has, approximately, a chi-squared distribution with k − 1 degrees of freedom.
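The statistic H = 12/(N(N + 1)) Σ n_i(R̄_i − R̄)², with R̄ = (N + 1)/2, can be sketched as follows. The sketch assumes that tied observations receive the average of the ranks they span and omits the usual correction for ties; the function names are illustrative. The resulting value would be referred to a chi-squared distribution with k − 1 degrees of freedom.

```python
def average_ranks(values):
    """Ranks 1..N, with tied values given the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1   # mean of 1-based positions i..j
        i = j + 1
    return ranks


def kruskal_wallis_h(*groups):
    """H = 12 / (N(N+1)) * sum n_i (mean rank_i - (N+1)/2)^2, no tie correction."""
    pooled = [v for g in groups for v in g]
    ranks = average_ranks(pooled)
    n_total = len(pooled)
    grand = (n_total + 1) / 2
    h, start = 0.0, 0
    for g in groups:
        r = ranks[start:start + len(g)]
        h += len(g) * (sum(r) / len(g) - grand) ** 2
        start += len(g)
    return 12 / (n_total * (n_total + 1)) * h


print(round(kruskal_wallis_h([1, 2, 3], [4, 5, 6]), 4))   # 3.8571
```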

k-statistics:

A set of symmetric functions of sample values proposed by Fisher, defined by requiring that the pth k-statistic, $k_p$, has expected value equal to the pth cumulant, $\kappa_p$, i.e.

$$E(k_p) = \kappa_p$$

Originally devised to simplify the task of finding the moments of sample statistics. [KA1 Chapter 12.]
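For the first four k-statistics there are standard closed forms in terms of the sample central moments m_r = (1/n)Σ(x_i − x̄)^r; the sketch below simply codes them (the function name is illustrative). Note that k_2 is the familiar sample variance with divisor n − 1.

```python
def k_statistics(data):
    """First four k-statistics, unbiased estimators of the cumulants kappa_1..kappa_4."""
    n = len(data)
    if n < 4:
        raise ValueError("need at least four observations for k4")
    mean = sum(data) / n
    m2 = sum((x - mean) ** 2 for x in data) / n     # sample central moments
    m3 = sum((x - mean) ** 3 for x in data) / n
    m4 = sum((x - mean) ** 4 for x in data) / n
    k1 = mean
    k2 = n * m2 / (n - 1)
    k3 = n**2 * m3 / ((n - 1) * (n - 2))
    k4 = n**2 * ((n + 1) * m4 - 3 * (n - 1) * m2**2) / ((n - 1) * (n - 2) * (n - 3))
    return k1, k2, k3, k4


print([round(k, 4) for k in k_statistics([1, 2, 3, 4])])   # [2.5, 1.6667, 0.0, -3.3333]
```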

Kuiper’s test:

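A test that a circular random variable has an angular uniform distribution. Given a set of observed values, $\theta_1, \theta_2, \ldots, \theta_n$, arranged in increasing order, the test statistic is

$$V_n = D^+ + D^-$$

where

$$D^+ = \max_{1 \le j \le n}\left(\frac{j}{n} - x_j\right), \qquad D^- = \max_{1 \le j \le n}\left(x_j - \frac{j-1}{n}\right)$$

and $x_j = \theta_j/2\pi$. Under the hypothesis of an angular uniform distribution, $V_n\sqrt{n}$ has, for large n, Kuiper's limiting distribution, for which critical values are tabulated. See also Watson’s test. [MV2 Chapter 14.]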

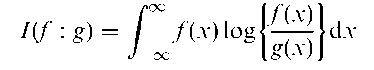
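A sketch of the statistic, assuming angles in radians. They are reduced to [0, 2π), scaled to x = θ/2π and sorted, and then D⁺ = max_j(j/n − x_(j)) and D⁻ = max_j(x_(j) − (j − 1)/n) are computed. The function name and example angles are illustrative, and only the statistic is returned. Unlike the Kolmogorov-Smirnov statistic, V_n does not depend on where the zero direction is placed, which the rotated example checks.

```python
import math


def kuiper_v(angles):
    """Kuiper's V_n = D+ + D- for angles in radians, against angular uniformity."""
    n = len(angles)
    xs = sorted((a % (2 * math.pi)) / (2 * math.pi) for a in angles)   # map to [0, 1)
    d_plus = max((j + 1) / n - x for j, x in enumerate(xs))            # j is 0-based here
    d_minus = max(x - j / n for j, x in enumerate(xs))
    return d_plus + d_minus


n = 8
even = [2 * math.pi * (j + 0.5) / n for j in range(n)]    # perfectly spread out
clumped = [0.1, 0.15, 0.2, 0.25, 0.3, 0.35, 0.4, 0.45]      # all within a narrow arc
rotated = [a + 3.0 for a in clumped]                        # same data, new zero direction
print(round(kuiper_v(even), 4), round(kuiper_v(clumped), 4), round(kuiper_v(rotated), 4))
```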

Kullback-Leibler information:

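A function, I, defined for two probability distributions, f(x) and g(x), and given by

$$I(f:g) = \int f(x) \log\frac{f(x)}{g(x)}\,dx$$

Essentially an asymmetric distance function for the two distributions. Jeffreys’s distance measure is a symmetric combination of the Kullback-Leibler information given by

$$J(f, g) = I(f:g) + I(g:f) = \int \left[f(x) - g(x)\right] \log\frac{f(x)}{g(x)}\,dx$$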
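For discrete distributions the integral becomes a sum. A minimal sketch using natural logarithms (function names illustrative), with the conventions that terms where p_i = 0 contribute nothing and that the information is infinite if some q_i = 0 where p_i > 0. The example shows the asymmetry of I and the symmetry of Jeffreys's measure.

```python
import math


def kl_information(p, q):
    """Discrete Kullback-Leibler information I(p:q) = sum p_i log(p_i / q_i)."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi > 0:
            if qi == 0:
                return math.inf        # p puts mass where q has none
            total += pi * math.log(pi / qi)
    return total


def jeffreys(p, q):
    """Symmetric Jeffreys distance J = I(p:q) + I(q:p)."""
    return kl_information(p, q) + kl_information(q, p)


p = [0.5, 0.5]
q = [0.9, 0.1]
print(round(kl_information(p, q), 4), round(kl_information(q, p), 4))   # 0.5108 0.3681
print(round(jeffreys(p, q), 4), round(jeffreys(q, p), 4))               # 0.8789 0.8789
```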

Kurtosis:

The extent to which the peak of a unimodal probability distribution or frequency distribution departs from the shape of a normal distribution, by either being more pointed (leptokurtic) or flatter (platykurtic). Usually measured for a probability distribution as

$$\beta_2 = \frac{\mu_4}{\mu_2^2}$$

where $\mu_4$ is the fourth central moment of the distribution, and $\mu_2$ is its variance. (Corresponding functions of the sample moments are used for frequency distributions.) For a normal distribution this index takes the value three, and often the index is redefined as the value above minus three so that the normal distribution has a value of zero. (Other distributions with zero kurtosis are called mesokurtic.) For a distribution which is leptokurtic the redefined index is positive and for a platykurtic curve it is negative. See Fig. 82. See also skewness. [KA1 Chapter 3.]

Fig. 82 Curves with differing degrees of kurtosis: A, mesokurtic; B, platykurtic; C, leptokurtic.
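The sample version replaces μ2 and μ4 in μ4/μ2² by the sample central moments m2 and m4. A minimal sketch (function name and data illustrative) returning either the moment ratio or its value minus three. Statistical packages often apply additional small-sample bias corrections, so their reported values can differ slightly from this plain moment ratio.

```python
def kurtosis(data, excess=True):
    """Moment ratio m4 / m2**2 of a sample, optionally minus 3 (excess kurtosis)."""
    n = len(data)
    mean = sum(data) / n
    m2 = sum((x - mean) ** 2 for x in data) / n
    m4 = sum((x - mean) ** 4 for x in data) / n
    b2 = m4 / m2**2
    return b2 - 3 if excess else b2


flat = [1, 2, 3, 4, 5, 6, 7, 8]         # evenly spread values: platykurtic
peaked = [0, 0, 0, 0, 0, 0, -3, 3]      # mass at the centre plus long tails: leptokurtic
print(round(kurtosis(flat), 3), round(kurtosis(peaked), 3))
```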