7.5

We have been concerned exclusively with integers, and, as we have noted, all of the subroutines for arithmetic operations and conversion from one base to another could be extended to include signs if we wish. We have not yet considered arithmetic operations for numbers with a fractional part. For example, the 32-bit string $b_{31}, \ldots, b_0$ could be used to represent the number x, where

![tmp7-51_thumb[1] tmp7-51_thumb[1]](http://lh3.ggpht.com/_X6JnoL0U4BY/S094zt0ltNI/AAAAAAAAGtE/7jGcP9O4hbE/tmp751_thumb1_thumb.png?imgmax=800)

The notation $b_{31} \ldots b_8$ • $b_7 \ldots b_0$ is used to represent x, where the symbol •, called the binary point, indicates where the negative powers of 2 start. Addition and subtraction of two of these 32-bit numbers, with an arbitrary placement of the binary point for each, is straightforward except that the binary points must be aligned before addition or subtraction takes place and the specification of the exact result may require as many as 64 bits. If these numbers are being added and subtracted in a program (or multiplied and divided), the programmer must keep track of the binary point and the number of bits being used to keep the result. This process, called scaling, was used on analog computers and early digital computers. In most applications, scaling is so inconvenient that most programmers use other representations to get around it.

One technique, called a fixed-point representation, fixes the number of bits and the position of the binary point for all numbers represented. Thinking only about unsigned numbers for the moment, notice that the largest and smallest nonzero numbers that we can represent are fixed once the number of bits and the position of the binary point are fixed. For example, if we use 32 bits for the fixed-point representation and eight bits for the fractional part as in (13), the largest number that is represented by (13) is about $2^{24}$ and the smallest nonzero number is $2^{-8}$. As one can see, if we want to do computations with either very large or very small numbers (or both), a large number of bits will be required with a fixed-point representation. What we want then is a representation that uses 32 bits but gives us a much wider range of represented numbers and, at the same time, keeps track of the position of the binary point for us just as the fixed-point representation does. This idea leads to the floating-point representation of numbers, which we discuss in this section. After discussing the floating-point representation of numbers, we examine the arithmetic of floating-point representation and the conversion between floating-point representations with different bases.
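The fixed-point format described above can be sketched in C. This is only an illustration under stated assumptions: the type name `ufix24_8` and the helper functions are hypothetical, and the format is the unsigned one with 24 integer bits and eight fractional bits.

```c
#include <stdint.h>

/* Hypothetical unsigned fixed-point format: 24 integer bits, 8 fractional bits. */
#define FRAC_BITS 8

typedef uint32_t ufix24_8;

/* Convert a raw 32-bit pattern to the real number it represents. */
static double ufix_to_double(ufix24_8 raw)
{
    return (double)raw / (double)(1u << FRAC_BITS);
}

/* Add two values that share the same binary point; no alignment is needed. */
static ufix24_8 ufix_add(ufix24_8 a, ufix24_8 b)
{
    return a + b;   /* wraps modulo 2^32 on overflow */
}

/* Multiply: the 64-bit product has 16 fractional bits, so 8 are shifted away. */
static ufix24_8 ufix_mul(ufix24_8 a, ufix24_8 b)
{
    return (ufix24_8)(((uint64_t)a * b) >> FRAC_BITS);
}
```

With this layout the pattern `0x00000001` stands for $2^{-8}$ and `0xFFFFFFFF` for $2^{24} - 2^{-8}$, which is the narrow range that motivates the floating-point representation.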

![tmp7-52_thumb[1] tmp7-52_thumb[1]](http://lh6.ggpht.com/_X6JnoL0U4BY/S095F9-_m3I/AAAAAAAAGtM/cuS5Wl3wO1Q/tmp752_thumb1_thumb.png?imgmax=800)

This type of representation is called a floating-point representation because the binary point is allowed to vary from one number to another even though the total number of bits representing each number stays the same. Although the range has increased for this method of representation, the number of points represented per unit interval with the floating-point representation is far less than the fixed-point representation that has the same range. Furthermore, the density of numbers represented per unit interval gets smaller as the numbers get larger. In fact, in our 32-bit floating-point example, there are $2^{23} + 1$ uniformly spaced points represented in the interval from $2^n$ to $2^{n+1}$ as n varies between -128 and 127.

Looking more closely at this same floating-point example, notice that some of the numbers have several representations; for instance, a significand of 1.100 … 0 with an exponent of 6 also equals a significand of 0.1100 … 0 with an exponent of 7. Additionally, a zero significand, which corresponds to the number zero, has 256 possible exponents. To eliminate this multiplicity, some form of standard representation is usually adopted. For example, with the bits $b_{31}, \ldots, b_0$ we could standardize our representation as follows. For numbers greater than or equal to $2^{-127}$ we could always take the representation with $b_{23}$ equal to 1. For the most negative exponent, in this case -128, we could always take $b_{23}$ equal to 0 so that the number zero is represented by a significand of all zeros and an exponent of -128. Doing this, the bit $b_{23}$ can always be determined from the exponent. It is 1 for an exponent greater than -128 and 0 for an exponent of -128. Because of this, $b_{23}$ does not have to be stored, so that, in effect, this standard representation has given us an additional bit of precision in the significand. When $b_{23}$ is not explicitly stored in memory but is determined from the exponent in this way, it is termed a hidden bit.

Floating-point representations can obviously be extended to handle negative numbers by putting the significand in, say, a two’s-complement representation or a signed-magnitude representation. For that matter, the exponent can also be represented in any of the various ways that include representation of negative numbers. Although it might seem natural to use a two’s-complement representation for both the significand and the exponent with the 6812, one would probably not do so, preferring instead to adopt one of the standard floating-point representations.

We now consider the essential elements of the proposed IEEE standard 32-bit floating-point representation. The numbers represented are also called single precision floating-point numbers, and we shall refer to them here simply as floating-point numbers. The format is shown below.

![tmp7-53_thumb[2] tmp7-53_thumb[2]](http://lh6.ggpht.com/_X6JnoL0U4BY/S095HVCd42I/AAAAAAAAGtU/ox_KWJuOtFk/tmp753_thumb2_thumb.png?imgmax=800)

In the drawing, s is the sign bit for the significand, and f represents the 23-bit fractional part of the significand magnitude with the hidden bit, as above, to the left of the binary point. The exponent is determined from e by a bias of 127, that is, an e of 127 represents an exponent of 0, an e of 129 represents an exponent of +2, an e of 120 represents an exponent of -7, and so on. The hidden bit is taken to be 1 unless e has the value 0. The floating-point numbers given by

![tmp7-54_thumb[1] tmp7-54_thumb[1]](http://lh4.ggpht.com/_X6JnoL0U4BY/S095Ixy9L1I/AAAAAAAAGtc/stsVuBSN9fc/tmp754_thumb1_thumb.png?imgmax=800)

are called normalized. (In the IEEE standard, an e of 255 is used to represent ±infinity together with values that are not to be interpreted as numbers but are used to signal the user that the calculation may no longer be valid.) The value of 0 for e is also used to represent denormalized floating-point numbers, namely,

$$(-1)^s \times 2^{-126} \times 0.f$$

Denormalized floating-point numbers allow the representation of small numbers with magnitudes between 0 and $2^{-126}$. In particular, notice that the exponent for the denormalized floating-point numbers is taken to be -126, rather than -127, so that the interval between 0 and $2^{-126}$ contains $2^{23} - 1$ uniformly spaced denormalized floating-point numbers.
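As a minimal sketch of how the fields s, e, and f determine a value, the following C function decodes a 32-bit pattern for both the normalized and the denormalized cases. The function names are hypothetical, the case e = 255 is deliberately left out, and `float_bits` assumes that the compiler's `float` uses this same 32-bit format.

```c
#include <stdint.h>
#include <string.h>

/* Exact power of two as a double, for the small range needed here. */
static double pow2(int n)
{
    double p = 1.0;
    while (n > 0) { p *= 2.0; n--; }
    while (n < 0) { p /= 2.0; n++; }
    return p;
}

/* Decode the fields s (1 bit), e (8 bits), f (23 bits).
   e == 255 (infinities and non-numbers) is not handled in this sketch. */
static double decode_single(uint32_t bits)
{
    uint32_t s = bits >> 31;
    uint32_t e = (bits >> 23) & 0xFFu;
    uint32_t f = bits & 0x7FFFFFu;
    double frac = (double)f / 8388608.0;      /* f scaled by 2^-23 */
    double value;

    if (e == 0)
        value = frac * pow2(-126);            /* hidden bit 0: 0.f */
    else
        value = (1.0 + frac) * pow2((int)e - 127);  /* hidden bit 1: 1.f */
    return s ? -value : value;
}

/* Reinterpret a C float as its bit pattern (assumes IEEE single precision). */
static uint32_t float_bits(float x)
{
    uint32_t u;
    memcpy(&u, &x, sizeof u);
    return u;
}
```

For instance, the pattern `0x40800000` has e = 129 and f = 0, so it decodes to $2^2 \times 1.0 = 4$, and the pattern `0x00000001` is the smallest denormalized number, $2^{-126} \times 2^{-23}$.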

Although the format above might seem a little strange, it turns out to be convenient because a comparison between normalized floating-point numbers is exactly the same as a comparison between 32-bit signed-magnitude integers represented by the string s, e, f. This means that a computer implementing signed-magnitude integer arithmetic will not have to have a separate 32-bit compare for integers and floating-point numbers. In larger machines with 32-bit words, this translates into a hardware savings, while in smaller machines, like the 6812, it means that only one subroutine has to be written instead of two if signed-magnitude arithmetic for integers is to be implemented.
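The comparison property can be illustrated with a short C sketch. The function name is hypothetical, and the routine simply treats each pattern s, e, f as a 32-bit signed-magnitude integer; treating +0 and -0 as equal is an assumption made here for convenience.

```c
#include <stdint.h>

/* Compare two patterns s,e,f as 32-bit signed-magnitude integers.
   Returns -1, 0, or +1. Plus zero and minus zero compare equal. */
static int compare_sign_magnitude(uint32_t a, uint32_t b)
{
    uint32_t mag_a = a & 0x7FFFFFFFu;
    uint32_t mag_b = b & 0x7FFFFFFFu;
    int neg_a = (int)(a >> 31);
    int neg_b = (int)(b >> 31);

    if (mag_a == 0 && mag_b == 0) return 0;      /* both are zero */
    if (neg_a != neg_b) return neg_a ? -1 : 1;   /* signs differ */
    if (mag_a == mag_b) return 0;
    if (neg_a)                                   /* both negative: order reverses */
        return (mag_a > mag_b) ? -1 : 1;
    return (mag_a > mag_b) ? 1 : -1;
}
```

Because the biased exponent sits above the fraction, a larger exponent always gives a larger magnitude field, which is why no floating-point-specific logic appears in the routine.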

We now look more closely at the ingredients that floating-point algorithms must have for addition, subtraction, multiplication, and division. For simplicity, we focus our attention on these operations when the inputs are normalized floating-point numbers and the result is expressed as a normalized floating-point number.

To add or subtract two floating-point numbers, one of the representations has to be adjusted so that the exponents are equal before the significands are added or subtracted. For accuracy, this unnormalization always is done to the number with the smaller exponent. For example, to add the two floating-point numbers

![tmp7-56_thumb[1] tmp7-56_thumb[1]](http://lh5.ggpht.com/_X6JnoL0U4BY/S095Ms3XJZI/AAAAAAAAGuM/sKT-vJEkQ98/tmp756_thumb1_thumb%5B1%5D.png?imgmax=800)

we first unnormalize the number with the smaller exponent and then add as shown.

![tmp7-57_thumb[1] tmp7-57_thumb[1]](http://lh3.ggpht.com/_X6JnoL0U4BY/S095rVPe_iI/AAAAAAAAGuY/1uSfR_e3XgU/tmp757_thumb1_thumb%5B1%5D.png?imgmax=800)

(For this example and all those that follow, we give the value of the exponent in decimal and the 24-bit magnitude of the significand in binary.) Sometimes, as in adding,

![tmp7-58_thumb[1] tmp7-58_thumb[1]](http://lh6.ggpht.com/_X6JnoL0U4BY/S095tibwRTI/AAAAAAAAGuk/LqjGLikMPu8/tmp758_thumb1_thumb%5B1%5D.png?imgmax=800)

the sum will have to be renormalized before it is used elsewhere. In this example

![]()

is the renormalization step. Notice that the unnormalization process consists of repeatedly shifting the magnitude of the significand right one bit and incrementing the exponent until the two exponents are equal. The renormalization process after addition or subtraction may also require several steps of shifting the magnitude of the significand left and decrementing the exponent. For example,

![tmp7-60_thumb[1] tmp7-60_thumb[1]](http://lh4.ggpht.com/_X6JnoL0U4BY/S095x9SJ5RI/AAAAAAAAGu0/BWuCDluCZrw/tmp760_thumb1_thumb%5B1%5D.png?imgmax=800)

requires three left shifts of the significand magnitude and three decrements of the exponent to get the normalized result:

![]()

With multiplication, the exponents are added and the significands are multiplied to get the product. For normalized numbers, the product of the significands is always less than 4, so that one renormalization step may be required. The step in this case consists of shifting the magnitude of the significand right one bit and incrementing the exponent. With division, the significands are divided and the exponents are subtracted. With normalized numbers, the quotient may require one renormalization step of shifting the magnitude of the significand left one bit and decrementing the exponent. This step is required only when the magnitude of the divisor significand is larger than the magnitude of the dividend significand. With multiplication or division it must be remembered also that the exponents are biased by 127 so that the sum or difference of the exponents must be rebiased to get the proper biased representation of the resulting exponent.
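The multiplication steps just described can be sketched in C as follows. The `unpacked` structure and function names are hypothetical; the significand is held as a 24-bit integer with the hidden bit restored, the result is truncated rather than rounded, and exponent overflow and underflow are not checked.

```c
#include <stdint.h>

/* Hypothetical unpacked form of a normalized number. */
typedef struct {
    int      sign;  /* 0 = plus, 1 = minus */
    int      e;     /* biased exponent */
    uint32_t sig;   /* 1.f scaled by 2^23: 0x800000 .. 0xFFFFFF */
} unpacked;

static unpacked make_unpacked(int sign, int e, uint32_t sig)
{
    unpacked u;
    u.sign = sign;
    u.e = e;
    u.sig = sig;
    return u;
}

/* Multiply two normalized numbers, truncating the product to 24 bits. */
static unpacked fp_mul_trunc(unpacked a, unpacked b)
{
    unpacked r;
    uint64_t p = (uint64_t)a.sig * b.sig;  /* 46 fractional bits, value in [1, 4) */

    r.sign = a.sign ^ b.sign;
    r.e = a.e + b.e - 127;        /* both exponents are biased: remove one bias */
    if (p >> 47) {                /* product of significands is 2 or more */
        p >>= 1;                  /* one renormalization step: shift right */
        r.e += 1;                 /* and increment the exponent */
    }
    r.sig = (uint32_t)(p >> 23);  /* keep the leading 24 bits */
    return r;
}
```

Multiplying 1.5 by 1.5 (both with e = 127 and significand `0xC00000`) gives a significand product of 2.25, so the renormalization step fires and the result is e = 128 with significand `0x900000`, that is, $2^1 \times 1.125$.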

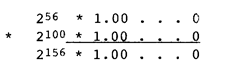

In all of the preceding examples, the calculations were exact in the sense that the operation between two normalized floating-point numbers yielded a normalized floating-point number. This will not always be the case, as we can get overflow, underflow, or a result that requires some type of rounding to get a normalized approximation to the result. For example, multiplying

yields a number that is too large to be represented in the 32-bit floating-point format. This is an example of overflow, a condition analogous to that encountered with integer arithmetic. Unlike integer arithmetic, however, underflow can occur, that is, we can get a result that is too small to be represented as a normalized floating-point number. For example,

![tmp7-63_thumb[1] tmp7-63_thumb[1]](http://lh5.ggpht.com/_X6JnoL0U4BY/S096CB3ht2I/AAAAAAAAGvU/QsUKY8D6Uc8/tmp763_thumb1_thumb%5B1%5D.png?imgmax=800)

yields a result that is too small to be represented as a normalized floating-point number with the 32-bit format.

The third situation is encountered when we obtain a result that is within the normalized floating-point range but is not exactly equal to one of the numbers (14). Before this result can be used further, it will have to be approximated by a normalized floating-point number. Consider the addition of the following two numbers.

![tmp7-64_thumb[1] tmp7-64_thumb[1]](http://lh6.ggpht.com/_X6JnoL0U4BY/S096DsNUf-I/AAAAAAAAGvc/JJYO64XE0-4/tmp764_thumb1_thumb%5B1%5D.png?imgmax=800)

The exact result is expressed with 25 bits in the fractional part of the significand so that we have to decide which of the possible normalized floating-point numbers will be chosen to approximate the result. Rounding toward plus infinity always takes the approximate result to be the next larger normalized number to the exact result, while rounding toward minus infinity always takes the next smaller normalized number to approximate the exact result. Truncation just throws away all the bits in the exact result beyond those used in the normalized significand. Truncation rounds toward plus infinity for negative results and rounds toward minus infinity for positive results. For this reason, truncation is also called rounding toward zero. For most applications, however, picking the closest normalized floating-point number to the actual result is preferred. This is called rounding to nearest. In the case of a tie, the normalized floating-point number with the least significant bit of 0 is taken to be the approximate result. Rounding to nearest is the default type of rounding for the IEEE floating-point standard. With rounding to nearest, the magnitude of the error in the approximate result is less than or equal to the magnitude of the exact result times $2^{-24}$.

One could also handle underflows in the same way that one handles rounding. For example, the result of the subtraction

![tmp7-65_thumb[1] tmp7-65_thumb[1]](http://lh4.ggpht.com/_X6JnoL0U4BY/S096I999OBI/AAAAAAAAGvk/CWUQWbGn5L0/tmp765_thumb1_thumb%5B1%5D.png?imgmax=800)

![tmp7-66_thumb[3] tmp7-66_thumb[3]](http://lh4.ggpht.com/_X6JnoL0U4BY/S096KHNwB2I/AAAAAAAAGvs/ijpzqyk3zMY/tmp766_thumb3_thumb%5B2%5D.png?imgmax=800)

could be put equal to $2^{-126} \times 1.0000$. More frequently, all underflow results are put equal to 0 regardless of the rounding method used for the other numbers. This is termed flushing to zero. The use of denormalized floating-point numbers appears natural here, as it allows for a gradual underflow as opposed to, say, flushing to zero. To see the advantage of using denormalized floating-point numbers, consider the computation of the expression (Y - X) + X. If Y - X underflows, X will always be the computed result if flushing to zero is used. On the other hand, the computed result will always be Y if denormalized floating-point numbers are used. The references mentioned at the end of the topic contain further discussions on the merits of using denormalized floating-point numbers. Implementing all of the arithmetic functions with normalized and denormalized floating-point numbers requires additional care, particularly with multiplication and division, to ensure that the computed result is the closest represented number, normalized or denormalized, to the exact result. It should be mentioned that the IEEE standard requires that a warning be given to the user when a denormalized result occurs. The motivation for this is that one is losing precision with denormalized floating-point numbers. For example, if Y - X underflows during the calculation of the expression (Y - X) * Z, the precision of the result may be doubtful even if (Y - X) * Z is a normalized floating-point number. Flushing to zero would, of course, always produce zero for this expression when (Y - X) underflows.
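The (Y - X) + X comparison can be tried out with a small C sketch. It assumes that the host's `float` is the IEEE single-precision format with denormalized numbers enabled, which is typical but not guaranteed; the function names are hypothetical, and flushing to zero is modeled in software rather than by any hardware mode.

```c
#include <float.h>

/* Model flushing to zero: any nonzero result smaller in magnitude than
   the smallest normalized number (FLT_MIN = 2^-126) is replaced by zero. */
static float flush(float v)
{
    return (v < FLT_MIN && v > -FLT_MIN) ? 0.0f : v;
}

/* Compute (y - x) + x, flushing every underflowed result to zero. */
static float with_flush(float x, float y)
{
    float d = flush(y - x);
    return flush(d + x);
}

/* Compute (y - x) + x, relying on the host's gradual underflow.
   volatile keeps the intermediate difference in single precision. */
static float with_gradual(float x, float y)
{
    volatile float d = y - x;
    return d + x;
}
```

Taking X = 1.5 × `FLT_MIN` and Y = 1.25 × `FLT_MIN`, the difference $-2^{-128}$ is too small to be normalized. The flushing version then returns X, while the gradual-underflow version returns Y.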

The process of rounding to nearest, hereafter just called rounding, is straightforward after multiplication. However, it is not so apparent what to do after addition, subtraction, or division. We consider addition/subtraction. Suppose, then, that we add the two numbers

![tmp7-67_thumb[1] tmp7-67_thumb[1]](http://lh4.ggpht.com/_X6JnoL0U4BY/S096Ln2CeJI/AAAAAAAAGv0/cvO14FaUwOg/tmp767_thumb1_thumb.png?imgmax=800)

(The enclosed bits are the bits beyond the 23 fractional bits of the significand.) The result, when rounded, yields $2^0 \times 1.0 \ldots 010$. By examining a number of cases, one can see that only three bits need to be kept in the unnormalization process, namely,

![tmp7-68_thumb[2] tmp7-68_thumb[2]](http://lh5.ggpht.com/_X6JnoL0U4BY/S096NJhBBiI/AAAAAAAAGv8/Sh9oWuxcCI8/tmp768_thumb2_thumb.png?imgmax=800)

where g is the guard bit, r is the round bit, and s is the sticky bit. When a bit b is shifted out of the significand in the unnormalization process,

![tmp7-69_thumb[1] tmp7-69_thumb[1]](http://lh6.ggpht.com/_X6JnoL0U4BY/S096TMOSm2I/AAAAAAAAGwE/XF3E26CPDDA/tmp769_thumb1_thumb.png?imgmax=800)

Notice that if s ever becomes equal to 1 in the unnormalization process, it stays equal to 1 thereafter or “sticks” to 1. With these three bits, rounding is accomplished by incrementing the result by 1 if

![tmp7-70_thumb[1] tmp7-70_thumb[1]](http://lh5.ggpht.com/_X6JnoL0U4BY/S096VFAhg9I/AAAAAAAAGwM/mSqfpgFxrSQ/tmp770_thumb1_thumb.png?imgmax=800)
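A minimal C sketch of the guard, round, and sticky bits follows. The update on each shift (b moves into g, g into r, and r is ORed into s) and the increment condition are written here as the usual round-to-nearest rule with ties going to an even least significant bit, consistent with the tie rule stated earlier; they are an assumption about the form of the rule, not a transcription of (16). All names are hypothetical.

```c
#include <stdint.h>

/* Hypothetical working register: 24-bit significand plus g, r, s. */
typedef struct {
    uint32_t sig;       /* 24-bit significand magnitude */
    unsigned g, r, s;   /* guard, round, sticky */
} sig_grs;

/* One unnormalization step: shift right one bit, tracking g, r, s. */
static sig_grs shift_right_sticky(sig_grs x)
{
    x.s = x.s | x.r;    /* once s is 1 it stays ("sticks") at 1 */
    x.r = x.g;
    x.g = x.sig & 1u;   /* bit b shifted out of the significand */
    x.sig >>= 1;
    return x;
}

/* Unnormalize by n places, starting with g = r = s = 0. */
static sig_grs unnormalize(uint32_t sig, int n)
{
    sig_grs x;
    x.sig = sig;
    x.g = x.r = x.s = 0;
    while (n-- > 0)
        x = shift_right_sticky(x);
    return x;
}

/* Round to nearest; on a tie choose the value whose LSB is 0.
   The result may be 0x1000000, which needs one renormalization step. */
static uint32_t round_nearest_even(sig_grs x)
{
    if (x.g && (x.r || x.s || (x.sig & 1u)))
        return x.sig + 1u;
    return x.sig;
}
```

For example, shifting `0x800003` right two places leaves `0x200000` with g = 1 and r = 1, so the value is above the halfway point and rounds up to `0x200001`; shifting `0x800002` the same way produces an exact tie, which stays at the even value `0x200000`.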

If adding the significands or rounding causes an overflow in the significand bits (only one of these can occur), a renormalization step is required. For example,

![tmp7-71_thumb[1] tmp7-71_thumb[1]](http://lh5.ggpht.com/_X6JnoL0U4BY/S096aU9oCrI/AAAAAAAAGwU/6pqSpo6HhfU/tmp771_thumb1_thumb.png?imgmax=800)

![tmp7-72_thumb[1] tmp7-72_thumb[1]](http://lh4.ggpht.com/_X6JnoL0U4BY/S096cbDHXBI/AAAAAAAAGwc/AtpESWE0HNQ/tmp772_thumb1_thumb.png?imgmax=800)

The rounding process for addition of numbers with opposite signs (e.g., subtraction) is exactly like that above except that the round byte must be included in the subtraction, and renormalization may be necessary after the significands are subtracted. In this renormalization step, several shifts left of the significand may be required where each shift requires a bit b for the least significant bit of the significand. It may be obtained from the round byte as shown below. (The sticky bit may also be replaced by zero in the process pictured without altering the final result. However, at least one round bit is required.) After renormalization, the rounding process is identical to (16). As an example,

![tmp7-73_thumb[1] tmp7-73_thumb[1]](http://lh6.ggpht.com/_X6JnoL0U4BY/S096ekoULiI/AAAAAAAAGwk/BHOFKVo5YzQ/tmp773_thumb1_thumb.png?imgmax=800)

which, after renormalization and rounding, becomes $2^{-1} \times$ 1.1 … 10 0. Subroutines for floating-point addition and multiplication are given in Hiware’s C and C++ libraries. To illustrate the principles without an undue amount of detail, the subroutines are given only for normalized floating-point numbers. Underflow is handled by flushing the result to zero and setting an underflow flag, and overflow is handled by setting an overflow flag and returning the largest possible magnitude with the correct sign. These subroutines conform to the IEEE standard but illustrate the basic algorithms, including rounding. The procedure for addition is summarized in Figure 7.20, where one should note that the significands are added as signed-magnitude numbers.

One other issue with floating-point numbers is conversion. For example, how does one convert the decimal floating-point number $3.45786 \times 10^4$ into a binary floating-point number with the IEEE format? One possibility is to have a table of binary floating-point numbers, one for each power of ten in the range of interest. One can then compute the expression

$$3 \times 10^4 + 4 \times 10^3 + 5 \times 10^2 + 7 \times 10^1 + 8 \times 10^0 + 6 \times 10^{-1}$$

using the floating-point add and floating-point multiply subroutines. One difficulty with this approach is that accuracy is lost because of the number of floating-point multiplies and adds that are used. For example, for eight decimal digits in the decimal significand, there are eight floating-point multiplies and seven floating-point adds used in the conversion process. To get around this, one could write $3.45786 \times 10^4$ as $.345786 \times 10^5$ and multiply the binary floating-point equivalent of $10^5$ (obtained again from a table) by the binary floating-point equivalent of .345786. This, of course, would take only one floating-point multiply and a conversion of the decimal fraction to a binary floating-point number.

1. Attach a zero round byte to each significand and unnormalize the number with the smaller exponent.

2. Add significands of operands (including the round byte).

3. If an overflow occurs in the significand bits, shift the bits for the magnitude and round byte right one bit and increment the exponent.

4. If all bits of the unrounded result are zero, put the sign of the result equal to + and the exponent of the result to the most negative value; otherwise, renormalize the result, if necessary, by shifting the bits of the magnitude and round byte left and decrementing the exponent for each shift.

5. If underflow occurs, flush the result to zero, and set the underflow flag; otherwise, round the result.

6. If overflow occurs, put the magnitude equal to the maximum value, and set the overflow flag.

Figure 7.20. Procedure for Floating-Point Addition
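The procedure of Figure 7.20 can be sketched in C for the restricted case of two positive normalized operands. This is an illustration, not the library routine: the names are hypothetical, the least significant bit of the round byte is used as a sticky bit (an assumption), and Steps 4 and 5's underflow handling are omitted because adding two positive normalized numbers can neither cancel to zero nor underflow.

```c
#include <stdint.h>

typedef struct {
    int      e;         /* biased exponent, 1..254 */
    uint32_t sig;       /* 24-bit significand with hidden bit restored */
    int      overflow;  /* 1 if the sum was too large to represent */
} fp_sum;

/* Add two positive normalized numbers given as (biased exponent, significand). */
static fp_sum fp_add_pos(int ea, uint32_t siga, int eb, uint32_t sigb)
{
    fp_sum r;
    uint64_t big, small, sum;
    uint32_t round_byte;
    int d;

    /* Step 1: attach a zero round byte; unnormalize the smaller operand. */
    if (ea < eb) {
        int te = ea; uint32_t ts = siga;
        ea = eb; siga = sigb;
        eb = te; sigb = ts;
    }
    big   = (uint64_t)siga << 8;
    small = (uint64_t)sigb << 8;
    for (d = ea - eb; d > 0; d--)
        small = (small >> 1) | (small & 1u);   /* lowest bit acts as sticky */

    /* Step 2: add significands, including the round byte. */
    sum = big + small;
    r.e = ea;
    r.overflow = 0;

    /* Step 3: on overflow of the significand bits, shift right once. */
    if (sum >> 32) {
        sum = (sum >> 1) | (sum & 1u);
        r.e++;
    }

    /* Step 5: round to nearest, ties to an even least significant bit. */
    round_byte = (uint32_t)(sum & 0xFFu);
    r.sig = (uint32_t)(sum >> 8);
    if (round_byte > 0x80u || (round_byte == 0x80u && (r.sig & 1u)))
        r.sig++;
    if (r.sig >> 24) {           /* rounding overflowed the significand */
        r.sig >>= 1;
        r.e++;
    }

    /* Step 6: on exponent overflow, return the maximum magnitude. */
    if (r.e > 254) {
        r.e = 254;
        r.sig = 0xFFFFFFu;
        r.overflow = 1;
    }
    return r;
}
```

Adding 1.0 (e = 127, significand `0x800000`) to $2^{-24}$ (e = 103) leaves a round byte of exactly `0x80`, a tie, so the result stays at 1.0; adding the same small number to $1 + 2^{-23}$ rounds up to the even neighbor `0x800002`.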

Converting the decimal fraction into a binary floating-point number can be carried out in two steps.

1. Convert the decimal fraction to a binary fraction.

2. Convert the binary fraction to a binary floating-point number.

Step 2 is straightforward, so we concentrate our discussion on Step 1, converting a decimal fraction to a binary fraction.

Converting fractions between different bases presents a difficulty not found when integers are converted between different bases. For example, if

![tmp7-75_thumb[1] tmp7-75_thumb[1]](http://lh3.ggpht.com/_X6JnoL0U4BY/S096hni9b_I/AAAAAAAAGw0/Wjbp27pMc9g/tmp775_thumb1_thumb.png?imgmax=800)

that is, $b_i$ is not equal to 0 for infinitely many values of i. (As an example of this, expand the decimal fraction 0.1 into a binary one.) Rather than trying to draw analogies to the conversion of integer representations, it is simpler to notice that multiplying the right-hand side of (18) by s yields $b_1$ as the integer part of the result, multiplying the resulting fractional part by s yields $b_2$ as the integer part, and so forth. We illustrate the technique with an example.

Suppose that we want to convert the decimal fraction .345786 into a binary fraction so that

$$.345786 = b_1 2^{-1} + b_2 2^{-2} + b_3 2^{-3} + \cdots$$

Then $b_1$ is the integer part of 2 * (.345786), $b_2$ is the integer part of 2 times the fractional part of the first multiplication, and so on for the remaining binary digits. More often than not, this conversion process from a decimal fraction to a binary one does not terminate after a fixed number of bits, so that some type of rounding must be done. Furthermore, assuming that the leading digit of the decimal fraction is nonzero, as many as three leading bits in the binary fraction may be zero. Thus, if one is using this step to convert to a binary floating-point number, probably 24 bits after the leading zeros should be generated with the 24th bit rounded appropriately. Notice that the multiplication by 2 in this conversion process is carried out in decimal so that BCD arithmetic with the DAA instruction is appropriate here much like the CVBTD subroutine of Figure 7.9.
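The repeated multiplication by 2 can be sketched in C as follows. Instead of BCD arithmetic, this illustration holds the decimal fraction as an integer numerator over a power-of-ten scale (for example, 345786 over 1000000), which is an assumption made for brevity; the function name is hypothetical and no rounding of the last bit is attempted.

```c
#include <stdint.h>

/* Return the first nbits (at most 32) bits b1 b2 ... of the binary
   expansion of the fraction digits/scale, with b1 as the most
   significant of the returned bits. Requires digits < scale <= 10^9. */
static uint32_t dec_frac_to_bin(uint32_t digits, uint32_t scale, int nbits)
{
    uint32_t bits = 0;
    int i;

    for (i = 0; i < nbits; i++) {
        digits *= 2u;              /* multiply the fraction by 2 */
        bits <<= 1;
        if (digits >= scale) {     /* the integer part of the product is 1 */
            bits |= 1u;
            digits -= scale;       /* keep only the fractional part */
        }
    }
    return bits;
}
```

The call `dec_frac_to_bin(345786, 1000000, 8)` yields the bits 01011000, and `dec_frac_to_bin(1, 10, 8)` yields 00011001, the start of the nonterminating expansion of 0.1 with its three leading zeros.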

The conversion of a binary floating-point number to a decimal floating-point number is a straightforward variation of the process above and is left as an exercise.

This section covered the essentials of floating-point representations, arithmetic operations on floating-point numbers, including rounding, overflow, and underflow, and the conversion between decimal floating-point numbers and binary floating-point numbers. Hiware’s C and C++ libraries illustrate subroutines for adding, subtracting, and multiplying single-precision floating-point numbers. This section and these libraries should make it easy for you to use floating-point numbers in your assembly language programs whenever you need their power.

Floating-Point Arithmetic and Conversion (Microcontrollers)
