abstract

Support vector machines and kernel methods are increasingly popular in genomics and computational biology due to their good performance in real-world applications and strong modularity that makes them suitable to a wide range of problems, from the classification of tumors to the automatic annotation of proteins. Their ability to work in a high dimension and process nonvectorial data, and the natural framework they provide to integrate heterogeneous data are particularly relevant to various problems arising in computational biology. In this topic, we survey some of the most prominent applications published so far, highlighting the particular developments in kernel methods triggered by problems in biology, and mention a few promising research directions likely to expand in the future.

introduction

Recent years have witnessed a dramatic evolution in many fields of life science with the apparition and rapid spread of so-called high-throughput technologies, which generate huge amounts of data to characterize various aspects of biological samples or phenomena. To name just a few, DNA sequencing technologies have already provided the whole genome of several hundreds of species, including the human genome (International Human Genome Sequencing Consortium, 2001; Venter, 2001). DNA microarrays (Schena, Shalon, Davis, & Brown, 1995), that allow the monitoring of the expression level of tens of thousands of transcripts simultaneously, opened the door to functional genomics, the elucidation of the functions of the genes found in the genomes (DeRisi, Iyer, & Brown, 1997). Recent advances in ionization technology have boosted large-scale capabilities in mass spectrometry and the rapidly growing field of proteomics, focusing on the systematic, large-scale analysis of proteins (Aebersold & Mann, 2003). As biology suddenly entered this new era characterized by the relatively cheap and easy generation of huge amounts of data, the urgent need for efficient methods to represent, store, process, analyze, and finally make sense out of these data triggered the parallel development of numerous data-analysis algorithms in computational biology. Among them, kernel methods in general and support vector machines (SVMs) in particular have quickly gained popularity for problems involving the classification and analysis of high-dimensional or complex data. Half a decade after the first pioneering papers (Haussler, 1999; T. S. Jaakkola, Diekhans, & Haussler, 1999; Mukherjee, Tamayo, Mesirov, Slonim, Verri, & Poggio, 1998), these methods have been applied to a variety of problems in computational biology, with more than 100 research papers published in 2004 alone. The main reasons behind this fast development involve, beyond the generally good performances of SVM on real-world problems and the ease of use provided by current implementations, (a) the particular capability of SVM to resist high-dimensional and noisy data, typically produced by various high-throughput technologies, (b) the possibility to model linear as well as nonlinear relationships between variables of interest, and (c) the possibility to process nonvectorial data, such as biological sequences, protein structures, or gene networks, and to easily fuse heterogeneous data thanks to the use of kernels. More than a mere application of well-established methods to new data sets, the use of kernel methods in computational biology has been accompanied by new developments to match the specificities and the needs of the field, such as methods for feature selection in combination with the classification of high-dimensional data, the invention of string kernels to process biological sequences, or the development of methods to learn from several kernels simultaneously. In order to illustrate some of the most prominent applications of kernel methods in computational biology and the specific developments they triggered, this topic focuses on selected applications related to the manipulation of high-dimensional data, the classification of biological sequences, and a few less developed but promising applications. This topic is therefore not intended to be an exhaustive survey, but rather to illustrate with some examples why and how kernel methods have invaded the field of computational biology so rapidly.

classification of high-dimensional data

Several recent technologies, such as DNA micro-arrays, mass spectrometry, or various miniaturized assays, provide thousands of quantitative parameters to characterize biological samples or phenomena. Mathematically speaking, the results of such experiments can be represented by high-dimensional vectors, and many applications involve the supervised classification of such data. Classifying data in high dimension with a limited number of training examples is a challenging task that most statistical procedures have difficulties in dealing with, due in particular to the risk of overfit-ting the training data. The theoretical foundations of SVM and related methods, however, suggest that their use of regularization allows them to better resist to the curse of dimension than other methods. SVMs were therefore naturally tested on a variety of data sets involving the classification of high-dimensional data, in particular, for the analysis of tumor samples from gene expression data, and novel algorithms were developed in the framework of kernel methods to select a few relevant features for a given high-dimensional classification problem.

Tumor Classification from Gene Expression Data

The early detection of cancer and prediction of cancer types from gene expression data have been among the first applications of kernel methods in computational biology (Furey, Cristianini, Duffy, Bednarski, Schummer, & Haussler, 2000; Mukherjee et al., 1998) and remain prominent. These applications indeed have potentially important impacts on the treatment of cancers, providing clinicians with objective and possibly highly accurate information to choose the most appropriate form of treatment. In this context, SVMs were widely applied and compared with other algorithms for the supervised classification of tumor samples from expression data of typically several thousands of genes for each tumor. Examples include the discrimination between acute myeloid and acute lymphoblastic leukemia (Mukherjee et al.); colon cancer and normal colon tissues (Moler, Chow, & Mian, 2000); normal ovarian, normal nonovarian, and ovarian cancer tissues (Furey et al.); melanoma; soft-tissue sarcoma and clear-cell sarcoma (Segal, Pavlidis, Noble, et al., 2003); different types of soft-tissue sarcomas (Segal, Pavlidis, Antonescu, et al., 2003); and normal and gastric tumor tissues (Meireles et al., 2003), to namejust a few. Another typical application is the prediction of the future evolution of a tumor, such as the discrimination between relapsing and nonrelapsing Wilms tumors (Williams et al., 2004), the prediction of metastatic or nonmetastatic squamous cell carcinoma of the oral cavity (O’Donnell et al., 2005), or the discrimination between diffuse large B-cell lymphoma with positive or negative treatment outcome (Shipp et al., 2002).

The SVMs used in these studies are usually linear hard-margin SVMs, or linear soft-margin

SVMs with a default C parameter value. Concerning the choice of the kernel, several studies observe that nonlinear kernels tend to decrease performance (Ben-Dor, Bruhn, Friedman, Nach-man, Schummer, & Yakhini, 2000; Valentini, 2002) compared to the simplest linear kernel. In spite of contradictory opinions (Pochet, De Smet, Suykens, & De Moor, 2004), this is coherent with the intuition that the complexity of learning nonlinear functions in very high dimension does not play in their favor. On the other hand, the choice of hard-margin SVM, sometimes advocated as a default method when data are linearly separable, is certainly worth questioning in more detail. Indeed, the theoretical foundations of SVM suggest that in order to learn in high dimension, one should rather increase the importance of regularization as opposed to fitting the data, which corresponds to decreasing the C parameter of the soft-margin formulation. A few recent papers highlight indeed the fact that the choice of C has an important effect on the generalization performance of SVM for the classification of gene expression data (Huang & Kecman, 2005).

A general conclusion of these numerous studies is that SVMs generally provide good classification accuracy in spite of the large dimension of the data. For example, in a comparative study of several algorithms for multiclass supervised classification, including naive Bayes, ^-nearest neighbors, and decision trees, Li, Zhang, and Ogihara (2004, p. 2434) note that ” [SVMs] achieve better performance than any other classifiers on almost all the datasets.” However, it is fair to mention that other studies conclude that most algorithms that take into account the problem of large dimension either through regularization or through feature selection reach roughly similar accuracy on most data sets (Ben-Dor et al.). From a practical point of view, the use of the simplest linear kernel and the soft-margin formulation of SVM seems to be a reasonable default strategy for this application.

Feature selection

In the classification of microarray data, it is often important, for classification performance, biomarker identification, and the interpretation of results, to select only a few discriminative genes among the thousands of candidates available on a typical microarray. While the literature on feature selection is older and goes beyond the field of kernel methods, several interesting developments with kernel methods have been proposed in the recent years, explicitly motivated by the problem of gene selection from microarray data.

For example, Su, Murali, Pavlovic, Schaf-fer, and Kasif (2003) propose to evaluate the predictive power of each single gene for a given classification task by the value of the functional minimized by a one-dimensional SVM, trained to classify samples from the expression of only the single gene of interest. This criterion can then be used to rank genes and select only a few with important predictive power. This procedure therefore belongs to the so-called filter approach to feature selection, where a criterion (here using SVM) to measure the relevance of each feature is defined, and only relevant features according to this criterion are kept.

A second general strategy for feature selection is the so-called wrapper approach, where feature selection alternates with the training of a classifier. The now widely used recursive feature elimination (RFE) procedure of Guyon, Weston, Bamhill, and Vapnik (2002), which iteratively selects smaller and smaller sets of genes and trains SVMs, follows this strategy. RFE can only be applied with linear SVMs, which is nevertheless not a limitation as long as many features remain, and works as follows. Starting from the full set of genes, a linear SVM is trained and the genes with the smallest weights in the resulting linear discrimination function are eliminated. The procedure is then repeated iteratively starting from the set of remaining genes and stops when a desired number of genes is reached.

Finally, a third strategy for feature selection, called the embedded approach, combines the learning of a classifier and the selection of features in a single step. A kernel method following this strategy has been implemented in the joint classifier and feature optimization (JCFO) procedure of Krishnapuram, Carin, and Hartemink (2004). JCFO is, roughly speaking, a variant of SVM with a Bayesian formulation, in which sparseness is obtained both for the features and the classifier expansion in terms of a kernel by appropriate choices of prior probabilities. The precise description of the complete procedure to train this algorithm, involving an expectation-maximization (EM) iteration, would go beyond the scope of this topic, and the interested reader is referred to the original publication for further practical details.

Generally speaking, and in spite of these efforts to develop clever algorithms, the effect of feature selection on the classification accuracy of SVM is still debated. Although very good results are sometimes reported, for example, for the JCFO procedure (Krishnapuram et al., 2004), several studies conclude that feature selection, for example, with procedures like RFE, do not actually improve the accuracy of SVM trained on all genes (Ambroise & McLachlan, 2002; Ramaswamy et al., 2001). The relevance of feature-selection algorithms for gene expression data is therefore currently still a research topic that practitioners should test and assess case by case.

other High-Dimensional Data in computational Biology

While early applications of kernel methods to high-dimensional data in genomics and bioinformatics mainly focused on gene expression data, a number of other applications have flourished more recently, some being likely to expand quickly as major applications of machine learning algorithms. For example, studies focusing on tissue classification from data obtained by other technologies, such as methylation assays to monitor the patterns of cytosine methylation in the upstream regions of genes (Model, Adorjan, Olek, & Piepenbrock, 2001), or array comparative genomic hybridization (CGH) to measure gene copy number changes in hundreds of genes simultaneously (Aliferis, Hardin, & Massion, 2002), are starting to accumulate. A huge field of application that barely caught the interest of the machine learning community is proteomics, that is, the quantitative study of the protein content of cells and tissues. Technologies such as tandem mass spectrometry to monitor the protein content (proteins or characteristic fragments of proteins) of a biological sample are now well developed, and the classification of tissues from these data is a future potential application of SVM (Wagner, Naik, & Pothen, 2003; Wu et al., 2003). Applications in toxicogenomics (Steiner et al., 2004), chemogenomics (Bao & Sun, 2002; Bock & Gough, 2002), and the analysis of single-nucleotide polymorphisms (Listgarten et al., 2004; Yoon, Song, Hong, & Kim, 2003) are also promising applications for which the capacity of SVM to classify high-dimensional data has only started to be exploited.

sequence classification

The various genome sequencing projects have produced huge amounts of sequence data that need to be analyzed. In particular, the urgent need for methods to automatically process, segment, annotate, and classify various sequence data has triggered the fast development of numerous algorithms for strings. In this context, the possibility offered by kernel methods to process any type of data as soon as a kernel for the data to be processed is available has been quickly exploited to offer the power of state-of-the-art machine learning algorithms to sequence processing.

Problems that arise in computational biology consist of processing either sets of sequences of a fixed length, or sets of sequences with variable lengths. From a technical point of view, the two problems differ significantly: While there are natural ways to encode fixed-length sequences as fixed-length vectors, making them amenable to processing by most learning algorithms, manipulating variable-length sequences is less obvious. In both cases, many successful applications of SVM have been reported, combining ingenious developments of string kernels, sometimes specifically adapted to a given classification task, with the power of SVM.

Kernels for Fixed-Length sequences

Problems involving the classification of fixed-length sequences appear typically when one wants to predict a property along a sequence, such as the local structure or solvent accessibility along a protein sequence. In that case, indeed, a common approach is to use a moving window, that is, to predict the property at each position independently from the others, and to base the prediction only on the nearby sequence contained in a small window around the site of interest. More formally, this requires the construction of predictive models that take a sequence of fixed length as input to predict the property of interest, the length of the sequences being exactly the width of the window.

To fix notations, let us denote by p the common length ofthe sequences, and by a typical sequence, where each x is a letter from the alphabet, for example, an amino acid. The most natural way to transform such a sequence into a vector of fixed length is to first encode each letter itself into a vector of fixed length , and then to concatenate the codes of the successive letters to obtain a vector of size n =pl for the whole sequence. A simple code for letters is the following so-called sparse encoding: The size of the alphabet is denoted by a, and the i the letter of the alphabet is encoded as a vector of dimension a containing only zeros, except for the i the dimension that is set to l. For example, in the case of nucleotide sequences with alphabet (A,C,G,T), the codes for A, C, G, and T would respectively be (1,0,0,0), (0,1,0,0), (0,0,1,0), and (0,0,0,1), while the code for the sequence of length 3 AGT would be (1,0,0,0,0,0,1,0,0,0,0,1). Several more evolved codes for single letters have also been proposed. For example, if one has a prior matrix of pairwise similarities between letters, such as widely used similarity matrices between amino acids, it is possible to replace the 0/1 sparse encoding of a given letter by the vector of similarity with other letters; hence, the A in the previous example could, for instance, be represented by the vector (1,0,0.5,0) to emphasize one’s belief that A and G share some similarity. This is particularly relevant for biological sequences where mutations of single letters to similar letters are very common. Alternatively, instead of using a prior matrix of similarity, one can automatically align the sequence of interest to similar sequences in a large sequence database, and encode each position by the frequency of each letter in the alignment. As a trivial example, if our previous sequence AGT was found to be aligned to the following sequences, AGA, AGC, CGT, and ATT, then it could be encoded by the vector (0.8,0.2,0,0,0,0,0.8,0.2,0.2,0.2,0,0.6), corresponding to the respective frequencies of each letter at each position.

In terms of a kernel, it is easy to see that the inner product between sparsely encoded sequences is the number of positions with identical letters. In this representation, any linear classifier, such as that learned by a linear SVM, associates a weight to each feature, that is, to each letter at each position, and the score of a sequence is the sum of the scores of its letters. Such a classifier is usually referred to as a position-specific score matrix in bioinformatics. Similar interpretations can be given for other letter encodings. An interesting extension of these linear kernels for sequences is to raise them to some small power d in that case, the dimension of the feature space used by kernel methods increases, and the new features correspond to all products of d original features. This is particularly appealing for sparse encoding because a product of d binary factors is a binary variable equal to 1 if and only if all factors are 1, meaning that the features created by the sparse encoding to the power d exactly indicate the simultaneous presence of up to d particular letters at d particular positions. The trick to take a linear kernel to some power is therefore a convenient way to create a classifier for problems that involve the presence of several particular letters at particular positions.

A first limitation of these kernels is that they do not contain any information about the order of the letters: They are, for example, left unchanged if the letters in all sequences are shuffled according to any given permutation. Several attempts to include ordering information have been proposed. For example, Ratsch, Sonnenburg, and Scholkopf (2005) replace the local encoding of single letters by a local encoding of several consecutive letters; Zien, Ratsch, Mika, Scholkopf, Lengauer, and Muller (2000) propose an ingenious variant to the polynomial kernel in order to restrict the feature space to products of features at nearby positions only.

A second limitation of these kernels is that the comparison of two sequences only involves the comparison of features at identical positions. This can be problematic in the case of biological sequences where the insertion of deletions of letters is common, resulting in possible shifts within a window. This problem led Meinicke, Tech, Morgenstem, and Merkl (2004) to propose a kernel that incorporates a comparison of features at nearby positions using the following trick: If a feature f (e.g., binary or continuous) appears at position i in the first sequence, and a feature g appears at position j in the second sequence, then the kernel between the two sequences is increased by , where is a basic kernel between the features f and g such as the simple product, and c is a parameter that controls the range at which features are compared. When c is chosen very large, then one recovers the classical kernels obtained by comparing only identical positions(i =j); the important point here is that for smaller values of c, features can contribute positively even though they might be located at different positions on the sequences.

The applications of kernels for fixed-length sequences to solve problems in computational biology are already numerous. For example, they have been widely used to predict local properties along protein sequences using a moving window, such as secondary structure (Guermeur, Lifschitz, & Vert, 2004; Hua & Sun, 2001a), disulfide bridges involving cysteines (Chen, in, Lin, & Hwang, 2004; Passerini & Frasconi, 2004), phosphoryla-tion sites (Kim, Lee, oh, Kimm, & Koh, 2004), interface residues (Res, Mihalek, & Lichtarge, 2005; Yan, Dobbs, & Honavar, 2004), and solvent accessibility (Yuan, Burrage, & Mattick, 2002). Another important field of application is the annotation of DNA using fixed-length windows centered on a candidate point of interest as an input to a classifier to detect translation initiation sites (Meinicke et al., 2004; Zien et al., 2000), splice sites (Degroeve, Saeys, De Baets, Rouze, & Van de Peer, 2005; Ratsch et al., 2005), or binding sites of transcription factors (O’Flanagan, Paillard, Lav-ery, & Sengupta, 2005; Sharan & Myers, 2005). The recent interest in short RNA such as antisense oligonucleotides or small interfering RNAs for the sequence-specific knockdown of messenger RNAs has also resulted in several works involving the classification of such sequences, which typically have a fixed length by nature (Camps-Valls, Chalk, Serrano-Lopez, Martin-Guerrero, & Sonnhammer, 2004; Teramoto, Aoki, Kimura, & Kanaoka, 2005). Another important application field for these methods is immunoinformatics, including the prediction of peptides that can elicit an immune response (Bhasin & Raghava, 2004; Donnes & Elofsson, 2002), or the classification of immunoglobulins collected from ill patients (Yu, Zavaljevski, Stevens, Yackovich, & Reif-man, 2005; Zavaljevski, Stevens, & Reifman, 2002). In most of these applications, SVM lead to comparable if not better prediction accuracy than competing state-of-the-art methods such as neural networks.

Kernels for variable-Length sequences

Many problems in computational biology involve sequences of different lengths. For example, the automatic functional or structural annotation of genes found in sequenced genomes requires the processing of amino-acid sequences with no fixed length. Learning from variable-length sequences is a more challenging problem than learning from fixed-length sequences because there is no natural way to transform a variable-length string into a vector. For kernel methods, this issue boils down to the problem of defining kernels for variable-length strings, a topic that has deserved a lot of attention in the last few years and has given rise to a variety of ingenious solutions. As summarized in Table 1, three main approaches have been followed in the process of kernel design from strings: (a) computing an inner product in an explicitly defined feature space, (b) deriving a kernel from probabilistic models on strings, and (c) adapting widely used measures of similarity. These general strategies, surveyed in more detail in this section, are relevant beyond the specific problem of designing kernels for biological sequences. For example, we point out below strong similarities between some of these kernels and kernels developed for speech-recognition applications (surveyed in more detail in the topics by Picone, Ganapathiraju, and Hamaker; and Wan).

The most common approach to make a kernel for strings, as for many other types of data, is to design explicitly a set of numerical features that can be extracted from strings, and then to form a kernel as a dot product between the resulting feature vectors. As an example, Leslie, Eskin, and Noble (2002) represent a sequence by the vector of counts of occurrences of all possible k-mers in the sequence for a given integer k, effectively resulting in a vector of dimension ak, where a is the size of the alphabet. As an example, the sequence AACGTCACGAA over the alphabet (A,C,G,T) is represented by the 16-dimensional vector (2,2,0,0,1,0,2,0,1,0,0,1,0,1,0,0) for k = 2; here, the features are the counts of occurrences of each 2-mer AA, AC, …, TG, TT lexicographically ordered. The resulting spectrum kernel between this sequence and the sequence ACGAAA, defined as the linear product between the two 16-dimensional representation vectors, is equal to 9. It should be noted that although the number of possible k-mers easily reaches the order of several thousands as soon as k is equal to 3 or 4, the classification of sequences by SVM in this high-dimensional space results in fairly good results. A major advantage of the spectrum kernel is its fast computation; indeed, the set of k-mers appearing in a given sequence can be indexed in linear time in a tree structure, and the inner product between two vectors is linear with respect to the nonzero coordinates, that is, at most linear in the total lengths of the sequences. Several variants to the basic spectrum kernel have also been proposed, including, for example, kernels based on counts of k-mers appearing with up to a few mismatches in the sequences (Leslie, Eskin, Cohen, Weston, & Noble, 2004).

Table 1. A typology ofkernels for variable-length biological sequences

|

Strategy |

Example |

|

Define a (possibly high-dimensional) feature space of interest |

- Physico-chemical kernels (Wang et al., 2004 ; Zhang et al., 2003a) - Spectrum, mismatch kernels (Leslie et al., 2002, 2004) - Pairwise, motif kernels (Logan et al., 2001; Liao & Noble, 2003; Ben-Hur & Brutlag, 2003) |

|

Derive a kernel from a generative model |

- Fisher kernel (Jaakkola et al., 2000) - Pair HMM kernel (Watkins, 2000 ; Haussler, 1999) - Mutual information kernels (Cuturi & Vert, 2005) - Marginalized kernels (Tsuda et al., 2002; Kin et al., 2002; Kashima et al., 2004 ; Vert et al., 2006) |

|

Derive a kernel from a measure of similarity |

- Local alignment kernels (Saigo et al., 2004 ; Vert et al., 2004) |

Another natural approach to represent variable-length strings by fixed-length numerical vectors is to replace each letter by one or several numerical features, such as physicochemical properties of amino acids, and then to extract features from the resulting variable-length numerical time series using classical signal processing techniques such as Fourier transforms (Wang, Yang, Liu, Xu, & Chou, 2004) or autocorrelation analysis (S.-W. Zhang, Pan, Zhang, Zhang, & Wang, 2003). For example, if denote P numerical features associated with the successive letters of a sequence of length P, then the autocorrelation function r.for a given j > 0 is defined by:

One can then keep a fixed number of these coefficients, for example , and create an n-dimensional vector to represent each sequence.

Finally, another popular approach to design features and therefore kernels for biological sequences is to “project” them onto a fixed dictionary of sequences or motifs using classical similarity measures, and to use the resulting vector of similarities as a feature vector. For example, Logan, Moreno, Suzek, Weng, and Kasif (2001) represent each sequence by a 10,000-dimensional vector indicating the presence of 10,000 motifs of the BLOCKS database; similarly, Ben-Hur and Brutlag (2003) use a vector that indicates the presence or absence of about 500,000 motifs in the eMOTIF database, requiring the use of a tree structure to compute efficiently the kernel without explicitly storing the 500,000 features. Liao and Noble (2003) represent each sequence by a vector of sequence similarities with a fixed set of sequences.

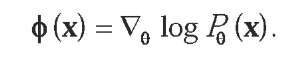

A second general strategy that has been followed for kernel design is to derive kernel functions from probabilistic models. This strategy has been particularly motivated by the fact that, before the interest in string kernels grew, a number of ingenious probabilistic models had been defined to represent biological sequences or families of sequences, including, for example, Markov and hidden Markov models for protein sequences, and stochastic context-free grammars for RNA sequences (Durbin, Eddy, Krogh, & Mitchison, 1998). Several authors have therefore explored the possibility to use such models to make kernels, starting with the seminal work of T. Jaakkola, Diekhans, and Haussler (2000) that introduced the Fisher kernel. The Fisher kernel is a general method to extract a fixed number of features from any data x for which a parametric probabilistic model P0 is defined. Here, 0 represents a continuous n-dimensional vector of parameters for the probabilistic model, such as transition and emission probabilities for a hidden Markov model, and each P0 is a probability distribution. Once a particular parameter 90 is chosen to fit a given set of objects, for example, by maximum likelihood, then an n-dimensional feature vector for each individual object x can be extracted by taking the gradient in the parameter space of the log likelihood of the point:

The intuitive interpretation of this feature vector, usually referred to as the Fisher score in statistics, is that it represents how changes in the n parameters affect the likelihood of the point x. In other words, one feature is extracted for each parameter of the model; the particularities of the data point are seen from the eyes of the parameters of the probabilistic model. The Fisher kernel is then obtained as the dot product of these n-dimensional vectors, eventually multiplied by the inverse of the Fisher information matrix to render it independent of the parametrization of the model.

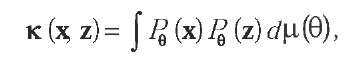

A second line of thought to make a kernel out of a parametric probabilistic model is to use the concept of mutual information kernels (Seeger, 2002), that is, kernels of the form:

where do is a prior distribution on the parameter space. Here, the features correspond to the likelihood of the objects under all distributions of the probabilistic model; objects are considered similar when they have large likelihood under similar distributions. An important difference between the kernels seen so far is that here, no explicit extraction of finite-dimensional vectors can be performed. Hence, for practical applications, one must chose probabilistic models that allow the computation of the integral above. This was carried out by Cuturi and Vert (2005) who present a family of variable-length Markov models for strings and an algorithm to perform the exact integral over parameters and models in the same time, resulting in a string kernel with linear complexity in time and memory with respect to the total length of the sequences.

Alternatively, many probabilistic models for biological sequences, such as hidden Markov models, involve a hidden variable that is marginalized over to obtain the probability of a sequence, which be written as:

For such distributions, Tsuda, Kin, and Asai (2002) introduced the notion of a marginalized kernel, obtained by marginalizing a kernel for the complete variable over the hidden variable. More precisely, assuming that a kernel k0 for objects of the form (x,h) is defined, the marginalized kernel for observed objects X is given by:

In order to motivate this definition with a simple example, let us consider a hidden Markov model with two possible hidden states to model sequences with two possible regimes, such as introns and exons in eukaryotic genes. In that case, the hidden variable corresponding to a sequence x of length P is a binary sequence h of length P describing the states along the sequence. For two sequences x1 and x2, if the correct hidden states h1 and h2 were known, such as the correct decomposition into introns and exons, then it would make sense to define a kernel taking into account the specific decomposition of the sequences into two regimes; for example, the kernel for complete data could be a spectrum kernel restricted to the exons, that is, to positions with a particular state. Because the actual hidden states are not known in practice, the marginalization over the hidden state of this kernel using an adequate probabilistic model can be interpreted as an attempt to apply the kernel for complete data by guessing the hidden variables. Similar to the mutual information kernel, marginalized kernels can often not be expressed as inner products between feature vectors, and they require computational tricks to be computed. Several beautiful examples of such kernels for various probabilistic models have been worked out, including hidden Markov models for sequences (Tsuda et al.; Vert, Thurman, & Noble, 2006), stochastic context-free grammars for RNA sequences (Kin, Tsuda, & Asai, 2002), and random walk models on graphs for molecular structures (Kashima, Tsuda, & Inokuchi, 2004).

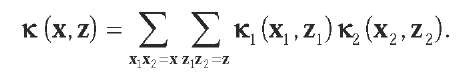

Following a different line of thought, Haussler (1999) introduced the concept of convolution kernels for objects that can be decomposed into subparts, such as sequences or trees. For example, the concatenation of two strings x1 and x2 results in another string x = x1 x2. If two initial string kernels K1and k2 are chosen, then a new string kernel is obtained by the convolution of the initial kernels following the equation:

Here, the sum is over all possible decompositions of x and z into two concatenated subsequences. The rationale behind this approach is that it allows the combination of different kernels adapted to different parts of the sequences, such as introns and exons or gaps and aligned residues in alignment, without knowing the exact segmentation of the sequences. Besides proving that the convolution of two kernels is a valid kernel, Haussler (1999) gives several examples of convolution kernels relevant for biological sequences; for example, he shows that the joint probability P(x,z) of two sequences under a pair hidden Markov model (HMM) is a valid kernel under mild assumptions, a property that was also proved independently by Watkins (2000).

Finally, a third general strategy to design kernels for strings is to start from classical measures of similarity between strings and turn them into kernels. In the case of biological sequences, for example, a scheme known as Smith-Waterman local alignment score (Smith & Waterman, 1981) is widely used to compare sequences. Saigo, Vert, Ueda, and Akutsu (2004) show that a slight modification of this measure of similarity is a valid kernel, called the local alignment kernel. In fact, the proof of the positive definiteness of the new kernels builds on the study of convolution kernels presented above (Vert, Saigo, & Akutsu, 2004). Interestingly, the Smith-Waterman alignment score between biological sequences optimizes an alignment between sequences by dynamic programming, just like dynamic time warping (DTW) algorithms compare variable-length sequences in speech recognition. DTW kernels that violate the positive semidefiniteness assumption have been proposed for speaker-recognition applications; adapting the local alignment kernel to this context, that is, replacing a Viterbi optimization with a forward summation in the dynamic programming algorithm, would result in a valid DTW kernel for speech applications. The local alignment kernel gives excellent results on the problem of detecting remote homologs of proteins, suggesting that combining domain knowledge (in the form of a relevant measure of similarity turned into a kernel) with kernel methods is a promising research direction.

These kernels for variable-length sequences have been widely applied, often in combination with SVM, to various classification tasks in computational biology. Examples include the prediction of protein structural or functional classes from their primary sequence (Cai, Wang, Sun, & Chen, 2003; Ding & Dubchak, 2001; T. Jaakkola et al., 2000; Karchin, Karplus, & Haussler, 2002; Vert et al., 2004), the prediction of the subcellular localization of proteins (Hua & Sun, 2001b; Matsuda, Vert, Saigo, Ueda, Toh, & Akutsu, 2005; Park & Kanehisa, 2003), the classification of transfer RNA (Kin et al., 2002) and noncod-ing RNA (Karklin, Meraz, & Holbrook, 2005), the prediction of pseudoexons and alternatively spliced exons (Dror, Sorek, & Shamir, 2005; X. H.-F. Zhang, Heller, Hefter, Leslie, & Chasin, 2003b), the separation of mixed plant-pathogen expressed sequence tag (EST) collections (Friedel, Jahn, Sommer, Rudd, Mewes, & Tetko, 2005), the classification of mammalian viral genomes (Rose, Turkett, Oroian, Laegreid, & Keele, 2005), and the prediction of ribosomal proteins (Lin, Kuang, & Kolatkar, 2002).

This short review of kernels developed for the purpose of biological sequence classification, besides highlighting the dynamism of research in kernel methods resulting from practical needs in computational biology, naturally raises the practical question of which kernel to use for a given application. Although no clear answer has emerged yet, some lessons can be learned from early studies. First, there is certainly no kernel universally better than others, and the choice of kernel should depend on the targeted application. Intuitively, a kernel for a classification task is likely to work well if it is based on features relevant to the task; for example, a kernel based on sequence alignments, such as the local alignment kernel, gives excellent results on remote homology detection problems, while a kernel based on the global content of sequences in short subsequences, such as the spectrum kernel, works well for the prediction of subcellular localization. Although some methods for the systematic selection and combination of kernels are starting to emerge (see the next section), an empirical evaluation of different kernels on a given problem seems to be the most common way to chose a kernel. Another important point to notice, besides the classification accuracy obtained with a kernel, is its computational cost. Indeed, practical applications often involve data sets of thousands or tens of thousands of sequences, and the computational cost of a method can become a critical factor in this context, in particular in an online setting. The kernels presented above differ a lot in their computational cost, ranging from fast linear-time kernels like the spectrum kernel to slower kernels like the quadratic-time local alignment kernel. The final choice of kernel for a given application often results from a trade-off between classification performance and computational burden.

other applications and future trends

Besides the important applications mentioned in the previous sections, several other attempts to import ideas of kernel methods in computational biology have emerged recently. In this section, we highlight three promising directions that are likely to develop quickly in the near future: the engineering of new kernels, the development of methods to handle multiple kernels, and the use of kernel methods for graphs in systems biology.

More Kernels

The power of kernel methods to process virtually any sort of data as soon as a valid kernel is defined has recently been exploited for a variety of data, besides high-dimensional data and sequences. For example, Vert (2002) derives a kernel for phylo-genetic profiles, that is, a signature indicating the presence or absence of each gene in all sequenced genomes. Several recent works have investigated kernels for protein 3-D structures, a topic that is likely to expand quickly with the foreseeable availability of predicted or solved structures for whole genomes (Borgwardt, Ong, Schonauer, Vishwanathan, Smola, & Kreigel, 2005; Dobson & Doig, 2003). For smaller molecules, several kernels based on planar or 3-D structures have emerged, with many potential applications in computational chemistry (Kashima et al., 2004; Mahe, Ueda, Akutsu, Perret, & Vert, 2005; Swamidass, Chen, Bruand, Phung, Ralaivola, & Baldi, 2005). This trend to develop more and more kernels, often designed for specific data and applications, is likely to continue in the future because it has proved to be a good approach to obtain efficient algorithms for real-world applications. A nice by-product of these efforts, which is still barely exploited, is the fact that any kernel can be used by any kernel method, paving the way to a multitude of applications such as clustering.

Integration of Heterogeneous Data

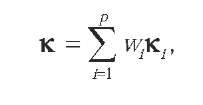

Operations on kernels provide simple and powerful tools to integrate heterogeneous data or multiple kernels; this is particularly relevant in computational biology, where biological objects can typically be described by heterogeneous representations, and the availability of a large number of possible kernels for even a single representation raises the question of choice or combination of kernels. Suppose, for instance, that one wants to perform a functional classification of genes based on their sequences, expression over a series of experiments, evolutionary conservation, and position in an interaction network. A natural approach with kernel methods is to start by defining one or several kernels for each sort of data, that is, string kernels for the gene sequences, vector kernels to process the expression profiles, and so forth. The apparent heterogeneity of data types then vanishes as one simply obtains a family of kernel functions . In order to learn from all data simultaneously, the simplest approach is to define an integrated kernel as the sum of the initial kernels:

The rationale behind this sum is that if each kernel is a simple dot product, then the sum of dot products is equal to the dot product of the concatenated vectors. In other words, taking a sum of kernels amounts to putting all features of each individual kernel together; if different features in different kernels are relevant for a given problem, then one expects the kernel method trained on the integrated kernel to pick those relevant features. This idea was pioneered by the authors of Pavlidis, Weston, Cai, and Noble (2002), in which gene expression profiles and gene phylogenetic profiles are integrated to predict the functional classes of genes, effectively integrating evolutionary and transcriptional information.

An interesting generalization of this approach is to form a convex combination of kernels of the form:

where the w. are nonnegative weights. Lanckriet, De Bie, Cristianini, Jordan and Noble (2004) propose a general framework based on semidefinite programming to optimize the weights and learn a discrimination function for a given classification task simultaneously. Promising empirical results on gene functional classification show that by integrating several kernels, better results can be obtained than with each individual kernel.

Finally, other kernel methods can be used to compare and search for correlations between heterogeneous data. For example, Vert and Kanehisa (2003) propose to use a kernelized version of canonical correlation analysis (CCA) to compare gene expression data on the one hand with the position of genes in the metabolic network on the other hand. Each type of data is first converted into a kernel for genes, the information about gene positions in the metabolic network being encoded with the so-called diffusion kernel (Kondor & Vert, 2004). These two kernels define embeddings of the set of genes into two Euclidean spaces, in which correlated directions are detected by CCA. It is then shown that the directions detected in the feature space of the diffusion kernel can be interpreted as clusters in the metabolic network, resulting in a method to monitor the expression patterns of metabolic pathways.

Kernel Methods in systems biology

Another promising field of research where kernel methods can certainly contribute is systems biology, which roughly speaking focuses on the analysis of biological systems of interacting molecules, in particular, biological networks.

A first avenue of research is the reconstruction of biological networks from high-throughput data. For example, the prediction of interacting proteins to reconstruct the interaction network can be posed as a binary classification problem—given a pair of proteins, do they interact or not?—and can therefore be tackled with SVM as soon as a kernel between pairs of proteins is defined. However, the primary data available are about individual proteins. In order to use SVM in this context, it is therefore natural to try to derive kernels for pairs of proteins from kernels for single proteins. This has been carried out, for example, by Bock and Gough (2001) who characterize each protein by a vector and concatenate two such individual vectors to represent a protein pair. Observing that there is usually no order in a protein pair, Martin, Roe, and Faulon (2005) and Ben-Hur and Noble (2005) propose to define a kernel between pairs (A,B) and (C,D) by the equation:

where k^ denotes a kernel for an individual protein and k is the resulting kernel for pairs of proteins. The rationale behind this definition is that in order to match the pair (A,B) with the pair (C,D), one can either try to match A with C and B with D, or to match A with D and B with C. Reported accuracies on the problem of protein-interaction prediction are very high, confirming the potential of kernel methods in this fast-moving field.

A parallel approach to network inference from genomic data has been investigated by Yamanishi, Vert, and Kanehisa (2004), who show that learning the edges of a network can be carried out by first mapping the vertices, for example, the genes, onto a Euclidean space, and then connecting the pairs of points that are close to each other in this embedding. The problem then becomes that of learning an optimal embedding of the vertices, a problem known as distance metric learning that recently caught the attention of the machine learning community and for which several kernel methods exist (Vert & Yamanishi, 2005).

Finally, several other emerging applications in systems biology, such as inference on networks (Tsuda & Noble, 2004) or the classification of networks (Middendorf et al., 2004), are likely to be subject to increasing attention in the future due to the growing interest and amount of data related to biological networks.

conclusion

This brief survey, although far from being complete, highlights the impressive advances in the applications of kernel methods in computational biology in the last 5 years. More than just importing well-established algorithms to a new application domain, biology has triggered the development of new algorithms and methods, ranging from the engineering of various kernels to the development of new methods for learning from multiple kernels and for feature selection. The widespread diffusion of easy-to-use SVM software and the ongoing integration of various kernels and kernel methods in major computing environments for bioinformatics are likely to foster again the use of kernel methods in computational biology as long as they will provide state-of-the-art methods for practical problems. Many questions remain open regarding, for example, the automatic choice and integration of kernels, the possibility to incorporate prior knowledge in kernel methods, and the extension of kernel methods to more general kernels that are positive definite, suggesting that theoretical developments are also likely to progress quickly in the near future.