Introduction

Information extraction (IE) technology has been defined and developed through the US DARPA Message Understanding Conferences (MUCs). IE refers to identifying instances of particular events and relationships in unstructured natural language text and converting them into a structured representation, such as a relational table in a database. It has proved successful in various domains, such as Latin American terrorism, where it was used to identify patterns related to terrorist activities (MUC-4). More generally, IE can exploit the wealth of natural language documents by extracting the knowledge or information they contain from unstructured plain-text files into a structured or relational form. This form is suitable for sophisticated query processing, for integration with relational databases, and for data mining. Thus, IE is a crucial step in making text files more easily accessible.

Background

The advent of large text databases and search engines has made the literature readily available to domain experts and has significantly accelerated research in bioinformatics. With digital libraries commonly exceeding millions of documents, growing rapidly, and covering a wide range of topics, efficient and automatic extraction of meaningful data and relations has become a challenging issue. To tackle this issue, rigorous studies have been carried out recently to apply IE to biomedical data. Such research efforts began to be called biomedical literature mining or text mining in bioinformatics (de Bruijn & Martin, 2002; Hirschman et al., 2002; Shatkay & Feldman, 2003). In this article, we review recent advances in applying IE techniques to biomedical literature.

Main Thrust

This article attempts to synthesize the work that has been done in the field. A taxonomy helps us understand the accomplishments and challenges in this emerging field. In this article, we use the following set of criteria to classify studies related to biomedical literature mining:

1. What are the target objects that are to be extracted?

2. What techniques are used to extract the target objects from the biomedical literature?

3. How are the techniques or systems evaluated?

4. From what data sources are the target objects extracted?

Target objects

In terms of what is to be extracted by the systems, most studies can be broken into the following two major areas: (1) named entity extraction, such as proteins or genes; and (2) relation extraction, such as relationships between proteins. Most of these studies adopt information extraction techniques using a curated lexicon or natural language processing for identifying relevant tokens such as words or phrases in text (Shatkay & Feldman, 2003).

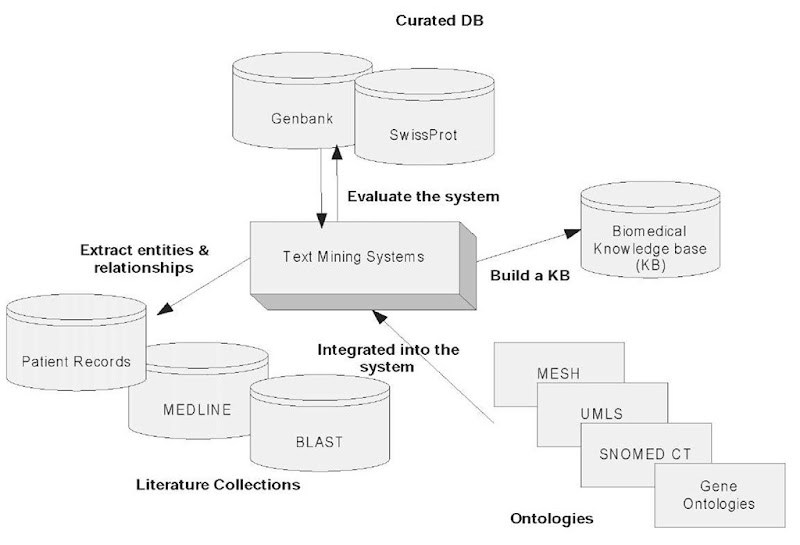

Figure 1. An overview of a biomedical literature mining system

In the area of named entity extraction, Proux et al. (2000) consider single-word names only, with a selected test set of 1,200 sentences from Flybase. Collier et al. (2000) adopt Hidden Markov Models (HMMs) for 10 test classes with small training and test sets. Krauthammer et al. (2000) use the BLAST database with letters encoded as 4-tuples of DNA. Demetriou and Gaizauskas (2002) pipeline the mining processes, including hand-crafted components and machine learning components. For the study, they use large lexicon and morphology components. Narayanaswamy et al. (2003) use a part of speech (POS) tagger for tagging the parsed MEDLINE abstracts. Although Narayanaswamy and his colleagues (2003) implement an automatic protein name detection system, they test it on only 302 words, which is too small a test set to judge the quality of their system. Yamamoto et al. (2003) use morphological analysis techniques for preprocessing protein name tagging and apply a support vector machine (SVM) for extracting protein names. They found that increasing the training data from 390 abstracts to 1,600 abstracts improved F-value performance from 70% to 75%. Lee et al. (2003) combined an SVM and dictionary lookup for named entity recognition. Their approach is based on two phases: the first phase identifies each entity with an SVM classifier, and the second phase is post-processing that corrects the errors made by the SVM with a simple dictionary lookup. Bunescu et al. (2004) studied protein name identification and protein-protein interaction. Among the several approaches used in their study, the two main ones are POS tagging and generalized dictionary-based tagging. Their dictionary-based tagging yields a higher F-value. Table 1 summarizes the works in the area of named entity extraction in biomedical literature.

The second target object type of biomedical literature extraction is relation extraction. Leek (1997) applies HMM techniques to identify gene names and chromosomes through heuristics. Blaschke et al. (1999) extract protein-protein interactions based on co-occurrence of the form “… p1 … I1 … p2 …” within a sentence, where p1 and p2 are proteins and I1 is an interaction term. Protein names and interaction terms (e.g., activate, bind, inhibit) are provided as a dictionary. Proux et al. (2000) extract an interact relation for the gene entity from the Flybase database. Pustejovsky et al. (2002) extract an inhibit relation for the gene entity from MEDLINE. Jenssen et al. (2001) extract gene-gene relations based on co-occurrence of the form “… g1 … g2 …” within a MEDLINE abstract, where g1 and g2 are gene names. Gene names are provided as a dictionary, harvested from HUGO, LocusLink, and other sources. Although their study uses 13,712 named human genes and millions of MEDLINE abstracts, no extensive quantitative results are reported and analyzed. Friedman et al. (2001) extract a pathway relation for various biological entities from a variety of articles. In their work, precision is high (79-96%), but recall is relatively low (21-72%). Bunescu et al. (2004) conducted protein-protein interaction identification with several learning methods, such as pattern matching rule induction (RAPIER), boosted wrapper induction (BWI), and extraction using longest common subsequences (ELCS). ELCS automatically learns rules for extracting protein interactions using a bottom-up approach. They conducted experiments in two ways: one with manually crafted protein names and the other with the protein names extracted by their name identification method. In both experiments, Bunescu et al. compared their results with human-written rules and showed that machine learning methods provide higher precision than human-written rules. Table 2 summarizes the works in the area of relation extraction in biomedical literature.
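The sentence-level pattern “p1 … I1 … p2” used by Blaschke et al. can be illustrated with a minimal sketch. The dictionaries below are hypothetical stand-ins for the curated protein and interaction-term lists, and the function name `find_interactions` is an assumption made for illustration; this is not the authors' implementation.

```python
import re

# Hypothetical dictionaries; the original work supplied curated lists.
PROTEINS = {"cdc2", "cyclin b", "wee1"}
INTERACTION_TERMS = {"activates", "binds", "inhibits"}


def find_interactions(sentence):
    """Return (p1, interaction, p2) triples matching 'p1 ... I1 ... p2'."""
    text = sentence.lower()
    # Locate every dictionary hit together with its character offset.
    hits = []
    for kind, vocab in (("protein", PROTEINS), ("term", INTERACTION_TERMS)):
        for entry in vocab:
            pattern = r"\b" + re.escape(entry) + r"\b"
            for match in re.finditer(pattern, text):
                hits.append((match.start(), kind, entry))
    hits.sort()  # left-to-right order within the sentence

    triples = []
    for i, (_, kind_i, p1) in enumerate(hits):
        if kind_i != "protein":
            continue
        for j in range(i + 1, len(hits)):
            if hits[j][1] != "term":
                continue
            # Any different protein after the interaction term completes the pattern.
            for k in range(j + 1, len(hits)):
                if hits[k][1] == "protein" and hits[k][2] != p1:
                    triples.append((p1, hits[j][2], hits[k][2]))
    return triples


print(find_interactions("Wee1 inhibits Cdc2 in fission yeast."))
# [('wee1', 'inhibits', 'cdc2')]
```

Because the pattern ignores syntax, a sentence such as "Wee1 is not known to bind Cdc2" would still match if "bind" were in the dictionary, which is one reason such systems trade precision for simplicity.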

Table 1. A summary of works in biomedical entity extraction

| Author | Named Entities | Database | No. of Words | Learning Methods | F-value |
|---|---|---|---|---|---|
| Collier et al. (2000) | Proteins and DNA | MEDLINE | 30,000 | HMM | 73 |
| Krauthammer et al. (2000) | Gene and Protein | Review articles | 5,000 | Character sequence mapping | 75 |
| Demetriou and Gaizauskas (2002) | Protein, Species, and 10 more | MEDLINE | 30,000 | PASTA template filling | 83 |
| Narayanaswamy (2003) | Protein | MEDLINE | 302 | Hand-crafted rules and co-occurrence | 75.86 |
| Yamamoto et al. (2003) | Protein | GENIA | 1,600 abstracts | BaseNP recognition | 75 |
| Lee et al. (2003) | Protein, DNA, RNA | GENIA | 10,000 | SVM | 77 |
| Bunescu (2004) | Protein | MEDLINE | 5,206 | RAPIER, BWI, TBL, k-NN, SVMs, MaxEnt | 57.86 |

Techniques used

The most commonly used extraction technique is co-occurrence based. The basic idea of this technique is that entities are extracted based on how frequently biomedical named entities, such as proteins or genes, co-occur within sentences. This technique was introduced by Blaschke et al. (1999). Their goal was to extract information from scientific text about protein interactions among a predetermined set of related proteins. Since Blaschke and his colleagues’ study, numerous other co-occurrence-based systems have been proposed in the literature. All are associated with information extraction of biomedical entities from the unstructured text corpus. The common denominator of the co-occurrence-based systems is that they are based on co-occurrences of names or identifiers of entities, typically along with activation/dependency terms. These systems are differentiated from one another by the techniques they integrate, such as syntactic analysis or POS tagging, as well as ontologies and controlled vocabularies (Hahn et al., 2002; Pustejovsky et al., 2002; Yakushiji et al., 2001). Although these techniques are straightforward and easy to develop, their recall and precision are much lower than those of other machine-learning techniques (Ray & Craven, 2001).
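As a rough illustration of frequency-based co-occurrence, the following sketch counts how often pairs of dictionary entities appear in the same sentence across a set of abstracts. The gene dictionary, the naive sentence splitter, and the function name `cooccurrence_counts` are all assumptions made for this example; real systems use curated name lists and more careful tokenization.

```python
import re
from collections import Counter
from itertools import combinations

# Hypothetical entity dictionary (lowercased single-token names).
GENE_NAMES = {"brca1", "tp53", "rad51"}


def cooccurrence_counts(abstracts):
    """Count sentence-level co-occurrences of dictionary entity pairs."""
    counts = Counter()
    for abstract in abstracts:
        # Naive split on sentence-final punctuation; abbreviations would break it.
        for sentence in re.split(r"(?<=[.!?])\s+", abstract):
            tokens = set(re.findall(r"[a-z0-9]+", sentence.lower()))
            found = sorted(tokens & GENE_NAMES)
            # Each unordered pair is counted once per sentence.
            for pair in combinations(found, 2):
                counts[pair] += 1
    return counts


abstracts = [
    "BRCA1 interacts with RAD51. TP53 is mutated.",
    "RAD51 and BRCA1 colocalize with TP53.",
]
for pair, n in cooccurrence_counts(abstracts).most_common():
    print(pair, n)
```

Pairs with high counts become candidate relations; a threshold or a statistical test would typically be applied afterwards, which this sketch leaves out.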

Table 2. A summary of relation extraction for biomedical data

| Authors | Relation | Entity | DB | Learning Methods | Precision | Recall |
|---|---|---|---|---|---|---|
| Leek (1997) | Location | Gene | OMIM | HMM | 80% | 36% |
| Blaschke (1999) | Interact | Protein | MEDLINE | Co-occurrence | n/a | n/a |
| Proux (2000) | Interact | Gene | Flybase | Co-occurrence | 81% | 44% |
| Pustejovsky (2002) | Inhibit | Gene | MEDLINE | Co-occurrence | 90% | 57% |
| Jenssen (2001) | Location | Gene | MEDLINE | Co-occurrence | n/a | n/a |
| Friedman (2001) | Pathway | Many | Articles | Co-occurrence & thesauri | 96% | 63% |
| Bunescu (2004) | Interact | Protein | MEDLINE | RAPIER, BWI, ELCS | n/a | n/a |

In parallel with co-occurrence-based systems, researchers began to investigate other machine learning and NLP techniques. One of the earliest studies was done by Leek (1997), who used Hidden Markov Models (HMMs) to extract sentences discussing the location of genes on chromosomes. In natural language processing, HMMs are applied to represent sentence structure: the states of an HMM correspond to candidate POS tags, and probabilistic transitions among states represent possible parses of the sentence, according to how well the terms occurring in it match the POS tags. In the context of biomedical literature mining, HMMs are also used to model families of biological sequences, which are treated as a set of different utterances of the same word generated by an HMM (Baldi et al., 1994).
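To make the HMM view of tagging concrete, here is a minimal sketch of Viterbi decoding, the standard algorithm for finding the most probable state (tag) sequence of an HMM. The two-tag model and all probabilities below are invented for illustration and are not taken from any of the cited systems.

```python
# Toy HMM: states are POS tags; all probabilities are made-up assumptions.
STATES = ["NOUN", "VERB"]
START_P = {"NOUN": 0.8, "VERB": 0.2}
TRANS_P = {
    "NOUN": {"NOUN": 0.3, "VERB": 0.7},
    "VERB": {"NOUN": 0.8, "VERB": 0.2},
}
EMIT_P = {
    "NOUN": {"wee1": 0.5, "cdc2": 0.4, "inhibits": 0.1},
    "VERB": {"inhibits": 0.9, "wee1": 0.05, "cdc2": 0.05},
}


def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most probable state sequence for the observations."""
    # best[t][s] = (probability of the best path ending in s at step t, previous state)
    first = observations[0]
    best = [{s: (start_p[s] * emit_p[s].get(first, 0.0), None) for s in states}]
    for obs in observations[1:]:
        column = {}
        for s in states:
            # Choose the predecessor that maximizes the path probability into s.
            column[s] = max(
                (best[-1][p][0] * trans_p[p][s] * emit_p[s].get(obs, 0.0), p)
                for p in states
            )
        best.append(column)
    # Backtrack from the most probable final state.
    path = [max(states, key=lambda s: best[-1][s][0])]
    for column in reversed(best[1:]):
        path.append(column[path[-1]][1])
    return list(reversed(path))


print(viterbi(["wee1", "inhibits", "cdc2"], STATES, START_P, TRANS_P, EMIT_P))
# ['NOUN', 'VERB', 'NOUN']
```

In practice the start, transition, and emission probabilities are estimated from an annotated corpus, and log probabilities are used to avoid numerical underflow on long sentences.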

Ray and Craven (2001) proposed a more sophisticated HMM-based technique to distinguish fact-bearing sentences from uninteresting ones. The target biological entities and relations that they intend to extract are protein subcellular localizations and gene-disorder associations. With a predefined lexicon of locations and proteins and several hundred training sentences derived from the Yeast database, they trained and tested the classifiers over a manually labeled corpus of about 3,000 MEDLINE abstracts. Several studies have also applied natural language tagging and parsing techniques to biomedical literature mining. Friedman et al. (2001) propose methods that parse sentences and use thesauri to extract facts about genes and proteins from biomedical documents. They extract interactions among genes and proteins as part of regulatory pathways.

Evaluation

One of the pivotal issues yet to be explored further in biomedical literature mining is how to evaluate the techniques or systems. The evaluations conducted in the literature focus on extraction accuracy, which in IE is measured by precision and recall. Given a set of N items (terms, sentences, or documents), the system must label each item as positive or negative according to some criterion (positive if, for example, a term belongs to a predefined document category or a term class). Precision is the fraction of items labeled positive that are truly positive, and recall is the fraction of truly positive items that the system labels positive. Although these measures are straightforward and well accepted, recall is often criticized in the field of information retrieval when the total number of true positive items is not clearly defined.
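The accuracy measures can be computed directly from the set of items a system labels positive and a gold-standard answer key. The sketch below is illustrative only: the function name `precision_recall_f` and the example term sets are assumptions, and the F-value follows the harmonic-mean definition given under Key Terms.

```python
def precision_recall_f(predicted, gold):
    """Compute precision, recall, and F-value for two collections of items."""
    predicted, gold = set(predicted), set(gold)
    true_positives = len(predicted & gold)
    # Guard against empty sets so the ratios are always defined.
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    total = precision + recall
    f_value = 2 * precision * recall / total if total else 0.0
    return precision, recall, f_value


# Hypothetical system output and answer key.
system_terms = {"p53", "brca1", "rad51", "the"}
answer_key = {"p53", "brca1", "rad51", "atm", "chk2"}
p, r, f = precision_recall_f(system_terms, answer_key)
print(f"precision={p:.2f} recall={r:.2f} F={f:.3f}")
# precision=0.75 recall=0.60 F=0.667
```

Note that recall requires the full answer key, which is exactly the quantity that is hard to pin down when the total number of true positives in a large collection is unknown.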

In IE, the evaluation forum analogous to TREC in information retrieval (IR) is the Message Understanding Conference (MUC). Participants in MUC tested the ability of their systems to identify entities in text, resolve co-reference, extract and populate attributes of entities, and perform various other extraction tasks on written text. As identified by Shatkay and Feldman (2003), an important challenge in biomedical literature mining is the creation of gold standards and critical evaluation methods for systems developed in this very active field. A framework for evaluating biomedical literature mining systems was proposed by Hirschman et al. (2002). According to them, the following elements are needed for a successful evaluation: (1) challenging problem; (2) task definition; (3) training data; (4) test data; (5) evaluation methodology and implementation; (6) evaluator; (7) participants; and (8) funding. In addition to these elements, existing biomedical literature mining systems face issues of portability and scalability, and these issues need to be taken into consideration in the evaluation framework.

Data sources

In terms of data sources from which target biomedical objects are extracted, most biomedical data mining systems focus on mining MEDLINE abstracts from the National Library of Medicine. The principal reason for relying on MEDLINE is related to complexity. Abstracts are generally easier to mine than full papers, since many papers contain less precise and less well supported sections that machines find difficult to distinguish from more informative sections (Andrade & Bork, 2000). The current version of MEDLINE contains nearly 12 million abstracts stored on approximately 43 GB of disk space. Even prominent examples of methods that target entire papers are still restricted to a small number of journals (Friedman et al., 2000; Krauthammer et al., 2002). The task of unraveling information about function from MEDLINE abstracts can be approached from two different viewpoints. One approach is based on computational techniques for understanding texts written in natural language with lexical, syntactical, and semantic analysis. In addition to indexing terms in documents, natural language processing (NLP) methods extract and index higher-level semantic structures composed of terms and relationships between terms. However, this approach is confronted with the variability, fuzziness, and complexity of human language (Andrade & Bork, 2000). The Genies system (Friedman et al., 2000; Krauthammer et al., 2002), which automatically gathers and processes knowledge about molecular pathways, and the Information Finding from Biological Papers (IFBP) transcription factor database are NLP-based systems.

An alternative approach that may be more relevant in practice treats text with statistical methods. In this approach, the possible relevance of words in a text is deduced by comparing the frequency of words in the text with the frequency of the same words in reference sets of text. Major methods using the statistical approach include AbXtract and the automatic pathway discovery tool of Ng and Wong (1999). Each of these approaches (i.e., grammar-based or pattern-matching) has advantages. Generally, the less syntax that is used, the more domain-specific the system is. This allows the construction of a robust system relatively quickly, but many subtleties may be lost in the interpretation of sentences. Recently, the GENIA corpus has been used for extracting biomedical named entities (Collier et al., 2000; Yamamoto et al., 2003). The recent surge in its use arises because GENIA provides an annotated corpus that can be used in all areas of NLP and IE that apply supervised learning to the biomedical domain. With the explosion of results in molecular biology, there is an increased need for IE to extract knowledge to build databases and to search intelligently for information in online journal collections.
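The frequency comparison behind the statistical approach can be sketched as a simple ratio of a word's relative frequency in the target text to its smoothed relative frequency in a reference set. This is only one plausible scoring scheme chosen for illustration; the function names, the smoothing constant, and the example texts are assumptions, and the cited systems may use different statistics.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def relevance_scores(document, reference_docs, smoothing=1.0):
    """Score each word by its frequency in the document relative to the reference set."""
    doc_counts = Counter(tokenize(document))
    ref_counts = Counter(t for d in reference_docs for t in tokenize(d))
    doc_total = sum(doc_counts.values())
    ref_total = sum(ref_counts.values())
    vocab_size = len(set(doc_counts) | set(ref_counts))
    scores = {}
    for word, count in doc_counts.items():
        doc_freq = count / doc_total
        # Additive smoothing keeps the ratio finite for words unseen in the reference set.
        ref_freq = (ref_counts[word] + smoothing) / (ref_total + smoothing * vocab_size)
        scores[word] = doc_freq / ref_freq
    return scores


scores = relevance_scores(
    "kinase binds kinase substrate",
    ["the cell binds the membrane", "the kinase"],
)
for word, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(word, round(score, 3))
```

Words that score well above 1 are over-represented in the document compared with the reference set and are therefore candidate keywords for it.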

Future Trends

With the taxonomy proposed here, we now identify the research trends of applying IE to mine biomedical literature.

1. A variety of biomedical objects and relations are to be extracted.

2. Rigorous studies are being conducted to apply advanced IE techniques, such as conditional random fields and maximum-entropy-based HMMs, to biomedical data.

3. Collaborative efforts are under way to standardize the evaluation methods and procedures for biomedical literature mining.

4. The coverage of curated databases continues to broaden, and the size of biomedical databases continues to grow.

Conclusion

The sheer size of the biomedical literature has triggered an intensive pursuit of effective information extraction tools. To cope with this demand, biomedical literature mining has emerged as an interdisciplinary field in which information extraction and machine learning are applied to the biomedical text corpus.

In this article, we approached biomedical literature mining from an IE perspective. We attempted to synthesize the research efforts made in this emerging field. In doing so, we showed how current information extraction can be used successfully to extract and organize information from the literature. We surveyed the prominent methods used for information extraction and demonstrated their applications in the context of biomedical literature mining.

The following four aspects were used in classifying the current work in the field: (1) what to extract; (2) what techniques are used; (3) how to evaluate; and (4) what data sources are used. The taxonomy proposed in this article should help identify the recent trends and issues pertinent to biomedical literature mining.

Key Terms

F-Value: Combines recall and precision in a single efficiency measure (it is the harmonic mean of precision and recall): F = 2 * (recall * precision) / (recall + precision).

Hidden Markov Model (HMM): A statistical model where the system being modeled is assumed to be a Markov process with unknown parameters, and the challenge is to determine the hidden parameters from the observable parameters, based on this assumption.

Natural Language Processing (NLP): A subfield of artificial intelligence and linguistics. It studies the problems inherent in the processing and manipulation of natural language.

Part of Speech (POS): A classification of words according to how they are used in a sentence and the types of ideas they convey. Traditionally, the parts of speech are the noun, pronoun, verb, adjective, adverb, preposition, conjunction, and interjection.

Precision: The ratio of the number of correctly filled slots to the total number of slots the system filled.

Recall: Denotes the ratio of the number of slots the system found correctly to the number of slots in the answer key.

Support Vector Machine (SVM): A learning machine that can perform binary classification (pattern recognition) as well as multi-category classification and real valued function approximation (regression estimation) tasks.