INTRODUCTION

Multimedia applications have grown explosively in recent years, driven by steady increases in available processing power and bandwidth. This growth generates large amounts of media data that need to be organized and stored effectively and efficiently. Although these applications generate and use vast amounts of multimedia data, the technologies for organizing and searching them are still in their infancy. These data are usually stored in multimedia archives that rely on search engines to let users retrieve the required information.

Searching a repository of data is a well-known and important task whose effectiveness generally determines success or failure in obtaining the required information. A valuable lesson learned from the explosion of the Web is that the usefulness of vast repositories of digital information is limited by the effectiveness of the access methods; in short, effective search techniques are of great importance. For alphanumeric databases, many portals (Baldwin, 2000) such as Google, Yahoo, MSN, and Excite have become widely accessible via the Web. These search engines provide their users with keyword-based search models for accessing the stored information, but the inaccuracy of their search results is a known drawback.

For multimedia data, describing unstructured information (such as video) using textual terms is not an effective solution because the information cannot be uniquely described by a number of statements. That is mainly due to the fact that human opinions vary from one person to another (Ahanger & Little, 1996), so that two persons may describe a single image with totally different statements. Therefore, the highly unstructured nature of multimedia data renders keyword-based search techniques inadequate. Video streams are considered the most complex form of multimedia data because they contain almost all other forms, such as images and audio, in addition to their inherent temporal dimension.

One promising solution that enables searching multimedia data in general, and video data in particular, is the concept of content-based search and retrieval (Deb, 2004). The basic idea is to access video data by their contents, for example, using one of the visual content features. Realizing the importance of content-based searching, researchers have started investigating the issue and proposing creative solutions. Most of the proposed video indexing and retrieval prototypes have the following two major phases (Flickner et al., 1995):

• The database population phase, which consists of the following three steps:

• Shot boundary detection. The purpose of this step is to partition a video stream into a set of meaningful and manageable segments (Idris & Panchanathan, 1997), which then serve as the basic units for indexing.

• Key frames selection. This step attempts to summarize the information in each shot by selecting representative frames that capture the salient characteristics of that shot.

• Extracting low-level features from key frames. During this step, some of the low-level spatial features (color, texture, etc.) are extracted in order to be used as indices to key frames and hence to shots. Temporal features (e.g., object motion) are used too.

• The retrieval phase. In this stage, a query is presented to the system, which in turn performs similarity matching operations and returns similar data (if found) to the user.

In this article, each of these stages will be reviewed and expounded. Moreover, background, current research directions, and outstanding problems will be discussed.

VIDEO SHOT BOUNDARY DETECTION

The first step in indexing video databases (to facilitate efficient access) is to analyze the stored video streams. Video analysis can be classified into two stages: shot boundary detection and key frames extraction (Rui, Huang & Mehrotra, 1998a). The purpose of the first stage is to partition a video stream into a set of meaningful and manageable segments, whereas the second stage aims to abstract each shot using one or more representative frames.

In general, successive frames (still pictures) in motion pictures are very similar to one another, but this generalization does not hold at shot boundaries. A shot is a series of frames taken using one camera. The last frame of a shot differs in background and content from the frame that follows it, which belongs to the next shot. In short, the two frames on either side of a boundary differ significantly as a result of switching from one camera to another, and this is the basic principle on which most automatic algorithms for detecting scene changes depend.
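The boundary principle described above can be sketched as a thresholded comparison of successive frames. The following is a minimal illustration, not any particular published algorithm: it assumes each frame has already been reduced to a small luminance histogram, and the names `histogram_difference`, `detect_cuts`, and the `threshold` value are hypothetical choices made for this example.

```python
from typing import List, Sequence


def histogram_difference(h1: Sequence[float], h2: Sequence[float]) -> float:
    """Sum of absolute bin-wise differences between two histograms."""
    return sum(abs(a - b) for a, b in zip(h1, h2))


def detect_cuts(histograms: List[Sequence[float]], threshold: float) -> List[int]:
    """Return every index i at which frame i is taken to start a new shot.

    A cut is declared when the difference between frame i-1 and frame i
    exceeds `threshold` (an assumed, hand-tuned value).
    """
    cuts = []
    for i in range(1, len(histograms)):
        if histogram_difference(histograms[i - 1], histograms[i]) > threshold:
            cuts.append(i)
    return cuts


# Toy 4-bin luminance histograms: three dark frames followed by two bright ones.
frames = [
    [90, 10, 0, 0],
    [88, 12, 0, 0],
    [89, 11, 0, 0],
    [0, 5, 15, 80],
    [0, 6, 14, 80],
]
print(detect_cuts(frames, threshold=50))  # prints [3]
```

A single fixed threshold of this kind responds to abrupt cuts only; gradual transitions spread the change over many frames and need a different treatment.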

Due to the huge amount of data contained in video streams, almost all of them are transmitted and stored in compressed format. While there are large numbers of algorithms for compressing digital video, the MPEG format (Mitchell, Pennebaker, Fogg & LeGall, 1997) is the best known and is the current international standard. In MPEG, spatial compression is achieved through the use of a DCT-based (Discrete Cosine Transform-based) algorithm similar to the one used in the JPEG standard. In this algorithm, each frame is divided into a number of blocks (8×8 pixels), and the DCT is then applied to these blocks. The resulting coefficients are quantized and entropy encoded, and it is these two steps that achieve the actual compression of the data. On the other hand, temporal compression is accomplished using a motion compensation technique that depends on the similarity between successive frames in video streams. Basically, this technique codes the first picture of a video stream (I frame) without reference to neighboring frames, while successive pictures (P or B frames) are generally coded as differences from their reference frame(s). Considering the large amount of processing power required to manipulate raw digital video, it is a real advantage to work directly on compressed data and avoid the need to decompress video streams before manipulating them.
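To make the spatial compression step concrete, the sketch below applies a 2-D DCT to one 8×8 block and then quantizes the result. It is a simplified illustration under stated assumptions: it uses the orthonormal DCT-II written out directly (real codecs use fast factorizations), a single uniform quantization step instead of the per-coefficient quantization tables that JPEG and MPEG use, and it omits level shifting and entropy coding. The function names are hypothetical.

```python
import math
from typing import List

N = 8  # block size in pixels


def dct_2d(block: List[List[float]]) -> List[List[float]]:
    """Orthonormal 2-D DCT-II of an N x N block (direct, unoptimized form)."""

    def alpha(k: int) -> float:
        # Normalization factor that makes the transform orthonormal.
        return math.sqrt(1.0 / N) if k == 0 else math.sqrt(2.0 / N)

    coeffs = [[0.0] * N for _ in range(N)]
    for u in range(N):
        for v in range(N):
            total = 0.0
            for x in range(N):
                for y in range(N):
                    total += (
                        block[x][y]
                        * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                        * math.cos((2 * y + 1) * v * math.pi / (2 * N))
                    )
            coeffs[u][v] = alpha(u) * alpha(v) * total
    return coeffs


def quantize(coeffs: List[List[float]], step: float) -> List[List[int]]:
    """Uniform quantization (an assumption; real codecs use quantization tables)."""
    return [[round(c / step) for c in row] for row in coeffs]


# A perfectly flat block: all of its energy ends up in the DC coefficient.
flat_block = [[100.0] * N for _ in range(N)]
coeffs = dct_2d(flat_block)
quantized = quantize(coeffs, step=16)
print(round(coeffs[0][0], 6), quantized[0][0])
```

With this normalization the DC coefficient equals eight times the block mean, which is why a reduced "DC frame" built from DC coefficients alone behaves like a low-resolution copy of the picture.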

A number of techniques have been proposed to perform the shot segmentation task, such as template matching, histogram comparison, block-based comparison, statistical models, knowledge-based approaches, the use of AC coefficients, the use of motion vectors, and the use of supervised learning systems (Farag & Abdel-Wahab, 2001a, 2001c).

KEY FRAMES SELECTION

The second stage in most video analysis systems is the process of KFs (Key Frames) selection (Rui, Huang & Mehrotra, 1998), which aims to abstract the whole shot using one or more frames. Ideally, we need to select the minimal set of KFs that can faithfully represent each shot. KFs are the most important frames in a shot, since they may be used to represent the shot in the browsing system as well as to serve as access points. Moreover, one advantage of representing each shot by a set of frames is that it reduces the computational burden that a content analysis system would otherwise incur by performing similarity matching on a frame-by-frame basis, as will be discussed later. KFs selection is one of the active areas of research in visual information retrieval, and a quick review of some proposed approaches follows.

Clustering algorithms have been proposed that divide a shot into M clusters and then choose the frame closest to each cluster centroid as a KF. An illumination-invariant approach has also been proposed that applies the color constancy feature to KF production using hierarchical clustering. The VCR system (Farag & Abdel-Wahab, 2001b, 2001c) uses two algorithms to select KFs (AFS and ALD). AFS is a dynamically adaptive algorithm that uses two levels of threshold adaptation: the first is based on the input dimension, and the second relies on a shot activity criterion to further improve the performance and reliability of the selection. AFS employs the accumulated frame summation of luminance differences of DC frames. The second algorithm, ALD, uses absolute luminance difference and employs a statistical criterion for the second, shot-by-shot level of adaptation.
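The clustering idea above, choosing the frame closest to a cluster centroid, can be sketched as follows. This is only an illustration of the selection step for one cluster that has already been formed; the clustering itself, the feature vectors, and the function names are assumptions made for the example, and it is not the AFS or ALD algorithm of the VCR system.

```python
from typing import List, Sequence


def centroid(vectors: List[Sequence[float]]) -> List[float]:
    """Component-wise mean of a non-empty list of equal-length feature vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def key_frame_index(cluster: List[Sequence[float]]) -> int:
    """Index of the frame whose features lie closest to the cluster centroid."""
    center = centroid(cluster)
    return min(range(len(cluster)),
               key=lambda i: squared_distance(cluster[i], center))


# Toy 2-D features for the four frames of one cluster (hypothetical values).
shot = [[0.9, 0.1], [0.7, 0.3], [0.6, 0.4], [0.2, 0.8]]
print(key_frame_index(shot))  # prints 2
```

For a shot divided into M clusters, the same function would be called once per cluster, yielding M key frames.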

FEATURE EXTRACTION

To facilitate access to large video databases, the stored data need to be organized; a straightforward way to do so is to use index structures. In the case of video databases, we even need multidimensional index structures to account for the multiple features used in indexing. Moreover, we need tools that automatically or semi-automatically extract these indices for proper annotation of video content. Bearing in mind that each type of video has its own characteristics, we also need to use multiple descriptive criteria in order to capture all of these characteristics.

The task of the feature extraction stage is to derive descriptive indexes from selected key frames in order to represent them, then use the indexes as metadata. Any further similarity matching operations will be performed over these indexes and not over the original key frame data. Ideally, content-based retrieval (CBR) of video should be based on automatically extracted content semantics, but such extraction is very difficult. Thus, most of the current techniques only check for the presence of semantic primitives or calculate low-level visual features. There are two major trends in the research community for extracting indices for proper video indexing and annotation. The first one tries to extract these indices automatically, while the second performs iconic annotation of video by manually (with human help) associating icons with parts of the video stream. One example of the latter trend uses a multi-layered representation to perform the annotation task, where each layer represents a different view of video content. On the other hand, work on the first trend, automatic extraction of content indices, can be divided into three categories:

• Deriving indices for visual elements using image-indexing techniques. For example, using the color and texture as low-level indices.

• Extracting indices for camera motion (panning, zooming, etc.). Generally, optical flow is used in such techniques.

• Deriving indices for region/object motion. One system detects major objects/regions within the frames using optical flow techniques.

Color and texture are commonly used indexing features in most of the above systems. Color feature extraction can work directly on the original decoded video frame or on its DC form. One technique converts the color space of DC video frames (YCbCr in case of MPEG) to the traditional RGB color space, then derives color histograms from the RGB space. Deriving the histogram can be done in many ways. An efficient technique uses some of the most significant bits of each color component to form a codeword. For instance, the most significant two bits of each color component are selected and concatenated to form a 6-bit codeword. This codeword forms a 64-bin color histogram that is used as the color feature vector. This histogram is a good compromise between computational efficiency and representation accuracy.
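The codeword construction described above can be sketched in a few lines. The example assumes 8-bit color components that have already been converted to RGB, and it concatenates the two most significant bits in the order R, G, B; the bit order and the function names are assumptions, since the text does not specify them.

```python
from typing import Iterable, List, Tuple


def codeword(r: int, g: int, b: int) -> int:
    """Concatenate the two most significant bits of each 8-bit component.

    The R, G, B ordering of the bit pairs is an assumption for illustration.
    """
    return ((r >> 6) << 4) | ((g >> 6) << 2) | (b >> 6)


def color_histogram(pixels: Iterable[Tuple[int, int, int]]) -> List[int]:
    """Count how many pixels fall into each of the 64 codeword bins."""
    histogram = [0] * 64
    for r, g, b in pixels:
        histogram[codeword(r, g, b)] += 1
    return histogram


# Three pixels: two near-identical reds and one white.
pixels = [(255, 0, 0), (250, 10, 5), (255, 255, 255)]
hist = color_histogram(pixels)
print(hist[48], hist[63])  # prints 2 1
```

Because only the top two bits of each component are kept, colors that differ slightly (such as the two reds above) fall into the same bin, which is what makes the 64-bin histogram compact and tolerant of small color variations.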

Many techniques have been proposed in the literature to perform texture feature extraction. Some of them use auto-regression and stochastic models, while others use power spectrum and wavelet transform (Bimbo, 1999). The main disadvantage of these techniques is that they are computationally expensive.

THE RETRIEVAL SYSTEM

The basic objective of any automated video indexing system is to provide the user with easy-to-use and effective mechanisms to access the required information. For that reason, the success of a content-based video access system is mainly measured by the effectiveness of its retrieval phase. The general query model adopted by almost all multimedia retrieval systems is QBE (Query By Example) (Yoshitaka & Ichikawa, 1999). In this model, the user submits a query in the form of an image or a video clip (in the case of a video retrieval system) and asks the system to retrieve similar data. QBE is considered to be a promising technique, since it provides the user with an intuitive way of presenting a query. In addition, the form in which a query condition is expressed is close to that of the data to be evaluated.

Upon receiving the submitted query, the retrieval stage analyzes it to extract a set of features, then performs the task of similarity matching. In the latter task, the features extracted from the query are compared to the features stored in the metadata, then matches are sorted and displayed to the user based on how close each hit is to the input query. A central issue here is how the similarity matching operations are performed and based on what criteria (Farag & Abdel-Wahab, 2003d). This central theme has a crucial impact on the effectiveness and applicability of the retrieval system.

Many techniques have been proposed by various researchers in order to improve the quality, efficiency, and robustness of the retrieval system. Some of these techniques are listed below.

• Relevance feedback. In this technique, the user can assign a score to each of the returned clips, and this score is used to direct the following search phase and improve its results.

• Clustering of stored data. Media data are grouped into a number of clusters in order to improve the performance of the similarity matching.

• Use of linear constraints. To come up with better formal definitions of multimedia data similarity, some researchers proposed the use of linear constraints that are based upon the instant-based-point-formalism.

• Improving browsing capabilities. Using KFs and mosaic pictures to allow easier and more effective browsing.

• Use of time alignment constraints. The application of this technique can reduce the task of measuring video similarity to finding the path with minimum cost in a lattice. The latter task can be accomplished using dynamic programming techniques.

• Optimizing similarity measure. Optimized formulas are defined to measure video similarity, instead of using the exhaustive similarity technique in which every frame in the query is compared with all the frames in the database (a computationally prohibitive technique).

• Human-based similarity criteria. This technique tries to implement some factors that humans most probably use to measure the similarity of video data.
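The time alignment item in the list above reduces video similarity to a minimum-cost path through a lattice, solved by dynamic programming. The sketch below uses the classic dynamic time warping recurrence as a stand-in, since the text does not specify the exact lattice or cost function; the allowed moves, the `frame_cost` callback, and the scalar toy features are all assumptions made for illustration.

```python
from typing import Callable, List, Sequence, TypeVar

F = TypeVar("F")  # per-frame feature type


def alignment_cost(query: Sequence[F], clip: Sequence[F],
                   frame_cost: Callable[[F, F], float]) -> float:
    """Minimum total cost of aligning two frame sequences in temporal order.

    cost[i][j] is the cheapest alignment of the first i query frames with
    the first j clip frames. Each cell extends a diagonal, vertical, or
    horizontal predecessor, so the time order of both sequences is kept.
    """
    n, m = len(query), len(clip)
    inf = float("inf")
    cost: List[List[float]] = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = frame_cost(query[i - 1], clip[j - 1])
            cost[i][j] = step + min(cost[i - 1][j - 1],  # advance both
                                    cost[i - 1][j],      # advance query only
                                    cost[i][j - 1])      # advance clip only
    return cost[n][m]


def absolute_difference(a: float, b: float) -> float:
    """Toy frame cost on scalar features (hypothetical)."""
    return abs(a - b)


print(alignment_cost([1, 2, 3], [1, 2, 2, 3], absolute_difference))  # prints 0.0
print(alignment_cost([1, 2, 3], [1, 3], absolute_difference))        # prints 1.0
```

The table has one cell per pair of frames, so the work grows with the product of the two sequence lengths. In practice the frame cost would be a feature distance, such as one minus a histogram similarity.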

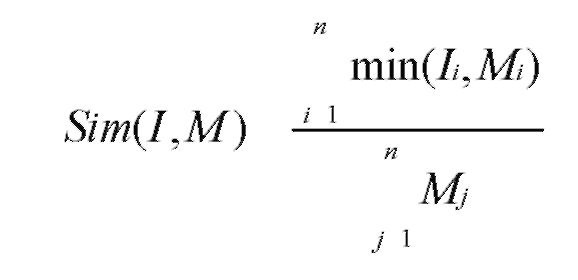

Most of the above techniques end up with calculating the similarity between two frames. Below is one example of how similarity between the colors of two frames (/ and M) represented by their color histograms can be calculated using the normalized histogram intersection. If Sim approaches zero, the frames are dissimilar, and if it is near one, the frames are similar in color.
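A minimal Python sketch of a normalized histogram intersection follows. The normalization used here, dividing the sum of bin-wise minima by the total count of M's histogram, is one common form and is an assumption rather than a transcription of the article's formula. The function name is hypothetical.

```python
from typing import Sequence


def histogram_intersection(h_i: Sequence[float], h_m: Sequence[float]) -> float:
    """Normalized histogram intersection between frames I and M.

    Returns the sum of bin-wise minima divided by the total count of h_m
    (an assumed normalization). When both histograms have the same total,
    the result lies in [0, 1].
    """
    if len(h_i) != len(h_m):
        raise ValueError("histograms must have the same number of bins")
    total = sum(h_m)
    if total == 0:
        return 0.0  # an empty histogram is treated as matching nothing
    return sum(min(a, b) for a, b in zip(h_i, h_m)) / total


print(histogram_intersection([4, 0, 0, 0], [4, 0, 0, 0]))  # prints 1.0
print(histogram_intersection([4, 0, 0, 0], [0, 0, 0, 4]))  # prints 0.0
print(histogram_intersection([2, 2, 0, 0], [1, 1, 1, 1]))  # prints 0.5
```

Frames of the same size produce histograms with the same total, so identical color distributions give a value of one and distributions with no bins in common give zero, matching the interpretation of Sim given in the text.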

FUTURE TRENDS

Some of the important issues under investigation are surveyed briefly in this section. Efficient algorithms are needed to parse video streams containing gradual transition effects. Detecting semantic objects inside video frames is also an open challenge that needs to be addressed. Moreover, multidimensional indexing structures are an active area of research that can be explored further. Determining the similarity of video data is another area that requires more exploration in order to arrive at more effective approaches.

CONCLUSION

In this article, we briefly review the need for and significance of video content-based retrieval systems and explain their four basic building stages. Current research topics and future work directions are covered too. The first stage, shot boundary detection, divides video streams into their constituent shots. Each shot is then represented by one or more key frames in a process known as key frames selection. The feature extraction stage, the third one, derives descriptive indexes such as color, texture, shapes, and so forth from selected key frames, and stores these feature vectors as metadata. Finally, the retrieval system accepts a user query, compares indexes derived from the submitted query with those stored in the metadata, and then returns search results sorted according to their degree of similarity to the query. In closing, we emphasize the importance of content-based video retrieval systems and note that a considerable number of open issues still require further research to achieve more efficient and robust indexing and retrieval systems.

KEY TERMS

Content-Based Access: A technique that enables searching multimedia databases based on the content of the medium itself and not based on keyword descriptions.

Key Frames Selection: The selection of a set of representative frames to abstract video shots. Key frames (KFs) are the most important frames in a shot, so that they can represent the shot in both browsing and similarity matching operations, as well as be used as access points.

Query by Example: A technique to query multimedia databases where the user submits a sample query and asks the system for similar items.

Retrieval Stage: The last stage in a content-based retrieval system where the extracted features from the query are compared to those stored in the metadata and matches are returned to the user.

Shot Boundary Detection: A process with the objective of partitioning a video stream into a set of meaningful and manageable segments.

Video Indexing: The selection of indices derived from the content of the video to help organize video data and metadata that represents the original video stream.

Video Shot: A sequence of contiguous video frames taken using the same camera.