The Intelligent Display – DPVL and Beyond

In each of the applications examined so far, we have noted that the display or its interface must provide an additional measure of “intelligence” – that is, information-processing capability – beyond that of the traditional display device. Supporting higher resolutions requires compression and decompression if unreasonably high interface capacity requirements are to be avoided. Adding more sophisticated displays to portable, wireless devices brings similar needs, so that these displays can be supported within the limited capacity of a wireless channel. Head-mounted displays typically require at least enough “intelligence” in the interface to convert the image and transmission format into something suited to this display type.

This points to a trend that has already started to influence the shape of display interface standards. With a much wider range of display technologies, applications, and formats, the traditional interface approach – in which the interface specifications were very strongly tied to a single display type and image format – no longer works well. The original television broadcast standards, arguably among the first truly widespread display interfaces, were clearly designed around the assumption of a CRT-based receiver and a wireless transmission path. The analog PC display interface standards began in a similar manner, and then had to be adapted to the changing needs of that industry, in supporting a wider range of display types and formats than originally anticipated.

The true advantage of the digital class of interfaces is not in its better compatibility with any particular display type, but rather that it has enabled the use of digital storage and processing techniques. The use of these has the effect of decoupling the display from the interface, or, from a different perspective, permitting the interface to carry image information with little or no attention to the type, format, or size of the display on which the image will ultimately appear.

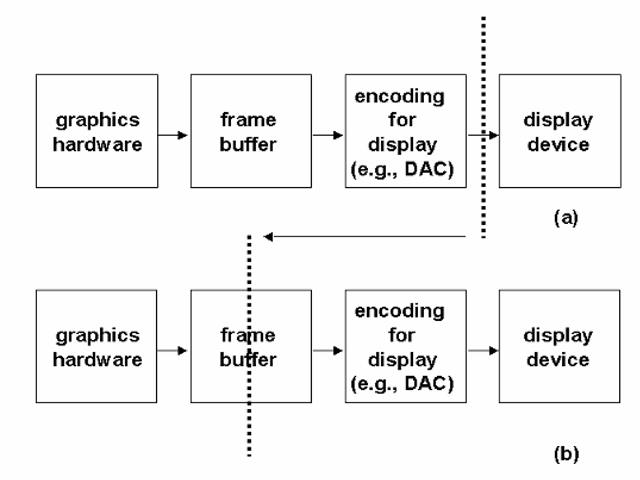

The Digital Packet Video Link (DPVL) proposed standard represents the first significant step away from the traditional display interface. In “packetizing” the image information, DPVL brings networking concepts to digital interfaces that were originally used simply to duplicate the analog RGB model. This has the effect of moving the physical interface between the “host system” and the “display” farther back along the traditional chain (Figure 13-2). Or, again looking at the situation from the other side, the traditional “display interface” is now buried within the display product, along with a significant amount of digital processing and storage hardware.
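To make the idea of a packetized, “conditional update” interface more concrete, the following is a minimal sketch, assuming a frame is simply a grid of pixel values divided into fixed 8 x 8 tiles. The `UpdatePacket` layout and the functions `changed_tile_packets` and `apply_packets` are hypothetical names chosen for illustration; they do not reproduce the packet format defined by the DPVL specification. The point is only that the host sends just the regions that changed, and the display, which now holds its own frame buffer, fills in the rest from local storage.

```python
from dataclasses import dataclass
from typing import List

Frame = List[List[int]]  # row-major grid of pixel values


@dataclass
class UpdatePacket:
    # Hypothetical packet layout for illustration only; NOT the DPVL format.
    x: int          # left edge of the updated tile, in pixels
    y: int          # top edge of the updated tile, in pixels
    width: int
    height: int
    pixels: Frame   # the tile's new pixel values


def changed_tile_packets(prev: Frame, curr: Frame, tile: int = 8) -> List[UpdatePacket]:
    """Host side: compare two frames tile by tile and emit a packet only
    for tiles whose contents have changed (a 'conditional update')."""
    rows, cols = len(curr), len(curr[0])
    packets = []
    for ty in range(0, rows, tile):
        for tx in range(0, cols, tile):
            h = min(tile, rows - ty)
            w = min(tile, cols - tx)
            new = [row[tx:tx + w] for row in curr[ty:ty + h]]
            old = [row[tx:tx + w] for row in prev[ty:ty + h]]
            if new != old:
                packets.append(UpdatePacket(tx, ty, w, h, new))
    return packets


def apply_packets(frame_buffer: Frame, packets: List[UpdatePacket]) -> Frame:
    """Display side: write each packet's pixels into the display's own
    frame buffer; untouched regions keep their stored contents."""
    for p in packets:
        for dy, row in enumerate(p.pixels):
            frame_buffer[p.y + dy][p.x:p.x + p.width] = row
    return frame_buffer


if __name__ == "__main__":
    prev = [[0] * 32 for _ in range(24)]     # 32 x 24 frame = 12 tiles of 8 x 8
    curr = [row[:] for row in prev]
    curr[10][20] = 255                       # a single pixel changes
    packets = changed_tile_packets(prev, curr)
    print(len(packets), "of 12 tiles sent")  # prints: 1 of 12 tiles sent
```

A real system would also need compression of the tile data and some means of recovering from lost packets; the sketch deliberately leaves both out.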

In the future, then, it is likely that the “intelligent display” model will dominate many markets and applications. Rather than being carried over dedicated video channels between products, image information will be treated like any other data, and transmitted along general-purpose, very-high-capacity digital data interfaces. Video information is time-critical in nature – the data for each field or frame must be received in time for that frame to be assembled and displayed as part of a steady stream – but this is not unique to video. Audio samples share this characteristic, and data channels intended to carry either or both must therefore support isochronous data transmission, in which a guaranteed share of the channel's capacity is delivered at regular, predictable intervals. Such support is becoming more and more common, particularly in digital standards for home networking and entertainment.
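The time-critical nature of video can be expressed as a simple budget: each frame must cross the channel within one frame period. The following sketch checks that budget for uncompressed frames. The function names are hypothetical, and the 20% of capacity reserved for packet headers, audio, and other traffic is an assumed, illustrative figure rather than a value taken from any standard.

```python
def raw_bitrate(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    """Bits per second needed to resend every frame uncompressed."""
    return width * height * bits_per_pixel * fps


def frame_meets_deadline(frame_bits: float, link_bps: float, fps: float,
                         reserved_fraction: float = 0.2) -> bool:
    """True if one frame can be delivered within its own frame period.

    reserved_fraction is an assumed share of the link set aside for packet
    headers, audio, and other traffic; the 20% default is illustrative only.
    """
    frame_period_s = 1.0 / fps
    usable_bps = link_bps * (1.0 - reserved_fraction)
    return frame_bits / usable_bps <= frame_period_s


frame_bits = 1920 * 1080 * 24                      # one 24-bit 1920 x 1080 frame
print(raw_bitrate(1920, 1080, 24, 60))             # 2985984000 (bits/s)
print(frame_meets_deadline(frame_bits, 1e9, 60))   # False: 1 Gb/s is too slow
print(frame_meets_deadline(frame_bits, 10e9, 60))  # True
```

A genuinely isochronous channel goes further than this check, by reserving that capacity in advance so that other traffic cannot delay the frame.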

Figure 13-2 The effect of systems such as DPVL, or others which move the display interface to more of a “network” model, is to move the location of the display interface more “upstream” in our overall system model. In (a), the dotted line shows the traditional location, within the system, of the physical display connection – between an output specifically intended for a particular display type or device, such as an analog video connection, and the display itself. Making the display into a networked peripheral, and permitting such features as conditional update, etc., requires more “intelligence” and image data storage within the physical display product – and hence moves the display interface logically closer to the source of this data (b).

We must also consider just how much intelligence is likely to be transferred to the “display” product in the future, vs. being retained in a host product such as a PC. So far, we have still considered the interface as carrying information corresponding to complete images, albeit compressed, packetized, or otherwise in need of “intelligent” processing prior to display. But should we also expect the display to take over the tasks of image composition and rendering? You may recall that, in the very early days of computer graphics, this was often the case – the graphics hardware was packaged within the CRT display, and connected to the computer itself via a proprietary interface. However, this was really more a matter of packaging convenience for some manufacturers; there has to date never been a widely accepted standard “display” interface, between physically separate products, over which graphics “primitives” or rendering commands were passed in a standard manner. Obviously, it would be possible to develop such a standard, but several factors argue against it. First, graphics hardware has historically improved in basic performance much more rapidly than displays have. As a result, display hardware has tended to have a much longer useful life prior to obsolescence than graphics hardware, and it has made little sense to package the two together. Second, there has generally been a need to merge images from other sources, such as television video, with the locally generated computer graphics prior to display. Finally, until recently, the high-speed, bidirectional digital channel needed to separate the graphics hardware from the rest of the computer simply was not available in a practical form, meaning an interface that could be implemented at a reasonable cost and would operate over an acceptably long distance.

And, at this point, there is little motivation for placing the rendering hardware within the display product in most systems. Instead, we can reasonably expect a range of image sources – as well as sources of information in other forms, such as audio, plain text, and numeric information – all to transmit to an output device that will process, format, and ultimately display the information to the user. In this model, there is no distinct “display interface” between products; instead, images, both moving and still, are treated as just another type of data being conveyed over a general-purpose channel.

Into The Third Dimension

One particularly “futuristic” display has yet to be discussed here. A staple of the science-fiction story, the truly three-dimensional display has long been a part of many people’s expectations for future imaging systems. To date, however, displays which have attempted to provide depth information have seen only very limited success, and in relatively few specialized applications. Most of these have been stereoscopic displays, rather than providing a truly three-dimensional image within a given volume. And for the most part, these have been accommodated within existing display interface standards, often by simply providing separate image generation hardware and interfaces for each eye’s display. At best, accommodating stereoscopic display within a single interface is done by providing some form of flag or signal indicating which image – left or right – is being transmitted at the moment, and then using an interleaved-field form of transmission (Figure 13-3).
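The interleaved-field scheme for carrying stereo over a single interface can be sketched in a few lines. This is a minimal illustration under stated assumptions: the function names are hypothetical, and the polarity convention (flag true for the left-eye image) is an arbitrary choice, since actual systems differ. It shows why the sync signal runs at half the transmitted frame rate, and why each eye sees only half of the transmitted frames.

```python
def stereo_field_sequence(left_frames, right_frames):
    """Interleave left- and right-eye images into one frame-sequential
    stream, pairing each frame with a stereo-sync flag.
    Assumed convention: True = left-eye image, False = right-eye image."""
    stream = []
    for left, right in zip(left_frames, right_frames):
        stream.append((left, True))    # sync high: left-eye image
        stream.append((right, False))  # sync low: right-eye image
    return stream


def sync_frequency_hz(frame_rate_hz):
    """The flag completes one high/low cycle every two frames, so the sync
    signal cycles at half the transmitted frame rate."""
    return frame_rate_hz / 2


def shutter_state(sync_flag):
    """Shutter glasses open the eye matching the current image and block the other."""
    return {"left_open": sync_flag, "right_open": not sync_flag}


stream = stereo_field_sequence(["L0", "L1"], ["R0", "R1"])
print([flag for _, flag in stream])  # [True, False, True, False]
print(sync_frequency_hz(120))        # 60.0
```

With a 120 Hz transmitted frame rate, each eye therefore receives a 60 Hz image stream; holding the per-eye rate constant is what doubles the interface capacity needed.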

Several different approaches have been tried, with varying degrees of success, to create a true volumetric three-dimensional display. Most of these fall into two broad categories: the projection of light beams or complete images onto a moving screen (which sweeps through the intended display volume, synthesizing an apparent 3-D view from these successive 2-D images), or, more recently, the synthesis of holographic images electronically. Examples of the former type have been shown by several groups, including prototype displays demonstrated by Sony and Texas Instruments. The latter class, the fully electronic holographic display – again a favorite of science-fiction authors – has also been demonstrated in very crude form, but shows some promise. But a truly useful holographic display, or for that matter any three-dimensional display suitable for widespread use, faces some very significant obstacles.

The mechanical approaches to obtaining a three-dimensional image are burdened with the same problems that have faced all such designs: the limited speeds available from mechanical assemblies, especially those in which significant mass must be moved, and the associated problems of position accuracy and repeatability (not to mention the obvious reliability concerns). These products have generally taken the form of a projection surface, often irregularly shaped, being swept through or rotated within the volume in which the “3-D” image is to appear. In the examples mentioned above, for instance, Texas Instruments’ display creates images by projecting beams of light (possibly lasers) onto a translucent surface rotating within a dome; Sony’s prototype 3-D display uses a similar approach, but with a more-or-less conventional CRT display as the image source. Others have employed similar approaches. The images produced to date by any such display do appear “three-dimensional,” but all suffer from poor resolution (as compared with current 2-D displays), limited color, and/or instability.

Figure 13-3 Stereoscopic display. One common means of displaying stereoscopic imagery is to use an ordinary two-dimensional display, but to transmit left- and right-eye images as alternating frames. Special glasses are used that shutter the “wrong” eye, based on the state of a stereo synchronization signal that cycles at half the frame rate.

Holographic imaging through purely electronic means would seem to be the ideal solution. Most people are already somewhat familiar with holograms; they have appeared in a wide range of popular media, although it must be admitted that their use has been restricted primarily to novelties and a very few security applications (as in the holograms on many credit cards). They produce a very convincing three-dimensional effect, from what appears to be a very simple source. Surely adapting this technology to electronic displays will result in a practical 3-D imaging system!

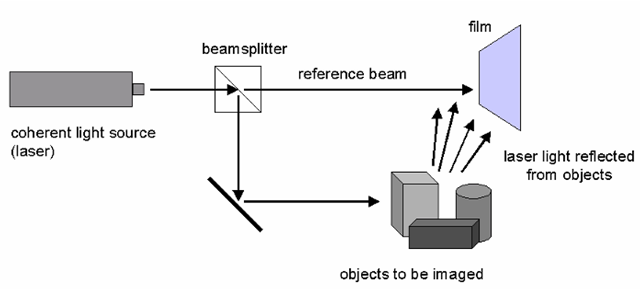

However, a quick overview of the principles behind holography will show that this is far easier said than done. A hologram is basically a record of an optical interference pattern. In the classic method (shown in Figure 13-4), a coherent, monochromatic light source (such as a laser) is used both to illuminate the object to be imaged and, via a beam splitter, to provide a phase reference. If the light from the object is directed to a piece of film along with the reference beam, the film records the interference pattern produced where the two beams combine. Once the film is developed, illuminating the recorded pattern again with the same light source recreates the rays reflected from the original object, providing a three-dimensional image of that object.
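What the film actually records is the intensity of the summed light waves, |R + O|², where R is the reference wave and O the object wave. The following sketch assumes the simplest possible case: both beams are ideal, coherent, monochromatic plane waves, with the object wave (standing in for light from a single distant point) arriving along the film normal and the reference wave tilted at a chosen angle. Under those assumptions the record is a set of straight fringes spaced λ / sin θ apart. A real object produces a superposition of many such patterns. The function names and the 30° angle are illustrative choices, not values from the text.

```python
import cmath
import math


def interference_intensity(x_m, wavelength_m, ref_angle_deg,
                           ref_amplitude=1.0, obj_amplitude=1.0):
    """Intensity recorded at position x (metres) on the film, for a tilted
    plane reference wave meeting a plane object wave at normal incidence."""
    k = 2 * math.pi / wavelength_m                       # wavenumber
    ref_phase = k * x_m * math.sin(math.radians(ref_angle_deg))
    ref = ref_amplitude * cmath.exp(1j * ref_phase)
    obj = obj_amplitude                                  # constant phase across the film
    return abs(ref + obj) ** 2                           # film responds to |R + O|^2


def fringe_spacing_m(wavelength_m, ref_angle_deg):
    """Period of the recorded fringes: lambda / sin(theta)."""
    return wavelength_m / math.sin(math.radians(ref_angle_deg))


wavelength = 633e-9   # helium-neon laser line, in metres
angle = 30.0          # assumed angle between reference and object beams
spacing = fringe_spacing_m(wavelength, angle)
print(f"fringe spacing: {spacing * 1e6:.3f} um")  # fringe spacing: 1.266 um
```

Bright fringes (intensity 4 with equal unit amplitudes) alternate with dark ones (intensity 0) every half period, and the fringes grow finer as the angle between the beams increases, which is why recording a hologram demands such high spatial resolution.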

Holograms have many interesting properties – which we will not be able to examine here in depth, no pun intended – but the key point, from the perspective of creating an electronic display, is the complexity of the stored image.

Figure 13-4 Holography. In the classic hologram process, a coherent light source, such as a laser, creates two beams of light – one used as a reference, and the other reflected from the objects to be imaged. Film captures the interference pattern created when the two beams meet.

Fine details in the original translate to high-frequency components in the resulting interference pattern, across the entire area of the hologram. The ability of the film to adequately capture these components is a limiting factor in determining the spatial resolution of the resulting holographic image. It is widely known that film holograms of this type may be divided into several pieces, with each piece retaining the ability to show the complete original image, as if by magic. What is less commonly understood is how this occurs. A somewhat simplistic explanation is that a hologram stores image information across the entire area of the film, but in a transformed manner that is, in a sense, the opposite of a normal photograph. Coarse detail, such as the general shape of the object, is stored redundantly across the entire hologram, and is retained if the hologram is later divided. But fine details are stored as information spread across the whole of the original, and are progressively degraded as the area used to recover the image is reduced. Reproducing extremely fine detail requires the storage of a highly accurate image of the interference pattern, over a large area. In terms of creating or transmitting a hologram electronically, this implies a very large amount of information to be handled for each 3-D image. (It also implies an imaging device with resolution capabilities similar to those of photographic film – at least in the upper hundreds, if not low thousands, of pixels per centimeter – but that is yet another problem.) An image area just ten centimeters square might easily require 100 million pixels or more to be a marginally practical holographic imager; at our usual eight bits per sample of luminance, a single frame then amounts to roughly 800 million bits (100 megabytes), a truly staggering quantity of information for one image.
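The arithmetic behind that estimate is easy to reproduce. This sketch uses the figures given above (a 10 cm square imager at 1000 pixels per centimeter, eight bits per sample); the frame rate of 30 frames per second used for the sustained-rate figure is an assumption added here for illustration, and the function names are hypothetical.

```python
def hologram_frame_bits(side_cm: int, pixels_per_cm: int, bits_per_sample: int = 8) -> int:
    """Bits in one frame of a square electronic hologram."""
    pixels_per_side = side_cm * pixels_per_cm
    return pixels_per_side * pixels_per_side * bits_per_sample


def sustained_rate_gbps(frame_bits: int, fps: int) -> float:
    """Interface rate, in Gbit/s, needed to deliver fps such frames each second."""
    return frame_bits * fps / 1e9


bits = hologram_frame_bits(10, 1000)              # 10 cm square at 1000 pixels/cm
print(bits // 8 // 1_000_000, "MB per frame")     # 100 MB per frame
print(sustained_rate_gbps(bits, 30), "Gbit/s at 30 frames/s (assumed rate)")  # 24.0 ...
```

Even before any allowance for color, a moving holographic image at this modest size would need an uncompressed interface running at tens of gigabits per second.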

This points out the major difficulty, purely from an interface perspective, with any three-dimensional display system. Even stereoscopic display, which simply involves separate two-dimensional images (one per eye), doubles the capacity requirements of the interface if the other aspects of the image (pixel format, color depth, frame rate, etc.) are to be maintained vs. a more conventional 2-D display. A true volumetric display potentially increases the information content by several orders of magnitude; consider, for instance, a 1024 x 1024 x 1024 “voxel” (volume pixel) image space, which contains 1024 times as many samples as a more conventional 1024 x 1024 2-D image. To be sure, the data-handling requirements for such displays can be reduced considerably by applying a little intelligence to the problem; for instance, there is no need to transmit information corresponding to samples that are “hidden” by virtue of being located inside solid, opaque objects! It is also certainly possible to extend the compression techniques employed with two-dimensional imagery into the third dimension. But the storage and processing capabilities required to achieve these steps grow geometrically, not merely linearly, over what was needed for a comparable two-dimensional image system. The numbers become very large just for a static 3-D image, and would grow still further if a series of “3-D” frames (“boxes”?) were to be transmitted for motion imagery. We must therefore conclude that three-dimensional display systems, while certainly attractive, will remain impractical for all but some very high-end, specialized applications for the foreseeable future.
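The sample counts in that comparison, and the saving available from skipping hidden samples, can be checked with a short sketch. The solid, opaque cube filling the entire voxel space is an extreme simplification assumed purely for illustration: only its outer shell of voxels could ever be seen, so the (n − 2)³ interior voxels need not be sent. The function names are hypothetical.

```python
from math import prod


def sample_count(*dims: int) -> int:
    """Number of samples in an image of the given dimensions."""
    return prod(dims)


def surface_voxels(n: int) -> int:
    """Voxels on the outer shell of a solid, opaque n x n x n cube; the
    (n - 2)^3 interior voxels can never be seen and need not be sent."""
    if n <= 2:
        return n ** 3          # every voxel lies on the surface
    return n ** 3 - (n - 2) ** 3


flat = sample_count(1024, 1024)           # conventional 2-D image
stereo = 2 * flat                         # one 2-D image per eye
volume = sample_count(1024, 1024, 1024)   # voxel image space
print(volume // flat)                     # 1024
print(surface_voxels(1024))               # 6279176
```

In this idealized case, culling hidden voxels cuts the data to roughly six times that of a single 2-D image; real scenes, with many objects, partial transparency, and motion, will not compress nearly so neatly.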

Conclusions

In this final topic, we have examined some of the possible future directions for display products and the expected impact on display interface requirements for each. To summarize these:

• While there may be some need for higher resolution in many applications, we can also expect the current display formats to remain popular for quite some time. Higher-resolution displays, when and where they do come to market, will require display interfaces significantly different from the simple, repeated transmission of raw image data that has characterized past approaches.

• Portable products can reasonably be expected to become much more sophisticated, and so will require more sophisticated displays; portable displays will become larger (up to the limits imposed by the need for portability itself), providing higher resolution and, more and more often, the ability to support full-color images and motion video. However, the nature of the portable product demands a wireless link between that product and the source of the displayed information.

• These two factors argue for a continued transfer of “intelligence” – image processing and storage capability – to the device or product containing the display. This will be used to support more sophisticated compression techniques, increased reliance on local image processing, etc., primarily in order to make more efficient use of the capacity of the interface between the product and its host system. The “host system” may not even exist as a single entity, but rather be, in reality, a distributed set of image sources, all accessed through a general-purpose data network. In such a situation, no real “display interface” exists except within the display product itself, between the image-processing “front end” and the actual display. Coming standards such as the Digital Packet Video Link (DPVL) specification represent the first step along that path.

• Some new display types will succeed, and will place new demands on the physical and electrical interfaces to be used on many products – with a clear example being the development of high-resolution, “head-mounted” or “eyeglass” displays, for portable products and other applications. Others, such as a true three-dimensional display system, are not likely to impact the mainstream for the foreseeable future, due to the nature of the obstacles on their particular road to commercial practicality.

The one overall conclusion that one can always draw from looking over the history of electronic imaging, and studying the trends as they appear at present, is that many of our conclusions and predictions made today will turn out to be completely wrong. As recently as 40 years ago, practically all electronic displays were CRT-based; the personal computer, its “laptop” offspring, the cellular telephone, or even the digital watch and pocket calculator – each of which was driven by, and in turn drove, display technology development – were not even imagined. Even color television was still in its infancy. The history of the display industry has shown it to be remarkably innovative and surprising. While we would like to think we have a good understanding of where it will be in even five or ten years, new developments and the new products and markets that drive them have a tendency to ignore our predictions and expectations, and go where they please. At the very least, it seems safe to say that the delivery of information via electronic displays will be with us for quite some time to come, and with it the need for ever better and more reliable means of conveying this information – the display interface will remain, truly, the interface between the “information superhighway” and its users.