Abstract

Size-based scheduling is a promising solution to improve the response time of small flows (mice) that have to share bandwidth with large flows (elephants). To do this, one important task is to track the size of the ongoing flows at the router. However, most of the proposed size-based schedulers either employ the trivial way of tracking the size information of all flows, or require changes at end-hosts. Hence, either they are not scalable or they increase complexity. This paper proposes a new way of performing size-based scheduling in a practical and scalable fashion, by identifying and ‘de-prioritizing’ elephants only at times of high load. We exploit TCP’s behaviour by using a mechanism that detects a window of packets — called spikes — when the buffer length exceeds a certain threshold. This spike-detection is used to identify elephant flows and thereafter de-prioritize them. Two-level processor-sharing (TLPS) scheduling is employed to schedule flows in two queues, one with the high-priority flows, and the other with the de-prioritized flows. We perform studies, using both analyses and simulations, to highlight the properties of the spike-detection mechanism. We show that, the proposed mechanism not only improves the response time of mice flows in a scalable way, but also gives better response times to other flows by treating them preferentially as long as they do not overload the high-priority queue.

Keywords: Queueing, Scheduling, Flows, Networks, Performance modelling.

Introduction

The flow-size statistics in the Internet reveal strong heavy-tail behaviour — a small percentage of flows (large in size) contribute to a large percentage of the Internet’s traffic volume. It is also referred to as the mice-elephant phenomenon, where 80% of the flows that contribute to 20% of the traffic are called mice flows, and the remaining 20%, the elephant flows. Examples of mice (small) flows include tweets, chats, web queries, HTTP requests, etc., for which users expect very short response times; while elephant (large) flows are usually the downloads that run in the background (say, a kernel image or a movie), the response times for which are expected to be higher than that for the mice flows by orders of magnitude.

The current Internet architecture has a FCFS server and a drop-tail buffer at most of its nodes (routers and switches). This along with the fact that most of the flows in the Internet are carried by TCP [7], hurt the response times of mice flows adversely. Specifically, it can be argued that the current TCP/IP architecture is biased against small TCP flows, for the following reasons:

— As mice flows do not have much data, they almost always complete in the slow-start (SS) phase, never reaching the congestion-avoidance phase; and thus typically having a small throughput.

— A packet loss to a small flow most often results in a time-out due to the small congestion window (cwnd) size; and time-outs increase the completion time of small flow many folds.

— The increase in round-trip-time (RTT) due to large queueing delays hurts the small flows more than the large flows. For the large flows, the large cwnd makes up for the increase in RTT; whereas, this is not the case for small flows and is quite perceivable.

The biases against mice flows become more relevant today, with recent studies showing an increase in the mice-elephant phenomenon, with a stronger shift towards a 90-10 rule [3]. Solutions to this problem can be grouped into a class of priority scheduling mechanisms based on flow size. These size-based schedulers give priority to ‘potential’ small flows over the large flows. An important task in size-based scheduling systems, is therefore, to identify and distinguish between mice and elephant flows. The trivial way of tracing the sizes of all flows is simply not scalable with increasing traffic, as flow-size tracking requires the maintenance of a flow-table, with the size-counter being updated/initialized on the arrival of every packet. Hence, the existing solutions face a roadblock when it comes to implementation, as most of them depend on tracing the sizes of all flows. Other known alternative is complex — [2] proposed a an implementation of the PS+PS strategy, by modifying the TCP protocol at end-hosts. This leads to other complications and unintended consequences, like reducing the randomness of initial sequence numbers, besides making it depend on end-host adaptations.

This work proposes a new way to perform size-based scheduling in a scalable way, taking a deviation from the conventional way of needing to track the size of flows. The proposed mechanism exploits the TCP behaviour during SS phase to detect and de-prioritize large flows, so as to give preference to small flows. We call the burst of packets in a congestion window that arrives at a bottleneck router a spike. This paper explores a mechanism to detect large flows, by detecting spikes with corresponding large sizes — indeed we call it spike-detection. The spike-detection mechanism detects large flows at times of ‘high’ load, and de-prioritizes them instantly. We show that, this along with a TLPS scheduling of flows, improve the response time of small flows, and also of other flows as long as the buffer length is below a certain threshold.

We detail on how to perform size-based scheduling using the new spike-detection mechanism in section 2, before discussing on the related works in section 3. The spike-detection mechanism is elaborated in section 4. In section 5, we present an integrated spike/packet model using fixed point approach. The performance of this new system is analyzed using simulations, the goals and settings of which are stated in section 6. The performance analysis and discussion of results are done in section 7.

Size-Based Scheduling Using Spike-Detection

We assume that a TCP flow either

— completes in its exponential-growth phase without any loss until completion (i.e., in the SS phase), or,

— experiences loss(es) and reduces its cwnd.

In the latter case, it will complete in either the SS phase or the congestion-avoidance phase depending on the kind of loss experienced. The emphasis here is that, the initial exponential growth of a flow is not limited by the value of ssthresh, but due the network bandwidth. As the capacity of the network core and that of the access links increase, it is reasonable to assume end-hosts will tune the TCP parameters lest the exponential-growth phase is clamped due to a limiting value of ssthresh.

Next we explain the system, referred from now on as TLPS/SD, with two important modules: (1) spike-detection (SD) mechanism, and (2) two-level-processor-sharing (TLPS) scheduling. The physical queue at the router is divided into two virtual queues, Qi and Q2. Each queue schedules flows using processor-sharing (PS) discipline. Strict priority scheduling is assumed between the two queues, with Qi having the higher priority. The queue Qi is served at line capacity C; whereas, Q2 is served only when Q1 is empty.

The decision on where to queue an arriving packet is based on the following — if it belongs to a large flow, it is sent to Q2; or else to Q1. The mechanism for classifying a flow as large is using the spike-detection mechanism. The spike-detection mechanism is triggered only when the length of Q1, in packets, exceeds a certain pre-defined value [. Once a flow is detected as large, it is de-prioritized, and all the further packets of the flow are sent to the low-priority queue, Q2.

Related Work

Size-based scheduling strategies can be classified into two, based on whether the strategies have knowledge of the flow size (flow service demand) in advance or not. They are anticipating and non-anticipating strategies.

Anticipating Strategies: These assume knowledge of flow size on its arrival to the system. One such policy is the shortest-remaining-processing-time (SRPT) policy, which always serves the flow in the system that needs the shortest remaining service time. SRPT is known to be optimal among all policies with respect to the mean response time1 [12]. The improvement of the response time brought by SRPT, over PS, becomes striking with the variability in the service time distribution. SRPT scheduling has been used effectively in web servers [6]. The disadvantage of the policy comes from the need to anticipate the flow size. While this information is available in web servers, schedulers in routers do not have prior knowledge of the size of an arriving flow.

Non-Anticipating Strategies: These policies instead use the ongoing size, or age, of a flow for taking scheduling decisions. The ongoing size of the flow is the size it has attained until the current scheduling instance. In the following, we review some important non-anticipating scheduling strategies.

Foreground-Background (FB) or LAS Scheduling: FB policy gives priority to the flow that has the minimum ongoing size among all the competing flows, and serves it before serving any other flow [9]. FB policy is shown to be optimal with respect to the mean response time when the distributions have decreasing hazard rate (DHR) [13]. The policy has been studied for flows at a bottleneck queue, in the name of LAS (least-attained-service) [11]. LAS not only decreases the delay and the loss rate of small flows compared to an FCFS packet scheduler with drop-tail buffer, but causes negligible increase in delay for large flows. But the implementation of LAS requires the knowledge of the running number of the packets of each flow, so as to find the youngest ongoing flow. This, along with the other drawbacks, such as unfairness and scalability issue, have motivated researchers to explore other means of giving priority to small flows, one such being the PS+PS model proposed in [2].

PS+PS Scheduling: The PS+PS scheduling uses two PS queues, with priority between them [2]. The first 0 packets of every flow are served in the high-priority queue, say Qi, and the remaining packets (if any) are served in the low-priority queue, say Q2. Hence all flows of size less than or equal to 0 get served in the high-priority queue. Q2 is served only when Q1 is empty. The mean of the flow size distribution thus turns out to be the mean of the truncated distribution.

It is proved that the PS+PS model reduces the mean overall response time (E[T]) in comparison to PS, for the DHR class of distributions. In addition, the authors proposed an implementation of this model; but it relies on TCP sequence numbers, requiring them to start from a set of possible initial numbers. This not only makes the scheme TCP-dependent, but also reduces the randomness of initial sequence numbers that TCP flows can have.

MLPS Discipline: The PS+PS model can be seen as a specific case of the multilevel-processor-sharing (MLPS) discipline [8]. In the context of prioritizing small flows, [1] demonstrates that the mean delay of a two-level MLPS can be close to that of FB in the case of Pareto and hyper-exponential distributions, belonging to the DHR class. An ideal implementation of an MLPS discipline would require the size information of flows in the system.

Sampling and Scheduling: An intuitive way to track large flows is is to use (real-time) sampling to detect large flows (thus classifying them), and use this information to perform size-based scheduling. SIFT, proposed in [10], uses probabilistic sampling [14], along with the PS+PS scheduler. A flow is ‘small’ as long as it is not sampled. All such undetected flows go to the higher priority queue until they are sampled. This system was analyzed using ‘average delay’ (average of the delay of all small flows, and all large flows) for varying load, as a performance metric. Though it is an important metric, it does not reveal the worst-case behaviours in the presence of sampling. This is more important here, as the sampling strategy has a disadvantage: there can be false positives; i.e., small flows if sampled will be sent to the lower priority queue. Deviating from this simple strategy, [4] proposed to use a threshold-based sampling, derived from the well-known ‘Sample and Hold’ strategy [5], along with PS+PS scheduling. In this policy, the size of a sampled flow is tracked until it crosses a threshold. This threshold can be the same as used in PS+PS scheduling to ensure that there are no false positives, but only false negatives. Though this reduces the number of packet processing required to track flows, by an order of magnitude, we later show that the performance attained by flows are better under TLPS/SD policy.

Spike-Detection Mechanism

As discussed earlier, we exploit the behaviour of TCP flows during SS phase to detect large flows. We refer to cwnd, the congestion window of a TCP flow, during the SS phase as ‘spike’. That is, the size of a spike is the size of cwnd during the SS phase. The size of a TCP flow with spike size 2n, is at least Ym=o 2l = 2V+1 — 1. We define a large flow as a flow that has a spike size greater than 2n packets. That is, all flows with size greater than or equal to 2v+1 packets are large flows. Note that (irrespective of whether the spike is detected or not) this definition of elephant flows is similar to that found in literature; i.e., flows with sizes beyond a pre-defined threshold are elephant flows.

The next question is, ‘how is a spike of size 2n detected?’. We focus on an outgoing bottleneck link at a router. For the model, we assume the queue Q1 to be of infinite size. Further, we assume that a TCP sender sends an entire cwnd at one go. This is a fair assumption for windows of moderate sizes. On parameters n and [, the values are such that, 0 < 2n < [.

To understand the system, consider the end of an idle period in Q1. Let n = 4. A new (TCP) flow joins the system. The flow is initially sent to Q1, where it comes with one packet in the first round. The packet is not dropped at the bottleneck in focus (as [ > 2n); therefore (and assuming it does not get dropped anywhere else in the path), the sender receives acknowledgement for the packet. So, in the next round (the next RTT), it sends two packets, in the third round four packets, and so on and so forth until Q1 starts building up. In the case of a single flow, the sender has to have a higher capacity than the bottleneck link for the queue to build up. Once the queue length exceeds 3 packets, the detection mechanism inspects the packets in the queue. Since 3 > 2n, the single flow contributed to more than 2n packets, and hence gets classified as a large flow, and is de-prioritized. This de-prioritization involves two steps: (i) the flow is tagged as 'large', and (ii) all the further packets of this large flow will be directed to Q2.

If there were multiple new flows that entered the system, then there is a possibility that no flow has more than 2n packets in the buffer even when the buffer length exceeded 3 packets. In this scenario, none of the flows get classified as large flow. Out of these, the small flows will complete before being able to send more than 2n packets. But, the large flows will continue (in their SS phases) coming with double the window size every round, thereby increasing the probability of getting detected. In the worst case, a large flow will go undetected until a maximum window size of 3 is reached. After that, it single-handedly causes the queue length to exceed 3 packets, thereby getting detected and de-prioritized. Observe that, if all the flows that cause the queue build-up were small, then they would share the bandwidth equally and complete in Q1.

In reality, a large burst of small flows might cause a finite-size queue to overflow. For large buffers this can be taken as a rare event; otherwise, the flow whose packet gets dropped switches to congestion-avoidance phase, and continues in Q1, until its window size gets detected.

Now, let us trace the cwnd of a new flow. The cwnd increases exponentially every RTT interval. If the size of the flow is less than 2V+1, the cwnd does not go higher than 2n (even if it enters congestion-avoidance phase). Every flow that has a size greater than or equal to 2n+1, will fall in either of the following:

— Completes in Q1: In this scenario, the flow does not cause the buffer to exceed 3 packets; or in other words, the flow never gets tagged as large. Hence it continues and completes in the SS phase. Obviously, this set of flows do not have cwnd greater than 3 packets. Therefore the maximum size of flows that complete during the SS phase is 23 — 1.

— Completes in Q2: This happens when the flow gets detected as large at some point during its life-time in Q1, and henceforth gets its remaining packets to be queued in Q2. No flow of size less than 2v+1 comes to this queue. Besides, the first 2v+1 packets (and possible more) of every flow that arrived at Q2, were served in Q1.

Note that, for de-prioritizing a flow detected and tagged as 'large', a flow-table is essential. This flow-table contains a flow identifier — a hash function of the common five-tuple of source and destination IP addresses, source and destination port addresses and protocol — and a field called on, a boolean, indicating if the flow is 'on' and not completed, in the considered interval. At the beginning of every interval, for each entry in the table, the on field is set to 0. An arriving packet that has a matching flow-id in the table, sets the corresponding on field to 1, if not already so. At the end of the interval, only those flows with on =1,are retained; others are removed. The length of the interval should be set to a value that corresponds to the mean off-time of flows.

Integrated Packet/Spike Model

In this section, we model the high-priority queue using fixed point approach. First, we formulate the throughput of the queue Q1 where arrivals and services are in spikes. Next, we model Q1 at packet level; and finally, we integrate both the models to obtain solution for throughput at Q1 as a function of the threshold 3 for Q1.

Spike Level

Here we model Q 1 , as a 'spike queue', where data arrive and get serviced as spikes. The spike arrivals are correlated, as the arrival of a spike of size 2x of a flow is possible only if none of the previous sizes (2®, i = 0 ...x — 1) is not detected (and hence sent to Q2). Similarly, the spike-size distribution is also dependent on the detection process.

For simplicity, we assume [ to be a power of 2. Let P1(y) denote the probability that a spike of size 2y packets is not detected. Similarly, let P2(y) denote the probability that none of the spikes with sizes greater than 2n packets and less than or equal to 2y packets is detected.

Let F denote the cumulative distribution of flow sizes (in packets). The spike-arrival rate, A, can be derived from the flow-arrival rate A, and the number of spikes brought by the flows.

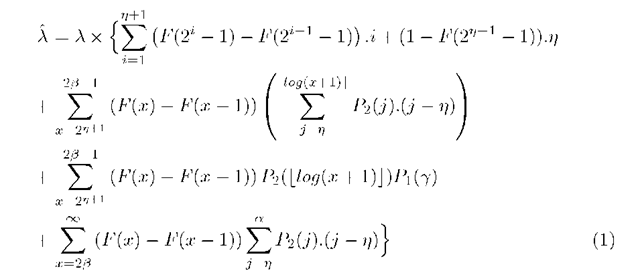

where

The first term in the above equation is the number of spikes contributed by all flows with sizes not greater 2v+1 — 1. All flows with sizes greater than 2v+1 — 1 will contribute at least n spikes, and is accounted in the second term. The last three terms take the correlation of spikes into consideration. The third and fourth terms account for the spikes generated by flows of size between 2v+1 — 1 and 23 — 1, conditioned that a spike of a specific size arrives at Q1 only if all the spikes of lower sizes also arrived at Q1. The same applies to the last term.

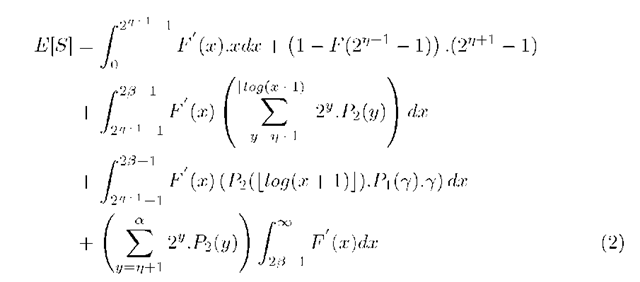

The mean spike-size, E[S], is obtained from the flow-size distribution, with dependence on when the flow gets detected; as once a flow is detected no more spike from that flow arrives at Q1.23 — 1, conditioned that a spike of a specific size arrives at Q1 only if all the spikes of lower sizes also arrived at Q1. The same applies to the last term.

The mean spike-size, E[S], is obtained from the flow-size distribution, with dependence on when the flow gets detected; as once a flow is detected no more spike from that flow arrives at Q1.

The throughput at Q1, given the line capacity C, can be written as,

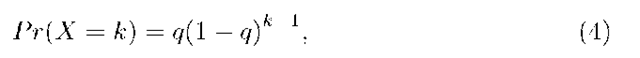

The queue Q1 is modeled at the packet-level as an MX/M/1 queue. That is, services are in packets, and arrivals are in batches, where a spike is taken as a batch of packets. The batch size X being geometrically distributed;

where 0 < q < 1. The value for q is set such that, 1/q = E[S]. The batch-arrival process is Poisson with rate A, the spike-arrival rate. The mean packet-arrival rate is A/q. Therefore, the load of this queue, p = tT.

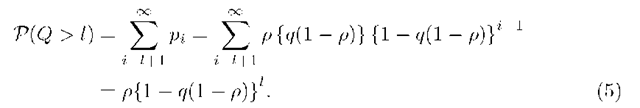

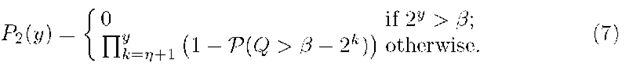

The probability that the length of the buffer exceeds l packets, P(Q > l), is a function of pi, the probability that there are i packets in the queue.

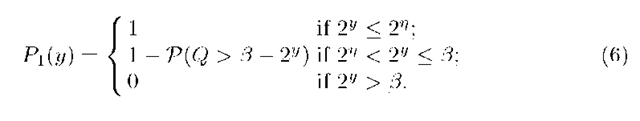

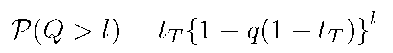

Given n and 3, the probability of not detecting a spike of size 2y packets,

Next, the probability that none of the spikes of sizes greater than 2n and less than or equal to 2y gets detected is,

Integrated Model

Equations (1) and (2) increase in (both) P1 and P2; and P1 and P2 decreases in P(Q > l). Therefore, tT is a decreasing function, say g, of P;

Now, P in Eq. (5) can be re-written as,

To solve by iteration, the above two equations can be written as,

and,

Therefore the throughput in Q1, and hence the mean response times of flows completing on Q1, is dependent on the parameter [, besides the arriving load.