1. Introduction

This article examines strategies for quantification from sectioned biological material at the electron microscope (EM) level. Recently there has been increasing demand for rigorous quantification and for linking morphological results with physiological and biochemical data. To achieve this it has been essential to develop a body of methods that allows accurate, unbiased, and efficient estimation of thin section and 3D quantities. This article describes current approaches to quantitation of both structure and immunolabeling. Sectioning is a powerful tool for revealing the internal structure of cells and tissue. At the ultrastructural level it provides samples that are thin enough for clear display of internal cell components and presents the opportunity to sample 3D specimens appropriately. Here the focus is exclusively on quantification on thin sections of biological material.

Structural display is the sine qua non of good quantitative EM because one cannot quantify what cannot be seen. Therefore optimal specimen preparation and structure contrasting lie at the heart of all successful quantification regimes; however, these lie outside the scope of this article (see Griffiths, 1993; Liou et al., 1996). Methods for molecular contrasting at the EM level are also now well established. These range from the less popular preembedding approaches, which use either particulate markers or enzyme-based methods that produce electron-dense reaction products, to the more widely used on-section labeling, which utilizes particulate markers. Particulate markers have the inherent advantage of being countable and have been shown to yield a signal that can report on antigen concentration. Currently the most important particulate marker system in widespread use is colloidal gold (Lucocq, 1993a), but other markers such as quantum dots (Giepmans et al., 2005) show promise of improved labeling quality.

2. The basics of sampling

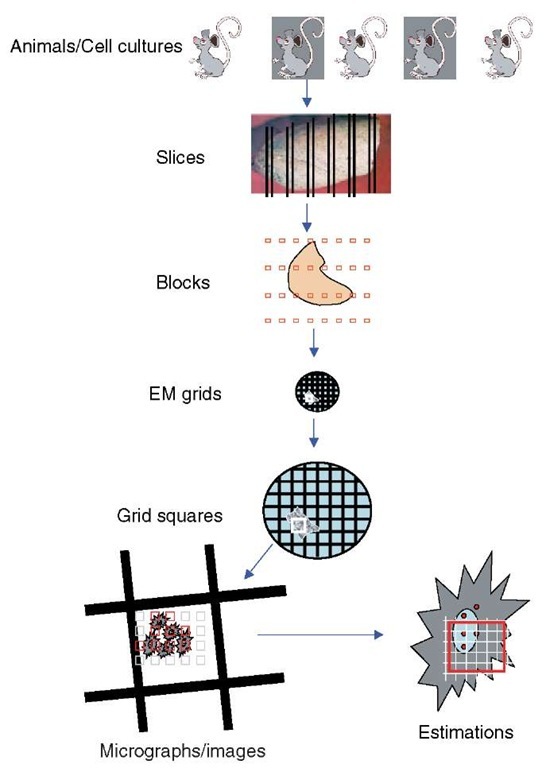

Using sections to provide readouts of structure or immunolabel introduces the problem of sampling (Lucocq, 1993b). All sections, whether they are immunolabeled or used for structural quantitation, are small samples ultimately derived from an animal/experiment, culture, organ, tissue, cell, or organelle. It is important that the information contained in the sections used is representative of the “experimental universe” from which they are drawn, whether this is a set of animals or cell cultures. To ensure this representation is fair, sampling must be strictly nonselective and so simple uniform random sampling is the mainstay of all EM quantitative approaches. In fact this is a minimum requirement at all levels of the sampling hierarchy (animals/experiments, dishes, blocks, sections, EM grids, EM grid holes; see Figure 1). In heterogeneous biological systems simple random sampling may not always be the most efficient strategy and can be modified to improve the precision of results obtained from a given amount of work. The best-known modification is systematic random sampling, in which a random start point ensures unbiasedness, and subsequent samples are spaced at regular intervals through the organ, specimen, block, or section (see Figure 1). When biological samples are heterogeneous, systematic random sampling is usually more efficient, partly because it spreads out “measurements” to sense local variations. By comparison, simple random samples may cluster in some regions and be sparse in others, and thereby over- and underrepresent those regions. Systematic random sampling is also simpler to set up than simple random sampling. As we shall see, depending on the intended readout it is almost always necessary to randomize the position of the section within the specimen of interest; for some parameters, it may also be important to randomize orientation.
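The systematic random scheme described above can be sketched in a few lines of Python. This is a minimal illustration only: the function name `systematic_random_sample` and its parameters are hypothetical, and the sketch assumes that the units at one level of the hierarchy (for example serial slices or EM grid holes) can simply be numbered in order.

```python
import random

def systematic_random_sample(n_items, n_samples, rng=None):
    """Return indices of a systematic uniform random sample.

    n_items   -- number of available units (e.g. slices, blocks, grid holes)
    n_samples -- approximate number of units wanted
    rng       -- optional random.Random instance (hypothetical convenience)
    """
    rng = rng or random.Random()
    if n_items <= 0 or n_samples <= 0:
        return []
    # Fixed sampling interval between successive samples.
    interval = max(1, n_items // n_samples)
    # The random start inside the first interval is what gives every
    # unit the same chance of being selected.
    start = rng.randrange(interval)
    return list(range(start, n_items, interval))

# Example: choose about 8 of 40 serial slices.
picks = systematic_random_sample(n_items=40, n_samples=8)
print(picks)
```

The same function could be reused at each level of the hierarchy, with an independent random start at every level.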

3. Immunolabel quantitation

Because labeling systems are applied to sections that produce essentially 2D images of structure, this represents the simplest system to illustrate principles of quantification. After the introduction of the particulate markers, many studies used purely qualitative assessment of labeling. However, assessing small changes in labeling intensity or amounts of dispersed label is difficult by eye and comparisons between conditions, or between different compartments, demanded unbiased quantitative measures. Sectioning opens up cellular compartments and makes them available to the immunogold labeling reagents that are applied to the sections. The random placement of sections at the lower end of a random sampling-based hierarchy therefore ensures that the components of compartments/structures in the animal/cell line have similar probability of encountering the reagents. This is an important advantage over permeabilization techniques in which penetration of the reagents is at the mercy of both structural variables and permeabilization conditions that are difficult to control (Griffiths, 1993).

In an ideal world there would be a direct and proportionate relationship between particle labeling and protein antigens irrespective of their location in the cell, but in reality the relationship is influenced by the structural context in which the antigen sits and by other factors such as modification of the antigen or the characteristics of the antibody probes. Thus, the number of particles labeling each protein (often termed the labeling efficiency) can vary from compartment to compartment or from structure to structure (Lucocq, 1994; Griffiths and Hoppeler, 1986). Important work from the Utrecht group (e.g., Posthuma et al., 1988) determined that this variation is due to differences in the penetration of labeling reagents and sought to eliminate these differences by embedding fixed cells or tissues in either polyacrylamide or Lowicryl resins. As things stand, most workers accept the underlying variation in labeling efficiency between compartments. It is also worth noting that labeling efficiency over the same compartment may remain constant from experiment to experiment, so that labeling intensity may correlate rather well with local changes in the concentration of antigen.

Figure 1 Sampling hierarchy. Quantitative estimations using electron microscopy are carried out on microscope viewing-screen images or micrographs of thin-sectioned material. These represent very small samples of the organ/tissue/cells from individual animals or cell cultures. The problem faced is how to sample slices, blocks, EM grids, EM grid holes, and micrographs, so that the estimations contain fair estimates of the parameters required. An absolute necessity is random selection of the items at each level of the sampling hierarchy. Increases in efficiency can be obtained from a systematic array of samples that is applied randomly. In the case shown here the organ has been systematically sampled first with slices and then as EM blocks. Sections from these blocks are sampled at a randomly selected EM grid hole and systematically spaced micrographs are taken. Finally a randomly positioned geometric probe with systematic lattice structure is applied to each micrograph (see text for details and discussion of cases in which randomization of orientation is also a requirement). In general, studies have found that higher levels of the hierarchy are the major contributors to the overall variance and it is advisable to study a minimum of three and preferably five animals/cultures/experiments. Experience has shown that 10-20 micrographs and 100-200 events at the estimation stage may be sufficient to reduce the contribution from this level to a minimum. At the intermediate levels of the hierarchy, the number required will be determined by the heterogeneity of the specimen.

3.1. Estimation of labeling (antigen) distribution

Estimating the distribution of particulate labeling is of fundamental importance in quantitative EM. Mapping studies enable informed judgements about the antigen distribution and are a good way of identifying the compartments or structures that contain pools of antigen. It is important to realize that while the distribution of labeling over a number of compartments/structures may be relatively insensitive to overall changes in the intensity of the labeling signal, it may be biased by focal changes in labeling intensities in ways that are difficult to interpret (see discussion of labeling density below).

Strategies for estimation of labeling distribution depend on obtaining a systematic sample from a representative labelled section. As described above, random sampling (simple or systematic) of animals/experiments, dishes, and blocks with random positioning of the section within the blocks ensures fair representation of different elements in the sampling hierarchy (Figure 1; note that the issue of how section orientation affects labeling efficiency has not been addressed systematically and it may be pertinent to include measures that randomize orientation as well as position in labeling studies (see below)). When thin sections are mounted on EM grids they may be of variable quality or may be so extensive as to preclude sampling the whole section area. Under these conditions smaller regions of the section can be selected by random (or systematic random) selection of EM grid holes containing sections of interest. It is worth reiterating the adage that one cannot quantify what cannot be seen and so adequate contrasting of sections of the appropriate thickness to reveal the structure of interest is important.

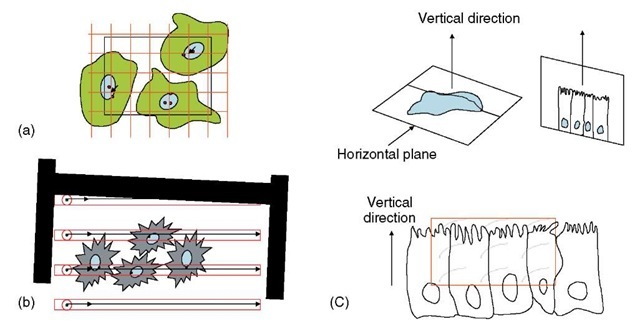

The most efficient way of sampling a selected labeled section (or more limited area of the section) is to obtain a systematic random sample and this can be achieved in a number of ways. In one approach, the selected section area can be scanned in strips at the electron microscope (Figure 2a), at a magnification at which the particulate marker and the structures of interest can be identified. Initially the magnification selected should be the lowest that allows both structures/compartments and particulate marker to be clearly seen as this maximizes the sample size. The strips are defined by translocation of the section under the screen and 10-20 of them are systematically spaced (with a random start) and cover the whole of the selected area (see Lucocq et al., 2004). A second approach is to take micrographs at systematic random locations across the selected area of the section (Figure 2b). Again the magnification used should be the minimum that allows both particles and structure to be visualized. The number of compartments assessed would depend on the underlying biology and the goals of the experiment (see Mayhew et al., 2002; Griffiths et al., 2001). Particles are counted and assigned to compartments during the scanning procedure and approximately 200 particles per grid are enough to describe the distribution over 10-15 compartments (Lucocq et al., 2004). If a more precise value of the labeling fraction in one particular compartment is required, then 100-200 particles should be examined over that individual compartment (Lucocq et al., 2004). This level of sampling has also been found to be sufficient in many stereological studies. With these guidelines in mind it may be pertinent at some stage of a study to increase or decrease the total number of sampled particles. 
Increases in the number of particles counted may be achieved simply by increasing sample size (scans or micrographs), while decreases follow from increasing the magnification used in the scanning approach or from decreasing the quadrat size on micrographs. It is generally advisable to apply unbiased counting rules to the scanning strips or to quadrats used for counting on micrographs, especially when the size of the gold particles becomes significant relative to the quadrat (more than one-hundredth of the quadrat size; Lucocq, 1994).

Figure 2 Labeling distribution. Systematic sampling of particulate immunolabeling can be achieved using all available sections on an EM grid, but usually an area of interest such as an EM grid hole (or holes) containing optimally contrasted section(s) is selected at random. In the scanning method (a), this area of interest is translocated under the viewing screen to create systematically spaced strips, initially at a magnification sufficient to visualize both the labeling and the structures of interest. The uppermost corner of the grid hole can be used as a randomly placed marker to position the scans. In the micrograph method (b), images (containing relevant cell compartments/labeling) are recorded at systematically spaced locations, again using the uppermost corner of the EM grid hole as a marker of random position.

Recently, powerful methods for comparing raw particle count distributions between groups have been introduced (Mayhew et al., 2004). The comparison uses contingency table analysis, with the degrees of freedom for the chi-squared value determined by the number of compartments and the number of experimental groups of cells (see Mayhew et al., 2004 for discussion and details). The method enables identification of the compartments/structures in which the major between-group differences reside.
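As an illustration of the contingency table analysis, the following Python sketch computes a standard Pearson chi-squared statistic from raw gold counts. It is not taken from Mayhew et al. (2004); the function name and the example counts are hypothetical. It assumes every group and every compartment contains at least one particle.

```python
def chi_squared_contingency(counts):
    """Pearson chi-squared analysis of raw gold particle counts.

    counts[g][c] is the number of gold particles counted for
    experimental group g over compartment c (hypothetical layout).
    Returns (chi2, degrees_of_freedom, per_cell_contributions).
    """
    n_groups = len(counts)
    n_comps = len(counts[0])
    row_totals = [sum(row) for row in counts]
    col_totals = [sum(counts[g][c] for g in range(n_groups))
                  for c in range(n_comps)]
    grand_total = sum(row_totals)

    contributions = []
    for g in range(n_groups):
        row = []
        for c in range(n_comps):
            # Count expected if the groups shared one common distribution.
            expected = row_totals[g] * col_totals[c] / grand_total
            row.append((counts[g][c] - expected) ** 2 / expected)
        contributions.append(row)

    chi2 = sum(sum(row) for row in contributions)
    dof = (n_groups - 1) * (n_comps - 1)
    return chi2, dof, contributions

# Hypothetical counts: two groups (rows) over three compartments (columns).
example = [[60, 30, 10],
           [30, 30, 40]]
chi2, dof, contrib = chi_squared_contingency(example)
print(chi2, dof)
```

The per-cell contributions show which compartments account for most of the total chi-squared value, which is how the compartments carrying the main between-group differences can be picked out.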

3.2. Estimation of labeling density

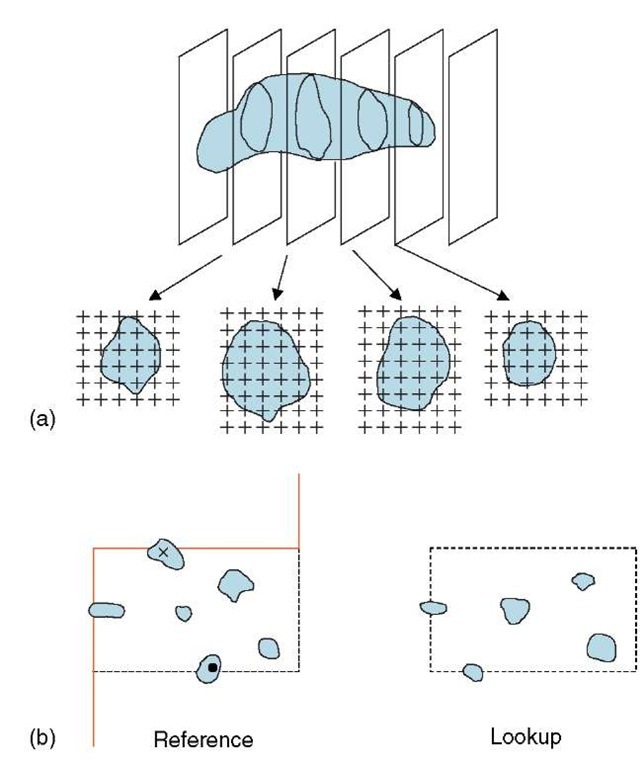

The local intensity of particulate immunolabeling reflects the local concentration of a component antigen (Griffiths, 1993) and is therefore a biochemically relevant quantity for that compartment/structure. The labeling intensity can be found by relating label to the size of a structure/compartment, and in the classical approach, labeling density is related to the absolute size of profiles displayed on the ultrathin sections. Profile sizes can be estimated from the interactions of profiles with geometrical probes such as points or lines applied as systematic test grids (see Figure 3a). Points are used to estimate organelle area and lines to estimate profile length (usually of membranes) (see Griffiths, 1993; Lucocq, 1994). Given the number of probe hits, the spacing of the probes in the grid, and the magnification, a simple formula converts the counts to areas or lengths from which the absolute density values can be estimated (see Figure 3). Notice that to ensure unbiasedness of the estimates, the grid of geometric probes is applied as a systematic series of points or lines. For unbiased estimations, there is a requirement for random placement of points (for area estimation) or random placement and orientation of lines (for profile length estimation), and of course for random sampling throughout the hierarchy (as yet the need for random orientation in 3D has not been systematically investigated; see discussion below for surface estimation). Traditionally when the grid is applied to micrographs/digital camera images, the density is reported per square micron of profile area or per micron length of membrane, thereby allowing interpretation and comparison with other studies. This type of density information can also be used in combination with section thickness measurements to estimate density of labeling over structures in 3D (see, e.g., Griffiths and Hoppeler, 1984).

Figure 3 Labeling density, volume, and surface density. Labeling density can be expressed as labeling per unit profile area or per unit length of profile. As shown in (a), the area can be estimated using point hits over the structure. The points can be defined as the corners of lattice squares and the profile area, A, can be computed from the product of the area associated with each point on the lattice (a/p) and the total number of point hits (ΣP) on the structure. Here, there are two point hits (arrows) over the nucleoplasm and the number of labeling particles is two. Random placement of the lattice relative to the specimen is a requirement. The profile length of nuclear envelope (here represented by a single membrane trace) can be estimated from the number of intersections (I) of the envelope with lattice lines (in this case 9), which should be randomly oriented and positioned relative to the membrane profiles. Trace length is computed using (π/4)Id, where d is the real distance between the lines ((π/2)Id is the formula if only one set of parallel lines is used). Assignment of gold particles to membranes (in this case 3) is decided on the basis of acceptance zones within which dispersion of label is considered to be possible, for example, two particle widths for 10-nm diameter particles (see Griffiths, 1989a). As described in the text, labeling may be related to point counts or intersections without conversion to absolute units. Relating labeling to intersection counts can also be achieved by an adaptation of the scanning approach in which gold particle counts are related to intersections of structures with a scanning line, traced by a feature on the EM viewing screen as the section moves relative to it (b).

The systematic lattice illustrated in (a) can also be used to estimate volume and surface density in 3D stereologically. For unbiased estimation of volume density, the lattice is randomly placed inside the specimen by ensuring random selection/placement at all levels in the hierarchy. The fraction of points that fall over the structure of interest, relative to a reference space, reports on the volume density of the structure in the reference space.

Intersection of structures with lattice lines (I) enables estimation of the surface density using the formula 2I/L, where L is the total line length applied to the specimen. Either the lines or the specimen must be randomly placed and oriented in space for unbiased estimations (see text for some details on generating isotropic lines). Panel (c) illustrates one method of generating isotropic uniform random lines in space. A recognizable horizontal plane (in this case the base of a columnar cell sheet) enables a vertical direction to be identified. A section along the vertical direction is taken in uniform random position and at a random angle of rotation around the vertical axis. On this section plane, isotropic lines can be represented by sine-weighted lines, for example, cycloid arcs, usually applied as a systematic lattice (test system in red quadrat of figure). Intersections of linear profiles with these test lines allow surface density to be estimated according to the formula 2I/L (see Baddeley et al., 1987; Howard and Reed, 1997 for details).
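The conversion from probe counts to absolute labeling densities can be sketched as follows. The function names are hypothetical, and the lattice spacing of 0.5 µm is an invented value chosen only to make the arithmetic concrete. The coefficients π/4 (square lattice) and π/2 (a single set of parallel lines) are the standard ones for randomly oriented test lines and are assumed here to be those intended in the Figure 3 legend. The counts are those quoted in the legend (two point hits and two particles over the nucleoplasm; nine intersections and three particles on the nuclear envelope).

```python
import math

def profile_area(point_hits, area_per_point):
    """Profile area A = (a/p) x total point hits, in real units."""
    return area_per_point * point_hits

def profile_length(intersections, line_spacing, both_line_sets=True):
    """Trace length from intersection counts with randomly oriented lines.

    Uses (pi/4) * I * d for a square lattice (two sets of lines) and
    (pi/2) * I * d for one set of parallel lines (assumed coefficients).
    """
    factor = math.pi / 4 if both_line_sets else math.pi / 2
    return factor * intersections * line_spacing

def labeling_density(gold_count, size):
    """Gold particles per unit area or per unit length."""
    return gold_count / size

d = 0.5                      # hypothetical real lattice spacing in micrometres
a_per_p = d * d              # area associated with each lattice point

area = profile_area(point_hits=2, area_per_point=a_per_p)
length = profile_length(intersections=9, line_spacing=d)

print(labeling_density(2, area))     # gold per square micrometre of nucleoplasm
print(labeling_density(3, length))   # gold per micrometre of nuclear envelope
```

In practice the counts would be summed over all micrographs in the sample before forming the ratio, rather than computed profile by profile.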

More recently, for certain comparative purposes, particulate labeling has been related to point or intersection counts without “converting” to absolute area or length units (see Figure 3). Density can be expressed as gold particles per point or intersection (Watt et al., 2002; 2004; Mayhew et al., 2002) and this index of labeling intensity has been used to test for preferential labeling over individual or groups of compartments. In one approach, the labeling densities are compared to those obtained if the existing gold labeling were randomly spread over all compartments to provide a relative labeling index (RLI; see Mayhew et al., 1996, 1999). The intensity data can be obtained on micrographs or from scanning in strips at the electron microscope using the viewing screen in which a feature acts to trace a scanning line for intersection counting (see Figure 3b). This type of approach cannot at present be applied to both organelle and membrane surfaces simultaneously.
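A relative labeling index of this kind can be sketched in Python as the ratio of observed gold counts to the counts expected if the same total label were spread over compartments in proportion to their point counts. The function name, compartment names, and numbers below are hypothetical, and the sketch assumes every compartment receives at least one point hit.

```python
def relative_labeling_index(gold, points):
    """Observed/expected gold counts per compartment.

    gold   -- dict of compartment name -> observed gold particle count
    points -- dict of compartment name -> test point hits on that compartment
    Expected counts assume the total gold is spread randomly, i.e. in
    proportion to the point hits.
    """
    total_gold = sum(gold.values())
    total_points = sum(points.values())
    rli = {}
    for name in gold:
        expected = total_gold * points[name] / total_points
        rli[name] = gold[name] / expected
    return rli

# Hypothetical counts for three compartments.
gold = {"nucleus": 10, "mitochondria": 60, "cytosol": 30}
points = {"nucleus": 50, "mitochondria": 25, "cytosol": 125}
print(relative_labeling_index(gold, points))
```

An index above 1 indicates that a compartment carries more label than expected from random spread, and an index below 1 indicates less.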

4. Structure quantification

Analysis of structural parameters in 3D from sections requires specifically designed stereology that employs sampling with geometrical probes. The geometrical probes are generally applied to sections as systematic arrays of points, lines, planes, or brick-shaped volumes, with strict rules for multistage random sampling of animals, cells, blocks, sections, and micrographs. Simple formulae convert the counts into 3D quantities. As a rule of thumb the dimensions of the probe and of the parameter to be estimated must add up to at least three; for example, points (0D) can be used only for volume estimation (3D), whereas lines (1D), but not points, may be used to estimate surface (2D).

4.1. Volume fraction and surface density

Volume fraction and surface density are simply measures of the concentration of volume or surface inside the volume of some reference structure (see Figure 3a; for reasons of clarity and simplicity, length density estimation is not dealt with here; see Howard and Reed, 1998 for details). Volume fraction can be estimated using an array of points that is placed at a random location within a specimen. The fraction of points that fall on a structure relative to a reference space/structure is an estimate of the fraction of that space occupied by the structure of interest. This is an extension of the principle outlined by Delesse (1847; see also Thompson, 1930), which states that the fractional area displayed by a structure reports on the fraction of the reference space occupied by that structure. The random/systematic random positioning of the points on the section again depends on correct sampling throughout the hierarchy, down to the level of the section and the systematic array of probes applied as a grid to the micrograph (Figure 3a). The orientation of the specimen can be arbitrary. Importantly, the readout is a ratio and is therefore sensitive to changes in the volume of both the structure and the reference space. It is therefore advisable to have information on the size of the reference space (see below), or at least its stability. Once the reference space volume is known, the absolute volume of the structure inside that space can be computed easily from the product of the volume fraction and the reference space volume. Volume fraction has been one of the most used estimators in electron microscopy but is less often combined with reference space volume estimation.
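The point-counting arithmetic for volume fraction is simple enough to show directly. In this sketch the function names and the counts are hypothetical; the point hits are assumed to have been summed over all micrographs before the ratio is formed.

```python
def volume_fraction(points_on_structure, points_on_reference):
    """Fraction of the reference space occupied by the structure.

    Both arguments are total point hits summed over the whole sample;
    points on the structure are also points on the reference space.
    """
    return points_on_structure / points_on_reference

def absolute_volume(vol_fraction, reference_volume):
    """Absolute structure volume = volume fraction x reference volume."""
    return vol_fraction * reference_volume

# Hypothetical example: 36 of 400 points over the cell hit mitochondria,
# and the cell volume has been estimated separately as 2000 cubic micrometres.
vv = volume_fraction(36, 400)
print(vv)
print(absolute_volume(vv, 2000.0))
```

Because the fraction is a ratio, a change in it may reflect a change in the structure, in the reference space, or in both, which is why the separate reference volume estimate matters.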

Surface density is usually expressed relative to a reference volume. In cell biology, this might represent the packing density of nuclear membrane in the cytoplasm or of mitochondrial cristae within mitochondria. Again caution is required in interpreting the readout ratio, and knowledge of the reference space size enables absolute values for surface to be estimated from the product of surface density and reference volume. The estimates are most often made using systematic arrays of line probes that are randomly positioned and randomly oriented in 3D space, relative to the specimen (Figure 3b). A number of strategies have been developed to ensure randomness of orientation (isotropy) of probe or specimen. These include embedding the specimen in an isotropic sphere, which is rolled before embedment (the isector; Nyengaard and Gundersen, 1992); dicing tissue/cell pellets and allowing them to settle haphazardly (which is less rigorous; Stringer et al., 1982); and making two successive positioned oriented sections through a structure (the orientator; Mattfeldt et al., 1990). Finally, a powerful way to generate isotropic lines in space is to orient sections in a fixed direction vertical to an identifiable horizontal plane associated with the object, and then generate sine-weighted isotropic lines on the sections (vertical section method; Baddeley et al., 1986; Michel and Cruz-Orive, 1988; see Figure 3c). This elegant solution allows orientation of sections along specific directions that display features of cell organization such as polarity and is well suited to surface estimation in cell monolayers sectioned in a direction perpendicular to the culture dish. Irrespective of how the isotropy is generated, the number of intersections of lines with the surface features of interest can be easily converted into a surface density value. The product of surface density and reference volume will then provide an estimate of the total surface (see Figure 3 legend and Griffiths et al., 1984, 1989a,b for examples).
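The conversion of intersection counts into surface density follows the formula 2I/L given in the Figure 3 legend. The sketch below uses hypothetical function names and invented counts, and assumes the test line length has already been converted to real specimen units.

```python
def surface_density(intersections, total_line_length):
    """Surface density Sv = 2I / L.

    intersections     -- total intersections of isotropic test lines with
                         the surface trace, summed over the sample
    total_line_length -- total test line length applied over the reference
                         space, in real units (image length / magnification)
    """
    return 2.0 * intersections / total_line_length

def total_surface(surf_density, reference_volume):
    """Absolute surface = surface density x reference volume."""
    return surf_density * reference_volume

# Hypothetical example: 50 intersections along 200 micrometres of test line,
# inside a reference space of 1000 cubic micrometres.
sv = surface_density(50, 200.0)
print(sv)                           # square micrometres per cubic micrometre
print(total_surface(sv, 1000.0))    # square micrometres
```

The formula is only unbiased if the lines are isotropic relative to the specimen, so the code is meaningful only after one of the isotropy strategies above has been applied.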

For both volume and surface density estimations, errors may arise when structures are small compared to the section thickness (e.g., 50-200 nm). Peripheral grazing sections result in lost caps, which decrease the number of point or line encounters and lead to underestimation of surface or volume density. On the other hand, as structures become small relative to the section thickness, they overproject their profiles into the final image and lead to overestimation of these densities. Correction factors based on model shapes for the small structures are available but these should be used under carefully controlled conditions in which the models used correspond closely to the morphology of the structure in question (Weibel and Paumgartner, 1978; Weibel, 1979).

4.2. Reference volume

The volumes of organs, tissue components, cells, or organelles are of interest in their own right, but they also represent important reference spaces with which stereological densities can be converted into absolute values.

One of the most powerful methods for volume estimation is that devised by the seventeenth century priest Bonaventura Cavalieri, who was a disciple of Galileo (Cavalieri, 1635). This approach is well suited to EM slices that are relatively thin compared to the object (a similar principle can also be applied using sections that are relatively thick; Gual-Arnau and Cruz-Orive, 1998). A series of sections through the entire object is prepared at a regular spacing, with the first section lying at a uniform random start location within the section interval. The area of the object profiles as displayed on these sections is estimated by counting point hits of a lattice grid overlaid on the object profile (Gundersen and Jensen, 1987). A simple formula converts the point counts summed over all sections into the estimated object volume (Figure 4a). Importantly, 5-10 sections are generally enough to obtain precise estimates of object volume irrespective of orientation and shape. The precision of the volume estimate obtained using the Cavalieri method can be assessed as the coefficient of error (Gundersen and Jensen, 1987), which decreases as the number of sections (sampling intensity) is increased. The Cavalieri method is now widely used in light microscopy and radiology (Roberts et al., 2000) and in electron microscopy it is an excellent method for cell or organelle size estimation that can be combined with other estimators (Lucocq et al., 1989; McCullough and Lucocq, 2005). Other methods for estimating particle volume are outlined below.
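The Cavalieri calculation can be sketched as follows, using the quantities defined in the Figure 4 legend (area per point a/p, summed point hits, section thickness t, and number of sections per interval ns). The function name and all numerical values are hypothetical.

```python
def cavalieri_volume(point_hits_per_section, area_per_point,
                     section_thickness, sections_per_interval):
    """Cavalieri volume estimate V = A x k.

    A = (a/p) x sum of point hits over all sampled sections
    k = section thickness x number of sections in each sampling interval
    All lengths must be in the same real units.
    """
    total_profile_area = area_per_point * sum(point_hits_per_section)
    spacing = section_thickness * sections_per_interval
    return total_profile_area * spacing

# Hypothetical example: six sampled sections through one cell, a lattice with
# 0.04 square micrometres per point, 70 nm sections, every 10th section sampled.
hits = [12, 20, 25, 18, 9, 4]
volume = cavalieri_volume(hits, area_per_point=0.04,
                          section_thickness=0.07, sections_per_interval=10)
print(volume)   # cubic micrometres
```

The first sampled section would be chosen at a random position within the first interval of ten sections, so that the whole series is a systematic random sample.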

Figure 4 Volume and particle number. (a) Volume estimation using the Cavalieri sections method in EM. A systematic sample of equally spaced sections (usually 5-10) is placed at a random start location. The total profile area (A) is the product of the area associated with each point on the lattice (a/p) and the total number of point hits on the profiles (ΣP). The distance between the sections (k) is obtained from the product of the section thickness (t) and the number of sections in each interval (ns). The volume estimate is computed from A × k. (b) Principle of using a disector to count particles. In the reference section, particle profiles are selected if they are enclosed entirely in the quadrat or crossed by the dotted acceptance line. Profiles that encounter the continuous forbidden line are ignored. Selected particles that disappear in the lookup (•) section are counted. Although the particle (x) has no profile in the lookup it is not counted because its profile hits the forbidden line. See Gundersen (1988) for further details.

4.3. Counting and sampling in 3D

The problem with sampling or counting items from 3D space with sections of cells or tissues is that the probability that a structure appears in a section is related to its size (actually its height in the direction of sectioning) and not its number. For many years quantitative biologists attempted to circumvent the problem by assuming model shapes, thereby enabling conversion of profile size distributions into particle numbers. Such model-based approaches (see Weibel, 1979 for discussion) can be inherently biased and the breakthrough in number estimation and sampling came in 1984 when Sterio reported using a two-section approach to identify and count particles in 3D space (Sterio, 1984; Mayhew and Gundersen, 1996). The essence of this approach is to sample profiles of particles displayed in a section using two-dimensional unbiased counting rules applied to a quadrat (Figure 4b) and to assess which of the selected particles are then not displayed in a second parallel section. This amounts to counting particle tops or edges and is particularly suited if the particles are entirely convex and therefore do not contain multiple edges. For vesicles or spheroidal or tubular structures such as nuclei or particulate mitochondria, this is not a problem. However for more complex organelles such as Golgi apparatus or interconnected mitochondria, the number of edges may be multiple and varied. The union of the sampling section (and its quadrat) with the second section (the lookup) is termed a disector. If disectors are placed at random or systematic random through the specimen, then the probability that a particle (nucleus, vesicle, etc.) is selected in this way is related only to its number. Once selected, this sampled structure can be further characterized. Implementation of disectors at the EM level demands accurate positioning of fields for sampling and lookup at appropriate magnifications. 
Important questions arise as to the distance between the sections in a disector relative to the size of the particles in the population. Clearly the sections must be close enough to avoid missing any particles that lie between them. In addition, because the method is based on comparing the profiles in the sampling section and the lookup section, it is advisable to keep the sections close enough for particles cut by both sections to be used as a reference. As a general guide, a disector height of about one-third the height of the average particle is usually sufficient. Since the preparation of disectors for use in EM is fairly labor intensive, each section in the disector can be used alternately as the reference and the lookup to improve efficiency (see Smythe et al., 1989).

4.3.1. Uses of disectors

One straightforward readout from disectors is the particle density in the volume of any reference space that can be identified in the disector. The volume of this reference space can be estimated using point counting applied to the structures displayed in the quadrat used for sampling. Interestingly, when the particles themselves are taken as the reference space, the reciprocal of the particle density turns out to be the mean number-weighted particle volume (Gundersen, 1986). The volume of particles can also be assessed using the Cavalieri estimator, although this requires further examination of a section stack through the entire sampled particle. At the EM level disectors have been used to sample and count coated endocytic structures (Smythe et al., 1989) and to characterize mitotic Golgi fragments (Lucocq et al., 1989).
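The disector readouts described here can be sketched in Python. The function names and numbers are hypothetical. The sketch assumes the disector height is known (section thickness multiplied by the number of section intervals between the sampling and lookup sections) and that reference space area within the quadrats is estimated by point counting.

```python
def disector_volume(point_hits, area_per_point, disector_height):
    """Volume of reference space sampled by the disectors.

    point_hits      -- points over the reference space inside the quadrats,
                       summed over all disectors
    area_per_point  -- real area associated with each lattice point (a/p)
    disector_height -- real distance between sampling and lookup sections
    """
    return point_hits * area_per_point * disector_height

def numerical_density(particles_counted, reference_volume):
    """Particles per unit volume of reference space."""
    return particles_counted / reference_volume

def mean_particle_volume(particles_counted, particle_point_hits,
                         area_per_point, disector_height):
    """Mean number-weighted particle volume.

    This is the reciprocal of the numerical density obtained when the
    particles themselves serve as the reference space.
    """
    particle_space = disector_volume(particle_point_hits, area_per_point,
                                     disector_height)
    return particle_space / particles_counted

# Hypothetical example: 30 particles counted, 150 point hits on cytoplasm,
# 12 point hits on particle profiles, a/p = 0.5 um^2, disector height 0.1 um.
ref_vol = disector_volume(150, 0.5, 0.1)
print(numerical_density(30, ref_vol))           # particles per cubic micrometre
print(mean_particle_volume(30, 12, 0.5, 0.1))   # cubic micrometres
```

Only particles selected by the unbiased counting rule in the sampling section and absent from the lookup section should contribute to `particles_counted`.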

An elegant application of the disector can be used to estimate the numerical ratio of a small structure to a larger one (e.g., the number of peroxisomes per cell nucleus; Gundersen, 1986). In a stack of sections one pair of sections is used to sample the larger structure and estimate its numerical density in a reference space. At a random location within this disector interval, a smaller disector, composed of two more closely spaced sections, is then used to sample the smaller structure and estimate its density. The ratio of the two densities reports the numerical ratio of the two structures. Since both estimates are obtained using the same average section thickness, neither a section thickness measurement nor a determination of the total reference volume is required. Double disectors have been used in electron microscopy to estimate the number of coated pits per cell (Smythe et al., 1989), the number of Golgi fragments/vesicles per cell (Lucocq et al., 1989), the number of synapses (Jastrow et al., 1997; Mayhew, 1996), and the number of gold particles per cell (Lucocq, 1992).
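The cancellation of section thickness in the double disector can be made explicit in a short sketch. The function name and the counts are hypothetical. Disector heights are expressed as numbers of section intervals, on the stated assumption that both disectors share the same average section thickness, and reference areas are assumed to come from point counting on the sampling quadrats.

```python
def double_disector_ratio(small_count, small_intervals, small_ref_area,
                          large_count, large_intervals, large_ref_area):
    """Number of small structures per large structure.

    *_count     -- particles counted with each disector
    *_intervals -- disector height in units of section thickness
    *_ref_area  -- reference space area sampled by each disector
    Section thickness appears in both densities and cancels in the ratio.
    """
    small_density = small_count / (small_intervals * small_ref_area)
    large_density = large_count / (large_intervals * large_ref_area)
    return small_density / large_density

# Hypothetical example: 40 vesicles counted with adjacent sections over
# 50 um^2, and 8 nuclei counted with sections 10 intervals apart over 200 um^2.
ratio = double_disector_ratio(40, 1, 50.0, 8, 10, 200.0)
print(ratio)   # estimated vesicles per nucleus
```

If each cell contains one nucleus, this ratio is also an estimate of the number of small structures per cell.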

In the case of small structures such as vesicles there is a special case in which none of the structures sectioned by the ultrathin section has a profile in the next section. The probability of finding a disappearing profile is then 1, and under these conditions a lookup section may not be needed. This convenient adaptation of the disector must be used with caution, and preliminary work is needed to determine the proportion of structures that are detected by one section but disappear in the next. This approach has been used to count Golgi vesicles in dividing HeLa cells (Lucocq et al., 1989).

4.4. Particle volume

As already discussed, the volume of structures sampled using disectors can be estimated either by using the Cavalieri sections method or from the reciprocal of the particle density in the disector. However, there are other methods for particle volume estimation. Unlike those just mentioned, they all require some element of isotropy. The selector (Cruz-Orive, 1987) samples particles with disectors and uses a systematic stack of sections through the particle to place sampling points within the particle volume. From these points line probes are used to estimate the volume of the particle. The distance between the sections need not be known but the orientation of the line probes must be isotropic. A refinement of the selector principle is the nucleator (Gundersen et al., 1988), which uses a central structure such as a nucleolus that can be sampled with constant probability as a source for the isotropic lines (Henrique et al., 2001). This tends to be more efficient than the selector and uses fewer sections. Another powerful method is the rotator, which takes advantage of the Pappus theorem. This states that the volume swept out by rotating a figure about an axis is the product of the area of the figure and the distance traveled by its center of gravity (Jensen and Gundersen, 1993). This makes it possible to estimate the volume of an object when sections lie along a defined axis but are allowed to assume any random angle of rotation around this axis. This approach has been used at the EM level to estimate cell volume around the centriolar structures and the volume of the endoplasmic reticulum at different locations within mitotic HeLa cells (McCullough and Lucocq, 2005; Mironov and Mironov, 1998). Finally, it is also worth mentioning the point sample intercept method (Gundersen and Jensen, 1985; Gundersen et al., 1988), which allows estimation of particle volume on single sections. Points are used to sample particles, and isotropic lines through these points are used to estimate particle volume; however, because points hit particles in proportion to their volume, the resulting mean is weighted according to the volume and not the number of particles.
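Two of these estimators reduce to simple formulas once the line measurements are in hand, and can be sketched as below. The sketch uses the forms commonly quoted for the nucleator, v = (4π/3)·mean(l³), and for point-sampled intercepts, v_V = (π/3)·mean(l₀³); the function names and measurements are hypothetical, and the sketch assumes that the line directions are isotropic in 3D.

```python
import math


def nucleator_volume(distances):
    """Nucleator estimate of particle volume.

    distances -- lengths measured from the central point (e.g. a nucleolus)
                 to the particle boundary along isotropic directions.
    """
    if not distances:
        raise ValueError("at least one measurement is required")
    mean_cubed = sum(d ** 3 for d in distances) / len(distances)
    return (4.0 * math.pi / 3.0) * mean_cubed


def point_sampled_intercept_volume(intercepts):
    """Volume-weighted mean particle volume from point-sampled intercepts.

    intercepts -- full intercept lengths, boundary to boundary, measured along
                  isotropic lines through points that hit particles.
    """
    if not intercepts:
        raise ValueError("at least one measurement is required")
    mean_cubed = sum(l ** 3 for l in intercepts) / len(intercepts)
    return (math.pi / 3.0) * mean_cubed


# Sanity check for the nucleator: every ray from the center of a sphere of
# radius 1 has length 1, so the estimate equals the sphere volume 4*pi/3.
sphere_estimate = nucleator_volume([1.0, 1.0, 1.0, 1.0])

# Made-up intercept lengths (arbitrary units) for the volume-weighted estimate.
weighted_estimate = point_sampled_intercept_volume([2.0, 2.0])
```

Because both estimators average cubed lengths, a few long measurements dominate the result, which is one reason why sampling design and an adequate number of particles matter in practice.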

5. 2D and 3D spatial distributions

Quantitation of total or aggregate quantities has been the main focus of EM quantitation and is often referred to as first-order stereology. However, the statistical study of spatial distributions is of biological value in both 2D and 3D and has been referred to as second-order stereology.

Statistical approaches for assessing the distribution of point processes in 2D have been presented by Diggle (1983) and Ripley (1981). The first-order property of such a point pattern is its intensity, expressed as the number of items per unit area. The second-order property is characterized, for example, by Ripley’s K function, which is sensitive to the distribution of interparticle distances: the observed number of neighbors within a given distance of any point is compared with the number expected for a random pattern. Membrane lawns and freeze fracture techniques present 2D surfaces that can be labeled with particulate markers, and the distribution of the labeling provides clues to the clustering of receptors, lipids, and downstream signaling molecules. These principles have recently been applied to examine protein distribution at the plasma membrane (Prior et al., 2003). Development of 3D spatial analysis is in its infancy, but the use of linear dipole probes has shown some promise in assessing the spatial distribution of volume features at the EM level (Mayhew, 1999; see also Reed and Howard, 1999). Molecular or subcellular organelle distributions have yet to be examined using this methodology.
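As an illustration of the second-order analysis, a naive estimate of Ripley's K function for a set of gold particle coordinates can be sketched as follows. This is a simplified version that applies no edge correction, which a real analysis would normally include; the function names and coordinates are hypothetical. It uses the estimator K(r) = A/(n(n−1)) × (number of ordered pairs of points separated by at most r), where A is the area of the observation window. For a completely random pattern K(r) is expected to be πr², so the transformed function L(r) = √(K(r)/π) is expected to equal r, and values above r suggest clustering.

```python
import math


def ripley_k(points, radius, area):
    """Naive Ripley's K estimate (no edge correction).

    points -- list of (x, y) particle coordinates
    radius -- distance r at which K is evaluated
    area   -- area of the observation window, in squared coordinate units
    """
    n = len(points)
    if n < 2:
        raise ValueError("at least two points are required")
    close_pairs = 0
    for i, (xi, yi) in enumerate(points):
        for j, (xj, yj) in enumerate(points):
            # Count ordered pairs of distinct points within the radius.
            if i != j and math.hypot(xi - xj, yi - yj) <= radius:
                close_pairs += 1
    return area * close_pairs / (n * (n - 1))


def ripley_l(points, radius, area):
    """Variance-stabilized form: L(r) = sqrt(K(r) / pi)."""
    return math.sqrt(ripley_k(points, radius, area) / math.pi)


# Made-up coordinates: two neighboring particles and one distant particle
# in a 10 x 10 window.
particles = [(0.0, 0.0), (1.0, 0.0), (5.0, 5.0)]
k_value = ripley_k(particles, radius=1.5, area=100.0)
l_value = ripley_l(particles, radius=1.5, area=100.0)
```

The double loop is quadratic in the number of particles, which is adequate for the modest particle counts of a single micrograph; significance is usually judged against simulated random patterns rather than from a single K value.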

Finally, this review covers only some of the basic principles and the reader is referred to more extensive basic texts (such as Howard and Reed, 1998) and to the International Society for Stereology (ISS) website (http://www.stereologysociety.org/) for further details. Advice from those experienced in this field can be invaluable to anyone embarking on a quantitative EM study.