BLOB Classification

Each of the objects in Fig. 7.6 has now been identified as separate BLOBs using, for example, the Grass-Fire algorithm. The task is now to determine which BLOB is a circle and which is not. As suggested above we can use the circularity feature for this purpose. In Fig. 7.6 the values of the circularity of the different BLOBs are listed. The question is now how to define which BLOBs are circles and which are not based on their feature values. For this purpose we make a prototype model of the object we are looking for. That is, what are the feature values of a perfect circle and what kind of deviation will we accept? In a perfect world we will not accept any deviations from the prototype, but in practice the object or the segmentation of the object will not be perfect so we have to accept some deviations. For our example with the circles, we can define the prototype to have a circularity of 1 and a deviation of ±0.15, meaning that BLOBs with circularity values in the range [0.85, 1.15] are accepted as circles.
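The acceptance test described above can be sketched in a few lines. This is a minimal illustration, assuming the circularity of each BLOB has already been computed; the function name `is_circle` and the example values are hypothetical and are not the values listed in Fig. 7.6.

```python
def is_circle(circularity, low=0.85, high=1.15):
    """Accept a BLOB as a circle if its circularity lies in [low, high].

    The defaults correspond to a prototype of 1 with a deviation of 0.15.
    """
    return low <= circularity <= high


# Hypothetical circularity values for four BLOBs (illustration only).
blobs = {"A": 0.97, "B": 0.31, "C": 1.10, "D": 0.62}

circles = [name for name, c in blobs.items() if is_circle(c)]
print(circles)  # ['A', 'C']
```

Widening or narrowing the interval trades missed circles against falsely accepted non-circles, so the deviation is best chosen by looking at real segmentation results.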

What if we are looking for large circles? For this task one feature is not sufficient and we therefore use both the circularity and the area, see Fig. 7.6. These two features span a 2-dimensional feature space as seen in Fig. 7.7. The prototype model will now be two-dimensional with each feature having an allowed range. Together these two ranges form a rectangle: if a BLOB in an image has feature values inside the dashed rectangle, then it is a large circle; otherwise it is not, see Fig. 7.7. This approach is known as a box classifier and the area of the rectangle is known as the decision region.
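A box classifier amounts to one range check per feature. The sketch below assumes a feature vector of the form (circularity, area); the area range of 4000 to 6000 pixels is an invented value for illustration, and `box_classify` is a hypothetical helper name.

```python
def box_classify(feature_vector, ranges):
    """Return True if every feature lies inside its allowed [low, high] range."""
    return all(low <= value <= high
               for value, (low, high) in zip(feature_vector, ranges))


# Assumed decision region: circularity in [0.85, 1.15], area in [4000, 6000].
LARGE_CIRCLE = [(0.85, 1.15), (4000, 6000)]

print(box_classify((0.95, 5100), LARGE_CIRCLE))  # True: inside both ranges
print(box_classify((0.95, 900), LARGE_CIRCLE))   # False: circular but too small
print(box_classify((0.40, 5100), LARGE_CIRCLE))  # False: large but not circular
```

Because the function loops over the ranges, the same code works for any number of features; the decision region then becomes a box in a higher-dimensional feature space.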

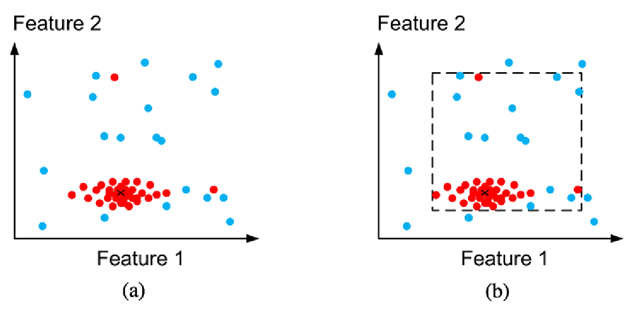

Fig. 7.8 A 2D feature space where each point is a feature vector, i.e., a BLOB. The red points are from the object we are trying to recognize while the blue are from non-object BLOBs. (a) Input. (b) The decision region in a box classifier where the maximum and minimum values are used to define the decision region

Often it is not possible to define the prototype model beforehand and we therefore need to learn it. The procedure is to run the developed system on typical input images (the more the better) and calculate the feature values for each BLOB (both large circles and any other BLOBs that might appear in the system). Each BLOB will result in a point in the feature space. In Fig. 7.8(a) an example is illustrated where the red points are from large circles and the blue points are from other BLOBs. The task we are faced with is to figure out the decision region of the prototype model so that as many correct BLOBs as possible are included in the decision region while as many incorrect BLOBs as possible are excluded. We can see that the density of the red points is not uniform, but tends to be higher at the center of the "point cloud" of red points, indicated by a cross in the figure. This is a typical phenomenon independent of which features we are working with and the center is therefore a good representation of where the prototype is located in the feature space. The center can be calculated as the mean of all the red points, see Eqs. 7.2.

We can see in the figure that the red points are spread out differently in the x- and y-direction. This is also a typical phenomenon and by analyzing how the points are spread out we can learn the size of the decision region in Fig. 7.7. The simplest way is to find the minimum and maximum values of the features and let these values define the decision region. This can, however, lead to an incorrect classification if we have outliers. An outlier is a point that is far away from the other points in the feature space, see Fig. 7.8(b). A better approach is therefore to express the spreading of the points using the variance. Like the mean, the variance is a statistical measure that expresses something about the data. Concretely, the variance measures the average squared distance of the points from the mean. So a big variance means the points are spread out and a small variance means the points are gathered closely around the mean.
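The following sketch contrasts the two ways of learning the spread for a single feature. The training values are invented, with the last one acting as an outlier, and the variance is computed as the population variance (dividing by the number of points); dividing by one less than the number of points is an equally common convention.

```python
def mean(values):
    """Center of the point cloud along one feature."""
    return sum(values) / len(values)


def variance(values):
    """Population variance: the average squared distance from the mean."""
    m = mean(values)
    return sum((v - m) ** 2 for v in values) / len(values)


# Hypothetical circularity values of training BLOBs; 1.60 is an outlier.
circularity = [0.95, 1.00, 1.05, 0.98, 1.02, 1.60]

# Min/max approach: the outlier stretches the decision region up to 1.60.
box = (min(circularity), max(circularity))
print(box)  # (0.95, 1.6)

# Statistical approach: describe the cloud by its center and spread.
print(mean(circularity), variance(circularity))
```

The same two functions are applied to each feature separately, giving one mean and one variance per axis of the feature space.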

When we have the means and variances of the different features the box classifier should be replaced by a statistical classifier since this is a more accurate approach. In the box classifier we have a binary decision: is a new feature vector (BLOB) inside or outside the rectangle? In a statistical classifier we instead measure a distance between a new feature vector (BLOB) and the prototype. The smaller the distance, the more likely it is that the BLOB is of the same type (here a large circle) as the prototype. To make this approach operational we need to threshold the distance and hence end up with a binary decision region like the dashed box in Fig. 7.7. The difference is that the region is now a more precise ellipse and not a rectangle, see Fig. 7.7. One statistical classifier is the weighted Euclidean distance (WED), in our case defined as

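$$
\mathrm{WED}(\vec{f}_i, \text{prototype}) = \sqrt{\frac{\left(f_i(\text{cir}) - \text{mean}(\text{cir})\right)^2}{\text{variance}(\text{cir})} + \frac{\left(f_i(\text{area}) - \text{mean}(\text{area})\right)^2}{\text{variance}(\text{area})}}
$$

where $\mathrm{WED}(\vec{f}_i, \text{prototype})$ is the weighted Euclidean distance between feature vector $\vec{f}_i$, i.e., the $i$th BLOB, and the prototype. $f_i(\text{cir})$ and $f_i(\text{area})$ are the circularity and area of the $i$th BLOB, respectively. The rest of the parameters in the equation are the means and variances of the two features of the prototype. In the general case with $p$ different features the weighted Euclidean distance measure is defined as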

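$$
\mathrm{WED}(\vec{f}_i, \text{prototype}) = \sqrt{\sum_{j=1}^{p} \frac{\left(f_i(m_j) - \text{mean}(m_j)\right)^2}{\text{variance}(m_j)}}
$$

where $m_j$ is the $j$th feature. If the variances of all features are the same, then we can ignore them and end up with the Euclidean distance measure (ED), where the decision region is a circle in 2D (see Fig. 7.7):

$$
\mathrm{ED}(\vec{f}_i, \text{prototype}) = \sqrt{\sum_{j=1}^{p} \left(f_i(m_j) - \text{mean}(m_j)\right)^2}
$$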
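In code, the two distance measures differ only in the division by the variance. The sketch below is one possible implementation for any number of features; the prototype values and the threshold of 2.0 are invented for illustration and would normally be learned from training data.

```python
import math


def weighted_euclidean(f, means, variances):
    """Weighted Euclidean distance between feature vector f and the prototype."""
    return math.sqrt(sum((x - m) ** 2 / v
                         for x, m, v in zip(f, means, variances)))


def euclidean(f, means):
    """Plain Euclidean distance (all variances assumed equal and ignored)."""
    return math.sqrt(sum((x - m) ** 2 for x, m in zip(f, means)))


# Hypothetical prototype for (circularity, area).
means = (1.0, 5000.0)
variances = (0.01, 250000.0)  # standard deviations of 0.1 and 500

blob = (0.9, 5500.0)          # one standard deviation off in each feature
distance = weighted_euclidean(blob, means, variances)
print(distance)               # approximately 1.414, i.e. sqrt(2)

THRESHOLD = 2.0               # assumed value, to be tuned per application
print(distance <= THRESHOLD)  # True: accepted as a large circle
```

Thresholding the distance is what turns the measure into a classifier: all feature vectors with a distance below the threshold form the elliptical decision region.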

It should be noted that the three equations above assume that the scale of the features is the same. In our example the problem is that the area is measured in thousands while circularity is a value close to 1. This means that the area will dominate the distance measure completely. The solution is to normalize the features so they are scaled similarly and lie in the same interval, e.g., [0, 1]. This can be obtained, for example, as
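One common choice, assumed here for illustration, is min-max scaling, where the smallest observed value of a feature is subtracted and the result is divided by the observed range. The sketch below applies it to a list of invented area values; `min_max_normalize` is a hypothetical helper name.

```python
def min_max_normalize(values):
    """Rescale values linearly so the smallest maps to 0 and the largest to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values identical: no spread to rescale, map everything to 0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical BLOB areas in pixels.
areas = [1000, 2500, 4000, 5000]
print(min_max_normalize(areas))  # [0.0, 0.375, 0.75, 1.0]
```

In a running system the minimum and maximum would be taken from the training data and reused for every new BLOB, so that training and test features are scaled identically.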

Further Information

The grass-fire algorithm can be modified to also operate on gray-scale and color images. The first modification is that the algorithm does not scan the entire image, but instead starts at a so-called seed point, often defined interactively by a user. The second modification is that an object pixel is a pixel within a certain gray-scale or color range. The range can, for example, be defined as the value of the seed point ± a small value. A more robust approach is to define the range based on the statistics of the pixels located in the vicinity of the seed point. The effect of this algorithm is that a region centered around the seed point is selected. One might think of the algorithm as a combination of thresholding and connected component analysis. The algorithm is known as region growing and can, for example, be applied to remove the red-eye effect in pictures.
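A minimal sketch of region growing on a gray-scale image is given below. It assumes 4-connectivity, a range defined as the seed value ± a fixed tolerance, and a queue-based traversal; these are choices made for the illustration, and the tiny image is invented.

```python
from collections import deque


def region_grow(image, seed, tolerance):
    """Collect the pixels connected to `seed` whose gray value lies within
    seed value ± tolerance. `image` is a list of rows, `seed` is (row, col)."""
    rows, cols = len(image), len(image[0])
    seed_value = image[seed[0]][seed[1]]
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        # Visit the four neighbours: up, down, left, right.
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and (nr, nc) not in region
                    and abs(image[nr][nc] - seed_value) <= tolerance):
                region.add((nr, nc))
                queue.append((nr, nc))
    return region


image = [
    [10,  12,  200, 11],
    [11,  13,  210, 12],
    [205, 198, 202, 10],
]
region = region_grow(image, seed=(0, 0), tolerance=5)
print(sorted(region))  # [(0, 0), (0, 1), (1, 0), (1, 1)]
```

Note that the dark pixels in the right-hand column have values inside the range but are not selected, because they are not connected to the seed. This is the connected component part of the algorithm at work.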

The grass-fire algorithm is not the only connected component analysis algorithm that exists. But no matter which algorithm is used, it is very often combined with the feature extraction process since both need to process each pixel in a BLOB. Combining them will speed up the system. Many other features than those described in this topic exist, especially more advanced shape features such as Hu moments. Furthermore, many new features can be defined or optimized with respect to a concrete application.

A common question when doing BLOB classification is whether a simple box classifier is sufficient. The answer depends on the application. If the feature vectors of the non-object BLOBs and the object BLOBs are far apart in the feature space, then the exact position and shape of the decision region are not critical and hence a box classifier will suffice. This is the situation in Fig. 7.7. The accuracy of the box classifier goes down as the feature vectors become more similar. This is illustrated in Fig. 7.9 where it can be seen that the weighted Euclidean distance classifier outperforms the box classifier.

Another line of argumentation is that the number of parameters that must be defined in the box classifier (the shape of the rectangle) increases as the number of features increases. In the weighted Euclidean distance classifier only one parameter (a threshold on the distance) has to be decided, independent of how many features are used.

Sometimes we will have features that are dependent. Dependency means that if we know something about one feature we can say something about another feature. If, for example, our features are area and perimeter, then it is very likely that the value of the perimeter increases as the area increases. Dependency in data can result in the point cloud having an orientation that is neither vertical nor horizontal, see Fig. 7.9(c). In these cases both the box classifier and the weighted Euclidean distance classifier will fail. Instead we must use the Mahalanobis distance classifier. It is a statistical classifier measuring the distance between an unknown feature vector and the prototype. So, like the two other statistical classifiers presented above, it only requires one parameter to be defined no matter how many features are used. In fact, the Euclidean distance classifier and the weighted Euclidean distance classifier are both special cases of the Mahalanobis distance classifier. In Fig. 7.9(c) the decision regions for all three classifiers can be seen. Many other classifiers exist and are used in fields such as computer vision, machine learning and artificial intelligence. Much has therefore been written on this matter, see for example [8].
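For two features the Mahalanobis distance can be written out by hand, which makes the role of the dependency visible. The sketch below uses the standard definition with a 2×2 covariance matrix stored as the three numbers (sxx, sxy, syy) and a population covariance (dividing by the number of points); the function names and all numeric values are assumptions for the illustration.

```python
import math


def covariance_2d(points):
    """Population covariance of 2D points, returned as (sxx, sxy, syy)."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points) / n
    syy = sum((y - my) ** 2 for _, y in points) / n
    sxy = sum((x - mx) * (y - my) for x, y in points) / n
    return sxx, sxy, syy


def mahalanobis_2d(f, mean, cov):
    """Mahalanobis distance between feature vector f and the prototype mean."""
    sxx, sxy, syy = cov
    det = sxx * syy - sxy ** 2
    if det == 0:
        raise ValueError("covariance matrix is singular")
    dx, dy = f[0] - mean[0], f[1] - mean[1]
    # d^2 = [dx dy] C^-1 [dx dy]^T, with C^-1 = (1/det) [[syy, -sxy], [-sxy, sxx]]
    d2 = (syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det
    return math.sqrt(d2)


# Two features that increase together (covariance 0.8).
cov = (1.0, 0.8, 1.0)
along = mahalanobis_2d((1, 1), (0, 0), cov)     # follows the trend
against = mahalanobis_2d((1, -1), (0, 0), cov)  # goes against the trend
print(along < against)  # True
```

Both test points are equally far from the prototype in the Euclidean sense, yet the one that follows the orientation of the point cloud is judged much closer. With a covariance of zero the expression reduces to the weighted Euclidean distance.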

Fig. 7.9 A 2D feature space where each point is a feature vector, i.e., a BLOB. The red points are from the object we are trying to recognize while the blue are from non-object BLOBs. (a) Box classifier. (b) Weighted Euclidean distance classifier. (c) Mahalanobis distance classifier (rotated ellipse). Box classifier (green rectangle). Weighted Euclidean distance classifier (yellow ellipse)

No matter what, before choosing a particular classifier always capture a lot of training data and see how they spread out in the feature space. Just by looking at a figure like Fig. 7.9 you can often get a very good impression of how to proceed with the classification, but also, and equally important, an understanding of the quality of the chosen features.

![tmp7470-15_thumb[2] tmp7470-15_thumb[2]](http://what-when-how.com/wp-content/uploads/2012/07/tmp747015_thumb2_thumb.png)

![tmp7470-16_thumb[2] tmp7470-16_thumb[2]](http://what-when-how.com/wp-content/uploads/2012/07/tmp747016_thumb2_thumb.png)

![tmp7470-17_thumb[2] tmp7470-17_thumb[2]](http://what-when-how.com/wp-content/uploads/2012/07/tmp747017_thumb2_thumb.png)

![tmp7470-18_thumb[2] tmp7470-18_thumb[2]](http://what-when-how.com/wp-content/uploads/2012/07/tmp747018_thumb2_thumb.png)