Introduction

It is very appropriate that a topic dealing primarily with display interfaces devotes at least some time to a discussion of human vision, with at least a functional description of how it works and what its limitations are. As pointed out in the previous topic, the viewer should be considered a part of the display system, and arguably the most important part. The ultimate objective of any display system, after all, is to communicate information in visual form to one or more persons; to be most effective in achieving that goal, we need to understand how these people will receive this information. In other words, the behavior and limitations of human vision determine to a very great extent the requirements placed on the remaining, artificial portions of the display system.

As we are primarily concerned with describing human vision at a practical, functional level, this topic does not go into great detail on the anatomy and physiology of the eye (or, more generically, the eye/brain system), except where relevant to that goal. Some simplification in these areas is also unavoidable. Our aim is to describe how well human vision performs, rather than to go into the specifics of how it works.

From the perspective of one trying to design systems to produce images for human viewing, the visual system has the following general characteristics:

1. It provides reasonably high acuity (the ability to distinguish detail within an image), at least in the central area of the visual field. However, we will also see that even those blessed with the sharpest vision have quite poor acuity outside this relatively small area. In brief, we do not see as well as we sometimes think we do, over as large an area as we might believe.

2. Humans in general have excellent color vision; we can distinguish very subtle differences in color, and place great importance on this ability – which is relatively rare in mammals, at least to the extent that we and the other higher primates possess it.

3. Our vision is stereoscopic; in other words, we have two eyes, positioned appropriately (facing forward, with largely overlapping fields of view), which are used by the full visual system (which must be considered as including the visual interpretation performed by the brain) to provide us with depth cues. In short, we “see in three dimensions”.

4. We have the ability to “see motion”. In simple terms, our visual system operates quickly enough to discern objects even if they are not stationary within the visual field, at least up to the rates of motion commonly encountered in everyday life. Fast-moving objects may not be seen in great detail, but they are still perceived, and quite often we can at least distinguish their general shape, color, and so forth. In fact, human vision is relatively good, especially in the periphery of the visual field, at detecting objects in motion even when they cannot be “seen” in detail. We will see, however, that this ability has a disadvantage in terms of the usability of certain display technologies: the ability to see even high-speed motion also means that rapidly varying light sources are seen as just that, sources of annoying “flicker” within the visual field.

While our eyes truly are remarkable, it is very tempting (and all too common) to believe that human visual perception is better than it really is, and that it is always the proper standard against which the performance of imaging and display systems should be judged. Our eyes are not cameras, and by many objective measures they fall short of “cameralike” performance. They are nothing less, but also nothing more, than visual sense organs evolved to meet the needs of our distant ancestors. So while we should never consider the human eye as anything less than the amazing organ that it is, we must also become very aware of its shortcomings if we are to design visual information systems properly.

The Anatomy of the Eye

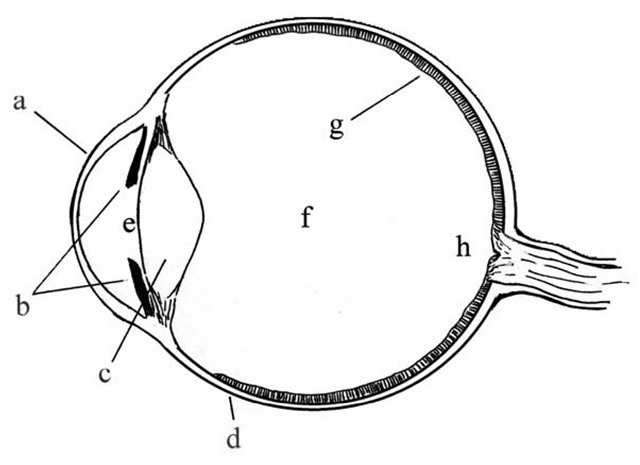

As this is not intended to be a true anatomy text, we simplify the task of studying the eye by concentrating only on those structures which directly have to do with determining the eye’s performance as an image capture device. In cross-section, the human eye resembles a simple box camera, albeit one that is roughly spherical in shape rather than the cubes of early cameras (Figure 2-1). Many of the structures of the eye have, in fact, direct analogs in simple cameras (Figure 2-2).

Following the optical path from front to back, light enters the eye through a clear protective layer, the cornea, and passes through an opening called the pupil. The size of the pupil is varied by the expansion and contraction of a surrounding ring of muscular tissue, the iris. The iris is a primary means of permitting the eye to adapt to varying light levels, controlling the amount of light that enters the eye by varying the size of the pupil. Immediately behind the pupil is the lens of the eye, a sort of clear bag of jelly connected to the rest of the eye’s structure through muscles that can alter its shape and thus change its optical characteristics. The lens focuses light onto the inner rear surface of the eyeball, an optically receptive layer called the retina. The retina is analogous to the film in a camera or, better still, to the sensing array in an electronic video camera. As we will see in greater detail, the retina is actually an array of many millions of individual light-sensing cells, each of which can be considered as taking a sample of the image delivered to this surface.
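Because the light admitted through the pupil scales with its area, and therefore with the square of its diameter, a short calculation shows how much adjustment the iris alone can provide. This is an illustrative sketch only: the 2 mm and 8 mm diameters are assumed, commonly quoted extremes of pupil size rather than figures from this text, and the helper names are hypothetical.

```python
import math

def pupil_area_mm2(diameter_mm: float) -> float:
    """Area of a circular pupil of the given diameter, in square millimetres."""
    return math.pi * (diameter_mm / 2.0) ** 2

def light_ratio(d_large_mm: float, d_small_mm: float) -> float:
    """Relative light admitted by two pupil sizes, assuming the amount of
    light is proportional to pupil area (uniform illumination of the pupil)."""
    return pupil_area_mm2(d_large_mm) / pupil_area_mm2(d_small_mm)

# Assumed typical extremes of pupil diameter, in millimetres.
print(f"8 mm vs 2 mm pupil: {light_ratio(8.0, 2.0):.0f}x more light")
```

The factor of 16 is simply the ratio of the squared diameters, (8/2)².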

Figure 2-1 The basic anatomy of the human eye. This simplified cross-sectional view of the eye shows the major structures to be discussed in this topic. (a) Cornea; this is the transparent front surface of the eyeball. (b) Iris; the colored portion of the eye, surrounding and defining the pupil as seen from the front. (c) Lens; the lens is a transparent, disc-shaped structure whose thickness is controlled by the supporting fibers and musculature, thereby altering its focal length. Along with the refraction provided by the cornea, the lens determines the focus of images on the retina. (d) The sclera, or the “white” of the eye; this is the outer covering, which gives the eyeball its shape. (e) Pupil. As noted, the size of the pupil, which is the port through which light enters the eye, is controlled by the iris. The space at the front of the eye, between the lens and the cornea, is filled with a fluid called the aqueous humor. (f) Most of the eyeball is filled with a thicker transparent jelly-like substance, the vitreous humor. (g) The retina. This is the active inner surface of the eye, containing the receptor cells which convert light to nerve impulses. (h) The point at which the optic nerve enters the eye and connects to the retina is, on the inner surface, the optic disk, which is devoid of receptor cells. This results in a blind spot, as discussed in the text.

Figure 2-2 The eye is analogous to a simple box camera, with a single lens focusing images upside-down on the film (which corresponds to the retina).

The actions of these three structures (the iris and the pupil it defines, the lens, and the retina) primarily determine the performance of the eye from our perspective, at least in the typical, normal, healthy eye. To complete our review of the eye’s basic anatomy, three additional features should be discussed. The eye is supported internally by two separate fluids which fill its internal spaces: the aqueous humor, which fills the space between the cornea and the lens, and the somewhat firmer vitreous humor, which fills the bulk of the eyeball behind the lens. Ideally, these are completely clear, and so do not factor into the performance of the eye in the context of this discussion. (And obviously, when they are not, it will be the concern of an ophthalmologist, not a display engineer!) Finally, the remaining visible portion of the eye, the white area surrounding the cornea and iris, is the sclera.

Of the three structures we are most concerned with, it is the retina that is the most complex, at least in terms of its impact on the performance of human vision in the normal eye. The retina may be the “film” in the camera, but its functioning is vastly different and far more complex than that of ordinary film. The retina incorporates four different types of light-sensing cells, which provide varying degrees of sensitivity to light across the visible spectrum, but which are not evenly distributed across the retinal surface. This is also the portion of the eye which directly connects to the brain and nervous system, via the optic nerve.

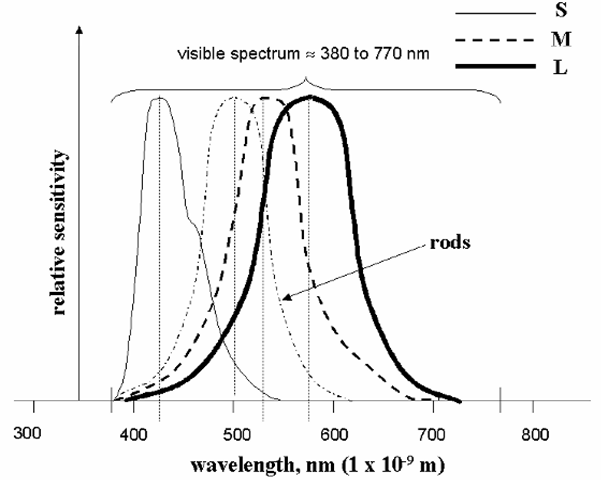

The cells of the retina fall into two main types, named for their shape. Rods are the more sensitive to light overall, but all rods share a single, broad spectral response, so by themselves they cannot distinguish one wavelength from another. In contrast, the cones are somewhat less sensitive overall, but exist in three different varieties, each of which is sensitive primarily to only a portion of the spectrum. Humans typically perceive light across roughly one octave of wavelengths, from about 770 nm (deep red) to perhaps 380-390 nm (deep blue or violet). The relative sensitivities of the rod cells and the three types of cones are shown in Figure 2-3. It should be clear from this diagram that the cones are what permit our eyes to discriminate colors, while the rods are essentially “luminance-only” sensors which handle the bulk of the task of providing vision in low-light situations. (This is why colors seem pale or even absent at night; as light levels drop below the point where the cones can function, it is only the rods which provide us with a “black and white” view of the world.) There are approximately 6-10 million cone cells across the retina, and about 120 million rods. (This imbalance has implications in terms of visual acuity, as will be seen shortly.)

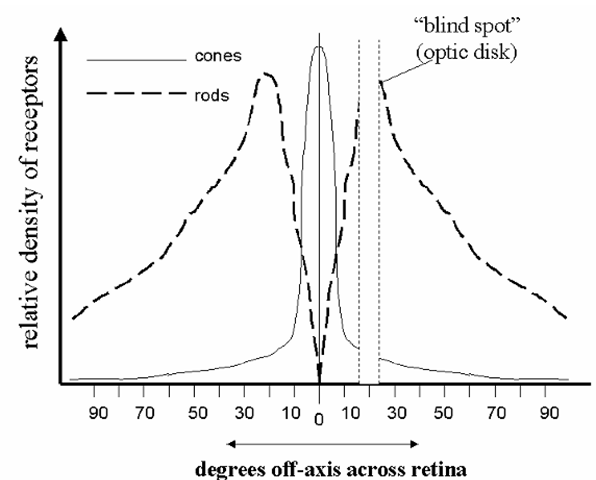

As noted above, the distribution of these cells is not even across the surface of the retina. The densest packing of cone cells occurs near the center of the visual field, in an area called the fovea, which is less than a millimeter in diameter. As we move out toward the periphery of the retina, the number of cone cells per unit area drops off markedly. The distribution of rods shows a broadly similar pattern, though less dramatically, and with the difference that there are no rods at all in the fovea itself (Figure 2-4). These distributions affect visual performance in several ways. First, visual acuity (the ability to distinguish detail within an image) drops off rapidly outside the central portion of the visual field. Simply put, we can discern fine detail only in the central portion of our field of view. This is the natural result of having fewer light-sensing cells, which may be viewed as “sample points” for this purpose, outside that area. The lower effective spatial sampling frequency in the periphery can even lead to some interesting aliasing artifacts in this portion of the field of vision. Second, the outer areas of our visual field are best at detecting motion and at seeing in low-light conditions, survival skills evolved for avoiding predators. Primitive man had little need to see a fast-moving attacker in detail; the requirement was to quickly detect something big enough, and moving quickly enough, to be a threat.

Figure 2-3 Approximate normalized sensitivities of the rods and the three types of cone cells in the human retina. Peak cone sensitivities are at approximately 420 nm for the “short-wavelength” (S) cells, 535 nm for the “medium” (M), and 565 nm for the “long” (L), and the peak for the rods is very close to 500 nm. The S, M, and L cones are sometimes thought of as the “blue,” “green,” and “red” receptors, respectively, although clearly their responses are not strictly limited to those colors.

Figure 2-4 Typical distributions of the rod and cone cells across the retina. Note that there are essentially no rods in the very center of the visual field (the fovea), and no receptors at all at the point where the optic nerve enters the eye (the “blind spot”).

The absence of rods in the very center of the visual field also means that we do not see low-luminance objects well if we look directly at them. This is the underlying factor behind an old stargazer’s trick: when attempting to view dim stars in the night sky, you are advised to look slightly away from the target area. If you stare directly at such an object, you’re placing its image on a portion of the retina which is not good at seeing in low light (there are no rods in the fovea); moving the direction of your gaze slightly away puts the star’s image onto a relatively more “rod-rich” area, and it suddenly pops into view!

Even the central region of the retina is not without anomalies. Some distance from the center of the retina (roughly 15 degrees from the center of the visual field) is the point at which the optic nerve enters the eye. Surprisingly, there are no light-sensitive cells of any type at this location, resulting in a blind spot in this part of the field. The existence of the blind spot is easily demonstrated. Using the simple diagram below, close your left eye and focus the gaze of your right eye on the left-hand spot (or close the right eye and focus the left eye on the right-hand spot), and then move the page in and out from your face. At a distance of perhaps three or four inches, the “other” spot will vanish, having entered the portion of the field of view occupied by the blind spot.
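The distance at which the second spot vanishes follows from simple trigonometry: the spot disappears when the angle between it and the fixated spot matches the angular offset of the blind spot. The sketch below assumes that offset is about 15 degrees (a commonly quoted approximate value that varies between individuals) and treats the page as flat and perpendicular to the line of sight; the function name is hypothetical.

```python
import math

# Assumption: the blind spot lies about 15 degrees from the point of fixation.
BLIND_SPOT_ANGLE_DEG = 15.0

def vanishing_distance(separation: float,
                       angle_deg: float = BLIND_SPOT_ANGLE_DEG) -> float:
    """Viewing distance at which a spot `separation` away from the fixated
    spot falls on the blind spot. Returned in the same unit as `separation`."""
    return separation / math.tan(math.radians(angle_deg))

for sep_in in (1.0, 2.0, 3.0):
    d = vanishing_distance(sep_in)
    print(f"spots {sep_in:.0f} in apart -> vanishes at about {d:.1f} in")
```

For spots about an inch apart this gives roughly 3.7 inches, which would be consistent with the three-to-four-inch figure above if the diagram's spots were that far apart (an assumption; its dimensions are not stated here). Wider spacing scales the distance proportionally.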

We are not normally aware of this apparently severe flaw in our visual system for the same reason we do not notice its other shortcomings; simply put, we have learned – or rather, evolved – to deal with them. In this case, the brain seems to “fill in” the area of the blind spot with what it expects to be there, based on the surrounding portion of the visual field. In the example above, when the spot “vanishes”, we see what appears to be just more of the empty white expanse of the page, even though this is clearly not what is “really” there. Other examples of the compensations built into the eye/brain system will be discussed shortly.

One form of deficiency in the retina is common enough to warrant some attention here. As noted above, the three types of cone cells are the means through which the eye/brain system sees in color, and the fact that their sensitivity curves peak in different portions of the visible spectrum is what permits the discrimination of different colors. Speaking in approximate terms, there are cones primarily sensitive to red light, others sensitive to green light, and a third type for detecting blue light. The brain integrates the information given by these three types of cells to determine the color of a given object or light source. However, many people either lack one or more types, have fewer than the normal number, or lack the usual sensitivity in a given type (through the partial or complete lack of the visual pigments responsible for the color discrimination capability of these cells). All of these result in some form of “color blindness”, more properly known as color vision deficiency. Contrary to the popular name, this condition only rarely means a complete inability to perceive color; “color blindness” more often refers to the inability to distinguish certain shades. The particular colors likely to be confused depend on the type of cell in which the person is deficient. By far the most common form of color vision deficiency is marked by an inability to distinguish certain shades of red and green. (The genes for the red- and green-sensitive pigments lie on the X chromosome, so this condition is far more common in males than in females; for it to occur in a female, the deficient gene must be present on both X chromosomes.) Approximately 8% of the male population is to some degree “red/green color blind”. In the milder and more common cases the affected cone type is present but has altered sensitivity; in the more severe cases the person is a dichromat, having effectively only two types of color-distinguishing cells. 
(Red/green confusion is only the most common form of dichromatism; similar conditions exist which are characterized by confusion or lack of perception of other colors in the spectrum, resulting from deficiencies in different cone types.) In contrast, only about 0.003% of the total population are completely without the ability to perceive color at all – a condition called achromatopsia. Such people perceive the world in terms of luminance only; they see in shades of gray, although often with greater sensitivity to luminance variations than those with “normal” vision.
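The X-linkage explains the large gap between male and female prevalence. As a rough sketch, assume a single deficient gene with frequency q in the population, random inheritance (the Hardy-Weinberg assumption), and all red/green variants lumped into one trait; the red and green pigment genes are actually distinct, so this is a simplification, and the function name is hypothetical. A male has one X chromosome, so his rate estimates q directly; a female needs two copies, with probability q².

```python
def expected_female_rate(male_rate: float) -> float:
    """Simplified X-linked recessive model.

    A male shows the trait if his single X chromosome carries the deficient
    gene, so the male rate is taken as the gene frequency q. A female must
    carry it on both X chromosomes; assuming independent inheritance, that
    happens with probability q * q."""
    if not 0.0 <= male_rate <= 1.0:
        raise ValueError("male_rate must be a fraction between 0 and 1")
    q = male_rate
    return q * q

male = 0.08  # "approximately 8%" of males, from the text
female = expected_female_rate(male)
print(f"expected female rate: {female:.2%}")  # prints "expected female rate: 0.64%"
```

Even under these crude assumptions the model predicts a female rate more than ten times lower than the male rate, which is the pattern the text describes.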

Visual Acuity

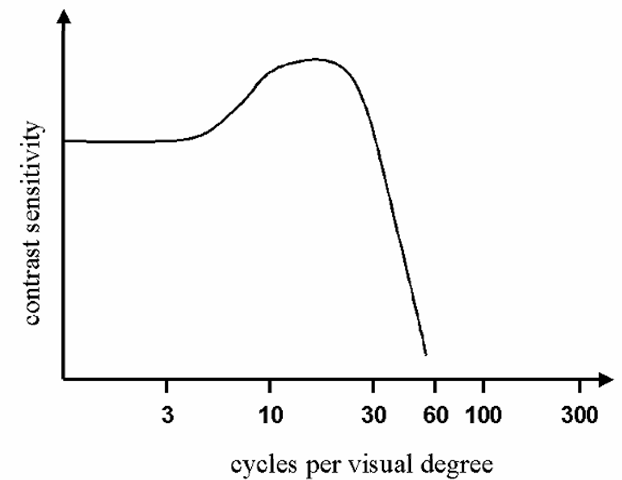

“Acuity” refers to the ability to discriminate detail; in measuring visual acuity, we are generally determining the smallest distance, expressed as an angle within the visual field, over which a change in luminance or color can be detected by a given viewer under a certain set of conditions. Acuity is to the eye what resolution is to the display hardware: the ability to discern detail, as opposed to the display’s ability to present it properly. Measuring acuity generally involves presenting the viewer with images of increasingly fine detail, and determining at which point the detail in question can no longer be distinguished. One of the more useful types of image for this purpose is produced by varying luminance sinusoidally along one dimension only; this results in a pattern of alternating dark and light lines, although without sharp transitions. Two such patterns, of different spatial frequencies, are shown in Figure 2-5. The limits to visual acuity under a given set of conditions are determined by noting the frequency, in cycles per degree of the visual field, at which the variations are no longer detected and the “lines” merge into a perception of an even luminance level across the image.
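A sinusoidal test pattern of this kind is easy to describe numerically. The sketch below models luminance as a mean level modulated by a sine wave whose frequency is given in cycles per degree, and measures its contrast using the Michelson definition, (Lmax − Lmin)/(Lmax + Lmin), a common convention assumed here rather than one stated in the text. The mean luminance of 100 cd/m² and contrast of 0.5 are arbitrary illustrative values, and the function names are hypothetical.

```python
import math

def grating_luminance(x_deg: float, freq_cpd: float,
                      mean_lum: float = 100.0, contrast: float = 0.5) -> float:
    """Luminance (cd/m^2) of a 1-D sinusoidal grating at angular position
    x_deg (degrees of visual field). freq_cpd is the spatial frequency in
    cycles per degree; contrast is the Michelson contrast (0..1)."""
    return mean_lum * (1.0 + contrast * math.sin(2.0 * math.pi * freq_cpd * x_deg))

def michelson_contrast(l_max: float, l_min: float) -> float:
    """Michelson contrast: (Lmax - Lmin) / (Lmax + Lmin)."""
    return (l_max - l_min) / (l_max + l_min)

# Sample one degree of a 5 cycle/degree grating at 0.01-degree steps.
samples = [grating_luminance(i * 0.01, 5.0) for i in range(101)]
print(round(michelson_contrast(max(samples), min(samples)), 3))  # prints 0.5
```

An acuity test of the kind described would present such gratings at increasing `freq_cpd`, or decreasing contrast, until the viewer reports a uniform field.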

Figure 2-5 Two patterns of different spatial frequencies. The pattern on the right has a frequency, along the horizontal direction, roughly twice that of the pattern on the left. (Both represent sinusoidal variations in luminance.) It is common to express both display resolution and visual acuity in terms of the number of cycles that can be resolved per unit distance or per unit of visual angle.

The acuity of the eye is ultimately limited by the number of receptors per unit area in the retina, since there can be no discrimination of spatial detail across a single receptor. For this reason, as noted above, visual acuity is always highest near the center of the visual field, within the fovea, where the receptor cells are the most densely packed. However, the actual acuity of the eye at any given moment will typically be limited to somewhat below this ultimate figure by other factors, notably the performance limits of the lens. Remembering also that a major part of the eye’s ability to adapt comes from the action of the iris, which changes the size of the pupil and so the effective diameter of the lens, we can see that the ambient light level also affects the limit of visual acuity.
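One way to see why pupil size matters is through diffraction. For an ideal, aberration-free circular aperture of diameter D in incoherent light of wavelength λ, no spatial frequency above D/λ cycles per radian can be imaged. The sketch below converts that standard optical result to cycles per degree; the 555 nm default (near the peak of daylight sensitivity) and the pupil diameters are assumed values, and the function name is hypothetical.

```python
import math

def diffraction_cutoff_cpd(pupil_diameter_mm: float,
                           wavelength_nm: float = 555.0) -> float:
    """Spatial-frequency cutoff, in cycles per degree, of an ideal circular
    aperture under incoherent light: f_c = D / lambda cycles per radian."""
    d_m = pupil_diameter_mm * 1e-3
    lam_m = wavelength_nm * 1e-9
    cycles_per_radian = d_m / lam_m
    return cycles_per_radian * math.pi / 180.0  # one degree = pi/180 radian

for d in (2.0, 3.0, 6.0):
    print(f"{d:.0f} mm pupil: {diffraction_cutoff_cpd(d):.0f} cycles/degree")
```

This yields roughly 63, 94, and 189 cycles per degree. These are upper bounds only: in a real eye, optical aberrations generally grow as the pupil widens, so a larger pupil does not deliver the higher limit this idealized model suggests.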

A graph of typical human visual acuity, under conditions typical of those experienced in the viewing of standard electronic displays, is shown as Figure 2-6. Note that there is a peak around 10-20 cycles per degree, and a rapid decline above this frequency. A commonly assumed limit on visual acuity is 60 cycles per degree, or one cycle per minute of arc (limited by the size of the cone cells in the fovea, each of which occupies about half a minute of the field). To better visualize this, one minute of arc within the visual field represents an object about the size of a golf ball seen from a distance of about 150 m. One degree of the visual field is roughly twice the apparent diameter of the sun or the full moon (each spans about half a degree), and is also roughly the portion of the field covered by the fovea. The ability to resolve details separated by one minute of visual arc is also the acuity assumed when saying you have “20/20” vision, which simply means that you see the details on a standard eye chart (a test image with high-contrast information) as well at a distance of 20 feet as a “normal” person is expected to. (In Europe, this same level of acuity is referred to as “6/6” vision, which is essentially the same thing using meters.)

Note, however, that this refers to acuity in terms of luminance variations only (basically, how well one sees “black and white” detail), and then only for items at or very near the center of the visual field.
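The figures in the preceding paragraphs can be checked with two small calculations: the angle an object subtends at a given distance, and the highest grating frequency a regular array of receptors can represent, which by the sampling theorem requires at least two samples (one light bar and one dark bar) per cycle. The golf-ball diameter of 42.7 mm is an assumed value, and the function names are hypothetical.

```python
import math

def visual_angle_arcmin(size_m: float, distance_m: float) -> float:
    """Angle, in minutes of arc, subtended by an object of the given size
    at the given distance."""
    return math.degrees(2.0 * math.atan(size_m / (2.0 * distance_m))) * 60.0

def nyquist_cpd(receptor_spacing_arcmin: float) -> float:
    """Highest grating frequency (cycles/degree) a regular sampling array
    can represent: one cycle needs at least two samples."""
    spacing_deg = receptor_spacing_arcmin / 60.0
    return 1.0 / (2.0 * spacing_deg)

golf_ball_m = 0.0427  # assumed golf-ball diameter, about 42.7 mm
print(f"golf ball at 150 m: {visual_angle_arcmin(golf_ball_m, 150.0):.2f} arcmin")
print(f"cone spacing 0.5 arcmin -> {nyquist_cpd(0.5):.0f} cycles/degree")
```

The golf ball comes out at just under one minute of arc, and a half-minute receptor spacing gives exactly the 60 cycles per degree quoted above.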

Figure 2-6 Relative human visual acuity, in terms of contrast sensitivity vs. cycles of luminance variation per visual degree, under viewing conditions typical of normal office lighting and similar environments. 60 cycles per visual degree is commonly taken as the practical limit on human visual acuity.
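For display work, the 60 cycles per degree figure is most useful when compared with what a given screen can actually present at a given viewing distance. The sketch below counts the pixels spanning one degree at the center of a flat screen viewed head-on and halves that count, since a pixel grid can show at most one light/dark cycle per two pixels. The 0.25 mm pixel pitch and 600 mm viewing distance are hypothetical example values, and, per the caveat above, the comparison applies only to luminance detail near the center of the visual field.

```python
import math

def pixels_per_degree(pixel_pitch_mm: float, viewing_distance_mm: float) -> float:
    """Pixels spanning one degree of visual angle at the centre of a flat
    display viewed head-on."""
    mm_per_degree = 2.0 * viewing_distance_mm * math.tan(math.radians(0.5))
    return mm_per_degree / pixel_pitch_mm

def max_grating_cpd(pixel_pitch_mm: float, viewing_distance_mm: float) -> float:
    """Highest grating a pixel grid can show: one cycle per two pixels."""
    return pixels_per_degree(pixel_pitch_mm, viewing_distance_mm) / 2.0

# Hypothetical example: 0.25 mm pixel pitch viewed from 600 mm.
ppd = pixels_per_degree(0.25, 600.0)
cpd = max_grating_cpd(0.25, 600.0)
print(f"{ppd:.1f} pixels/degree, {cpd:.1f} cycles/degree (vs. about 60 for the eye)")
```

Under these assumptions the display reaches only about 21 cycles per degree; matching a 60 cycles per degree limit would need about 120 pixels per degree, obtained with a finer pitch or a greater viewing distance.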

Due to the lower number of color-discriminating cone cells and the larger area covered by each (plus the fact that three cells, one of each type, are required to distinguish between all possible colors), spatial acuity in terms of color discrimination is much poorer than that for luminance-only variations. Typically, spatial acuity measured with color-only variations (i.e., comparing details which differ only in color but not in luminance) is no better than half that of the luminance-only acuity. And, as the density of both types of receptors falls off dramatically toward the periphery of the visual field, so does spatial acuity, especially rapidly in terms of color discrimination. As the receptors can be viewed as sampling the image projected onto the retina, we can also consider the possibility of sampling artifacts appearing in the visual field. This can be readily demonstrated: grid or alternating-line patterns that are easily resolved when seen directly can appear to change in spatial frequency or orientation when viewed in the periphery of the field, due to aliasing caused by the lower spatial sampling frequency in this area.
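The frequency shift described above is ordinary aliasing. When a grating finer than half the sampling rate is sampled, it appears as a lower frequency folded back into the range from zero to half the sampling rate. The one-dimensional sketch below assumes a hypothetical peripheral sampling rate of 20 samples per degree; apparent changes of orientation would need a two-dimensional model, which this sketch omits.

```python
def apparent_frequency(signal_cpd: float, sampling_cpd: float) -> float:
    """Frequency at which a 1-D grating of signal_cpd appears after being
    sampled at sampling_cpd samples per degree (folded into 0..fs/2)."""
    f = signal_cpd % sampling_cpd      # fold into one sampling period
    return min(f, sampling_cpd - f)    # reflect into the Nyquist band

# Hypothetical peripheral sampling rate: 20 samples/degree (Nyquist = 10 cpd).
for f in (5.0, 12.0, 18.0, 25.0):
    print(f"{f:4.1f} cpd grating -> appears as {apparent_frequency(f, 20.0):4.1f} cpd")
```

Gratings below 10 cycles per degree pass unchanged, while an 18 cycle/degree grating, which the fovea could resolve, would appear as a much coarser 2 cycle/degree pattern under this assumed sampling rate.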

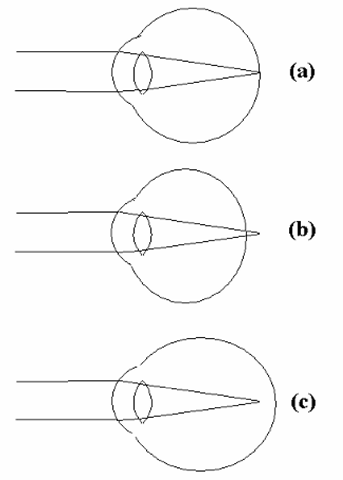

Not all people, of course, possess this level of “normal” visual acuity. Distortions in the shape of the eyeball or lens prevent the lens from properly focusing the image on the retina. Should the eyeball be somewhat elongated, increasing the distance from lens to retina, the eye will be unable to focus on distant objects (Figure 2-7c), resulting in nearsightedness, or myopia. This can also occur if the lens is unable to flatten sufficiently (to become less curved), as is needed for the longer focal length used in viewing distant objects.

Figure 2-7 Loss of acuity in the human eye. (a) In the normal eye, images of objects at various distances from the viewer are focused onto the retina by the combined action of the cornea and the lens. (b) If the eyeball is effectively shorter than normal, or if the lens is unable to focus properly, nearby objects will not be properly focused, a condition known as farsightedness or hyperopia. (c) Similarly, if the eyeball is longer than normal, or through an inability of the lens to focus on distant objects, the individual suffers from nearsightedness or myopia. (A general loss of visual acuity, independent of the distance to the object being seen, is called astigmatism, and commonly results from irregularities in the shape of the cornea.)

If the eye is shorter, front to back, than ideal (Figure 2-7b), or the lens is unable to thicken sufficiently (to become more curved, as is needed for the shorter focal length used in viewing nearby objects), it will be unable to focus properly on nearby objects, and the person is said to be farsighted (the condition of hyperopia). A general distortion of focus (usually along a particular axis) results from deformations of the cornea, in the condition called astigmatism. In all but very severe cases of each condition, “normal” vision is restored through the use of corrective external lenses (eyeglasses or contact lenses) or, more recently, by surgically altering the shape of the cornea (typically through the use of lasers). The ability of the eye to focus images properly may also be affected by physical illness, exhaustion, eyestrain (such as through lengthy concentration on detailed, close-up work), and age.
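For nearsightedness, the strength of the corrective lens can be estimated with a standard optometric relation not given in this text. Lens power is measured in diopters, the reciprocal of focal length in meters. A myopic eye sees sharply only out to its "far point," so a diverging lens of power −1/(far point) makes distant objects appear to lie at that point. The sketch below is a simplified model that ignores the gap between a spectacle lens and the eye (the vertex distance); the function name and example far points are hypothetical.

```python
def corrective_power_diopters(far_point_m: float) -> float:
    """Lens power (diopters) that images an infinitely distant object at a
    myopic eye's far point: P = -1 / far point (metres).
    Simplified: ignores the spectacle-to-eye (vertex) distance."""
    if far_point_m <= 0:
        raise ValueError("far point must be a positive distance in metres")
    return -1.0 / far_point_m

for fp in (2.0, 0.5, 0.25):
    print(f"far point {fp} m -> {corrective_power_diopters(fp):+.2f} D")
```

A person who sees sharply only out to 0.5 m would, under this model, need roughly a −2 diopter lens; the nearer the far point, the stronger the correction.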