Introduction

As the CRT may be considered to be the first electronic display technology to enjoy widespread acceptance and success (a success which continues to this day), the analog interface standards developed to support it have been the mainstay of practically all “standalone” display markets and applications – those in which the display device is a physically separate and distinct part of a given system. This topic and the next examine and describe these, and trace the history of their development from the early days of television to today’s high-resolution desktop computer monitors. But since the standards for these modern monitors owe a great deal to the work that has been done, over the past 50 years and more, in television, we begin with an in-depth look at that field.

Early Television Standards

Broadcast television is a particularly interesting example of a display interface problem, and the solutions that were designed to address this problem are equally intriguing. It was the first case of a consumer-market, electronic display interface, and one which is complicated by the fact that it is a wireless interface – it must deliver image (and sound) information in the form of a broadcast radio transmission, and still provide acceptable results within the constraints that this imposes. The story of the development of this standard is fascinating as a case study, and becomes even more so as we look at how the capabilities and features of the broadcast television system grew while still maintaining compatibility with the original standard. In addition, the histories of television and computer-display standards turn out to be intertwined to a much greater degree than might initially be expected.

The notion that moving images could be captured and transmitted electronically was the subject of much investigation and development in the 1920s and 1930s. Demonstrations of television systems were made throughout this period, initially in very crude form (involving cumbersome mechanical scanning and display systems), but becoming progressively more sophisticated, until something very much like television as it is known today was demonstrated in the late 1930s. Systems were shown both in Europe and in North America during this time; notably, a transmission of the opening ceremonies of the 1936 Olympic Games in Berlin is often cited as the first television broadcast, although it was seen only in a few specially equipped auditoriums. A demonstration of a monochrome, or “black and white” television system by RCA at the 1939 New York City World’s Fair – including the first televised Presidential address, by Franklin D. Roosevelt – is generally considered the formal debut of a practical system in the United States. Unfortunately, the outbreak of World War II brought development to a virtual standstill in much of the rest of the world. Development continued to some degree in the US, but obviously at a slower pace than might otherwise have been the case.

The goal of television was to deliver a high-resolution moving image within a transmitted bandwidth (and other constraints) that could be accommodated within a practical broadcast system. As it was clear that the display device itself would have to be a CRT, the question of the required image format was in part determined by what could be resolved by the viewer, at expected home viewing distances, and using the largest tubes that could reasonably be expected at the time. It was anticipated that most viewers would be watching television from a distance of approximately 3 m (9-10 feet). Given a picture height of at most 0.5 m, as limited by the available CRTs, it was therefore reasonable (based on the limits of visual acuity) to establish a goal of providing images of at least the low hundreds of lines per frame. And, as it was desirable that television provide “square” resolution (an equal degree of delivered resolution in both the horizontal and vertical directions), this also, when coupled with the target image aspect ratio, becomes the driving force behind the minimum required bandwidth for the television signal.
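The acuity argument above can be checked with a rough calculation. The sketch below is only an illustration: it assumes a visual acuity of about one arcminute per resolvable line, a common rule of thumb that is not stated in the text, and the function name is hypothetical.

```python
import math

def resolvable_lines(picture_height_m, viewing_distance_m, acuity_arcmin=1.0):
    """Estimate how many lines fit in the picture height before adjacent
    lines can no longer be told apart by the viewer.

    acuity_arcmin is an ASSUMED rule-of-thumb value, not a figure from the text.
    """
    # Angle subtended by the full picture height at the viewer's eye.
    angle_rad = 2 * math.atan(picture_height_m / (2 * viewing_distance_m))
    angle_arcmin = math.degrees(angle_rad) * 60
    return angle_arcmin / acuity_arcmin

# 0.5 m picture height viewed from 3 m, as in the text.
lines = resolvable_lines(0.5, 3.0)
print(f"about {lines:.0f} resolvable lines")
```

Under this assumption the estimate lands in the hundreds of lines, which is the order of magnitude the text arrives at; a less demanding acuity figure (say two arcminutes) halves it.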

The original television standards were therefore developed to provide a 4:3 aspect ratio image, with a minimum of several hundred lines per frame, and with a sufficiently high frame rate so as to both deliver acceptable motion and to avoid undesirable flicker as the images were presented on a CRT. In order to minimize the appearance of visible artifacts resulting from interference at the local power-line frequencies, the vertical deflection rate of the CRT display was set to match the power-line rate: 60 Hz in North America and 50 Hz in Europe. However, to transmit complete frames of 400-500 lines or more at this rate would require an unacceptably wide broadcast channel; therefore, all of the original television standards worldwide employed a 2:1 interlaced scanning format. Coupling this with the desire for reasonably close horizontal (line) rates in all worldwide standards (to allow for some commonality in receiver design) was a major factor in the final selection of the basic format standards still in use today. These are a 525 lines/frame system, with a 60 Hz field rate, used primarily in North America and Japan; and a 625 lines/frame, 50 Hz standard used in the rest of the world. (For convenience, these are often referred to as the “525/60” and “625/50” standards. It is important to note that these should not properly be referred to as the “NTSC” or “PAL/SECAM” standards.) Earlier experimental standards, notably a 405-line system which had been developed in the UK, and an 819-line system in France, were ultimately abandoned in favor of these.

The basic parameters of these two timing and format standards are shown in Table 8-1.

Table 8-1 Standard television format/timing standards.

| Parameter | 525/60 (original) | 525/60 (with color) | 625/50 |
|---|---|---|---|
| Vertical (field) rate (Hz) | 60.00 | 59.94+ | 50.00 |
| Scanning format | 2:1 interlaced | 2:1 interlaced | 2:1 interlaced |
| Lines per frame | 525 | 525 | 625 |
| Lines per field | 262.5 | 262.5 | 312.5 |
| Line rate (kHz) | 15.750 | 15.73426+ | 15.625 |
| Vert. timing details | | | |
| Active lines/field [a] | 242.5 | 242.5 | 287.5 |
| Blank lines/field | 20 | 20 | 25 |
| Vert. “front porch” [b] (lines) | 3 | 3 | 2.5 |
| Vert. sync pulse width [b] (lines) | 3 | 3 | 2.5 |
| Vert. “back porch” [b,c] (lines) | 3 + 11 | 3 + 11 | 2.5 + 17.5 |
| Horiz. timing details | | | |
| Horiz. line time (μs) (H) | 63.492 | 63.555 | 64.000 |
| Horiz. active time (μs) [a,d] | 52.86 | 51.95 | |
| Horiz. blanking (μs) | 10.7 [d] | 10.7 [d] | 12.05 |
| Horiz. “front porch” (μs) | 1.3 | 1.3 | 1.5 |
| Horiz. sync pulse width (μs) | 4.8 | 4.8 | 4.8 |
| Horiz. “back porch” [d] (μs) | 4.5 | 4.5 | 5. |

Horizontal front porch, sync pulse width, and back porch values are approximations; in the standard definitions, these are given as fractions of the total line time, as: FP = 0.02 H (min); SP = 0.075 H (nom); BP = remainder of specified blanking period.

a Not all of the active area is visible on the typical television receiver, as it is common practice to “overscan” the display. The number of lines lost varies with the adjustment of the image size and position, but is typically between 5 and 10% of the total.

b Due to the interlaced format used in both systems, the vertical blanking interval is somewhat more complex than this description would indicate. “Equalizing pulses,” of twice the normal rate of the horizontal synchronization pulses but roughly half the duration, replace the standard H. sync pulse during the vertical front porch, sync pulse, and the first few lines of the back porch.

c The “back porch” timings given here are separated into the time during which equalization pulses are produced (the postequalization period) and the remaining “normal” line times.

d Derived from other values specified in these standards.
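The lines-per-field, line-rate, and line-time entries of Table 8-1 follow directly from the lines-per-frame count and the field rate under 2:1 interlace. The short sketch below reproduces them for the two monochrome systems; the function name is illustrative only.

```python
def scan_timing(lines_per_frame, field_rate_hz, interlace=2):
    """Derive basic raster timing from the frame format.

    Returns (lines per field, line rate in Hz, line time H in microseconds).
    """
    lines_per_field = lines_per_frame / interlace
    # Each field scans lines_per_field lines, so the line rate is
    # simply lines-per-field times fields-per-second.
    line_rate_hz = lines_per_field * field_rate_hz
    line_time_us = 1e6 / line_rate_hz
    return lines_per_field, line_rate_hz, line_time_us

for name, lines, field_rate in [("525/60", 525, 60.0), ("625/50", 625, 50.0)]:
    per_field, rate, h = scan_timing(lines, field_rate)
    print(f"{name}: {per_field} lines/field, {rate / 1000:.3f} kHz, H = {h:.3f} us")
```

The half-line in each field (262.5 or 312.5) is what offsets the two fields vertically and produces the interlace.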

Broadcast Transmission Standards

Establishing the basic format and timing standards for the television systems was only the first step. The images captured under these standards must then be transmitted as a “radio” broadcast, and again a number of interesting choices were made as to how this would be done.

First, we need to consider the bandwidth required for a transmission of the desired resolution. The 525 lines/frame format actually delivers an image equivalent to approximately 300-350 lines of vertical resolution; this is due to the effects of interlacing, the necessary spot size of the display device, and similar factors. In the parlance of the television industry, this relationship is called the Kell factor, defined as the ratio between the actual delivered resolution, under ideal conditions, and the number of lines transmitted per frame. Television systems are generally assumed to operate at a Kell factor of about 0.7; with 485 lines of the original 525 available for “active” video (the other 40 lines constitute the vertical blanking intervals), the “525/60” system is expected to deliver a vertical resolution equal to about 340 lines. If the system is to be “square” – to deliver an equivalent resolution in the horizontal direction – it must horizontally provide 340 lines of resolution per picture height. In terminology more familiar to the computer industry, a 4:3 image with 340 lines of vertical resolution should provide the equivalent of 453 “pixels” for each horizontal line. (It is important to note that these original television standards, defining purely “analog”, continuous-scan systems, do not employ the concept of a “pixel” as it is understood in image-sampling terms. The concern here was not for discrete picture elements being transmitted by the system, but only for the visible detail which could be resolved in the final image.)

With a 15,750 Hz line rate, and approximately 20% of the line time required for horizontal blanking/retrace, the horizontal resolution requirement translates to luminance variations (cycles between white and black; using the “pixel” viewpoint, two pixels are required for each cycle) at a fundamental rate of approximately:

$$ f \approx \frac{(453/2)\ \text{cycles/line}}{0.8 \times 63.5\ \mu\text{s/line}} \approx 4.46\ \text{MHz} $$

Therefore, we can conclude that to deliver an image of the required resolution, using the formats and frame/field rates previously determined, will require a channel of about 4.5 MHz bandwidth at a minimum, in a simple analog broadcast system. (By a similar calculation, we would expect the 625/50 systems to require somewhat wider channels in order to maintain “square” resolution.)
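The chain of reasoning from Kell factor to channel bandwidth can be written out as a small calculation. This is a sketch of the arithmetic described above, not a formula taken from any standard; the function and parameter names are illustrative, and the 625/50 inputs (575 active lines, 12.05 μs blanking in a 64 μs line) are taken from Table 8-1.

```python
def required_video_bandwidth_hz(active_lines, kell_factor, aspect_ratio,
                                line_rate_hz, blanking_fraction):
    """Estimate the video bandwidth needed for 'square' resolution."""
    # Delivered vertical resolution, per the Kell factor.
    vertical_resolution = kell_factor * active_lines
    # Equal resolution per unit distance horizontally means scaling
    # by the aspect ratio to get the equivalent "pixels" per line.
    pixels_per_line = vertical_resolution * aspect_ratio
    # One full white-to-black cycle covers two "pixels".
    cycles_per_line = pixels_per_line / 2
    # Only the unblanked part of each line carries picture detail.
    active_line_time_s = (1 - blanking_fraction) / line_rate_hz
    return cycles_per_line / active_line_time_s

bw_525 = required_video_bandwidth_hz(485, 0.7, 4 / 3, 15750, 0.20)
bw_625 = required_video_bandwidth_hz(575, 0.7, 4 / 3, 15625, 12.05 / 64.0)
print(f"525/60: {bw_525 / 1e6:.2f} MHz, 625/50: {bw_625 / 1e6:.2f} MHz")
```

With the same Kell factor, the 625/50 figure comes out a little above 5 MHz, which matches the remark that those systems need somewhat wider channels.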

These numbers are very close to the actual parameters established for the broadcast television standards. In the US, and in most other countries using the 525/60 scanning standard, television channels each occupy 6 MHz of the broadcast spectrum. The video portion of the transmitted signal – that which contains the image information, as opposed to the audio – is transmitted using vestigial-sideband amplitude modulation (VSB; see Figure 8-1); this results in the smallest possible signal bandwidth, while not requiring the complexities in the receiver of a true single-sideband (SSB) transmission. The video carrier itself is located 1.25 MHz up from the bottom of each channel, with the upper sideband permitted to extend 4.2 MHz above the carrier, and the vestigial lower sideband allocated 0.75 MHz down from the carrier. The audio subcarrier is placed 4.5 MHz above the video carrier (or 5.75 MHz from the bottom edge of the channel). In all analog television broadcast standards, the audio is transmitted using frequency modulation (FM); in the US, and other countries using the 6 MHz channel standard, a maximum bandwidth of ±200 kHz, centered at the nominal audio subcarrier frequency, is available for the audio information; the US system uses a maximum carrier deviation of ±25 kHz.

Figure 8-1 The spectrum of a vestigial-sideband (VSB) monochrome television transmission. The specific values shown above are for the North American standard, and most others using a 6 MHz channel (details for other systems are given in Table 8-2). Note that with the vestigial-sideband system, the receiver is required to provide a selectivity curve with an attenuation of the lower frequencies of the video signal, to compensate for the added energy these frequencies would otherwise receive from the vestigial lower sideband. (The shape of the luminance signal spectrum is an example only, and not intended to represent any particular real-world signal.)
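The channel layout described above is easy to tabulate for any given 6 MHz channel. The following sketch simply applies the stated offsets to a channel's lower edge; the function name is hypothetical, and the 54 MHz lower edge is used purely as an illustrative value.

```python
def channel_layout_mhz(lower_edge_mhz):
    """Key frequencies (MHz) within one 6 MHz monochrome channel,
    using the offsets given in the text."""
    video_carrier = lower_edge_mhz + 1.25
    return {
        "channel_edges": (lower_edge_mhz, lower_edge_mhz + 6.0),
        "video_carrier": video_carrier,
        # Vestigial lower sideband extends 0.75 MHz below the carrier.
        "lower_sideband_limit": video_carrier - 0.75,
        # Full upper sideband extends 4.2 MHz above the carrier.
        "upper_sideband_limit": video_carrier + 4.2,
        # FM audio carrier sits 4.5 MHz above the video carrier.
        "audio_carrier": video_carrier + 4.5,
    }

layout = channel_layout_mhz(54.0)  # illustrative lower edge only
for name, value in layout.items():
    print(name, value)
```

Note that the audio carrier lands 0.25 MHz below the top of the channel, leaving room for the ±200 kHz audio allocation.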

The complete set of international standards for channel usage in the above manner was eventually coordinated by the Comité Consultatif International en Radiodiffusion (CCIR), and each standard assigned a letter designator. The 6 MHz system described above, used in the United States, Canada, Mexico, and Japan, is referred to as “CCIR-M”. As noted, the 625/50 systems generally required greater video bandwidths, and so other channelizations and channel usage standards were developed for countries using those. A more complete list of the various systems recognized by the CCIR standards is presented later in this topic, following the discussion of color in broadcast television systems.

One last item to note at this point is that all worldwide television broadcast systems, with the exception of those of France, employ negative modulation in the video portion of the transmission. This means that an increase in the luminance in the transmitted image is represented by a decrease in the amplitude of the video signal as delivered to the modulator, resulting in less depth of modulation. In other words, the “blacker” portions of the image are transmitted at a higher percentage of modulation than the “whiter” portions. This is due to the system employed for incorporating synchronization pulses into the video stream. During the blanking periods, “sync” pulses are represented as excursions below the nominal level established for “black”. Therefore, the highest modulation – and therefore the most powerful parts of the transmitted signal – occurs at the sync pulses. This means that the receiver is more likely to deliver a stable picture, even in the presence of relatively high noise levels. The specifications require that the peak white level correspond to a modulation of 12.5%; this is to ensure an adequate safety margin so that the video signal does not overmodulate its carrier. (Overmodulation in such a system results in carrier cancellation, and a problem known as “intercarrier buzz”, characterized by audible noise in the transmitted sound. This problem arose again, however, when color was added to the system, as discussed shortly.)

Closed-Circuit Video; The RS-170 and RS-343 Standards

As an “over-the-air”, broadcast transmission, the television signal has little need for (or even the possibility of) absolute standards for signal amplitude; the levels for “white,” “black,” and intermediate luminance levels could only be distinguished in terms of the depth of modulation for each. Even here, standard levels must be established in a relative sense, and there remains a need for absolute level standards for “in-studio” use – ensuring that equipment which is directly connected (closed-circuit video, as opposed to wireless RF transmission) will be compatible.

The video signal is normally viewed as “white-positive,” as shown in Figure 8-2. Either as an over-the-air transmission, or in the case of AC-coupled inputs typical of video equipment, this signal has no absolute DC reference available. Therefore, the reference for the signal must be re-established by each device using it, by noting the level of the signal during a specified time. In a video transmission, there are only two times during which the signal can be assumed to be at a stable, consistent reference level – the sync pulses and the blanking periods. Therefore, the blanking level was established as the reference for the definition of all other levels of the signal. This permits video equipment to easily establish a local reference level – a process referred to as DC restoration – by clamping the signal a specified time following each horizontal sync pulse, at a point when the signal may safely be assumed to be at the blanking level.
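DC restoration as described above amounts to measuring the signal during a known blanking interval and subtracting that measurement from the whole line. The sketch below illustrates the idea on a handful of made-up samples; the sample values, the bias, and the function name are all hypothetical, and a real clamp circuit operates on the analog signal rather than on a list of numbers.

```python
def dc_restore(line_samples, back_porch):
    """Re-reference one line of video to its own blanking level.

    back_porch is a slice selecting samples taken shortly after the
    horizontal sync pulse, where the signal is assumed to sit at blanking.
    """
    porch = line_samples[back_porch]
    blanking_level = sum(porch) / len(porch)
    return [sample - blanking_level for sample in line_samples]

# Hypothetical line: 4 sync-tip samples, 4 back-porch samples, 4 video samples.
ideal = [-0.286] * 4 + [0.0] * 4 + [0.054, 0.300, 0.714, 0.500]
bias = 0.37  # unknown DC offset left by AC coupling (made-up value)
received = [v + bias for v in ideal]

restored = dc_restore(received, slice(4, 8))
print([round(v, 3) for v in restored])
```

Averaging several back-porch samples, rather than taking a single one, makes the recovered reference less sensitive to noise.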

To define the amplitude levels, the concept of defining an arbitrary unit based on the peak-to-peak amplitude of the signal was introduced.

Figure 8-2 One line of a monochrome video signal, showing the standard levels. Where two values are given, as in 0.714V/0.700V, the first is per the appropriate North American standards (EIA RS-343 levels, 525/60 timing) while the second is the standard European (625/50) practice. The original EIA RS-170 standard used the 525/60 timing, but defined the signal amplitudes as follows: Sync tip, -0.400V; blank (reference level); black, +0.075V (typical); white, +1.000V. Note: All signal amplitudes assume a 75-Ω system impedance. Modern video equipment using the North American system uses a variation of the original 525/60 timing, but the 1.000Vp-p signal amplitude originally defined by RS-343.

The Institute of Radio Engineers, or IRE, established the standard of considering the amplitude from the blanking reference to the “white” level (the maximum positive excursion normally seen during the active video time) to be “100 IRE units,” or more commonly simply “100 IRE.” The tips of the synchronization pulses were defined to be negative from the reference level by 40 IRE, for an overall peak-to-peak amplitude of 140 IRE. The US standards for video also established the requirement that the “black” level – the lowest signal level normally seen during the active video time – would be slightly above the reference level. This difference between the blanking and black levels is referred to as the “pedestal” or setup of the signal. The nominal setup in the US standards was 7.5 IRE, but with a relatively wide tolerance of ±5 IRE.

The first common standard for wired or closed-circuit video was published in the 1940s by the Electronic Industries Association (EIA) as RS-170. In addition to the US system’s signal amplitude and timing definitions already discussed, this standard established two other significant requirements applicable to “wired” video. First, the transmission system would be assumed to be of 75 Ω impedance, with AC-coupled inputs. The signal amplitudes were also defined in absolute terms, although still relative to the blanking level. The blank-to-white excursion was set at 1.000V, for an overall peak-to-peak amplitude of the signal of 1.400V (from the sync tips to the nominal white level).

A later “closed-circuit” standard had an even greater impact, in setting the stage for the later analog signal standards used by the personal computer industry. Originally intended as a standard for closed-circuit video systems with higher resolution than broadcast television, RS-343 closely resembled RS-170, but had two significant differences. First, several new timing standards were established for the “high-res” closed-circuit video. But more importantly, RS-343 defined an overall signal amplitude of exactly 1.000V. The definitions in terms of IRE units were retained, with the blank-to-white and blank-to-sync amplitudes still considered to be 100 IRE and 40 IRE, respectively, but the reduction in peak-to-peak amplitude made for some seemingly arbitrary figures in absolute terms. The white level, for example, is now 0.714 V positive with respect to blanking, while the sync tips are 0.286V negative. The “setup” of the black level now becomes a nominal 0.054V. The timing standards of RS-343 are essentially forgotten now, but the basic signal level definitions or later derivatives continue on in practically all modern video systems.
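The “seemingly arbitrary” RS-343 voltages are just the IRE definitions rescaled so that 140 IRE spans 1.000 V. A minimal sketch of that conversion, with illustrative names, is shown below for both the RS-170 and RS-343 amplitude definitions.

```python
def ire_to_volts(ire, white_volts):
    """Convert a level in IRE units to volts relative to blanking,
    given the blank-to-white (100 IRE) excursion in volts."""
    return ire * white_volts / 100.0

# Blank-to-white excursion under each definition, in volts.
RS170_WHITE = 1.000              # 1.400 V peak-to-peak overall
RS343_WHITE = 1.000 * 100 / 140  # 1.000 V peak-to-peak spread over 140 IRE

LEVELS_IRE = {"sync tip": -40.0, "blanking": 0.0, "black (setup)": 7.5, "white": 100.0}

for name, white in [("RS-170", RS170_WHITE), ("RS-343", RS343_WHITE)]:
    volts = {level: round(ire_to_volts(ire, white), 3)
             for level, ire in LEVELS_IRE.items()}
    print(name, volts)
```

One IRE unit is therefore 10 mV under RS-170 and roughly 7.14 mV under RS-343.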

European video standards were developed along very similar lines, but with the significant difference that no setup was used (the blank and black levels are identical). In practice, this results in little if any perceivable difference. Setup was introduced to permit the CRT to be in cutoff during the blanking times, but slightly out of cutoff (and therefore in a more linear range of operation) during the active video time. But this cannot be guaranteed for the long term through the setup difference alone, as the cutoff point of the CRT will drift with time due to the aging of the cathode. So most CRT devices will generate blanking pulses locally, referenced to the timing of the sync pulses, to ensure that the beam is cut off during the retrace times. Therefore, the difference in setup between the European and original North American standards became primarily a complication when mixing different signal sources and processing equipment within a given system.

The European standards also, in doing away with the setup requirement, simplified the signal level definitions within the same 1.000Vp-p signal. The white level was defined as simply 0.700V positive with respect to blanking (which remains the reference level), with the sync tips at 0.300V negative. The difference in the sync definition is generally not a problem, but the difference in the white-level definition can lead to some difficulties if equipment designed to the two different standards is used in the same system. At this time, the +0.700/-0.300 system, without setup, has become the most common for interconnect standards (as documented in the SMPTE 253M specification), although the earlier RS-343 definitions are still often seen in many places.

One last point should be noted with regard to the monochrome video standards. As the target display device – the only display device suitable for television which was known at the time – was the CRT, the non-linearity or gamma of that display had to be accounted for. All of the television standards did this by requiring a non-linear representation of luminance. The image captured by the television camera is expected to be converted to the analog video signal using a response curve that is the inverse of the expected “gamma” curve of the CRT. The various video standards are based on an assumed display gamma of approximately 2.2, which is slightly under the actual expected CRT gamma. This results in a displayed image which is somewhat more perceptually pleasing than correcting to a strict overall linear system response. (Note: a strict “inverse gamma” response curve for cameras, etc., is not followed at the extreme low end of the luminance range. For example, below 0.018 of the normalized peak luminance at reference white, the current ANSI/SMPTE standard (170M) requires a linear response at the camera. This is to correct for deviations from the expected theoretical “gamma curve” in the display and elsewhere in the system, and to provide a somewhat better response to noise in this range.)
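The camera response described in the note can be sketched as a two-segment function: linear below 0.018 of reference white and a power law above it. The constants used here (slope 4.5, exponent 0.45, scale 1.099, offset 0.099) are the values commonly quoted for SMPTE 170M and do not appear in the text; they should be checked against the standard before being relied upon.

```python
def camera_transfer(luminance):
    """Map normalized scene luminance (0..1) to a normalized video level.

    Constants are the commonly quoted SMPTE 170M values (an assumption here).
    """
    if luminance < 0.018:
        # Linear segment near black, avoiding the infinite slope
        # a pure power law would have at zero.
        return 4.5 * luminance
    # Power-law segment; 1/0.45 is about 2.2, the assumed display gamma.
    return 1.099 * luminance ** 0.45 - 0.099

for lum in (0.0, 0.01, 0.018, 0.18, 0.5, 1.0):
    print(f"L = {lum:<5} -> V = {camera_transfer(lum):.3f}")
```

The scale and offset are chosen so that the two segments meet almost exactly at the breakpoint and so that reference white maps to a video level of 1.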

Color Television

Of all the stages in the development of today’s television system, there is none more interesting, either in terms of the technical decisions made or the political processes of the development, than the addition of color support to the original “black-and-white-only” system. Even at the time of the introduction of the first commercial systems, the possibility of full-color video was recognized, and in fact had been shown in several separate demonstration systems (dating back as far as the late 1920s). However, none of the experimental color systems had shown any real chance of being practical in a commercial broadcast system, and so the original television standards were released without any clear path for future extension for the support of color.

By the time that color was seriously being considered for broadcast use, in the late 1940s and early 1950s, the black-and-white system was already becoming well-established. It was clear that the addition of color to the standard could not be permitted to disrupt the growth of this industry, by rendering the installed base of consumer equipment suddenly obsolete. Color would have to coexist with black-and-white, and the US Federal Communications Commission (FCC) announced that any color system would have to be completely compatible with the existing standards in order to be approved. This significantly complicated the task of adding color to the system. Not only would the additional information required for the display of full-color images have to be provided within the existing 6 MHz channel width, but it would have to be transmitted in such a way so as not to interfere with the operation of existing black-and-white receivers.

Ultimately, two systems were proposed which met these requirements and became the final contenders for the US color standard. The Columbia Broadcasting System (CBS) backed a field-sequential color system which involved rotating color filters in both the camera and receiver. As the sequential red, green, and blue fields were still transmitted using the established black-and-white timing and signal-level standards, they could still be received and properly displayed by existing receivers. These images, displayed on such a receiver, would still appear in total to be a black-and-white image, in the absence of the color filter system. In fact, the filter wheels and synchronization electronics required for color display could conceivably be retrofitted to existing receivers, permitting a less-expensive upgrade path for consumers. (Television receivers were still quite expensive at this time; a typical black-and-white television chassis – with CRT but without cabinet – sold for upwards of $200.00 in 1950, at a time when a new car could be purchased for only about three times this.)

The CBS system, originally conceived by Dr. Peter Goldmark of their television engineering staff, had been demonstrated as early as 1940, but was not seen as sufficiently ready so as to have any impact on the introduction of the black-and-white standards. CBS continued development of the system, though, and it was actually approved by the FCC in October of 1950. However, throughout this period the CBS technique drew heavy opposition from the Radio Corporation of America (RCA), and its broadcast network (the National Broadcasting Company, NBC), under the leadership of RCA’s president, David Sarnoff. RCA was committed to development of black-and-white television first, to be followed by their own “all-electronic” compatible color system. RCA’s system had the advantage of not requiring the cumbersome mechanical apparatus of the field-sequential CBS method, and provided even better compatibility with the existing black-and-white standards. Under the RCA scheme, the video signal of the black-and-white transmission was kept virtually unchanged, and so could still be used by existing receivers. Additional information required for the display of color images would be added elsewhere in the signal, and would be ignored by black-and-white sets. A key factor enabling the all-electronic system was RCA’s concurrent development of the tricolor CRT or “picture tube”, essentially the phosphor-triad design of the modern color CRT. This permitted the red, green, and blue components of the color image to be displayed simultaneously, rather than requiring that they be temporally separated as in the CBS method. In 1953, the FCC, acting on the recommendation of the National Television System Committee (NTSC) – a group of industry engineers and scientists, established to advise the Commission on technical matters relating to television – reversed its earlier decision and approved what was essentially the RCA proposal. The new standard would come to be known as “NTSC color.”

NTSC Color Encoding

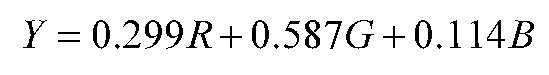

The NTSC color encoding system is based on the fact that much of the information required for the color image is already contained within the existing black-and-white, or luminance, video information. As seen in the earlier discussion of color, the luminance, or Y, signal can be derived from the primary (RGB) information; the conversion, using the precise coefficients defined for the NTSC system, is:

$$ Y = 0.299R + 0.587G + 0.114B $$

If the existing video signal is considered to be the Y information derived in this manner, all that is required for a full-color image is the addition of two more signals to permit the recovery of the original RGB information. What is not clear, however, is how this additional information could be accommodated within the available channel.

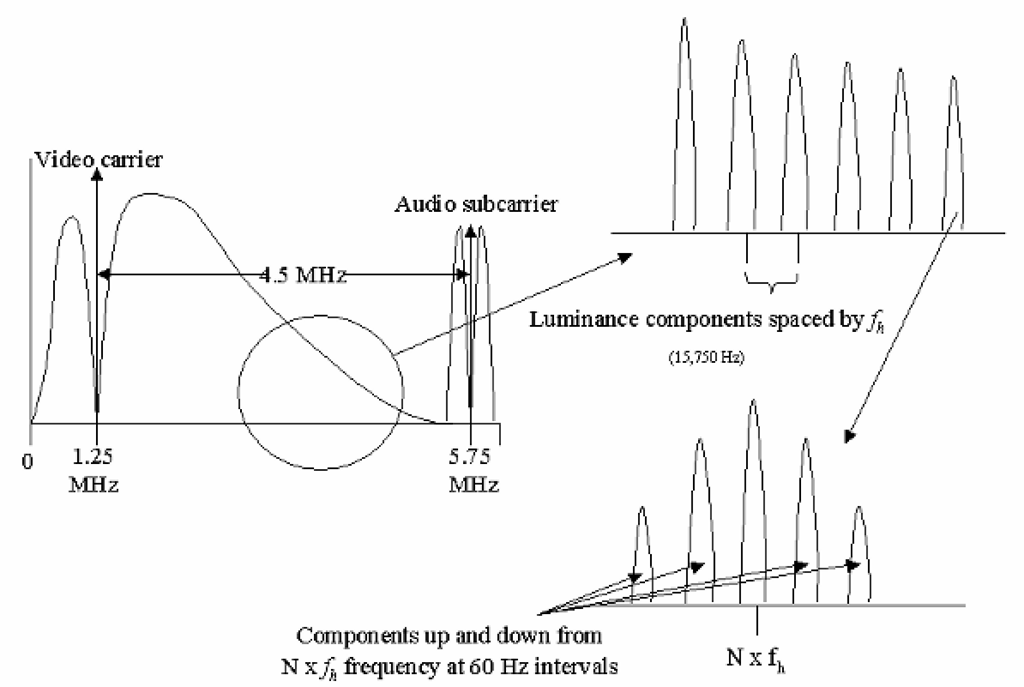

Two factors make this possible. First, it was recognized that the additional color information did not have to be provided at the same degree of spatial resolution as the original luminance signal. Therefore, the additional signals representing the supplemental color information would not require the bandwidth of the original luminance video. Second, the channel is not quite as fully occupied by the monochrome video as it might initially appear. By virtue of the raster-scan structure of the image, the spectral components of the video signal cluster around multiples of the horizontal line rate; as the high-frequency content of the image is in general relatively low, the energy of these components drops off rapidly above and below these frequencies. This results in the spectrum of the video signal having a “picket fence” appearance (Figure 8-3), with room for information to be added “between the pickets.”

In the NTSC color system, this was achieved by adding an additional carrier – the color subcarrier – at a frequency which is an odd multiple of one-half the line rate. This ensures that the additional spectral components of the color information, which similarly occur at multiples of the line rate above and below their carrier, are centered at frequencies between those of the luminance components (Figure 8-4).

Figure 8-3 Details of the spectral structure of a monochrome video signal. Owing to the raster-scan nature of the transmission, with its regular line and field structure, the spectral components appear clustered around multiples of the line rate, and then around multiples of the field rate. This “picket fence” spectral structure provides space for the color signal components which might not be obvious at first glance – “between the pickets” of the luminance information.

Figure 8-4 Through the selection of the color subcarrier frequency and modulation method, the components of the color information (“chrominance”) are placed between the “pickets” of the original monochrome transmission. However, there remained a concern regarding potential interference between the chrominance components and the audio signal, as detailed in the text.

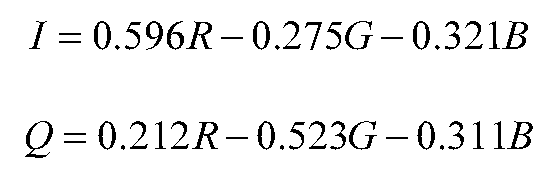

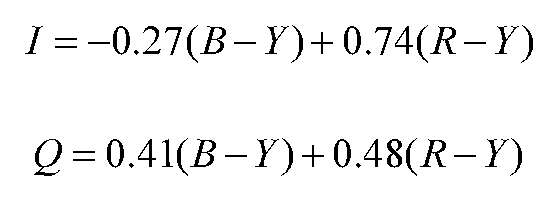

Two signals carrying the color, or chrominance, information are added to the transmission via quadrature modulation of the color subcarrier. Originally, these were named the I and Q components (for “in-phase” and “quadrature”), and were defined as follows:

$$ I = 0.596R - 0.275G - 0.321B $$

$$ Q = 0.212R - 0.523G + 0.311B $$

These may also be expressed in terms of the simpler color-difference signals B-Y and R-Y as follows:

$$ I = -0.268(B - Y) + 0.736(R - Y) $$

$$ Q = 0.413(B - Y) + 0.478(R - Y) $$

and in fact these expressions correspond to the method actually used to generate these signals from the original RGB source.

(Note: the above definitions of the I and Q signals are no longer in use, although still permissible under the North American broadcast standards; they have been replaced with appropriately scaled versions of the basic color-difference signals themselves, as noted below in the discussion of PAL encoding.)

At this point, the transformation between the original RGB information and the YIQ representation is theoretically a simple matrix operation, and completely reversible. However, the chrominance components are very bandwidth-limited in comparison to the Y signal, as would be expected from the earlier discussion. The I signal, which is sometimes considered roughly equivalent to the “orange-to-cyan” axis of information (as the signal is commonly displayed on a vectorscope), is limited to at most 1.3 MHz; the Q signal, which may be considered as encoding the “green-to-purple” information, is even more limited, at 600 kHz bandwidth. It is this bandlimiting which makes the NTSC color encoding process a lossy system. The original RGB information cannot be recovered in its original form, due to this loss.
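The claim that the RGB-to-YIQ transformation is reversible before bandlimiting can be illustrated with a short round trip. The sketch below forms Y, then builds I and Q from the color-difference signals and inverts the process. The luminance weights and the I/Q coefficients are the commonly quoted NTSC values rounded to three places, treated here as an assumption rather than as the text's exact figures; the function names are illustrative.

```python
# Commonly quoted NTSC coefficients, rounded (an assumption in this sketch).
KR, KG, KB = 0.299, 0.587, 0.114
I_BY, I_RY = -0.268, 0.736   # I as a mix of (B-Y) and (R-Y)
Q_BY, Q_RY = 0.413, 0.478    # Q as a mix of (B-Y) and (R-Y)

def rgb_to_yiq(r, g, b):
    y = KR * r + KG * g + KB * b
    by, ry = b - y, r - y
    return y, I_BY * by + I_RY * ry, Q_BY * by + Q_RY * ry

def yiq_to_rgb(y, i, q):
    # Invert the 2x2 mix to recover the color-difference signals.
    det = I_BY * Q_RY - I_RY * Q_BY
    by = (Q_RY * i - I_RY * q) / det
    ry = (-Q_BY * i + I_BY * q) / det
    r, b = y + ry, y + by
    # Green follows from the definition of Y.
    g = (y - KR * r - KB * b) / KG
    return r, g, b

original = (0.2, 0.6, 0.9)
recovered = yiq_to_rgb(*rgb_to_yiq(*original))
print(recovered)
```

The round trip is exact to floating-point precision only because no filtering is applied; once I and Q are bandlimited as described above, the fine color detail is gone and the inverse can no longer recover the original RGB values.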

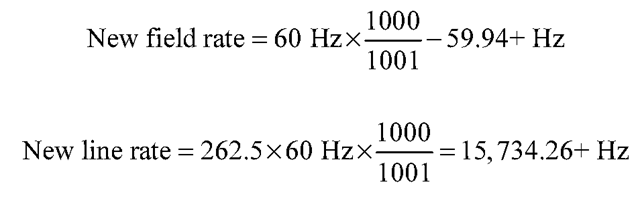

One other significant modification was made to the original television standard in order to add this color information. As noted earlier, placing the color subcarrier at an odd multiple of half the line rate put the spectral components of the chrominance signals between those of the existing luminance signal. However, the new color information would come very close to the components of the audio information at the upper end of the channel, and a similar relationship between the color and audio signals was required in order to ensure that there would be no mutual interference between them. It was determined that changing the location of either the chroma subcarrier or the audio subcarrier by a factor of 1000/1001 would be sufficient (either increasing the audio subcarrier frequency by this amount, or decreasing the chroma subcarrier to the same degree). Concerns over the impact of a change of the audio frequency on existing receivers led to the decision to move the chroma subcarrier. But to maintain the desired relationship between this carrier and the line rate, all of the video timing had to change by this amount. Thus, in the final color specification, the field rate and line rate both change as follows:

With these changes, the color subcarrier was placed at 455/2 times the new line rate, or approximately 3.579545 MHz above the video carrier. These changes were expected to be small enough that existing receivers would still properly synchronize to the new color transmissions as well as to the original black-and-white standard.
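The arithmetic behind these figures can be checked with a short Python sketch using exact fractions. It assumes the monochrome rates of 15,750 Hz (line) and 60 Hz (field) and a sound carrier 4.5 MHz above the video carrier; these are the usual values for the North American system but are not restated in this section, and the variable names are illustrative only.

```python
from fractions import Fraction

SCALE = Fraction(1000, 1001)        # adjustment factor given in the text

# Assumed original monochrome timing (525 lines x 30 frames/s, 2 fields/frame).
MONO_LINE_RATE = Fraction(15750)    # Hz
MONO_FIELD_RATE = Fraction(60)      # Hz

# Assumed sound carrier offset above the video carrier, left unchanged.
AUDIO_OFFSET = Fraction(4_500_000)  # Hz

color_line_rate = MONO_LINE_RATE * SCALE
color_field_rate = MONO_FIELD_RATE * SCALE
chroma_subcarrier = Fraction(455, 2) * color_line_rate

# Express the audio offset, and the chroma-to-audio spacing, in line rates.
audio_in_line_rates = AUDIO_OFFSET / color_line_rate
gap_in_line_rates = (AUDIO_OFFSET - chroma_subcarrier) / color_line_rate

if __name__ == "__main__":
    print(f"line rate   = {float(color_line_rate):.3f} Hz")
    print(f"field rate  = {float(color_field_rate):.4f} Hz")
    print(f"subcarrier  = {float(chroma_subcarrier):.1f} Hz")
    print(f"audio offset = {audio_in_line_rates} x line rate")
    print(f"chroma-audio gap = {gap_in_line_rates} x line rate")
```

Under these assumptions the line rate comes out near 15,734.27 Hz and the field rate near 59.94 Hz. The sound carrier then sits at an integer multiple of the new line rate, and the spacing between chroma subcarrier and sound carrier is an odd multiple of half the line rate, which is the interleaving relationship the text describes.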

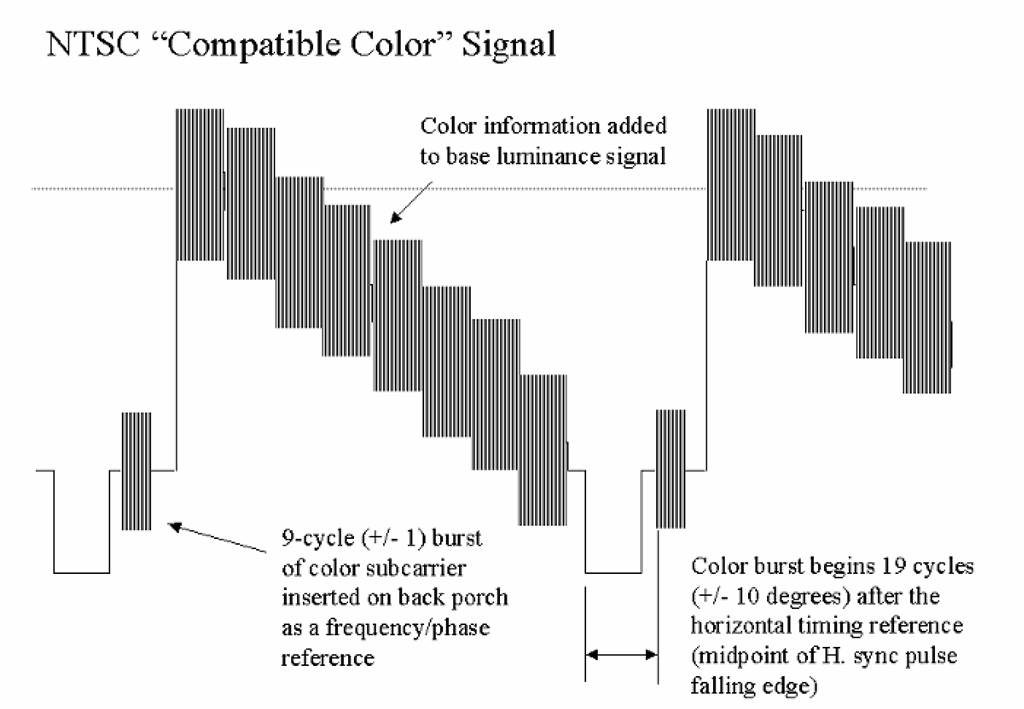

With the quadrature modulation technique used to transmit the I and Q signals, the chroma subcarrier itself is not a part of the final signal. In order to permit the receiver to properly decode the color transmission, then, a short burst (8-10 cycles) of the color subcarrier is inserted into the signal, immediately following the horizontal sync pulse (i.e., in the “back porch” portion of the horizontal blanking time) on each line (Figure 8-5). This chroma burst is detected by the receiver and used to synchronize a phase-locked loop, which then provides a local frequency reference used to demodulate the chroma information.
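Why the receiver needs a phase reference can be seen in a simplified Python sketch of suppressed-carrier quadrature modulation and synchronous demodulation. This is an idealized illustration, not a model of a real decoder: I and Q are held constant, averaging over whole subcarrier cycles stands in for the low-pass filters, and the function names and sampling density are arbitrary choices made for the example.

```python
import math

SAMPLES_PER_CYCLE = 64  # arbitrary sampling density for this sketch

def modulate(i_val, q_val, n_cycles=8):
    """Suppressed-carrier quadrature modulation of constant I and Q levels."""
    out = []
    for k in range(n_cycles * SAMPLES_PER_CYCLE):
        theta = 2 * math.pi * k / SAMPLES_PER_CYCLE
        out.append(i_val * math.cos(theta) + q_val * math.sin(theta))
    return out

def demodulate(chroma, ref_phase_error_deg=0.0):
    """Multiply by a local reference and average over whole cycles.

    ref_phase_error_deg models a local oscillator that is not locked
    to the transmitted subcarrier phase.
    """
    err = math.radians(ref_phase_error_deg)
    i_sum = 0.0
    q_sum = 0.0
    for k, sample in enumerate(chroma):
        theta = 2 * math.pi * k / SAMPLES_PER_CYCLE + err
        i_sum += sample * math.cos(theta)
        q_sum += sample * math.sin(theta)
    n = len(chroma)
    return 2 * i_sum / n, 2 * q_sum / n

if __name__ == "__main__":
    signal = modulate(0.3, -0.2)
    print("locked reference:", demodulate(signal))
    print("90 degree error: ", demodulate(signal, ref_phase_error_deg=90.0))
```

With a correctly phased reference the original I and Q values are recovered. A phase error rotates the recovered pair, so that energy from one component appears in the other, which corresponds to a hue shift on screen. When both I and Q are zero the modulated output is zero as well, which is why no subcarrier is present in the signal for the receiver to lock to unless a burst is transmitted.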

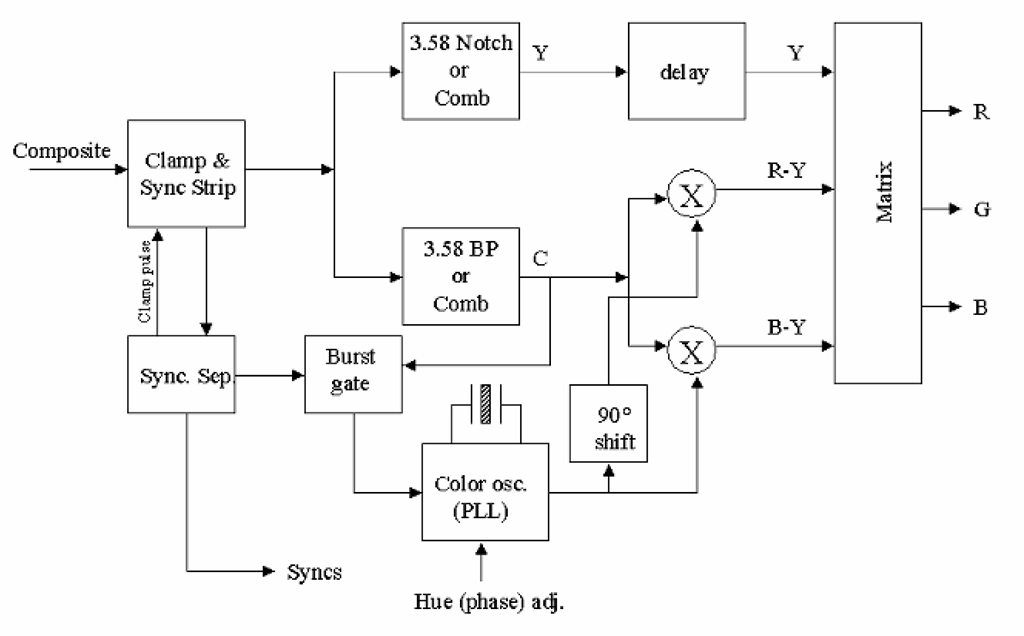

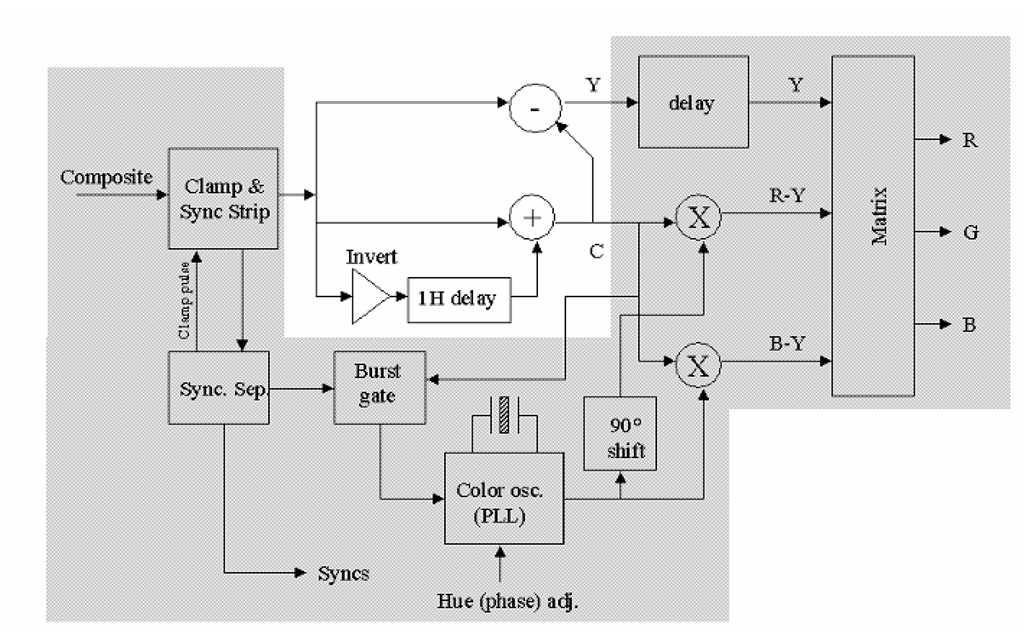

A complete NTSC encoding system is shown in block-diagram form as Figure 8-6.

With the chrominance and luminance (or, as they are commonly referred to, “Y” and “C”) components of the signal interleaved as described above, there is still a potential for mutual interference between the two. The accuracy of the decoding in the receiver depends to a great extent on how well these signals can be separated by the receiver.

Figure 8-5 The completed color video signal of the NTSC standard, showing the “color burst” reference signal added during the blanking period.

Figure 8-6 Block diagram of NTSC color video encoding system.

Figure 8-7 The interleaved luminance and chrominance components, showing the filter response required to properly separate them – i.e., a “comb filter”.

This is commonly achieved through the use of a comb filter, so called due to its response curve; it alternately passes and stops frequencies centered about the same points as the chrominance and luminance components, as shown in Figure 8-7. An example of one possible color decoder implementation for NTSC is shown in Figure 8-8, with a simple comb filter implementation shown in Figure 8-9. This takes advantage of the relative phase reversal of the chroma subcarrier on successive active line times (due to the relationship between the subcarrier frequency and the line rate) to eliminate the chroma components from the luminance channel, and vice-versa. Failure to properly separate the Y and C components leads to a number of visible artifacts which in practice are quite common in this system. A prime example is chroma crawl, which refers to the appearance of moving blotches of color in areas of the image which contain high spatial frequencies along the horizontal direction. This results from these high-frequency luminance components being interpreted by the color decoding as chroma information. This artifact is often seen, for instance, in newscasts when the on-camera reporter is wearing a finely striped or checked jacket.
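The line-to-line phase reversal that a simple comb filter relies on can be sketched in Python as follows. This is an idealized one-line-delay comb applied to two identical, flat-colored lines; the choice of 910 samples per line (four per subcarrier cycle) and the function names are assumptions made for the illustration.

```python
import math

CYCLES_PER_LINE = 227.5   # 455/2 subcarrier cycles per line, as in the text
SAMPLES_PER_LINE = 910    # assumed: 4 samples per subcarrier cycle

def composite_line(line_index, luma, chroma_amp):
    """One line of constant luminance plus a constant-amplitude chroma subcarrier."""
    out = []
    for k in range(SAMPLES_PER_LINE):
        phase = 2 * math.pi * CYCLES_PER_LINE * (line_index + k / SAMPLES_PER_LINE)
        out.append(luma + chroma_amp * math.cos(phase))
    return out

def comb_separate(prev_line, cur_line):
    """One-line-delay comb: the sum cancels chroma, the difference cancels luma."""
    y = [(a + b) / 2 for a, b in zip(prev_line, cur_line)]
    c = [(b - a) / 2 for a, b in zip(prev_line, cur_line)]
    return y, c

if __name__ == "__main__":
    line0 = composite_line(0, luma=0.5, chroma_amp=0.2)
    line1 = composite_line(1, luma=0.5, chroma_amp=0.2)
    y, c = comb_separate(line0, line1)
    print("max luma deviation from 0.5:", max(abs(v - 0.5) for v in y))
    print("peak separated chroma:", max(abs(v) for v in c))
```

Because each line contains a half-integer number of subcarrier cycles, the chroma on adjacent lines is in antiphase and cancels in the sum. The separation is exact only when the two lines carry the same picture content; where luminance or color changes from one line to the next, the cancellation is incomplete, which is one source of the artifacts described above.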

Figure 8-8 Block diagram of a color television decoder for the NTSC color system.

Figure 8-9 The NTSC decoder with a simple comb filter (outside the shaded area). This relies on the relative phase reversal of the chroma subcarrier on alternate transmitted lines to eliminate the chrominance information from the Y signal. This results in a loss of vertical resolution in the chrominance signal, but this can readily be tolerated as noted in the text.

An additional potential problem results from the color components being added to the original luminance-only signal definition. As shown in Figure 8-5, the addition of these signals results in an increase in the peak amplitude of the signal, possibly beyond the 100 IRE positive limit (the dotted line in the figure). This would occur with colors which are both highly saturated and at a high luminance, as with a “pure” yellow or cyan. The scaling of the color-difference signals, as reflected in the above definitions, was set to minimize the possibility of overmodulation of the video carrier, but it is still possible within these definitions for this to occur. For example, a fully saturated yellow (both the R and G signals at 100%) would result in a peak amplitude of almost 131 IRE above the blanking level, or a peak-to-peak signal of almost 171 IRE. This would severely overmodulate the video carrier; with the definition of 100 IRE white as 12.5% modulation discussed earlier, the 0% modulation point and thus the absolute limit on “above-white” excursions is 120 IRE, or 160 IRE for the peak-to-peak signal. Careful monitoring of the signals and adjustments to the video gain levels are required in television production to ensure that overmodulation does not occur. This was considered acceptable at the time of the original NTSC specification, as highly saturated yellows and cyans are rare in “natural” scenes as would be captured by a television camera. However, the modern practice of using various forms of electronically generated imagery, such as computer graphics or electronic titling systems, can cause problems due to the saturated colors these often produce.
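The yellow example can be reproduced approximately with the following Python sketch. It rests on several assumptions that are not spelled out in this section: the standard luminance weights, color-difference scale factors of 0.493 for B-Y and 0.877 for R-Y, a 7.5 IRE black "setup" level, and a sync tip at -40 IRE corresponding to 100% modulation. The function names are illustrative.

```python
import math

SETUP_IRE = 7.5        # assumed black setup level
SYNC_TIP_IRE = -40.0   # assumed sync tip level, taken as 100 % modulation

def peak_ire(r, g, b):
    """Peak composite level (IRE) for a flat field of the given RGB color."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = 0.493 * (b - y)            # scaled B-Y (assumed scale factor)
    v = 0.877 * (r - y)            # scaled R-Y (assumed scale factor)
    chroma_amp = math.hypot(u, v)  # subcarrier amplitude from quadrature pair
    return SETUP_IRE + (100.0 - SETUP_IRE) * (y + chroma_amp)

def modulation_percent(ire):
    """Linear map: sync tip -> 100 %, 100 IRE white -> 12.5 %."""
    return 100.0 - (ire - SYNC_TIP_IRE) * (87.5 / 140.0)

if __name__ == "__main__":
    for name, rgb in [("white", (1, 1, 1)), ("yellow", (1, 1, 0)), ("cyan", (0, 1, 1))]:
        peak = peak_ire(*rgb)
        print(f"{name:6s} peak = {peak:6.1f} IRE, "
              f"p-p = {peak - SYNC_TIP_IRE:6.1f} IRE, "
              f"modulation = {modulation_percent(peak):6.1f} %")
```

Under these assumptions saturated yellow peaks just under 131 IRE, consistent with the figure quoted above, and the computed modulation goes negative, meaning the carrier would have to drop below zero amplitude. The same map places 0% modulation at 120 IRE, matching the limit stated in the text.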