Introduction

It seems that in any discussion of electrical interfaces, the question of “analog vs. digital” types always comes up, with considerable discussion following as to which is better for a given application. Unfortunately, much of the ensuing discussion is often based on some serious misunderstandings about exactly what these terms mean and what advantages and disadvantages each can provide. This has, at least in part, come from the tendency we have of trying to divide the entire world of electronics into “analog” and “digital”. But while these two do have significant differences, we will also find that there are more similarities than are often admitted, and some of the supposed advantages and disadvantages to each approach turn out to have very little to do with whether the system in question is “analog” or “digital” in nature.

In truth, it must be acknowledged that the terms “analog” and “digital” do not refer to any significant physical differences; both are implemented within the limitations of the same electrical laws, and in many cases one cannot distinguish with certainty the physical media or circuitry intended for each. Nor can one distinguish, with certainty, “analog” from “digital” signals simply by observing the waveform. Consider Figure 6-1; it is not possible to say for certain whether this signal is carrying binary, digital information or is simply an analog signal which happens at the time to be varying between two values. Is there really any such thing as an “analog signal” or a “digital signal”, or should we look more carefully into what these terms really mean?

There has been an unfortunate tendency to confuse the word “analog” with such other terms as “continuous” or “linear,” when in fact neither of these is necessary for a system to be considered “analog”. Similarly, “digital” is often taken to mean “discrete” or “sampled” when in fact there are also sampled and/or discrete analog systems. Much of what we know – or at least think we know – about the advantages and disadvantages of the two systems is actually based on such misunderstandings, as we will soon see.

Figure 6-1 An electrical signal carrying information. Many would be tempted to assume that this is a “digital” signal, but such an assumption is unwarranted; it is possible this could be an analog signal that happens to be varying between two levels, or perhaps it only has two permissible levels. The terms “analog” and “digital” properly refer only to two different means of encoding information onto a signal – they say nothing about the signal itself, only about how it is to be interpreted.

Fundamentally, at least as we consider these terms from an interfacing perspective, we must recognize that the terms “analog” and “digital” actually refer to two broad classes of methods of encoding information as an electrical signal. The words themselves are the best clues to their true meanings; in an analog system, information about a given parameter – sound, say, or luminance – is encoded by causing analogous variations in a different parameter, such as voltage. This is really all there is to analog encoding. Calling something “analog” does not, by itself, identify that encoding as linear or even continuous. In a similar manner, the word “digital” really indicates a system in which information is encoded in the form of “digits” – that is to say, the information is given directly in numeric form. We are most familiar with “digital” systems in which the information is carried in the form of binary values, as this is the simplest means of expressing numbers electronically. But this is certainly not the only means of “digital” encoding possible.
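The distinction drawn above can be made concrete with a minimal sketch. The function names, the 0.7 V full-scale value, and the `gamma` parameter are illustrative assumptions rather than anything defined in this text; the point is only that the "analog" encoder maps a quantity onto an analogous voltage (linearly or not), while the "digital" encoder expresses the same quantity as a number.

```python
def encode_analog(luminance, full_scale_volts=0.7, gamma=1.0):
    """Map a luminance in [0, 1] onto an analogous voltage.

    gamma=1.0 gives a linear mapping; any other value gives a nonlinear
    one, which is still "analog" encoding in the sense used here.
    """
    if not 0.0 <= luminance <= 1.0:
        raise ValueError("luminance must be within [0, 1]")
    return full_scale_volts * luminance ** gamma


def encode_digital(luminance, bits=8):
    """Express the same luminance as a number, written out as binary digits."""
    if not 0.0 <= luminance <= 1.0:
        raise ValueError("luminance must be within [0, 1]")
    code = round(luminance * (2 ** bits - 1))
    return format(code, f"0{bits}b")


print(encode_analog(0.25))             # linear analog encoding
print(encode_analog(0.25, gamma=0.5))  # nonlinear, but still analog
print(encode_digital(0.25))            # the value given as binary digits
```

Binary digits are used here only because they are the most familiar case; nothing in the idea of "digital" encoding requires them.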

Even with this distinction clear, there is still the question of which of these systems – if either of them – is necessarily the “best” for a given display interfacing requirement. There remain many misconceptions regarding the supposed advantages and disadvantages of each. So with these basic definitions in mind, let us now consider these two systems from the perspective of the suitability of each to the problem of display interfacing.

“Bandwidth” vs. Channel Capacity

One advantage commonly claimed for digital interface systems is in the area of “bandwidth”: that they can convey more information, accurately, than competing analog systems. This must be recognized, however, as rather sloppy usage of the term bandwidth, which properly refers only to the frequency range over which a given channel is able to carry information without significant loss. (“Significant” is commonly defined in terms of decibels of loss, with the most common points defining channel bandwidth being those limits at which a loss of 3 dB relative to the mid-range value is seen – the “half-power” points.) With the bandwidth of a given channel established, the theoretical limit on that channel’s information capacity is given by Shannon’s theorem for the capacity of a noisy channel, as noted in the previous topic:

$$C = BW \cdot \log_2\left(1 + \frac{S}{N}\right)$$

where C is the channel capacity, BW is the 3 dB bandwidth of the channel in hertz, and S/N is the ratio of signal to noise power in this channel. Note that while this value is expressed in units of bits per second, there is no assumption as to whether analog or digital encoding is employed. In fact, this is the theoretical limit on the amount of information that can be carried by the channel in question, one that may only be approached by the proper encoding method. Simple binary encoding, as is most often assumed in the case of a “digital” interface, is actually not a particularly efficient means of encoding information, and in general will not, from this perspective, make the best use of a given channel. So the supposed inherent advantage of digital interfaces in this area is, in reality, non-existent, and very often an analog interface will make better use of a given channel. We must look further at how the two systems behave in the presence of noise for a complete understanding.
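As a rough numerical illustration of Shannon's limit, the sketch below evaluates the capacity of a channel from its bandwidth and signal-to-noise power ratio. The function names and the example figures (a 10 MHz channel with a 30 dB signal-to-noise ratio) are assumptions chosen for illustration only.

```python
import math


def snr_from_db(snr_db):
    """Convert a signal-to-noise ratio in decibels to a power ratio."""
    return 10.0 ** (snr_db / 10.0)


def shannon_capacity(bandwidth_hz, snr_power_ratio):
    """Theoretical channel capacity in bits per second."""
    if bandwidth_hz <= 0 or snr_power_ratio < 0:
        raise ValueError("bandwidth must be positive and S/N non-negative")
    return bandwidth_hz * math.log2(1.0 + snr_power_ratio)


# Hypothetical channel: 10 MHz of bandwidth at a 30 dB signal-to-noise ratio.
capacity = shannon_capacity(10e6, snr_from_db(30.0))
print(f"{capacity / 1e6:.1f} Mbit/s")  # about 99.7 Mbit/s
```

Note that nothing in the calculation refers to the encoding method; the result is a ceiling that applies to "analog" and "digital" encodings alike.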

Digital and Analog Interfaces with Noisy Channels

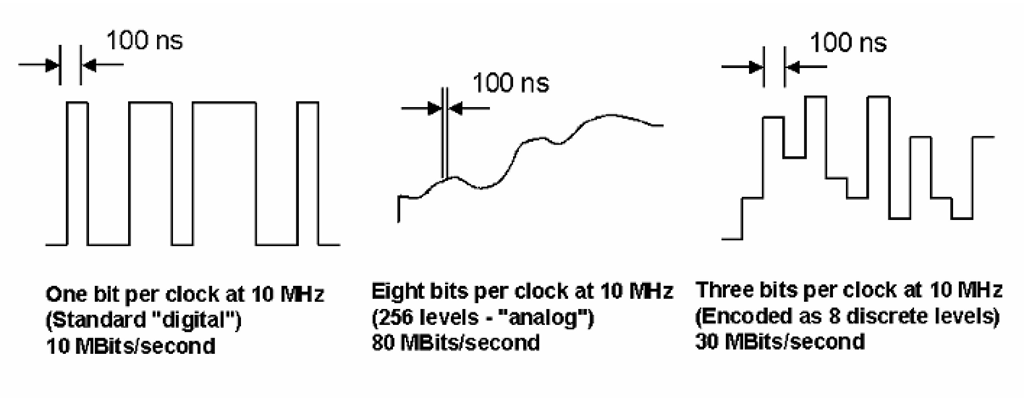

A significant difference between the two methods of encoding information is their behavior in the presence of noise. Some make the erroneous assumption that “digital” systems are inherently more immune to noise; this is not the case, if a valid comparison between systems of similar information capacity is to be made. This is essentially what Shannon’s theorem tells us; ultimately, the capacity of any channel is limited by the effect of noise, which, as its level increases, makes it increasingly difficult to distinguish the states or symbols being conveyed via that channel. Consider the signals shown in Figure 6-2. The first is an example of what most people think of as a “digital” signal, a system utilizing binary encoding, or possessing only two possible states for each “symbol” conveyed. (In communications theory, the term symbol is generally used to refer to the smallest packet of information which is transmitted in a given encoding system; in this case, it is the information delimited by the clock pulses.) The second represents what many would view as an “analog” system – in the case of a video interface, this might be the output of a D/A converter being fed eight-bit luminance information on each clock. The output of an 8-bit D/A has 256 possible states; if each of these states can be unambiguously distinguished (meaning that the difference between adjacent levels is greater than the noise level), then eight bits of information are transmitted on each clock pulse. If the clock rate is the same in each case, eight times as much information is being transmitted over any given period of time by the “analog” signal as by the “digital”. The third example in the figure is an intermediate case – three bits of information being transmitted per clock, represented as eight possible levels or states per clock in the resulting signal.
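The relationship between the number of distinguishable levels and the information carried per clock can be sketched as follows. The function names are illustrative, and the 10 MHz clock is taken from the Figure 6-2 caption; the calculation assumes every level can be reliably told apart from its neighbors.

```python
import math


def bits_per_symbol(levels):
    """Information carried by one symbol that can take `levels` distinct states."""
    if levels < 2:
        raise ValueError("a symbol needs at least two distinguishable states")
    return math.log2(levels)


def data_rate(levels, symbol_rate_hz):
    """Bits per second, assuming every level is reliably distinguishable."""
    return bits_per_symbol(levels) * symbol_rate_hz


for levels in (2, 8, 256):
    rate = data_rate(levels, 10e6)
    print(f"{levels:>3} levels: {rate / 1e6:.0f} Mbit/s")
# 2 levels give 10 Mbit/s, 8 levels give 30 Mbit/s, 256 levels give 80 Mbit/s
```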

This may seem to be a very elementary discussion, but it should serve to remind us of an important point – that which we call “analog” and “digital” signals are, again, simply two means of encoding information as an electrical signal. The “inherent” advantages and disadvantages of each may not be so clear-cut as we originally might have thought.

This comparison of the three encoding methods gives us a clearer understanding of how the noise performance differs amongst them; the simple binary form of encoding is more immune to noise, if only because the states are readily distinguishable (assuming the same overall signal amplitudes in each case). But it cannot carry as much information as the “analog” example in our comparison, unless the rate at which the symbols or states are transmitted (more precisely, the rate at which the states may change) is increased dramatically. This is a case of trading off sensitivity to random variations in amplitude – the “noise” we have been assuming so far – with sensitivity to possible temporal problems such as skew and the rise/fall time limitations of the system.
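The tradeoff described here can be put into numbers with a small sketch. It assumes, purely for illustration, a 0.7 V overall signal swing (the figure used for analog video later in this chapter) and evenly spaced levels; the function names are hypothetical.

```python
import math


def level_spacing(swing_volts, levels):
    """Voltage difference between adjacent states for evenly spaced levels."""
    if levels < 2:
        raise ValueError("at least two levels are required")
    return swing_volts / (levels - 1)


def symbol_rate_for(data_rate_bps, levels):
    """Symbol rate needed to carry data_rate_bps using `levels` states."""
    return data_rate_bps / math.log2(levels)


swing = 0.7           # assumed overall swing in volts
target = 80e6         # bits per second carried by the 256-level example
for levels in (2, 8, 256):
    spacing_mv = level_spacing(swing, levels) * 1e3
    rate_mhz = symbol_rate_for(target, levels) / 1e6
    print(f"{levels:>3} levels: {spacing_mv:7.2f} mV spacing, {rate_mhz:5.1f} MHz symbol rate")
```

With the same swing, the binary signal has by far the widest spacing between states, but it needs eight times the symbol rate of the 256-level signal to carry the same information.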

Figure 6-2 Three signals using different encoding methods. The first, which is a “digital” signal per the common assumptions, conveys one bit of information per clock, or 10 Mbit/s at a 10 MHz clock rate. Many people would assume the second signal to be “analog” – however, it could also be viewed as encoding eight bits per clock (as any of 256 possible levels), and so providing eight times the information capacity of the first signal. The third is an intermediate example: three bits per clock, encoded as eight discrete levels. As long as the difference between least-significant-bit transitions may be unambiguously and reliably detected, the data rates indicated may be maintained.

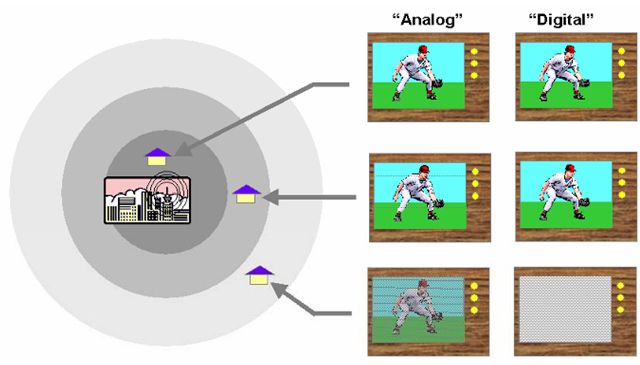

There is one statement which can be made in comparing the two which is generally valid: in an analog system, by the very fact that the signal is varying in a manner analogous to the variations of the transmitted information, the less-important information is the first to fall prey to noise. In more common terms, analog systems degrade more gracefully in the presence of noise, first losing that which corresponds, in digital terms, to the least-significant bits. With a digital system, in which the differences between possible states generally do not follow the “analog” method, it is quite common to have all bits equally immune – and also equally vulnerable – to noise. Digital transmissions tend to exhibit a “cliff” effect; the information is received essentially “perfectly” until the noise level increases to the point at which the states can no longer be distinguished, and at that point all is lost (see Figure 6-3). Intermediate systems are possible, of course, in which the more significant bits of information are better protected against the effects of noise than the less-significant bits. This is, again, one of the basic points of this entire discussion – that “analog” and “digital” do not refer to two things which are utterly different in kind, but which rather may be viewed in many cases as points along a continuum of possibilities.
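The difference between graceful degradation and the "cliff" can be illustrated with a simplified model. Everything in this sketch is an assumption made for illustration: additive Gaussian noise, a 0.7 V swing, eight-bit samples with uniformly random data, a mid-swing decision threshold for the binary case, no clipping, and no error correction. It is a sketch of the general behavior, not a model of any particular interface.

```python
import math


def multilevel_rms_error(swing_volts, noise_rms_volts, bits=8):
    """RMS error, in code steps, when a sample is sent as one of 2**bits levels."""
    step = swing_volts / (2 ** bits - 1)
    return noise_rms_volts / step


def binary_rms_error(swing_volts, noise_rms_volts, bits=8):
    """RMS error, in code steps, when each bit is sent as its own two-level symbol."""
    if noise_rms_volts <= 0:
        raise ValueError("noise level must be positive")
    # Probability that Gaussian noise carries a symbol across the mid-swing threshold.
    p_bit = 0.5 * math.erfc(swing_volts / (2.0 * noise_rms_volts * math.sqrt(2.0)))
    # Flipping bit k changes the value by 2**k; every bit is equally likely to flip.
    mean_square = p_bit * sum(4 ** k for k in range(bits))
    return math.sqrt(mean_square)


for noise in (0.001, 0.01, 0.05, 0.1, 0.2):
    print(f"noise {noise * 1e3:6.1f} mV rms: "
          f"multilevel {multilevel_rms_error(0.7, noise):8.3f}, "
          f"binary {binary_rms_error(0.7, noise):10.6f}")
```

In this model the multilevel error grows in direct proportion to the noise, disturbing the least-significant part of the value first. The binary error is negligible at low noise levels and then rises very steeply, because a flipped most-significant bit is exactly as likely as a flipped least-significant one.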

Figure 6-3 Analog and digital systems (in this case, television) in the presence of noise. Analog encoding automatically results in the least-significant bits of information receiving less “weighting” than the more significant information, resulting in “graceful degradation” in the presence of noise. The image is degraded, but remains usable to quite high noise levels. A “digital” transmission, in the traditional sense of the term, would normally weight all bits equally in terms of their susceptibility to noise; each bit is as likely as another to be lost at any given noise level. This results in the transmission remaining essentially “perfect” until the state of the bits can no longer be reliably determined, at which point the transmission is completely lost – a “cliff effect.”

Practical Aspects of Digital and Analog Interfaces

There are some areas in which digital and analog video interfaces, at least in their most common examples, differ in terms of the demands placed on physical and electrical connections and in the performance impact of these. As commonly implemented, a “digital” interface typically means one which employs straight binary encoding of values, transmitted in either a parallel (all the bits of a given sample sent simultaneously) or serial fashion. “Analog” interfaces, in contrast, are generally taken to mean those which employ a varying voltage or current to represent the intended luminance (or other quantity) at that instant. If we are comparing systems of similar capacity, and/or those which are carrying image data of similar format and timing, then some valid comparisons can be made between these two.

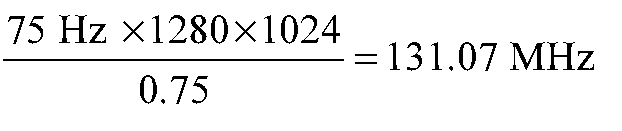

Let us consider both digital and analog interfaces carrying a 1280 x 1024 image at 75 frames/s, and in the form of separate red, green, and blue (RGB) channels. It will further be assumed that each of these channels is providing an effective eight bits of luminance information for each sample (i.e., “24-bit color”, typical of current computer graphics hardware). If we assume that 25% of the total time is spent on “blanking” or similar overhead, then the peak pixel or sample rate (regardless of the interface used) is

$$\frac{1280 \times 1024 \times 75}{0.75} \approx 131 \times 10^{6} \text{ samples/s}$$

We will consider three possible implementations: first, a typical “analog video” connection, with three physical conductors each carrying one color channel; second, a straightforward parallel-digital interface, with 24 separate conductors each carrying one bit of information per sample; and finally, a serial/parallel digital interface as is commonly used in PC systems today – three separate channels, one each for red, green, and blue, but each carrying serialized digital data.

If the “analog” system uses standard video levels, typically providing approximately 0.7 V between the highest (“white”) and lowest (“black” or “blank”) states, then in an 8 bit per color system, each state will be distinguished by about 2.7 mV. Further, at a 131 MHz sample rate, each pixel’s worth of information has a duration of only about 7.6 ns. Clearly, this imposes some strict requirements on the physical connection. Over typical display interface distances (several meters, at least), signals of such a high frequency will demand a controlled-impedance path, in order to avoid reflections which would degrade the waveform. Further, the cable losses must be minimized, and kept relatively constant over the frequency range of the signal in order to avoid attenuation of high-frequency content relative to the lower frequencies in the signal. The sensitivity of such a system to millivolt-level changes also argues for a design which will protect each signal from crosstalk and from external noise, if the intended accuracy is to be preserved. The end result of all this is that analog video interfaces typically require a fairly high-quality, low-loss transmission medium, generally a coaxial cable or similar, with appropriate shielding and the use of an impedance-controlled connector system.
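The figures quoted for the analog case follow directly from the stated assumptions (1280 x 1024 at 75 frames/s, 25% blanking overhead, a 0.7 V swing, eight bits per color). A minimal sketch of the arithmetic, with hypothetical function names:

```python
def peak_pixel_rate(width, height, frame_rate_hz, blanking_fraction):
    """Peak sample rate when blanking_fraction of the total time carries no pixels."""
    if not 0.0 <= blanking_fraction < 1.0:
        raise ValueError("blanking_fraction must be in [0, 1)")
    active_pixels_per_second = width * height * frame_rate_hz
    return active_pixels_per_second / (1.0 - blanking_fraction)


def lsb_step_volts(swing_volts, bits):
    """Voltage difference between adjacent codes for evenly spaced levels."""
    return swing_volts / (2 ** bits - 1)


rate = peak_pixel_rate(1280, 1024, 75, 0.25)
print(f"sample rate : {rate / 1e6:.1f} MHz")
print(f"pixel period: {1e9 / rate:.2f} ns")
print(f"LSB step    : {lsb_step_volts(0.7, 8) * 1e3:.2f} mV")
```

The results should agree with the values in the text to within rounding: roughly 131 MHz, 7.6 ns per pixel, and a little over 2.7 mV between adjacent levels.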

The simple parallel digital system might be expected to fare a bit better in terms of its demands on the physical interface, but this is generally not the case. While the noise margin would be considerably better (we might assume a digital transmission method using the same 0.7 V p-p swing of the analog video, just for a fair comparison), this advantage is generally more than negated by the need for eight times the number of physical conductors and connections. And even with this approach, the problems of impedance control, noise, and crosstalk cannot be completely ignored.

But what of the serial digital approach? Surely this provides the best of both worlds, combining a low physical connection count with the advantages – in noise immunity, etc. – of a “digital” approach. However, there is again a tradeoff. In serializing the data, the transmission rate on each conductor increases by a factor of eight – the bit rate on each line will be over 1 Gbit/s, further complicating the problems of noise (both in terms of susceptibility and emissions) and overall signal integrity. Such systems generally have to employ fairly sophisticated techniques to operate reliably in light of expected signal-to-signal skew, etc. Operation at such frequencies again requires some care in the selection of the physical medium and connectors.
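The three implementations can be compared side by side with a short sketch. The class and field names are hypothetical, and the calculation ignores any line-coding or protocol overhead that a real serial link might add; it simply divides the same total payload among the available conductors.

```python
from dataclasses import dataclass


@dataclass
class Interface:
    name: str
    conductors: int        # signal conductors carrying video data
    bits_per_symbol: int   # information carried by each symbol on one conductor

    def symbol_rate(self, total_bits_per_second):
        """Symbols per second required on each conductor."""
        return total_bits_per_second / (self.conductors * self.bits_per_symbol)


PIXEL_RATE = 1280 * 1024 * 75 / 0.75   # samples per second, from the text
PAYLOAD = PIXEL_RATE * 24              # 24 bits per pixel (8 each for R, G, B)

interfaces = [
    Interface("analog RGB", conductors=3, bits_per_symbol=8),
    Interface("parallel digital", conductors=24, bits_per_symbol=1),
    Interface("serial digital", conductors=3, bits_per_symbol=1),
]

for iface in interfaces:
    rate = iface.symbol_rate(PAYLOAD)
    print(f"{iface.name:<17} {iface.conductors:>2} conductors, "
          f"{rate / 1e6:7.1f} Msymbols/s each")
```

All three carry the same information; they differ only in how it is divided among conductors, levels per symbol, and symbol rate, which is where the practical demands on the cable and connectors come from.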

It is also often claimed that one of the two systems provides some inherent advantages in terms of radiated noise, and the ability of the system using the interface to comply with the various standards in this area. However, the level of EM noise radiated by a given interface, all else being equal, is primarily determined by the overall signal swing and the rate at which the signal transitions. There is no clear advantage in this regard to an interface using either “analog” or “digital” encoding of the information it carries.

Digital vs. Analog Interfacing for Fixed-Format Displays

An often-heard misconception is that the fixed-format display types, such as the LCD, PDP, etc., are “inherently digital”; this belief seems to come from the confusion between “digital” and “discrete” which was mentioned earlier. In truth, the fact that these types employ regular arrays of discrete pixels says little about which form of interface is best suited for use with them. Several of these types actually employ analog drive at the pixel level – the LCD being the most popular example at present – regardless of the interface used. It should be noted that analog-input LCD panels are actually fairly common, especially in cases where these are to be used as dedicated “television” monitors (camcorder viewfinder panels being a prime example). The factor which provides the functional benefit in current “digital interface” monitors is not that the video information is digitally encoded, but rather that these interfaces provide a pixel clock, through which this information can be unambiguously assigned to the discrete pixels. The difficulty in using traditional “CRT-style” analog video systems with these displays comes from the lack of such timing information, forcing the display to generate its own clock with which to sample the incoming video.

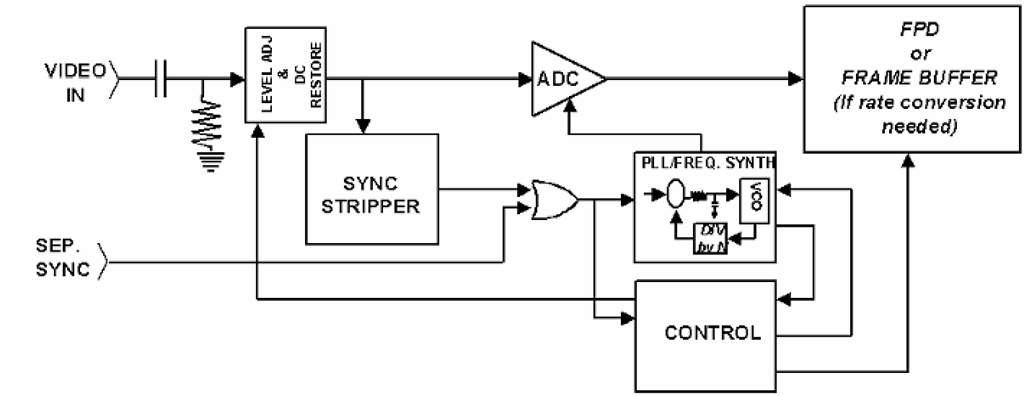

Most often, analog-input monitors based on one of the fixed-format display types, such as an LCD monitor, will derive a sampling clock from the horizontal synchronization pulses normally provided with analog video (Figure 6-4). This is the highest-frequency “clock” typically available, although it still must be multiplied by a factor in the hundreds or thousands to obtain a suitable video sampling clock. This relatively high multiplication factor, coupled with the fact that the skew between the horizontal sync signal and the analog video is very poorly controlled in many systems employing separate syncs, is what makes the job of properly sampling the video difficult. The results often show only poor to fair stability at the pixel level, which sometimes leads to the erroneous conclusion that the analog video source is “noisy.” But it should be clear at this point that the difficulties of properly implementing this sort of connection have little to do with whether the video information is presented in analog or digital form; “digital” interfaces of the most common types would have even worse problems were they to be deprived of the pixel clock signal.

Figure 6-4 Regeneration of a sampling clock with standard analog video. In almost all current analog video interface standards, no sampling clock is provided – making it difficult to use such interfaces with fixed-format displays such as LCDs. To permit these types to operate with a conventional analog input, the sampling clock is typically regenerated from the horizontal synchronization signal using PLL-based frequency synthesis techniques. This can work quite well, but in some cases may be difficult due to poor control of the sync skew and jitter relative to the video signal(s).
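The size of the multiplication factor, and the sensitivity of the result to sync timing errors, can be estimated with a short sketch. The totals of 1688 pixel periods per line and 1066 lines per frame, and the 2 ns jitter figure, are illustrative assumptions and are not taken from this text; the function names are likewise hypothetical.

```python
def horizontal_rate(total_lines_per_frame, frame_rate_hz):
    """Horizontal sync frequency for a given line count and frame rate."""
    return total_lines_per_frame * frame_rate_hz


def sampling_clock(hsync_hz, total_pixels_per_line):
    """Pixel sampling clock a PLL must synthesize from the horizontal sync.

    total_pixels_per_line (active plus blanking) is the PLL multiplication factor.
    """
    return hsync_hz * total_pixels_per_line


def jitter_in_pixels(sync_jitter_seconds, pixel_clock_hz):
    """Sync timing uncertainty expressed as a fraction of one pixel period."""
    return sync_jitter_seconds * pixel_clock_hz


# Assumed timing for a 1280 x 1024, 75 Hz format (illustrative totals only).
hsync = horizontal_rate(1066, 75)
clock = sampling_clock(hsync, 1688)
print(f"horizontal rate : {hsync / 1e3:.2f} kHz")
print(f"sampling clock  : {clock / 1e6:.1f} MHz (multiplier 1688)")
print(f"2 ns of jitter  : {jitter_in_pixels(2e-9, clock):.2f} pixel periods")
```

With a multiplier in the thousands, even a few nanoseconds of uncertainty in the sync edge amounts to a sizable fraction of a pixel period, which is consistent with the pixel-level instability described above.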

Still, digital interfaces have proven to be worthwhile for many fixed-format, non-CRT display types. This results in part from the lack of pixel-level timing information in the conventional analog standards, as reviewed above, and also in part from the fact that these display technologies have traditionally been designed with “digital” inputs, to facilitate their use as embedded display components in various electronic systems (calculators, computers, etc.). There is also one other significant advantage when fixed-format displays are to be used as replacements for the CRT in most computer systems. Due to the inherent flexibility of the CRT display, “multi-frequency” monitors (those which can display images over a very wide range of formats and update rates) have become the norm in the computer industry. The only way to duplicate this capability, when using display devices providing only one fixed physical image format, is to include some fairly sophisticated image scaling capabilities, achieved in the digital domain. With the need for digital processing within the display unit itself, a “digital” form of interface most often makes the most sense for these types.
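To give a sense of what scaling "in the digital domain" involves, the following is a minimal sketch that resamples one scan line to a fixed panel width by linear interpolation. This is only one simple method chosen for illustration (the function name is hypothetical), and practical scalers generally use more elaborate filtering in both dimensions.

```python
def scale_line(pixels, out_width):
    """Resample one scan line of integer samples to out_width samples.

    Uses linear interpolation, with the first and last input samples
    mapped to the first and last output samples.
    """
    if out_width < 1 or not pixels:
        raise ValueError("need at least one input and one output sample")
    if len(pixels) == 1 or out_width == 1:
        return [pixels[0]] * out_width
    scale = (len(pixels) - 1) / (out_width - 1)
    out = []
    for i in range(out_width):
        pos = i * scale                        # position in input coordinates
        left = int(pos)
        right = min(left + 1, len(pixels) - 1)
        frac = pos - left                      # weight given to the right neighbor
        out.append(round(pixels[left] * (1 - frac) + pixels[right] * frac))
    return out


# Stretch a 4-sample line to 7 samples, as a fixed-format panel might need to.
print(scale_line([0, 90, 180, 255], 7))
```

Because the operation works on numeric sample values, it is natural to perform it after the video has been digitized, or to receive the video in numeric form in the first place.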

Digital Interfaces for CRT Displays

With the rise of non-CRT technologies and the accompanying development of digital interface standards aimed specifically at these displays, there has quite naturally been considerable discussion regarding the transition of the CRT displays to these new interfaces. Several advantages have been claimed in order to justify the adoption of a digital interface model by this older, traditionally analog-input, technology. Some of these have been discussed already, but there are several new operational models for the CRT that have been proposed in this discussion. These deserve some attention here.

Several of the claimed advantages of digital-input CRT monitors are essentially identical to those previously mentioned for the LCD and other types: that such interfaces are “more immune” to the effects of noise, provide “higher bandwidth”, and offer improved overall image quality as a result. The arguments made in the previous section still apply, of course; they are not dependent on the display technology in question. The CRT-based monitor is even less concerned with the one advantage “digital” interfaces provide for the non-CRT types: the presence of pixel-level timing information (a “pixel clock” or “video sampling clock”). The CRT, by its basic nature and inherent flexibility, is not especially hampered by an inability to distinguish discrete “pixels” in the incoming data stream. The important concern in CRT displays is that the horizontal and vertical deflection of the beam be performed in a stable, consistent manner, and this is adequately provided for by the synchronization information present in the existing analog interface standards. To justify the use of a digital interface in these products, then, we must look to either new features which would be enabled by such an interface (and not possible via the traditional analog systems), or the enabling of a significant cost reduction, or both.

The argument for using digital interfaces with CRT displays as a cost-reduction measure is based on the assumption that this will permit functions currently performed in the analog domain to be replaced with presumably cheaper digital processing. Most often, the center of attention in this regard is the complexity required of “fully analog” CRT monitors in providing the format and timing flexibility of current multi-frequency CRT displays. Admittedly, this does involve significant cost and complexity in the monitor design, with results which sometimes are only adequate. However, there is little question that the final output of the deflection circuits must be “analog” waveforms, to drive the coils of the deflection yoke. So simplification of the monitor design through digital methods is generally assumed to mean that the display will be operated at a single fixed timing (only one set of sweep rates), thereby turning the CRT itself into a “fixed-format” display! In this model, as in the LCD and other monitor types, the adaptation to various input formats would be performed by digital image scaling devices, producing as their output the one format for which (presumably) the display has been optimized. (The other possible alternative would be to simply operate the entire system at a single “standard” format/timing – however, this is not dependent on the adoption of a digital-interface model, and in fact is the norm for some current CRT-dominated applications such as television or the computer workstation market.)