INTRODUCTION

Natural language understanding and assessment is a subset of natural language processing (NLP). The primary purpose of natural language understanding algorithms is to convert written or spoken human language into representations that can be manipulated by computer programs. Complex learning environments such as intelligent tutoring systems (ITSs) often depend on natural language understanding for fast and accurate interpretation of human language so that the system can respond intelligently in natural language. These ITSs function by interpreting the meaning of student input, assessing the extent to which it manifests learning, and generating suitable feedback to the learner. To be effective, such systems must be fast enough to keep up with the real-time interaction of an ITS session. Delays in feedback caused by computational processing risk frustrating the user and lowering engagement with the system. At the same time, the accuracy of assessing student input is critical because inaccurate feedback can compromise learning and lower the student’s motivation and metacognitive awareness of the learning goals of the system (Millis et al., 2007). Student input in ITSs therefore requires an assessment approach that is fast enough to operate in real time but accurate enough to provide appropriate evaluation.

One of the ways in which ITSs with natural language understanding verify student input is through matching. In some cases, the match is between the user input and a pre-selected stored answer to a question, solution to a problem, misconception, or other form of benchmark response. In other cases, the system evaluates the degree to which the student input varies from a complex representation or a dynamically computed structure. In either case, the computation of matches and similarity metrics is limited by the fidelity and flexibility of the computational linguistics modules.

The major challenge with assessing natural language input is that it is relatively unconstrained and rarely conforms to rigid rules of spelling, syntax, and semantics (McCarthy et al., 2007). Researchers who have developed tutorial dialogue systems in natural language have explored the accuracy of matching students’ written input to targeted knowledge. Examples of these systems are AutoTutor and Why-Atlas, which tutor students on Newtonian physics (Graesser, Olney, Haynes, & Chipman, 2005; VanLehn, Graesser, et al., 2007), and the iSTART system, which helps students read text at deeper levels (McNamara, Levinstein, & Boonthum, 2004). Systems such as these have typically relied on statistical representations, such as latent semantic analysis (LSA; Landauer, McNamara, Dennis, & Kintsch, 2007), and on content word overlap metrics (McNamara, Boonthum, et al., 2007). Such statistical and word overlap algorithms have achieved considerable success. However, over short dialogue exchanges (such as those in ITSs), the accuracy of interpretation can be seriously compromised without a deeper level of lexico-syntactic textual assessment (McCarthy et al., 2007). This chapter presents one such lexico-syntactic approach, entailment evaluation. The approach brings deeper natural language processing to ITSs with natural language exchanges while remaining fast enough to assess user input in real time.

BACKGROUND

Entailment evaluations help in assessing the appropriateness of student responses during ITS exchanges. Entailment can be distinguished from three similar terms (implicature, paraphrase, and elaboration), all of which are also important for assessment in ITS environments (McCarthy et al., 2007).

The term entailment is often associated with the highly similar concept of implicature. The distinction is that entailment is reserved for linguistically based inferences that are closely tied to explicit words, syntactic constructions, and formal semantics, whereas implicature is the more appropriate term for knowledge-based implied referents and references (McCarthy et al., 2007). Implicature corresponds to the controlled knowledge-based elaborative inferences defined by Kintsch (1993) or to the knowledge-based inferences defined in the inference taxonomies of discourse psychology (Graesser, Singer, & Trabasso, 1994).

The terms paraphrase and elaboration also need to be distinguished from entailment. A paraphrase is a reasonable restatement of the text. Because it preserves the meaning of the text, a paraphrase is a form of entailment; an entailment, however, is not necessarily a paraphrase. This asymmetry becomes clear if we consider that “John went to the store” is entailed by (but is not a paraphrase of) “John drove to the store to buy supplies.” The term elaboration refers to information that is generated inferentially or associatively in response to the text being analyzed, but without the systematic and sometimes formal constraints of entailment, implicature, or paraphrase. Examples of each term are provided below for the sentence “John drove to the store to buy supplies.”

Entailment: John went to the store. (Explicit, logical implication based on the text)

Implicature: John bought some supplies. (Implicit, reasonable assumption from the text, although not explicitly stated in the text)

Paraphrase: He took his car to the store to get things that he wanted. (Reasonable restatement of all and only the critical information in the text)

Elaboration: He could have borrowed stuff. (Reasonable reaction to the text)

Evaluating entailment is generally referred to as the task of recognizing textual entailment (RTE; Dagan, Glickman, & Magnini, 2005). Specifically, given two text fragments, the task is to decide whether the meaning of one can be logically inferred from the other. When it can, we say that T (the entailing text) entails H (the entailed hypothesis). For example, the text (from the RTE data) “Eyeing the huge market potential, currently led by Google, Yahoo took over search company Overture Services Inc last year” entails the hypothesis “Yahoo bought Overture.” Recognizing entailment is relevant to a large number of applications, including machine translation, question answering, and information retrieval.

The task of textual entailment has been a priority in investigations of information retrieval (Monz & de Rijke, 2001) and automated language processing (Pazienza, Pennacchiotti, & Zanzotto, 2005). In related work, Moldovan and Rus (2001) analyzed how to use unification and matching to address the answer correctness problem. Similar to entailment, answer correctness is the task of deciding whether candidate answers logically imply an ideal answer to a question.

THE LEXICO-SYNTACTIC ENTAILMENT APPROACH

A complete solution to the textual entailment challenge requires linguistic information, reasoning, and world knowledge (Rus, McCarthy, McNamara, & Graesser, in press). This chapter focuses on the role of linguistic information in making entailment decisions. The overall goal is to produce a light (i.e., computationally inexpensive) but accurate solution that can be used in interactive systems such as ITSs. Solutions that rely on processing-intensive deep representations (e.g., frame semantics and reasoning) and large structured repositories of information (e.g., ResearchCyc) are impractical for interactive tasks because their lengthy response times cause user dissatisfaction.

One solution for recognizing textual entailment is based on subsumption. In the sense used here, an object X subsumes an object Y if and only if X contains Y, that is, every element of Y is also present in X (X may be identical to Y or add further detail). Applied to textual entailment, subsumption translates as follows: hypothesis H is entailed by T if and only if T subsumes H. The solution has two phases: (I) map both T and H into graph structures, and (II) perform a subsumption operation between the T-graph and the H-graph. An entailment score, entail(T,H), is computed that quantifies the degree to which the T-graph subsumes the H-graph.

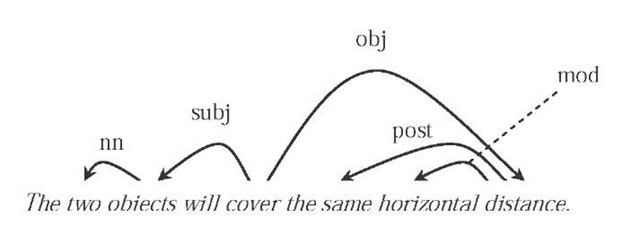

In phase I, the two text fragments involved in a textual entailment decision are mapped onto a graph representation based on the dependency-graph formalism of Mel’čuk (1998). The mapping relies on information from syntactic parse trees: a phrase-based parser is used to derive the dependencies. Although a dependency parser could have been adopted instead, our broader research agenda already required partial phrase parsers for other tasks, such as computing cohesion metrics, and integrating both a phrase-based and a dependency parser would have made the system heavier and less interactive. A parse tree groups words into phrases and organizes these phrases into hierarchical tree structures from which syntactic dependencies among concepts can be detected. The system uses Charniak’s (2000) parser to obtain parse trees and Magerman’s (1994) head-detection rules to obtain the head of each phrase. A dependency tree is then generated by systematically linking the head of each phrase to its modifiers. The dependency tree encodes only local (head-modifier) dependencies, not long-distance (remote) dependencies such as the remote subject relation between “bombers” and “enter” in the sentence “The bombers managed to enter the embassy compounds.” Therefore, in this stage the dependency tree is transformed into a dependency graph by adding remote dependencies between content words. Remote dependencies are computed by a naive-Bayes functional tagger (Rus & Desai, 2005). Figure 1 shows an example dependency graph for the sentence “The two objects will cover the same horizontal distance”; for instance, there is a subject (subj) dependency relation between “objects” and “cover.”
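The tree-to-dependency step can be illustrated with a small Python sketch. It is not the system’s implementation: the parse tree is hand-written rather than produced by Charniak’s parser, HEAD_RULES is a hypothetical and heavily simplified stand-in for Magerman’s head-detection rules, and the relation labels (subj, obj, mod) come from a toy labeling function. Remote dependencies, which the system adds with a naive-Bayes functional tagger, are not modeled.

```python
from typing import Dict, List, Tuple, Union

# A tree node is (label, body): a leaf has a POS tag and a word as body,
# a phrase has a phrase label and a list of child nodes as body.
Tree = Tuple[str, Union[str, List["Tree"]]]
Dependency = Tuple[str, str, str]  # (relation, head word, modifier word)

# Hypothetical, simplified head rules: search direction plus child labels
# to prefer as head, in priority order. Verbs outrank the modal "will" so
# that content words such as "cover" become heads.
HEAD_RULES: Dict[str, Tuple[str, List[str]]] = {
    "S": ("left", ["VP", "S"]),
    "VP": ("left", ["VB", "VBD", "VBZ", "VBP", "VP", "MD"]),
    "NP": ("right", ["NN", "NNS", "NNP", "NP"]),
}


def head_child_index(tree: Tree) -> int:
    """Index of the head child of a phrase, chosen by HEAD_RULES."""
    label, children = tree
    direction, priorities = HEAD_RULES.get(label, ("left", []))
    order = list(range(len(children)))
    if direction == "right":
        order.reverse()
    for wanted in priorities:
        for i in order:
            if children[i][0] == wanted:
                return i
    return order[0]  # fallback: first child in the search direction


def head_word(tree: Tree) -> str:
    """Percolate heads upward: a phrase's head word is its head child's head word."""
    label, body = tree
    if isinstance(body, str):
        return body
    return head_word(body[head_child_index(tree)])


def relation(parent_label: str, child_label: str) -> str:
    """Toy relation labeling based only on the parent and modifier labels."""
    if parent_label == "S" and child_label == "NP":
        return "subj"
    if parent_label == "VP" and child_label == "NP":
        return "obj"
    return "mod"


def dependencies(tree: Tree) -> List[Dependency]:
    """Link the head of every phrase to the heads of its non-head children."""
    label, body = tree
    if isinstance(body, str):
        return []
    h = head_child_index(tree)
    head = head_word(body[h])
    edges: List[Dependency] = []
    for i, child in enumerate(body):
        if i != h:
            edges.append((relation(label, child[0]), head, head_word(child)))
        edges.extend(dependencies(child))
    return edges


# Hand-written parse of the Figure 1 sentence.
sentence: Tree = ("S", [
    ("NP", [("DT", "The"), ("CD", "two"), ("NNS", "objects")]),
    ("VP", [("MD", "will"),
            ("VP", [("VB", "cover"),
                    ("NP", [("DT", "the"), ("JJ", "same"),
                            ("JJ", "horizontal"), ("NN", "distance")])])]),
])

for dep in dependencies(sentence):
    print(dep)
```

The first edge printed is ('subj', 'cover', 'objects'), the subj relation between “objects” and “cover” shown in Figure 1. The modal “will” ends up as a modifier of “cover” only because these toy head rules rank verbs above modals.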

In phase II, the textual entailment problem for each T-H pair is mapped onto a specific case of graph isomorphism called subsumption (also known as containment). Graph isomorphism is the problem of testing whether two graphs are the same; subsumption instead asks whether one graph is contained in the other, which makes it a form of subgraph isomorphism.

A graph G = (V, E) consists of a set of nodes or vertices V and a set of edges E. Graphs can be used to model the linguistic information embedded in a sentence: vertices represent concepts (e.g., bombers, joint venture), and edges represent syntactic relations among concepts (e.g., the edge labeled subj connects the verb cover to its subject objects in Figure 1). The Text (T) entails the Hypothesis (H) if and only if the hypothesis graph is subsumed (or contained) by the text graph.
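To make the representation concrete, the following Python sketch stores a dependency graph as a set of labeled edges (relation, head, modifier) and derives V from the edge endpoints. The names DepGraph and strictly_subsumes are hypothetical, and the containment test is deliberately strict: it requires exact word and label matches, without the synonym and relation-equivalence relaxations of the full algorithm.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, Tuple

Edge = Tuple[str, str, str]  # (relation label, head word, modifier word)


@dataclass(frozen=True)
class DepGraph:
    """G = (V, E): vertices are concepts, edges are labeled syntactic relations."""
    vertices: FrozenSet[str]
    edges: FrozenSet[Edge]

    @classmethod
    def from_edges(cls, edges: Iterable[Edge]) -> "DepGraph":
        edge_set = frozenset(edges)
        # In this sketch V is simply the set of words that appear in some edge.
        vertex_set = frozenset(w for _, head, mod in edge_set for w in (head, mod))
        return cls(vertex_set, edge_set)


def strictly_subsumes(text: DepGraph, hyp: DepGraph) -> bool:
    """True if every vertex and every labeled edge of hyp also occurs in text."""
    return hyp.vertices <= text.vertices and hyp.edges <= text.edges


# Content-word edges for "The two objects will cover the same horizontal distance".
t_graph = DepGraph.from_edges([
    ("subj", "cover", "objects"), ("obj", "cover", "distance"),
    ("mod", "distance", "same"), ("mod", "distance", "horizontal"),
])
# "The objects cover a distance" and "The objects cover a vertical distance".
h_graph = DepGraph.from_edges([("subj", "cover", "objects"), ("obj", "cover", "distance")])
h_vertical = DepGraph.from_edges(list(h_graph.edges) + [("mod", "distance", "vertical")])

print(strictly_subsumes(t_graph, h_graph))     # True
print(strictly_subsumes(t_graph, h_vertical))  # False: "vertical" is not in T
print(strictly_subsumes(h_graph, t_graph))     # False: containment is asymmetric
```

The asymmetric result mirrors the store example in the Background section: a more detailed text can contain a less detailed hypothesis, but not the other way around.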

The subsumption algorithm for textual entailment (Rus et al., in press) has three major steps: (1) find an isomorphism between V_H (the set of vertices of the Hypothesis graph) and V_T (the set of vertices of the Text graph); (2) check whether the labeled edges of H, E_H, have correspondents in E_T; and (3) compute the score. In step 1, a corresponding vertex in V_T is sought for each vertex in V_H. If a vertex in H does not have a direct correspondent in T, a thesaurus is used to find all possible synonyms for that vertex. Step 2 takes each relation in H and checks its presence in T. The checking is augmented with relation equivalences among linguistic phenomena such as possessives and linking verbs (e.g., be, have). For instance, “tall man” would be equivalent to “man is tall.” In step 3, normalized scores for the vertex and edge mappings are computed, and the score for the entire entailment is the sum of the individual vertex and edge matching scores. Finally, the score must account for negation. The approach handles both explicit and implicit negation. Explicit negation is indicated by particles such as no, not, neither… nor, and the shortened form n’t. Implicit negation is present in text via deeper lexico-semantic relations among linguistic expressions; the most obvious example is the antonymy relation among words, which is retrieved from WordNet (Miller, 1995). Negation is accommodated in the score after the entailment decision has been made for the Text-Hypothesis pair without negation. If exactly one of the two text fragments is negated, the decision is reversed; if both are negated, the decision is retained (double negation), and so forth.
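The three steps and the negation rule can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the published scorer: SYNONYMS is a hypothetical toy thesaurus standing in for a real one, the relation label pred (for predicative adjectives such as “man is tall”) and its equivalence to mod are invented for the example, the weights alpha and beta and the decision threshold are arbitrary placeholders, and implicit negation through WordNet antonymy is not modeled. Edges use lemmas (keep rather than keeps), while negation markers are counted on surface tokens, with n't assumed to be split off as a separate token.

```python
from typing import Dict, Iterable, List, Set, Tuple

Edge = Tuple[str, str, str]  # (relation label, head lemma, modifier lemma)

# Hypothetical toy thesaurus standing in for a real resource.
SYNONYMS: Dict[str, Set[str]] = {"velocity": {"speed"}, "buy": {"purchase"}}

# Hypothetical relation equivalence: "man is tall" (pred) counts as "tall man" (mod).
EQUIVALENT_RELATIONS: Dict[str, str] = {"pred": "mod"}

# Explicit negation markers; "neither ... nor" counts once, via "neither".
NEGATION_MARKERS = {"no", "not", "n't", "neither"}


def same_concept(a: str, b: str) -> bool:
    """Identical words, or synonyms in either direction of the toy thesaurus."""
    return a == b or b in SYNONYMS.get(a, set()) or a in SYNONYMS.get(b, set())


def canonical(rel: str) -> str:
    return EQUIVALENT_RELATIONS.get(rel, rel)


def vertices(edges: Iterable[Edge]) -> Set[str]:
    return {w for _, head, mod in edges for w in (head, mod)}


def entail_score(t_edges: Set[Edge], h_edges: Set[Edge],
                 alpha: float = 0.5, beta: float = 0.5) -> float:
    """Weighted sum of the normalized vertex and edge match rates (weights assumed)."""
    if not h_edges:
        return 1.0  # an empty hypothesis is trivially contained
    t_vertices, h_vertices = vertices(t_edges), vertices(h_edges)
    # Step 1: each H vertex needs an identical or synonymous vertex in T.
    vertex_hits = sum(any(same_concept(v, u) for u in t_vertices) for v in h_vertices)
    # Step 2: each labeled H edge needs a T edge with an equivalent relation
    # whose head and modifier both correspond to the H edge's endpoints.
    edge_hits = sum(
        any(canonical(rel) == canonical(t_rel)
            and same_concept(head, t_head) and same_concept(mod, t_mod)
            for t_rel, t_head, t_mod in t_edges)
        for rel, head, mod in h_edges
    )
    # Step 3: normalize each count and combine the two scores.
    return alpha * vertex_hits / len(h_vertices) + beta * edge_hits / len(h_edges)


def negation_count(tokens: Iterable[str]) -> int:
    return sum(tok.lower() in NEGATION_MARKERS for tok in tokens)


def entails(t_edges: Set[Edge], h_edges: Set[Edge], t_tokens: List[str],
            h_tokens: List[str], threshold: float = 0.9) -> bool:
    decision = entail_score(t_edges, h_edges) >= threshold
    # Negation is applied after the decision: an odd total number of negation
    # markers in T and H reverses it; an even number (double negation) keeps it.
    if (negation_count(t_tokens) + negation_count(h_tokens)) % 2 == 1:
        decision = not decision
    return decision


T = {("subj", "keep", "object"), ("obj", "keep", "velocity"),
     ("mod", "velocity", "horizontal"), ("mod", "velocity", "same")}
H = {("subj", "keep", "object"), ("obj", "keep", "speed"),
     ("mod", "speed", "horizontal"), ("mod", "speed", "same")}
t_tokens = "The object keeps the same horizontal velocity".split()
h_tokens = "The object keeps the same horizontal speed".split()
h_negated = "The object does not keep the same horizontal speed".split()

print(entail_score(T, H))                  # 1.0: "speed" matches "velocity" via synonyms
print(entails(T, H, t_tokens, h_tokens))   # True
print(entails(T, H, t_tokens, h_negated))  # False: one negated fragment flips the decision
print(entails({("pred", "man", "tall")}, {("mod", "man", "tall")}, [], []))  # True
```

Counting the parity of all negation markers is one simple way to generalize the “reverse once, retain on double negation” rule; a real system would also need to decide which markers actually scope over the matched content.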

Figure 1. An example of a dependency graph

ENTAILMENT FOR INTELLIGENT TUTORING SYSTEMS

The problem of evaluating student input in ITSs with natural language understanding is modeled here as a textual entailment problem. Results of this approach are reported for data sets from two ITSs: AutoTutor and iSTART. Data from the AutoTutor experiments involve college students learning Newtonian physics, whereas data from iSTART involve adolescent and college students constructing explanations about science texts.

AutoTutor

AutoTutor (autotutor.org) teaches topics such as Newtonian physics, computer literacy, and critical thinking by holding a dialogue in natural language with the student. The system presents deep-reasoning questions to the student that call for explanations or other elaborate answers. AutoTutor has a list of anticipated good answers (or expectations) and a list of misconceptions associated with each main question. AutoTutor guides the student in articulating the expectations through a number of dialogue moves and adaptively responds to the student by giving short feedback on the quality of student contributions.

To understand how the entailment approach helps to assess the appropriateness of student responses in AutoTutor, consider the following AutoTutor problem:

Suppose a runner is running in a straight line at constant speed, and the runner throws a pumpkin straight up. Where will the pumpkin land? Explain why.

An expectation for this problem is “The object will continue to move at the same horizontal velocity as the person when it is thrown.” A real student answer is “The pumpkin and the runner have the same horizontal velocity before and after release.” The expert judgment of this response was “very good.” Such expectation/student-input (E-S) pairs can be treated as Text-Hypothesis entailment pairs, with the expectation as the Text and the student input as the Hypothesis. The task is to determine the truth value of the student answer based on the true fact encoded in the expectation. Rus and Graesser (2006) examined how the lexico-syntactic system described in the previous section performed on a test set of 125 E-S pairs collected from a sample of AutoTutor tutorial dialogues. The lexico-syntactic approach provided the best accuracy (69%), whereas an LSA approach (Landauer et al., 2007) yielded an accuracy of 60%. This result illustrates the value of augmenting AutoTutor with lexico-syntactic natural language understanding.

iSTART (Interactive Strategy Trainer for Active Reading and Thinking)

The primary goal of iSTART (istartreading.com) is to help high school and college students learn to use reading comprehension strategies that support deeper understanding. iSTART’s design combines the power of self-explanation in facilitating deep learning (McNamara et al., 2004) with content-sensitive, interactive strategy training. The iSTART system helps students learn to self-explain using a variety of reading strategies (e.g., paraphrasing, or rewording the text; and elaborating, or linking textual content to what the reader already knows). The final stage of the iSTART process requires students to self-explain sentences from two short passages. Scaffolded feedback is provided to the students based on the quality of their responses.

The entailment evaluation has been used in two iSTART studies. In Rus et al. (2007), a corpus of iSTART self-explanation responses was evaluated by an array of textual evaluation measures. The results demonstrated that the entailment approach was the most powerful index for distinguishing the self-explanation categories (Entailer: F(1,1228) = 25.05, p < .001; LSA: F(1,1228) = 2.98, p > .01). In McCarthy et al. (2007), iSTART self-explanations were hand-coded for degree of entailment, paraphrase, and elaboration. Once again, the entailment evaluation proved to be a more powerful predictor of these categories than traditional measures: for entailment, the Entailer was a significant predictor (t = 9.61, p < .001) and LSA was a marginal predictor (t = -1.90, p = .061); for elaboration and for paraphrase, the Entailer was again a significant predictor.

FUTURE TRENDS

Although the results of the entailment evaluation have been encouraging, several further developments of the approach are under way. For example, there are plans to weight words by their specificity and to learn syntactic patterns or transformations that lead to similar meanings. The current negation detection algorithm will be extended to detect plausible implicit forms of negation in words such as denied, denies, without, and ruled out. A second extension addresses relative opposites: knowing that an object is not hot does not entail that the object is cold (it could, for example, simply be warm).
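The relative-opposites problem can be made concrete with a toy model. The Python sketch below is an illustration of the reasoning only, not the planned extension: NAIVE_ANTONYMS and TEMPERATURE_SCALE are hypothetical tables. A naive rule that treats every antonym pair as complementary maps “not hot” to “cold”, whereas a scale-based check licenses the entailment only when a single value remains once the negated value is excluded.

```python
from typing import Dict, List

# Hypothetical antonym table; the naive rule treats each pair as complementary.
NAIVE_ANTONYMS: Dict[str, str] = {"hot": "cold", "alive": "dead"}

# Hypothetical ordered scale for the gradable adjective family of "hot".
TEMPERATURE_SCALE: List[str] = ["cold", "cool", "warm", "hot"]


def naive_entails(negated: str, candidate: str) -> bool:
    """Naive rule: 'not X' entails 'Y' whenever Y is listed as X's antonym."""
    return NAIVE_ANTONYMS.get(negated) == candidate


def scale_entails(negated: str, candidate: str, scale: List[str]) -> bool:
    """'not X' entails 'Y' only if Y is the single value left on X's scale."""
    remaining = [value for value in scale if value != negated]
    return remaining == [candidate]


print(naive_entails("hot", "cold"))                       # True: over-generalizes
print(scale_entails("hot", "cold", TEMPERATURE_SCALE))    # False: cool or warm remain
print(scale_entails("alive", "dead", ["alive", "dead"]))  # True: a two-valued scale
```

The contrast is between gradable opposites such as hot/cold, where negating one pole leaves several options open, and complementary pairs such as alive/dead, where it does not.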

CONCLUSION

Recognizing and assessing textual entailment is a prominent and challenging task in the fields of Natural Language Processing and Artificial Intelligence. This chapter presented a lexico-syntactic approach to the task of evaluating entailment. The approach is light, using minimal knowledge resources, yet it has delivered high performance in evaluations of three data sets involving natural language interactions in ITSs. The entailment approach is a promising step in achieving the goal of fast and effective evaluation of student contributions in short text exchanges, which is needed to provide optimal feedback and responses to student learners.

KEY TERMS

Dependency: A binary relation between two words in a sentence, labeled with the syntactic relation between them.

Entailment: The task of deciding whether one text fragment can be logically or semantically inferred from another text fragment.

Expectation: A stored (generally ideal) answer to a problem, against which input is evaluated; concept used in ITSs.

Graph Subsumption: A specific example of graph isomorphism. Isomorphism exists when two graphs are equivalent. Subsumption can be viewed as subgraph isomorphism.

Intelligent Tutoring System: An interactive, feedback-based computer system designed to help students learn various topics.

Latent Semantic Analysis: A statistical technique for human language understanding based on words that co-occur in documents of large corpora.

Natural Language Processing: The science of capturing the meaning of human language in computational representations and algorithms.

Natural Language Understanding and Assessment: An NLP subset focusing on evaluating natural language input in intelligent tutoring systems.

Syntactic Parsing: The process of discovering the underlying structure of sentences.