INTRODUCTION

Almost all autonomous robots need to navigate. We define navigation as do Franz & Mallot (2000): “Navigation is the process of determining and maintaining a course or trajectory to a goal location” (p. 134). We allow that this definition may be more restrictive than some readers are used to – it does not for example include problems like obstacle avoidance and position tracking – but it suits our purposes here.

Most algorithms published in the robotics literature localise in order to navigate (see e.g. Leonard & Dur-rant-Whyte (1991a)). That is, they determine their own location and the position of the goal in some suitable coordinate system. This approach is problematic for several reasons. Localisation requires a map of available landmarks (i.e. a list of landmark locations in some suitable coordinate system) and a description of those landmarks. In early work, the human operator provided the robot with a map of its environment. Researchers have recently, though, developed simultaneous localisation and mapping (SLAM) algorithms which allow robots to learn environmental maps while navigating (Leonard & Durrant-Whyte (1991b)). Of course, autonomous SLAM algorithms must choose which landmarks to map and sense these landmarks from a variety of different positions and orientations. Given a map, the robot has to associate sensed landmarks with those on the map. This data association problem is difficult in cluttered real-world environments and is an area of active research.

We describe in this chapter an alternative approach to navigation called visual homing which makes no explicit attempt to localise and thus requires no landmark map. There are broadly two types of visual homing algorithms: feature-based and image-based. The feature-based algorithms, as the name implies, attempt to extract the same features from multiple images and use the change in the appearance of corresponding features to navigate. Feature correspondence is – like data association – a difficult, open problem in real-world environments. We argue that image-based homing algorithms, which provide navigation information based on whole-image comparisons, are more suitable for real-world environments in contemporary robotics.

BACKGROUND

Visual homing algorithms make no attempt to localise in order to navigate. No map is therefore required. Instead, an image IS (usually called a snapshot for historical reasons) is captured at a goal location S = (x. , y). Note that though S is defined as a point on a plane, most homing algorithms can be easily extended to three dimensions (see e.g. Zeil et al. (2003)) . When a homing robot seeks to return to Sfrom a nearby position C = (xc, y ), it takes an image Icand compares it with I The home vector H = S – Cis inferred from the disparity between I and Ic (vectors are in upper case and bold in this work). The robot’s orientation at C and S is often different; if this is the case, image disparity is meaningful only if IC is rotated to account for this difference. Visual homing algorithms differ in how this disparity is computed.

Visual homing is an iterative process. The home vector H is frequently inaccurate, leading the robot closer to the goal position but not directly to it. If H does not take the robot to the goal, another image IC is taken at the robot’s new position and the process is repeated.

The images IS and IC are typically panoramic grayscale images. Panoramic images are useful because, for a given location (x,y) they contain the same image information regardless of the robot’s orientation. Most researchers use a camera imaging a hemispheric, conical or paraboloid mirror to create these images (see e.g. Nayar (1997)).

Some visual homing algorithms extract features from Is and I and use these to compute image disparity. Alternatively, disparity can be computed from entire images, essentially treating each pixel as a viable feature. Both feature-based and image-based visual homing algorithms are discussed below.

FEATURE-BASED VISUAL HOMING

Feature-based visual homing methods segment I and Ic into features and background (the feature extraction problem). Each identified feature in the snapshot is then usually paired with one feature in I (the correspondence problem). The home vector is inferred from – depending on the algorithm – the change in the bearing and/or apparent size of the paired features. Generally, in order for feature-based homing algorithms to work properly, they must reliably solve the feature extraction and correspondence problems.

The Snapshot Model (Cartwright & Collett (1983))

The first visual homing algorithm to appear in the literature and the source of the term “snapshot” to describe the goal image – matches each snapshot feature with the current feature closest in bearing (after both images are rotated to the same external compass orientation). Features in (Cartwright & Collett (1983)) were black cylinders in an otherwise empty environment. Two unit vectors, one radial and the other tangential, are associated with each feature pair. The radial vector is parallel to the bearing of the snapshot feature; the tangential vector is perpendicular to the radial vector. The direction of the radial vector is chosen to move the agent so as to reduce the discrepancy in apparent size between paired features. The direction of the tangential vector is chosen to move the agent so as to reduce the discrepancy in bearing between paired features. The radial and tangential vectors for all feature pairs are averaged to produce a homing vector. The Snapshot Model was devised to explain the behaviour of nest-seeking honeybees but has inspired several robotic visual homing algorithms.

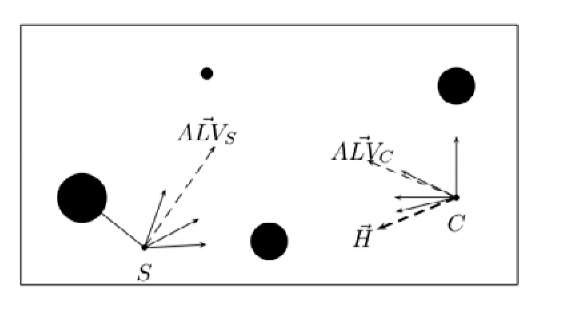

One such algorithm is the Average Landmark Vector (ALV) Model (Moller et al. (2001)). The ALV Model, like the Snapshot Model, extracts features from both Ic and Is TheALV Model, though, does not explicitly solve the correspondence problem. Instead, given features extracted from I, the algorithm computes and stores a unit vector ALVS in the direction of the mean bearing to all features as seen from S. At c, the algorithm extracts features from I and computes their mean bearing, encoded in the unit vector ALVC . The home vector H is defined as ALVC -ALVS. Figure 1 illustrates home vector computation for a simple environment with four easily discernible landmarks.

Several other interesting feature-based homing algorithms can be found in the literature. Unfortunately, space constraints prevent us from reviewing them here.

Two algorithms of note are: visual homing by “surfing the epipoles” (Basri et al. (1998) and the Proportional Vector Model (Lambrinos et al. (2000)).

The Snapshot and ALV Models were tested by their creators in environments in which features contrasted highly with background and so were easy to extract. How is feature extraction and correspondence solved in real-world cluttered environments? One method is described in Gourichon et al. (2002). The authors use images converted to the HSV (Hue-Saturation-Value) colour space which is reported to be more resilient to illumination change than RGB. Features are defined as image regions of approximately equal colour (identified using a computationally expensive region-growing technique). Potential feature pairs are scored on their difference in average hue, average saturation, average intensity and bearing. The algorithm searches for a set of pairings which maximise the sum of individual match scores. The pairing scheme requires O(n2) pair-score computations (where n is the number of features). The algorithm is sometimes fooled by features with similar colours (specifically, pairing a blue chair in the snapshot image with a blue door in the current image). Gourichon et al. did not explore environments with changing lighting conditions.

Several other methods feature extraction and correspondence algorithms appear in the literature; see e.g. Rizzi et al. (2001), Lehrer & Bianco (2000) and Gaussier et al. (2000). Many of these suffer from some of the same problems as the algorithm of Gourichon et al. described above. The appearance of several competing feature extraction and correspondence algorithms in recent publications indicates that these are open and difficult problems; this is why we are advocating image-based homing in this chapter.

Figure 1. Illustration of Average Landmark Vector computation. See Section titled “Feature-based Visual Homing” for details

IMAGE-BASED VISUAL HOMING

Feature-based visual homing algorithms require consistent feature extraction and correspondence over a variety of viewing positions. Both of these are still open problems in computer vision. Existing solutions are often computationally intensive. Image-based visual homing algorithms avoid these problems altogether. They infer image disparity from entire images; no pixel is disregarded. We believe that these algorithms present a more viable option for real-world, real-time robotics.

Three image-based visual homing algorithms have been published so far; we describe these below.

Image Warping

The image warping algorithm (Franz et al. (1998)) asks the following question: When the robot is at c in some unknown orientation, what change in orientation and position is required to transform Ic into I ? The robot needs to know the distance to all imaged objects in I to answer this question precisely. Not having this information, the image warping algorithm makes the assumption that all objects are at an equal (though unknown) distance from S. The algorithm searches for the values of position and orientation change which minimises the mean-square error between a transformed I and I . Since the mean square error function is rife with local minima, the authors resort to a brute force search over all permissible values of position and orientation change.

Unlikely as the equal distance assumption is, the algorithm frequently results in quite accurate values for H Unlike most visual homing schemes, image warping requires no external compass reference. Unfortunately, the brute force search for the homing vector and the large number of transformations of Ic carried out during this search make image warping quite computationally expensive.

Homing with Optic FIow Techniques

When an imaging system moves from S to C , the image of a particular point in space moves from Is(x,y) to Ic(x’,y). This movement is called optic flow and (x-x\ y-y’) is the so called pixel displacement vector. Vardy & Moller (2005) demonstrate that the home vector H can be inferred from a single displacement vector so long as the navigating robot is constrained to move on a single plane. Several noisy displacement vectors can be combined to estimate H.

Vardy & Moller (2005) describe a number of methods, adapted from the optic flow literature, to estimate the displacement vector. One of the most successful methods – BlockMatch – segments the snapshot image into several equal-sized subimages. The algorithm then does a brute force search of a subset of Ic to find the best match for each subimage. A displacement vector is computed from the centre of each subimage to the centre of its match pair in Ic

A less computationally intensive algorithm estimates the displacement vector from the intensity gradient at each pixel in Ic. The intensity gradient at a particular pixel can be computed straightforwardly from intensities surrounding that pixel. No brute-force search is required.

In comparative tests, Vardy & Moller demonstrated that their optic flow based methods perform consistently better than image warping in several unadulterated indoor environments. A drawback to the optic flow homing methods is that the robot is constrained to move on a single plane. The authors do not provide a way to extend their algorithm to three dimensional visual homing.

Surfing the Difference Surface

Zeil et al. (2003) describe a property of natural scenes which can be exploited for visual homing: as the Euclidean distance between S and c increases, the pixel-by-pixel root mean square (RMS) difference between IS and Ic increases smoothly and monotonically. Labrosse and Mitchell discovered this phenomenon as well; see Mitchell & Labrosse (2004). Zeil et al. reported that the increase in the RMS signal was discernible from noise up to about three meters from S in their outdoor test environment; they call this region the catchment area.

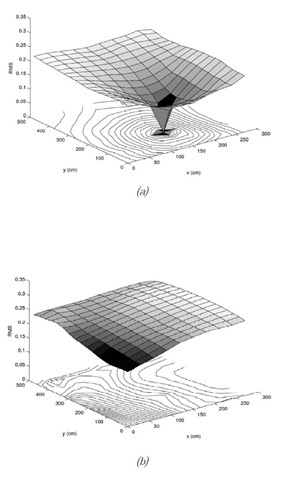

RMS, when evaluated at locations in a subset of the plane surrounding S, forms a mathematical surface, the difference surface. A sample difference surface is shown in Figure 2(a) (see caption for details).

Zeil et al. describe a simple algorithm to home using the RMS difference surface. Their “Run-Down” algorithm directs the robot to move in its current direction while periodically sampling the RMS signal. When the current sample is greater than the previous, the robot is made to stop and turn ninety degrees (clockwise or counter-clockwise, it does not matter). It then repeats the process in this new direction. The agent stops when the RMS signal falls below a pre-determined threshold. We have explored a biologically inspired difference surface homing method which was more successful than “Run-Down” in certain situations (Zampoglou et al. (2006)).

Figure 2. Two difference surfaces formed using the RMS image similarity measure. Both the surfaces and their contours are shown. In each case, the snapshot I was captured at x=150cm, y=150cm in a laboratory environment. (a) The snapshot was captured in the same illumination conditions as all other images. Notice the global minimum at the goal location and the absence of local minima. (b) Here we use the same snapshot image as in (a) but the lighting source has changed in all other images. The global minimum no longer appears at the goal location. When different goal locations were used, we observed qualitatively similar disturbances in the difference surfaces formed. The images used were taken from a database provided by Andrew Vardy which is described in Vardy & Moller(2005).

Unlike the optic flow methods described in the previous section, visual homing by optimising the difference surface is easily extensible to three dimensions (Zeil et al. (2003)).

Unfortunately, when lighting conditions change between capture of IS and IC , the minimum of the RMS difference surface often fails to coincide with S, making homing impossible (Figure 2(b)).

FUTURE TRENDS

No work has yet been published comparing the efficacy ofthe image-based homing algorithms described above. This would seem the logical next step for image-based homing researchers. As we mentioned in the section titled “Surfing the Difference Surface,” the difference surface is disrupted by changes in lighting between captures of ISand Ic. This problem obviously demands a solution and is a focus of our current research. Finally, it would be interesting to compare standard map-based navigation algorithms with the image-based visual homing methods presented here.

CONCLUSION

Visual homing algorithms - unlike most of the navigation algorithms found in the robotics literature – do not require a detailed map of their environment. This is because they make no attempt to explicitly infer their location with respect to the goal. These algorithms instead infer the home vector from the discrepancy between a stored snapshot image taken at the goal position and an image captured at their current location.

We reviewed two types ofvisual homing algorithms: feature-based and image-based. We argued that image-based algorithms are preferable because they make no attempt to solve the tough problems of consistent feature extraction and correspondence – solutions to which feature-based algorithms demand. Of the three image-based algorithms reviewed, image warping is probably not practicable due to the computationally demanding brute force search required. Work is required to determine which of the two remaining image-based algorithms is more effective for robot homing in real-world environments.

key terms

Catchment Area: The area from which a goal location is reachable using a particular navigation algorithm.

Correspondence Problem: The problem of pairing an imaged feature extracted from one image with the same imaged feature extracted from a second image. The images may have been taken from different locations, changing the appearance of the features.

Image-based Visual Homing: Visual homing (see definition below) in which the home vector is estimated from the whole-image disparity between snapshot and current images. No feature extraction or correspondence is required.

Feature Extraction Problem: The problem of extracting the same imaged features from two images taken from (potentially) different locations.

Navigation: The process of determining and maintaining a course or trajectory to a goal location.

Optic Flow: The perceived movement of objects due to viewer translation and/or rotation.

Snapshot Image: In the visual homing literature, this is the image captured at the goal location.

Visual Homing: A method of navigating in which the relative location of the goal is inferred by comparing an image taken at the goal with the current image. No landmark map is required.