IIS Logs

Microsoft’s Internet Information Server (IIS) is a Web server platform that’s popular with both users and attackers. It is easy for administrators to install, to the point that sometimes they aren’t even aware that they have a Web server running on their system. It is also a very popular target for attackers, and with good reason. Often the Web server has vulnerabilities due to coding or configuration issues that, when left unaddressed, leave not only the Web server software but the entire platform open to exploitation. One of the best ways to uncover attempts to compromise the IIS Web server, or details of a successful exploit, is to examine the logs generated by the Web server.

The IIS Web server logs are most often maintained in the %WinDir%\System32\LogFiles directory. Each virtual server has its own subdirectory for log files, named for the server itself. In most situations, only one instance of the Web server will be running, so the log subdirectory will be W3SVC1. During an investigation, however, you might find multiple subdirectories named W3SVCn, where n is the number of the virtual server. Keep in mind that the location of the logs is configurable by the administrator and can be modified to point to any location, even a shared drive. By default, the log files are in ASCII text format (this is also configurable by the administrator), meaning that they are easily opened and searchable. In many cases, the log files can be quite large, particularly for extremely active Web sites, so opening and searching them by hand isn’t going to be feasible or effective. Searches can be scripted using Perl or grep, or if you’re looking for something specific, the find/search capability of whichever editor you choose might also work.
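As an illustration of a scripted search, here is a minimal Python sketch in place of Perl or grep. It assumes you are working from a copy of the LogFiles directory (for example, one extracted from an image). It only looks inside subdirectories named W3SVCn and reports every line that matches a regular expression. The function name search_iis_logs and the example path are hypothetical.

```python
import os
import re
from typing import Iterator, Tuple


def search_iis_logs(log_root: str, pattern: str) -> Iterator[Tuple[str, int, str]]:
    """Yield (path, line_number, line) for each log line matching pattern.

    log_root is assumed to be a copy of the LogFiles directory; only
    W3SVCn subdirectories (one per virtual Web server) are searched.
    """
    regex = re.compile(pattern, re.IGNORECASE)
    for entry in sorted(os.listdir(log_root)):
        subdir = os.path.join(log_root, entry)
        # Skip anything that is not a W3SVCn virtual-server log directory.
        if not (os.path.isdir(subdir) and re.fullmatch(r"W3SVC\d+", entry, re.IGNORECASE)):
            continue
        for name in sorted(os.listdir(subdir)):
            path = os.path.join(subdir, name)
            if not os.path.isfile(path):
                continue
            # Logs are ASCII by default; errors="replace" tolerates stray bytes.
            with open(path, encoding="ascii", errors="replace") as fh:
                for lineno, line in enumerate(fh, 1):
                    if regex.search(line):
                        yield path, lineno, line.rstrip("\n")
```

For example, `for hit in search_iis_logs(r"E:\case\LogFiles", r"xp_cmdshell"): print(*hit)` would list every matching entry. Each file is read one line at a time, so memory use stays flat even for very large logs.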

Speaking of searches, one of the biggest questions investigators face is, how do we cull through voluminous Web server logs to find what might be the proverbial needle in a haystack? On a high-volume server, the log files can be pretty large, and searching through them for relevant data can be an arduous task. Sometimes using a victim’s incident report can help an investigator narrow the time frame of when the attack occurred, allowing for a modicum of data reduction. However, this doesn’t always work. It is not uncommon for an investigator to find a system that had been compromised weeks or even months before any unusual activity was reported. So, what do you do?

Warning::

When analyzing IIS log files, keep in mind that the time stamps for the events will most likely be in GMT rather than local time (http://support.microsoft.com/kb/194699). When IIS logs in W3C Extended log file format, which is the default, time stamps are recorded in GMT, not in the local time zone configured on the system. As a result, the IIS logs will roll over to the next day at midnight GMT (per http://support.microsoft.com/kb/944884), which also needs to be taken into account when performing analysis.

Also, be aware of fields that may be available in the logs on different versions of IIS. For example, IIS 6.0 and 7.0 include a "time-taken" field (http://support.microsoft.com/kb/944884) that may be useful in analysis of the logs.
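The GMT time stamps described in the warning above need to be converted before you can line them up with local-time artifacts. The following is a small sketch of that conversion. It assumes the W3C date and time fields are in their usual YYYY-MM-DD and HH:MM:SS form. The examiner supplies the system's time zone as a tzinfo object, having determined it separately (for instance, from the Registry). The function name w3c_to_local is hypothetical.

```python
from datetime import datetime, timezone, tzinfo


def w3c_to_local(date_field: str, time_field: str, local_tz: tzinfo) -> datetime:
    """Convert W3C Extended 'date' and 'time' fields to the given time zone.

    W3C Extended logs record these fields in GMT (UTC), so the parsed value
    is tagged as UTC before being converted to local_tz.
    """
    utc = datetime.strptime(f"{date_field} {time_field}", "%Y-%m-%d %H:%M:%S")
    return utc.replace(tzinfo=timezone.utc).astimezone(local_tz)
```

Passing a DST-aware zone such as `zoneinfo.ZoneInfo("America/New_York")` handles daylight saving time. With that zone, `w3c_to_local("2008-05-14", "03:30:00", ...)` yields 11:30 p.m. on May 13, which shows how an entry in one day's log file can belong to the previous local day.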

A while back (a long while in Internet years, all the way back to 1997), Marcus Ranum developed an outline for what he referred to as "artificial ignorance" (AI). The basic idea is that if you remove all legitimate activity from the Web server logs, what you have left should be "unusual."

Tools & Traps…

Implementing "AI"

I’ve used the "artificial ignorance" method for filtering various items, and it has been a very useful technique. I had written a Perl script that would reach out across the enterprise for me (I was working in a small company with between 300 and 400 employees) and collect the contents of specific Registry keys from all the systems that were logged in to the domain. I could run this script during lunch and come back to a nice log file that was easy to parse and open in Excel. However, it was pretty large, and I wanted to see only those things that required my attention. So, I began examining some of the entries I found, and as I verified that each entry was legitimate, I would add it to a file of "known-good" entries. Then I would collect the contents of the Registry keys and log only those that did not appear in the known-good file. In a short time, I went from several pages of entries to fewer than half a page of items I needed to investigate.

With Web server logs, it is a fairly straightforward process to implement this type of AI. For example, assume that you’re investigating a case in which a Web server might have been compromised, and there are a very small number of files on the server—the index.html file and perhaps half a dozen other HTML files that contain supporting information for the main site (about.html, contact.html, links.html, etc.).

IIS Web server logs that are saved in ASCII format (which is the default) have a rather simple format. That makes it a fairly easy task to use your favorite scripting language to open the file, read in each log entry one line at a time, and perform processing. IIS logs generally have column headers (the #Fields: line) located at the top of the file. If the Web server was restarted, a new set of headers may also appear later in the file. Using the column headers as a key, you can then parse each entry for relevant information. Examples include the request verb (GET, HEAD, or POST), the page requested, and the status or response code that was returned (you can find a listing of IIS 5.0 and 6.0 status codes at http://support.microsoft.com/kb/318380). If you find a request for a page that is not on your list of known-good pages, log the details to a separate file for analysis: the filename, the date/time of the request, the source IP address, and the like.

Warning::

I am not providing code for this technique, simply because not all IIS Web logs are of the same format. The information that is logged is configurable by the Web server administrator, so I really cannot provide a "one size fits all" solution. Further, the exact specifications of a search may differ between cases. For example, in one case you might be interested in all pages that were requested that are not part of the Web server; in another case, you might be interested only in requests issued from a specific IP address or address range. In yet another case, you might be interested only in requests that generated specific response codes.
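As the warning notes, no single script fits every case. The following is therefore only an illustrative sketch of the known-good filtering idea, not a one-size-fits-all solution. It assumes W3C Extended format logs in which the cs-uri-stem field is logged. Because it re-reads every #Fields: line it encounters, it adapts to whatever fields the administrator chose to log, including headers written mid-file after a restart. The function names and the example known-good list are hypothetical, and the filter condition would change from case to case (source IP, response code, and so on).

```python
from typing import Dict, Iterable, Iterator, List, Set


def parse_w3c(lines: Iterable[str]) -> Iterator[Dict[str, str]]:
    """Yield one dict per log entry, keyed by the most recent #Fields: line."""
    fields: List[str] = []
    for line in lines:
        line = line.rstrip("\r\n")
        if line.startswith("#Fields:"):
            # Headers can reappear mid-file after a restart, so re-read them.
            fields = line[len("#Fields:"):].split()
        elif line and not line.startswith("#") and fields:
            # IIS writes spaces inside a field as '+', so a plain split is safe.
            yield dict(zip(fields, line.split()))


def artificial_ignorance(lines: Iterable[str], known_good: Set[str]) -> List[Dict[str, str]]:
    """Return entries whose requested page is NOT in the known-good set.

    known_good is assumed to hold lowercase page paths, e.g. "/index.html".
    """
    unusual = []
    for entry in parse_w3c(lines):
        if entry.get("cs-uri-stem", "").lower() not in known_good:
            unusual.append(entry)
    return unusual
```

For the small site described earlier, known_good might be `{"/index.html", "/about.html", "/contact.html", "/links.html"}`. Whatever remains is the "unusual" residue to examine.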

"Artificial ignorance" is one approach to take when searching Web server logs; this technique is very flexible and can be implemented on a wide range of logs and files. Another technique you can use is to look for specific artifacts left behind by specific attacks. This technique can be very useful in cases where more information about the infrastructure, the level of access the attacker obtained, and other specifics are known. Also, if there seems to be a particular vulnerability that was released around the time of the intrusion or there is an increase in reported attempts against a specific exploit, searching for specific artifacts could be an effective technique.

Warning::

I used to marvel at how some attacks just grew in popularity, and I figured that it had to do with the success of the attack itself. In some cases, a great deal of very technically detailed and accurate information is available about attacks. For example, a SQL injection cheat sheet that addresses a number of variations on SQL injection attacks is available from http://ferruh.mavituna.com/sql-injection-cheatshee-oku/. One very interesting item from this cheat sheet that you may find in logs after a SQL injection attack is the sp_password keyword. The sp_password stored procedure is normally used for password changes, and its presence in a command tells Microsoft SQL Server not to log that command. For an attacker, this matters only if logging is enabled on the SQL server; the attack still appears in the Web server logs. Still, it is kind of sneaky!

For example, if an IIS Web server uses a Microsoft SQL database server as a back end, one attack to look for is SQL injection (http://en.wikipedia.org/wiki/SQL_injection). An attacker may submit queries to the Web server for processing by the back-end database server. These queries can be used to extract information, upload files to the server, or extend the attacker’s reach deep into the network infrastructure. A telltale sign of a SQL injection attack is the existence of xp_cmdshell in the log file entries. The xp_cmdshell extended stored procedure is part of Microsoft SQL Server. It can allow an attacker to run commands on the database server with the same privileges as the server itself (which is usually System-level privileges).

In mid- to late 2007, we saw a number of these attacks that were essentially plain-text attacks. Once indications of the SQL injection were found within the IIS Web server log files (usually by doing a keyword search for xp_cmdshell), the analyst could clearly see the attacker’s activities. In many cases, the attacker would perform network reconnaissance using tools native to the Windows system hosting Microsoft SQL Server, such as ipconfig /all, nbtstat -c, and netstat -ano. Variations of the net commands were used to map out other systems on the network or to add user accounts to the system, and ping.exe or other tools were used to determine network connectivity from the system. Once this was done, the attacker would download tools to the SQL server using tftp.exe or ftp.exe (after using echo commands to create a File Transfer Protocol [FTP] script file). In one particular instance, the attacker broke an executable file into 512-byte chunks and then wrote each chunk to a database table. Once all of the chunks were loaded into the database, the attacker told the database to extract the chunks, reassemble them into a single file, and then launch that file. Again, all of this was being done remotely through the Web server. Fascinatingly, it worked!
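Once an xp_cmdshell hit is found in such a plain-text attack, the commands themselves can be pulled out to build a picture of the attacker’s activity. Here is a minimal sketch of that step. It assumes the command is passed to xp_cmdshell as a single-quoted (optionally N-prefixed) string. It also assumes the logged query string is URL-encoded, with IIS writing spaces as '+', so the line is decoded with urllib.parse.unquote_plus first. The function name is hypothetical.

```python
import re
from typing import List
from urllib.parse import unquote_plus

# xp_cmdshell followed by a quoted command; '' inside is an escaped quote.
XP_CMDSHELL = re.compile(r"xp_cmdshell\s*N?'((?:[^']|'')*)'", re.IGNORECASE)


def extract_cmdshell_commands(log_line: str) -> List[str]:
    """Return the commands passed to xp_cmdshell in one Web server log line."""
    # Undo URL encoding (%27 -> ', + -> space) before matching.
    decoded = unquote_plus(log_line)
    # Turn doubled single quotes (SQL escaping) back into literal quotes.
    return [m.group(1).replace("''", "'") for m in XP_CMDSHELL.finditer(decoded)]
```

Running this over every line that matched an xp_cmdshell keyword search, and keeping each entry’s date and time, yields a rough sequence of the reconnaissance and download commands the attacker issued.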

Tip:

It is important to note that during a SQL injection attack, the Web server itself is not actually compromised or directly accessed by the attacker. The attacker issues specially crafted queries to the Web server, which then forwards those queries to the database for processing. Another important point to keep in mind when examining Web server log files is that the Web server status or response code does not indicate whether the SQL injection code succeeded.

As the year rolled into spring 2008, the news media published a number of articles that described the use of SQL injection to subvert Web servers by injecting malicious JavaScript files into the Web server pages. Although this coverage highlighted the SQL injection issue, it largely ignored the arguably more malicious attacks that continued to lead to complete subversion of the victim’s network infrastructure. Analysts started to see more pervasive attacks. There was also a marked increase in the sophistication of the SQL injection techniques used, as attackers were no longer using plain-text ASCII commands. Keyword searches were not finding any hits on xp_cmdshell, even when it was clear that some form of access similar to what SQL injection provides had been achieved. A closer look revealed that attackers were now using DECLARE and CAST statements to encode their commands as hexadecimal strings, or as URL-encoded character sequences (e.g., "%20" is equivalent to a space). Other distinctive terms, such as nvarchar, were also being used in the SQL injection statements. For an example of what such a log file entry would look like, see the blog post "The tao of SQL Injection exploits" (http://dominoyesmaybe.blogspot.com/2008/05/tao-of-sql-injection-exploits.html). As a result of these adaptations, new analysis and detection techniques needed to be developed.
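Once an encoded entry has been found, the hexadecimal payload can be decoded to see what the attacker actually ran. Here is a minimal sketch of that decoding. It assumes the payload appears in the log as CAST(0x... AS NVARCHAR(...)) or CAST(0x... AS VARCHAR(...)), possibly URL-encoded. It also assumes NVARCHAR data is UTF-16LE (which is how SQL Server stores it) and treats VARCHAR data as single-byte Latin-1 text. The function name is hypothetical.

```python
import re
from typing import List
from urllib.parse import unquote_plus

# CAST(0x<hex> AS NVARCHAR...) or CAST(0x<hex> AS VARCHAR...)
CAST_HEX = re.compile(r"CAST\(\s*0x([0-9A-Fa-f]+)\s+AS\s+(N?VARCHAR)", re.IGNORECASE)


def decode_cast_payloads(log_line: str) -> List[str]:
    """Return the decoded text of each hex-encoded CAST payload in a log line."""
    decoded = unquote_plus(log_line)  # %20 -> space, + -> space
    results = []
    for hex_digits, sql_type in CAST_HEX.findall(decoded):
        if len(hex_digits) % 2:
            continue  # odd digit count: truncated or malformed, skip it
        raw = bytes.fromhex(hex_digits)
        # NVARCHAR is UTF-16LE; VARCHAR is assumed here to be single-byte text.
        codec = "utf-16-le" if sql_type.upper() == "NVARCHAR" else "latin-1"
        results.append(raw.decode(codec, errors="replace"))
    return results
```

The decoded text can then be searched for the same keywords (xp_cmdshell, sp_password, and so on) that plain-text searches would have caught.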

By default, the IIS Web server will record its logs in a text-based format. This format consists of a number of fields, the sequence of which will appear in the #Fields: line at the top of the log file. One means of analysis that can be used to detect SQL injection attacks regardless of encoding is to parse through the logs, extracting the cs-uri-stem field, which is the target Web page, such as default.asp or jobs.asp. Then, for each unique cs-uri-stem field, keep track of the lengths of the cs-uri-query fields, which show the actual query entered for the target Web page. As SQL injection commands are very often much longer than the normal queries sent to those pages during regular activity, you can easily track down log entries of interest. The cs-uri-stem field can also be used to determine which Web pages were vulnerable to SQL injection attacks, based solely on the contents of the logs themselves.
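The length-based approach can be sketched as follows. It assumes each log entry has already been split into a dictionary keyed by the names on the #Fields: line, with cs-uri-stem and cs-uri-query present ("-" meaning no query). What counts as "much longer" is an assumption made for illustration: here, more than factor times the median query length seen for the same page. The function name and the default factor of 3.0 are hypothetical and should be tuned per case.

```python
from collections import defaultdict
from statistics import median
from typing import Dict, Iterable, List, Tuple


def long_queries(entries: Iterable[Dict[str, str]], factor: float = 3.0) -> List[Tuple[str, int, str]]:
    """Flag (page, query_length, query) where a query is unusually long for its page."""
    by_stem: Dict[str, List[Tuple[int, str]]] = defaultdict(list)
    for e in entries:
        query = e.get("cs-uri-query", "-")
        length = 0 if query == "-" else len(query)
        by_stem[e.get("cs-uri-stem", "").lower()].append((length, query))
    flagged = []
    for stem, items in by_stem.items():
        typical = median(length for length, _ in items)
        for length, query in items:
            # max(..., 1) keeps pages that normally have no query from flagging everything.
            if length > factor * max(typical, 1):
                flagged.append((stem, length, query))
    return flagged
```

Because the baseline is computed per page, the pages that show up in the results also point toward which pages may have been targeted.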

You can search for a number of other issues based on various keywords or phrases. For example, the existence of vti_auth\author.dll in the Web server logs can indicate the issue (http://xforce.iss.net/xforce/xfdb/3682) with the permissions on FrontPage extensions that can lead to Web page defacements. Other signatures I have used in the past to look for the Nimda (www.cert.org/advisories/CA-2001-26.html) worm (see the "System Footprint" section in the CERT advisory) included attempts to execute cmd.exe and tftp.exe via URLs submitted to the Web server.
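Signature searches like these are easy to keep in a small, extensible table. Here is a minimal sketch. The three signatures come from the examples in the text, and the names given to them are hypothetical. Each line is URL-decoded up to three times before matching, an assumption meant to catch double-encoded requests; the decoding depth is arbitrary.

```python
import re
from typing import Dict, Iterable, List, Tuple
from urllib.parse import unquote

# Example signatures drawn from the text; add or remove entries per case.
SIGNATURES: Dict[str, re.Pattern] = {
    "frontpage_author_dll": re.compile(r"author\.dll", re.IGNORECASE),
    "cmd_exe_in_url": re.compile(r"cmd\.exe", re.IGNORECASE),
    "tftp_exe_in_url": re.compile(r"tftp\.exe", re.IGNORECASE),
}


def match_signatures(lines: Iterable[str]) -> List[Tuple[int, str]]:
    """Return (line_number, signature_name) for every signature hit."""
    hits = []
    for lineno, line in enumerate(lines, 1):
        text = line
        # Peel off up to three layers of %-encoding, stopping when nothing changes.
        for _ in range(3):
            decoded = unquote(text)
            if decoded == text:
                break
            text = decoded
        for name, regex in SIGNATURES.items():
            if regex.search(text):
                hits.append((lineno, name))
    return hits
```

Keeping the signatures in a table separates "what to look for," which varies by case, from the search loop itself.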

Notes from the Field…

Web Server Logs

A number of engagements have involved Web server log analysis, and in several instances I’ve seen clear indications of the use of automated Web server scanning applications based on the "footprints" of the application in the logs. When I find these entries, I like to ask the Web server administrator whether the organization is subject to scans based on regulatory compliance requirements. Sometimes the response is "yes," and the dates and IP addresses of the scans can be tied directly to authorized activity, but this is not always the case.

Analysis of IIS (and other Web server) logs can be an expansive subject, one that could fill an entire book on its own. However, as with most log files, the principles of data reduction remain the same: remove all the entries that you know should be there, accounting for legitimate activity. Or, if you know (or at least have an idea of) what you’re looking for, you can use signatures to look for indications of specific activity.

Notes from the Field…

FTP Logs

I was assisting with an investigation in which someone had access to a Windows system via a remote management utility (such as WinVNC or pcAnywhere) and used the installed Microsoft FTP server to transfer files to and from the system. As with the IIS Web server, the FTP server maintains its logs in the LogFiles directory, beneath the MSFTPSVCx subdirectory. There were no indications that the individual did anything to attempt to hide or obfuscate his presence on the system, and we were able to develop a timeline of activity using the FTP logs as an initial reference. Thanks to the default FTP log format, we had the date/time stamp of his visits and the username he used. We also had the IP address from which his connections originated. We correlated that information with date/time stamps from activity in the Registry (e.g., UserAssist keys) and the Event Logs (several event ID 10 entries stated that the FTP connection had timed out due to inactivity). Together, these gave a clearer picture of this individual’s activity on the system.
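Here is a rough sketch of pulling login events out of FTP logs for a timeline like this one. Several assumptions apply and should be checked against the actual logs. The logs are taken to be in W3C Extended format, with the field order given by #Fields: lines. If there is no date field, the date is taken from the #Date: directive. Each FTP command is assumed to be logged with c-ip, cs-method, and cs-uri-stem, where a USER command carries the username in cs-uri-stem. Some versions may prefix the method with a bracketed session number such as "[1]", which the sketch strips if present. As with Web logs, the times are likely to be GMT. The function name is hypothetical.

```python
import re
from typing import Iterable, List, Tuple

SESSION_PREFIX = re.compile(r"^\[\d+\]")  # optional "[n]" session number


def ftp_logins(lines: Iterable[str]) -> List[Tuple[str, str, str]]:
    """Return (timestamp, client_ip, username) for each USER command logged."""
    fields: List[str] = []
    header_date = ""
    events = []
    for line in lines:
        line = line.strip()
        if line.startswith("#Date:"):
            parts = line[len("#Date:"):].split()
            header_date = parts[0] if parts else ""  # keep just the date part
        elif line.startswith("#Fields:"):
            fields = line[len("#Fields:"):].split()
        elif line and not line.startswith("#") and fields:
            entry = dict(zip(fields, line.split()))
            method = SESSION_PREFIX.sub("", entry.get("cs-method", "")).upper()
            if method == "USER":
                stamp = f"{entry.get('date', header_date)} {entry.get('time', '')}".strip()
                events.append((stamp, entry.get("c-ip", ""), entry.get("cs-uri-stem", "")))
    return events
```

The resulting tuples can be merged with Registry and Event Log time stamps to build the kind of correlated timeline described above.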

Log Parser

Now that we’ve discussed both the Windows Event Logs and the IIS Web server logs, it’s a good time to mention a tool that Microsoft produced that has been extremely useful to analysts (even though it does not get a great deal of attention from the vendor). That tool is Log Parser (the URL is quite long and may change, so suffice it to say that the best way to locate a link to the tool is to Google for log parser). It is a powerful tool that lets you use a SQL-like query syntax to search a number of text- or XML-based files, as well as binary files such as Event Logs and the Registry, and output the data into text, SQL, or even syslog format.

To make the tool easier to use, a Visual Log Parser GUI is available from www.codeplex.com/visuallogparser.

Also, a number of resources are available that provide examples of the use of Log Parser at varying levels of complexity, such as the Windows Dev Center (www.windowsdevcenter.com/pub/a/windows/2005/07/12/logparser.html). I have heard of analysts who use Log Parser as a matter of course, as well as those who use it for specific tasks, such as parsing through significant numbers of Event Logs from multiple systems.

Web Browser History

On the opposite end of the Web server logs is the Internet Explorer Web browsing history. Internet Explorer is installed by default on Windows systems and is the default browser for many users. In some cases, as with corporate users, some corporate intranet Web sites (for submitting timecard information or travel expenses) could be specifically designed for use with Internet Explorer, with other browsers (such as Firefox and Opera) not supported. When Internet Explorer is used to browse the Web, it keeps a history of its activity that the investigator can use to develop an understanding of the user’s activity as well as to obtain evidence. The Internet Explorer browser history files are saved in the user’s profile directory, beneath the Local Settings\Temporary Internet Files\Content.IE5 subdirectory. Beneath this directory path, the investigator might find several subdirectories with names containing eight random characters. The structure and contents of these directories, including the structure of the index.dat files within each of these directories, have been covered at great length in other resources, so we won’t repeat that information here. For live systems, investigators can use the Web Historian tool (Version 1.3 is available from Mandiant.com at the time of this writing) to parse the Web browser history. When examining an image, the investigator can use tools such as ProDiscover’s Internet History Viewer to consolidate the browser history information into something that is easy to view and understand. The index.dat file from each subdirectory (either from a live system or when extracted from an image) can be viewed using tools such as Index Dat Spy (www.stevengould.org/index.php?option=com_content&task=view&id=57&Itemid=220) and Index.dat Analyzer (www.systenance.com/indexdat.php).

Tip::

Often when you’re conducting an investigation, there are places you can look for information about what you should expect to see. For example, suppose auditing on a system is set to record successful logons, and you can see from the Registry when various users last logged on. You should then expect to see successful logon event records in the Security Event Log. With regard to Internet browsing history, Internet Explorer has a setting for the number of days it will keep the history of visited URLs. You can find that setting in the user’s hive (the ntuser.dat file, or the HKEY_CURRENT_USER hive if the user is logged on), in the \Software\Microsoft\Windows\CurrentVersion\Internet Settings\URL History key. The value in question is DaysToKeep, and the default setting is 0x14, or 20 in decimal notation. If the data associated with the value is not the default setting, you can assume that the value has been changed. This was most likely done by choosing Tools from the Internet Explorer menu bar, selecting Internet Options, and then adjusting the History section of the General tab. The LastWrite time for the Registry key tells you when the key was last modified, which may indicate when the value was changed.
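On a live system, the DaysToKeep check described in the tip above can be scripted with Python’s standard winreg module (Windows only). Here is a minimal sketch. It reads the value for the logged-on user and converts the key’s last-modified time, which winreg.QueryInfoKey returns as a FILETIME integer, into a UTC datetime. An ntuser.dat file extracted from an image would instead need a Registry hive parser, which is not shown. The function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Tuple

DEFAULT_DAYS_TO_KEEP = 0x14  # 20 days, the default cited in the text


def filetime_to_datetime(filetime: int) -> datetime:
    """Convert a Windows FILETIME (100-ns intervals since 1601-01-01 UTC)."""
    return datetime(1601, 1, 1, tzinfo=timezone.utc) + timedelta(microseconds=filetime // 10)


def read_days_to_keep() -> Tuple[int, datetime, bool]:
    """Live system only: return (DaysToKeep, key LastWrite time, changed_from_default)."""
    import winreg  # Windows-only standard library module

    path = r"Software\Microsoft\Windows\CurrentVersion\Internet Settings\Url History"
    with winreg.OpenKey(winreg.HKEY_CURRENT_USER, path) as key:
        days, _value_type = winreg.QueryValueEx(key, "DaysToKeep")
        # The third item from QueryInfoKey is the key's last-modified FILETIME.
        last_write = filetime_to_datetime(winreg.QueryInfoKey(key)[2])
    return days, last_write, days != DEFAULT_DAYS_TO_KEEP
```

If the third item returned is True, the LastWrite time gives a starting point for when the history setting may have been changed.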

Many investigators are familiar with using the Internet browsing history as a way of documenting a user’s activities. For example, you could find references to sites from which malicious software tools may be downloaded, MySpace.com, or other sites that the user should not be browsing. As with most aspects of forensic artifacts on a system, what you look for as "evidence" really depends on the nature of your case. However, nothing should be overlooked; small bits of information can provide clues or context to your evidence or to the case as a whole. Also, not all users use the Internet Explorer browser, and a number of other browsers are available, including Mozilla, Firefox, Opera, and Google’s Chrome. Freely available tools from NirSoft (www.nirsoft.net/utils/) allow you to view the history, cache, and cookie archives for a number of browsers, as well as (in some cases) retrieve passwords maintained by the browser itself. All of these tools can provide extremely valuable information during an examination.

Other tools available for forensic analysis of browsers include Firefox Forensics (F3) and Google Chrome Forensics, both available (for a fee) from Machor Software (www.machor-software.com/home), and Historian from Gaijin (free, in German, from www.gaijin.at/dlhistorian.php). A number of articles that cover Web browser forensics are also available, such as John McCash’s SANS Forensics blog post on Safari forensics (http://sansforensics.wordpress.com/2008/10/22/safari-browser-forensics/), as well as a two-part series of articles on Web browser forensics from Keith Jones and Rohyt Belani at the SecurityFocus Web site (part 1 is at www.securityfocus.com/infocus/1827). Searching Google for browser forensics reveals a great deal of information, although some is not as detailed as the articles I’ve mentioned. However, a great deal of work is being performed and documented in this area, so keep your eyes open.

Other Log Files

Windows systems maintain a number of other, less well-known log files, during both the initial installation of the operating system and day-to-day operations. Some of these log files are intended to record actions and errors that occur during the setup process. Other log files are generated or appended to only when certain events occur. These log files can be extremely valuable to an investigator who understands not only that they exist but also what activities cause their creation or expansion and how to parse and understand the information they contain. In this section, we’re going to take a look at several of these log files.

Setuplog.txt

The setuplog.txt file, located in the Windows directory, is used to record information during the setup process, when Windows is installed. Perhaps the most important thing to an investigator about this file is that it maintains a time stamp on all the actions that are recorded, telling you the date and time the system was installed. This information can help you establish a timeline of activity on the system.

An excerpt of a setuplog.txt file from a Windows XP SP2 system is shown here:

Warning::

While writing this section, I made sure to look at the various versions of Windows with regard to the setuplog.txt file. On Windows 2000, the file had time stamps, but no dates were included. On Windows XP and 2003, the contents of the file were similar, in that each entry had a time stamp with a date. I did not find a setuplog.txt file on Vista.

As you can see from the excerpt of the setuplog.txt file from an XP system, the date and time stamp are included with each entry.

Warning::

If you’re analyzing an image from a system and the time stamps that you see in the setuplog.txt file don’t seem to make sense (e.g., the timeline doesn’t correspond to other information you’ve collected), the system might have been installed via a ghosted image or restored from backup. Keep in mind that the setuplog.txt file records activity during an installation, so the operating system must actually have been installed on that system for the file to provide useful information about it.

Setupact.log

The setupact.log file, located in the Windows directory, maintains a list of actions that occurred during the graphical portion of the setup process. On Windows 2000, XP, and 2003, this file has no time stamps associated with the various actions that are recorded, but the dates that the file was created and last modified will provide the investigator with a clue as to when the operating system was installed. On Vista, this file contains entries that do include time and date stamps on many of the actions that are recorded.

Setupapi.log

The setupapi.log file (maintained in the Windows directory) maintains a record of device, service pack, and hotfix installations on a Windows system. Logging on Windows XP and later versions of Windows is more extensive than on previous versions, and although Microsoft uses this file primarily for troubleshooting purposes, the information in this file can be extremely useful to an investigator.

Microsoft maintains a document called "Troubleshooting Device Installation with the SetupAPI Log File" that provides a good deal of extremely useful information about the setupapi.log file. For example, the setupapi.log file contains a Windows installation header section that lists the operating system version along with other information. If the setupapi.log file is deleted for any reason, the operating system creates a new file and inserts a new Windows installation header.

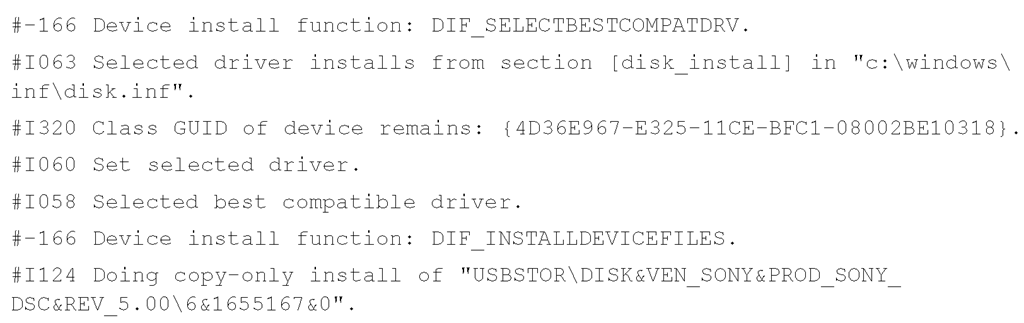

Device installations are also recorded in this file, along with time stamps that an investigator can use to track this sort of activity on the system. When a particular kind of device is first attached to the system, a driver has to be located and loaded to support the device. In instances where multiple devices of the same type are attached to a Windows system, only the first device attached will cause the driver to be located; subsequent devices of the same type that are connected to the system will result only in updates to the Registry. Take a look at this excerpt from a setupapi.log file:

From this log file excerpt, we can see that a USB removable storage device manufactured by Sony was first connected to the system on October 18, 2006. The date and time stamp from the "Driver Install" section shows us when the device was first plugged into the system. We can use that along with the LastWrite time of the appropriate Registry key to develop a timeline of when the device was used on the system.
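Finding these first-connection times across a long setupapi.log can be scripted. Here is a minimal sketch. It assumes the Windows XP-style section header: a bracketed line with the date (YYYY/MM/DD), time, a numeric process/thread identifier, and a section title such as "Driver Install". Other versions of Windows may use a different layout, so verify the header format against the file you are examining. It reports the first line in each Driver Install section that mentions a keyword (USBSTOR by default, an assumed choice for USB storage devices). The function name is hypothetical.

```python
import re
from typing import Iterable, List, Optional, Tuple

# Assumed XP-style header, e.g. "[2006/10/18 09:15:42 1084.3 Driver Install]"
SECTION = re.compile(r"^\[(\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}) [\d.]+ (.+)\]$")


def device_install_times(lines: Iterable[str], keyword: str = "USBSTOR") -> List[Tuple[str, str]]:
    """Return (section_timestamp, first matching line) per Driver Install section."""
    results = []
    stamp: Optional[str] = None
    title = ""
    for line in lines:
        line = line.rstrip("\r\n")
        m = SECTION.match(line)
        if m:
            stamp, title = m.group(1), m.group(2)
            continue
        if stamp and title == "Driver Install" and keyword.lower() in line.lower():
            results.append((stamp, line.strip()))
            stamp = None  # report only the first match in this section
    return results
```

Each returned time stamp can then be compared with the LastWrite time of the corresponding device key in the Registry.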