Priority Queuing

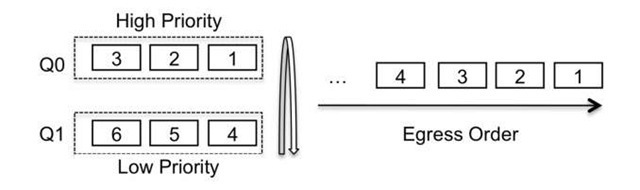

For applications that are sensitive to delay and that are not able to handle packet loss, the priority queuing (PQ) scheduling algorithm provides a simple method of supporting differentiated service classes. After a classification scheme has classified the packets and placed them into different queues with different priorities, the PQ algorithm handles the scheduling of the packets following a priority-based model. Packets are scheduled to be transmitted from the head of a given queue only if all queues of higher priority are empty. So the different levels of priority assigned to queues introduces the unfairness desired regarding of how queues are serviced. However, inside a single priority queue, packets are still scheduled in a FIFO order, as with any other queuing and scheduling method. The PQ algorithm operation is illustrated in Figure 7.7.

In Figure 7.7, queue zero (Q0) has the highest priority, so as long as there are packets in Q0, the scheduler serves only this queue. Queue one (Q1) has a low priority, so packets are removed from this queue only when Q0 is empty.

PQ offers benefits for traffic that requires no packet loss and low delay. With PQ, such applications can be selectively scheduled and their service can be differentiated from the bulk of the best-effort flows.

Figure 7.7 PQ scheduling

The PQ requirements for queuing and scheduling are minimal and not very complex compared with other, more elaborate queuing disciplines that also offer differentiated service.

However, PQ has its own set of limitations. If the volume of high- priority traffic becomes excessive, lower- priority queues may face a complete resource starvation. If their queuing rate remains constant but the rate of removal from the queue decreases, packet drops start to occur, either from the tail or the head of the queue, or possibly both. The drop rate for traffic placed in low-priority queues increases as the buffer space allocated to the low-priority queues starts to overflow. The result is not only packet drops but also increased latency. Regarding TCP sessions, the state of TCP traffic may become stale if it has to be retransmitted. Also, introducing such a conservative queuing discipline and scheduling mechanism can affect the network service to such extent that the service for the whole network decreases because of starvation of the majority of traffic and applications.For example, for Session Initiation Protocol (SIP) traffic, the PQ algorithm can prioritize SIP traffic. However, if DHCP offers cannot reach users and DNS servers cannot resolve queries to reach SIP servers and users, the gains obtained by favoring SIP traffic are limited because no users are available to establish SIP sessions. In addition, misbehaving high-priority flows can add delay and jitter to other high-priority flows that share the same queue. Effectively, a real-time service comprises several different applications and packet types, and all these applications and packet types must receive equivalent service levels for the real-time service to operate properly. Having all one’s eggs in the same basket is not a design for today’s Internet and for large-scale enterprise networks.

Priority queuing can be implemented both in a strict mode and in a rate-controlled mode. For example, the priority queue rate can be policed to drop or reclassify traffic if the rate increases beyond certain thresholds. Such thresholds can be specified as a percentage of the link bandwidth or an explicit rate. This method avoids starvation of lower-priority queues and can also provide control over the delay inserted in traffic in high-priority queues by establishing a limit on the maximum amount of traffic that can be queued.

PQ has the following benefits:

• The PQ algorithm provides a simple method of supporting differentiated service classes, in which different queues are serviced differently according to the priority assigned.

The buffering and scheduling computational requirements are low and not very complex, even when implementing PQ on network equipment with high-speed links.

• Low-delay and loss-sensitive applications such as real-time traffic can be effectively protected from other greedy traffic flows such as TCP sessions, and low delay and jitter can be maintained for the traffic classified as high priority.

PQ has the following limitations:

• Because of its absolute allocation of resource and scheduling services to the priority-classified traffic, the PQ algorithm can result in network malfunctioning because low-priority traffic becomes stalled. The cost of giving certain traffic a higher priority comes at the expense of penalizing lower-priority traffic.

• High-priority traffic must be very well provisioned and rate-controlled. Otherwise, service breakdowns can result for all traffic that is not high priority.

Weighted Fair Queuing

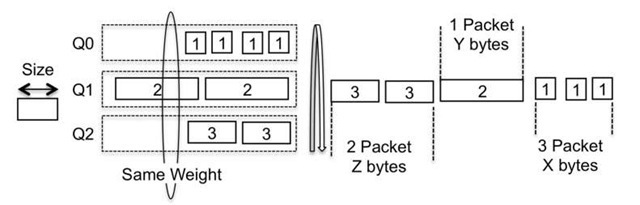

Weighted fair queuing (WFQ) is commonly referred as "bit-by-bit round robin," because it implements a queuing and scheduling mechanism in which the queue servicing is based on bits instead of packets. WFQ was developed in 1989 by Demers, Keshav, Shenke, and Zhang, and emulates the Generic Processor Sharing (GPS) concepts of virtual processing time. In WFQ, each queue or flow is allocated a weight that is a proportion of the interface rate or the shaping rate. WFQ is aware of packet sizes and can thus support variable-sized packets. The benefit is that sessions with big packets do not get more scheduling time than sessions with smaller packets, because effectively the focus of WFQ is bits and not packets. So there is no unfairness in the scheduling for sessions with smaller packet sizes. With WFQ, each queue is scheduled based on a computation performed on the bits of each packet at the head of the queue. Because the traffic computation is done based on stream of bits and not of packets, and because what the router receives and transmits are indeed packets, WFQ implicitly increases complexity. Figure 7.8 illustrates the WFQ scheduling principle.

In Figure 7.8, all queues have the same weight, and thus the number of bytes scheduled in each scheduling turn is the same for all queues and reflects the weight value. Queue zero (Q0) removes three packets with a total value of X bytes, queue one (Q1) removes one packet with Y bytes, and queue two (Q2) removes two packets with Z bytes. The weight factor is effectively an allowance for how many resources can be assigned and used.

The drawback of WFQ is that it is very resource-intensive because of the bit computations. The original WFQ idea also consumes many resources because the flows are not aggregated into classes with limited queues. Instead, each flow or stream gets its own queue or buffer quota, similar to FQ. Because of these high resource demands and the complex computation needed to check the state for each flow and its packets, WFQ has been implemented more on CPU-based platforms whose queuing disciplines are based on bus-based architectures.

Figure 7.8 WFQ scheduling

WFQ has the following benefits:

• The implementations based on WFQ algorithm provide service differentiation between classes and their aggregated traffic, rather than merely differentiating between individual flows. A weight allocated to each class divides the scheduling and bandwidth ratio for each class. Also, because WFQ is bits aware, it can handle packets of variable lengths.

WFQ has the following limitations:

• The original WFQ design is more of a queuing theory. The existing implementations do not follow the original concept in which each flow is allocated a weight. Instead, flows are aggregated by being classified into different service classes, and these classes are then assigned to queues.

• WFQ is extremely complicated to implement, as is FQ. Maintaining state information and computing the hash table are resource – intensive tasks.

Weighted Round Robin

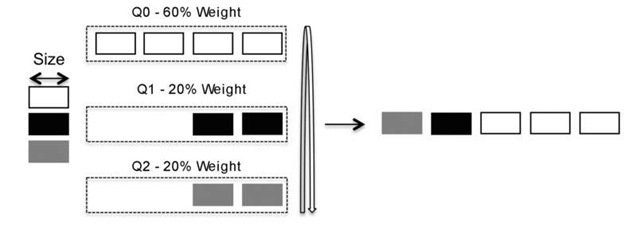

Weighted round robin (WRR) is a scheduling discipline that addresses the shortcomings of PQ and FQ. The basic concept of WRR is that it handles the scheduling for classes that require different bandwidth. WRR accomplishes this by allowing several packets to be removed from a queue each time that queue receives a scheduling turn. WRR also addresses the issue with PQ in which one queue can starve queues that are not high-priority queues. WRR does this by allowing at least one packet to be removed from each queue containing packets in each scheduling turn. At first glance, it may seem that WRR is very similar to WFQ. The difference between the two is that WFQ services bits at each scheduling turn, whereas WRR handles packets in each scheduling turn. The number of packets to be serviced in each scheduling turn is decided by the weight of the queue. The weight is usually a percentage of the interface bandwidth, thereby reflecting the service differences between the queues and the traffic classes assigned to those queues. Figure 7.9 illustrates WRR.

Figure 7.9 WRR scheduling

As illustrated in Figure 7.9 , three packets are removed from queue zero (Q0), one packet from both queue one (Q1) and queue two (Q2). The number of packets removed reflects the weight for the queue. As seen, Q0 has three times more weight than Q1 and Q2, then it removes three times more packets each scheduling turn.

WRR has no knowledge of the true sizes of the packets in the buffers that are to be scheduled. The queues and scheduling are generally optimized for an average packet size. However, the sizes are all just estimates and have no true meaning with regard to the actual traffic mix in each queue. This operation of WRR is both an advantage and an issue. Because WRR has no complex resources that demand state computation as with WFQ, which must transform bits to bandwidth scheduling, it is fairly simple to implement WRR. The result is a solution well-suited for handling a large number of flows and sessions, making WRR into something of a core QOS solution that can deal with large volumes of traffic and with congestion. The drawback of WRR is that it is unaware of bandwidth because it does not handle variable-sized packet. In terms of receiving a schedule turn, a 1500-byte packet is equivalent to a 64-byte packet. In practice, the packets’ weight counts only in each round of service scheduling. When the traffic mix is somewhat the same with regard to classes and queues, WRR is, over time, acceptably fair in its scheduling. However, in the short term, the differences can be great. And if traffic classes have large variations in traffic mix and traffic types, and thus large differences in packet sizes, the queuing situation can become quite unfair, favoring classes that are dominated by large packets. For example, a TCP-based traffic class can gain the advantage over a real-time traffic class whose packets are relatively small, as illustrated in Figure 7.10.

In Figure 7.10, queues Q1 and Q2 have the same weight. However, because the packets in Q1 are twice the size of packets in Q2, in reality Q1 ‘s bandwidth rate is twice that of Q2. WRR has the following benefits:

• The WRR algorithm is easy to implement.

• To implement differentiated service, each queue is allocated a weight that reflects the interface’s bandwidth. This scheme is fair because it avoids having one queue starve another queue and because it is possible to provide service priority by allocating various weights to the queues.

Figure 7.10 Comparing WRR with large and small packets

WRR has the following limitations:

• Because WRR is unaware of how different packet lengths are scheduled, scheduling can be unfair when queues have different sized packets. When one queue has mostly small packets while another has mostly big packets, more bandwidth is allocated to the queue with big packets.

• Services that have a very strict demand on delay and jitter can be affected by the scheduling order of other queues. WRR offers no priority levels in its scheduling.

Deficit Weighted Round Robin

With WRR for each scheduling turn, the number of packets that are granted service is based on a weight that reflects the bandwidth allocation for the queue. As discussed in the previous section, bandwidth allocation can be unfair when the average packet sizes are different between the queues and their flows. This behavior can result in service degradation for queues with smaller average packet sizes. Deficit Round Robin (DRR), or Deficit Weighted Round Robin (DWRR), is a modified weighted round-robin scheduling discipline that addresses the limitations of WRR. The original idea was proposed by M. Shreedhar and G. Varghese in 1995. Deficit algorithms are able to handle packets of variable size without knowing the mean size. A maximum packet size number is subtracted from the packet length, and packets that exceed that number are held back until the next scheduling turn.

While WRR serves every non-empty queue, DWRR serves packets at the head of every non-empty queue whose deficit counter is greater than the size of the packet at the head of the queue. If the deficit counter is lower, the queue is skipped and its credit value, called a quantum, is increased. The increased value is used to calculate the deficit counter the next time around when the scheduler examines the queue for service. If the queue is served, the credit is decremented by the size of packet being served.

Following are the key elements and parameters in implementing DWRR that affect the scheduling service for the queues:

• Weight, which is similar to WRR algorithm weight, reflects a proportion of the bandwidth on the outgoing interface.

• The quantum translates the weight value into bytes. With each scheduling turn, a value of quantum is added in proportion to the weight. Thus, the quantum is the throttle mechanism that allows the scheduling to be aware of the bandwidth.

• The value of credits can be positive or negative. Positive credits accrue when, at the end of a scheduler turn, there are leftover bytes that were not used in the queue ‘ s scheduling turn. This value is deferred to the queue’s next scheduling turn. Negative credits accrue when the queue has transmitted more than its bandwidth value in a scheduling turn, and thus the queue is in debt when the next scheduling turn comes around.

• The deficit counter, which provides bandwidth fairness, is the sum of the quantum and the credits. The scheduler removes packets until the deficit counter reaches a value of zero or until the size of the packets is larger than the remaining deficits. But if the queue does not have enough deficits to schedule a packet, the value of the deficit is retained until the next scheduling round, and the scheduler moves to the next queue.

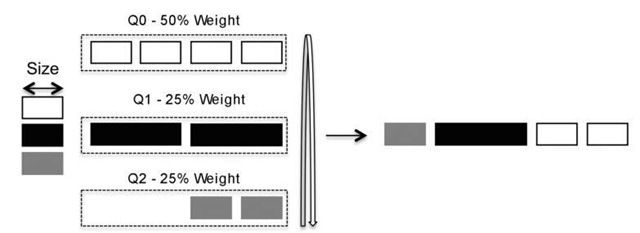

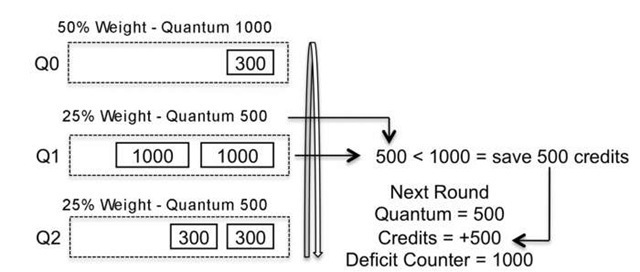

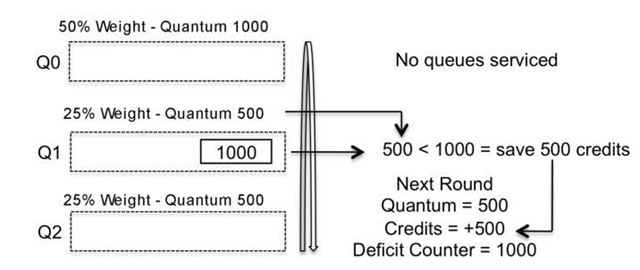

Let us go through DWRR in practice to illustrate its attributes. Consider a setup, as shown in Figure 7.11 with three queues with different weights that reflect the proportion of bandwidth of outgoing interface. Queue zero (Q0) has 50% of the bandwidth, and queues one (Q1) and two (Q2) each have 25% of the bandwidth. To simplify the example, all queues have an equal length. The queues have variable-sized packets and different numbers of packets. At each scheduling turn, credits are added to the quantum number of each queue that reflect the weight as a quota of the bandwidth.

Figure 7.11 DWRR scheduling configuration

Figure 7.12 DWRR scheduling, turn 1, Q0

Figure 7.13 DWRR scheduling, turn 1, Q1

Figure 7.12 shows the first scheduling turn and the process of dequeue for Q0. In Figure 7.12, we see that Q0 has three packets, two that are 300 bytes and one that is 500 bytes. Each scheduling turn, a quantum value of 1000 is added to Q0. In this turn, then, the deficit counter is 1000, which means that 1000 bytes can be subtracted from the queue. The 300-byte and 500-byte packets can be transmitted because they consume 800 credits from the 1000-credit bucket. But the last 300-byte packet cannot be transmitted in this turn, because 300 are bigger than the remaining 200 credits (1000 – 800 = 200). The result is that the scheduling jumps, in order, to the next queue with packets inside, and the 200 credits for Q0 are saved for next scheduling turn. Now let’s look at the next queue in order, Q1, as illustrated in Figure 7.13.

Q1 contains two 1000-byte packets, but the quantum per scheduling turn is 500 credits. This means that no packets can be transmitted in this scheduling cycle because not enough credits exist (500 < 1000). The result is that the deficit counter for Q1 is incremented with 500 credits for the next scheduling round. This example illustrates the power of deficit-style algorithms compared with plain round-robin systems. With DWRR, Q1 is actually punished because the available quantum and credit values are smaller than the actual packet sizes. This situation is proof that DWRR scheduling is aware of variable packet sizes, not just the number of packets to be queued. Let us move to the next queue in order, Q2 shown in Figure 7.14.

Figure 7.14 DWRR scheduling, turn 1, Q2

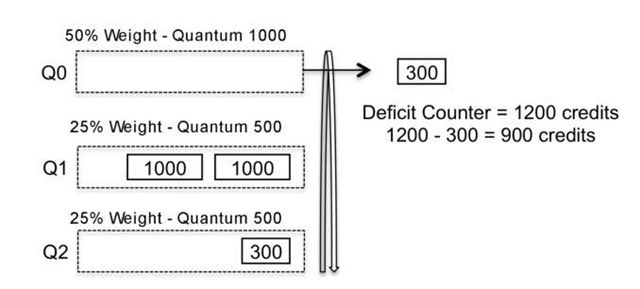

Figure 7.15 DWRR scheduling, turn 2, Q0

Queue 2 has a quantum value of 500, and because the packet at the head of the queue is 300 bytes, it is transmitted. The value of 300 is subtracted from the 500, resulting in 200 credits that are saved for the next scheduling turn. The scheduling wheel has now completed one full turn, and we are back at Q0, as illustrated in Figure 7.15 .

The Q0 deficit counter is now 1200. The 300-byte packet is transmitted and its value is subtracted from the deficit counter, resulting in a new value of 900 credits (see Figure 7.15). While implementations can vary, a common behavior is to set the deficit counter to zero if no packets are in the queue. Queues that behave this way are honored by the algorithm, but they receive no extra points for no work. You cannot get fame and glory unless the queue is filled with packets. Now the scheduler returns to the troubled Q1, from which no packets were transmitted in turn 1, as illustrated in Figure 7.16.

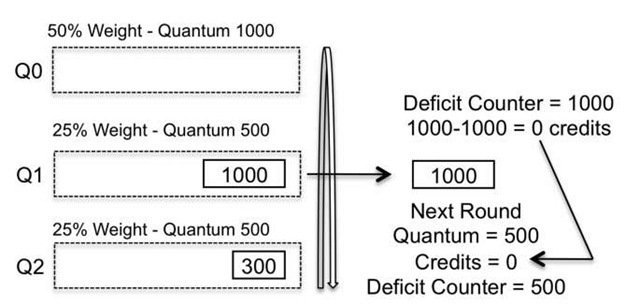

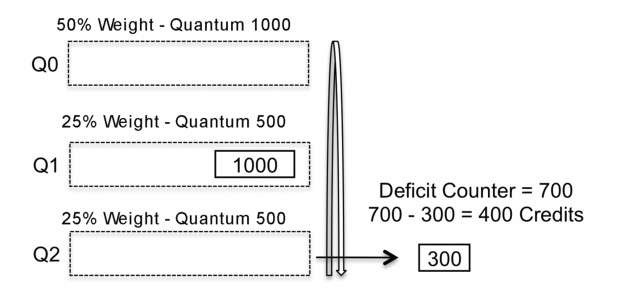

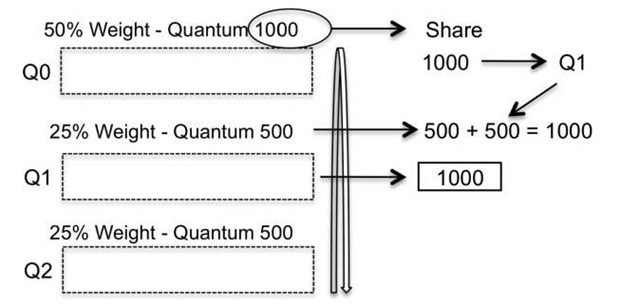

Finally, there is some good news for Q1. The updated quantum value is 500, and with the 500 credits saved from cycle 1, for a total of 1000 credits, Q1 can transmit one of the 1000-byte packets. Moving to Q2, the news is all good. The deficit counter is far bigger than the number bytes in the queue, so the last packet is transmitted, as illustrated in Figure 7.17.

Figure 7.16 DWRR scheduling, turn 2, Q1

Figure 7.17 DWRR scheduling, turn 2, Q2

Figure 7.18 DWRR scheduling, turn 3, Q1

Let us look at a third scheduling turn, which illustrates an interesting DWRR scenario. Q0 has no packets to schedule, so it is not serviced and the scheduler moves to Q1. This queue has the same problem, with big packets eating up the deficit counter, and Q1 cannot transmit its packet, as illustrated in Figure 7.18.

Scheduling turns, that is, bandwidth that has not been used by one queue can be shared with other queues. So for poor Q1, this is good news. The extra available quantum of credits from Q0 is shared with other queues that have packets to be transmitted, with the leftover quantum divided between the queues based on their weight: the more weight, the more of the extra quantum credits are allocated. Because Q2, had no packets in its buffer, the entire leftover quantum for Q0 is given to Q1. This is illustrated in Figure 7.19.

Figure 7.19 DWRR scheduling, turn 3, leftover bandwidth

How does a deficit-based system drop packets? The previous example did not explicitly examine this. In the packet-dropping schemes described earlier in this topic, packets can be dropped at the head of the queue when packets remain in the queue too long because of congestion, and packets can also be dropped from the tail when they cannot be placed into a full queue. This is also the process for DWRR. In the previous example, for ease of understanding, the credits were set to a low value. For the most part, the credit value reflects the MTU size of the outgoing interface. Thus, the credit refill rate should keep up with the queue refill rate to avoid a complete forwarding stalemate in the queue.

An alternative way to describe the state in which no scheduling occurs is with the concept of negative versus positive credit states. When a queue enters a negative state, it does not receive any service because it exceeded its rate credit in its last scheduling turn. A queue can enter negative credit territory if the last packet could not be transmitted because of insufficient credits, resulting in a deficit counter that is negative. Once the queue is in this negative credit state, it cannot schedule packets until the quantum of credits added returns the currently negative deficit counter value to a positive number. The only way to get into positive credit territory is to have the queue build up enough credits to be able to schedule packets again. In summary, a queue does not receive any scheduling slots unless the deficit counter is greater than zero.

Let us consider the earlier scenario with Q1. Here, Q1 is implicitly punished because it has attempted to use more than its quantum of credit. The result is that in the scheduling turn 1, no packets are transmitted because the quantum values are less than the number of bytes in the queue. In such a scenario, packets drops occur at the head of the queue because the packets stay in the queue too long and age out. In the case of severe congestion, clearing of the queue cannot keep up with the rate at which the queue is filled.

With the logic of negative credits, a queue is allowed to have a negative value for the deficit counter. Figure 7.20 shows that Q1 receives a negative credit value during the first scheduling turn because the 1000-byte packet is bigger than the quantum of 500. The result is a deficit counter- value of – 500.

Figure 7.20 DWRR scheduling, turn 1, Q2 with negative credits

At the next scheduling turn for Q1, the state is negative and the quantum of 500 is not higher than the deficit counter, so no packets are scheduled. Because the deficit counter is less than zero, the scheduling algorithm then skips to next queue. The good news, however, for Q1 is that at the third scheduling turn, the queue has a positive credit state and could schedule packets again. Whether Q1 actually drops the 1000-byte packet while it is waiting to receive a scheduling turn depends on the buffer depth and the fill rate of the queue. The negative versus positive credit state is a way to honor well-behaved queues and punish poorly behaved ones. DWRR has the following benefit:

• The DWRR algorithms limit the shortcomings with WRR because they are a more modern form of WFQ that incorporates scheduling aware of bits and packets.

DWRR has the following limitation:

• Services that have very strict demand on delay and jitter can be affected by other queues by the scheduling order. DWRR has no way to prioritize its scheduling.