Throughout this topic, we have always referred to the queuing and scheduling tool as the star of the QOS realm, the tool that makes crystal clear the principle of benefiting some at the expense of others. In this topic, we analyze the internals of the queuing and scheduling mechanism that allow such differentiation. But first, we analyze the parameters associated with any queuing and scheduling mechanism and their common ground. Only then do we present the different queuing and scheduling mechanisms. The most commonly deployed mechanism is PB-DWRR, which stands for priority-based deficit weighted round robin. However, we also present all other mechanisms that have preceded PB-DWRR because analyzing their pros and cons provides the necessary clues to understand the roots and goals of PB-DWRR and why the industry has moved towards it.

Queuing and Scheduling Concepts

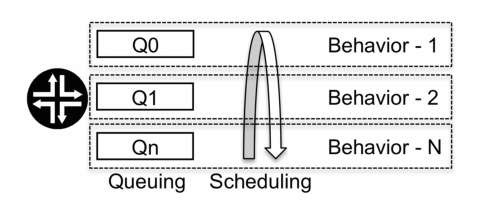

Queuing and scheduling applied to an interface allows traffic to be split into multiple queues so that the scheduler can decide which type of treatment the traffic inside each queue receives. If the traffic mapped to each queue belongs to a specific class of service, the scheduler can apply differentiated behavior to different classes of service, as illustrated in Figure 7.1.

The above is a quick recap of what has been previously discussed throughout this topic, so let us now dive more deeply into the internals of queuing and scheduling.

The two most important parameters associated with the queuing and scheduling mechanism are buffers and bandwidth. Buffering is the length of the queue, that is, how much memory is available to store packets. However, the entire packet does not need to be queued, as we see later; sometimes what is stored is a notification, which is a representation of the packet contents. The buffering value can be defined either as a time value during which packets are accepted on the interface on which queuing is enabled or as a physical size in terms of how many packets or packet notifications can reside in the queue at the same time. The buffering value is a quota of available memory and can be defined as milliseconds of traffic or absolute numbers of packets.

Figure 7.1 Queuing and scheduling applying different behaviors

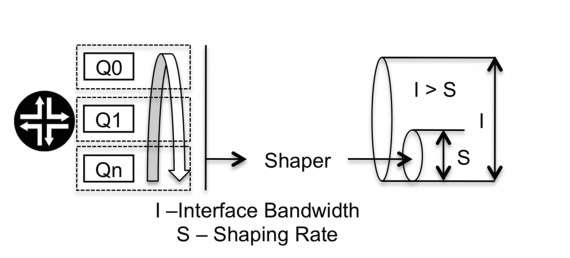

Figure 7.2 Bandwidth parameter in queuing and scheduling

The bandwidth parameter refers to the scheduling part of the equation. A total amount of bandwidth is made available to the queuing and scheduling mechanism. Scheduling determines how much is allocated to each queue. The total amount of bandwidth can be either the interface speed or the shaping rate if a shaper is applied after the scheduler, as illustrated in Figure 7.2

The queuing and scheduling discipline used determines how the resources are allocated. The requirement for the presence of queuing and scheduling is typically controlled by the presence of congestion. If resources are sufficient and there is no competition for resources, there is no need for queuing. One way to create congestion is to place more traffic on an interface than the outgoing line speed can support. Congestion can also be created artificially, by applying a shaping rate to the interface that imposes a speed limit lower than the maximum interface line speed. The leftover traffic is throttled or back- pressured into memory, which is then partitioned across the actual queues. The scheduler then services the queues and is responsible for the rate at which packets from each queue are transmitted.

A packet enters a queue at the tail, remains in the queue until it reaches the head, and then leaves the queue. In a queuing system, packets can be dropped from either the tail or the head of the queue, and can even be dropped from both at the same time. Most commonly, packets are dropped from the tail. When the queue buffer fill rate is much faster than the removal rate, the buffer fills up completely. The result is that no more packets can be placed in the buffer, and any newly arrived packet needs to be dropped.

Figure 7.3 Tail and head aging drops in a queue

But a queue can also drop packets from the head. Packets at the head of the queue are the ones that have moved from the tail to the head, and thus are those that have stayed in the queue for the longest amount of time. In case of extreme congestion and resource starvation, the queue receives no scheduling slots. To avoid stalling the queue, and having a hopelessly long delay for the traffic inside the queue, a maximum time is enforced on all packets in a queue for how long they are allowed to remain in the queue, waiting to be scheduled. The term for this is packet aging, meaning that the packets are aged out from the queue buffer because there is no point in trying to deliver them.

Tail drops and packet aging are not mutually exclusive. If, to drain the queue, the rate of dropping packets at the head of the queue cannot keep up with the rate at which packets are entering the queue at the tail, packet dropping can occur from both the tail and the head as a result of packet aging, as shown in Figure 7.3.

Packets and Cellification

Routers can handle packet queuing in two different ways, either queuing the entire packet or splitting it into fixed size cells, a process commonly called cellification. Queuing the entire packet is the older method and is conceptually simpler. It is commonly used in CPU processing-based forwarding routers. In this scenario, there is no split between the control and forwarding planes, which typically does not become a limiting factor if the supported interfaces are low- speed ones. However, queuing the entire packet has its drawbacks, which have led to the appearance and popularity of cellification. A packet has a variable length, where the minimum and maximum values are specified by the technology or optionally by the interface configuration. Also, a packet can contain several different headers. These possible variations in the packet length and in the number of possible headers create the challenge of how to manage memory resources. Also, the router CPU is affected, because every time the packet is processed, it needs to be read bit by bit. So different packet lengths imply different processing delays.

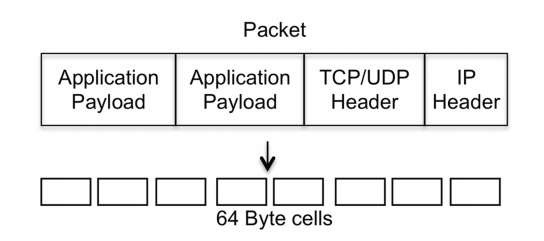

Figure 7.4 Cellification of a packet

The cellification process chops a packet into fixed-sized cells, which makes it easier for the router’s memory management to deal with the packets. It also provides consistency in terms of transmission times and slots for the buffering required. The cellification process in shown in Figure 7.4, which shows a packet containing several headers that is split into 64-bytes cells.

Cellification also has a special cell called the notification, also called the "cookie." The cookie contains the relevant pieces of the packet that are required for its processing inside the router. So instead of processing the entire packet every single time it needs to be evaluated by any tool, the router evaluates only the cookie. The idea is to create a representation of the packet with only the required information. For example, if an IP packet contains in its header the DSCP value of X, that is contained in the cookie. However, other information, e.g. the packet payload, may not be added to the cookie. The cookie contents are dynamic, so if the packet is assigned to a certain class of service, this information is written to the cookie. Also, if information contained in the packet header, such as the DSCP field, is changed, the cookie is updated as well. The cellification process is typically completely transparent both in terms of the router’s configuration and management. Nevertheless, it is important to understand its basics and the benefits it offers.

Different Types of Queuing Disciplines

Over a number of years, several mathematical studies have led to the design and creation of a variety of queuing algorithms. To describe all possible queuing mechanisms is impossible. However, some major well-known disciplines regarding scheduling are detailed in the next sections of this topic:

• First in, first out (FIFO) queuing

• Fair queuing (FQ)

• Priority queuing (PQ)

• Weighted fair queuing (WFQ)

• Weighted round robin (WRR)

• Deficit weighted round robin (DWRR)

• Priority-based deficit weighted round robin (PB-DWRR)

The most powerful of these and perhaps the most interesting is PB-DWRR. However, this topic discusses them all, because it is interesting to evaluate the pros and cons of each one and because doing so illuminates the evolutionary history of queuing algorithms and the reasons behind the popularity of PB-DWRR.

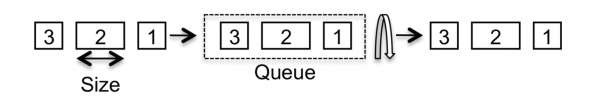

FIFO – First in, First out

FIFO is a well-known acronym for First In, First Out, and is probably the most basic queuing scheduling discipline. The principle behind FIFO queuing is that all packets are treated equally, by all being placed in the same queue. So in a nutshell, there is one queue and the scheduler only serves this queue. This mechanism implies that the removal rate is directly inherited from either the interface speed or from the shaping rate if there is a shaper applied. Because with any queuing mechanism there is no overtaking within a queue, the packets are serviced in the same order in which they were placed into the queue. This is illustrated in Figure 7.5.

For most router vendors, FIFO is probably the default behavior, with one queue for most transit traffic plus another one for locally generated control plane packets such as routing protocol packets.

Generally speaking, most hardware implementations need at least one buffer per interface to be able to build a packet before transmitting it. The function of the queue is to be a placeholder buffer that is used when the Layer 1 and Layer 2 headers are added to the packet. Providing a buffer that is the size of two to four packets helps the system to be able to utilize the interface efficiently and at line rate. FIFO service is predictable and the algorithm is simple to implement for a router or a switch. However, the FIFO algorithm is perhaps not a true "queuing and scheduling" solution because it does not provide any form of differentiated services, since traffic belonging to different classes of service share the same road lane (effectively the same queue) to the scheduler.

Under congestion conditions, FIFO queuing first introduces a delay as the queue starts to fill, and when the queue becomes full, all newly arrived packets are discarded. Here the implementation of the drop behavior can differentiate.

One alternative is to drop everything in the buffer to avoid having the queue collapse under the burden of stalled sessions. The reason for this behavior is to avoid a situation similar to the TCP silly syndrome, in which the receiving window gets smaller and smaller because most of the packets in the buffer memory are waiting to be processed.

Figure 7.5 FIFO scheduling

The result is that all sessions suffer service degradation, and the packets are stuck in the queue in the order in which they arrived. Despite the possible availability of large buffer memory, the buffers cannot be utilized properly because only a small number of senders’ congestion window (cwnd) can be used.

Another drop alternative is to use the more intelligent ways described earlier to clear the queue by dropping from the tail and by using packet aging. FIFO queuing has the following benefits:

• The FIFO algorithm is simple to implement.

• It provides acceptable performance if the queue saturation is low and short. In fact, FIFO can be seen as more of a burst-absorbing implementation than a true queuing strategy.

• If applications are TCP-based and not delay-sensitive, the process of removal from the queue is well suited because packet ordering is preserved at the cost of introducing delay. If moderate congestion occurs, TCP slows down because of RTT, but retransmissions are kept to a minimum.

FIFO queuing has the following limitations:

• FIFO provides no differentiated services. In cases of extreme congestion and buffer usage, all services are equally bad.

• Delay and jitter cannot be controlled because the queue depth usage can vary. Therefore, FIFO is not an appropriate solution for real-time applications. For example, a voice flow consisting of many packets might be stuck behind a 1500-byte TCP transfer with a large cwnd.

• Greedy flows can take up most of the queue depth and thus bursty flows can consume all available buffer space. Because TCP, by nature, tries to scale up the size of the transmitting window, session control is very difficult with a single queue or buffer.

Fair Queuing

The main disadvantage of FIFO queuing is that flows consisting of many packets can take up most of the bandwidth for less bandwidth-intensive applications, because FIFO does not separate flows or streams of packets. Fair queuing (FQ), also commonly called the fairness algorithm, is a scheduling algorithm that addresses the basic limitation of FIFO queuing. FQ classifies packet flows into multiple queues, offering a fair scheduling scheme for the flows to access the link. In this way, FQ separates traffic and flows, and avoids applications that consume less bandwidth being starved by applications that consume more bandwidth.

The scheduling is very much a statistical multiplexing process among the queues, with queues buffering packets that belong to different flows. FQ, defined by Nagle in 1985, takes into account packet size to ensure that each flow receives an equal opportunity to transmit an equal amount of data. Each queue is assigned the same weight; that is, the queues are scheduled with the same amount of bandwidth. This scheme avoids the problems of dealing with small and large packets in the queue, so the speed of removal from the queue is a function of a number of bits, not a function of a number of packets. So, for example, a queue with large packets has access to the same bandwidth as a queue with small packets, because in each scheduling turn the queue is serviced in terms of number of bits, not number of packets.

The main issue with the fairness algorithm is the fact that it is indeed fair, which may seem odd but it does invalidate the desired QOS goal of providing an uneven distribution of resources across different classes of service. If many queues must be visited in a fair order, flows that require low delay can suffer.

For routers to implement the FQ model, a hash function needs to separate flows from each other to map packets to a particular session. The hash function can compute the session or flow using a rich set of variables, such as a combination of the source port address, destination port addresses, and protocols, and possibly even higher-layer information beyond TCP, UDP, and port numbers. But once this classification is done, dynamically allocating memory and creating logical queues that exist only when the flow is active is a very resource-intensive task that is hard to implement when most of the packet forwarding takes place within a hardware-based architecture that has little interaction with processing resources. As a result, classifying and dynamically creating queues for each active hashed flow is simply not scalable.

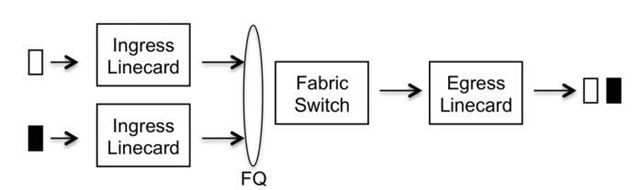

The fairness algorithm is a common tactic to handle oversubscribed backplanes on routers and switches. A common hardware architecture is to have a one-stage to two-stage fabric switch that connects line modules. In this scenario, incoming traffic from multiple ports is forwarded over a fabric to a single destination port. Here, fairness can ensure that the incoming ports on the line modules have equal access to the fabric so that they can be delivered to the ports on the outgoing module, as illustrated in Figure 7.6.

However, the fairness algorithm does not take into account the fact that the backplane is not aware of individual flows, which are the basis for the original idea of using FQ to create bandwidth fairness. So what is indeed implemented is a slight variation of the original FQ algorithm.

Figure 7.6 Fairness algorithm

The FQ algorithm implies that the data rate may fluctuate for short intervals, because the packets are delivered sequentially to achieve fairness in each scheduling cycle. This behavior can possibly lower the throughput, especially when compared to algorithms that focus more on maximum throughput. On the plus side, however, FQ is very effective in avoiding starvation of flows under heavy loads. FQ offers the following benefit:

• The FQ algorithm isolates each flow into its own logical queue. Thus, in theory, a greedy flow cannot affect other queues.

FQ has the following limitations:

• The FQ algorithm is extremely complicated to implement, and no example of a vendor implementation exists on high-speed routers or routers created to handle large numbers of sessions and large amounts of traffic. FQ is more of a theoretical construct than a practical paradigm.

• It is resource-intensive because many states and hashes must be computed and because memory must be allocated and reallocated based on changes in the session state.

• Delay and jitter can still be issues because each session hash is seen as a queue entity. If many sessions need to be scheduled, the multiplexing scheme used to determine the session slot interval can vary, depending on how many active sessions are actively transmitting packets. The wait time to the next scheduled service can be long if many sessions are active.