We also had another reason to assume an effect of ribonucleosides on the process of learning. Chemical specificity after learning may arise without invoking the complex mechanism of non-template protein synthesis. Peptides and many amino acids, the components of proteins, are neurotransmitters and mediate intercellular communication, while some components of RNA chains, the bases, participate in intracellular communication and are second messengers. Our data are in accord with a role for chemical interactions of second messengers in learning, which may integrate synaptic activity into a whole image. A chemical substrate of memory can be satisfactorily described as a mixture of simple substances within neurons. Functional interaction between chemoreceptors is an ancient mechanism. Even assemblies of bacterial chemoreceptors that control chemotaxis work in a highly cooperative manner [1163]. Although networks of biochemical pathways have often been thought of as being only neuromodulatory, these pathways can also integrate and transfer information themselves. The temporal dynamics of chemical signals are at least as fundamental as changes in electrical processes. Interacting chemical pathways could form both positive and negative feedback loops and exhibit emergent properties such as bistability, chaotic behavior and periodicity. The efficiency of a synapse probably depends on the combination of simultaneously activated synapses to which it belongs [1264, 1272, 1292, 1064]. This is shown, in particular, by the possibility of disinhibition with a reduction in stimulus strength and by means of intracellular microiontophoresis. If part of a population of initially activated excitatory synapses evokes a larger EPSP than the whole population, the EPSP cannot be represented as the sum of the potentials generated by each synapse separately, since in a simple sum of excitatory potentials the whole could not be smaller than its part. Chemical processes initiated in the synapses may perhaps interact in the neuron.

Simple enzymes ‘read’ the concentration of their substrate and produce a corresponding level of product, generating a monotonic relationship between input and output that saturates as the concentration rises. The activity of a protein can be altered by enzyme-catalyzed modification. Besides reacting to substrates, some proteins respond specifically to light, temperature, mechanical forces, voltage, pH, etc. Input-output relationships are often extremely rapid, taking less than 50 μs. However, the ‘wiring’ between molecules depends on the diffusion coefficient and is rather slow. Biochemical signals might be generated and act locally in cellular compartments, and these processes are sometimes as slow as seconds or minutes [611]. For small molecules, such as second messengers (e.g., cyclic AMP) and ions, diffusion through a cell is relatively rapid, 100 ms [162], and diffusion between neighboring synapses may be as prompt as 1 ms. General considerations about memorizing by a mixture of substances remain rather indeterminate. We tried to put these ideas into a model of neuronal memory, and for this it was necessary to modify the classical model of a neuron [815, 548].
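The monotonic, saturating input-output relationship of a simple enzyme can be illustrated with a short sketch. The Michaelis-Menten form used here is our assumption for the illustration (the text above only requires monotonicity and saturation), and the names `enzyme_output`, `v_max` and `k_m` are hypothetical.

```python
def enzyme_output(substrate, v_max=1.0, k_m=1.0):
    """Rate of product formation for a given substrate concentration.

    Assumed Michaelis-Menten form: the output grows monotonically with
    the substrate concentration and saturates at v_max.
    """
    return v_max * substrate / (k_m + substrate)


# Substrate concentrations in arbitrary units (illustrative values only).
concentrations = [0.0, 0.5, 1.0, 10.0, 100.0]
rates = [enzyme_output(s) for s in concentrations]

for s, r in zip(concentrations, rates):
    print(f"substrate = {s:6.1f}  ->  output = {r:.3f}")
```

The output rises with every increase in substrate but never reaches `v_max`, which is the saturation described in the text.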

Considering that information arrives at a neuron through chemoreceptors and is transmitted to other cells through potential-sensitive channels, it appears reasonable that the chemical processes involved in learning and memory consolidation begin at the chemoreceptors and terminate on the channels of the excitable membrane. We can suppose that the chemical singularity during learning is generated as a result of interactions of second messengers, which are specific to the corresponding synapses. The simplest assumption is that a memory mechanism includes paired interactions of the excited synapses. We took this presumption as the basis of our model of neuronal chemical memory. The second messengers of the most frequently co-occurring pairs of simultaneously excited synapses are linked by chemical bonds. Thus, we have a second-order model relative to the space of the neuron inputs. Synaptic influences in neurons are summed in a non-linear fashion: subthreshold synaptic inputs are modulated by voltage-gated channels. Blockage of transient potassium or potential-dependent sodium channels in neurons linearizes the summation [1289, 1166].

We have considered a model of single neuron learning. The starting point for this neuronal model is that the properties of excitable membranes are controlled by biochemical reactions occurring in the nerve cell, which has N inputs and one output. The set of excited and non-excited synapses is regarded as the input signal. Besides these N inputs, we introduce a special reinforcement input (this is the input that receives a US). The reinforcement from the environment arrives at the entire neural network as a response to the action of the whole organism, usually causes a generalized reaction of the neural system and, therefore, reaches almost every neuron. The introduction of the reinforcement input is supported by the experimental data that analogs of associative learning may be executed at the neuronal level and, hence, that conditioned and unconditioned stimuli converge in the neuron (see Sec. 1.6). Let us suppose that when a synapse is excited, a specific second messenger xi is generated in the cell, where i is the synapse number. When the reinforcement input is excited, the second messenger R is generated. The substances xi interact with each other in pairs and generate the instantaneous memory molecules wij, which carry information about the current signal. These are second-order components, which partially merge the information arriving at the activated synapses. Our model functions on the basis of probable intracellular chemical reactions. The set of chemical reactions corresponds to a set of differential equations. During learning the products of the reactions are accumulated and then used for signal recognition, for prediction of its probable consequences and for decision-making related to the reconstruction of the neuron’s excitability. In summary, the model neuron has N inputs, one output and a special input for the reward.
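The second-order components of an input signal can be enumerated with a few lines of code. This is a minimal sketch, assuming that a signal is written as a binary string (as in the captions of Figs. 1.33 and 1.34) and that each unordered pair of simultaneously excited synapses i, j yields one kind of molecule wij; the function name `active_pairs` is hypothetical.

```python
from itertools import combinations


def active_pairs(signal):
    """Return the index pairs (i, j), i < j, of simultaneously excited synapses.

    `signal` is a string of '0' and '1', one character per synapse.
    Each returned pair stands for one kind of instantaneous memory molecule wij.
    """
    excited = [i for i, bit in enumerate(signal) if bit == "1"]
    return set(combinations(excited, 2))


# The two 10-synapse signals used in Fig. 1.33.
pairs_a = active_pairs("1111110000")
pairs_b = active_pairs("0000111111")
shared = pairs_a & pairs_b

print(len(pairs_a), len(pairs_b), sorted(shared))
```

Although the two signals overlap in two synapses, they share only one pair, so their second-order descriptions are almost disjoint.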

It is well known that during AP generation high-threshold potential-sensitive calcium channels are opened and the intracellular concentration of Ca2+ ions rapidly increases. Thus, the availability of calcium ions in the cell immediately after AP generation serves as an indicator of the neuronal response to the input signal. Intracellular Ca2+ concentration may serve as a steady sign of firing activity [1135]. Let the molecules of the instantaneous memory wij, the result of simultaneous activation of synapses number i and j, interact with the second messengers R and with Ca2+ ions. The molecules of the second messenger R are then transformed to an inactive form. As a result, the short-term memory molecules W+ and W- are formed. The availability of the W+ molecules facilitates AP generation in order to obtain reinforcement (US) from the environment, and the W- molecules prevent AP generation. The process of short-term memory formation is competitive. The reactions involved in this process cover all possible combinations of the neuronal response to the input signal and the subsequent reaction of the environment, and this serves to develop instrumental learning in the neuron.
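The competitive formation of short-term memory can be sketched as a counting scheme. This is only an illustration under stated assumptions, not the kinetic model itself: molecules are replaced by counters, the rewarded cases follow Fig. 1.33 (reward after an AP favors W+, reward after AP absence favors W-), and the mapping for the two unrewarded cases is our assumption. The names `update_short_term_memory` and `excitability_bias` are hypothetical.

```python
from itertools import combinations


def _pairs(signal):
    """Index pairs of simultaneously excited synapses in a binary-string signal."""
    excited = [i for i, bit in enumerate(signal) if bit == "1"]
    return list(combinations(excited, 2))


def update_short_term_memory(memory, signal, fired, rewarded, step=1.0):
    """Add W+ or W- for every active pair after one learning cycle.

    fired    : the neuron generated an AP (a Ca2+ signal is present)
    rewarded : the reinforcement arrived (messenger R is present)
    Assumed mapping: AP + reward and no AP + no reward favor W+;
    no AP + reward and AP + no reward favor W-.
    """
    favors_firing = (fired == rewarded)
    for pair in _pairs(signal):
        w_plus, w_minus = memory.get(pair, (0.0, 0.0))
        if favors_firing:
            w_plus += step
        else:
            w_minus += step
        memory[pair] = (w_plus, w_minus)
    return memory


def excitability_bias(memory, signal):
    """Sum of W+ minus W- over the pairs selected by the signal."""
    return sum(w_plus - w_minus
               for w_plus, w_minus in (memory.get(p, (0.0, 0.0)) for p in _pairs(signal)))


memory = {}
for _ in range(3):
    update_short_term_memory(memory, "1111110000", fired=True, rewarded=True)
    update_short_term_memory(memory, "0000111111", fired=False, rewarded=True)

bias_a = excitability_bias(memory, "1111110000")
bias_b = excitability_bias(memory, "0000111111")
print(bias_a, bias_b)
```

In this sketch the first signal acquires a positive bias and the second a negative one, while the single pair shared by both signals carries equal amounts of W+ and W- and contributes nothing to either.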

We can assume that chemical singularity during learning is generated by interactions of intracellular messengers specific for the corresponding excited synapses and for the rewarding input. We may imagine the process of recognition in a neuron as the interaction of second messengers xi, xj, produced in the synapses i, j (activated by the signal that has to be recognized), with the molecules of short-term memory Wij. A search is needed in order to establish a correlation between the appearances of this signal in the past, the corresponding actions and the consequent rewards or punishments. In any computer, a search for the required signal in memory is the most time- and energy-consuming operation, and an exhaustive pass through the memory components cannot be excluded. In agreement with the idea of E.A. Liberman [735], this operation in the "molecular computer" is rather cheap; it happens at the cost of thermal motion, which dictates the conditions for the combination of complementary molecules. Complementary molecules meet by chance, but their interactions are irreversible. The bound state is stable, since in this state the system is at the bottom of a local energy minimum, and small deviations from this minimum increase the local free energy of the system. Coupling in the form of complementarity is a form of natural selection at the biochemical level [326].

In the succession of random collisions, molecular forces choose those pairs xi:xj that correspond to the indexes of the memory molecules Wij. Therefore, the input signal selects the subset of memory molecules that corresponds to the set of pairs of activated synapses, together with the information concerning lucky (W+) and unlucky (W-) events as that information becomes available. The set of chemical reactions is described by a set of first-order differential equations according to the laws of chemical kinetics.
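How a single reaction translates into first-order differential equations can be shown with one pair-binding step, xi + xj -> wij. The sketch below assumes mass-action kinetics with a hypothetical rate constant `k` and uses plain Euler integration; the concentrations and time units are arbitrary, and the full model contains many more reactions than this one.

```python
def simulate_pair_binding(x_i, x_j, k=1.0, dt=0.001, steps=1000):
    """Euler integration of the irreversible reaction xi + xj -> wij.

    Mass-action kinetics (assumed):
        d[xi]/dt = d[xj]/dt = -k [xi][xj]
        d[wij]/dt = +k [xi][xj]
    Returns the final concentrations (x_i, x_j, w_ij).
    """
    w_ij = 0.0
    for _ in range(steps):
        rate = k * x_i * x_j      # reaction rate at the current concentrations
        x_i -= rate * dt
        x_j -= rate * dt
        w_ij += rate * dt
    return x_i, x_j, w_ij


# Equal initial concentrations; the exact solution is x(t) = x0 / (1 + k*x0*t),
# which gives 0.5 at t = 1 for x0 = 1 and k = 1.
x_i, x_j, w_ij = simulate_pair_binding(1.0, 1.0)
print(x_i, x_j, w_ij)
```

The product accumulates only while both messengers are present, so a synapse excited alone leaves no wij in this scheme.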

We considered the effect of regulation of the properties of sodium channels. This allows simulation of the changes that occur during learning in those parameters of the neuron’s electrical activity which are associated with its excitability (amplitude, duration and generation threshold of the AP). A neuron must decide whether or not to generate an AP. Neuronal electrogenesis is determined by the properties of the membrane receptors and ion channels, which are controlled by metabolic processes occurring in the cell.

The neuronal model exhibits different excitability after the learning procedure relative to the different input signals (Fig. 1.33).

Fig. 1.33. Change in the spike responses of a 10-synapse model neuron during successive application of two different signals. Left: the neuron receives a reward for an AP generated in response to the input signal 1111110000. Right: the reward follows the absence of an AP in response to the input signal 0000111111. The signals were presented in turn. Numbers designate the count of learning cycles. Ordinate: membrane potential, mV. Abscissa: time after the stimulus presentation, ms.

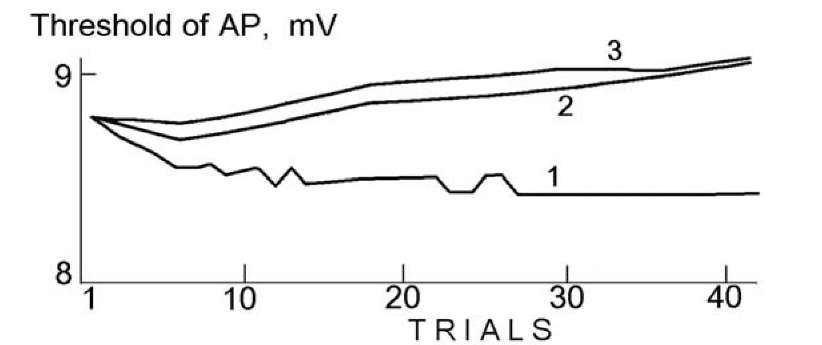

The neuron became more excitable within the response to a stimulus when AP generation led to receipt of the reward. In this case, the threshold and latency of the AP in the response to the CS+ decreased by the 32nd pairing of the CS+ with the reward (Fig. 1.33, left). When, on the contrary, AP failure in the response to the signal was rewarded, excitability within this response decreased and, finally, the neuron failed to generate an AP in response to the 30th stimulus (Fig. 1.33, right). This corresponds to the experimental data. A neuron executes a complex task. It classifies the stimuli not only according to their strength but also according to their biological value, and transiently changes its own excitability. Fig. 1.34 demonstrates a selective change in the threshold within the response to the CS+ during classical conditioning. During the same period of training, the neuron displayed a higher threshold within its responses to the CS- signals, which differed from the reinforced signal CS+. The more a signal differed from the CS+, the more its threshold differed.

Fig. 1.34. Dependence of the AP threshold (mV) within the response on the number of the learning cycle during classical conditioning. A 10-synapse model neuron received a reward when it generated an AP in response to the signal 1111110000 (curve 1). Signals 0111111000 (curve 2) and 0011111100 (curve 3) were applied after every fifth application of signal 1 and did not coincide with a reward.

Our model demonstrates that a chemical intra-neuronal memory based on a mixture of relatively simple substances is possible in principle. It does not need the construction of very complex macromolecules. Extending the model from interactions of two second messengers to interactions of three makes the capacity of neuronal memory larger than is known from experiments: a thousand variants for three-fold interactions in a 10-synapse (10 second messengers) neuron. This exceeds the specificity found in experiments with face recognition. A cooperative collision of several molecules has a low probability and may instead be accomplished by sequential collisions, in which two or three molecules combine first and the next molecule joins afterward [362]. Nevertheless, participation of several molecules in the same chemical act is sometimes possible: simultaneous collision of 3-4 Ca2+ ions with the Ca2+ sensor is possible and necessary for triggering transmitter exocytosis (Thompson 2000). The composition of the mixture may be stored in the DNA structure for producing simple peptides consisting of 2-3 amino acids. After the second messengers interact, the molecules of instantaneous memory wij pick out the molecules of short-term memory W+ij and W-ij for the choice of the output reaction. These processes must be rather rapid. Which combinations of memory elements will be activated in the current behavior is determined by the environment and the current motivation [1141]. Maintenance of the existing combination of molecules W+ and W-, which determines participation in the current behavior, is a slower process and needs a search for the location in the linear structure of DNA, which must be faster than a search in the cellular volume. Choice of the current combination of short-term memory molecules W+ and W- has to serve as an apparatus of long-term memory.

The order of the model is restricted by the low probability of chemical reactions requiring a simultaneous collision of three or more substances. The model allows for pair interactions between excited synapses and correctly classifies a number of binary input signals of the order of N², where N is the number of neuron inputs. The number of second messengers participating in pair (or three-fold) interactions is critical. A large variety of receptors is available in neurons, but the simultaneous or even the successive interaction of several substances is an impracticable requirement. For this reason, the number of interacting substances should be smaller and the informational power of a neuron should be smaller, too, and a neuron perhaps only roughly evaluates signals. One second messenger may maintain a change of non-specific chemosensitivity or excitability through regulation of its absolute concentration. Two second messengers may maintain non-specific plasticity by means of regulation of their relative concentrations. Three second messengers may support rough specificity. Such a model describes a way of merging input information and thus solves the ‘binding problem’, which has never been solved before. This is an important theoretical conclusion, but we cannot be sure of the physiological relevance of the model.
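The capacity estimates can be checked by simple counting. In this sketch the counting convention is our assumption: taking ordered combinations with repetition (N to the power of the interaction order) reproduces the order N² for pairs and the thousand variants quoted for three-fold interactions in a 10-synapse neuron, while counting unordered sets of distinct synapses gives smaller numbers of the same order. The function names are hypothetical.

```python
from math import comb


def ordered_variants(n_inputs, order):
    """Number of ordered index tuples of the given order: N ** order."""
    return n_inputs ** order


def unordered_variants(n_inputs, order):
    """Number of unordered sets of distinct synapses: C(N, order)."""
    return comb(n_inputs, order)


n = 10  # a 10-synapse neuron with 10 second messengers
for order in (2, 3):
    print(order, ordered_variants(n, order), unordered_variants(n, order))
```

Either way of counting shows the same trend: moving from pair to three-fold interactions multiplies the number of distinguishable combinations several times over.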

Investigation of long-term memory is now in its infancy. In the majority of studies experimenters use a stationary environment, in which the existence of short-term memory is sufficient for proper functioning. A non-stationary environment requires a long time for investigating the reorganization of learning after a change in an environmental condition, for example, acquisition of one habit after preliminary acquisition of another, or a sudden replacement of rewards. Nevertheless, such attempts have been undertaken [1291, 352, 299, 21, 166, 944], but the body of data is still too small to draw any conclusion. Properties of complementary molecular interactions might be the basis for changing one quasi-steady combination of short-term memory molecules to another quasi-steady combination under the control of long-term memory (about which we know almost nothing). Molecular interactions depend on context, since molecules will have diverse properties depending upon the microenvironment and the nature of the other molecules to which they are coupled [326]. DNA may participate in the reorganization of this context, but cannot preserve the entire long-term memory directly. This becomes obvious if we take into consideration that the total volume of information in long-term memory exceeds the amount of genetic information to a marked degree. For instance, at least some persons can recite texts, the complexity of which surpasses the sequence of bases in their DNA. The arrangement of attributes in DNA cannot increase the possible capacity of storage, since previous events in memory must be arranged in time. Naturally, we are discussing here a suggested role of DNA only as an example of an ordered structure. This reasoning applies to any construction ordered in space. Therefore, firstly, memory is scattered amongst neurons; secondly, memory constituents are not allocated to neurons; and, thirdly, a neuron is not able to store a whole memory. If neurons store a coarse version of considerable volumes of memory, this, at least, does not contradict the data known at the present time.