Abstract

Fast and accurate measurement of the 3D-shape of mass-fabricated parts is increasingly important for a cost-effective production process. For quality control of mm or sub-mm sized parts, tactile measurement is unsuitable and optical methods have to be employed. This is especially the case for small parts manufactured in a micro deep drawing process. From a number of measurement techniques capable of measuring 3D-shapes, we chose Digital Holography and confocal microscopy for further evaluation. Although the latter technique is well established for measuring micro parts, its application in a production line suffers from insufficient measurement speed, because it has to scan through a large number of measurement planes. Digital Holography, on the other hand, allows for a large depth of focus because a single hologram enables the reconstruction of the wave field at different depths. Hence, it is a fast technique and appears superior to standard microscopic methods for application in a production line. In this paper we present results of shape recording of micro parts using Digital Holography and compare them to measurements performed by confocal microscopy. The results show the suitability of Digital Holography as an inline quality control instrument.

Introduction

Modern mass production processes have to be cost-effective with regard to the percentage of error-free manufactured parts. Therefore, adequate quality control by means of a fast and accurate measuring technique has to be established. In this paper we focus on a mm or sub-mm sized micro deep drawing part, which is produced and investigated in the Collaborative Research Centre "Micro Cold Forming" [1] at the University of Bremen, Germany. Fig. 1 shows two examples of this bowl-like part, which have dimensions of about 0.5 mm height and 1 mm width. The fast production process of this micro part in particular must be inspected continuously, because an undetected failure in the forming process leads to a high number of defective parts.

Fig. 1 Two micro deep drawing parts, scanning electron microscope (SEM) image [1]

In order to control the quality of this part, tactile measurement is unsuitable and rapid non-destructive optical methods should be considered [2]-[8]. For example, confocal microscopy can provide precise inspection of micro objects. However, because it has to scan through a large number of measurement planes, its integration in a production line does not appear practicable due to insufficient measurement speed. Currently there are no commercially available solutions that provide a rapid and at the same time non-invasive investigation of microscopic objects. One of the major problems is the limited depth of focus, which is a common characteristic of any microscopic imaging technique. Here, Digital Holography is chosen for the following reasons:

a. It is able to enhance the depth of focus considerably by means of combining several reconstructed wave fields associated with different observation planes from a single measurement [6];

b. It needs only one or two recordings of the holograms to recover the object wave field [7], [8].

In this paper we present a 3D-shape measurement of micro deep drawing parts by means of Digital Holography and compare it to results obtained by confocal microscopy. The results show that Digital Holography can be employed as an inline quality control instrument.

Principles

In this section the basic principles used for the digital-holographic measurement of the micro deep drawing part will be described. Firstly Digital Holography, including recording and reconstruction, will be introduced. Then we will present how to extract the 3D-shape from the holographically reconstructed phase information in the object domain. The micro parts investigated in this paper are mm or sub-mm sized and thus extremely large compared to the wavelength range of a normal laser, so that an ambiguity of the object shape derived from the reconstructed phase information arises. In order to solve this problem, we will finally discuss a method by means of contouring with two illumination directions.

Digital Holography: Recording

In terms of scalar diffraction theory, an optical wave field can be described by a real amplitude as well as a phase distribution. The light wave at a point $\mathbf{r}$ in space can be generally described as $E(\mathbf{r}) = A(\mathbf{r})\exp[i\varphi(\mathbf{r})]$ with $A(\mathbf{r})$ being its real amplitude distribution and $\varphi(\mathbf{r})$ its phase distribution [7], [8]. In practice all sensors can only capture light intensity, defined by $I(\mathbf{r}) = |E(\mathbf{r})|^2 = E(\mathbf{r})E^*(\mathbf{r}) = A^2(\mathbf{r})$. Please note that in this process the phase $\varphi(\mathbf{r})$ is lost. In this section we will present how Digital Holography records the phase information into the intensity and in the next section how it numerically reconstructs the object wave field, which holds both the amplitude and the phase distribution.

A basic holographic setup shown in Fig. 2(a) is used to record the wave field reflected from an object, which is called the object wave. It is superposed with a second wave field, the reference wave. In order to be coherent, both have to originate from the same laser source. The resulting interference pattern, the hologram, is electronically captured by a CCD camera and stored in a computer. In the following the holographic process will be mathematically described using the coordinate system shown in Fig. 2(b). As an example in the object volume an arbitrary object plane $\{x,y\}$ is denoted by the area Z. The complex amplitude of the object wave can be described by $E_O(\mathbf{r}) = A_O(\mathbf{r})\exp[i\varphi_O(\mathbf{r})]$ and that of the reference wave by $E_R(\mathbf{r}) = A_R(\mathbf{r})\exp[i\varphi_R(\mathbf{r})]$. Both waves interfere in the hologram plane $\{\xi,\eta\}$ with $z=0$ at the surface of the CCD. The corresponding intensity, i.e. the hologram, is calculated by [6], [8]:

$$H(\xi,\eta) = |E_O + E_R|^2 = I_R + I_O + E_O E_R^* + E_R E_O^* \qquad (1)$$

where $I_R$ and $I_O$ denote the individual intensities and $^*$ denotes the complex conjugate. The hologram is recorded by the CCD, digitized into an N × M pixel array and stored in the computer. In the next section, we will see how to numerically reconstruct the object wave field from the hologram.
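The decomposition of the hologram intensity into the two individual intensities and the two cross terms can be checked numerically. The following is a minimal sketch and not the recording chain used in this work: the array size, the random object wave and the tilted plane reference wave (instead of the spherical reference wave of the setup) are assumptions chosen for illustration, and the function name `record_hologram` is hypothetical.

```python
import numpy as np


def record_hologram(e_obj, e_ref):
    """Intensity seen by the sensor when object and reference wave interfere."""
    return np.abs(e_obj + e_ref) ** 2


rng = np.random.default_rng(0)
n = 64  # assumed sensor size in pixels, for illustration only

# Assumed object wave: random amplitude and phase, i.e. a speckle-like field.
a_obj = rng.uniform(0.2, 1.0, size=(n, n))
phi_obj = rng.uniform(-np.pi, np.pi, size=(n, n))
e_obj = a_obj * np.exp(1j * phi_obj)

# Assumed reference wave: unit-amplitude tilted plane wave.
row, col = np.mgrid[0:n, 0:n]
e_ref = np.exp(2j * np.pi * 0.25 * (row + col))

hologram = record_hologram(e_obj, e_ref)

# Sum of the four terms: I_R + I_O + E_O E_R* + E_R E_O*.
i_obj = np.abs(e_obj) ** 2
i_ref = np.abs(e_ref) ** 2
four_terms = i_ref + i_obj + e_obj * np.conj(e_ref) + e_ref * np.conj(e_obj)

# The object intensity alone only carries A^2; the phase is not contained in it.
intensity_only = i_obj
```

The two cross terms are complex conjugates of each other, so their sum is real and the hologram stays a measurable intensity while still depending on the object phase.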

Fig. 2 Digital Holography: (a) basic holographic setup [7] and (b) the coordinate system [8]

Digital Holography: Reconstruction

Throughout the past decades a number of ways to digitally reconstruct the hologram have been developed [8]. The reconstruction method applied in this paper will be presented in this section. Based on the Rayleigh-Sommerfeld formulation of diffraction, the wave field $u(\xi,\eta)$ in the hologram plane $z=0$ propagated from the wave field $u(x,y)$ in the object plane $z$ can be described by [9]

Here, $\rho$ is the distance between a point in the object volume and a point on the hologram plane, given by $\rho = [(x-\xi)^2 + (y-\eta)^2 + z^2]^{1/2}$. When the aperture in the hologram plane is small compared to the distance $z$, the Fresnel approximation can be applied, which means that $\rho \approx z + (x-\xi)^2/(2z) + (y-\eta)^2/(2z)$ is used inside the exponential function and $\rho \approx z$ outside of it. By means of this approximation and Eq. 2 we will define the propagation operator $P$ [9]:

which includes a single Fourier transform denoted by F. The terms Q1 as well as Q2 are spatially varying phase factors, defined by:

Here, $Q_2$ is a quadratic-phase exponential function, which is known as the chirp function. Positive $z$ corresponds to propagation in the direction of the hologram plane, while using the inverse Fourier transform and conjugating the terms $Q_1$ and $Q_2$ results in back propagation $P^{-1}$ in the direction of the reconstruction plane [9].
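A propagation operator of this kind (a chirp multiplication, a single Fourier transform and a second quadratic phase factor) can be sketched as follows. Since the defining equations of $P$, $Q_1$ and $Q_2$ are not reproduced in this text, the sketch assumes the standard textbook form of the discrete Fresnel transform; the sign convention, the dropped constant prefactor, the square grid and the function names are assumptions, and the sampling conditions of the chirp are ignored.

```python
import numpy as np


def _phase_factors(n, wavelength, z, dx):
    """Quadratic phase factors on the input grid (q2) and on the output grid (q1)."""
    idx = np.arange(n) - n // 2
    x = idx * dx                           # input-plane coordinates
    xi = idx * wavelength * z / (n * dx)   # output-plane coordinates
    xx, yy = np.meshgrid(x, x)
    xxi, eta = np.meshgrid(xi, xi)
    q2 = np.exp(1j * np.pi * (xx**2 + yy**2) / (wavelength * z))   # chirp
    q1 = np.exp(1j * np.pi * (xxi**2 + eta**2) / (wavelength * z))
    return q1, q2


def propagate(u, wavelength, z, dx):
    """Forward step Q1 * F{u * Q2} on a square grid with input pitch dx."""
    q1, q2 = _phase_factors(u.shape[0], wavelength, z, dx)
    spectrum = np.fft.fft2(np.fft.ifftshift(u * q2), norm="ortho")
    return q1 * np.fft.fftshift(spectrum)


def back_propagate(v, wavelength, z, dx):
    """Exact inverse of propagate: conjugated phase factors and inverse FFT.

    dx is again the pitch of the object-side grid, not of the hologram.
    """
    q1, q2 = _phase_factors(v.shape[0], wavelength, z, dx)
    field = np.fft.ifft2(np.fft.ifftshift(v * np.conj(q1)), norm="ortho")
    return np.conj(q2) * np.fft.fftshift(field)


wavelength = 582e-9   # laser wavelength from the setup description
pixel = 3.5e-6        # camera pixel size from the setup description
z = 0.03              # object-camera distance from the setup description
n = 64                # assumed grid size, kept small for illustration

# Object-side pitch chosen so that the hologram-side pitch equals the pixel size.
dx_obj = wavelength * z / (n * pixel)

rng = np.random.default_rng(1)
u0 = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
v = propagate(u0, wavelength, z, dx_obj)
u1 = back_propagate(v, wavelength, z, dx_obj)
```

With the unitary FFT normalization used here, the forward step preserves the total energy of the field and the backward step returns the original field, which is the property that the numerical refocusing relies on.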

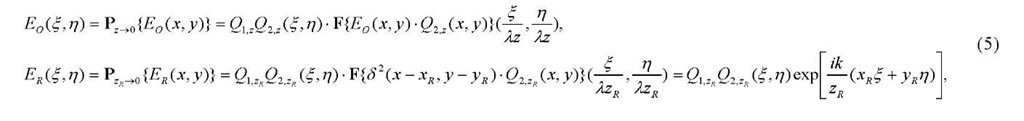

Since the phase information is encoded inside the two multiplicative terms $E_O E_R^*$ and $E_R E_O^*$ of the hologram $H(\xi,\eta)$ given in Eq. 1, we will look at these terms in more detail. Using the propagation operator $P$, we can describe the wave fields $E_O$ and $E_R$ in the hologram plane by [8]

where the coordinates $(x_R,y_R,z_R)$ denote the source point of the spherical reference wave. When the source point of the reference wave is located close to the object with $z=z_R$, the term of interest $E_O E_R^*$ in Eq. 1 can be described by:

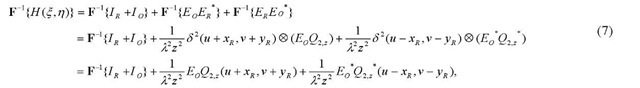

while $E_R E_O^*$ in Eq. 1 can be obtained simply by conjugating Eq. 6. In order to isolate one of these two terms from the hologram, we filter the hologram by means of an inverse Fourier transform [8]

where $\otimes$ denotes the convolution. Here, $I_R$ is assumed to be constant and $I_O$ is the speckle field of the object wave. Their inverse Fourier transform, i.e. the first term, is located in the center. The second term contains $E_O Q_{2,z}$ and is shifted by $(-x_R,-y_R)$, while the third term contains the conjugate of $E_O Q_{2,z}$ and is shifted in the opposite direction by $(x_R,y_R)$. Hence, they are center-symmetrically located and can be spatially separated by selecting the coordinates of the reference wave $(x_R,y_R)$ appropriately. Accordingly, we can isolate one of these two symmetric terms by eliminating the other two terms and then transform the remaining result back by means of a Fourier transform to obtain $E_O E_R^*$ or $E_R E_O^*$ in the hologram plane $\{\xi,\eta\}$. Based on this and the back propagation, we can reconstruct the object wave field in any reconstruction plane $z=z_j$ by using for example [8],
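The filtering step described above (inverse Fourier transform, selection of one side term, Fourier transform back) can be illustrated with a synthetic hologram. This is only a sketch under simplifying assumptions: the object wave is a band-limited random field, the reference wave is a tilted plane wave rather than the spherical wave of the setup, and the grid size, carrier offset, mask radius and the function name `filter_sideband` are hypothetical.

```python
import numpy as np

n = 128         # assumed hologram size in pixels
carrier = 32    # assumed offset of the side terms, in frequency bins
bandwidth = 8   # assumed radius of the object spectrum, in bins

k = np.fft.fftfreq(n, d=1.0 / n)   # integer bin indices
kx, ky = np.meshgrid(k, k)

# Band-limited stand-in for the object wave in the hologram plane.
rng = np.random.default_rng(2)
spectrum = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
spectrum[kx**2 + ky**2 > bandwidth**2] = 0.0
e_obj = np.fft.fft2(spectrum)

# Unit-amplitude reference wave with a linear phase (off-axis carrier).
row, col = np.mgrid[0:n, 0:n]
e_ref = np.exp(2j * np.pi * carrier * (row + col) / n)

hologram = np.abs(e_obj + e_ref) ** 2


def filter_sideband(hologram, center, radius):
    """Keep one side term of the inverse-transformed hologram and transform back."""
    size = hologram.shape[0]
    bins = np.fft.fftfreq(size, d=1.0 / size)
    bx, by = np.meshgrid(bins, bins)
    filter_domain = np.fft.ifft2(hologram)
    mask = (bx - center[0]) ** 2 + (by - center[1]) ** 2 <= radius**2
    return np.fft.fft2(filter_domain * mask)


# With this sign convention the term E_O E_R* sits at (+carrier, +carrier).
cross_term = filter_sideband(hologram, (carrier, carrier), radius=12)

# Multiplying by the known reference wave recovers the object wave.
e_obj_recovered = cross_term * e_ref
```

The separation works here because the carrier offset is large compared to the bandwidth of the object wave, which mirrors the requirement that the reference source coordinates be selected appropriately.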

Relation between the reconstructed Phase and the 3D Shape

We want to extract the 3D shape of the object under measurement from the reconstructed object wave field $E_O^{(j)}(x,y)$ in the j-th reconstruction plane $z=z_j$ with its complex amplitude $E_O^{(j)}(x,y) = A_O^{(j)}(x,y)\exp[i\varphi_O^{(j)}(x,y)]$. The scheme used for this process is briefly presented in Fig. 3, where P is an arbitrary point on the surface of the object with the position $\mathbf{r}_P=(x,y,z)$ and S is a point source with the position $\mathbf{r}_S$. Let us assume that any point P on the surface can be associated with a specific point B in the corresponding j-th reconstruction plane $z=z_j$. This is especially true for those parts of the image in which the object appears to be in focus [4]:

The distance between the object and the source point is further assumed to be large compared to the dimensions of the object. In this case the light incident on any arbitrary point on the surface of the object can be described as a plane wave. Its phase $\varphi(\mathbf{r}_P)$ is given by $\varphi(\mathbf{r}_P) = \mathbf{k}\cdot(\mathbf{r}_P-\mathbf{r}_S) = \mathbf{k}\cdot\mathbf{r}_P + c_0$. Here, the product of the position of the light source $\mathbf{r}_S$ and the wave vector $\mathbf{k}$ is written as a constant $c_0$, because the shape information of the object does not depend on it. When the components of the wave vector $\mathbf{k}=(k_x,k_y,k_z)$ are introduced, the phase can be rewritten as $\varphi(\mathbf{r}_P) = k_x x + k_y y + k_z z + c_0$, where the first two terms can be ignored, because the shape of the object is only related to the coordinate $z$. The terms in $x$ and $y$ constitute a phase ramp, which can be determined either from the geometrical parameters of the setup or by a calibration process using a flat surface. According to this and Eq. 9, if the angle of the illumination direction $\theta$ is known and $c_0$ is set to $c_0=0$, we obtain [4]:

From this equation we see that the height information of the object in the z direction is related to the reconstructed phase.

Fig. 3 Schematic describing the relation between the reconstructed phase $\varphi_O^{(j)}(x,y)$ and the 3D shape of the object: P is a point on the surface of the object, which is associated with a point B on the reconstruction plane. The distance between the object and the light source should be large in comparison to the dimension of the object, so that the light incident on the point P can be regarded as a plane wave [4]

Contouring with two Illumination Directions

The contour of the object is encoded in the phase $\varphi_O^{(j)}(x,y)$ as seen from Eq. 10. If the contour exceeds the range of the wavelength, the phase value becomes ambiguous in terms of fringes. In our case, the surface roughness of the object already exceeds that range, so that the phase value appears noisy and the geometry of the object cannot be resolved. In order to solve this problem we use contouring by means of two illumination directions, which is a well established method to measure the shape of a diffusely reflecting object [8]. This technique is based on two sequentially reconstructed phase distributions of the object wave, $\varphi_{O,1}^{(j)}(x,y)$ and $\varphi_{O,2}^{(j)}(x,y)$, associated with shifting the point source from position $S_1$ to $S_2$. According to Eq. 10, the shape information of the object can be calculated from the difference of the phase distributions $\varphi_{O,1}^{(j)}(x,y)$ and $\varphi_{O,2}^{(j)}(x,y)$ [4]:

Here, $\Lambda$ denotes the synthetic wavelength, which is considerably larger than the wavelength $\lambda$ and can be adjusted by selecting the angles of the two illumination directions $\theta_1$ and $\theta_2$ accordingly. It has to be noted that this phase difference is independent of the distance of the reconstruction plane $z_j$. That means the phase difference can be digitally reconstructed in any arbitrary reconstruction plane $z_j$ to obtain the fringes, which are associated with the height $z$ according to Eq. 11. From the phase difference, the desired 3D shape information of the object can be directly extracted.
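How the two illumination angles set the synthetic wavelength can be sketched numerically. The relation used below is an assumption, because the corresponding equation is not reproduced in this text: it takes the z-part of the plane-wave phase as $k_z z$ with $k_z = (2\pi/\lambda)\cos\theta$, so that the phase difference is $2\pi z/\Lambda$ with $\Lambda = \lambda/|\cos\theta_1-\cos\theta_2|$. The angles of 30° and 31° are hypothetical values and the sign of the height is ignored.

```python
import numpy as np


def synthetic_wavelength(wavelength, theta1, theta2):
    """Assumed relation: Lambda = lambda / |cos(theta1) - cos(theta2)|."""
    return wavelength / abs(np.cos(theta1) - np.cos(theta2))


def height_from_phase_difference(delta_phi, wavelength, theta1, theta2):
    """Height z for an unwrapped phase difference, assuming delta_phi = 2*pi*z/Lambda."""
    return synthetic_wavelength(wavelength, theta1, theta2) * delta_phi / (2 * np.pi)


wavelength = 582e-9          # laser wavelength from the setup description
theta1 = np.deg2rad(30.0)    # hypothetical illumination angle
theta2 = np.deg2rad(31.0)    # hypothetical illumination angle

big_lambda = synthetic_wavelength(wavelength, theta1, theta2)

# Round trip: a known height gives a phase difference, which gives the height back.
z_true = 20e-6
delta_phi = 2 * np.pi * z_true / big_lambda
z_back = height_from_phase_difference(delta_phi, wavelength, theta1, theta2)
```

Under this assumed relation a small angular separation yields a synthetic wavelength of several tens of micrometres, so one fringe then spans a height step far larger than the optical wavelength.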

System Setup

A schematic setup for digital holographic contouring by means of two illumination directions [6] is presented in Fig. 4. A micro deep drawing part, which has the same dimensions as the parts shown in Fig. 1, is our object under measurement. It is illuminated by a plane wave, provided by an optical fiber in the front focal plane of a collimating lens. The angle of the plane wave can be changed by either shifting the source point or the lens. A second fiber is located close to the object and provides a spherical reference wave. In order to capture the hologram arising from the superposition of the reference wave and the light scattered from the object, an analyzer (polarization filter) is located in front of the camera which equalizes the polarization states of the interfering wave fields.

For our investigations we used a dye laser with a wavelength of λ = 582 nm. The sensor of the camera has 2208 × 2208 pixels with a size of 3.5 × 3.5 µm². The distance between the object and the camera is set to about 3 cm in order to obtain a suitable speckle size compared to the pixel size of the camera. Considering the maximum frame rate of 9 Hz of this camera at its full resolution, recording the holograms with two different illumination directions takes less than 0.3 seconds (two frames at 9 Hz correspond to about 0.22 s).

Fig. 4 Sketch of the setup for digital holographic contouring by means of two illumination directions [6]

Experimental Results

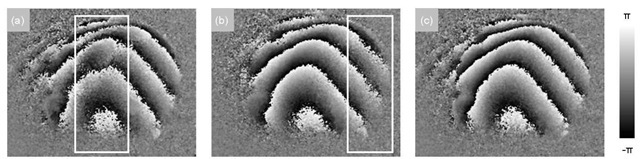

In order to show the capability for fast 3D-shape measurement by means of Digital Holography we have numerically reconstructed the complex amplitude $E_O^{(j)}(x,y)$ of the object wave field. From the corresponding reconstructions in one plane we can calculate the phase difference $\Delta\varphi_O^{(j)}(x,y)$ of the phase distributions associated with the two illumination directions. Unfortunately these data contain noise in the out-of-focus areas due to the limited depth of focus across a single reconstruction plane (see Eq. 11). However, all necessary information is stored in one set of two measurements and can be reconstructed numerically, which is the significant advantage of Digital Holography. The reconstruction of the phase differences in two different planes is shown in Fig. 5(a) and Fig. 5(b). Here, the noisy areas are marked by the white boxes. In order to recover an uncorrupted phase difference for the whole micro deep drawing part, we reconstructed the phase differences in four different reconstruction planes (j = 1, …, 4) and calculated a combined phase difference with all regions in focus, which is presented in Fig. 5(c). This procedure can be implemented in any reconstruction plane because the phase difference $\Delta\varphi_O^{(j)}(x,y)$ is independent of the distance of the reconstruction plane $z_j$.
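One conceivable way to merge phase differences from several reconstruction planes into an all-in-focus map is sketched below. The text does not state how the in-focus regions were selected, so the criterion used here (the magnitude of the locally averaged phasor as a measure of fringe quality) is an assumption and not necessarily the procedure used for Fig. 5(c); the function names, the window size and the synthetic test data are hypothetical.

```python
import numpy as np


def local_coherence(phase, size=5):
    """Magnitude of the locally averaged phasor: near 1 for smooth fringes, low for noise."""
    phasor = np.exp(1j * phase)
    pad = size // 2
    padded = np.pad(phasor, pad, mode="edge")
    rows, cols = phase.shape
    acc = np.zeros((rows, cols), dtype=complex)
    for dy in range(size):
        for dx in range(size):
            acc += padded[dy:dy + rows, dx:dx + cols]
    return np.abs(acc) / size**2


def combine_planes(phase_stack):
    """Per pixel, take the phase difference from the plane with the highest coherence."""
    stack = np.asarray(phase_stack)
    quality = np.stack([local_coherence(p) for p in stack])
    best = np.argmax(quality, axis=0)
    return np.take_along_axis(stack, best[None, ...], axis=0)[0]


# Synthetic example: each plane is clean in one half and pure noise in the other.
rng = np.random.default_rng(3)
rows, cols = 64, 64
col = np.arange(cols)[None, :] * np.ones((rows, 1))
truth = np.angle(np.exp(1j * 0.2 * col))           # wrapped fringe pattern
noise = rng.uniform(-np.pi, np.pi, size=(2, rows, cols))
left = col < cols // 2
plane_a = np.where(left, truth, noise[0])
plane_b = np.where(left, noise[1], truth)

combined = combine_planes([plane_a, plane_b])
fraction_correct = np.mean(np.isclose(combined, truth))
```

The selection is pixel-wise, so errors are expected mainly in a narrow band where the in-focus region of one plane meets that of the next.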

Fig. 5 Phase differences of the two phase distributions, shown as examples: (a) $\Delta\varphi_O^{(1)}(x,y)$ in the lower and (b) $\Delta\varphi_O^{(4)}(x,y)$ in the top observation plane, and (c) an all-in-focus combined phase difference, obtained from only one set of two holographic measurements with regard to the two illumination directions. All distributions are sampled by 690 × 540 pixels

To retrieve the 3D-shape from the combined phase difference, it has to be low-pass filtered [7] and then unwrapped, i.e. the discontinuous 2π-jumps arising from the sinusoidal nature of the phase have to be eliminated by adding multiples of 2π [10]. After subtracting the known phase ramp mentioned in the section on the relation between the reconstructed phase and the 3D shape, and then cutting off the noisy data in the surrounding areas by means of a mask, the unwrapped phase values are inserted into Eq. 11.
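The chain of low-pass filtering, unwrapping, ramp subtraction and masking can be sketched on synthetic data. The concrete filter and unwrapping algorithm of [7] and [10] are not specified in this text, so the sketch assumes a 3 × 3 mean filter applied to the complex phasor and a simple row-then-column unwrapping with `numpy.unwrap`, which is only adequate for smooth, low-noise phase maps. The test phase, the ramp, the mask and all function names are hypothetical.

```python
import numpy as np


def smooth_wrapped(phase, size=3):
    """Low-pass filter a wrapped phase map by averaging its phasor exp(i*phase)."""
    phasor = np.exp(1j * phase)
    pad = size // 2
    padded = np.pad(phasor, pad, mode="edge")
    rows, cols = phase.shape
    acc = np.zeros((rows, cols), dtype=complex)
    for dy in range(size):
        for dx in range(size):
            acc += padded[dy:dy + rows, dx:dx + cols]
    return np.angle(acc)


def unwrap_2d(phase):
    """Remove 2*pi jumps along the rows first, then along the columns."""
    return np.unwrap(np.unwrap(phase, axis=1), axis=0)


def phase_to_profile(wrapped, ramp, mask):
    """Filter, unwrap, subtract the known ramp and blank everything outside the mask."""
    unwrapped = unwrap_2d(smooth_wrapped(wrapped))
    return np.where(mask, unwrapped - ramp, np.nan)


# Synthetic example: a bowl-shaped phase on top of a linear ramp.
rows = cols = 64
y, x = np.mgrid[0:rows, 0:cols]
bowl = 0.01 * ((x - 32) ** 2 + (y - 32) ** 2)
ramp = 0.3 * x
wrapped = np.angle(np.exp(1j * (bowl + ramp)))
mask = (x - 32) ** 2 + (y - 32) ** 2 <= 28**2

profile = phase_to_profile(wrapped, ramp, mask)
residual = (profile - bowl)[mask]
```

The recovered profile equals the bowl-shaped phase up to a constant offset of a multiple of 2π and a small filter bias, which is why only the spread of the residual is meaningful.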

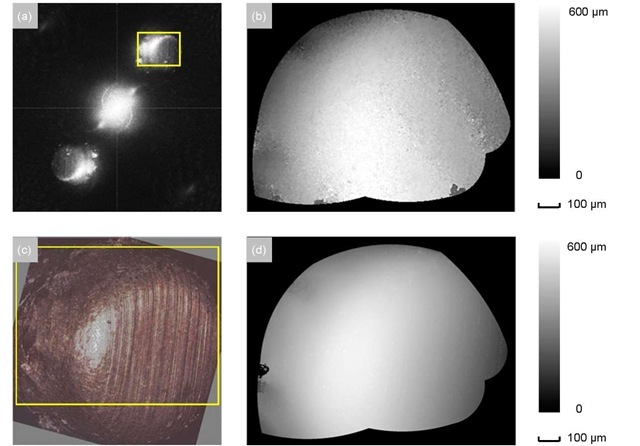

The real amplitude of the filtered hologram $\mathcal{F}^{-1}\{H(\xi,\eta)\}$ is shown in Fig. 6(a); it has the center-symmetric distribution described in the section on reconstruction. After cutting out the area marked by the yellow box in Fig. 6(a), the final 3D-shape map with respect to the height $z$ is shown in Fig. 6(b), which is sampled by 500 × 390 pixels and has a lateral resolution of about 2 µm². For comparison, a measurement of the same region of this micro part was performed by confocal microscopy. The microscopic image is shown in Fig. 6(c). Its shape map, presented in Fig. 6(d), is masked with the same mask, is sampled by 1500 × 1170 pixels and has a lateral resolution of about 0.7 µm². Although this microscopic measurement has a better resolution than the holographic one, it takes a considerably long time of about 6 minutes in this case to scan through the whole object. Please note that, since the image of this micro part in Fig. 6(a) takes up only half of the full quarter space, the resolution of this digital-holographic measurement can be further increased up to 1 µm², either by decreasing the distance between the object and the camera or by inserting an objective between the object and the camera in our setup [8].

Fig. 6 The top row shows the digital-holographic measurement of the micro deep drawing part: (a) the real amplitude of the filtered hologram $\mathcal{F}^{-1}\{H(\xi,\eta)\}$ and (b) the 3D-shape map from the created phase difference after subtracting the phase ramp, which is sampled by 500 × 390 pixels. The bottom row shows the confocal-microscopic measurement of the same region of this micro part for comparison: (c) the microscopic image and (d) its 3D-shape map, which is sampled by 1500 × 1170 pixels. Both of them have the same mask and a similar measuring direction. The yellow boxes in (a) and (c) mark the corresponding cutting areas in (b) and (d).

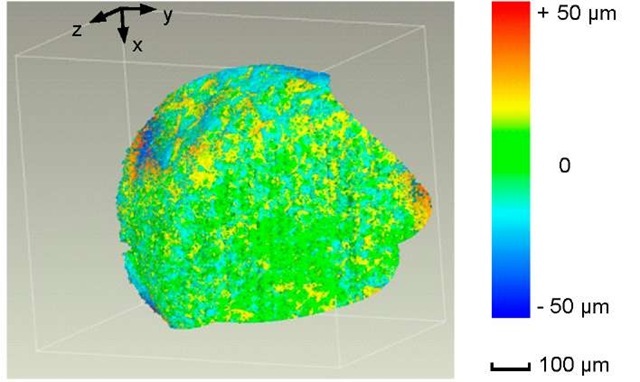

In order to compare these two shape maps in more detail, they have to be aligned iteratively by calculating the average error, while the microscopic measurement is fixed as the reference and the holographic one is shifted step by step as the test map. At the position with the minimal average error of ca. ±13 µm, we assume that the two maps are in best-fit alignment. Based on this, a complete 3D shape comparison is presented in Fig. 7. The deviation of the holographic measurement from the microscopic reference is displayed in a colored map. Here the maximal error is within ca. ±50 µm and appears mostly in the boundary areas due to the speckle noise.
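The alignment idea (keep the reference fixed, shift the test map step by step and keep the shift with the smallest average error) can be sketched as follows. This is a simplified illustration, not the alignment procedure used for Fig. 7: it assumes that both maps have already been resampled to a common grid, tries only integer pixel shifts exhaustively, uses cyclic shifts and compares only the interior region. The function name `best_shift` and the synthetic maps are hypothetical.

```python
import numpy as np


def best_shift(reference, test, max_shift):
    """Return (error, dy, dx) of the integer shift minimizing the mean absolute difference."""
    m = max_shift
    ref_core = reference[m:-m, m:-m]   # interior only, away from wrapped-around borders
    best_err, best_dy, best_dx = np.inf, 0, 0
    for dy in range(-m, m + 1):
        for dx in range(-m, m + 1):
            shifted = np.roll(test, shift=(dy, dx), axis=(0, 1))
            err = np.mean(np.abs(shifted[m:-m, m:-m] - ref_core))
            if err < best_err:
                best_err, best_dy, best_dx = err, dy, dx
    return best_err, best_dy, best_dx


# Synthetic example: the test map is the reference displaced by a known offset.
y, x = np.mgrid[0:48, 0:48]
reference = np.exp(-((x - 20) ** 2 + (y - 27) ** 2) / 60.0)
test_map = np.roll(reference, shift=(-3, 2), axis=(0, 1))

err, dy, dx = best_shift(reference, test_map, max_shift=5)
```

For measured data the minimum error does not reach zero, and the remaining difference at the best shift is the deviation map that is compared between the two methods.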

Fig. 7 The 3D comparison between the digital-holographic and the confocal-microscopic shape map displayed in a false color representation. The maximal error is within ca. ±50 µm, while the average error is within ca. ±13 µm

Conclusion

In this paper we investigated Digital Holography as a tool for inline quality control in a fast manufacturing process like micro deep drawing. As an example the 3D-shape of a micro bowl with a diameter of 1 mm has been measured, which in principle requires only a set of two recorded holograms. The evaluation of the data shows the strength of Digital Holography: in contrast to other techniques, especially in microscopic applications, the focused area of the measurement can be changed arbitrarily and numerically during the data evaluation by selecting the corresponding reconstruction plane of the measured wave field. The measurement has been compared to a measurement using confocal microscopy, which is a well established standard method for measuring the shape of micro parts. The deviation between the two methods was less than ±50 µm, though the resolution of the Digital Holography setup was not exactly adapted to the size of the object. Hence Digital Holography is well suited for the inline quality control of the micro parts. In the future the measurement speed can be increased even further by using a spatial light modulator for shifting the illumination direction automatically.

![Two micro deep drawing parts, scanning electron microscope (SEM) image [1]](http://what-when-how.com/wp-content/uploads/2011/07/tmp16165_thumb_thumb.jpg)

![Digital Holography: (a) basic holographic setup [7] and (b) the coordinate system [8]](http://what-when-how.com/wp-content/uploads/2011/07/tmp16167_thumb_thumb.jpg)

![Schematic describing the relation between the reconstructed phase φ_O^(j)(x,y) and the 3D shape of the object: P is a point on the surface of the object, which is associated with a point B on the reconstruction plane. The distance between the object and the light source should be large in comparison to the dimension of the object, so that the light incident on the point P can be regarded as a plane wave [4]](http://what-when-how.com/wp-content/uploads/2011/07/tmp16177_thumb_thumb.jpg)

![Sketch of the setup for digital holographic contouring by means of two illumination directions [6]](http://what-when-how.com/wp-content/uploads/2011/07/tmp16179_thumb_thumb.jpg)