The ISATAP deployment on the SBM is nearly identical to that of the HM. Both models deploy a redundant pair of switches that provide fault-tolerant termination of the ISATAP tunnels coming from hosts in the access layer. The only difference is that the SBM uses a new set of switches dedicated to terminating connections (ISATAP, configured tunnels, or dual-stack), while the HM uses the existing core layer switches for termination.

The following sections focus on the configuration of the interfaces on the service block switches (physical and logical) and the data center aggregation layer tunnel interfaces (shown only for completeness). The entire IPv4 network is the same as the one described in the HM configuration.

Also, the host configuration for the SBM is the same as the HM because the ISATAP router addresses are reused in this example. Similar to the HM configuration section, the loopback, tunnel, routing, and high-availability configurations are all presented.

Network Topology

To keep the diagrams simple to understand, the topology is separated into two parts: the ISATAP topology and the manually configured tunnel topology.

Figure 6-18 shows the ISATAP topology for the SBM. The topology focuses on the IPv4 addressing in the access layer (used by the host to establish the ISATAP tunnel), the IPv4 addressing in the service block (used as the termination point for the ISATAP tunnels), and the IPv6 addressing used in the service block for both the p2p link and the ISATAP tunnel prefix. ISATAP high availability is achieved by configuring loopback interfaces that share the same IPv4 address on both SBM switches. To maintain prefix consistency for the ISATAP hosts in the access layer, the same prefix is used on both the primary and backup ISATAP tunnels.
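Because both service block switches terminate ISATAP on the same loopback IPv4 address and use the same prefix, a host's ISATAP address stays the same when the tunnel fails over to the backup switch. The Python sketch below shows how an ISATAP address is formed from a /64 prefix and an IPv4 address (interface identifier 0:5efe:<IPv4>, per RFC 5214). The prefix and IPv4 address in the example are hypothetical, and the sketch uses the 0000:5EFE form typically seen with private IPv4 addresses; RFC 5214 sets the universal/local bit (0200:5EFE) when the embedded IPv4 address is globally unique.

```python
import ipaddress


def isatap_address(prefix: str, ipv4: str) -> ipaddress.IPv6Address:
    """Combine a /64 prefix with the ISATAP interface ID 0:5efe:<IPv4>."""
    net = ipaddress.IPv6Network(prefix)
    if net.prefixlen != 64:
        raise ValueError("ISATAP addresses are built from a /64 prefix")
    v4 = int(ipaddress.IPv4Address(ipv4))
    # Interface identifier: 0000:5efe in the upper 32 bits, IPv4 address in the lower 32
    interface_id = (0x00005EFE << 32) | v4
    return ipaddress.IPv6Address(int(net.network_address) | interface_id)


# Hypothetical host in the access layer using a hypothetical ISATAP prefix
print(isatap_address("2001:db8:cafe:2::/64", "10.120.4.100"))
# 2001:db8:cafe:2:0:5efe:a78:464
```

Since the result depends only on the prefix and the host's own IPv4 address, nothing in it changes when a different service block switch answers for the shared loopback address.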

Figure 6-18 SBM ISATAP Network Topology

Figure 6-19 shows the point-to-point tunnel addressing between the SBM and the data center aggregation layer switches. The p2p configuration is shown for completeness and serves as a reference for one way to connect the SBM to data center services. Alternatively, ISATAP, 6to4, or manually configured tunnels could be used to connect each server in the data center that provides IPv6 applications to the SBM. Dedicated IPv6 links could also be deployed from the data center aggregation/access layers to the SBM, bypassing non-IPv6-capable services in the aggregation layer. In short, there are many ways to achieve end-to-end connectivity between the hosts in the campus access layer and the services in the data center.

The topology diagram shows the loopback addresses on the service block switches (used as the tunnel source for configured tunnels) and the IPv6 addressing used on the manually configured tunnel interfaces.
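A manually configured tunnel carries each IPv6 packet inside an IPv4 packet whose protocol field is 41 (RFC 4213), with a service block loopback as the outer source and an aggregation switch loopback as the outer destination. The Python sketch below builds that outer header to make the encapsulation concrete. The loopback addresses are hypothetical, the TTL and identification values are arbitrary choices, and on the switches this work is performed by the forwarding hardware rather than by code like this.

```python
import ipaddress
import struct

IPPROTO_IPV6 = 41  # IPv4 protocol number for encapsulated IPv6


def ipv4_checksum(header: bytes) -> int:
    """Standard one's-complement checksum over 16-bit words."""
    if len(header) % 2:
        header += b"\x00"
    total = sum(struct.unpack(f"!{len(header) // 2}H", header))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def encapsulate(ipv6_packet: bytes, tunnel_src: str, tunnel_dst: str, ttl: int = 64) -> bytes:
    """Prepend a 20-byte IPv4 header (no options) carrying protocol 41."""
    header = struct.pack(
        "!BBHHHBBH4s4s",
        0x45,                      # version 4, IHL 5 (20 bytes)
        0,                         # DSCP/ECN left at 0 in this sketch
        20 + len(ipv6_packet),     # total length
        0,                         # identification
        0,                         # flags / fragment offset
        ttl,
        IPPROTO_IPV6,
        0,                         # checksum placeholder
        ipaddress.IPv4Address(tunnel_src).packed,
        ipaddress.IPv4Address(tunnel_dst).packed,
    )
    checksum = ipv4_checksum(header)
    header = header[:10] + struct.pack("!H", checksum) + header[12:]
    return header + ipv6_packet


# Hypothetical loopbacks: service block switch -> aggregation switch
inner = b"\x60" + bytes(39)  # minimal 40-byte IPv6 header, no payload
outer = encapsulate(inner, "10.122.10.1", "10.122.10.3")
print(len(outer), outer[9])  # 60 41
```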

Figure 6-19 SBM Manually Configured Tunnel Topology

Physical Configuration

The configurations for both service block switches are shown, including the core layer-facing interfaces. Configurations for the IPv4 portion of the previous topology are shown only for the service block switches. All other IPv4 configurations are based on existing campus design best practices and are not discussed in this section. Also, as was mentioned before, EIGRP for IPv6, OSPFv3, and other IPv6 routing protocols are fully supported. The following configurations use EIGRP for IPv4 and OSPFv3 for IPv6. EIGRP for both IPv4 and IPv6 was tested and is supported with no special considerations.

Example 6-39 shows the 6k-sb-1 IPv4 configuration to the core and also the dual-stack interface configuration to 6k-sb-2.

Example 6-39 IPv4-IPv6 Link Configurations for 6k-sb-1

Example 6-40 is the configuration for 6k-sb-2. The configuration is nearly identical with the exception of addressing.

Example 6-40 IPv4-IPv6 Link Configurations for 6k-sb-2

Tunnel Configuration

The tunnel and routing configuration for ISATAP is exactly the same as the HM. To avoid repeating information presented in previous sections, none of the configurations for the ISATAP tunneling and routing are explained, but the configuration is shown (see the HM example explanations).

The manually configured tunnel configurations are shown in Example 6-41 for the service block switch 6k-sb-1. The tunnel configurations for the data center aggregation switches (6k-agg-1/6k-agg-2) are identical to the service block except for address specifics.

Example 6-41 6k-sb-1 SBM Manual Tunnel Configuration

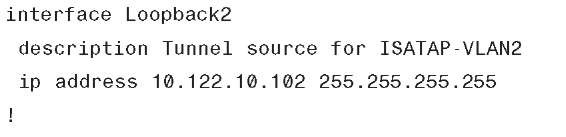

Example 6-42 shows the ISATAP configuration for 6k-sb-1. The configurations for 6k-sb-2 are not shown for brevity; the configurations are identical except for address values.

Example 6-42 6k-sb-1 SBM ISATAP Tunnel and Routing Configuration

QoS Configuration

The same QoS configurations and discussions from the "Implementing the Dual-Stack Model" section, earlier in this topic, apply to the SBM. Compared with the HM example configuration, the only change is the set of interfaces where the classification and marking policies are applied. In the SBM, the service policy is applied in the egress direction on the manually configured tunnels toward 6k-agg-1 and 6k-agg-2.
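Classification and marking policies commonly match on, or set, the DSCP value of IPv6 packets. As a reminder of where that value lives, the Python sketch below extracts the DSCP from the 8-bit Traffic Class field of an IPv6 header. It is illustrative only and does not reproduce the policy or class names used in Example 6-43.

```python
import struct


def ipv6_dscp(packet: bytes) -> int:
    """Return the DSCP (upper 6 bits of Traffic Class) of an IPv6 packet."""
    if len(packet) < 40 or packet[0] >> 4 != 6:
        raise ValueError("not an IPv6 header")
    (first_word,) = struct.unpack("!I", packet[:4])
    # First 32 bits: version (4) | traffic class (8) | flow label (20)
    traffic_class = (first_word >> 20) & 0xFF
    return traffic_class >> 2  # drop the 2 ECN bits


# Header marked EF (DSCP 46): traffic class 0xB8
ef_header = struct.pack("!I", (6 << 28) | (0xB8 << 20)) + bytes(36)
print(ipv6_dscp(ef_header))  # 46
```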

Example 6-43 shows that for 6k-sb-1, the service policy would be applied to Tunnel0 and Tunnel1.

Example 6-43 6k-sb-1 Apply QoS Policy Egress on Tunnel

The security considerations and configurations discussed in the DSM and HM sections apply directly to the SBM.

Summary

This topic analyzes various architectures for providing IPv6 services in campus networks. The DSM should be the goal of any campus deployment because no tunneling is performed. The HM is useful because it allows the existing campus infrastructure to provide IPv6 access for endpoints in the campus access layer through ISATAP tunnels. The SBM is a great interim design to provide end-to-end IPv6 between the campus access layer hosts and IPv6-enabled applications on the Internet or data center without touching the existing campus hardware.

The models discussed are certainly not the only ways to deploy IPv6 in this environment, but they provide options that can be leveraged based on environment, deployment schedule, and targeted services specifics.

Table 6-7 summarizes the benefits and challenges with each of the models discussed in this document.

Table 6-7 Benefits and Challenges of Various Models

| Model | Benefits | Challenges |
|---|---|---|
| Dual-stack model (DSM) | No tunneling required; no dependency on IPv4; superior performance and highest availability for IPv6 unicast and multicast; scalable | Requires IPv6 hardware-enabled campus switching equipment; operational challenges of supporting dual protocols (training, management tools) |
| Hybrid model (HM) | Most of the existing IPv4-only campus equipment (access and distribution layers) can be used; per-user or per-application control for IPv6 service delivery; high availability for IPv6 access over ISATAP tunnels | Tunneling is required, increasing operations and management effort; scale factors (number of tunnels, hosts per tunnel); IPv6 multicast is not supported; tunnel termination at the core |
| Service block model (SBM) | Greatly reduced time to delivery for IPv6-enabled services; requires no changes to existing campus infrastructure; other advantages similar to the HM | Requires new IPv6 hardware-capable campus switches; all challenges of the HM |
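One way to read Table 6-7 is as a rough decision rule. The hypothetical Python helper below encodes the table's main constraints: the DSM needs IPv6-capable hardware throughout the campus and is the only model here that supports IPv6 multicast; the HM terminates ISATAP tunnels on the existing core (assumed here to require IPv6-capable core switches); and the SBM adds new dedicated switches. The function and parameter names are assumptions, and real deployments weigh more factors (schedule, targeted services) than these four flags.

```python
from typing import Optional


def suggest_model(
    all_layers_ipv6_hw: bool,     # access, distribution, and core support IPv6 in hardware
    core_ipv6_hw: bool,           # core switches can terminate ISATAP tunnels
    can_add_service_block: bool,  # budget/space for new dedicated switches
    needs_ipv6_multicast: bool,
) -> Optional[str]:
    """Heuristic reading of Table 6-7; not a substitute for a design review."""
    if all_layers_ipv6_hw:
        return "DSM"  # no tunneling, unicast and multicast
    if needs_ipv6_multicast:
        # HM and SBM rely on ISATAP, which does not carry IPv6 multicast,
        # so only the DSM fits (implies a hardware upgrade)
        return "DSM"
    if core_ipv6_hw:
        return "HM"   # reuse access/distribution, terminate at the core
    if can_add_service_block:
        return "SBM"  # leave existing campus untouched
    return None       # none of the three models fits as-is


print(suggest_model(False, False, True, False))  # SBM
```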