INTRODUCTION

A synthetic human face is useful for visualizing information related to the human face. Applications include visual telecommunication (Aizawa & Huang, 1995), virtual environments and synthetic agents (Pandzic, Ostermann, & Millen, 1999), and computer-aided education.

One of the key issues in 3D face analysis (tracking) and synthesis (animation) is modeling facial deformation. Facial deformation is complex and often includes subtle expressional variations to which people are very sensitive. Therefore, traditional models usually require extensive manual adjustment. Recently, advances in motion-capture techniques have sparked data-driven methods (e.g., Guenter et al., 1998), which achieve realistic animation by using real face-motion data to drive 3D face animation. However, basic data-driven methods are inherently cumbersome because they require a large amount of data.

More recently, machine learning techniques have been used to learn compact and flexible face-deformation models from motion-capture data. The learned models have been shown to be useful for realistic face-motion synthesis and efficient face-motion analysis. A unified framework for facial deformation analysis and synthesis is needed to address two problems systematically: (a) how to learn a compact 3D face-deformation model from data, and (b) how to use the model for robust facial-motion analysis and flexible animation.

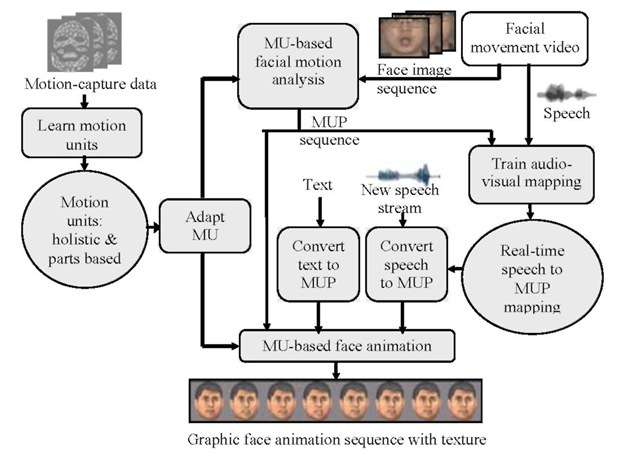

In this article, we present a unified machine-learning-based framework for facial deformation modeling and for facial motion analysis and synthesis. The framework is illustrated in Figure 1. In this framework, we first learn a compact set of motion units (MUs) from extensive 3D facial motion-capture data; MUs serve as the quantitative visual representation of facial deformation. Arbitrary facial deformation can then be approximated by a linear combination of MUs, weighted by coefficients called motion unit parameters (MUPs). Using interpolation, the MUs can be adapted to face models with new geometry and topology. The MU representation is used in both robust facial motion analysis and efficient synthesis. We also use MUs to learn the correlation between speech and facial motion: a real-time audio-to-visual mapping is learned using an artificial neural network (ANN). Experimental results show that our framework achieves natural face animation and robust nonrigid tracking.

Figure 1. Machine-learning-based framework for facial deformation modeling, and facial motion analysis and synthesis

BACKGROUND

Facial Deformation Modeling

Representative 3D spatial facial deformation models include free-form interpolation models (e.g., affine functions, splines, and radial basis functions), parameterized models (Parke, 1974), physics-based models (Waters, 1987), and, more recently, machine-learning-based models. Because natural motion is highly complex, these models usually need extensive manual adjustment to achieve plausible results. To approximate the space of facial deformation with simpler units, Tao (1998) proposed describing arbitrary facial deformation as a combination of action units (AUs) based on the Facial Action Coding System (FACS; Ekman & Friesen, 1977). Because AUs are defined only qualitatively, they usually must be customized manually for computation. Recently, researchers have turned to machine learning techniques to learn models from data (Hong, Wen, & Huang, 2002; Kshirsagar, Molet, & Thalmann, 2001; Reveret & Essa, 2001).

Facial Motion Analysis

Human facial motion analysis is a key component of many applications, such as model-based very-low-bit-rate video coding, audiovisual speech recognition, and expression recognition. High-level knowledge of facial deformation must be used to constrain the possible deformed facial shapes. For 3D facial motion tracking, researchers have used various 3D deformable model spaces, such as the 3D parametric model (DeCarlo, 1998), B-spline surfaces (Eisert, Wiegand, & Girod, 2000), and FACS-based models (Tao, 1998). These models, however, are usually defined manually and thus may not capture the characteristics of real facial motion well. Therefore, researchers have recently proposed using subspace models trained from real motion data (Reveret & Essa, 2001).

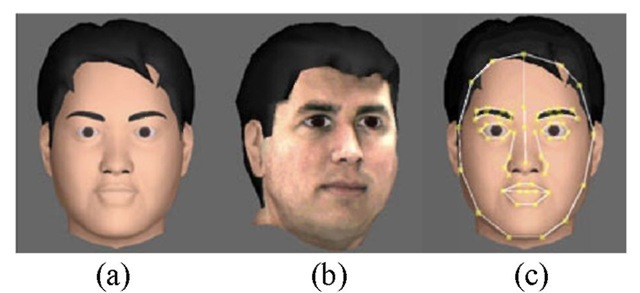

Figure 2. (a) Generic model in iFACE; (b) Personalized face model based on the Cyberware scanner data; (c) Feature points defined on a generic model for MU adaptation

Facial Motion Synthesis

In this article, we focus on real-time speech-driven face animation, whose core issue is the audio-to-visual mapping. HMM-based methods (Brand, 1999) use long-term contextual information to generate smooth motion trajectories, but they can only be used in off-line scenarios. For real-time mapping, researchers have proposed various methods such as vector quantization (VQ; Morishima & Harashima, 1991), the Gaussian mixture model (GMM), and ANNs (Morishima & Harashima). To use short-time contextual information, researchers have concatenated audio features over a short time window (Massaro et al., 1999) or used a time-delay neural network (TDNN; Lavagetto, 1995).

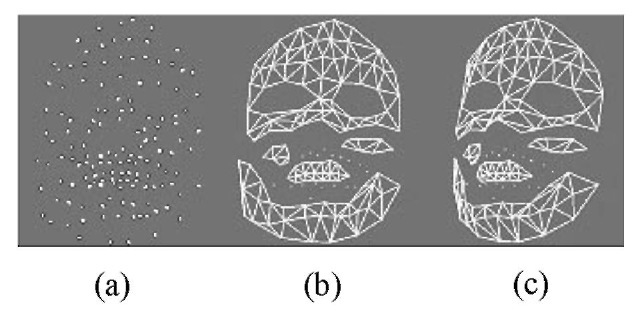

Figure 3. Markers: (a) the markers are shown as white dots; (b and c) the mesh is shown from two different viewpoints.

Figure 4. Neutral and deformed faces corresponding to the first four MUs. The top row is a frontal view and the bottom row is a side view.

LEARNING 3D FACE-DEFORMATION MODEL

In this section, we introduce our facial deformation models. We use iFACE (Hong et al., 2002) for MU-based animation; iFACE is illustrated in Figure 2.

The Motion-Capture Database

We use motion-capture data from Guenter et al. (1998). 3D facial motion is captured at the positions of the 153 markers on the participant’s face. Figure 3 shows an example of the markers. For better visualization, a mesh is built on those markers (Figure 3b and c).

Learning Holistic Linear Subspace

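We seek optimal linear bases, the MUs, whose linear combinations can approximate arbitrary facial deformation. Using MUs, a facial shape s can be represented by

$$\mathbf{s} = \mathbf{s}_0 + \mathbf{m}_0 + \sum_{i=1}^{M} c_i \mathbf{m}_i \qquad (1)$$

where $\mathbf{s}_0$ is the neutral (undeformed) facial shape, $\mathbf{m}_0$ is the mean facial deformation, $\{\mathbf{m}_1, \ldots, \mathbf{m}_M\}$ is the MU set, and $\{c_1, \ldots, c_M\}$ are the MUPs.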

Principal component analysis (PCA) is applied to learning MUs from the facial deformation of the database. The mean facial deformation and the first seven eigenvectors are selected as the MUs, which capture 93.2% of the facial deformation variance. The first four MUs are visualized by an animated face model in Figure 4.
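The PCA-based MU learning and the linear shape model can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' code: it assumes each frame of the database is flattened into one row of 3 × 153 = 459 marker displacements from the neutral face, and that the shape model takes the form s = s0 + m0 + Σ c_i m_i with orthonormal eigenvectors m_i. The function names `learn_mus`, `project_to_mups`, and `synthesize_shape` are hypothetical.

```python
import numpy as np

def learn_mus(deformations, num_mus=7):
    """Learn holistic MUs from a (frames, dims) matrix of facial deformations.

    Each row is the displacement of all markers from the neutral face in one frame
    (an assumed data layout). Returns the mean deformation m0, the MU matrix
    (num_mus, dims) with orthonormal rows, and the fraction of variance captured.
    """
    m0 = deformations.mean(axis=0)
    centered = deformations - m0
    # Rows of vt are the covariance eigenvectors, sorted by decreasing singular value.
    _, sing, vt = np.linalg.svd(centered, full_matrices=False)
    variance = sing ** 2
    captured = variance[:num_mus].sum() / variance.sum()
    return m0, vt[:num_mus], captured

def project_to_mups(deformation, m0, mus):
    """Least-squares MUPs for one deformation; exact because the MU rows are orthonormal."""
    return mus @ (deformation - m0)

def synthesize_shape(s0, m0, mus, mups):
    """Linear MU model: s = s0 + m0 + sum_i c_i * m_i."""
    return s0 + m0 + mups @ mus
```

Because the PCA eigenvectors are orthonormal, projecting a mean-removed deformation onto them yields the least-squares MUPs directly, with no matrix inversion.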

Learning Parts-Based Linear Subspace

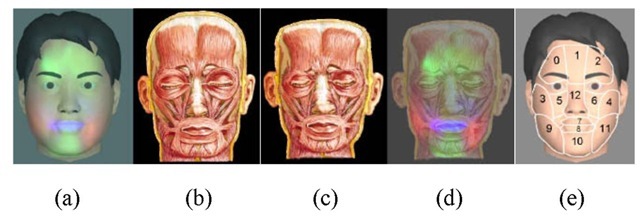

Because facial motion is localized, complex facial motion can be decomposed into parts. The decomposition helps reduce the complexity of deformation modeling and improves analysis robustness and synthesis flexibility. The decomposition can be done manually using prior knowledge of facial muscles (Tao, 1998). However, a manual decomposition may not be optimal for the linear model used because of the high nonlinearity of facial motion. Parts-based learning techniques, such as nonnegative matrix factorization (NMF; Lee & Seung, 1999), offer a data-driven way to design parts-based facial deformation models. We present a parts-based face-deformation model in which each part corresponds to a facial region where facial motion is mostly generated by local muscles. The part decomposition is done using NMF. Next, the motion of each part is modeled by PCA. Then, the overall facial deformation is approximated by summing the deformations of all parts. Figure 5 shows the parts-decomposition results.
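A rough sketch of the two-stage decomposition (NMF to find parts, then PCA within each part) follows. It assumes, hypothetically, that NMF is applied to the nonnegative matrix of per-marker displacement magnitudes (markers × frames) and that each marker is assigned to the NMF basis with the largest weight; it omits the alignment with the facial muscle distribution shown in Figure 5. The NMF routine uses the Lee and Seung multiplicative updates for the squared Frobenius error. `nmf` and `decompose_parts` are hypothetical names.

```python
import numpy as np

def nmf(v, rank, iters=500, seed=0, eps=1e-9):
    """Lee & Seung multiplicative updates minimizing ||V - W H||_F^2 for V >= 0."""
    rng = np.random.default_rng(seed)
    n, m = v.shape
    w = rng.random((n, rank)) + eps
    h = rng.random((rank, m)) + eps
    for _ in range(iters):
        # Multiplicative updates keep W and H nonnegative when V is nonnegative.
        h *= (w.T @ v) / (w.T @ w @ h + eps)
        w *= (v @ h.T) / (w @ h @ h.T + eps)
    return w, h

def decompose_parts(deformations, num_parts, mus_per_part=3):
    """Split markers into parts with NMF, then run PCA inside each part.

    deformations: (frames, markers, 3) displacements from the neutral face.
    Returns a list of (marker_indices, part_mean, part_mus) tuples.
    """
    # Assumption: NMF input is the per-marker displacement magnitude over time.
    magnitudes = np.linalg.norm(deformations, axis=2).T  # (markers, frames), >= 0
    w, _ = nmf(magnitudes, num_parts)
    labels = w.argmax(axis=1)  # each marker joins its dominant NMF basis
    parts = []
    for p in range(num_parts):
        idx = np.flatnonzero(labels == p)
        if idx.size == 0:
            continue  # an NMF basis may end up owning no marker
        x = deformations[:, idx, :].reshape(len(deformations), -1)
        mean = x.mean(axis=0)
        _, _, vt = np.linalg.svd(x - mean, full_matrices=False)
        parts.append((idx, mean, vt[:mus_per_part]))
    return parts
```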

Figure 5. (a) NMF learned parts overlaid on the generic face model; (b) the facial muscle distribution; (c) the aligned facial muscle distribution; (d) the parts overlaid on muscle distribution; (e) the final parts decomposition.

Figure 6. Three lower lip shapes deformed by three of the lower lip parts-based MUs respectively. The top row is the frontal view and the bottom row is the side view.

The learned parts-based MUs give more flexibility in local facial deformation analysis and synthesis. Figure 6 shows local deformations of the lower lip, each induced by one of the learned parts-based MUs. These locally deformed shapes are difficult to approximate using holistic MUs: for each local deformation shown in Figure 6, more than 100 holistic MUs are needed to achieve 90% reconstruction accuracy. In face animation, people often want to animate a local region separately, which can easily be achieved by adjusting the MUPs of the corresponding parts-based MUs. In face tracking, parts-based MUs can be used to track only the regions of interest.
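Summing per-part deformations, and editing only one part's MUPs to animate a local region, can be sketched as follows. The part representation (marker indices, a per-part mean deformation, and per-part MUs stored as rows) is an assumption made for illustration, and `synthesize_from_parts` is a hypothetical name.

```python
import numpy as np

def synthesize_from_parts(num_markers, parts, part_mups):
    """Sum per-part deformations into a (num_markers, 3) displacement field.

    parts: list of (marker_indices, part_mean, part_mus) tuples (assumed layout),
           where part_mus has shape (num_part_mus, 3 * len(marker_indices)).
    part_mups: list of MUP vectors, one per part.
    """
    total = np.zeros((num_markers, 3))
    for (idx, mean, mus), c in zip(parts, part_mups):
        local = (mean + c @ mus).reshape(len(idx), 3)
        total[idx] += local  # overlapping parts would simply add up
    return total
```

Changing only one entry of `part_mups` (for example, a lower-lip part) leaves every marker outside that part untouched, which is the local control described above.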

MU Adaptation

The learned MUs are based on the motion-capture data of particular subjects, so they need to be adapted to face models with new geometry and topology. We call this process MU adaptation. Its first step fits the MUs to a face model with different geometry by moving the markers of the learned MUs to their corresponding positions on the new face. We build the correspondence of the facial feature points shown in Figure 2c interactively through a graphical user interface (GUI); warping is then used to interpolate the remaining correspondences. The second step derives the movements of facial surface points that are not sampled by markers in the MUs. This can be done using radial basis functions (RBFs), similarly to Marschner, Guenter, and Raghupathy (2000).
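The second adaptation step can be viewed as scattered-data interpolation. This minimal NumPy sketch interpolates marker displacements on the adapted face to arbitrary mesh vertices with Gaussian radial basis functions; the Gaussian kernel, its width `sigma`, and the small ridge term `reg` are assumptions, the formulation used by Marschner et al. (2000) may differ, and `rbf_fit`/`rbf_eval` are hypothetical names.

```python
import numpy as np

def _gaussian_kernel(a, b, sigma):
    """Gaussian RBF matrix between point sets a (n, 3) and b (m, 3)."""
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=2)
    return np.exp(-d2 / (2.0 * sigma ** 2))

def rbf_fit(centers, values, sigma=1.0, reg=1e-8):
    """Weights so the interpolant reproduces `values` (n, 3) at marker `centers` (n, 3)."""
    phi = _gaussian_kernel(centers, centers, sigma)
    # Small ridge term (assumed) keeps the linear system well conditioned.
    return np.linalg.solve(phi + reg * np.eye(len(centers)), values)

def rbf_eval(centers, weights, points, sigma=1.0):
    """Interpolated displacement at arbitrary mesh vertices `points` (m, 3)."""
    return _gaussian_kernel(points, centers, sigma) @ weights
```

Fitting once per MU and evaluating at every vertex of the new face model would turn a marker-based MU into a dense per-vertex MU.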

MODEL-BASED FACIAL MOTION ANALYSIS

Existing 3D nonrigid face-tracking algorithms that use a 3D facial deformation model use the subspace spanned by AUs to constrain low-level image motion. However, the AUs are usually designed manually, and our automatically learned MUs can be used in their place. We use the learned MUs in the 3D nonrigid face-tracking system of Tao (1998) because it has been shown to be robust and to run in real time; details of its facial motion estimation can be found in Tao. In the original system, AUs are manually designed using Bezier volumes and represented by the displacements of the vertices of a face-surface mesh. We derive AUs from the learned holistic MUs using the MU adaptation process. The system runs on a 2.2-GHz Pentium 4 processor and performs nonrigid tracking at 14 Hz for 640 × 480 images. The estimated motion can be used directly to animate face models. Figure 7 shows some typical tracked frames, along with the animated face model that visualizes the results.
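How a learned subspace constrains motion estimation can be illustrated with a simplified regularized least-squares sketch. Unlike Tao's (1998) system, which estimates motion from image optical flow under perspective projection, this sketch assumes that noisy 3D displacements are observed directly at a subset of mesh coordinates; `estimate_mups` and its `ridge` parameter are hypothetical.

```python
import numpy as np

def estimate_mups(observed, mus, m0, observed_idx, ridge=1e-3):
    """Estimate MUPs from displacements observed at a subset of coordinates.

    observed: (k,) measured displacements at coordinate indices observed_idx.
    mus: (M, D) MU matrix; m0: (D,) mean deformation.
    Solves min_c ||A c - b||^2 + ridge * ||c||^2 (assumed simplified objective).
    """
    a = mus[:, observed_idx].T       # (k, M): MU basis restricted to observed coordinates
    b = observed - m0[observed_idx]  # remove the mean deformation
    lhs = a.T @ a + ridge * np.eye(mus.shape[0])
    return np.linalg.solve(lhs, a.T @ b)
```

Once the MUPs are estimated, m0 + Σ c_i m_i also predicts the deformation at coordinates that were never observed, which is the sense in which the subspace constrains low-level motion.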

Figure 7. Typical tracked frames and corresponding animated face models. (a) The input frames; (b) the tracking results visualized by yellow mesh; (c) the front views of the synthetic face animated using tracking results; (d) the side views of the synthetic face. In each row, the first image corresponds to a neutral face.

Figure 8. (a) The synthesized face motion; (b) the reconstructed video frame with synthesized face motion; (c) the reconstructed video frame using the H.26L codec.

The tracking algorithm can be used in model-based face video coding (Tu et al., 2003). We track and encode the face area using model-based coding, and encode the residual in the face area and the background using the traditional waveform-based coding method H.26L. This hybrid approach improves the robustness of the model-based method at the expense of an increased bit rate. Eisert et al. (2000) proposed a similar hybrid coding technique using a different model-based tracker. We capture and code 352 × 240 videos at 30 Hz. At the same low bit rate (18 kbit/s), we compare this hybrid coding with the H.26L JM 4.2 reference software. Figure 8 shows three snapshots of a video. Our hybrid coding achieves a 2 dB higher PSNR around the facial area and much higher visual quality. Our tracking system could also be used in audiovisual speech recognition, emotion recognition, and medical applications related to facial motion disorders such as facial paralysis.
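For reference, PSNR for 8-bit video is 10 log10(255² / MSE) dB. The sketch below computes it over an optional boolean mask, such as a face region; the mask and the function name `psnr` are illustrative assumptions, not part of the coding system described above.

```python
import numpy as np

def psnr(reference, decoded, mask=None, peak=255.0):
    """PSNR in dB between two 8-bit frames, optionally restricted to a boolean mask."""
    # Convert to float first so uint8 subtraction cannot wrap around.
    ref = reference.astype(np.float64)
    dec = decoded.astype(np.float64)
    if mask is not None:
        ref, dec = ref[mask], dec[mask]
    mse = np.mean((ref - dec) ** 2)
    if mse == 0:
        return float("inf")
    return 10.0 * np.log10(peak ** 2 / mse)
```

Because PSNR is logarithmic in MSE, a 2 dB gain corresponds to reducing the MSE by a factor of 10^0.2 ≈ 1.58, that is, roughly 37% lower squared error.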

REAL-TIME SPEECH-DRIVEN 3D FACE ANIMATION

We use the same facial motion-capture database that was used for learning MUs, along with its audio track, to learn the audio-to-visual mapping. For each 33-ms window, we calculate the holistic MUPs as the visual features and 12 Mel-frequency cepstral coefficients (MFCCs; Rabiner & Juang, 1993) as the audio features. To include contextual information, the audio feature vectors of frames t-3, t-2, t-1, t, t+1, t+2, and t+3 are concatenated as the final audio feature vector of frame t.
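The context window is a simple stacking operation. This NumPy sketch assumes the MFCCs have already been computed as a (frames × 12) array; the article does not say how edge frames are handled, so repeating the first and last frames is an assumption. The result has 12 × 7 = 84 dimensions per frame. `stack_context` is a hypothetical name.

```python
import numpy as np

def stack_context(mfcc, left=3, right=3):
    """Concatenate each frame's MFCCs with those of frames t-left .. t+right.

    mfcc: (T, 12) per-frame MFCCs, one row per 33-ms window.
    Edge handling (repeating the first/last frame) is an assumption.
    Returns a (T, 12 * (left + right + 1)) array ordered t-left, ..., t+right.
    """
    t = mfcc.shape[0]
    padded = np.concatenate([
        np.repeat(mfcc[:1], left, axis=0),
        mfcc,
        np.repeat(mfcc[-1:], right, axis=0),
    ])
    # Slice k holds frame t + k - left for every output row t.
    return np.hstack([padded[k:k + t] for k in range(left + right + 1)])
```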

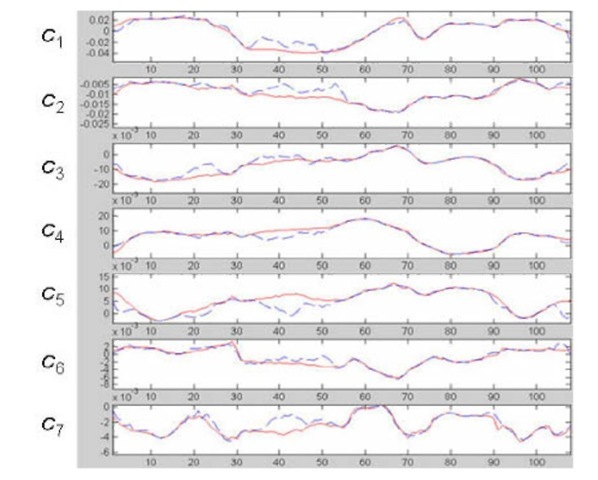

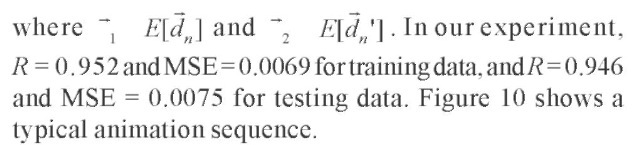

The training audiovisual data are divided into 21 groups: one group is for silence, and the remaining groups are generated using the k-means algorithm. The audio features of each group are then modeled by a Gaussian model, and a three-layer perceptron is trained for each group to map the audio features to the visual features. For estimation, we first classify an audio vector into one of the audio feature groups using the GMM. We then select the corresponding neural network to map the audio feature to MUPs, which can be used in Equation (1) to synthesize the facial shape. For each group, 80% of the data is randomly selected for training and 20% for testing. A typical estimation result is shown in Figure 9, where the horizontal axes represent time and the vertical axes represent the magnitude of the MUPs. The solid red trajectories are the original MUPs, and the dashed blue trajectories are the estimation results.
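The estimation step (classify the audio vector, then route it to a group-specific network) can be sketched as below. The sketch assumes diagonal-covariance Gaussians, tanh hidden units, a linear output layer, and networks that have already been trained; the training stages (k-means grouping and perceptron training) are omitted, and `GroupedAudioToVisual` is a hypothetical class.

```python
import numpy as np

class GroupedAudioToVisual:
    """Route an audio feature vector to one group, then apply that group's perceptron.

    Assumptions: each group has a diagonal-covariance Gaussian over audio features
    and a three-layer perceptron (w1, b1, w2, b2) with tanh hidden units and a
    linear output layer producing MUPs.
    """

    def __init__(self, means, variances, priors, mlps):
        self.means = np.asarray(means, dtype=float)          # (G, D)
        self.variances = np.asarray(variances, dtype=float)  # (G, D)
        self.log_priors = np.log(np.asarray(priors, dtype=float))
        self.mlps = mlps                                     # list of (w1, b1, w2, b2)

    def classify(self, x):
        # Diagonal Gaussian log-likelihood of x under each group, plus log prior.
        ll = -0.5 * np.sum(np.log(2.0 * np.pi * self.variances)
                           + (x - self.means) ** 2 / self.variances, axis=1)
        return int(np.argmax(ll + self.log_priors))

    def predict(self, x):
        w1, b1, w2, b2 = self.mlps[self.classify(x)]
        hidden = np.tanh(x @ w1 + b1)
        return hidden @ w2 + b2  # estimated MUPs for this frame
```

Only one small network is evaluated per frame, which keeps the per-frame cost low.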

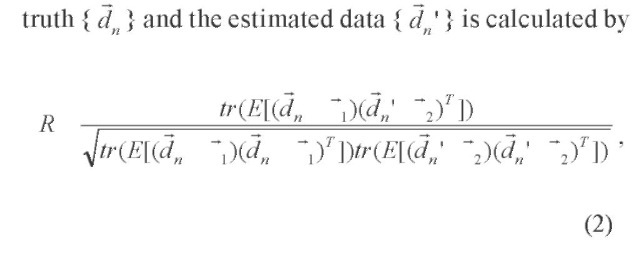

We reconstruct the facial deformation from the estimated MUPs. For both the ground truth and the estimated results, we divide the deformation of each marker by its maximum absolute displacement in the ground-truth data. To evaluate performance, we calculate the Pearson product-moment correlation coefficient (R) and the mean square error (MSE) on the normalized deformations. The Pearson product-moment correlation (-1.0 ≤ R ≤ 1.0) measures how well two signal sequences match globally; values closer to 1.0 indicate a better match. The coefficient R between the ground

Figure 9. Comparison of the estimated MUPs with the original MUPs. The content of the corresponding speech track is “A bird flew on lighthearted wing.”
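The normalization and the two evaluation metrics can be sketched as follows. The sketch assumes deformations stored as (frames, markers, 3) arrays, takes each marker's maximum absolute displacement to be the largest Euclidean norm of its ground-truth displacement, and averages R over the individual coordinate trajectories; these aggregation choices are assumptions, and the function names are hypothetical.

```python
import numpy as np

def normalize_by_ground_truth(truth, estimate):
    """Divide each marker's deformation by its max ground-truth displacement norm.

    truth, estimate: (frames, markers, 3) arrays.
    """
    scale = np.linalg.norm(truth, axis=2).max(axis=0)  # (markers,)
    scale[scale == 0] = 1.0  # markers that never move are left unscaled
    return truth / scale[None, :, None], estimate / scale[None, :, None]

def pearson_r(a, b):
    """Pearson product-moment correlation of two 1D sequences."""
    a = a - a.mean()
    b = b - b.mean()
    return float((a @ b) / np.sqrt((a @ a) * (b @ b)))

def evaluate(truth, estimate):
    """Return (mean R over coordinate trajectories, MSE) on normalized deformations."""
    t, e = normalize_by_ground_truth(truth, estimate)
    t = t.reshape(t.shape[0], -1)
    e = e.reshape(e.shape[0], -1)
    r = float(np.mean([pearson_r(t[:, k], e[:, k]) for k in range(t.shape[1])]))
    mse = float(np.mean((t - e) ** 2))
    return r, mse
```

Because R is invariant to scaling, a prediction with correct timing but wrong amplitude can still score R close to 1.0; the MSE complements it by penalizing amplitude errors.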

Our real-time speech-driven animation can be used in real-time two-way communication scenarios such as videophones, whereas existing off-line speech-driven animation is suited to one-way communication scenarios such as broadcasting. Our approach maps both vowels and consonants, so it is more accurate than real-time approaches that map only vowels. Compared with real-time approaches that use a single neural network for all audio features, our local ANN mapping (i.e., one neural network for each audio feature group) is more efficient because each ANN is much simpler.

Human Emotion Perception Study

The synthetic talking face can be evaluated by comparing its influence on human emotion perception with that of a real face. We choose three facial expressions, (a) neutral, (b) smile, and (c) sad, and three audio tracks, (a) “It is normal,” (b) “It is good,” and (c) “It is bad.” The information associated with the sentences is (a) neutral, (b) positive, and (c) negative. We capture video and generate animation for all nine combinations of expressions and sentences. Sixteen untrained people participated in the experiments.

Figure 10. Typical animation frames. Temporal order is from left to right, top to bottom.

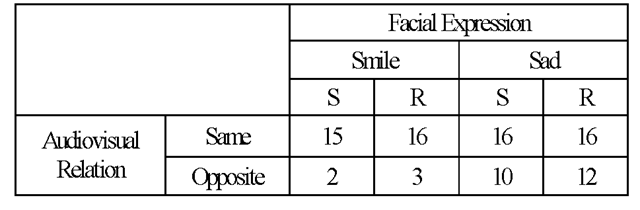

Table 1. Emotion inference based on visual-only stimuli. The S column is the synthetic face, the R column the real face.

The first experiment investigates human emotion perception based on visual-only stimuli. The subjects are asked to infer the emotional states from the animation sequences without audio. Table 1 shows the emotion inference results in terms of the number of subjects. As shown, the effectiveness of the synthetic talking face is comparable with that of the real face.

The second and third experiments compare the influence of the synthetic face on bimodal human emotion perception with that of the real face. In the second experiment, the participants are asked to infer the emotion from the synthetic talking-face animation with audio. The third experiment is the same except that the participants observe the real face instead. In each experiment, the audiovisual stimuli are presented in two groups. In the first group, the audio content and the visual information convey the same kind of information (e.g., positive text with a smile expression). In the second group, they convey opposite information. The results are combined in Table 2. If the audio content and the facial expression convey the same kind of information, human perception is enhanced; otherwise, the participants are confused. An example is shown in the fifth and sixth columns of Table 2, where the audio content is positive while the facial expression is sad. Ten participants report “sad” for the synthetic face with the sad expression; the number increases to 12 when the real face is used. Overall, the experiments show that the effectiveness of the synthetic face is comparable with, though slightly weaker than, that of the real face.

Table 2. Emotion inference results agreed with facial expressions. The inference is based on both audio and visual stimuli. The S column is the synthetic face, the R column the real face.

FUTURE TRENDS

Future research directions include investigating systematic ways of adapting learned models for new people, and capturing appearance variations in motion-capture data for subtle yet perceptually important facial deformation.

CONCLUSION

This article presents a unified framework for learning compact facial deformation models from data, and applying the models to facial motion analysis and synthesis. This framework uses a 3D facial motion-capture database to learn compact holistic and parts-based facial deformation models called MUs. The MUs are used to approximate arbitrary facial deformation. The learned models are used in 3D facial motion analysis and real-time speech-driven face animation. The experiments demonstrate that robust nonrigid face tracking and flexible, natural face animation can be achieved based on the learned models.

KEY TERMS

Artificial Neural Networks: A type of machine learning paradigm that simulates the densely interconnected, parallel structure of the mammalian brain. They have been shown to be powerful tools for function approximation.

Facial Deformation Model: Model that explains the nonrigid motions of human faces. The nonrigid motions are usually caused by speech and facial expressions.

Facial Motion Analysis: Procedure of estimating facial motion parameters. It can also be called “face tracking.” It can be used to extract human face motion information from video, which is useful input for intelligent video surveillance and human-computer interaction.

Facial Motion Synthesis: Procedure of creating synthetic face animations. Examples include text-driven face animation and speech-driven face animation. It can be used as an avatar-based visual interface for human-computer interaction.

Machine Learning Techniques: Techniques that can automatically improve computational models based on experience.

Motion Capture: Techniques that measure complex motions. One type of motion-capture technique places markers on the target object and tracks the motions of these markers.

Motion Unit Model: Facial deformation model we propose. It is based on measurement of real facial motions. Motion units (MUs) are a set of vectors whose linear combinations can be used to approximate arbitrary facial shapes.