INTRODUCTION

An optical character recognition (OCR) system enables a user to feed an article directly into an electronic computer file and translate the optically scanned bitmaps of text characters into machine-readable codes; that is, ASCII, Chinese GB, as well as Big5 codes, and then edits it by using a word processor. OCR is hence being employed by libraries to digitize and preserve their holdings. Billions of letters are sorted every day by OCR machines, which can considerably speed up mail delivery.

The techniques of OCR can be divided into two approaches: template matching and structure analysis (Mori, Suen & Yamamoto, 1992). The template matching approach is to reduce the complexity of matching by projecting from two-dimensional information onto one; the structure analysis approach is to analyze the variation of shapes of characters. The template matching approach is only suitable for recognizing printed characters; however, the structure analysis approach can be applied to recognize handwritten characters.

Several OCR techniques have been proposed, based on statistical, matching, transform and shape features (Abdelazim & Hashish, 1989; Papamarkos, Spilioties & Zoumadakis, 1994). Recently, integrated OCR systems have been proposed, and they take advantage of specific character-driven hardware implementations (Pereira & Bourbakis, 1995). OCR generally involves four discrete processes (Khoubyari & Hull, 1996; Liu, Tang & Suen, 1997; Wang, Fan & Wu, 1997):

1. separate the text and the image blocks; then finds columns, paragraphs, text lines, words, and characters;

2. extract the features of characters, and compare their features with a set of rules that can distinguish each character/font from others;

3. correct the incorrect words by using spell checking tools; and

4. translate each symbol into a machine-readable code.

The duplicate document image retrieval (DDIR) system transforms document formatted data into document images, then stores these images and their corresponding features in a database for the purpose of data backup. The document images are called duplicate document images. When retrieving a duplicate document image from the database, users input the first several text lines of the original document into the system to create a query document image. Then the system figures out the features of the image, and transmits to the users the duplicate document image whose image features are similar to those of the query document image (Nagy & Xu, 1997).

Some approaches have been proposed for the DDIR system. Doermann, Li, and Kia (1997) classified and encoded character types according to the condition that four base lines cross each text line, and uses the codes as the feature of the document image. Caprari (2000) extracted a small region from one document, assigned this region to the template (signature generation), and then scanned this template over a search area in another document. If the template also appears in the second document (signature matching), the two documents are classified as duplicates. Angelina, Yasser, and Essam (2000) transformed a scanned form into a frameset composed of a number of cells. The maximal grid encompassing all of the horizontal and vertical lines in the form is generated; meanwhile, the number of cells in the frameset,where each cell was created by the maximal grid, was calculated. Additionally, an algorithm for similarity matching of document framesets based on their grid representations is proposed too. Peng, Long, Chi, and Siu (2001) used the size of each component block containing a paragraph text image in a duplicate document image and its relative location as the features of the duplicate document image.

The approaches mentioned previously are only suitable for stating the characteristics of an English document image. The characteristics of Chinese characters are quite different from those of English ones, and the strokes and shapes of Chinese characters are much more complicated than those of English characters. Chan, Chen, and Ho (2003) provided a line segment feature to represent a character image block and presented a duplicate Chinese document image retrieval (DCDIR) system based on this feature. The purpose of this short article is to give a brief overview of the duplicate Chinese DDIR systems.

BACKGROUND

Traditional information retrieval methods use keywords for textual databases. However, it is difficult to describe an image using exact information, and defining manually keywords is tedious or even impossible for a large image database. Moreover, some non-text components cannot be represented in a converted form without sufficient accuracy. One solution is to convert a document into digital images; meanwhile, some methods are applied to extract the features of the images. Based on the feature, some document images with database satisfying query requirements are returned.

A duplicate document image retrieval (DDIR) system has to own the following properties (Doermann, Li, & Kia, 1997):

Robust: The features should be reliably extracted even when the document becomes degraded.

Unique: The extracted features can distinguish each document image from others.

Compact: The storage capacity required to hold the features should be as small as possible.

Fast: The system needs a quick response with an answer to the query.

Scalable: As more documents are processed, the size of the database could grow to tens of millions. Accurate: The system should accurately response with an answer, which satisfies the query requirement.

Unfortunately, many DDIR systems are vulnerable to poor qualities of document images, such as the scale, translation, rotation, and noise variants. Because of different resolution setup of a scanner, the same image may be scanned to become two images with different sizes. We call this phenomenon the scale variant. When an image is added with a great amount of noises, it may be regarded as a different image from the original one. It is named a noise variant image of the original one. In a particular document, images with rotation and translation variants may be generated owing to placing the document on different orientation angles or on different positions on a scanner. The variants mentioned previously will cause many troubles in feature extracting and image matching stages. They should be removed in advance.

A CHINESE DDIR SYSTEM

Many techniques about the DDIR system have been proposed (Caprari, 2000; Doermann, Li, & Kia, 1997; Peng, Chi, Siu, & Long, 2000; Peng, Long, Chi, & Siu, 2001). Since an English document mostly consists of approximately 70 commonly-used characters which contain 52 uppercase as well as lowercase English letters and punctuation marks, the classification and encoding procedure based on the feature of these characters’ font types are possible. However, these techniques are only suitable for duplicate English document images, but not for duplicate Chinese document image retrieval (DCDIR) because the number of different Chinese characters is about 45,000. What is more, the shapes of Chinese characters are complex, and many different characters have similar shapes to each other. Hence, there are several major problems with Chinese character recognition, that is, Chinese characters are distinct and ideographic, the size of a character is large, and there exist many structurally similar characters (Amin & Singh, 1996; Chan, Chen, & Ho, 2003).

It is necessary to develop a feature offering an excellent identification capability to classify Chinese characters by only using a little extra memory space. To reduce the extra memory space, it is feasible to segment a duplicate document image into blocks, each of which contains a set of adjacent characters, and then to extract the features from the blocks. Since the number of the blocks in a duplicate document image is much smaller than that of the characters in an identical duplicate document image, the feature dimensions are reduced greatly; however, its identification capability is lessened.

I. DCDIR System

The proposed duplicate document image retrieval system approximately includes three parts — image preprocessing, database creation, and document retrieval. This section will introduce these three parts in details.

A. Image Preprocessing

When scanning a document to generate a duplicate document binary image, the position of the document on the scanner may be misplaced so that the duplicate document image may become inclined. Figure 1(a) shows an original image and Figure 1(b) is its duplicate document image that appears inclined. The inclined condition of a document image may lead to inconvenience to users and cause the errors in extracting its image features. Peng et al. (2000) used a correlation-based method to detect the inclination of an image, and then applied an interpolation technique to turn the image back according to the detected inclination. The DCDIR system will use this technique to turn the inclined document image back. Figure 1(c) is the duplicate document image after adjusting the inclination.

As in Figure 1(c), after turning back the duplicate document image, the frame of the duplicate document image will become inclined. It is necessary to cut off the border blank of the document image. While removing the border blank, the system starts scanning the duplicate document image from the left-top pixel. Then, in the order from left to right and top to bottom, each pixel is scanned until one certain black pixel P is found. Finally, all pixels locating on the lines, which are prior to the line containing P are removed to cut off the top border blank of the document image. By using the same method, the bottom, left, and right border blanks of the document image are removed as well. Figure 1(d) demonstrates the final duplicate document image after cutting off the border blanks of the document image as illustrated in Figure 1(c).

B. Database Creation

After that, the DCDIR system extracts the character image features from the duplicate document image I in which its border blanks have been cut off and the system stores the features in the database. Before extracting the character image features of I, the system first performs the text line segmentation on I to make every line image

Figure 1. Normalization of an inclined document image

(a) Original document

(b) Duplicate document image after scanning

When scanning a document to generate a duplicate document binary image, the position of the document on the scanner may be misplaced so that the duplicate document image may become inclined. Figure 1(a) shows an original image and Figure 1(b) is its duplicate document image that appears inclined. The inclined condition of a document

When scanning a document to generate a duplicate document binary image, the position of the document on the scanner may be misplaced so that ‘he duplicate document image may become inclined. Figure 1(a) shows an original image and Figure Kb) is its duplicate document image that appears inclined. The inclined condition of a document

(c) Duplicate document image after inclination adjusting

(d) Duplicate document image after cutting off the border blanks

When scanning a document to generate a duplicate document binary image, the position of the document on the scanner may be misplaced so that the duplicate document image may become inclined. Figure 1(a) shows an original image and Figure 1(b) is its duplicate document image that appears inclined. The inclined condition of a document t

When scanning a document to generate a duplicate document binary image, the position of the document on the scanner may be misplaced so that the duplicate document image may become inclined. Figure 1(a) shows an original image and Figure 1(b) is its duplicate document image that appears inclined. The inclined condition of a document block contain only the complete image of one certain line text in the original document. Then, the system segments out all character image blocks from each previously segmented line image block so that every character image block contains only one Chinese character. Finally, the feature of each character image block is then extracted.

Concerning the steps of segmenting line image blocks from a duplicate document image, first, all of the black pixels in the duplicate document image are projected in horizontal direction onto a projection vertical axis. The length of a black section on the projection vertical axis is just the height of the corresponding text line containing those character images whose black pixels are projected onto the black section.

Next, all of the black pixels in every line image block is projected in vertical direction onto a certain projection horizontal axis. In this case, the distribution borders of the black pixels and the white pixels on the projection horizontal axis are the locations of the left and the right boundaries of the character image blocks. On the projection horizontal axis, the length of a black section is just the width of the corresponding character image block whose black pixels are projected onto the black section.

The sizes of most Chinese characters are close. When the height of a certain character image block CB is smaller than three-fourths of the average height of all character image blocks in the document image, and the width of CB is also smaller than three-fourths of the average width of all character image blocks, the system will then regard the character in CB as a noise, and then remove it.

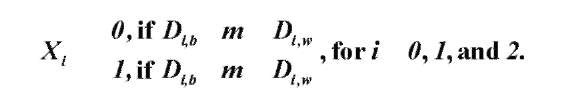

After that, three horizontal scanning lines are drawn on each character image block. These three horizontal scanning lines are respectively located at 1/4*H, 2/4*-H and 3/4><H character heights in the block. Here H represents the height of the character image block. According to the ratio of the total number of the black pixels to that of the white pixels, which the scanning line goes through, an encoding process is executed to reduce the memory space required to store the feature of the character image block. The way of encoding is shown as follows:

In this equation, D.w and D.b are respectively the total numbers of the white pixels and the black pixels that the i-th scanning line passes through, and m is the weight (a given constant value) for the ratio from the total numbers of the black pixels and the white pixels on the scanning line. Thus, each character image block can be represented by a three-bit (X(/X1X2) code; we name the code the feature code of the character image block. There are 8 different binary codes 000, 001, 010, 011,100,101,110, and 111 corresponding to decimal feature codes 0, 1, and 7 respectively.

Because the resolution setup of a scanner may be different, the same original document may be scanned to become duplicate document images of different sizes. This proposed feature adopts the ratio from the number of black pixels and white pixels, so the feature encoding will not be affected due to the scale variant of images. Moreover, the desired duplicate document image and the query document image are both from the same original document. Therefore, the problem that the font type and style of the characters in the query document are different from those in the desired duplicate document image will not occur in this system.

C. Document Retrieval

Let Q = q1q2…ql be the feature code of query document image I and the length of the feature code be/. Next, the system extracts the first feature codes with the length from every duplicate document image Id in the database. Let the extracted feature codes be D = d1d2^dr Then, the system compares the corresponding bit pair q. and d. between Q and D from left to right, respectively. When q. = d, the system adds 1 to the value of S. The final value of S is the similarity between Iq and Id. Finally, the duplicate document image with the largest similarity value is found out.

II. Experiments

Experiment 1 is to explore the constant weight m. As for different values of m, the character image blocks of 5401 in commonly used Chinese characters among Big5 codes, are categorized into eight groups each of which corresponds to one feature code. Table 1 shows the number of members in each group for m=2, 3, and 4, where

2 is the variance of the number of members of the eight groups. The experimental results shows that when m = 3,

2 is minimal. This means, when m = 3, all Chinese character image blocks are most uniformly mapped to various kinds of feature codes. The next experiment will set m = 3.

Experiment 2 is to investigate the performance of the DCDIR system. This experiment scans each page of the topic ” t -Jp 1 l^^” with 336 sheets to become images by a scanner. This experiment rescans 101 sheets of the topic to generate the query document images. Here, the first L text lines of the 101 document sheets are respectively used as the contents of the query document images. Table 2 shows the experimental results. The average searching time is approximately 8 seconds for each query.

Table 1. Results of the first experiment

| 2 | 3 | 4 | |

| Feature Code | |||

| 000 | 372 | 1253 | 2266 |

| 001 | 596 | 773 | 763 |

| 010 | 262 | 387 | 402 |

| 011 | 813 | 525 | 312 |

| 100 | 337 | 564 | 628 |

| 101 | 817 | 591 | 324 |

| 110 | 390 | 374 | 262 |

| 111 | 1798 | 918 | 428 |

| 2 c | 220549 | 74523 | 387785 |

FUTURE TRENDS

After a paper document is used over a long period, the document may be stained or worn out, so that its contents may be indistinct. How to develop an effective image feature insensitive to the rotation, scale, translation, and noise variations is an important task in the future. Many documents are printed or handwritten texts, are multilingual, or are composed of basic composition style and font for text. A future document image indexing method should own the robustness of the above variants.

Moreover, the following topics have been explored, and will continue to be researched. The first is to find or to match the instances of a document image with known content in a database. The techniques can be applied to maintaining database integrity by eliminating duplicates and retrieval itself. The second is to index image captions and to establish a relationship between the content and the images they describe. Then the caption can be a valuable tool of their duplicate document images. Ideally, a duplicate detection algorithm can find both exact duplicates which have just the same content, and partial duplicates, which have a large percentage of their text in common. Locating exact duplicates could reduce the storage required for a large database. Finding partial duplicates will allow users to easily find other versions of a given document.

KEY TERMS

Character Segmentation: The technique that partitions images of lines or words into individual characters.

Content-Based Image Retrieval (CBIR): The technique of image retrieval based on the features automatically extracted from the images themselves.

Duplicate Document Detection: The technique to find the exact duplicates, which have exactly the same content, or partial duplicates which have a large percentage of their text in common.

Duplicate Document Image Retrieval (DDIR): A system for finding the image-formatted duplicate of documents from a database.

MFU: Most frequently used characters in a character set with enormous members.

Optical Character Recognition (OCR): The technique of automatically translating the content of an image formatted document into text-formatted materials.

Skew Correction: The technology detects and compensates for the inclination angle of a document image.

Template Matching: The approach involves designing template masks, which are capable of detecting incidences of the relevant feature at different orientations.