1. Introduction

The Human Genome Project (HGP) has seen many important milestones in its history, but perhaps the most significant was in February 1996 when all the participants (Table 1) agreed that they would do their utmost to ensure that the genome sequence would be freely available to all. They agreed that sequence data would be released without restriction as swiftly as possible and that they would seek no patent protection. By any standards, it was a remarkable agreement. Potentially, large institutions could have patented genes as they went along. Funding agencies agreed with their research leaders that the best way to benefit humankind was to release the sequence swiftly and freely into the public domain. This approach has been vindicated by the massive growth in Internet access to the genome resources (see Section 5), which shows the value the worldwide community places on the sequence.

2. Starting and end points

Three separate proposals were made in the mid-1980s to sequence the human genome (Roberts, 2001). With an estimate of the genome size of 3 billion base pairs (bp) and a cost for sequencing each base of around US$ 10, many researchers fiercely opposed the concept, arguing that financial support would be diverted from other more immediate projects.

The Congress of the United States of America voted in 1988 to support the Human Genome Project, courageously accepting that even if the cost per base sequenced dropped 10-fold, the overall budget would be as much as US$ 3 billion simply for the sequence. The Project was formally announced in October 1990, funded by the US Department of Energy and the National Institutes of Health. Around the world, interest in genome projects grew, resulting in the formation of the Human Genome Organization (HUGO), which acted in the early days to discuss priorities and procedures. As the HGP developed, participants joined from the United Kingdom, France, Germany, Japan, and China (Table 1).

From the outset, the HGP had as its goals not only to sequence the human genome but also to develop new technologies, to set paradigms using model organisms, to develop mechanisms to transfer genome knowledge to the research community, and to consider ethical, legal, and social issues. Most of the scientific goals of the project were significantly exceeded in terms of accuracy or amount of data collected (Table 2).

Table 1 Participants in the Human Genome Project

Sequencing center is no longer in operation.

The assembly of the genome sequence across chromosomes was also assisted by scientists at Neomorphic, Inc.

3. From 230K to 60 000K

In 1990, the longest single DNA sequence was some 230 000 bp, the genome of the cytomegalovirus. Within 10 years, more than 90% of the human genome would be sequenced and the longest contiguous sequence would have grown more than 100-fold, for human chromosomes 21 and 22. Today, the longest contiguous sequence is in excess of 60 000 000 bp.

Table 2

(a) Goals of the Human Genome Project

Mapping and sequencing the human genome

Mapping and sequencing the genomes of model organisms

Data collection and distribution

Ethical and legal considerations

Research training

Technology development

Technology transfer

(b) Scientific achievements of the Human Genome Project

| Area | Goal | Achieved | Date |
| --- | --- | --- | --- |
| Genetic map | 2-5-cM resolution map (600-1500 markers) | 1-cM resolution map (3000 markers) | September 1994 |
| Physical map | 30 000 STSs | 52 000 STSs | October 1998 |
| DNA sequence | 95% of gene-containing part of human sequence finished to 99.99% accuracy | 99% of gene-containing part of human sequence finished to 99.99% accuracy | April 2003 |
| Capacity and cost of finished sequence | Sequence 500 Mb/year at <$0.25 per finished base | Sequence >1400 Mb/year at <$0.09 per finished base | November 2002 |
| Human sequence variation | 100 000 mapped human SNPs | 3.7 million mapped human SNPs | February 2003 |
| Gene identification | Full-length human cDNAs | 15 000 full-length human cDNAs | March 2003 |
| Model organisms | Complete genome sequences of E. coli, S. cerevisiae, C. elegans, D. melanogaster | Finished genome sequences of E. coli, S. cerevisiae, C. elegans, D. melanogaster, plus whole-genome drafts of several others, including C. briggsae, D. pseudoobscura, mouse, and rat | April 2003 |
| Functional analysis | Develop genomic-scale technologies | High-throughput oligonucleotide synthesis; DNA microarrays; eukaryotic, whole-genome knockouts (yeast); scale-up of two-hybrid system for protein-protein interaction | 1994; 1996; 1999; 2002 |

This production of high-quality contiguous sequence is built upon a phased mapping and sequencing program established by the HGP during its development. The initial aim of the HGP was to produce by 2005 at least 95% of the gene-containing regions of the human genome at an error rate less than 0.01%, producing long runs of DNA sequence (Table 2).

Why should long runs be important? At that time, most of the sequences generated by labs around the world were shorter and of lower quality. But the human genome sequence was unlikely to be reproduced in full in the foreseeable future and an archival gold standard was considered essential. Much more important, as biologists got to grips with human genes and variation in human genomes, it became apparent that many genes extended over tens or hundreds of thousands of base pairs, making longer sequence runs much more valuable. Similarly, as it was found that variation in the genome occurs at only about one in 1000 bp, accuracy also became an important criterion.
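The link between accuracy and natural variation can be made concrete with a little arithmetic. The sketch below is illustrative only: it uses the figures quoted in this article (variation at about one in 1000 bp, a finished-sequence error rate of 0.01%, and a draft accuracy of about 99.9%), and the helper name is hypothetical.

```python
from fractions import Fraction

# Figures quoted in the article; the helper name is made up for this sketch.
VARIATION_RATE = Fraction(1, 1000)     # about one variant per 1000 bp
FINISHED_ERROR = Fraction(1, 10_000)   # 0.01% error, i.e. 99.99% accuracy
DRAFT_ERROR = Fraction(1, 1000)        # about 99.9% accuracy (draft)


def errors_per_true_variant(error_rate, variation_rate=VARIATION_RATE):
    """Expected number of sequencing errors per genuine variant site."""
    return error_rate / variation_rate


if __name__ == "__main__":
    print(float(errors_per_true_variant(FINISHED_ERROR)))
    print(float(errors_per_true_variant(DRAFT_ERROR)))
```

Under these assumptions, errors in finished sequence are expected about ten times less often than genuine variant sites, whereas at draft accuracy the two are expected about equally often, which is why the higher standard matters for studying variation.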

As the HGP progressed, the demand for accurate sequence was balanced against the growing hunger of the research community for more sequence. In many projects, a lower level of sequence quality is sufficient to allow researchers to isolate and analyze the gene(s) they are interested in. To satisfy this hunger, in 1998, the HGP adopted a plan first mooted in 1995 (Waterston and Sulston, 1998) to produce a draft or sketch of the genome as an intermediate product that could be generated rapidly. While not the finished product, the draft would allow much research to be accelerated and would ensure that the majority of the genome sequence was deposited in the public domain swiftly. At the same time, the HGP remained committed to go on and bring the draft sequence up to gold standard quality. The decision to rapidly create a draft was also partly made out of concerns about commercial plans to create a proprietary draft of the genome and the possible undesirable consequences of restrictions on its use (Sulston and Ferry, 2002).

4. Sequencing the genome

Today’s high-throughput DNA sequencing is still based on the biochemical reactions and high-resolution methods for DNA analysis developed by Fred Sanger in the late 1970s (Sanger et al., 1977). Sanger’s lab also pioneered approaches to apply this method to genome-sized sequence fragments and in 1982 published the first whole-genome shotgun (WGS) (Sanger et al., 1982) – a method in which a genome sequence is assembled from overlaps between many short sequence “reads” produced by breaking a genome apart at random. However, for very large genomes, it was considered that the very large number of fragments that would be generated by a WGS approach would lead to serious assembly problems (see Article 25, Genome assembly, Volume 3), as would segmental duplications (see Article 26, Segmental duplications and the human genome, Volume 3). The HGP adopted a more complex hierarchical sequencing approach for this reason and because it facilitated finishing to high accuracy.
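The idea of assembling a sequence from overlaps between reads can be sketched with a toy example. The code below is a minimal greedy overlap-merge written for illustration; it is not the assembler used by Sanger's lab or by the HGP, and the function names, the minimum overlap of three bases, and the example reads are all assumptions.

```python
def overlap(a, b, min_len=3):
    """Length of the longest suffix of `a` that equals a prefix of `b`."""
    for n in range(min(len(a), len(b)), min_len - 1, -1):
        if a.endswith(b[:n]):
            return n
    return 0


def greedy_assemble(reads, min_len=3):
    """Repeatedly merge the pair of sequences with the longest overlap."""
    contigs = list(dict.fromkeys(reads))  # drop exact duplicates, keep order
    while len(contigs) > 1:
        best_len, best_i, best_j = 0, None, None
        for i, a in enumerate(contigs):
            for j, b in enumerate(contigs):
                if i != j:
                    n = overlap(a, b, min_len)
                    if n > best_len:
                        best_len, best_i, best_j = n, i, j
        if best_len == 0:
            break  # no overlaps left: remaining contigs stay separate
        merged = contigs[best_i] + contigs[best_j][best_len:]
        contigs = [c for k, c in enumerate(contigs) if k not in (best_i, best_j)]
        contigs.append(merged)
    return contigs


if __name__ == "__main__":
    # Three made-up reads sampled from one short made-up sequence.
    print(greedy_assemble(["CGTTAGC", "ATGGCGTA", "CGTACGTT"]))
```

A greedy merge of this kind works on small, repeat-free examples. When the same stretch of sequence occurs in several places, overlaps become ambiguous and reads can be joined incorrectly, which illustrates the assembly concerns described above.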

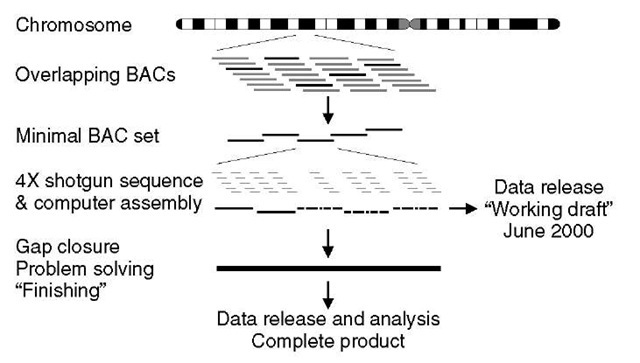

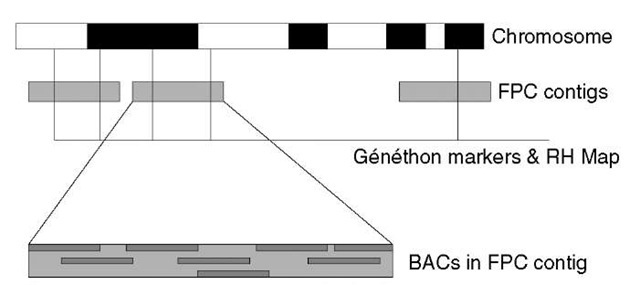

In hierarchical sequencing projects, large clones of DNA (called bacterial artificial chromosomes, or BACs), each ~100-200 kb in size, are first produced by “shotgun” fragmentation of the whole genome. In the early phases of the HGP, a set of landmarks had been established on the 23 pairs of human chromosomes, and many of the BACs could be mapped to the genome using these landmarks. Through the construction of fingerprint contigs of BACs (see Article 3, Hierarchical, ordered mapped large insert clone shotgun sequencing, Volume 3), 300 000 BACs could be positioned on the genome through the small number of mapped BACs (Figure 1). From these, a minimal set of BACs was chosen that would cover the majority of the human genome (some regions of all large genomes are refractory to conventional cloning methods and are not represented in libraries of BACs). Each of the minimal set of clones was then subjected to shotgun sequencing (Figure 2). Each was fragmented in turn and subcloned into DNA plasmids that could be used for sequencing. The fragments were typically 2000 bp in length, and about 500 bases of sequence were “read” from each end. First-pass sequencing of 2000-4000 reads per BAC corresponds to reading each base about four times. Assembling these randomly obtained reads generates about 90-95% of the BAC sequence at an accuracy of about 99.9%. This is the draft – the sketch of our genome (International Human Genome Sequencing Consortium, 2001). The majority of the sequencing for the draft was achieved in a little over 12 months, representing an impressive distributed scale-up and application of laboratory technology (see Article 23, The technology tour de force of the Human Genome Project, Volume 3).
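Choosing a minimal set of overlapping clones is, in its simplest form, an interval-covering problem. The sketch below uses the textbook greedy solution and is only an approximation of the idea: the actual HGP clone selection relied on fingerprint maps and further criteria not modeled here, and the clone names and coordinates (in kb) are hypothetical.

```python
def minimal_tiling_path(clones, region_start, region_end):
    """Greedy choice of few clones covering [region_start, region_end).

    `clones` is a list of (name, start, end) tuples with mapped positions.
    Returns (chosen_names, gaps), where gaps are uncovered (start, end) spans.
    """
    ordered = sorted(clones, key=lambda c: c[1])
    chosen, gaps = [], []
    pos, i = region_start, 0
    while pos < region_end:
        best = None
        # Among clones starting at or before `pos`, keep the one reaching furthest.
        while i < len(ordered) and ordered[i][1] <= pos:
            if best is None or ordered[i][2] > best[2]:
                best = ordered[i]
            i += 1
        if best is not None and best[2] > pos:
            chosen.append(best[0])
            pos = best[2]
        elif i < len(ordered):
            # No clone spans `pos`: record a gap and jump to the next clone.
            next_start = min(ordered[i][1], region_end)
            gaps.append((pos, next_start))
            pos = next_start
        else:
            gaps.append((pos, region_end))
            pos = region_end
    return chosen, gaps


if __name__ == "__main__":
    # Hypothetical mapped clones; coordinates in kb.
    clones = [("A", 0, 150), ("B", 50, 200), ("C", 120, 300),
              ("D", 180, 330), ("E", 400, 550), ("F", 420, 500)]
    print(minimal_tiling_path(clones, 0, 550))
```

In this made-up example, clones B and F are redundant and are left out, and the span with no clone is reported as a gap, loosely mirroring regions that are not represented in BAC libraries.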

Figure 1 Fingerprint contigs (FPCs) are positioned and orientated on human chromosomes via Genethon markers and the radiation hybrid (RH) map. Each FPC is an assembly of BAC clones based on similarities between their restriction digest fingerprints

Figure 2 The strategy of the HGP was based on selecting a minimal set of overlapping BAC clones to sequence, from libraries covering the genome many times. Each selected clone was then shotgun sequenced to a certain base coverage and then “finished” to close the remaining gaps and resolve problems in a semimanual fashion. A shotgun coverage of 4X means that on average each base in the clone will occur in four different reads
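The notion of shotgun coverage can be illustrated with a short calculation. The sketch below computes average coverage as reads times read length divided by clone length, and adds the standard idealized estimate of the fraction of bases hit at least once, assuming reads land uniformly at random. The function names and the example numbers are assumptions chosen to give 4X; they are not the project's actual read counts.

```python
import math


def shotgun_coverage(n_reads, read_len, clone_len):
    """Average number of reads covering each base: c = N * L / G."""
    return n_reads * read_len / clone_len


def expected_fraction_covered(coverage):
    """Idealized estimate of the fraction of bases covered by at least one read."""
    return 1.0 - math.exp(-coverage)


if __name__ == "__main__":
    # Hypothetical numbers: 1200 reads of 500 bases over a 150 kb clone.
    c = shotgun_coverage(1200, 500, 150_000)
    print(c, round(expected_fraction_covered(c), 3))
```

The idealized model ignores cloning bias, failed reads, and the minimum overlap needed to join reads, so it should be read as optimistic. In practice the article reports that about 90-95% of a BAC was assembled at this stage.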

Following the announcement of the draft sequence in June 2000, the HGP continued to improve the sequence quality. To fill gaps or to clear up ambiguities, new DNA clones were made using different methods, and sequence was generated without using clones to fill gaps that appeared to be unclonable. Finishing a genome is a specialist skill and all the participants in the HGP devoted teams to these tasks.

By April 2003, the HGP had surpassed the original goals of the project. The sequence was of much higher quality than the standards set and the amount of the genome represented also exceeded the level of 95% of the gene-containing regions (International Human Genome Sequencing Consortium, 2004). Work continues today to improve the regions that are poorly represented. As the continuity and completeness of each chromosome have been finalized to what appears possible with current technology, each has been analyzed and published. To its credit, the HGP has never claimed to have finished the sequencing of the human genome.

While the human genome sequence has been finished, draft sequences of other vertebrate genomes have been generated using WGS techniques. Recently, the formerly private assemblies from the commercial human sequencing project, which championed using WGS techniques for vertebrates (Venter et al., 2001), have been released. This has allowed the comparison of a WGS assembly with a clone assembly (International Human Genome Sequencing Consortium, 2004) for the same organism (She et al., 2004). The comparison shows that WGS techniques do cause large duplicated regions of genomes to be lost from the assembly, together with their associated genes (see Article 26, Segmental duplications and the human genome, Volume 3). It concluded that clone-based approaches are required to resolve such problems. Therefore, while WGS techniques have allowed rapid coverage of new genomes, users must be cautious about overinterpreting differences between genome sequences that may be assembly artifacts.

5. Finding value in our genome

The growth in sequence data in public databases has been exponential since the mid-1980s, requiring continuous development at the database centers to support it. Despite this, the human genome sequencing effort generated an unprecedented amount of sequence in a very short time. It has been a challenge to organize, analyze, and provide access to it, particularly in the face of a huge and growing demand (Figure 3). The HGP has driven the development of two major new public domain Web-based resources, Ensembl (Hubbard et al., 2002) and UCSC (Kent et al., 2002), to organize and provide access to the human genome alongside the existing NCBI resources. The browsers have provided users with Web access to an organized representation of the genome sequence, from the time of the draft, when the genome consisted of around 100 000 fragments, to the few hundred continuous blocks today. Each allows anyone to download the views, the underlying data, and even the software that makes them work. Each provides links to the experimental data that support the genome structure and integrates information about sequence variation and disease association. As other vertebrate genomes have been sequenced, the browsers have also provided integrated views of the similarities and differences between them through comparative analysis.

Beyond providing access to the genome sequence, the major focus has been locating the genes in the sequence and annotating their structure. The Ensembl project has been at the forefront, providing consistent gene sets by automatically annotating the genome on the basis of the alignment of experimental evidence. As the genome has been finished and the collection of high-quality cDNA sequences has increased, a process of curating gene structures has begun to extend and refine this gene set, most notably by the Havana group as shown in their Vega database (Ashurst et al., 2005) and by the NCBI RefSeq annotators (Pruitt et al., 2003). Recently, Ensembl, NCBI, Havana, and UCSC have begun to collaborate to create the Consensus Coding Sequence (CCDS) resource, which identifies the subset of genes (14 795) for which the annotation of a CDS is agreed across all databases.

Figure 3 Growth in the use of the Ensembl database. The measures shown here are Page Impressions, which is the number of whole pages accessed (and is a more accurate and lower count than “Hits”)

Annotation and analysis are very far from complete. Up to now, the focus has been the protein-coding genes, and only now are efforts being made to identify and annotate noncoding RNA genes (see Article 27, Noncoding RNAs in mammals, Volume 3) and microRNAs (see Article 34, Human microRNAs, Volume 3). Separating functional genes from pseudogenes accurately remains problematic (see Article 29, Pseudogenes, Volume 3), as does identifying functional alternative transcripts (see Article 30, Alternative splicing: conservation and function, Volume 3). Ultimately, gene annotation must also extend to promoters (see Article 33, Transcriptional promoters, Volume 3).

The existence of the sequence and a core annotation has driven researchers worldwide to collect experimental data and perform computational analysis on a genomic scale. One of the most powerful aspects of the genome browsers has been the way that they encourage the integration of external data. Technologies such as the distributed annotation system (DAS) (Dowell et al., 2001), which facilitate this, are based on the recognition that too much data can be related to the genome for it all to be stored in a single database. This virtual integration of annotation will drive progress in our understanding of the genome by allowing researchers to easily share and compare different data.

6. Epilogue

The HGP established a clear set of goals (Table 2), within which targets were set and regularly updated in a series of five-year plans. Given the knowledge and capacity for sequencing and analysis at the outset of the Project, these were enormously challenging. Each was met and exceeded. With the goals of the HGP achieved, the centers involved in the project continue to improve the sequence of the human genome, to sequence genomes of other organisms and, above all, to grapple with the real quest of turning this information into biomedical benefit.

Beyond the genome itself, the boldness of the HGP – to rapidly generate such a critical and huge dataset, through a coordinated public project with immediate public release – has not only transformed biology, but has had a major impact on what is viewed as possible through public research. The HGP has been a triumph for an open data access model of scientific research and has led to policy changes to support this better in future (Dennis, 2003; Editor, 2003). Coming at a time of success for other open models, in software development (open source) and in open access publishing, it raises serious questions as to whether this can go as far as open source drug development (Economist, 2004).

Knowledge of our genome sequence means that biologists can do experiments that were not possible in 1999, and can study in detail diseases that had too complex a genetic basis before the catalog of genes was available. Only rarely do biological experiments transform our world. With its careful attention to quality, to free release, and to ethical issues, the HGP has produced a resource that can truly benefit all of humankind.