The importance of the wavelet transform for biomedical image processing was realized almost immediately after the key concepts became widely known. Two reviews18,20 cover most of the fundamental concepts and applications. In addition, the application of wavelets in medical imaging has led to the development of powerful wavelet toolboxes that can be downloaded freely from the Web. A list of some wavelet software applications is provided in Section 14.6.

The most dominant application is arguably wavelet-based denoising. Noise is an inescapable component of medical images, since noise is introduced in several steps of signal acquisition and image formation. A good example is magnetic resonance imaging, where very small signals (the magnetic echoes of spinning protons) need to be amplified by large factors. Many fast MRI acquisition sequences gain acquisition speed at the expense of the signal-to-noise ratio (SNR). For this reason, the availability of detail-preserving noise-reduction schemes is crucial to optimizing MR image quality. A comprehensive survey of different wavelet-based denoising algorithms in MR images was conducted by Wink and Roerdink33 together with a comparison to Gaussian blurring. Gaussian blurring is capable of improving the SNR by 10 to 15 dB in images with very low SNR. However, when the SNR of the original image exceeds 15 to 20 dB (i.e., has a relatively low noise component), Gaussian blurring does not provide further improvement of SNR. Most wavelet denoising schemes, on the other hand, show consistent improvement of the SNR by 15 to 20 dB even in images with a low initial noise component. Noise is particularly prevalent in MR images with small voxel sizes: for example, in high-resolution MR microscopy images. For those cases, a wavelet denoising scheme was introduced by Ghugre et al.15 The three-dimensional algorithm is based on hard thresholding and averaging of multiple shifted transforms following the translation-invariant denoising method by Coifman and Donoho.7 These shifts are particularly important, since Ghugre et al. subdivide the three-dimensional MRI volume into smaller 64 × 64 × 64 voxel blocks and denoise each block individually. Without the shift-average operation, the subdivision of the volume would cause blocking effects. Three-dimensional denoising outperformed corresponding two-dimensional methods both in mean-squared-error metrics and in subjective perception.
Finally, in the special case of magnetic resonance images, the denoising process can be applied to both the real and imaginary parts of the spatial image.25,36 The k-space matrix was reconstructed through an inverse Fourier transform, resulting in a real and an imaginary spatial image. Soft-threshold wavelet shrinking with the threshold determined by generalized cross-validation [Equation (4.25)] was applied on both real and imaginary parts before computation of the spatial magnitude image, which was the final reconstruction step.
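The shift-and-average idea behind translation-invariant denoising can be illustrated with a deliberately small sketch. It is not the three-dimensional algorithm of Ghugre et al.; it assumes a one-dimensional signal of even length, a single-level orthonormal Haar transform, circular shifts, and hypothetical function names chosen for this illustration only.

```python
import math

def haar_forward(x):
    """One level of the orthonormal Haar transform (len(x) must be even)."""
    s = math.sqrt(2.0)
    approx = [(x[i] + x[i + 1]) / s for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) / s for i in range(0, len(x), 2)]
    return approx, detail

def haar_inverse(approx, detail):
    """Inverse of haar_forward."""
    s = math.sqrt(2.0)
    x = []
    for a, d in zip(approx, detail):
        x.extend([(a + d) / s, (a - d) / s])
    return x

def hard_threshold(coeffs, thr):
    """Keep coefficients whose magnitude exceeds thr, zero all others."""
    return [c if abs(c) > thr else 0.0 for c in coeffs]

def denoise_once(x, thr):
    """Threshold only the detail band, then reconstruct."""
    approx, detail = haar_forward(x)
    return haar_inverse(approx, hard_threshold(detail, thr))

def cycle_spin_denoise(x, thr, shifts=(0, 1)):
    """Average the denoised results of several circularly shifted copies."""
    n = len(x)
    acc = [0.0] * n
    for k in shifts:
        shifted = x[k:] + x[:k]                # circular shift left by k
        den = denoise_once(shifted, thr)
        restored = den[n - k:] + den[:n - k]   # undo the shift
        acc = [a + r for a, r in zip(acc, restored)]
    return [a / len(shifts) for a in acc]
```

Because each shifted copy pairs the samples differently, averaging the results removes the dependence on where the pair (or block) boundaries happen to fall, which is the effect that suppresses blocking artifacts in the block-wise scheme described above.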

Plain x-ray imaging is much less susceptible to noise than is MRI. Yet some applications, predominantly mammography, require particularly low noise with excellent preservation of detail. The ability of wavelet-based methods to achieve these goals has been examined. Scharcanski and Jung30 presented an x-ray denoising technique based on wavelet shrinkage (hard thresholding) as the second step in a two-step process. The first step is local contrast enhancement. The standard deviation of the x-ray noise component increases with image intensity, and a contrast enhancement function was designed that acts similarly to local histogram equalization but depends on the local noise component. Wavelet shrinkage was optimized under the assumption of a Gaussian distribution of the noise component, but a Laplacian distribution of noise-free wavelet coefficients. The end effect of local contrast enhancement and subsequent wavelet denoising is a mammography image where local features, such as microcalcifications, are strongly contrast enhanced and visually more dominant than in either the original image, the image subjected to local histogram equalization, or the image subjected to noise-dependent contrast enhancement only. A similar idea of a two-step approach involving local contrast enhancement and adaptive denoising was followed by Sakellaropoulos et al.29 In this study, a nondecimating wavelet transform (i.e., a wavelet transform that does not downsample the lowpass-filtered component) was used, and the wavelet coefficients were denoised by soft thresholding followed by applying nonlinear gain at each scale for adaptive contrast enhancement.

Ultrasound imaging is another biomedical imaging modality where noise is dominant and leads to poor image quality. Usually, skilled medical personnel are needed to interpret ultrasound images. Suitable image processing methods have been developed to improve image quality and help interpret the images. To remove noise and noise-related ultrasound speckles, wavelet-based methods have been developed. In contrast to noise in MR and x-ray images, ultrasound speckle noise is frequently interpreted as multiplicative noise [i.e., the A-mode signal f(z) consists of the echo intensity e(z) as a function of depth z and noise n such that f(z) = e(z)n]. Consequently, taking the logarithm of f(z) converts multiplicative noise into additive noise. A straightforward approach, such as proposed by Gupta et al.,16 would be to convert the ultrasound image to its log-transformed form, perform a wavelet decomposition, apply soft-thresholding wavelet shrinkage for denoising, reconstruct the image through an inverse wavelet transform, and restore the denoised intensity values through a pixelwise exponential function. This idea can be taken even further when the wavelet-based denoising filter is built into the ultrasound machine at an early stage of the image formation process. Such a filter would act on the RF signal prior to demodulation. A wavelet filter that acts either on the RF signal before demodulation or on the actual image data was proposed by Michailovich and Tannenbaum.22 However, this study indicated that the model of uncorrelated, Gaussian, multiplicative noise was overly simplified. In fact, Michailovich and Tannenbaum demonstrate a high autocorrelation of the noise component, depending on its position in the B-mode scan. By using a nonlinear filter, they propose to decorrelate the noise, after which soft thresholding can be applied on the logarithmic data.
A completely different approach was taken by Yue et al.,35 who combined wavelet decomposition with a nonlinear anisotropic diffusion filter (see Section 5.1). In this special case, wavelet decomposition of the image took place over three levels, and all wavelet coefficients except the lowest-detail region were subjected individually to anisotropic diffusion. Since the wavelet transform is a powerful method of separating signal and noise, the anisotropic diffusion filter can preserve edges even better than the same filter applied on the original image.
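The log-transform chain described above for multiplicative speckle (logarithm, wavelet decomposition, soft thresholding, inverse transform, exponential) can be sketched in a few lines. This is a minimal illustration under simplifying assumptions and not the published method of Gupta et al.: it works on a one-dimensional, strictly positive signal of even length, uses a single-level Haar transform, and takes the threshold as a user-supplied parameter. All function names are hypothetical.

```python
import math

def haar_forward(x):
    """One level of the orthonormal Haar transform (len(x) must be even)."""
    s = math.sqrt(2.0)
    approx = [(x[i] + x[i + 1]) / s for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) / s for i in range(0, len(x), 2)]
    return approx, detail

def haar_inverse(approx, detail):
    """Inverse of haar_forward."""
    s = math.sqrt(2.0)
    x = []
    for a, d in zip(approx, detail):
        x.extend([(a + d) / s, (a - d) / s])
    return x

def soft_threshold(coeffs, thr):
    """Shrink every coefficient toward zero by thr (wavelet shrinkage)."""
    return [math.copysign(max(abs(c) - thr, 0.0), c) for c in coeffs]

def despeckle(signal, thr):
    """Log -> Haar -> soft threshold on details -> inverse Haar -> exp."""
    log_signal = [math.log(v) for v in signal]   # requires v > 0
    approx, detail = haar_forward(log_signal)
    denoised = haar_inverse(approx, soft_threshold(detail, thr))
    return [math.exp(v) for v in denoised]
```

With a very large threshold, each pair of samples is replaced by its geometric mean rather than its arithmetic mean, which reflects the fact that the averaging takes place in the logarithmic domain where the noise is additive.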

Single-photon emission computed tomography (SPECT) and positron emission tomography (PET) are modalities that rely on radioactive decay of radiopharmaceuticals. The decay is a random event, and with the required low doses of radioactive material, the signal is composed of a few integrated events. In other words, the image is made of noise. One model for this type of noise is the Poisson model, where the variance equals the mean signal intensity, so that the standard deviation grows with the square root of the signal. For visualization purposes, the SPECT or PET signal is frequently severely blurred, false-colored, and superimposed over a higher-resolution MR or CT image. Wavelet-based denoising is a promising approach to improving SPECT and PET image quality. For example, Bronnikov3 suggested the use of a wavelet shrinkage filter in low-count cone-beam computed tomography, which is related to SPECT in its high Poisson noise content. For PET images, a three-dimensional extension of the wavelet denoising technique based on a threshold found through generalized cross-validation was proposed by Charnigo et al.,6 who describe a multidimensional wavelet decomposition strategy that is applied to small nonoverlapping cubes (subspaces) of the original three-dimensional PET image. To find a suitable threshold for wavelet coefficient shrinking, Stein’s unbiased risk estimator (SURE)11,31 was proposed, but with an adjustable parameter to either oversmooth or undersmooth [similar to the parameter Y in Equation (4.20)]. Charnigo et al. point out that undersmoothing (i.e., selecting a weaker than optimal filter) in medical images is preferable to oversmoothing. The efficacy of different denoising protocols in PET time-course images of the heart was analyzed by Lin et al.,21 who propose a wavelet decomposition scheme that does not include subsampling. The resulting redundancy led to shift invariance of the wavelet filter.
Some of the denoising protocols proposed allowed more accurate determination of myocardial perfusion and better differentiation between normal and underperfused regions. Similar conclusions were reached in a study by Su et al.32 on simulated PET images and small-animal PET images. Compared to conventional smoothing filters, wavelet filtering improved nonlinear least-squares fitting of time-course models to the data, particularly in images with high noise levels.
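As a brief worked illustration of the Poisson noise model for photon-counting modalities (the symbols $N$ and $\lambda$ are introduced here for illustration only and do not appear in the text above): if the number of detected events $N$ in a pixel is Poisson distributed with mean $\lambda$, then

$$
\mathrm{Var}(N) = \lambda, \qquad \sigma_N = \sqrt{\lambda}, \qquad \mathrm{SNR} = \frac{\lambda}{\sigma_N} = \sqrt{\lambda}.
$$

A pixel that collects 100 events on average therefore has an SNR of 10, whereas a pixel that collects only 4 events has an SNR of 2. This is why low-count SPECT and PET images are dominated by noise and why the noise level varies with the local signal.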

Neither light microscopic nor electron microscopic images are typically known as being extremely noisy. However, there are conditions under which the signal-to-noise ratio is very low, particularly when imaging weak fluorescent signals or reducing exposure to reduce photobleaching. For this type of image, Moss et al.24 developed a wavelet-based size filter that emphasizes structures of a specified size and makes use of the property of the wavelet transform to provide the strength of correlation of the data with the wavelet. This particular filter makes use of the continuous wavelet transform [Equation (4.4)] rather than the fast dyadic wavelet transform. Furthermore, Moss et al. propose designing a wavelet transform in three dimensions that uses separability to improve computational efficiency and symmetry to provide rotation invariance. In a different study, Boutet de Monvel et al.2 present wavelet-based improvements to the deconvolution of confocal microscope images. They noted that the deconvolution process affects image detail differently depending on its size: namely, that small image features are more sensitive than large features to noise or mismatches of the optical point-spread function. Furthermore, the iterative nature of the deconvolution process exacerbates this effect. It was found that a wavelet-based denoising step introduced between the deconvolution iterations strongly improves the image quality of the final reconstructed images.
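The size-selective property of the continuous wavelet transform can be illustrated with a one-dimensional sketch. It is not the filter of Moss et al.: the Mexican-hat wavelet, the 1/sqrt(scale) normalization, and all function names are assumptions made for this illustration only.

```python
import math

def mexican_hat(t):
    """Unnormalized Mexican-hat wavelet (negative second derivative of a Gaussian)."""
    return (1.0 - t * t) * math.exp(-0.5 * t * t)

def cwt_at(signal, position, scale):
    """Correlation of the signal with the wavelet dilated by `scale`
    and centered at sample index `position`."""
    total = 0.0
    for i, value in enumerate(signal):
        total += value * mexican_hat((i - position) / scale)
    return total / math.sqrt(scale)

def best_scale(signal, position, scales):
    """Return the scale whose wavelet correlates most strongly with the signal."""
    return max(scales, key=lambda s: cwt_at(signal, position, s))

# Example: a Gaussian-shaped feature with a width parameter of 4 samples.
bump = [math.exp(-0.5 * ((i - 200) / 4.0) ** 2) for i in range(401)]
print(best_scale(bump, 200, [2, 4, 9, 16, 32]))
```

With this normalization, the response to a Gaussian feature peaks when the scale is about sqrt(5) times the feature's width parameter (roughly 8.9 samples here), so the example selects the candidate scale 9. Retaining only the response at one chosen scale is what turns the transform into a size filter.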

Optical coherence tomography (OCT) is a more recent imaging modality where light-scattering properties of tissue provide contrast. Broadband laser light (i.e., laser light with a short coherence length) is directed onto the tissue through an interferometer. Laser light that is scattered back from the tissue into the optical system provides a signal only from within the short coherent section. The coherent section and thus the axial resolution is several micrometers deep. Scanning the coherent section in the axial direction provides the optical equivalent of an ultrasound A-mode scan. Most OCT devices generate a two-dimensional cross section analogous to the B-mode scan of an ultrasound device. Similar to ultrasound, OCT images are strongly affected by noise, particularly additive Gaussian noise and multiplicative noise. Gargesha et al.14 show that conventional filtering techniques (median filtering, Wiener filtering) and wavelet-based filtering with orthogonal wavelets do not sufficiently reduce noise while retaining structural information. The filter scheme proposed by Gargesha et al. is based on an idea by Kovesi19 to perform denoising in the Fourier domain, shrinking only the magnitude coefficients and leaving the phase information unchanged. The improvement by Gargesha et al. consists of adaptively optimizing the filter parameters and using nonlinear diffusion to reduce noise at different scales.
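The principle of shrinking only the magnitude of frequency-domain coefficients while leaving their phase untouched can be shown with a toy example. This sketch is neither Kovesi's filter nor the adaptive scheme of Gargesha et al.; it assumes a short one-dimensional real signal, a naive discrete Fourier transform, a single global threshold, and a DC term that is left unchanged so that the mean intensity is preserved. All names are hypothetical.

```python
import cmath
import math

def dft(x):
    """Naive O(n^2) discrete Fourier transform (adequate for short signals)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(spectrum):
    """Inverse of dft."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]

def shrink_magnitudes(spectrum, thr):
    """Soft-shrink each magnitude by thr; keep every phase angle as it is."""
    out = []
    for k, c in enumerate(spectrum):
        if k == 0:
            out.append(c)   # assumption of this sketch: DC term is not shrunk
            continue
        magnitude = max(abs(c) - thr, 0.0)
        out.append(cmath.rect(magnitude, cmath.phase(c)))
    return out

def fourier_shrink(x, thr):
    """Denoise a real signal by phase-preserving magnitude shrinkage."""
    return [v.real for v in idft(shrink_magnitudes(dft(x), thr))]
```

Because the positions of edges and other structures are encoded largely in the phase, leaving the phase unchanged is intended to keep features in place while the shrinkage reduces the energy of weak, noise-dominated coefficients.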

Whereas wavelets are clearly most popular in image denoising, other areas benefit from the wavelet transform as well. To give two particularly interesting examples, the wavelet transform can be used in the image formation process of computed tomography images, and wavelet-based interpolation provides a higher interpolated image quality than do conventional schemes. In computed tomography, reconstruction is based on the Fourier slice theorem. Peyrin et al.26 showed that tomographic reconstruction from projections is possible with the wavelet transform. Based on the approach of Peyrin et al.,26 Bonnet et al.1 suggest a wavelet-based modification of the Feldkamp algorithm for the reconstruction of three-dimensional cone-beam tomography data. Another interesting application of the wavelet transform is the reconstruction of tomographic images from a low number of projections that cover a limited angle.28 The main challenge with reduced-angle backprojection lies in its inability to reliably reconstruct edges perpendicular to an x-ray beam. Therefore, discontinuities that are not tangential to any of the projections in the limited angle range will be represented poorly in the reconstructed image. The wavelet reconstruction by Rantala et al.28 is based on modeling the statistical properties of the projection data and iterative optimization of a maximum a posteriori estimate.

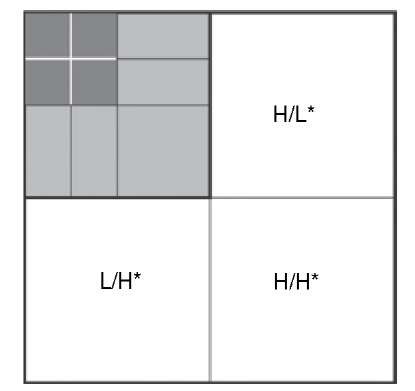

Image interpolation can be envisioned as adding a new highest-detail scale, as shown in Figure 4.19. Decomposition of the original image provides the gray shaded decomposition levels. A new level is added for reconstruction, which has twice the side length of the original image. This level is shown in white in Figure 4.19 and labeled L/H*, H/L*, and H/H*. If the entire image is reconstructed (including the white areas), the image resolution will have doubled. The main task of the image interpolation technique is to find suitable information for the new (white) areas that provide the new interpolated image detail. Carey et al.5 presented an interpolation method that enhances the edge information by measuring the decay of wavelet transform coefficients across scales and preserves the underlying regularity by extrapolating into the new subbands (H/L*, L/H*, and H/H*) to be used in image resynthesis. By using the information that exists on multiple scales, the soft interpolated edges associated with linear and cubic interpolation schemes can be avoided. A different approach is taken by Woo et al.,34 who use the statistical distribution of wavelet coefficients in two subbands of the original image (light gray in Figure 4.19) to estimate a probable distribution in the new extrapolated subband. The new subbands are then filled with random variables that have this distribution.

FIGURE 4.19 Principle of image interpolation. The gray shaded areas are the wavelet decomposition of the original image, and suitable information needs to be found for the white area.
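The principle shown in Figure 4.19 can be reduced to a one-dimensional sketch: the original signal is treated as the lowpass band of a signal twice as long, and an inverse transform is computed with some estimate of the new detail band. The sketch assumes the Haar wavelet and hypothetical function names. It does not implement the coefficient extrapolation of Carey et al. or the statistical model of Woo et al.; it only provides the slot where such an estimate would enter.

```python
import math

def haar_inverse(approx, detail):
    """One level of the inverse orthonormal Haar transform."""
    s = math.sqrt(2.0)
    x = []
    for a, d in zip(approx, detail):
        x.extend([(a + d) / s, (a - d) / s])
    return x

def wavelet_upsample(x, new_detail=None):
    """Double the length of x by adding a new highest-detail scale.

    x          -- original samples, used as the lowpass band
    new_detail -- estimated coefficients for the new detail band
                  (the white area in the figure); zeros if omitted
    """
    s = math.sqrt(2.0)
    approx = [v * s for v in x]          # rescale so intensities are preserved
    if new_detail is None:
        new_detail = [0.0] * len(x)      # no estimate available
    return haar_inverse(approx, new_detail)
```

With an empty detail band, the Haar reconstruction merely replicates each sample, so `wavelet_upsample([1.0, 3.0])` gives `[1.0, 1.0, 3.0, 3.0]` up to rounding. Any gain in sharpness must therefore come from the estimated detail coefficients, which is why the methods discussed above concentrate on finding suitable values for the new subbands.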

Finally, a recent publication by Daszykowski et al.8 gives an interesting example of a complete image processing chain (in this case the analysis of electrophoresis images) that includes wavelet denoising as one processing step. The image processing chain begins with digitally scanned images of electrophoresis gels. A spline-based function fit is used for removal of background inhomogeneities, followed by wavelet shrinking for denoising. After the denoising steps, geometrical distortions are removed and the image is binarized by Otsu’s threshold. The features are finally separated by means of the watershed transform, and the blots are classified by using different statistical models. This publication describes a highly automated start-to-end image processing chain.
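Of the steps in this chain, the binarization by Otsu's threshold is compact enough to sketch here. The following is a generic textbook formulation of Otsu's method for integer gray values, not code from Daszykowski et al.; the function name and the 256-level default are assumptions.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t that maximizes the between-class variance
    when pixels <= t form one class and pixels > t form the other."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0      # number of pixels in the lower class
    sum0 = 0    # sum of gray values in the lower class
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue            # lower class still empty
        w1 = total - w0
        if w1 == 0:
            break               # upper class empty from here on
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t

def binarize(pixels, threshold):
    """Map each pixel to True (above threshold) or False."""
    return [p > threshold for p in pixels]
```

The method assumes a roughly bimodal histogram, so in a chain such as the one described above it benefits from the preceding background removal and denoising steps.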