Discovering Malware

One laborious analysis task that many analysts encounter is locating malware on a system, or within an acquired image. In one instance, I was examining an image of a system on which the user had reported suspicious events. I eventually located the malware responsible for those events, but a tool that mounts the acquired image as a read-only file system would have let me locate that malware much more quickly by scanning the image with an antivirus application. It would also have let me automate scans across several images to locate that malware, or use multiple tools to scan for malware or spyware. This would have been beneficial to me in one particular case where the initial infection of the system occurred two years before the image was acquired. Being able to scan files from an acquired image just as though they were files on your live system, but without modifying them in any way, can be extremely valuable to an examiner.

Tip:

When attempting to determine whether an acquired image contains malware, one excellent resource is the log files from the installed antivirus application, if there is one. For example, the mrt.log file mentioned earlier in this topic may give you some indications of what the system had been protected against. Other antivirus applications maintain their log files elsewhere in the file system, and the location may depend on the version of the application. McAfee VirusScan Enterprise Version 8.0i maintains its log files in the C:\Documents and Settings\All Users\Application Data\Network Associates\VirusScan directory, whereas another version of the application maintains its onaccessscanlog.txt file in the C:\Documents and Settings\All Users\Application Data\McAfee\DesktopProtection directory. Also be sure to check the Application Event Log for entries written by antivirus applications.
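Checking a mounted image for these log locations is easy to script. The Python below is a minimal sketch (Python is used for illustration; it is not one of the chapter's tools). It assumes the image's C: volume is mounted at `mount_root`, and the `CANDIDATE_LOGS` list holds only the McAfee locations named above; other products and versions would need their own entries.

```python
from pathlib import Path, PureWindowsPath

# Locations taken from the text above; extend this list for other AV products/versions.
CANDIDATE_LOGS = [
    r"C:\Documents and Settings\All Users\Application Data\Network Associates\VirusScan",
    r"C:\Documents and Settings\All Users\Application Data\McAfee\DesktopProtection\onaccessscanlog.txt",
]


def find_av_logs(mount_root, candidates=CANDIDATE_LOGS):
    """Return the candidate log files/directories that exist in the mounted image.

    The drive portion ("C:\\") of each candidate is dropped and the rest of the
    path is resolved relative to mount_root (assumed to be the image's C: volume).
    """
    found = []
    for candidate in candidates:
        parts = PureWindowsPath(candidate).parts[1:]  # skip the "C:\\" anchor
        target = Path(mount_root).joinpath(*parts)
        if target.exists():
            found.append(target)
    return found
```

For example, `find_av_logs("H:\\")` would report which of these locations exist on an image mounted as H:.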

Once you’ve mounted the acquired image (read-only, of course), you’re ready to scan it with your antivirus scanning application of choice. A number of commercial and freeware antivirus solutions are available, and it is generally a good idea to use more than one antivirus scanning application. Claus Valca has a number of portable antivirus scanning applications listed in his Grand Stream Dreams blog (http://grandstreamdreams.blogspot.com/2008/11/portable-anti-virusmalware-security.html). When using any antivirus or spyware scanning tools (there are just too many options to list here), be sure to configure the tools not to delete, quarantine, or otherwise modify any files they detect. Many scanning tools offer an option to alert only and take no other action, so be sure to select that option.
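Running several scanners across several mounted images can be automated with a small driver. This Python sketch is illustrative only: the scanner command lines are hypothetical placeholders supplied by the caller (consult each tool's own documentation for its report-only options), and the string `{target}` in each command template is replaced by a mount point. The script itself does nothing to prevent modification; read-only access depends on how the image was mounted.

```python
import subprocess
from dataclasses import dataclass


@dataclass
class ScanResult:
    scanner: str
    target: str
    returncode: int
    output: str


def run_scans(scanners, targets):
    """Run every scanner command against every mounted image.

    scanners: mapping of a label to a command template (list of arguments)
              in which "{target}" is replaced by the mount point.
    targets:  mount points of images that were mounted read-only.
    """
    results = []
    for target in targets:
        for label, template in scanners.items():
            cmd = [arg.replace("{target}", target) for arg in template]
            proc = subprocess.run(cmd, capture_output=True, text=True)
            results.append(ScanResult(label, target, proc.returncode, proc.stdout))
    return results
```

Keeping each scanner's raw output alongside its return code makes it straightforward to compare what different tools reported for the same image.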

Because mounting the acquired image can provide read-only access to the files within an image, you can access those files just like regular files on a system, without changing the contents of the files. As such, scripting languages such as Perl will return file handles when you open the files and directories, making restore point and prefetch directory analysis a straightforward process. Perl scripts used on live systems to perform these functions need only be "pointed to" the appropriate locations.
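As an illustration of "pointing" a script at the mounted image, the sketch below (in Python rather than Perl) lists the Prefetch files of a mounted image in chronological order. It assumes the Windows directory is named `WINDOWS` at the root of the mount point, and the timestamps are whatever the mounting tool exposes through the file system.

```python
from datetime import datetime, timezone
from pathlib import Path


def list_prefetch(mount_root, windows_dir="WINDOWS"):
    """List .pf files under <mount_root>/<windows_dir>/Prefetch, oldest first.

    Returns (last-modified time in UTC, file name) pairs.
    """
    prefetch = Path(mount_root) / windows_dir / "Prefetch"
    rows = []
    for entry in prefetch.iterdir():
        # Compare the extension case-insensitively (".pf" vs ".PF").
        if entry.is_file() and entry.suffix.lower() == ".pf":
            modified = datetime.fromtimestamp(entry.stat().st_mtime, tz=timezone.utc)
            rows.append((modified, entry.name))
    rows.sort()
    return rows
```

The same approach applies to restore point directories: only the path the script starts from changes.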

Tip:

Autostart locations are locations within Windows systems that allow applications to be started with little or no user interaction. In the last topic, you saw that you can use the Autoruns application available from Microsoft (from the Sysinternals site) or its CLI brother, autorunsc.exe, to quickly check the autostart locations on a live system.

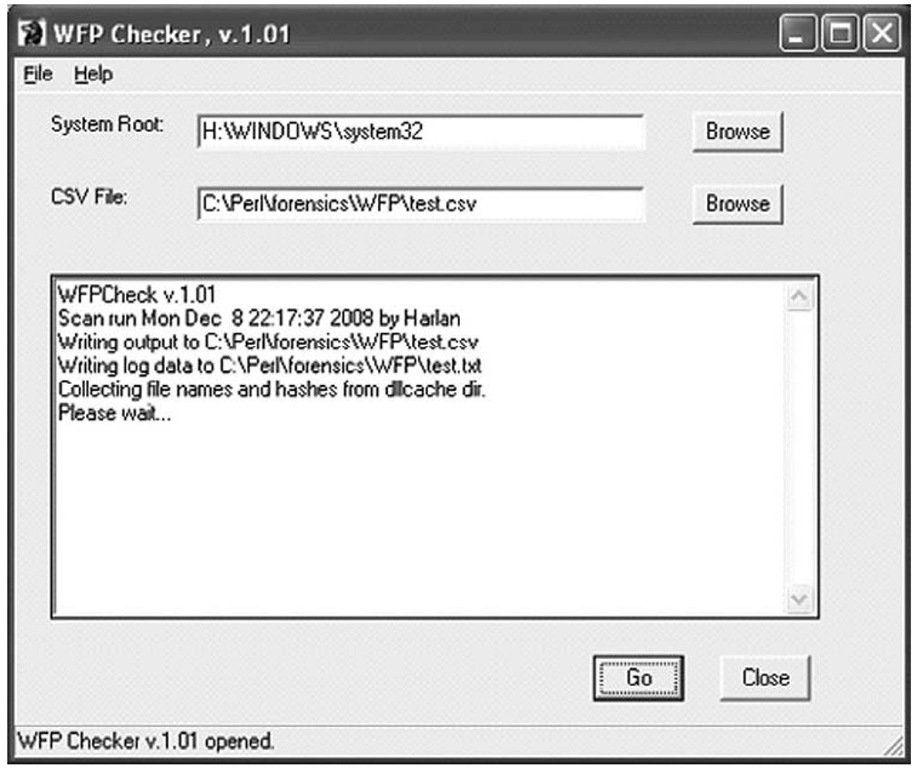

Another example is a tool I wrote called WFPCheck (WFPCheck is not available on the accompanying media). At the SANS Forensic Summit in October 2008, the consultants from Mandiant mentioned malware that could infect files protected by Windows File Protection (WFP), and those files would remain infected. I had read reports of similar malware previously, and had done research into the issue. I found that there is reportedly an undocumented API call called SfcFileException (www.bitsum.com/aboutwfp.asp) that will allegedly suspend WFP for one minute. WFP "listens" for file changes and "wakes up" when a file change event occurs for one of the designated protected files; it does not poll the protected files on a regular basis to determine whether any have been modified in some way. Suspending WFP for one minute is more than enough time to infect a file, and once WFP resumes, there is no way for it to detect that a protected file has been modified.

Tip:

The SfcFileException API is called by the demonstration tool, WfpDeprotect (www.bitsum.com/wfpdeprotect.php).

As a result of this issue, I decided to write WFPCheck (wfpchk.pl). WFPCheck works by first reading the list of files from the system32\dllcache directory and generating MD5 hashes for each file. Then WFPCheck will search for each file, first within the system32 directory and then within the entire partition (skipping the system32 and system32\dllcache directories). As a file with the same name as one of the protected files is located, WFPCheck generates an MD5 hash for that file and compares it to the hash that it already has on hand. WFPCheck outputs its results to a comma-separated value (.csv) file, and writes a text-based log of its activities to the same directory as the .csv file. Figure 5.20 illustrates WFPCheck being run against a mounted image file.
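The procedure just described can be sketched in Python. This is not wfpchk.pl, only an illustration of the same steps under stated assumptions: the image's volume is mounted at `volume_root` with its Windows directory named `WINDOWS`, filenames are matched case-insensitively, and the "Mismatch" string follows one reading of the .csv format described for WFPCheck (sizes, a dash, then hashes). Write the .csv file outside the mounted image, which is read-only.

```python
import csv
import hashlib
import os
from pathlib import Path


def size_and_md5(path):
    """Return (size in bytes, MD5 hex digest) for a file, read in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return os.path.getsize(path), digest.hexdigest()


def wfp_check(volume_root, csv_path, windows_dir="WINDOWS"):
    root = Path(volume_root)
    system32 = root / windows_dir / "system32"
    dllcache = system32 / "dllcache"

    # Step 1: reference size/hash for every file in dllcache, keyed by lowercase name.
    reference = {p.name.lower(): size_and_md5(p) for p in dllcache.iterdir() if p.is_file()}

    def compare(path):
        ref_size, ref_md5 = reference[path.name.lower()]
        size, md5 = size_and_md5(path)
        if md5 == ref_md5:
            return "Match"
        # Assumed layout: "dllcache size:found size-dllcache hash:found hash".
        return f"Mismatch {ref_size}:{size}-{ref_md5}:{md5}"

    rows = []
    # Step 2: files directly in system32.
    for p in sorted(system32.iterdir()):
        if p.is_file() and p.name.lower() in reference:
            rows.append((p.name, str(p), compare(p)))

    # Step 3: the rest of the partition, skipping system32 (already checked) and dllcache.
    for dirpath, dirnames, filenames in os.walk(root):
        here = Path(dirpath)
        dirnames[:] = [d for d in dirnames if here / d != dllcache]
        if here == system32:
            continue
        for name in filenames:
            if name.lower() in reference:
                p = here / name
                rows.append((name, str(p), compare(p)))

    with open(csv_path, "w", newline="") as f:
        csv.writer(f).writerows(rows)
    return rows
```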

Figure 5.20 WFPCheck Being Run against a Mounted Image File

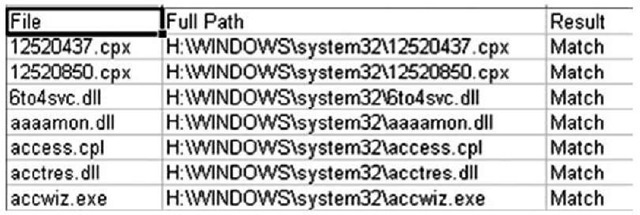

The .csv file from WFPCheck consists of three columns: the filename from the system32\dllcache directory, the full path to where the file was found (outside the dllcache directory), and the result of the comparison. If WFPCheck finds that the generated hash values match, the result will be "Match" (illustrated in Figure 5.21). If the hashes do not match, the result will be "Mismatch", followed by two sets of colon-separated values: the respective file sizes, followed by their respective hashes. These two sets of values are themselves separated by a dash.

Figure 5.21 Excerpt of WFPCheck Output .csv File

Warning:

On systems that are updated but do not have older files cleared away, WFPCheck may generate a number of "Mismatch" results. This is because files are replaced when the system is updated, and if the older versions of files from previous updates are left lying around the file system, WFPCheck will likely find that a protected file matches its dllcache mate, but will identify older files of the same name as mismatches. When using WFPCheck, you must closely examine the results, as there may be false positives.

Yet another means of checking for potential malware in an acquired image mounted as a read-only file system is to use sigcheck.exe from Microsoft (http://technet.microsoft.com/en-us/sysinternals/bb897441.aspx). Sigcheck.exe checks whether a file is digitally signed and also dumps the file’s version information, if available. For example, the following command will check the contents of the Windows\system32 directory of a mounted image file (mounted as the H:\ drive) for all unsigned executable files:

Although not definitive, you can use this technique as one of several techniques employed to provide a comprehensive analysis of files in an acquired image while searching for malware.
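Sigcheck.exe remains the tool for actual signature checks. As a rough complement, the Python sketch below flags PE files in a mounted directory that carry no embedded Authenticode certificate table (the Security/Certificate Table entry, index 4, in the PE optional header's data directories). It is an assumption-laden triage filter only: it does not validate signatures, and many Windows system files are catalog-signed rather than carrying an embedded signature, so those files will be flagged even though they are legitimately signed.

```python
import struct
from pathlib import Path

SECURITY_DIRECTORY = 4  # index of the certificate table in the data directories


def has_embedded_signature(data):
    """True/False for a PE image, None if data does not look like a PE file.

    Only detects the presence of an embedded certificate table; it does not
    validate it, and catalog-signed files will report False.
    """
    try:
        if data[:2] != b"MZ":
            return None
        (pe_offset,) = struct.unpack_from("<I", data, 0x3C)
        if data[pe_offset:pe_offset + 4] != b"PE\0\0":
            return None
        optional = pe_offset + 4 + 20  # skip "PE\0\0" and the 20-byte COFF header
        (magic,) = struct.unpack_from("<H", data, optional)
        if magic == 0x10B:    # PE32
            directories = optional + 96
        elif magic == 0x20B:  # PE32+
            directories = optional + 112
        else:
            return None
        # NumberOfRvaAndSizes sits just before the data directory array.
        (count,) = struct.unpack_from("<I", data, directories - 4)
        if count <= SECURITY_DIRECTORY:
            return False
        address, size = struct.unpack_from("<II", data, directories + 8 * SECURITY_DIRECTORY)
        return address != 0 and size != 0
    except struct.error:
        return None


def unsigned_executables(directory, header_bytes=65536):
    """Yield PE files in directory (non-recursive) with no embedded signature."""
    for path in sorted(Path(directory).iterdir()):
        if path.is_file():
            with open(path, "rb") as f:
                if has_embedded_signature(f.read(header_bytes)) is False:
                    yield path
```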

Timeline Analysis

Timeline analysis is a means of identifying or linking a sequence of events in a manner that is easy for people such as incident responders to visualize and understand. So much of what we do as analysts is associated with time in some way; for example, one of the things I track when I receive a call for assistance is the time when I received that call. During the triage process, I try to determine when the caller first received an indication of an incident, such as when he first noticed something unusual or received notification from an external source. This information very often helps me narrow down what I would be looking for during response as well as during my analysis, and where within the acquired data (images, memory dumps, logs, etc.) I would be looking. All of this has to do with having data that has some sort of time value associated with it, or is "time-stamped" in some manner.

Once a responder becomes engaged in an incident, suddenly the door is open to a significant amount of time-stamped data. Files within an acquired image have MAC times associated with them, indicating when they were last modified, last accessed, and created. Log files on the system (Windows Event Logs, IIS Web server logs, FTP service logs, antivirus application log files, etc.) contain entries that have times associated with specific events. Add to that the various Registry values that contain time values within their data, and there is quite a bit of time-stamped data available to the responder, not only to get an idea of when the incident may have occurred, but also possibly to determine additional artifacts of the incident.

Most often, analysts have developed a timeline by collecting time-stamped data and adding it to a spreadsheet. One of the benefits of this sort of timeline development is that adding new events as they are discovered is relatively simple, and as new data is added, the entries can be sorted to put them all in the proper sequence on the timeline. This process is also useful for data reduction (as the analyst adds only the values of interest), but it can be time-consuming and cumbersome. It also does not scale well: with modern operating systems and the other data sources available during an examination, the analyst can quickly be overwhelmed by the sheer number of events that may or may not have any impact on or relevance to the examination.
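The spreadsheet workflow (add an event, keep everything in order, narrow to a window of interest) can be sketched in a few lines of Python. This is an illustration of the idea, not a tool from the chapter; the event sources and descriptions in the usage example are hypothetical.

```python
import bisect
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(order=True)
class Event:
    when: datetime                       # events are ordered by time only
    source: str = field(compare=False)   # e.g. "Event Log", "Registry", "AV log"
    description: str = field(compare=False)


class Timeline:
    def __init__(self):
        self.events = []

    def add(self, when, source, description):
        """Insert an event, keeping the list in chronological order."""
        bisect.insort(self.events, Event(when, source, description))

    def window(self, start, end):
        """Return only the events between start and end (inclusive)."""
        return [e for e in self.events if start <= e.when <= end]


# Hypothetical usage: events arrive out of order but are stored sorted.
utc = timezone.utc
tl = Timeline()
tl.add(datetime(2008, 10, 3, 12, 0, tzinfo=utc), "AV log", "detection")
tl.add(datetime(2008, 10, 1, 9, 0, tzinfo=utc), "File system", "file created")
```

The `window` method is the data-reduction step: it keeps the analyst focused on the period around the reported incident.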

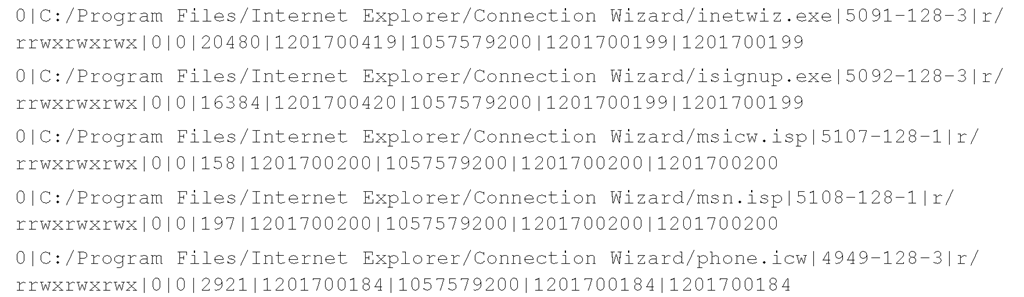

An automated means for collecting time-stamped information from an acquired image is to use the fls tool available with The Sleuth Kit (TSK, which you can find at www.sleuthkit.org/), which was written by Brian Carrier. The fls tool is run against an acquired image file to list the files and directories in the image, as well as recently deleted files, sending its output to what is referred to as a "body file" (http://wiki.sleuthkit.org/index.php?title=Body_file). The body file can then be parsed by the mactime.pl Perl script to convert the body file to a timeline in a more understandable, text-based format. The Timelines page at the Sleuth Kit Web site (http://wiki.sleuthkit.org/index.php?title=Timelines) provides additional information about this process.
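To show what the body-file-to-timeline step involves, here is a simplified Python sketch of a mactime-style conversion. It is not mactime.pl. It assumes the TSK 3.x body file layout `MD5|name|inode|mode_as_string|UID|GID|size|atime|mtime|ctime|crtime` (older TSK releases used a different layout), treats a time of 0 as unset, and groups each file's times into one row per distinct time with a `macb` flag column.

```python
from collections import defaultdict
from datetime import datetime, timezone

# (body file field, flag letter): m=modified, a=accessed, c=changed, b=born/created
TIME_FIELDS = (("mtime", "m"), ("atime", "a"), ("ctime", "c"), ("crtime", "b"))


def parse_body_line(line):
    parts = line.rstrip("\r\n").split("|")
    if len(parts) < 11:
        raise ValueError(f"not a body file line: {line!r}")
    # The name may itself contain '|', so take the fixed fields from both ends.
    inode, mode, uid, gid, size, atime, mtime, ctime, crtime = parts[-9:]
    return {
        "md5": parts[0],
        "name": "|".join(parts[1:-9]),
        "inode": inode,
        "size": int(size),
        "atime": int(atime), "mtime": int(mtime),
        "ctime": int(ctime), "crtime": int(crtime),
    }


def timeline(lines):
    """Return sorted text rows: 'YYYY-MM-DD HH:MM:SS macb name' (UTC)."""
    flags = defaultdict(set)
    for line in lines:
        if not line.strip():
            continue
        record = parse_body_line(line)
        for field_name, letter in TIME_FIELDS:
            if record[field_name] > 0:  # 0 is treated here as "not set"
                flags[(record[field_name], record["name"])].add(letter)
    rows = []
    for (seconds, name), letters in sorted(flags.items()):
        when = datetime.fromtimestamp(seconds, tz=timezone.utc)
        macb = "".join(c if c in letters else "." for c in "macb")
        rows.append(f"{when:%Y-%m-%d %H:%M:%S} {macb} {name}")
    return rows
```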

Displaying time-stamped file data from an acquired image using fls.exe is relatively straightforward. Using the following command, the output from the tool is displayed in the console (i.e., STDOUT) in mactime or body file format:

Note that to display only the deleted entries from the acquired image, add the -d switch. For more details on the use of fls, refer to the man page for the tool, which you can find at www.sleuthkit.org/sleuthkit/man/fls.html. The output of fls is pipe-separated (and easily parsed), and can be redirected to a file for storage and later analysis. An excerpt of the output of fls appears as follows:

This data appears in the body file and can then be parsed by the mactime Perl script to produce timeline information. The times associated with the file are all "normalized" to 32-bit UNIX epoch times, even though the file system (the image is of a system using the NTFS file system) maintains the times themselves as 64-bit FILETIME objects. Alternatively, you can parse the contents of the body file with Michael Cloppert’s Ex-Tip tool (http://sourceforge.net/projects/ex-tip/) and view the output in a slightly different format.
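The normalization itself is simple arithmetic. A FILETIME counts 100-nanosecond intervals since January 1, 1601 (UTC), while UNIX time counts seconds since January 1, 1970 (UTC); the two epochs are 11,644,473,600 seconds apart. The Python sketch below is illustrative and not taken from TSK; it shows the conversion, and integer division discards the sub-second part of the FILETIME.

```python
import struct

TICKS_PER_SECOND = 10_000_000          # FILETIME counts 100-nanosecond intervals
EPOCH_DELTA_SECONDS = 11_644_473_600   # 1601-01-01 to 1970-01-01, in seconds


def filetime_to_unix(filetime):
    """Convert a 64-bit FILETIME to whole seconds since the UNIX epoch."""
    return filetime // TICKS_PER_SECOND - EPOCH_DELTA_SECONDS


def filetime_from_bytes(raw):
    """Read an 8-byte little-endian FILETIME, as stored on disk."""
    (value,) = struct.unpack("<Q", raw)
    return value
```

For example, the FILETIME value 116444736000000000 converts to 0, the start of the UNIX epoch.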

Summary

Most of us know, or have said, that no two investigations are alike. Each investigation we undertake seems to be different from the last, much like snowflakes. However, some basic concepts can be common across investigations, and knowing where to look for corroborating information can be an important key. Too often we might be tugged or driven by external forces and deadlines, and knowing where to look for information or evidence of activity, beyond what is presented by forensic "analysis" GUIs, can be very important. Limited time and resources reduce many investigations to a mere search for keywords or specific files, when a great deal more information could be available if only we knew where to look and what questions to ask. Besides the existence of specific files (illicit images, malware), we can examine a number of undocumented (or poorly documented) file formats to develop a greater understanding of what occurred on the system and when.

Knowing where to look and where evidence should exist based on how the operating system and applications respond to user action are both very important aspects of forensic analysis. Knowing where log files should exist, as well as their format, can provide valuable clues during an investigation—perhaps more so if those artifacts are absent.

A lack of clear documentation of various file formats (as well as the existence of certain files) has been a challenge for forensic investigations. The key to overcoming this challenge is thorough, documented investigation of these file formats and sharing of this information. This includes not only files and file formats from versions of the Windows operating system currently being investigated (Windows 2000, XP, and 2003), but also newer versions such as Vista.

Solutions Fast Track

Log Files

- A good deal of traditional computer forensic analysis revolves around the existence of files or file fragments. Windows systems maintain a number of files that can be incorporated into this traditional view to provide a greater level of detail of analysis.

- Many of the log files maintained by Windows systems include time stamps that can be incorporated into the investigator’s timeline analysis of activity on the system.

File Metadata

- The term metadata refers to data about data. This amounts to additional data about a file that is separate from the actual contents of the file (i.e., where many analysts perform text searches).

- Many applications maintain metadata about a file or document within the file itself.

Alternative Methods of Analysis

- In addition to the traditional means of computer forensic analysis of files, additional methods of analysis are available to the investigator.

- Booting an acquired image into a virtual environment can provide the investigator with a useful means for both analysis of the system as well as presentation of collected data to others (such as a jury).

- Accessing an image as a read-only file system provides the investigator with the means to quickly scan for viruses, Trojans, and other malware.

Frequently Asked Questions

Q: I was performing a search of Internet browsing activity in an image, and I found that the "Default User" had some browsing history. What does this mean?

A: Although we did not discuss Internet browsing history in this topic (this subject has been thoroughly addressed through other means), this is a question I have received, and in fact, I have seen it myself in investigations. Robert Hensing (a Microsoft employee and all-around completely awesome dude) addressed this issue in his blog (http://blogs.technet.com/robert_hensing/default.aspx). In a nutshell, the Default User does not have any Temporary Internet Files or browsing history by default. If a browsing history is discovered for this account, it indicates someone with SYSTEM-level access making use of the WinInet API functions. I have seen this in cases where an attacker was able to gain SYSTEM-level access and run a tool called wget.exe to download tools to the compromised system. Because the wget.exe file uses the WinInet API, the "browsing history" was evident in the Temporary Internet Files directory for the Default User. Robert provides an excellent example to demonstrate this situation by launching Internet Explorer as a Scheduled Task so that when it runs, it does so with SYSTEM credentials. The browsing history will then be populated for the Default User. Analysis can then be performed on the browsing history/index.dat file using the Internet History Viewer in ProDiscover, or Web Historian from Mandiant.com.

Q: I’ve acquired an image of a hard drive, and at first glance, there doesn’t seem to be a great deal of data on the system. My understanding is that the user who "owned" this system has been with the organization for several years and has recently left under suspicious circumstances. The installation date maintained in the Registry is for approximately a month ago. What are some approaches I can take from an analysis perspective?

A: This question very often arises when an investigator analyzing a system comes to believe that the operating system was reinstalled just prior to the image being acquired. This could end up being the case, but before we go down that route, there are places the investigator can look to gather more data about the issue. The date/time stamp for when the operating system was installed is written to the InstallDate value in the following Registry key during installation:

The data associated with the InstallDate value is a DWORD that represents the number of seconds since 00:00:00 UTC, January 1, 1970. Microsoft Knowledge Base article 235162 (http://support.microsoft.com/?id=235162) indicates that this value may be recorded incorrectly. Other places an investigator can look for information to confirm or corroborate this value include the date/time stamps on entries in the setuplog.txt file, the LastWrite times of the service Registry keys, and so on. Also be sure to check the LastWrite times of the user account Registry keys in the SAM file. Other areas the investigator should examine include the Prefetch directory on Windows XP systems and the MAC times on the user profile directories.
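Decoding InstallDate and comparing it against corroborating timestamps can be sketched as follows. This Python example is illustrative: it assumes you already have the raw 4-byte value data (REG_DWORD data is stored little-endian) and a list of other timestamps you have collected, and the labels in that list are hypothetical. Timestamps that predate the recorded install date are simply flagged for a closer look; they are not proof of anything on their own.

```python
import struct
from datetime import datetime, timezone


def install_date(raw):
    """Decode InstallDate data: a 32-bit little-endian count of seconds since 1970-01-01 UTC."""
    (seconds,) = struct.unpack("<I", raw)
    return datetime.fromtimestamp(seconds, tz=timezone.utc)


def predating(install, other_times):
    """Return (label, time) pairs that fall before the recorded install date, oldest first."""
    return sorted(((label, t) for label, t in other_times if t < install),
                  key=lambda item: item[1])
```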

Q: I’ve heard talk about a topic called antiforensics, where someone makes special efforts and uses special tools to hide evidence from a forensic analyst. What can I do about that?

A: Forensic analysts and investigators should never hang a theory or their findings on a single piece of data. Rather, wherever possible, findings should be corroborated by supporting facts. In many cases, attempts to hide data will create their own sets of artifacts; understanding how the operating system behaves and what circumstances and events cause certain artifacts to be created (note that if a value is changed, that’s still an "artifact") allows an investigator to see signs of activity. Also keep in mind that the absence of an artifact where there should be one is itself an artifact.