In this topic, we finally talk about transactions and how you create and control units of work in an application. We’ll show you how transactions work at the lowest level (the database) and how you work with transactions in an application that is based on native Hibernate, on Java Persistence, and with or without Enterprise JavaBeans.

Transactions allow you to set the boundaries of a unit of work: an atomic group of operations. They also help you isolate one unit of work from another unit of work in a multiuser application. We talk about concurrency and how you can control concurrent data access in your application with pessimistic and optimistic strategies.

Finally, we look at nontransactional data access and when you should work with your database in autocommit mode.

Transaction essentials

Let’s start with some background information. Application functionality requires that several things be done at the same time. For example, when an auction finishes, three different tasks have to be performed by the CaveatEmptor application:

1 Mark the winning (highest amount) bid.

2 Charge the seller the cost of the auction.

3 Notify the seller and successful bidder.

What happens if you can’t bill the auction costs because of a failure in the external credit-card system? The business requirements may state that either all listed actions must succeed or none must succeed. If so, you call these steps collectively a transaction or unit of work. If even one step fails, the whole unit of work must fail. This is known as atomicity, the notion that all operations are executed as an atomic unit.

Furthermore, transactions allow multiple users to work concurrently with the same data without compromising the integrity and correctness of the data; the effects of a particular transaction should not be visible to other concurrently running transactions. Several strategies are important to fully understand this isolation behavior, and we’ll explore them in this topic.

Transactions have other important attributes, such as consistency and durability. Consistency means that a transaction works on a consistent set of data: a set of data that is hidden from other concurrently running transactions and that is left in a clean and consistent state after the transaction completes. Your database integrity rules guarantee consistency. You also want correctness of a transaction. For example, the business rules dictate that the seller is charged once, not twice. This is a reasonable assumption, but you may not be able to express it with database constraints. Hence, the correctness of a transaction is the responsibility of the application, whereas consistency is the responsibility of the database. Durability means that once a transaction completes, all changes made during that transaction become persistent and aren’t lost even if the system subsequently fails.

Together, these transaction attributes are known as the ACID criteria.

Database transactions have to be short. A single transaction usually involves only a single batch of database operations. In practice, you also need a concept that allows you to have long-running conversations, where an atomic group of database operations occurs in not one but several batches. Conversations allow the user of your application to have think-time, while still guaranteeing atomic, isolated, and consistent behavior.

Now that we’ve defined our terms, we can talk about transaction demarcation and how you can define the boundaries of a unit of work.

Database and system transactions

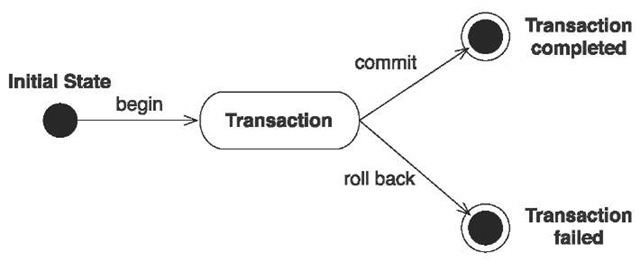

Databases implement the notion of a unit of work as a database transaction. A database transaction groups data-access operations—that is, SQL operations. All SQL statements execute inside a transaction; there is no way to send an SQL statement to a database outside of a database transaction. A transaction is guaranteed to end in one of two ways: It’s either completely committed or completely rolled back. Hence, we say database transactions are atomic. In figure 10.1, you can see this graphically.

To execute all your database operations inside a transaction, you have to mark the boundaries of that unit of work. You must start the transaction and at some point, commit the changes. If an error occurs (either while executing operations or when committing the transaction), you have to roll back the transaction to leave the data in a consistent state. This is known as transaction demarcation and, depending on the technique you use, involves more or less manual intervention.

Figure 10.1 Lifecycle of an atomic unit of work: a transaction

In general, transaction boundaries that begin and end a transaction can be set either programmatically in application code or declaratively.

Programmatic transaction demarcation

In a nonmanaged environment, the JDBC API is used to mark transaction boundaries. You begin a transaction by calling setAutoCommit(false) on a JDBC Connection and end it by calling commit(). You may, at any time, force an immediate rollback by calling rollback().
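As a minimal sketch of this JDBC demarcation, the helper below wraps a unit of work in setAutoCommit(false), commit(), and rollback(). The JdbcDemarcation class and its Work callback are hypothetical names introduced for illustration; only the java.sql.Connection calls come from the JDBC API.

```java
import java.sql.Connection;
import java.sql.SQLException;

/** Sketch of programmatic transaction demarcation with plain JDBC. */
final class JdbcDemarcation {

    /** Hypothetical callback holding the statements that form one unit of work. */
    interface Work {
        void execute(Connection connection) throws SQLException;
    }

    /** Commits if the work succeeds; rolls back and rethrows if it fails. */
    static void runInTransaction(Connection connection, Work work) throws SQLException {
        connection.setAutoCommit(false);      // begins the transaction
        try {
            work.execute(connection);         // all SQL is sent on this connection
            connection.commit();              // ends the transaction
        } catch (SQLException | RuntimeException ex) {
            try {
                connection.rollback();        // undo everything sent since the begin
            } catch (SQLException rollbackEx) {
                ex.addSuppressed(rollbackEx); // keep the original failure visible
            }
            throw ex;
        }
    }
}
```

A caller would pass the data-access code as the callback, for example `JdbcDemarcation.runInTransaction(connection, c -> { /* SQL statements */ })`.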

In a system that manipulates data in several databases, a particular unit of work involves access to more than one resource. In this case, you can’t achieve atomicity with JDBC alone. You need a transaction manager that can handle several resources in one system transaction. Such transaction-processing systems expose the Java Transaction API (JTA) for interaction with the developer. The main API in JTA is the UserTransaction interface with methods to begin() and commit() a system transaction.

Furthermore, programmatic transaction management in a Hibernate application is exposed to the application developer via the Hibernate Transaction interface. You aren’t forced to use this API—Hibernate also lets you begin and end JDBC transactions directly, but this usage is discouraged because it binds your code to direct JDBC. In a Java EE environment (or if you installed it along with your Java SE application), a JTA-compatible transaction manager is available, so you should call the JTA UserTransaction interface to begin and end a transaction programmatically. However, the Hibernate Transaction interface, as you may have guessed, also works on top of JTA. We’ll show you all these options and discuss portability concerns in more detail.

Programmatic transaction demarcation with Java Persistence also has to work inside and outside of a Java EE application server. Outside of an application server, with plain Java SE, you’re dealing with resource-local transactions; this is what the EntityTransaction interface is good for—you’ve seen it in previous topics. Inside an application server, you call the JTA UserTransaction interface to begin and end a transaction.

Let’s summarize these interfaces and when they’re used:

■ java.sql.Connection: Plain JDBC transaction demarcation with setAutoCommit(false), commit(), and rollback(). It can but shouldn’t be used in a Hibernate application, because it binds your application to a plain JDBC environment.

■ org.hibernate.Transaction: Unified transaction demarcation in Hibernate applications. It works in a nonmanaged plain JDBC environment and also in an application server with JTA as the underlying system transaction service. The main benefit, however, is tight integration with persistence context management; for example, a Session is flushed automatically when you commit. A persistence context can also have the scope of this transaction (useful for conversations; see the next topic). Use this API in Java SE if you can’t have a JTA-compatible transaction service.

■ javax.transaction.UserTransaction: Standardized interface for programmatic transaction control in Java; part of JTA. This should be your primary choice whenever you have a JTA-compatible transaction service and want to control transactions programmatically.

■ javax.persistence.EntityTransaction: Standardized interface for programmatic transaction control in Java SE applications that use Java Persistence.

Declarative transaction demarcation, on the other hand, doesn’t require extra coding; and by definition, it solves the problem of portability.

Declarative transaction demarcation

In your application, you declare (for example, with annotations on methods) when you wish to work inside a transaction. It’s then the responsibility of the application deployer and the runtime environment to handle this concern. The standard container that provides declarative transaction services in Java is an EJB container, and the service is also called container-managed transactions (CMT). We’ll again write EJB session beans to show how both Hibernate and Java Persistence can benefit from this service.

Before you decide on a particular API, or on declarative transaction demarcation, let’s explore these options step by step. First, we assume you’re going to use native Hibernate in a plain Java SE application (a client/server web application, desktop application, or any two-tier system). After that, you’ll refactor the code to run in a managed Java EE environment (and see how to avoid that refactoring in the first place). We also discuss Java Persistence along the way.

Transactions in a Hibernate application

Imagine that you’re writing a Hibernate application that has to run in plain Java; no container and no managed database resources are available.

Programmatic transactions in Java SE

You configure Hibernate to create a JDBC connection pool for you, as you did in “The database connection pool”. Apart from the connection pool, no additional configuration settings are necessary if you’re writing a Java SE Hibernate application with the Transaction API:

■ The hibernate.transaction.factory_class option defaults to org.hibernate.transaction.JDBCTransactionFactory, which is the correct factory for the Transaction API in Java SE and for direct JDBC.

■ You can extend and customize the Transaction interface with your own implementation of a TransactionFactory. This is rarely necessary but has some interesting use cases. For example, if you have to write an audit log whenever a transaction is started, you can add this logging to a custom Transaction implementation.

Hibernate obtains a JDBC connection for each Session you’re going to work with:

A Hibernate Session is lazy. This is a good thing—it means it doesn’t consume any resources unless they’re absolutely needed. A JDBC Connection from the connection pool is obtained only when the database transaction begins. The call to beginTransaction() translates into setAutoCommit(false) on the fresh JDBC Connection. The Session is now bound to this database connection, and all SQL statements (in this case, all SQL required to conclude the auction) are sent on this connection. All database statements execute inside the same database transaction. (We assume that the concludeAuction() method calls the given Session to access the database.)

We already talked about write-behind behavior, so you know that the bulk of SQL statements are executed as late as possible, when the persistence context of the Session is flushed. This happens when you call commit() on the Transaction, by default. After you commit the transaction (or roll it back), the database connection is released and unbound from the Session. Beginning a new transaction with the same Session obtains another connection from the pool.

Closing the Session releases all other resources (for example, the persistence context); all managed persistent instances are now considered detached.

FAQ Is it faster to roll back read-only transactions? If code in a transaction reads data but doesn’t modify it, should you roll back the transaction instead of committing it? Would this be faster? Apparently, some developers found this to be faster in some special circumstances, and this belief has spread through the community. We tested this with the more popular database systems and found no difference. We also failed to discover any source of real numbers showing a performance difference. There is also no reason why a database system should have a suboptimal implementation—why it should not use the fastest transaction cleanup algorithm internally. Always commit your transaction and roll back if the commit fails. Having said that, the SQL standard includes a SET TRANSACTION READ ONLY statement. Hibernate doesn’t support an API that enables this setting, although you could implement your own custom Transaction and TransactionFactory to add this operation. We recommend you first investigate if this is supported by your database and what the possible performance benefits, if any, will be.

We need to discuss exception handling at this point.

Handling exceptions

If concludeAuction() as shown in the last example (or flushing of the persistence context during commit) throws an exception, you must force the transaction to roll back by calling tx.rollback(). This rolls back the transaction immediately, so no SQL operation you sent to the database has any permanent effect.

This seems straightforward, although you can probably already see that catching RuntimeException whenever you want to access the database won’t result in nice code.

NOTE A history of exceptions: Exceptions and how they should be handled always end in heated debates between Java developers. It isn’t surprising that Hibernate has some noteworthy history as well. Until Hibernate 3.x, all exceptions thrown by Hibernate were checked exceptions, so every Hibernate API forced the developer to catch and handle exceptions. This strategy was influenced by JDBC, which also throws only checked exceptions. However, it soon became clear that this doesn’t make sense, because all exceptions thrown by Hibernate are fatal. In many cases, the best a developer can do in this situation is to clean up, display an error message, and exit the application. Therefore, starting with Hibernate 3.x, all exceptions thrown by Hibernate are subtypes of the unchecked RuntimeException, which is usually handled in a single location in an application. This also makes any Hibernate template or wrapper API obsolete.

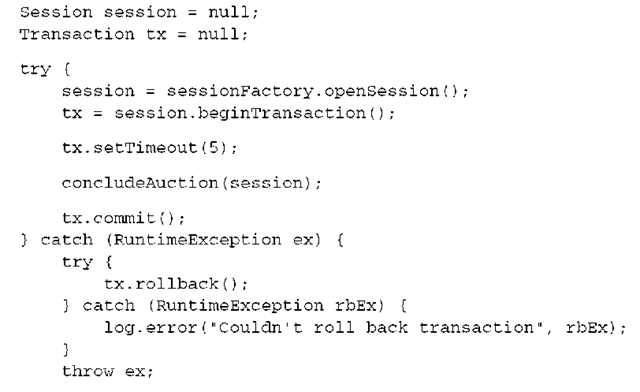

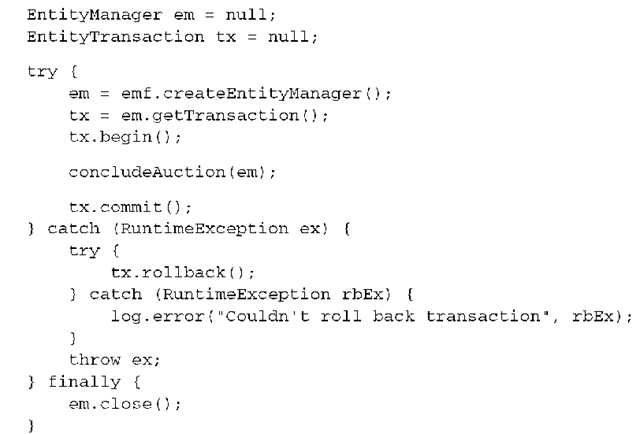

First, even though we admit that you wouldn’t write your application code with dozens (or hundreds) of try/catch blocks, the example we showed isn’t complete. This is an example of the standard idiom for a Hibernate unit of work with a database transaction that contains real exception handling:

Any Hibernate operation, including flushing the persistence context, can throw a RuntimeException. Even rolling back a transaction can throw an exception! You want to catch this exception and log it; otherwise, the original exception that led to the rollback is swallowed.

An optional method call in the example is setTimeout(), which takes the number of seconds a transaction is allowed to run. However, real monitored transactions aren’t available in a Java SE environment. The best Hibernate can do if you run this code outside of an application server (that is, without a transaction manager) is to set the number of seconds the driver will wait for a PreparedStatement to execute (Hibernate exclusively uses prepared statements). If the limit is exceeded, an SQLException is thrown.
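A sketch of the unit-of-work idiom described above, including the optional setTimeout() call, might look like this. So that it compiles without Hibernate on the classpath, SessionFactory, Session, and Transaction are declared here as minimal stand-in interfaces that only mirror the Hibernate method names; UnitOfWorkIdiom and Work are hypothetical names. In a real application you’d import the org.hibernate types instead.

```java
/** Sketch of a Hibernate-style unit of work with real exception handling. */
final class UnitOfWorkIdiom {

    // Stand-ins (assumptions) that mirror the Hibernate method names used in the text.
    interface SessionFactory { Session openSession(); }
    interface Session {
        Transaction getTransaction();
        void close();
    }
    interface Transaction {
        void setTimeout(int seconds);
        void begin();
        void commit();
        void rollback();
    }

    /** Hypothetical callback holding the data-access code, e.g. concluding an auction. */
    interface Work { void execute(Session session); }

    static void run(SessionFactory sessionFactory, Work work) {
        Session session = null;
        Transaction tx = null;
        try {
            session = sessionFactory.openSession();
            tx = session.getTransaction();
            tx.setTimeout(5);       // optional: seconds the transaction may run
            tx.begin();
            work.execute(session);  // for example, concludeAuction(session)
            tx.commit();            // flushes the persistence context by default
        } catch (RuntimeException ex) {
            try {
                if (tx != null) tx.rollback();
            } catch (RuntimeException rollbackEx) {
                // Log the rollback failure so it doesn't replace the original exception.
                System.err.println("Couldn't roll back transaction: " + rollbackEx);
            }
            throw ex;               // the original exception reaches the caller
        } finally {
            if (session != null) session.close();
        }
    }
}
```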

You don’t want to use this example as a template in your own application, because you should hide the exception handling with generic infrastructure code. You can, for example, write a single error handler for RuntimeException that knows when and how to roll back a transaction. The same can be said about opening and closing a Session.

Hibernate throws typed exceptions, all subtypes of RuntimeException that help you identify errors:

■ The most common HibernateException is a generic error. You have to either check the exception message or find out more about the cause by calling getCause() on the exception.

■ A JDBCException is any exception thrown by Hibernate’s internal JDBC layer. This kind of exception is always caused by a particular SQL statement, and you can get the offending statement with getSQL(). The internal exception thrown by the JDBC connection (the JDBC driver, actually) is available with getSQLException() or getCause(), and the database- and vendor-specific error code is available with getErrorCode().

■ Hibernate includes subtypes of JDBCException and an internal converter that tries to translate the vendor-specific error code thrown by the database driver into something more meaningful. The built-in converter can produce JDBCConnectionException, SQLGrammarException, LockAcquisitionException, DataException, and ConstraintViolationException for the most important database dialects supported by Hibernate. You can either manipulate or enhance the dialect for your database, or plug in a SQLExceptionConverterFactory to customize this conversion.

■ Other RuntimeExceptions thrown by Hibernate should also abort a transaction. You should always make sure you catch RuntimeException, no matter what you plan to do with any fine-grained exception-handling strategy.

You now know what exceptions you should catch and when to expect them. However, one question is probably on your mind: What should you do after you’ve caught an exception?

All exceptions thrown by Hibernate are fatal. This means you have to roll back the database transaction and close the current Session. You aren’t allowed to continue working with a Session that threw an exception.

Usually, you also have to exit the application after you close the Session following an exception, although there are some exceptions (for example, StaleObjectStateException) that naturally lead to a new attempt (possibly after interacting with the application user again) in a new Session. Because these are closely related to conversations and concurrency control, we’ll cover them later.

FAQ Can I use exceptions for validation? Some developers get excited once they see how many fine-grained exception types Hibernate can throw. This can lead you down the wrong path. For example, you may be tempted to catch the ConstraintViolationException for validation purposes. If a particular operation throws this exception, why not display a failure message (customized depending on the error code and text) to application users and let them correct the mistake? This strategy has two significant disadvantages. First, throwing unchecked values against the database to see what sticks isn’t the right strategy for a scalable application. You want to implement at least some data-integrity validation in the application layer. Second, all exceptions are fatal for your current unit of work. However, this isn’t how application users will interpret a validation error; they expect to still be inside a unit of work. Coding around this mismatch is awkward and difficult. Our recommendation is that you use the fine-grained exception types to display better-looking (fatal) error messages. Doing so helps you during development (no fatal exceptions should occur in production, ideally) and also helps any customer-support engineer who has to decide quickly if it’s an application error (constraint violated, wrong SQL executed) or if the database system is under load (locks couldn’t be acquired).

Programmatic transaction demarcation in Java SE with the Hibernate Transaction interface keeps your code portable. It can also run inside a managed environment, when a transaction manager handles the database resources.

Programmatic transactions with JTA

A managed runtime environment compatible with Java EE can manage resources for you. In most cases, the resources that are managed are database connections, but any resource that has an adaptor can integrate with a Java EE system (messaging or legacy systems, for example). Programmatic transaction demarcation on those resources, if they’re transactional, is unified and exposed to the developer with JTA; javax.transaction.UserTransaction is the primary interface to begin and end transactions.

The common managed runtime environment is a Java EE application server. Of course, application servers provide many more services, not only management of resources. Many Java EE services are modular, so installing an application server isn’t the only way to get them. You can obtain a stand-alone JTA provider if managed resources are all you need. Open source stand-alone JTA providers include JBoss Transactions (http://www.jboss.com/products/transactions), ObjectWeb JOTM (http://jotm.objectweb.org), and others. You can install such a JTA service along with your Hibernate application (in Tomcat, for example). It will manage a pool of database connections for you, provide JTA interfaces for transaction demarcation, and provide managed database connections through a JNDI registry.

The following are benefits of managed resources with JTA and reasons to use this Java EE service:

■ A transaction-management service can unify all resources, regardless of type, and expose transaction control to you with a single standardized API. This means that you can replace the Hibernate Transaction API and use JTA directly everywhere. It’s then the responsibility of the application deployer to install the application on (or with) a JTA-compatible runtime environment. This strategy moves portability concerns where they belong; the application relies on standardized Java EE interfaces, and the runtime environment has to provide an implementation.

■ A Java EE transaction manager can enlist multiple resources in a single transaction. If you work with several databases (or more than one resource), you probably want a two-phase commit protocol to guarantee atomicity of a transaction across resource boundaries. In such a scenario, Hibernate is configured with several SessionFactorys, one for each database, and their Sessions obtain managed database connections that all participate in the same system transaction.

■ The quality of JTA implementations is usually higher than that of simple JDBC connection pools. Application servers and stand-alone JTA providers that are modules of application servers usually have had more testing in high-end systems with a large transaction volume.

■ JTA providers don’t add unnecessary overhead at runtime (a common misconception). The simple case (a single JDBC database) is handled as efficiently as with plain JDBC transactions. The connection pool managed behind a JTA service is probably much better software than a random connection pooling library you’d use with plain JDBC.

Let’s assume that you aren’t sold on JTA and that you want to continue using the Hibernate Transaction API to keep your code runnable in Java SE and with managed Java EE services, without any code changes. To deploy the previous code examples, which all call the Hibernate Transaction API, on a Java EE application server, you need to switch the Hibernate configuration to JTA:

■ The hibernate.transaction.factory_class option must be set to org.hibernate.transaction.JTATransactionFactory.

■ Hibernate needs to know the JTA implementation on which you’re deploying, for two reasons: First, different implementations may expose the JTA UserTransaction, which Hibernate has to call internally now, under different names. Second, Hibernate has to hook into the synchronization process of the JTA transaction manager to handle its caches. You have to set the hibernate.transaction.manager_lookup_class option to configure both: for example, to org.hibernate.transaction.JBossTransactionManagerLookup. Lookup classes for the most common JTA implementations and application servers are packaged with Hibernate (and can be customized if needed). Check the Javadoc for the package.

■ Hibernate is no longer responsible for managing a JDBC connection pool; it obtains managed database connections from the runtime container. These connections are exposed by the JTA provider through JNDI, a global registry. You must configure Hibernate with the right name for your database resources on JNDI.
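The three settings in this list could be collected as shown in the following sketch. The JtaSettings class is a hypothetical name, and java:/ExampleDS is an assumed JNDI name that depends entirely on your container; the datasource key is Hibernate’s hibernate.connection.datasource setting. The JBoss lookup class is the example named in the list and would be replaced by the one matching your JTA implementation.

```java
import java.util.Properties;

/** Sketch: Hibernate settings for running the Transaction API on top of JTA. */
final class JtaSettings {

    static Properties jtaProperties() {
        Properties props = new Properties();
        // Let the Hibernate Transaction API delegate to JTA.
        props.setProperty("hibernate.transaction.factory_class",
                "org.hibernate.transaction.JTATransactionFactory");
        // Tell Hibernate which JTA implementation it is deployed on.
        props.setProperty("hibernate.transaction.manager_lookup_class",
                "org.hibernate.transaction.JBossTransactionManagerLookup");
        // Assumed JNDI name; use the name your container binds the datasource to.
        props.setProperty("hibernate.connection.datasource", "java:/ExampleDS");
        return props;
    }
}
```

The same keys and values can equally be placed in a hibernate.properties file.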

Now the same piece of code you wrote earlier for Java SE directly on top of JDBC will work in a JTA environment with managed datasources:

However, the database connection-handling is slightly different. Hibernate obtains a managed database connection for each Session you’re using and, again, tries to be as lazy as possible. Without JTA, Hibernate would hold on to a particular database connection from the beginning until the end of the transaction. With a JTA configuration, Hibernate is even more aggressive: A connection is obtained and used for only a single SQL statement and then is immediately returned to the managed connection pool. The application server guarantees that it will hand out the same connection during the same transaction, when it’s needed again for another SQL statement. This aggressive connection-release mode is Hibernate’s internal behavior, and it doesn’t make any difference for your application and how you write code. (Hence, the code example is line-by-line the same as the last one.)

A JTA system supports global transaction timeouts; it can monitor transactions. So, setTimeout() now controls the global JTA timeout setting—equivalent to calling UserTransaction.setTransactionTimeout().

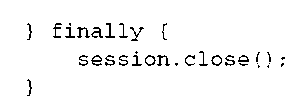

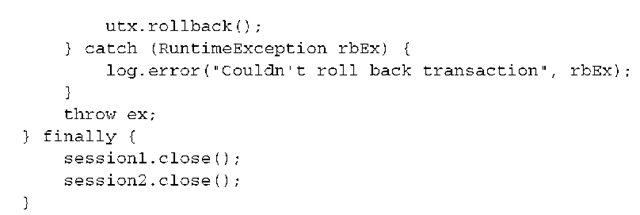

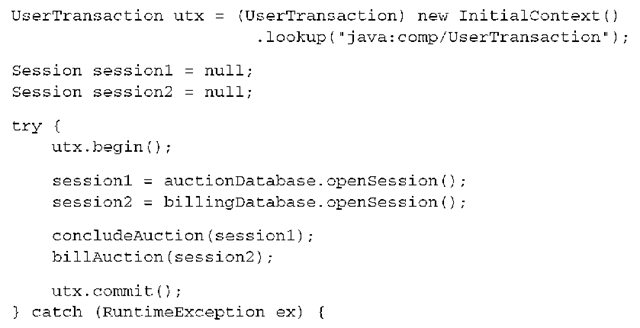

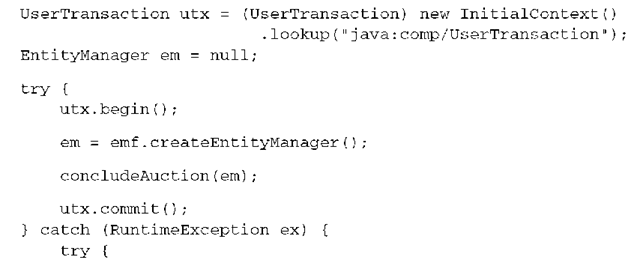

The Hibernate Transaction API guarantees portability with a simple change of Hibernate configuration. If you want to move this responsibility to the application deployer, you should write your code against the standardized JTA interface, instead. To make the following example a little more interesting, you’ll also work with two databases (two SessionFactorys) inside the same system transaction:

(Note that this code snippet can throw some other, checked exceptions, like a NamingException from the JNDI lookup. You need to handle these accordingly.)

First, a handle on a JTA UserTransaction must be obtained from the JNDI registry. Then, you begin and end a transaction, and the (container-provided) database connections used by all Hibernate Sessions are enlisted in that transaction automatically. Even if you aren’t using the Transaction API, you should still configure hibernate.transaction.factory_class and hibernate.transaction.manager_lookup_class for JTA and your environment, so that Hibernate can interact with the transaction system internally.
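A sketch of such a unit of work across two databases follows. To keep it compilable without a JTA or Hibernate library, UserTransaction, SessionFactory, and Session are stand-in interfaces that only mirror the real method names (the real JTA methods declare specific checked exceptions, simplified here to Exception); JtaUnitOfWork and Work are hypothetical names. In a container, the UserTransaction would typically come from a JNDI lookup of java:comp/UserTransaction instead of being passed in.

```java
/** Sketch of one system transaction spanning two Hibernate-style Sessions. */
final class JtaUnitOfWork {

    // Stand-ins (assumptions) for javax.transaction.UserTransaction and Hibernate types.
    interface UserTransaction {
        void begin() throws Exception;
        void commit() throws Exception;
        void rollback() throws Exception;
    }
    interface SessionFactory { Session openSession(); }
    interface Session { void flush(); void close(); }

    /** Hypothetical callback holding the data-access code for both databases. */
    interface Work { void execute(Session first, Session second); }

    static void run(UserTransaction utx, SessionFactory factory1,
                    SessionFactory factory2, Work work) throws Exception {
        Session session1 = null;
        Session session2 = null;
        try {
            utx.begin();
            session1 = factory1.openSession();
            session2 = factory2.openSession();
            work.execute(session1, session2);
            session1.flush();   // with default settings, flushing is manual
            session2.flush();
            utx.commit();       // one system transaction covers both databases
        } catch (RuntimeException ex) {
            try {
                utx.rollback();
            } catch (Exception rollbackEx) {
                System.err.println("Couldn't roll back transaction: " + rollbackEx);
            }
            throw ex;
        } finally {
            if (session1 != null) session1.close();
            if (session2 != null) session2.close();
        }
    }
}
```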

With default settings, it’s also your responsibility to flush() each Session manually to synchronize it with the database (to execute all SQL DML). The Hibernate Transaction API did this automatically for you. You also have to close all Sessions manually. On the other hand, you can enable the hibernate.transaction.flush_before_completion and/or the hibernate.transaction.auto_close_session configuration options and let Hibernate take care of this for you again. Flushing and closing are then part of the internal synchronization procedure of the transaction manager and occur before (and after, respectively) the JTA transaction ends. With these two settings enabled, the code can be simplified to the following:

The persistence contexts of session1 and session2 are now flushed automatically during commit of the UserTransaction, and both Sessions are closed after the transaction completes.

Our advice is to use JTA directly whenever possible. You should always try to move the responsibility for portability outside of the application and, if you can, require deployment in an environment that provides JTA.

Programmatic transaction demarcation requires application code written against a transaction demarcation interface. A much nicer approach that avoids any nonportable code spread throughout your application is declarative transaction demarcation.

Container-managed transactions

Declarative transaction demarcation implies that a container takes care of this concern for you. You declare if and how you want your code to participate in a transaction. The responsibility to provide a container that supports declarative transaction demarcation is again where it belongs, with the application deployer.

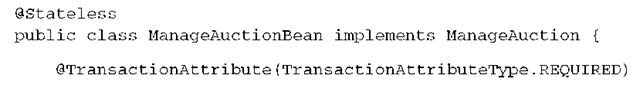

CMT is a standard feature of Java EE and, in particular, EJB. The code we’ll show you next is based on EJB 3.0 session beans (Java EE only); you define transaction boundaries with annotations. Note that the actual data-access code doesn’t change if you have to use the older EJB 2.1 session beans; however, you have to write an EJB deployment descriptor in XML to create your transaction assembly; this is optional in EJB 3.0.

(A stand-alone JTA implementation doesn’t provide container-managed and declarative transactions. However, JBoss Application Server is available as a modular server with a minimal footprint, and it can provide only JTA and an EJB 3.0 container, if needed.)

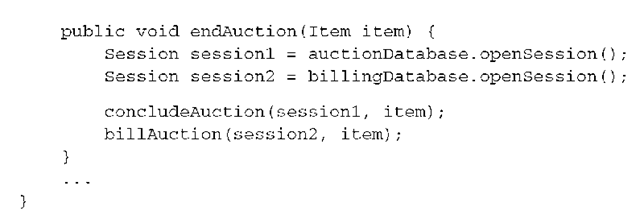

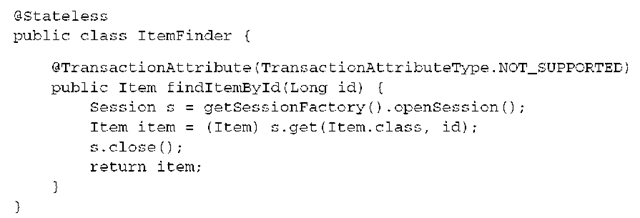

Suppose that an EJB 3.0 session bean implements an action that ends an auction. The code you previously wrote with programmatic JTA transaction demarcation is moved into a stateless session bean:

The container notices your declaration of a TransactionAttribute and applies it to the endAuction() method. If no system transaction is running when the method is called, a new transaction is started (it’s REQUIRED). Once the method returns, and if the transaction was started when the method was called (and not by anyone else), the transaction commits. The system transaction is automatically rolled back if the code inside the method throws a RuntimeException.
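To make the REQUIRED behavior concrete, here is a toy sketch of what a container does around such a method call. It is not EJB code: in a real bean you’d only annotate the method with @TransactionAttribute(TransactionAttributeType.REQUIRED). The RequiredSemantics class, its TxManager, and the required() helper are hypothetical, single-threaded simplifications; in particular, the sketch rolls back immediately where a real container would apply its own rollback handling.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Supplier;

/** Toy illustration of REQUIRED transaction semantics; not a real container. */
final class RequiredSemantics {

    /** Hypothetical stand-in for the container's transaction system. */
    static final class TxManager {
        final List<String> events = new ArrayList<>();
        private boolean active;

        boolean isActive() { return active; }
        void begin() { active = true; events.add("begin"); }
        void commit() { active = false; events.add("commit"); }
        void rollback() { active = false; events.add("rollback"); }
    }

    /** Joins a running transaction, or starts (and later ends) a new one. */
    static <T> T required(TxManager tm, Supplier<T> call) {
        boolean startedHere = !tm.isActive();
        if (startedHere) tm.begin();
        try {
            T result = call.get();
            if (startedHere) tm.commit();     // only the call that began it commits
            return result;
        } catch (RuntimeException ex) {
            if (tm.isActive()) tm.rollback(); // a RuntimeException rolls back
            throw ex;
        }
    }
}
```

A nested call made while a transaction is already running joins it, so only the outermost call begins and commits.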

We again show two SessionFactorys for two databases, for the sake of the example. They could be assigned with a JNDI lookup (Hibernate can bind them there at startup) or from an enhanced version of HibernateUtil. Both obtain database connections that are enlisted with the same container-managed transaction. And, if the container’s transaction system and the resources support it, you again get a two-phase commit protocol that ensures atomicity of the transaction across databases.

You have to set some configuration options to enable CMT with Hibernate:

■ The hibernate.transaction.factory_class option must be set to org.hibernate.transaction.CMTTransactionFactory.

■ You need to set hibernate.transaction.manager_lookup_class to the right lookup class for your application server.

Also note that all EJB session beans default to CMT, so if you want to disable CMT and call the JTA UserTransaction directly in any session bean method, annotate the EJB class with @TransactionManagement(TransactionManagementType.BEAN). You’re then working with bean-managed transactions (BMT). Even if it may work in most application servers, mixing CMT and BMT in a single bean isn’t allowed by the Java EE specification.

The CMT code already looks much nicer than the programmatic transaction demarcation. If you configure Hibernate to use CMT, it knows that it should flush and close a Session that participates in a system transaction automatically. Furthermore, you’ll soon improve this code and even remove the two lines that open a Hibernate Session.

Let’s look at transaction handling in a Java Persistence application.

Transactions with Java Persistence

With Java Persistence, you also have the design choice to make between programmatic transaction demarcation in application code or declarative transaction demarcation handled automatically by the runtime container. Let’s investigate the first option with plain Java SE and then repeat the examples with JTA and EJB components.

The description resource-local transaction applies to all transactions that are controlled by the application (programmatic) and that aren’t participating in a global system transaction. They translate directly into the native transaction system of the resource you’re dealing with. Because you’re working with JDBC databases, this means a resource-local transaction translates into a JDBC database transaction.

Resource-local transactions in JPA are controlled with the EntityTransaction API. This interface exists not for portability reasons, but to enable particular features of Java Persistence—for instance, flushing of the underlying persistence context when you commit a transaction.

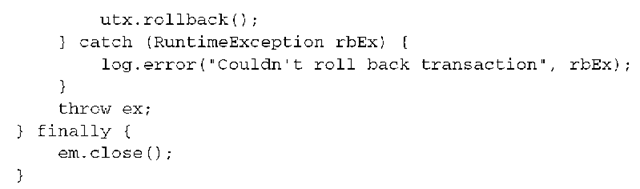

You’ve seen the standard idiom of Java Persistence in Java SE many times. Here it is again with exception handling:

This pattern is close to its Hibernate equivalent, with the same implications: You have to manually begin and end a database transaction, and you must guarantee that the application-managed EntityManager is closed in a finally block. (Although we often show code examples that don’t handle exceptions or aren’t wrapped in a try/catch block, this isn’t optional.)

Exceptions thrown by JPA are subtypes of RuntimeException. Any exception invalidates the current persistence context, and you aren’t allowed to continue working with the EntityManager once an exception has been thrown. Therefore, all the strategies we discussed for Hibernate exception handling also apply to Java Persistence exception handling. In addition, the following rules apply:

■ Any exception thrown by any method of the EntityManager interface triggers an automatic rollback of the current transaction.

■ Any exception thrown by any method of the javax.persistence.Query interface triggers an automatic rollback of the current transaction, except for NoResultException and NonUniqueResultException. So, the previous code example that catches all exceptions also executes a rollback for these exceptions.

Note that JPA doesn’t offer fine-grained SQL exception types. The most common exception is javax.persistence.PersistenceException. All other exceptions thrown are subtypes of PersistenceException, and you should consider them all fatal except NoResultException and NonUniqueResultException. However, you may call getCause() on any exception thrown by JPA and find a wrapped native Hibernate exception, including the fine-grained SQL exception types.

If you use Java Persistence inside an application server or in an environment that at least provides JTA (see our earlier discussions for Hibernate), you call the JTA interfaces for programmatic transaction demarcation. The EntityTransaction interface is available only for resource-local transactions.

JTA transactions with Java Persistence

If your Java Persistence code is deployed in an environment where JTA is available, and you want to use JTA system transactions, you need to call the JTA UserTransaction interface to control transaction boundaries programmatically:

The persistence context of the EntityManager is scoped to the JTA transaction. All SQL statements flushed by this EntityManager are executed inside the JTA transaction on a database connection that is enlisted with the transaction. The persistence context is flushed and closed automatically when the JTA transaction commits. You could use several EntityManagers to access several databases in the same system transaction, just as you’d use several Sessions in a native Hibernate application.

Note that the scope of the persistence context changed! It’s now scoped to the JTA transaction, and any object that was in persistent state during the transaction is considered detached once the transaction is committed.

The rules for exception handling are equivalent to those for resource-local transactions. If you use JTA in EJBs, don’t forget to set @TransactionManagement(TransactionManagementType.BEAN) on the class to enable BMT.

You won’t often use Java Persistence with JTA and not also have an EJB container available. If you don’t deploy a stand-alone JTA implementation, a Java EE 5.0 application server will provide both. Instead of programmatic transaction demarcation, you’ll probably utilize the declarative features of EJBs.

Java Persistence and CMT

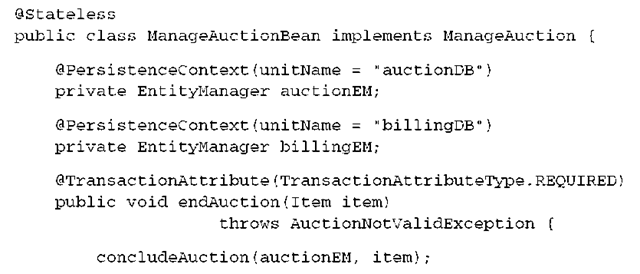

Let’s refactor the ManageAuction EJB session bean from the earlier Hibernate-only examples to Java Persistence interfaces. You also let the container inject an EntityManager:

Again, what happens inside the concludeAuction() and billAuction() methods isn’t relevant for this example; assume that they need the EntityManagers to access the database. The TransactionAttribute for the endAuction() method requires that all database access occurs inside a transaction. If no system transaction is active when endAuction() is called, a new transaction is started for this method. If the method returns, and if the transaction was started for this method, it’s committed. Each EntityManager has a persistence context that spans the scope of the transaction and is flushed automatically when the transaction commits. The persistence context has the same scope as the endAuction() method, if no transaction is active when the method is called.

Both persistence units are configured to deploy on JTA, so two managed database connections, one for each database, are enlisted inside the same transaction, and atomicity is guaranteed by the transaction manager of the application server.

You declare that the endAuction() method may throw an AuctionNotValidException. This is a custom exception you write; before ending the auction, you check if everything is correct (the end time of the auction has been reached, there is a bid, and so on). This is a checked exception that is a subtype of java.lang.Exception. An EJB container treats this as an application exception and doesn’t trigger any action if the EJB method throws this exception. However, the container recognizes system exceptions, which by default are all unchecked RuntimeExceptions that may be thrown by an EJB method. A system exception thrown by an EJB method enforces an automatic rollback of the system transaction.

In other words, you don’t need to catch and rethrow any system exception from your Java Persistence operations; let the container handle them. You have two choices for rolling back a transaction when an application exception is thrown: First, you can catch it and call the JTA UserTransaction manually, and set it to roll back. Or you can add an @ApplicationException(rollback = true) annotation to the class of AuctionNotValidException; the container will then recognize that you wish an automatic rollback whenever an EJB method throws this application exception.
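The rollback rules just described can be summarized as a small decision function. This is only a simplified sketch of the behavior, not container code: the AppException annotation is a hypothetical stand-in for the real @ApplicationException so the example runs without an EJB container, and AuctionClosedException is an invented second exception that carries the rollback marker.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

public class RollbackPolicySketch {

    /** Hypothetical stand-in for the EJB @ApplicationException annotation. */
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.TYPE)
    public @interface AppException {
        boolean rollback() default false;
    }

    /** Checked application exception without a rollback marker. */
    public static class AuctionNotValidException extends Exception {
    }

    /** Invented application exception that asks for an automatic rollback. */
    @AppException(rollback = true)
    public static class AuctionClosedException extends Exception {
    }

    /** Returns true if this sketch of the container would roll back the transaction. */
    public static boolean rollsBack(Throwable thrown) {
        AppException marker = thrown.getClass().getAnnotation(AppException.class);
        if (marker != null) {
            return marker.rollback();               // annotated application exception
        }
        return thrown instanceof RuntimeException;  // unchecked means system exception
    }
}
```

With these rules, an unannotated checked exception leaves the transaction alone, whereas any RuntimeException or an exception marked with rollback = true triggers a rollback.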

You’re now ready to use Java Persistence and Hibernate inside and outside of an application server, with or without JTA, and in combination with EJBs and container-managed transactions. We’ve discussed (almost) all aspects of transaction atomicity. Naturally, you probably still have questions about the isolation between concurrently running transactions.

Controlling concurrent access

Databases (and other transactional systems) attempt to ensure transaction isolation, meaning that, from the point of view of each concurrent transaction, it appears that no other transactions are in progress. Traditionally, this has been implemented with locking. A transaction may place a lock on a particular item of data in the database, temporarily preventing access to that item by other transactions. Some modern databases such as Oracle and PostgreSQL implement transaction isolation with multiversion concurrency control (MVCC), which is generally considered more scalable. We’ll discuss isolation assuming a locking model; most of our observations are also applicable to multiversion concurrency, however.

How databases implement concurrency control is of the utmost importance in your Hibernate or Java Persistence application. Applications inherit the isolation guarantees provided by the database management system. For example, Hibernate never locks anything in memory. If you consider the many years of experience that database vendors have with implementing concurrency control, you’ll see the advantage of this approach. On the other hand, some features in Hibernate and Java Persistence (either because you use them or by design) can improve the isolation guarantee beyond what is provided by the database.

We discuss concurrency control in several steps. We explore the lowest layer and investigate the transaction isolation guarantees provided by the database. Then, we look at Hibernate and Java Persistence features for pessimistic and optimistic concurrency control at the application level, and what other isolation guarantees Hibernate can provide.

Understanding database-level concurrency

Your job as a Hibernate application developer is to understand the capabilities of your database and how to change the database isolation behavior if needed in your particular scenario (and by your data integrity requirements). Let’s take a step back. If we’re talking about isolation, you may assume that two things are either isolated or not isolated; there is no grey area in the real world. When we talk about database transactions, complete isolation comes at a high price. Several isolation levels are available, which, naturally, weaken full isolation but increase performance and scalability of the system.

Transaction isolation issues

First, let’s look at several phenomena that may occur when you weaken full transaction isolation. The ANSI SQL standard defines the standard transaction isolation levels in terms of which of these phenomena are permissible in a database management system:

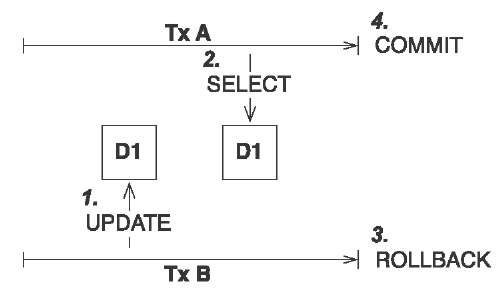

A lost update occurs if two transactions both update a row and then the second transaction aborts, causing both changes to be lost. This occurs in systems that don’t implement locking. The concurrent transactions aren’t isolated. This is shown in figure 10.2.

Figure 10.2 Lost update: two transactions update the same data without locking.

A dirty read occurs if one transaction reads changes made by another transaction that hasn’t yet committed. This is dangerous, because the changes made by the other transaction may later be rolled back, and invalid data may be written by the first transaction (see figure 10.3).

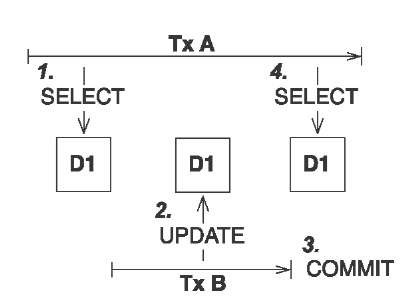

An unrepeatable read occurs if a transaction reads a row twice and reads different state each time. For example, another transaction may have written to the row and committed between the two reads, as shown in figure 10.4.

A special case of unrepeatable read is the second lost updates problem. Imagine that two concurrent transactions both read a row: One writes to it and commits, and then the second writes to it and commits. The changes made by the first writer are lost. This issue is especially relevant if you think about application conversations that need several database transactions to complete. We’ll explore this case later in more detail.

Figure 10.3 Dirty read: transaction A reads uncommitted data.

Figure 10.4 Unrepeatable read: transaction A executes two nonrepeatable reads.

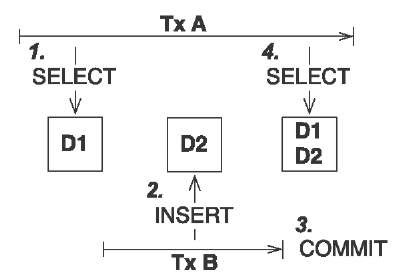

A phantom read is said to occur when a transaction executes a query twice, and the second result set includes rows that weren’t visible in the first result set or rows that have been deleted. (It need not necessarily be exactly the same query.) This situation is caused by another transaction inserting or deleting rows between the execution of the two queries, as shown in figure 10.5.

Figure 10.5 Phantom read: transaction A reads new data in the second select.

Now that you understand all the bad things that can occur, we can define the transaction isolation levels and see what problems they prevent.

ANSI transaction isolation levels

The standard isolation levels are defined by the ANSI SQL standard, but they aren’t peculiar to SQL databases. JTA defines exactly the same isolation levels, and you’ll use these levels to declare your desired transaction isolation later. With increased levels of isolation comes higher cost and serious degradation of performance and scalability:

■ A system that permits dirty reads but not lost updates is said to operate in read uncommitted isolation. One transaction may not write to a row if another uncommitted transaction has already written to it. Any transaction may read any row, however. This isolation level may be implemented in the database-management system with exclusive write locks.

■ A system that permits unrepeatable reads but not dirty reads is said to implement read committed transaction isolation. This may be achieved by using shared read locks and exclusive write locks. Reading transactions don’t block other transactions from accessing a row. However, an uncommitted writing transaction blocks all other transactions from accessing the row.

■ A system operating in repeatable read isolation mode permits neither unrepeatable reads nor dirty reads. Phantom reads may occur. Reading transactions block writing transactions (but not other reading transactions), and writing transactions block all other transactions.

■ Serializable provides the strictest transaction isolation. This isolation level emulates serial transaction execution, as if transactions were executed one after another, serially, rather than concurrently. Serializability may not be implemented using only row-level locks. There must instead be some other mechanism that prevents a newly inserted row from becoming visible to a transaction that has already executed a query that would return the row.
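The four definitions above amount to a small lookup table of which phenomena each level permits. The sketch below encodes that table using the isolation constants from java.sql.Connection; the IsolationMatrix class and Phenomenon enum are hypothetical names for illustration. It reflects only the standard definitions given here, and an actual DBMS may be stricter at a given level.

```java
import java.sql.Connection;

public class IsolationMatrix {

    /** The phenomena used above to define the isolation levels. */
    public enum Phenomenon { DIRTY_READ, UNREPEATABLE_READ, PHANTOM_READ }

    /** Returns true if the given isolation level permits the phenomenon. */
    public static boolean permits(int isolationLevel, Phenomenon phenomenon) {
        switch (isolationLevel) {
            case Connection.TRANSACTION_READ_UNCOMMITTED:
                return true;                                     // everything may occur
            case Connection.TRANSACTION_READ_COMMITTED:
                return phenomenon != Phenomenon.DIRTY_READ;      // no dirty reads
            case Connection.TRANSACTION_REPEATABLE_READ:
                return phenomenon == Phenomenon.PHANTOM_READ;    // only phantoms remain
            case Connection.TRANSACTION_SERIALIZABLE:
                return false;                                    // nothing may occur
            default:
                throw new IllegalArgumentException("Unknown isolation level: " + isolationLevel);
        }
    }
}
```

Lost updates aren’t part of the enum because, by the definitions above, even read uncommitted isolation doesn’t permit them.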

How exactly the locking system is implemented in a DBMS varies significantly; each vendor has a different strategy. You should study the documentation of your DBMS to find out more about the locking system, how locks are escalated (from row-level, to pages, to whole tables, for example), and what impact each isolation level has on the performance and scalability of your system.

It’s nice to know how all these technical terms are defined, but how does that help you choose an isolation level for your application?

Choosing an isolation level

Developers (ourselves included) are often unsure what transaction isolation level to use in a production application. Too great a degree of isolation harms scalability of a highly concurrent application. Insufficient isolation may cause subtle, unreproducible bugs in an application that you’ll never discover until the system is working under heavy load.

Note that we refer to optimistic locking (with versioning) in the following explanation, a concept explained later in this topic. You may want to skip this section and come back when it’s time to make the decision for an isolation level in your application. Picking the correct isolation level is, after all, highly dependent on your particular scenario. Read the following discussion as recommendations, not carved in stone.

Hibernate tries hard to be as transparent as possible regarding transactional semantics of the database. Nevertheless, caching and optimistic locking affect these semantics. What is a sensible database isolation level to choose in a Hibernate application?

First, eliminate the read uncommitted isolation level. It’s extremely dangerous to use one transaction’s uncommitted changes in a different transaction. The rollback or failure of one transaction will affect other concurrent transactions. Rollback of the first transaction could bring other transactions down with it, or perhaps even cause them to leave the database in an incorrect state. It’s even possible that changes made by a transaction that ends up being rolled back could be committed anyway, because they could be read and then propagated by another transaction that is successful!

Secondly, most applications don’t need serializable isolation (phantom reads aren’t usually problematic), and this isolation level tends to scale poorly. Few existing applications use serializable isolation in production, but rather rely on pessimistic locks (see next sections) that effectively force a serialized execution of operations in certain situations.

This leaves you a choice between read committed and repeatable read. Let’s first consider repeatable read. This isolation level eliminates the possibility that one transaction can overwrite changes made by another concurrent transaction (the second lost updates problem) if all data access is performed in a single atomic database transaction. A read lock held by a transaction prevents any write lock a concurrent transaction may wish to obtain. This is an important issue, but enabling repeatable read isn’t the only way to resolve it.

Let’s assume you’re using versioned data, something that Hibernate can do for you automatically. The combination of the (mandatory) persistence context cache and versioning already gives you most of the nice features of repeatable read isolation. In particular, versioning prevents the second lost updates problem, and the persistence context cache also ensures that the state of the persistent instances loaded by one transaction is isolated from changes made by other transactions. So, read-committed isolation for all database transactions is acceptable if you use versioned data.

Repeatable read provides more reproducibility for query result sets (only for the duration of the database transaction); but because phantom reads are still possible, that doesn’t appear to have much value. You can obtain a repeatable-read guarantee explicitly in Hibernate for a particular transaction and piece of data (with a pessimistic lock).

Setting the transaction isolation level allows you to choose a good default locking strategy for all your database transactions. How do you set the isolation level?

Setting an isolation level

Every JDBC connection to a database is in the default isolation level of the DBMS, usually read committed or repeatable read. You can change this default in the DBMS configuration. You may also set the transaction isolation for JDBC connections on the application side, with the Hibernate configuration option hibernate.connection.isolation (for example, hibernate.connection.isolation = 4 for repeatable read).

Hibernate sets this isolation level on every JDBC connection obtained from a connection pool before starting a transaction. The sensible values for this option are as follows (you may also find them as constants in java.sql.Connection):

■ 1—Read uncommitted isolation

■ 2—Read committed isolation

■ 4—Repeatable read isolation

■ 8—Serializable isolation
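Because the numeric values are the java.sql.Connection constants, you can avoid magic numbers when you build the configuration programmatically. The following sketch assumes you pass a java.util.Properties object to your Hibernate bootstrap code; the IsolationSetting class is a hypothetical helper, whereas hibernate.connection.isolation is the option discussed here.

```java
import java.sql.Connection;
import java.util.Properties;

public class IsolationSetting {

    /** Builds configuration properties that request read committed isolation. */
    public static Properties readCommittedConfig() {
        Properties props = new Properties();
        // Connection.TRANSACTION_READ_COMMITTED has the value 2
        props.setProperty("hibernate.connection.isolation",
                String.valueOf(Connection.TRANSACTION_READ_COMMITTED));
        return props;
    }

    public static void main(String[] args) {
        // prints 2
        System.out.println(readCommittedConfig().getProperty("hibernate.connection.isolation"));
    }
}
```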

Note that Hibernate never changes the isolation level of connections obtained from an application server-provided database connection in a managed environment! You can change the default isolation using the configuration of your application server. (The same is true if you use a stand-alone JTA implementation.)

As you can see, setting the isolation level is a global option that affects all connections and transactions. From time to time, it’s useful to specify a more restrictive lock for a particular transaction. Hibernate and Java Persistence rely on optimistic concurrency control, and both allow you to obtain additional locking guarantees with version checking and pessimistic locking.

Optimistic concurrency control

An optimistic approach always assumes that everything will be OK and that conflicting data modifications are rare. Optimistic concurrency control raises an error only at the end of a unit of work, when data is written. Multiuser applications usually default to optimistic concurrency control and database connections with a read-committed isolation level. Additional isolation guarantees are obtained only when appropriate; for example, when a repeatable read is required. This approach guarantees the best performance and scalability.

Understanding the optimistic strategy

To understand optimistic concurrency control, imagine that two transactions read a particular object from the database, and both modify it. Thanks to the read-committed isolation level of the database connection, neither transaction will run into any dirty reads. However, reads are still nonrepeatable, and updates may also be lost. This is a problem you’ll face when you think about conversations, which are atomic transactions from the point of view of your users. Look at figure 10.6.

Figure 10.6 Conversation B overwrites changes made by conversation A.

Let’s assume that two users select the same piece of data at the same time. The user in conversation A submits changes first, and the conversation ends with a successful commit of the second transaction. Some time later (maybe only a second), the user in conversation B submits changes. This second transaction also commits successfully. The changes made in conversation A have been lost, and (potentially worse) modifications of data committed in conversation B may have been based on stale information.

You have three choices for how to deal with lost updates in these second transactions in the conversations:

■ Last commit wins—Both transactions commit successfully, and the second commit overwrites the changes of the first. No error message is shown.

■ First commit wins—The transaction of conversation A is committed, and the user committing the transaction in conversation B gets an error message. The user must restart the conversation by retrieving fresh data and go through all steps of the conversation again with nonstale data.

■ Merge conflicting updates—The first modification is committed, and the transaction in conversation B aborts with an error message when it’s committed. The user of the failed conversation B may however apply changes selectively, instead of going through all the work in the conversation again.

If you don’t enable optimistic concurrency control, and by default it isn’t enabled, your application runs with a last commit wins strategy. In practice, this issue of lost updates is frustrating for application users, because they may see all their work lost without an error message.
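To see how silently this happens, consider a minimal in-memory simulation of last commit wins. A map stands in for the database row, and each conversation works on its own copy; the class name and the values are invented for illustration, and no Hibernate API is involved.

```java
import java.util.HashMap;
import java.util.Map;

public class LastCommitWinsSketch {

    /** Simulates two conversations that write back the whole row they loaded. */
    public static Map<String, String> run() {
        Map<String, String> row = new HashMap<>();
        row.put("price", "10.00");
        row.put("description", "Old description");

        // Both conversations load the same state of the row.
        Map<String, String> copyA = new HashMap<>(row);
        Map<String, String> copyB = new HashMap<>(row);

        copyA.put("price", "12.99");                    // user A changes the price
        copyB.put("description", "New description");    // user B changes the description

        row.putAll(copyA);   // conversation A commits first
        row.putAll(copyB);   // conversation B commits last and writes its stale price
        return row;
    }

    public static void main(String[] args) {
        // prints 10.00: the price change made in conversation A is gone
        System.out.println(run().get("price"));
    }
}
```

Neither commit fails, which is exactly why the user in conversation A never learns that the new price was overwritten.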

Obviously, first commit wins is much more attractive. If the application user of conversation B commits, he gets an error message that reads, “Somebody already committed modifications to the data you’re about to commit. You’ve been working with stale data. Please restart the conversation with fresh data.” It’s your responsibility to design and write the application to produce this error message and to direct the user to the beginning of the conversation. Hibernate and Java Persistence help you with automatic optimistic locking, so that you get an exception whenever a transaction tries to commit an object that has a conflicting updated state in the database.

Merge conflicting changes is a variation of first commit wins. Instead of displaying an error message that forces the user to go back all the way, you offer a dialog that allows the user to merge conflicting changes manually. This is the best strategy because no work is lost and application users are less frustrated by optimistic concurrency failures. However, providing a dialog to merge changes is much more time-consuming for you as a developer than showing an error message and forcing the user to repeat all the work. We’ll leave it up to you whether you want to use this strategy.

Optimistic concurrency control can be implemented in many ways. Hibernate works with automatic versioning.

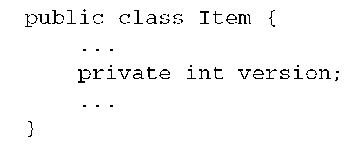

Enabling versioning in Hibernate

Hibernate provides automatic versioning. Each entity instance has a version, which can be a number or a timestamp. Hibernate increments an object’s version when it’s modified, compares versions automatically, and throws an exception if a conflict is detected. Consequently, you add this version property to all your persistent entity classes to enable optimistic locking:

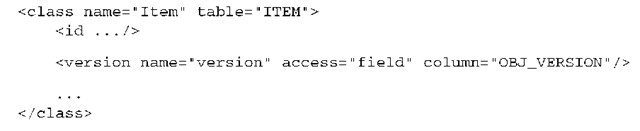

You can also add a getter method; however, version numbers must not be modified by the application. The <version> property mapping in XML must be placed immediately after the identifier property mapping:

The version number is just a counter value—it doesn’t have any useful semantic value. The additional column on the entity table is used by your Hibernate application. Keep in mind that all other applications that access the same database can (and probably should) also implement optimistic versioning and utilize the same version column. Sometimes a timestamp is preferred (or exists):

In theory, a timestamp is slightly less safe, because two concurrent transactions may both load and update the same item in the same millisecond; in practice, this won’t occur because a JVM usually doesn’t have millisecond accuracy (you should check your JVM and operating system documentation for the guaranteed precision).

Furthermore, retrieving the current time from the JVM isn’t necessarily safe in a clustered environment, where nodes may not be time synchronized. You can switch to retrieval of the current time from the database machine with the source="db" attribute on the <timestamp> mapping. Not all Hibernate SQL dialects support this (check the source of your configured dialect), and there is always the overhead of hitting the database for every increment.

We recommend that new projects rely on versioning with version numbers, not timestamps.

Optimistic locking with versioning is enabled as soon as you add a <version> or a <timestamp> property to a persistent class mapping. There is no other switch.

How does Hibernate use the version to detect a conflict?

Automatic management of versions

Every DML operation that involves the now versioned Item objects includes a version check. For example, assume that in a unit of work you load an Item from the database with version 1. You then modify one of its value-typed properties, such as the price of the Item. When the persistence context is flushed, Hibernate detects

If another concurrent unit of work updated and committed the same row, the OBJ_VERSION column no longer contains the value 1, and the row isn’t updated. Hibernate checks the row count for this statement as returned by the JDBC driver—which in this case is the number of rows updated, zero—and throws a StaleObjectStateException. The state that was present when you loaded the Item is no longer present in the database at flush-time; hence, you’re working with stale data and have to notify the application user. You can catch this exception and display an error message or a dialog that helps the user restart a conversation with the application.

What modifications trigger the increment of an entity’s version? Hibernate increments the version number (or the timestamp) whenever an entity instance is dirty. This includes all dirty value-typed properties of the entity, no matter if they’re single-valued, components, or collections. Think about the relationship between User and BillingDetails, a one-to-many entity association: If a Credit-Card is modified, the version of the related User isn’t incremented. If you add or remove a CreditCard (or BankAccount) from the collection of billing details, the version of the User is incremented.

If you want to disable automatic increment for a particular value-typed property or collection, map it with the optimistic-lock=”false” attribute. The inverse attribute makes no difference here. Even the version of an owner of an inverse collection is updated if an element is added or removed from the inverse collection.

As you can see, Hibernate makes it incredibly easy to manage versions for optimistic concurrency control. If you’re working with a legacy database schema or existing Java classes, it may be impossible to introduce a version or timestamp property and column. Hibernate has an alternative strategy for you.

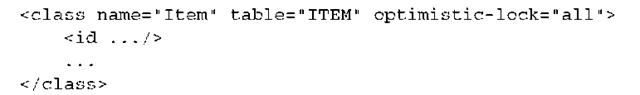

Versioning without version numbers or timestamps

If you don’t have version or timestamp columns, Hibernate can still perform automatic versioning, but only for objects that are retrieved and modified in the same persistence context (that is, the same Session). If you need optimistic locking for conversations implemented with detached objects, you must use a version number or timestamp that is transported with the detached object. that modification and increments the version of the Item to 2. It then executes the SQL UPDATE to make this modification permanent in the database:

This alternative implementation of versioning checks the current database state against the unmodified values of persistent properties at the time the object was retrieved (or the last time the persistence context was flushed). You may enable this functionality by setting the optimistic-lock attribute on the class mapping:

The following SQL is now executed to flush a modification of an Item instance:

Hibernate lists all columns and their last known nonstale values in the WHERE clause of the SQL statement. If any concurrent transaction has modified any of these values, or even deleted the row, this statement again returns with zero updated rows. Hibernate then throws a StaleObjectStateException.

Alternatively, Hibernate includes only the modified properties in the restriction (only ITEM_PRICE, in this example) if you set optimistic-lock="dirty". This means two units of work may modify the same object concurrently, and a conflict is detected only if they both modify the same value-typed property (or a foreign key value). In most cases, this isn’t a good strategy for business entities. Imagine that two people modify an auction item concurrently: One changes the price, the other the description. Even if these modifications don’t conflict at the lowest level (the database row), they may conflict from a business logic perspective. Is it OK to change the price of an item if the description changed completely? You also need to enable dynamic-update="true" on the class mapping of the entity if you want to use this strategy, because Hibernate can’t generate the SQL for these dynamic UPDATE statements at startup.

We don’t recommend versioning without a version or timestamp column in a new application; it’s a little slower, it’s more complex, and it doesn’t work if you’re using detached objects.

Optimistic concurrency control in a Java Persistence application is pretty much the same as in Hibernate.

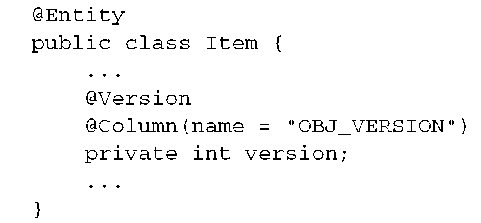

Versioning with Java Persistence

The Java Persistence specification assumes that concurrent data access is handled optimistically, with versioning. To enable automatic versioning for a particular entity, you need to add a version property or field:

Again, you can expose a getter method but can’t allow modification of a version value by the application. In Hibernate, a version property of an entity can be of any numeric type, including primitives, or a Date or Calendar type. The JPA specification considers only int, Integer, short, Short, long, Long, and java.sql.Timestamp as portable version types.

Because the JPA standard doesn’t cover optimistic versioning without a version attribute, a Hibernate extension is needed to enable versioning by comparing the old and new state:

You can also switch to OptimisticLockType.DIRTY if you only wish to compare modified properties during version checking. You then also need to set the dynamicUpdate attribute to true.

Java Persistence doesn’t standardize which entity instance modifications should trigger an increment of the version. If you use Hibernate as a JPA provider, the defaults are the same—every value-typed property modification, including additions and removals of collection elements, triggers a version increment. At the time of writing, no Hibernate annotation for disabling of version increments on particular properties and collections is available, but a feature request for @OptimisticLock(excluded=true) exists. Your version of Hibernate Annotations probably includes this option.

Hibernate EntityManager, like any other Java Persistence provider, throws a javax.persistence.OptimisticLockException when a conflicting version is detected. This is the equivalent of the native StaleObjectStateException in Hibernate and should be treated accordingly.
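The mechanism behind both exceptions is the same version check on update. The following sketch imitates it with an in-memory map instead of a database table; VersionCheckSketch and StaleRowException are hypothetical names, and the update() method only mimics the row count that a JDBC driver would report for an UPDATE restricted by identifier and version.

```java
import java.util.HashMap;
import java.util.Map;

public class VersionCheckSketch {

    /** Hypothetical counterpart of the stale-state exceptions described above. */
    public static class StaleRowException extends RuntimeException {
        public StaleRowException(String message) {
            super(message);
        }
    }

    private static final class Row {
        String price;
        int version;

        Row(String price, int version) {
            this.price = price;
            this.version = version;
        }
    }

    private final Map<Long, Row> table = new HashMap<>();

    public void insert(long id, String price) {
        table.put(id, new Row(price, 1));   // new rows start at version 1 in this sketch
    }

    /** Mimics an UPDATE restricted by id and version; returns the updated row count. */
    public int update(long id, String newPrice, int loadedVersion) {
        Row row = table.get(id);
        if (row == null || row.version != loadedVersion) {
            return 0;                       // row deleted or changed by someone else
        }
        row.price = newPrice;
        row.version = loadedVersion + 1;
        return 1;
    }

    /** Writes the change and fails if the version check matched no row. */
    public void flush(long id, String newPrice, int loadedVersion) {
        if (update(id, newPrice, loadedVersion) == 0) {
            throw new StaleRowException("Row " + id + " was updated or deleted by another transaction");
        }
    }

    public int versionOf(long id) {
        return table.get(id).version;
    }
}
```

Two units of work that both loaded version 1 illustrate first commit wins: the first flush succeeds and moves the row to version 2, and the second flush, still carrying version 1, fails with the exception.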

We’ve now covered the basic isolation levels of a database connection, with the conclusion that you should almost always rely on read-committed guarantees from your database. Automatic versioning in Hibernate and Java Persistence prevents lost updates when two concurrent transactions try to commit modifications on the same piece of data. To deal with nonrepeatable reads, you need additional isolation guarantees.

Obtaining additional isolation guarantees

There are several ways to prevent nonrepeatable reads and upgrade to a higher isolation level.

Explicit pessimistic locking

We already discussed switching all database connections to a higher isolation level than read committed, but our conclusion was that this is a bad default when scalability of the application is a concern. You need better isolation guarantees only for a particular unit of work. Also remember that the persistence context cache provides repeatable reads for entity instances in persistent state. However, this isn’t always sufficient.

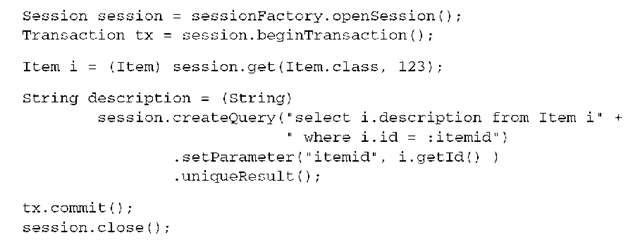

For example, you may need repeatable read for scalar queries:

This unit of work executes two reads. The first retrieves an entity instance by identifier. The second read is a scalar query, loading the description of the already loaded Item instance again. There is a small window in this unit of work in which a concurrently running transaction may commit an updated item description between the two reads. The second read then returns this committed data, and the variable description has a different value than the property i.getDescription().

This example is simplified, but it’s enough to illustrate how a unit of work that mixes entity and scalar reads is vulnerable to nonrepeatable reads, if the database transaction isolation level is read committed.
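The window between the two reads can be modeled without a database. In the following plain-Java sketch, all names are hypothetical: the map committedDescriptions plays the role of committed data under read committed isolation, and UnitOfWork mimics a persistence context that caches entity state but passes scalar queries straight through.

```java
import java.util.HashMap;
import java.util.Map;

class NonrepeatableReadSketch {
    // Committed data, visible to every read-committed transaction.
    static final Map<Long, String> committedDescriptions = new HashMap<>();

    // Models the persistence context: entity state is cached per unit of work.
    static class UnitOfWork {
        private final Map<Long, String> entityCache = new HashMap<>();

        // Entity read: goes to the "database" only on first access.
        String getItemDescription(long id) {
            return entityCache.computeIfAbsent(id, committedDescriptions::get);
        }

        // Scalar query: always returns the currently committed value.
        String queryDescription(long id) {
            return committedDescriptions.get(id);
        }
    }

    // Returns true if the entity read and the scalar read disagree.
    static boolean readsDisagree() {
        committedDescriptions.put(123L, "Old description");
        UnitOfWork work = new UnitOfWork();
        String fromEntity = work.getItemDescription(123L);
        // A concurrent transaction commits between the two reads.
        committedDescriptions.put(123L, "New description");
        String fromScalarQuery = work.queryDescription(123L);
        return !fromEntity.equals(fromScalarQuery);
    }
}
```

Repeating the entity read through the same UnitOfWork still returns the old description, which is the repeatable read the persistence context cache provides; only the scalar query observes the concurrent commit.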

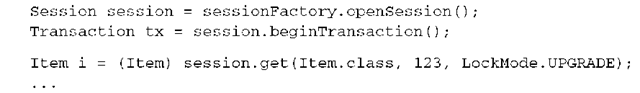

Instead of switching all database transactions into a higher and nonscalable isolation level, you obtain stronger isolation guarantees when necessary with the lock() method on the Hibernate Session:

Using LockMode.UPGRADE results in a pessimistic lock held on the database for the row(s) that represent the Item instance. Now no concurrent transaction can obtain a lock on the same data—that is, no concurrent transaction can modify the data between your two reads. This can be shortened as follows:

A LockMode.UPGRADE results in an SQL SELECT … FOR UPDATE or similar, depending on the database dialect. A variation, LockMode.UPGRADE_NOWAIT, adds a clause that allows an immediate failure of the query. Without this clause, the database usually waits when the lock can’t be obtained (perhaps because a concurrent transaction already holds a lock). The duration of the wait is database-dependent, as is the actual SQL clause.
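The difference between waiting for a lock and failing immediately can be illustrated with a java.util.concurrent lock. This is only an analogy under stated assumptions: the ReentrantLock stands in for the database lock on one row, the second thread stands in for a concurrent transaction, and the class and method names are hypothetical. A real database reports the failure as an error that Hibernate turns into an exception.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.locks.ReentrantLock;

class NoWaitSketch {
    // Stands in for the database lock on a single ITEM row.
    static final ReentrantLock rowLock = new ReentrantLock();

    // Models the NOWAIT variant: report failure at once instead of waiting.
    static boolean lockNoWait() {
        return rowLock.tryLock();
    }

    // Another "transaction" holds the row lock while this thread tries NOWAIT.
    static boolean tryWhileRowIsLocked() {
        CountDownLatch lockHeld = new CountDownLatch(1);
        CountDownLatch release = new CountDownLatch(1);
        Thread other = new Thread(() -> {
            rowLock.lock();
            try {
                lockHeld.countDown();
                release.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            } finally {
                rowLock.unlock();
            }
        });
        other.start();
        try {
            lockHeld.await();
            boolean acquired = lockNoWait();
            if (acquired) {
                rowLock.unlock();
            }
            return acquired;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            // Let the other "transaction" end and release its lock.
            release.countDown();
            try {
                other.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
    }
}
```

Calling rowLock.lock() instead of tryLock() in the same situation would block until the other thread releases the lock, which corresponds to the plain upgrade lock.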

FAQ Can I use long pessimistic locks? The duration of a pessimistic lock in Hibernate is a single database transaction. This means you can’t use an exclusive lock to block concurrent access for longer than a single database transaction. We consider this a good thing, because the only solution would be an extremely expensive lock held in memory (or a so-called lock table in the database) for the duration of, for example, a whole conversation. These kinds of locks are sometimes called offline locks. This is almost always a performance bottleneck; every data access involves additional lock checks to a synchronized lock manager. Optimistic locking, however, is the perfect concurrency control strategy and performs well in long-running conversations. Depending on your conflict-resolution options (that is, if you had enough time to implement merge changes), your application users are as happy with it as with blocked concurrent access. They may also appreciate not being locked out of particular screens while others look at the same data.

Java Persistence defines LockModeType.READ for the same purpose, and the EntityManager also has a lock() method. The specification doesn’t require that this lock mode is supported on nonversioned entities; however, Hibernate supports it on all entities, because it defaults to a pessimistic lock in the database.

The Hibernate lock modes

Hibernate supports the following additional LockModes:

■ LockMode.NONE: Don’t go to the database unless the object isn’t in any cache.

■ LockMode.READ: Bypass all caches, and perform a version check to verify that the object in memory is the same version that currently exists in the database.

■ LockMode.UPGRADE: Bypass all caches, do a version check (if applicable), and obtain a database-level pessimistic upgrade lock, if that is supported. Equivalent to LockModeType.READ in Java Persistence. This mode transparently falls back to LockMode.READ if the database SQL dialect doesn’t support a SELECT … FOR UPDATE option.

■ LockMode.UPGRADE_NOWAIT: The same as UPGRADE, but use a SELECT … FOR UPDATE NOWAIT, if supported. This disables waiting for concurrent lock releases, thus throwing a locking exception immediately if the lock can’t be obtained. This mode transparently falls back to LockMode.UPGRADE if the database SQL dialect doesn’t support the NOWAIT option.

■ LockMode.FORCE: Force an increment of the object’s version in the database, to indicate that it has been modified by the current transaction. Equivalent to LockModeType.WRITE in Java Persistence.

■ LockMode.WRITE: Obtained automatically when Hibernate has written to a row in the current transaction. (This is an internal mode; you may not specify it in your application.)
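The fallback rules in this list can be summarized as a small decision function. The following plain-Java sketch uses its own hypothetical Mode enum and Dialect class rather than Hibernate’s types; it only restates the rules above and says nothing about how Hibernate implements them internally.

```java
class LockModeFallbackSketch {
    enum Mode { READ, UPGRADE, UPGRADE_NOWAIT }

    // Hypothetical description of what a SQL dialect supports.
    static class Dialect {
        final boolean supportsForUpdate;
        final boolean supportsNoWait;

        Dialect(boolean supportsForUpdate, boolean supportsNoWait) {
            this.supportsForUpdate = supportsForUpdate;
            this.supportsNoWait = supportsNoWait;
        }
    }

    // Applies the documented fallbacks: UPGRADE_NOWAIT -> UPGRADE -> READ.
    static Mode effectiveMode(Mode requested, Dialect dialect) {
        Mode mode = requested;
        if (mode == Mode.UPGRADE_NOWAIT && !dialect.supportsNoWait) {
            mode = Mode.UPGRADE;
        }
        if (mode == Mode.UPGRADE && !dialect.supportsForUpdate) {
            mode = Mode.READ;
        }
        return mode;
    }
}
```

Because the fallback is transparent, the same application code may hold a pessimistic lock on one database and perform only a version check on another.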

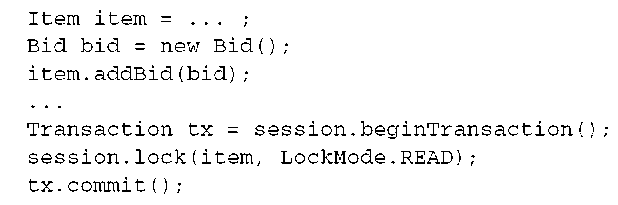

This code performs a version check on the detached Item instance to verify that the database row wasn’t updated by another transaction since it was retrieved, before saving the new Bid by cascade (assuming the association from Item to Bid has cascading enabled).

(Note that EntityManager.lock() doesn’t reattach the given entity instance; it only works on instances that are already in managed persistent state.)

Hibernate LockMode.FORCE and LockModeType.WRITE in Java Persistence have a different purpose. You use them to force a version update if by default no version would be incremented.

Forcing a version increment

If optimistic locking is enabled through versioning, Hibernate increments the version of a modified entity instance automatically. However, sometimes you want to increment the version of an entity instance manually, because Hibernate doesn’t consider your changes to be a modification that should trigger a version increment.

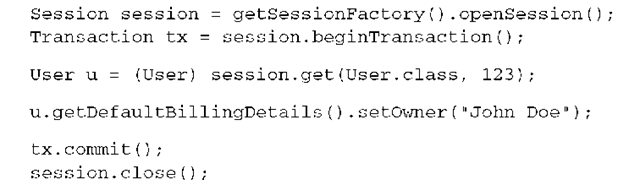

Imagine that you modify the owner name of a CreditCard:

When this Session is flushed, the version of the BillingDetails instance (let’s assume it’s a credit card) that was modified is incremented automatically by Hibernate. This may not be what you want: you may want to increment the version of the owner, too (the User instance).

Call lock() with LockMode.FORCE to increment the version of an entity instance:

By default, load() and get() use LockMode.NONE. A LockMode.READ is most useful with session.lock() and a detached object. Here’s an example:

Any concurrent unit of work that works with the same User row now knows that this data was modified, even if only one of the values that you’d consider part of the whole aggregate was modified. This technique is useful in many situations where you modify an object and want the version of a root object of an aggregate to be incremented. Another example is a modification of a bid amount for an auction item (if these amounts aren’t immutable): With an explicit version increment, you can indicate that the item has been modified, even if none of its value-typed properties or collections have changed. The equivalent call with Java Persistence is em.lock(o, LockModeType.WRITE).
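The effect of a forced increment on an aggregate can be modeled in plain Java. The User and CreditCard classes below are hypothetical simplifications of the domain model, and the boolean parameter stands in for the explicit lock call with the forcing lock mode; the sketch doesn’t model when Hibernate executes the increment.

```java
class ForceIncrementSketch {
    static class User {
        int version = 1;
    }

    static class CreditCard {
        final User user;
        String ownerName;
        int version = 1;

        CreditCard(User user, String ownerName) {
            this.user = user;
            this.ownerName = ownerName;
        }
    }

    // Changes a value on the credit card. The card's own version always moves;
    // the owning User's version moves only when the caller forces it.
    static void changeOwnerName(CreditCard card, String newName,
                                boolean forceUserVersion) {
        card.ownerName = newName;
        card.version++;
        if (forceUserVersion) {
            card.user.version++;
        }
    }
}
```

Without the forced increment, a concurrent unit of work that checks only the User version has no way to detect that part of the aggregate changed.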

You now have all the pieces to write more sophisticated units of work and create conversations. We need to mention one final aspect of transactions, however, because it becomes essential in more complex conversations with JPA. You must understand how autocommit works and what nontransactional data access means in practice.

Nontransactional data access

Many DBMSs enable the so-called autocommit mode on every new database connection by default. The autocommit mode is useful for ad hoc execution of SQL.

Imagine that you connect to your database with an SQL console and that you run a few queries, and maybe even update and delete rows. This interactive data access is ad hoc; most of the time you don’t have a plan or a sequence of statements that you consider a unit of work. The default autocommit mode on the database connection is perfect for this kind of data access—after all, you don’t want to type begin a transaction and end a transaction for every SQL statement you write and execute. In autocommit mode, a (short) database transaction begins and ends for each SQL statement you send to the database. You’re working effectively nontransactionally, because there are no atomicity or isolation guarantees for your session with the SQL console. (The only guarantee is that a single SQL statement is atomic.)

An application, by definition, always executes a planned sequence of statements. It seems reasonable, then, that you always create transaction boundaries to group your statements into units that are atomic. The autocommit mode therefore has no place in an application.
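At the plain JDBC level, explicit transaction boundaries might look like the following sketch. It uses only the standard java.sql.Connection methods setAutoCommit(), commit(), rollback(), and close(); the class ExplicitBoundariesSketch, the Work interface, and the method inTransaction() are hypothetical helpers, not part of JDBC or Hibernate.

```java
import java.sql.Connection;
import java.sql.SQLException;

class ExplicitBoundariesSketch {
    // Hypothetical callback for the statements that form one unit of work.
    interface Work {
        void execute(Connection connection) throws SQLException;
    }

    // Groups all statements of the callback into one atomic transaction:
    // commits on success, rolls back on failure, and always closes.
    static void inTransaction(Connection connection, Work work) throws SQLException {
        try {
            connection.setAutoCommit(false);
            work.execute(connection);
            connection.commit();
        } catch (SQLException | RuntimeException failure) {
            connection.rollback();
            throw failure;
        } finally {
            connection.close();
        }
    }
}
```

The outcome of the unit of work is always decided before close() is called, so nothing is left for the JDBC driver to decide.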

Debunking autocommit myths

Many developers still like to work with an autocommit mode, often for reasons that are vague and not well defined. Let’s first debunk a few of these reasons before we show you how to access data nontransactionally if you want (or have) to:

■ Many application developers think they can talk to a database outside of a transaction. This obviously isn’t possible; no SQL statement can be sent to a database outside of a database transaction. The term nontransactional data access means there are no explicit transaction boundaries, no system transaction, and that the behavior of data access is that of the autocommit mode. It doesn’t mean no physical database transactions are involved.

■ If your goal is to improve performance of your application by using the autocommit mode, you should think again about the implications of many small transactions. Significant overhead is involved in starting and ending a database transaction for every SQL statement, and it may decrease the performance of your application.

■ If your goal is to improve the scalability of your application with the autocommit mode, think again: A longer-running database transaction, instead of many small transactions for every SQL statement, may hold database locks for a longer time and probably won’t scale as well. However, thanks to the Hibernate persistence context and write-behind of DML, all write locks in the database are already held for a short time. Depending on the isolation level you enable, the cost of read locks is likely negligible. Or, you may use a DBMS with multiversion concurrency that doesn’t require read locks (Oracle, PostgreSQL, Informix, Firebird), because readers are never blocked by default.

■ Because you’re working nontransactionally, not only do you give up any transactional atomicity of a group of SQL statements, but you also have weaker isolation guarantees if data is modified concurrently. Repeatable reads based on read locks are impossible with autocommit mode. (The persistence context cache helps here, naturally.)
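The loss of atomicity in autocommit mode can be shown with a toy model. The ToyConnection class below is hypothetical and has nothing to do with a real JDBC driver; it only distinguishes statements that are pending from statements that are committed, using the auction steps from the beginning of this topic as statement labels.

```java
import java.util.ArrayList;
import java.util.List;

class AutocommitSketch {
    // A toy connection: statements become durable only when committed.
    static class ToyConnection {
        final List<String> committed = new ArrayList<>();
        private final List<String> pending = new ArrayList<>();
        private final boolean autoCommit;

        ToyConnection(boolean autoCommit) {
            this.autoCommit = autoCommit;
        }

        void execute(String statement) {
            pending.add(statement);
            if (autoCommit) {
                commit(); // one short transaction per statement
            }
        }

        void commit() {
            committed.addAll(pending);
            pending.clear();
        }

        void rollback() {
            pending.clear();
        }
    }

    // Runs two statements, optionally fails on the third step, and returns
    // the statements that were made permanent.
    static List<String> run(boolean autoCommit, boolean failOnThirdStep) {
        ToyConnection connection = new ToyConnection(autoCommit);
        try {
            connection.execute("mark winning bid");
            connection.execute("charge seller");
            if (failOnThirdStep) {
                throw new IllegalStateException("notification failed");
            }
            connection.commit();
        } catch (IllegalStateException failure) {
            connection.rollback();
        }
        return connection.committed;
    }
}
```

With autocommit and a failure on the third step, the first two statements remain committed and the rollback has nothing left to undo; with explicit boundaries, the same failure leaves no trace.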

Many more issues must be considered when you introduce nontransactional data access in your application. We’ve already noted that introducing a new type of transaction, namely read-only transactions, can significantly complicate any future modification of your application. The same is true if you introduce nontransactional operations.

You would then have three different kinds of data access in your application: in regular transactions, in read-only transactions, and now also nontransactional, with no guarantees. Imagine that you have to introduce an operation that writes data into a unit of work that was supposed to only read data. Imagine that you have to reorganize operations that were nontransactional to be transactional.

Our recommendation is to not use the autocommit mode in an application, and to apply read-only transactions only when there is an obvious performance benefit or when future code changes are highly unlikely. Always prefer regular ACID transactions to group your data-access operations, regardless of whether you read or write data.

Having said that, Hibernate and Java Persistence allow nontransactional data access. In fact, the EJB 3.0 specification forces you to access data nontransactionally if you want to implement atomic long-running conversations. We’ll approach this subject in the next topic. Now we want to dig a little deeper into the consequences of the autocommit mode in a plain Hibernate application. (Note that, despite our negative remarks, there are some good use cases for the autocommit mode. In our experience autocommit is often enabled for the wrong reasons and we wanted to wipe the slate clean first.)

Working nontransactionally with Hibernate

Look at the following code, which accesses the database without transaction boundaries:

By default, in a Java SE environment with a JDBC configuration, this is what happens if you execute this snippet:

1 A new Session is opened. It doesn’t obtain a database connection at this point.

2 The call to get() triggers an SQL SELECT. The Session now obtains a JDBC Connection from the connection pool. Hibernate, by default, immediately turns off the autocommit mode on this connection with setAutoCommit(false). This effectively starts a JDBC transaction!

3 The SELECT is executed inside this JDBC transaction. The Session is closed, and the connection is returned to the pool and released by Hibernate: Hibernate calls close() on the JDBC Connection. What happens to the uncommitted transaction?

The answer to that question is, “It depends!” The JDBC specification doesn’t say anything about pending transactions when close() is called on a connection. What happens depends on how the vendors implement the specification. With Oracle JDBC drivers, for example, the call to close() commits the transaction! Most other JDBC vendors take the sane route and roll back any pending transaction when the JDBC Connection object is closed and the resource is returned to the pool.

Obviously, this won’t be a problem for the SELECT you’ve executed, but look at this variation:

This code results in an INSERT statement, executed inside a transaction that is never committed or rolled back. On Oracle, this piece of code inserts data permanently; in other databases, it may not. (This situation is slightly more complicated: The INSERT is executed only if the identifier generator requires it. For example, an identifier value can be obtained from a sequence without an INSERT. The persistent entity is then queued until flush-time insertion, which never happens in this code. An identity strategy requires an immediate INSERT for the value to be generated.)

If you enable autocommit in the Hibernate configuration (the hibernate.connection.autocommit property), Hibernate no longer turns off autocommit when a JDBC connection is obtained from the connection pool; it enables autocommit if the connection isn’t already in that mode. The previous code examples now work predictably, and the JDBC driver wraps a short transaction around every SQL statement that is sent to the database, with the implications we listed earlier.