The “Hello World” example in the previous topic introduced you to Hibernate; however, it isn’t useful for understanding the requirements of real-world applications with complex data models. For the rest of the topic, we use a much more sophisticated example application—CaveatEmptor, an online auction system—to demonstrate Hibernate and Java Persistence.

We start our discussion of the application by introducing a programming model for persistent classes. Designing and implementing the persistent classes is a multistep process that we’ll examine in detail.

First, you’ll learn how to identify the business entities of a problem domain. You create a conceptual model of these entities and their attributes, called a domain model, and you implement it in Java by creating persistent classes. We spend some time exploring exactly what these Java classes should look like, and we also look at the persistence capabilities of the classes, and how this aspect influences the design and implementation.

We then explore mapping metadata options: the ways you can tell Hibernate how your persistent classes and their properties relate to database tables and columns. This can involve writing XML documents that are eventually deployed along with the compiled Java classes and are read by Hibernate at runtime. Another option is to use JDK 5.0 metadata annotations, based on the EJB 3.0 standard, directly in the Java source code of the persistent classes. After reading this topic, you'll know how to design the persistent parts of your domain model in complex real-world projects, and which mapping metadata option you'll prefer.

Finally, in the last (probably optional) section of this topic, we look at Hibernate’s capability for representation independence. A relatively new feature in Hibernate allows you to create a domain model in Java that is fully dynamic, such as a model without any concrete classes but only HashMaps. Hibernate also supports a domain model representation with XML documents.

Let’s start with the example application.

The CaveatEmptor application

The CaveatEmptor online auction application demonstrates ORM techniques and Hibernate functionality; you can download the source code for the application from http://caveatemptor.hibernate.org. We won't pay much attention to the user interface in this topic (it could be web based or a rich client); we'll concentrate instead on the data access code. However, when a data access design decision has consequences for the user interface, we'll naturally consider both.

In order to understand the design issues involved in ORM, let’s pretend the CaveatEmptor application doesn’t yet exist, and that you’re building it from scratch. Our first task would be analysis.

Analyzing the business domain

A software development effort begins with analysis of the problem domain (assuming that no legacy code or legacy database already exists).

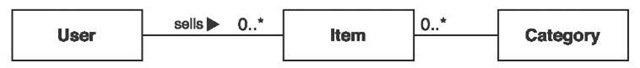

At this stage, you, with the help of problem domain experts, identify the main entities that are relevant to the software system. Entities are usually notions understood by users of the system: payment, customer, order, item, bid, and so forth. Some entities may be abstractions of less concrete things the user thinks about, such as a pricing algorithm, but even these would usually be understandable to the user. All these entities are found in the conceptual view of the business, which we sometimes call a business model. Developers and architects of object-oriented software analyze the business model and create an object-oriented model, still at the conceptual level (no Java code). This model may be as simple as a mental image existing only in the mind of the developer, or it may be as elaborate as a UML class diagram created by a computer-aided software engineering (CASE) tool like ArgoUML or TogetherJ. A simple model expressed in UML is shown in figure 3.1.

This model contains entities that you’re bound to find in any typical auction system: category, item, and user. The entities and their relationships (and perhaps their attributes) are all represented by this model of the problem domain. We call this kind of object-oriented model of entities from the problem domain, encompassing only those entities that are of interest to the user, a domain model. It’s an abstract view of the real world.

The motivating goal behind the analysis and design of a domain model is to capture the essence of the business information for the application’s purpose. Developers and architects may, instead of an object-oriented model, also start the application design with a data model (possibly expressed with an Entity-Relationship diagram). We usually say that, with regard to persistence, there is little difference between the two; they’re merely different starting points. In the end, we’re most interested in the structure and relationships of the business entities, the rules that have to be applied to guarantee the integrity of data (for example, the multiplicity of relationships), and the logic used to manipulate the data.

Figure 3.1 A class diagram of a typical online auction model

In object modeling, there is a focus on polymorphic business logic. For our purpose and top-down development approach, it’s helpful if we can implement our logical model in polymorphic Java; hence the first draft as an object-oriented model. We then derive the logical relational data model (usually without additional diagrams) and implement the actual physical database schema.

Let's see the outcome of our analysis of the problem domain of the CaveatEmptor application.

The CaveatEmptor domain model

The CaveatEmptor site auctions many different kinds of items, from electronic equipment to airline tickets. Auctions proceed according to the English auction strategy: Users continue to place bids on an item until the bid period for that item expires, and the highest bidder wins.

In any store, goods are categorized by type and grouped with similar goods into sections and onto shelves. The auction catalog requires some kind of hierarchy of item categories so that a buyer can browse these categories or arbitrarily search by category and item attributes. Lists of items appear in the category browser and search result screens. Selecting an item from a list takes the buyer to an item-detail view.

An auction consists of a sequence of bids, and one is the winning bid. User details include name, login, address, email address, and billing information.

A web of trust is an essential feature of an online auction site. The web of trust allows users to build a reputation for trustworthiness (or untrustworthiness). Buyers can create comments about sellers (and vice versa), and the comments are visible to all other users.

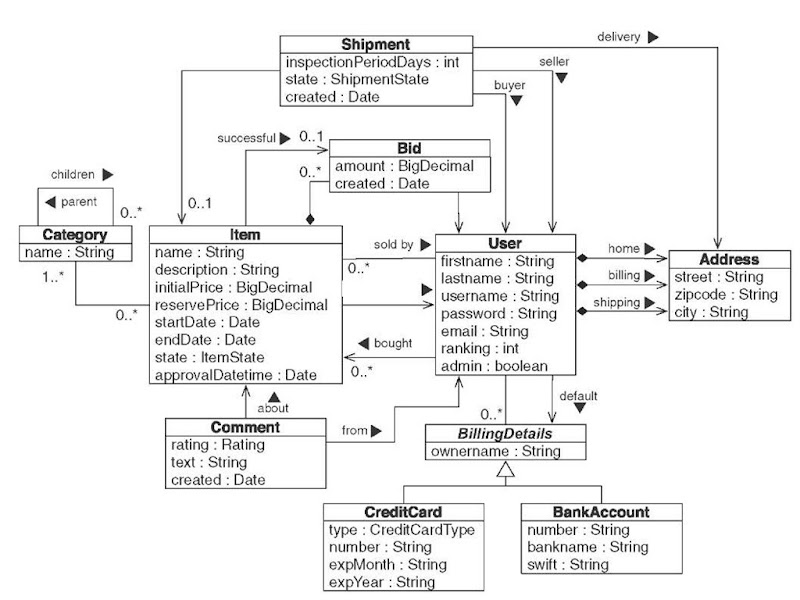

A high-level overview of our domain model is shown in figure 3.2. Let’s briefly discuss some interesting features of this model.

Each item can be auctioned only once, so you don’t need to make Item distinct from any auction entities. Instead, you have a single auction item entity named Item. Thus, Bid is associated directly with Item. Users can write Comments about other users only in the context of an auction; hence the association between Item and Comment. The Address information of a User is modeled as a separate class, even though the User may have only one Address; they may alternatively have three, for home, billing, and shipping. You do allow the user to have many BillingDetails. The various billing strategies are represented as subclasses of an abstract class (allowing future extension).

Figure 3.2 Persistent classes of the CaveatEmptor domain model and their relationships

A Category may be nested inside another Category. This is expressed by a recursive association, from the Category entity to itself. Note that a single Category may have multiple child categories but at most one parent. Each Item belongs to at least one Category.

The entities in a domain model should encapsulate state and behavior. For example, the User entity should define the name and address of a customer and the logic required to calculate the shipping costs for items (to this particular customer). The domain model is a rich object model, with complex associations, interactions, and inheritance relationships. An interesting and detailed discussion of object-oriented techniques for working with domain models can be found in Patterns of Enterprise Application Architecture (Fowler, 2003) or in Domain-Driven Design (Evans, 2003).

In this topic, we won’t have much to say about business rules or about the behavior of our domain model. This isn’t because we consider it unimportant; rather, this concern is mostly orthogonal to the problem of persistence. It’s the state of our entities that is persistent, so we concentrate our discussion on how to best represent state in our domain model, not on how to represent behavior. For example, in this topic, we aren’t interested in how tax for sold items is calculated or how the system may approve a new user account. We’re more interested in how the relationship between users and the items they sell is represented and made persistent. We’ll revisit this issue in later topics, whenever we have a closer look at layered application design and the separation of logic and data access.

NOTE ORM without a domain model—We stress that object persistence with full ORM is most suitable for applications based on a rich domain model. If your application doesn’t implement complex business rules or complex interactions between entities (or if you have few entities), you may not need a domain model. Many simple and some not-so-simple problems are perfectly suited to table-oriented solutions, where the application is designed around the database data model instead of around an object-oriented domain model, often with logic executed in the database (stored procedures). However, the more complex and expressive your domain model, the more you’ll benefit from using Hibernate; it shines when dealing with the full complexity of object/relational persistence.

Now that you have a (rudimentary) application design with a domain model, the next step is to implement it in Java. Let’s look at some of the things you need to consider.

Implementing the domain model

Several issues typically must be addressed when you implement a domain model in Java. For instance, how do you separate the business concerns from the cross-cutting concerns (such as transactions and even persistence)? Do you need automated or transparent persistence? Do you have to use a specific programming model to achieve this? In this section, we examine these types of issues and how to address them in a typical Hibernate application.

Let’s start with an issue that any implementation must deal with: the separation of concerns. The domain model implementation is usually a central, organizing component; it’s reused heavily whenever you implement new application functionality. For this reason, you should be prepared to go to some lengths to ensure that concerns other than business aspects don’t leak into the domain model implementation.

Addressing leakage of concerns

The domain model implementation is such an important piece of code that it shouldn’t depend on orthogonal Java APIs. For example, code in the domain model shouldn’t perform JNDI lookups or call the database via the JDBC API. This allows you to reuse the domain model implementation virtually anywhere. Most importantly, it makes it easy to unit test the domain model without the need for a particular runtime environment or container (or the need for mocking any service dependencies). This separation emphasizes the distinction between logical unit testing and integration unit testing.

We say that the domain model should be concerned only with modeling the business domain. However, there are other concerns, such as persistence, transaction management, and authorization. You shouldn’t put code that addresses these crosscutting concerns in the classes that implement the domain model. When these concerns start to appear in the domain model classes, this is an example of leakage of concerns.

The EJB standard solves the problem of leaky concerns. If you implement your domain classes using the entity programming model, the container takes care of some concerns for you (or at least lets you externalize those concerns into metadata, as annotations or XML descriptors). The EJB container prevents leakage of certain crosscutting concerns using interception. An EJB is a managed component, executed inside the EJB container; the container intercepts calls to your beans and executes its own functionality. This approach allows the container to implement the predefined crosscutting concerns—security, concurrency, persistence, transactions, and remoteness—in a generic way.

Unfortunately, the EJB 2.1 specification imposes many rules and restrictions on how you must implement a domain model. This, in itself, is a kind of leakage of concerns—in this case, the concerns of the container implementer have leaked! This was addressed in the EJB 3.0 specification, which is nonintrusive and much closer to the traditional JavaBean programming model.

Hibernate isn’t an application server, and it doesn’t try to implement all the crosscutting concerns of the full EJB specification. Hibernate is a solution for just one of these concerns: persistence. If you require declarative security and transaction management, you should access entity instances via a session bean, taking advantage of the EJB container’s implementation of these concerns. Hibernate in an EJB container either replaces (EJB 2.1, entity beans with CMP) or implements (EJB 3.0, Java Persistence entities) the persistence aspect.

Hibernate persistent classes and the EJB 3.0 entity programming model offer transparent persistence. Hibernate and Java Persistence also provide automatic persistence.

Let’s explore both terms in more detail and find an accurate definition.

Transparent and automated persistence

We use transparent to mean a complete separation of concerns between the persistent classes of the domain model and the persistence logic, where the persistent classes are unaware of—and have no dependency on—the persistence mechanism. We use automatic to refer to a persistence solution that relieves you of handling low-level mechanical details, such as writing most SQL statements and working with the JDBC API.

The Item class, for example, doesn’t have any code-level dependency on any Hibernate API. Furthermore:

■ Hibernate doesn’t require that any special superclasses or interfaces be inherited or implemented by persistent classes. Nor are any special classes used to implement properties or associations. (Of course, the option to use both techniques is always there.) Transparent persistence improves code readability and maintenance, as you’ll soon see.

■ Persistent classes can be reused outside the context of persistence, in unit tests or in the user interface (UI) tier, for example. Testability is a basic requirement for applications with rich domain models.

■ In a system with transparent persistence, objects aren’t aware of the underlying data store; they need not even be aware that they are being persisted or retrieved. Persistence concerns are externalized to a generic persistence manager interface—in the case of Hibernate, the Session and Query. In JPA, the EntityManager and Query (which has the same name, but a different package and slightly different API) play the same roles.

Transparent persistence fosters a degree of portability; without special interfaces, the persistent classes are decoupled from any particular persistence solution. Our business logic is fully reusable in any other application context. You could easily change to another transparent persistence mechanism. Because JPA follows the same basic principles, there is no difference between Hibernate persistent classes and JPA entity classes.

By this definition of transparent persistence, certain nonautomated persistence layers are transparent (for example, the DAO pattern) because they hide the persistence-related code behind abstract programming interfaces. Only plain Java classes without dependencies are exposed to the business logic or contain the business logic. Conversely, some automated persistence layers (including EJB 2.1 entity instances and some ORM solutions) are nontransparent because they require special interfaces or intrusive programming models.

We regard transparency as required. Transparent persistence should be one of the primary goals of any ORM solution. However, no automated persistence solution is completely transparent: Every automated persistence layer, including Hibernate, imposes some requirements on the persistent classes. For example, Hibernate requires that collection-valued properties be typed to an interface such as java.util.Set or java.util.List and not to an actual implementation such as java.util.HashSet (this is a good practice anyway). Similarly, a JPA entity class must have a special property, called the database identifier.
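A small sketch of why the interface type matters: TrackingSet below is a hypothetical stand-in for the wrapper class a persistence provider substitutes at runtime (it is not Hibernate's actual class). Because Item declares its bids property as Set, the setter accepts the wrapper; had the field and setter been declared as HashSet, the same substitution would not compile.

```java
import java.util.AbstractSet;
import java.util.HashSet;
import java.util.Iterator;
import java.util.Set;

// Hypothetical stand-in for a provider-supplied collection wrapper:
// it delegates to a real set and remembers whether add() changed it.
class TrackingSet<E> extends AbstractSet<E> {
    private final Set<E> delegate;
    private boolean dirty = false;

    TrackingSet(Set<E> delegate) {
        this.delegate = delegate;
    }

    @Override
    public boolean add(E element) {
        boolean changed = delegate.add(element);
        dirty |= changed;
        return changed;
    }

    @Override
    public Iterator<E> iterator() {
        return delegate.iterator();
    }

    @Override
    public int size() {
        return delegate.size();
    }

    boolean isDirty() {
        return dirty;
    }
}

class Bid {
    private final int amount;

    Bid(int amount) {
        this.amount = amount;
    }

    int getAmount() {
        return amount;
    }
}

class Item {
    // Typed as the Set interface, so any Set implementation can be assigned.
    private Set<Bid> bids = new HashSet<>();

    Set<Bid> getBids() {
        return bids;
    }

    void setBids(Set<Bid> bids) {
        this.bids = bids;
    }
}
```

Application code keeps calling getBids().add(...) exactly as before; it never needs to know which Set implementation sits behind the interface.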

You now know why the persistence mechanism should have minimal impact on how you implement a domain model, and that transparent and automated persistence are required. What kind of programming model should you use? What are the exact requirements and contracts to observe? Do you need a special programming model at all? In theory, no; in practice, however, you should adopt a disciplined, consistent programming model that is well accepted by the Java community.

Writing POJOs and persistent entity classes

As a reaction against EJB 2.1 entity instances, many developers started talking about Plain Old Java Objects (POJOs), a back-to-basics approach that essentially revives JavaBeans, a component model for UI development, and reapplies it to the business layer. (Most developers now use the terms POJO and JavaBean almost synonymously.) The overhaul of the EJB specification brought us new lightweight entities, and it would be appropriate to call them persistence-capable JavaBeans. Java developers will soon use all three terms as synonyms for the same basic design approach.

In this topic, we use persistent class for any class implementation that is capable of persistent instances, we use POJO if some Java best practices are relevant, and we use entity class when the Java implementation follows the EJB 3.0 and JPA specifications. Again, you shouldn’t be too concerned about these differences, because the ultimate goal is to apply the persistence aspect as transparently as possible. Almost every Java class can be a persistent class, or a POJO, or an entity class if some good practices are followed.

Hibernate works best with a domain model implemented as POJOs. The few requirements that Hibernate imposes on your domain model implementation are also best practices for the POJO implementation, so most POJOs are Hibernate-compatible without any changes. Hibernate requirements are almost the same as the requirements for EJB 3.0 entity classes, so a POJO implementation can be easily marked up with annotations and made an EJB 3.0 compatible entity.

A POJO declares business methods, which define behavior, and properties, which represent state. Some properties represent associations to other user-defined POJOs.

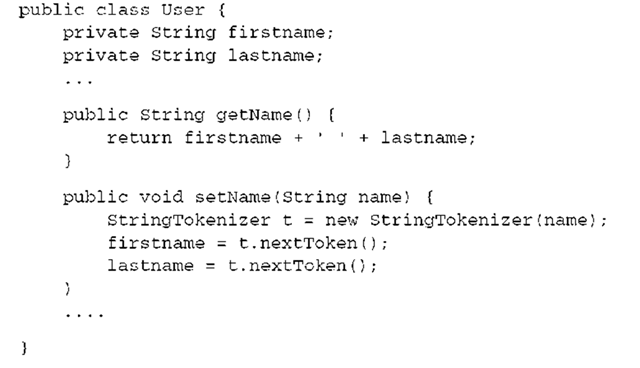

A simple POJO class is shown in listing 3.1. This is an implementation of the User entity of your domain model.

Listing 3.1 POJO implementation of the User class

Hibernate doesn’t require that persistent classes implement Serializable. However, when objects are stored in an HttpSession or passed by value using RMI, serialization is necessary. (This is likely to happen in a Hibernate application.) The class can be abstract and, if needed, extend a nonpersistent class.

Unlike the JavaBeans specification, which requires no specific constructor, Hibernate (and JPA) require a constructor with no arguments for every persistent class. Hibernate invokes this constructor through the Java Reflection API to instantiate objects. The constructor may be nonpublic, but it has to be at least package-visible if runtime-generated proxies will be used for performance optimization. Proxy generation also requires that the class isn't declared final and has no final methods.
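As a rough illustration of reflective instantiation, the sketch below creates an object through its no-argument constructor, the way a persistence framework typically does before populating state from a row. ReflectiveInstantiator is a hypothetical helper, not Hibernate's code. Note that setAccessible(true) lets reflection call even a nonpublic constructor; the package-visibility requirement above comes from runtime proxy generation, which subclasses the persistent class.

```java
import java.lang.reflect.Constructor;

class User {
    private String username;

    // No-argument constructor for the persistence framework; it need not be public.
    protected User() {
    }

    User(String username) {
        this.username = username;
    }

    String getUsername() {
        return username;
    }
}

// Hypothetical helper: roughly how a framework creates an instance before
// populating its state from a database row. Not Hibernate's actual code.
class ReflectiveInstantiator {
    static <T> T instantiate(Class<T> type) {
        try {
            Constructor<T> constructor = type.getDeclaredConstructor();
            constructor.setAccessible(true); // permits calling a nonpublic constructor
            return constructor.newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("No usable no-arg constructor on " + type.getName(), e);
        }
    }
}
```

If User declared only the User(String) constructor, getDeclaredConstructor() would throw NoSuchMethodException, surfaced here as an IllegalStateException.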

The properties of the POJO implement the attributes of the business entities, for example, the username of User. Properties are usually implemented as private or protected instance variables, together with public property accessor methods: a method for retrieving the value of the instance variable and a method for changing its value. These methods are known as the getter and setter, respectively. The example POJO in listing 3.1 declares getter and setter methods for the username and address properties.

The JavaBean specification defines the guidelines for naming these methods, and they allow generic tools like Hibernate to easily discover and manipulate the property value. A getter method name begins with get, followed by the name of the property (the first letter in uppercase); a setter method name begins with set and similarly is followed by the name of the property. Getter methods for properties of the primitive type boolean may begin with is instead of get.
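The JDK's java.beans.Introspector applies these naming rules, so it offers a quick way to see what a generic tool discovers from a class. In this sketch, the admin flag on User is illustrative and PropertyLister is a hypothetical helper; the properties are found only through the get/set/is method names, not through the fields.

```java
import java.beans.BeanInfo;
import java.beans.IntrospectionException;
import java.beans.Introspector;
import java.beans.PropertyDescriptor;
import java.util.Set;
import java.util.TreeSet;

class User {
    private String username;
    private boolean admin;

    public String getUsername() { return username; }
    public void setUsername(String username) { this.username = username; }

    // A primitive boolean property may use the "is" prefix for its getter.
    public boolean isAdmin() { return admin; }
    public void setAdmin(boolean admin) { this.admin = admin; }
}

class PropertyLister {
    // Returns the property names derived from the accessor naming convention.
    static Set<String> propertyNames(Class<?> type) {
        try {
            // Stop at Object so the inherited getClass() isn't reported as "class".
            BeanInfo info = Introspector.getBeanInfo(type, Object.class);
            Set<String> names = new TreeSet<>();
            for (PropertyDescriptor descriptor : info.getPropertyDescriptors()) {
                names.add(descriptor.getName());
            }
            return names;
        } catch (IntrospectionException e) {
            throw new IllegalStateException(e);
        }
    }
}
```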

You can choose how the state of an instance of your persistent classes should be persisted by Hibernate, either through direct access to its fields or through accessor methods. Your class design isn't disturbed by these considerations. You can make some accessor methods nonpublic or completely remove them. Some getter and setter methods do something more sophisticated than access instance variables (validation, for example), but trivial accessor methods are common. Their primary advantage is providing an additional buffer between the internal representation and the public interface of the class, allowing independent refactoring of both.

The example in listing 3.1 also defines a business method that calculates the cost of shipping an item to a particular user (we left out the implementation of this method).
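A minimal sketch of a User POJO along the lines described above might look like this; it is not listing 3.1 itself. It implements Serializable, has a public no-argument constructor, exposes username and address properties through getters and setters, and declares a business method. The Address class, the method name calcShippingCosts, and its BigDecimal return type are assumptions made for illustration.

```java
import java.io.Serializable;
import java.math.BigDecimal;

// Hypothetical value class for the user's address.
class Address implements Serializable {
    private String city;

    public Address() {
    }

    public Address(String city) {
        this.city = city;
    }

    public String getCity() { return city; }
    public void setCity(String city) { this.city = city; }
}

class User implements Serializable {
    private String username;
    private Address address;

    // No-argument constructor required by Hibernate and JPA.
    public User() {
    }

    public String getUsername() { return username; }
    public void setUsername(String username) { this.username = username; }

    public Address getAddress() { return address; }
    public void setAddress(Address address) { this.address = address; }

    // Business method: behavior lives next to state. The calculation itself
    // is left out, so this sketch only signals that it is missing.
    public BigDecimal calcShippingCosts(Address fromLocation) {
        throw new UnsupportedOperationException("Shipping cost calculation not implemented");
    }
}
```

Nothing in the class refers to a persistence API, so it can be instantiated and tested like any other Java object.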

What are the requirements for JPA entity classes? The good news is that so far, all the conventions we’ve discussed for POJOs are also requirements for JPA entities. You have to apply some additional rules, but they’re equally simple; we’ll come back to them later.

Now that we’ve covered the basics of using POJO persistent classes as a programming model, let’s see how to handle the associations between those classes.

Implementing POJO associations

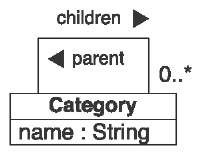

Figure 3.3 Diagram of the Category class with associations

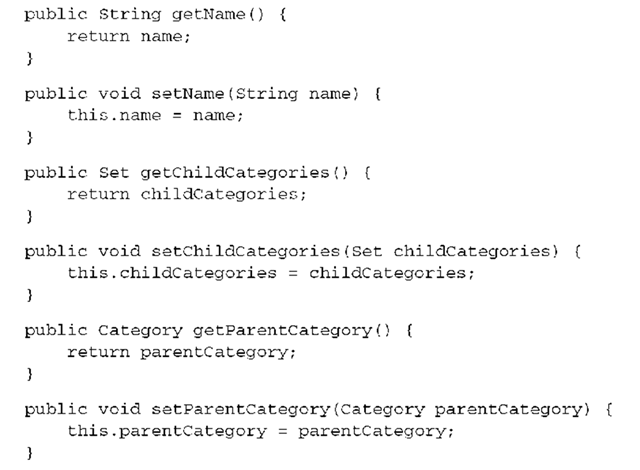

You use properties to express associations between POJO classes, and you use accessor methods to navigate from object to object at runtime. Let’s consider the associations defined by the Category class, as shown in figure 3.3.

As with all our diagrams, we left out the association-related attributes (let’s call them parentCategory and childCategories) because they would clutter the illustration. These attributes and the methods that manipulate their values are called scaffolding code.

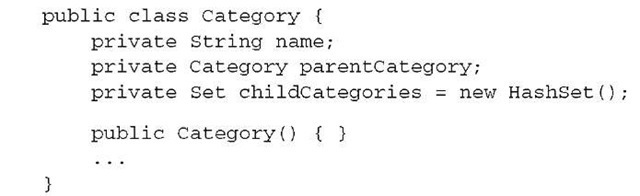

This is what the scaffolding code for the one-to-many self-association of Category looks like:

To allow bidirectional navigation of the association, you require two attributes. The parentCategory field implements the single-valued end of the association and is declared to be of type Category. The many-valued end, implemented by the childCategories field, must be of collection type. You choose a Set, because duplicates are disallowed, and initialize the instance variable to a new instance of HashSet.

Hibernate requires interfaces for collection-typed attributes, so you must use java.util.Set or java.util.List rather than HashSet, for example. This is consistent with the requirements of the JPA specification for collections in entities. At runtime, Hibernate wraps the HashSet instance with an instance of one of Hibernate’s own classes. (This special class isn’t visible to the application code.) It’s good practice to program to collection interfaces anyway, rather than concrete implementations, so this restriction shouldn’t bother you.

You now have some private instance variables but no public interface to allow access from business code or property management by Hibernate (if it shouldn’t access the fields directly). Let’s add some accessor methods to the class:
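The two association fields and their plain accessor methods might look like the following minimal sketch; the name field and the constructors are assumptions added so the class can be used on its own.

```java
import java.util.HashSet;
import java.util.Set;

class Category {
    private String name;

    // Single-valued end of the self-association.
    private Category parentCategory;

    // Many-valued end: declared as the Set interface (no duplicates),
    // initialized with a concrete HashSet.
    private Set<Category> childCategories = new HashSet<>();

    protected Category() {
    }

    Category(String name) {
        this.name = name;
    }

    public String getName() { return name; }

    public Category getParentCategory() { return parentCategory; }
    public void setParentCategory(Category parentCategory) { this.parentCategory = parentCategory; }

    public Set<Category> getChildCategories() { return childCategories; }
    public void setChildCategories(Set<Category> childCategories) { this.childCategories = childCategories; }
}
```

With only these accessors, linking two instances takes two separate calls, one for each side of the association.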

Again, these accessor methods need to be declared public only if they’re part of the external interface of the persistent class used by the application logic to create a relationship between two objects. However, managing the link between two Category instances is more difficult than setting a foreign key value in a database field. In our experience, developers are often unaware of this complication that arises from a network object model with bidirectional references. Let’s walk through the issue step by step.

The basic procedure for adding a child Category to a parent Category looks like this:

Whenever a link is created between a parent Category and a child Category, two actions are required:

■ The parentCategory of the child must be set, effectively breaking the association between the child and its old parent (there can only be one parent for any child).

■ The child must be added to the childCategories collection of the new parent Category.

NOTE Managed relationships in Hibernate—Hibernate doesn’t manage persistent associations. If you want to manipulate an association, you must write exactly the same code you would write without Hibernate. If an association is bidirectional, both sides of the relationship must be considered. Programming models like EJB 2.1 entity beans muddled this behavior by introducing container-managed relationships—the container automatically changes the other side of a relationship if one side is modified by the application. This is one of the reasons why code that uses EJB 2.1 entity beans couldn’t be reused outside the container. EJB 3.0 entity associations are transparent, just like in Hibernate. If you ever have problems understanding the behavior of associations in Hibernate, just ask yourself, “What would I do without Hibernate?” Hibernate doesn’t change the regular Java semantics.

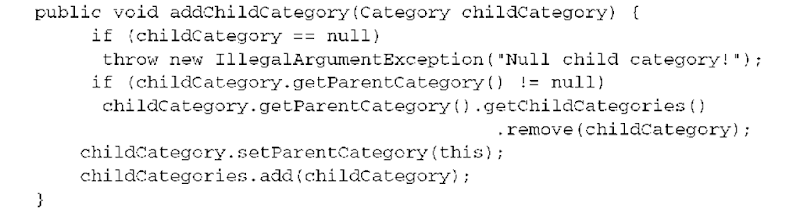

It's a good idea to add a convenience method to the Category class that groups these operations, allowing reuse, helping ensure correctness, and ultimately guaranteeing data integrity:

The addChildCategory() method not only reduces the lines of code when dealing with Category objects, but also enforces the cardinality of the association. Errors that arise from leaving out one of the two required actions are avoided. This kind of grouping of operations should always be provided for associations, if possible. If you compare this with the relational model of foreign keys in a relational database, you can easily see how a network and pointer model complicates a simple operation: instead of a declarative constraint, you need procedural code to guarantee data integrity.
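A sketch of an addChildCategory() method that performs both required actions follows. It also removes the child from its previous parent's collection so that no stale reference is left behind. The null check, the private setter, and the name field are choices made for this illustration.

```java
import java.util.HashSet;
import java.util.Set;

class Category {
    private String name;
    private Category parentCategory;
    private Set<Category> childCategories = new HashSet<>();

    protected Category() {
    }

    Category(String name) {
        this.name = name;
    }

    public String getName() { return name; }
    public Category getParentCategory() { return parentCategory; }
    public Set<Category> getChildCategories() { return childCategories; }

    // Private: callers change the association through addChildCategory().
    private void setParentCategory(Category parentCategory) {
        this.parentCategory = parentCategory;
    }

    // Groups both actions so neither can be forgotten.
    public void addChildCategory(Category child) {
        if (child == null) {
            throw new IllegalArgumentException("Null child category");
        }
        // Detach the child from its old parent's collection, if any.
        if (child.getParentCategory() != null) {
            child.getParentCategory().getChildCategories().remove(child);
        }
        child.setParentCategory(this); // action 1: set the child's parent
        childCategories.add(child);    // action 2: add to the new parent's collection
    }
}
```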

Because you want addChildCategory() to be the only externally visible mutator method for the child categories (possibly in addition to a removeChildCategory() method), you can make the setChildCategories() method private or drop it and use direct field access for persistence. The getter method still returns a modifiable collection, so clients can use it to make changes that aren't reflected on the inverse side. If you prefer to wrap the internal collections before returning them from your getter method, consider the static methods Collections.unmodifiableCollection(c) and Collections.unmodifiableSet(s). The client then gets an exception if it tries to modify the collection, so every modification is forced to go through the relationship-management method.
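The sketch below applies the read-only-view idea: getChildCategories() returns Collections.unmodifiableSet(...), and addChildCategory() and removeChildCategory() are the only ways to change the association. Because the getter returns a new wrapper on every call, this design assumes Hibernate is configured to access the childCategories field directly rather than through the getter.

```java
import java.util.Collections;
import java.util.HashSet;
import java.util.Set;

class Category {
    private String name;
    private Category parentCategory;
    // Assumed to be persisted via direct field access; no setter is exposed.
    private Set<Category> childCategories = new HashSet<>();

    protected Category() {
    }

    Category(String name) {
        this.name = name;
    }

    public Category getParentCategory() { return parentCategory; }

    // Read-only view: callers can iterate but get an exception on modification.
    public Set<Category> getChildCategories() {
        return Collections.unmodifiableSet(childCategories);
    }

    public void addChildCategory(Category child) {
        if (child.parentCategory != null) {
            child.parentCategory.childCategories.remove(child);
        }
        child.parentCategory = this;
        childCategories.add(child);
    }

    public void removeChildCategory(Category child) {
        // Only clear the back-reference if the child really belonged here.
        if (childCategories.remove(child)) {
            child.parentCategory = null;
        }
    }
}
```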

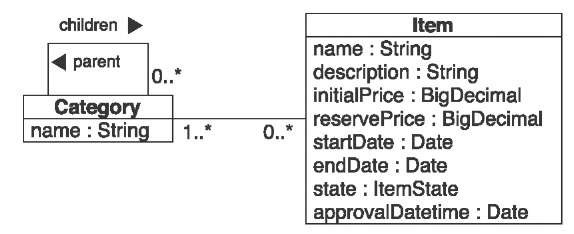

A different kind of relationship exists between the Category and Item classes: a bidirectional many-to-many association, as shown in figure 3.4.

Figure 3.4 Category and the associated Item class

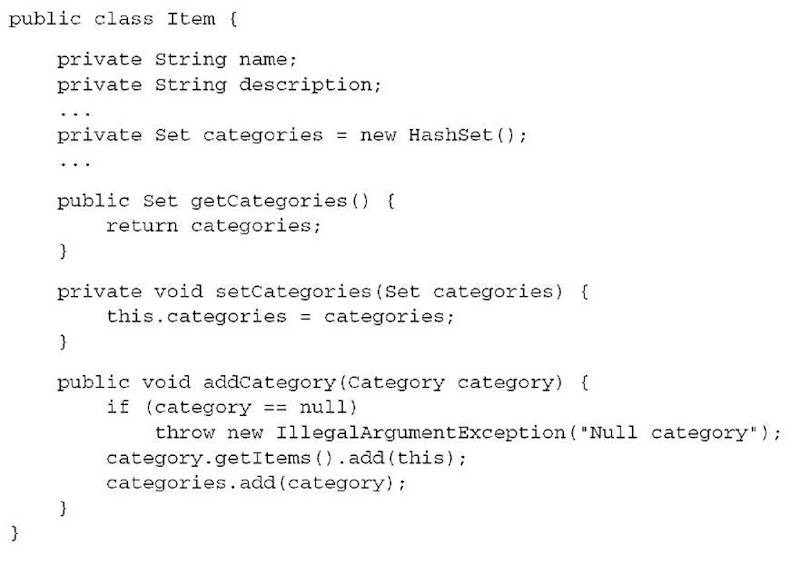

In the case of a many-to-many association, both sides are implemented with collection-valued attributes. Let’s add the new attributes and methods for accessing the Item relationship to the Category class, as shown in listing 3.2.

Listing 3.2 Category to Item scaffolding code

The code for the Item class (the other end of the many-to-many association) is similar to the code for the Category class. You add the collection attribute, the standard accessor methods, and a method that simplifies relationship management, as in listing 3.3.

Listing 3.3 Item to Category scaffolding code

The addCategory() method is similar to the addChildCategory() convenience method of the Category class. It’s used by a client to manipulate the link between an Item and a Category. For the sake of readability, we won’t show convenience methods in future code samples and assume you’ll add them according to your own taste.
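For the many-to-many case, a compact sketch in the spirit of listings 3.2 and 3.3 might look like this: both classes carry a collection, and Item.addCategory() updates both of them. The name fields and constructors are illustrative additions, not taken from the listings.

```java
import java.util.HashSet;
import java.util.Set;

class Category {
    private String name;
    private Set<Item> items = new HashSet<>();

    protected Category() {
    }

    Category(String name) {
        this.name = name;
    }

    public Set<Item> getItems() { return items; }
    public void setItems(Set<Item> items) { this.items = items; }
}

class Item {
    private String name;
    private Set<Category> categories = new HashSet<>();

    protected Item() {
    }

    Item(String name) {
        this.name = name;
    }

    public Set<Category> getCategories() { return categories; }
    public void setCategories(Set<Category> categories) { this.categories = categories; }

    // Both ends of the many-to-many link are collections, so both are updated.
    public void addCategory(Category category) {
        if (category == null) {
            throw new IllegalArgumentException("Null category");
        }
        category.getItems().add(this);
        categories.add(category);
    }
}
```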

Using convenience methods for association handling isn’t the only way to improve a domain model implementation. You can also add logic to your accessor methods.

Adding logic to accessor methods

One of the reasons we like to use JavaBeans-style accessor methods is that they provide encapsulation: The hidden internal implementation of a property can be changed without any changes to the public interface. This lets you abstract the internal data structure of a class—the instance variables—from the design of the database, if Hibernate accesses the properties at runtime through accessor methods. It also allows easier and independent refactoring of the public API and the internal representation of a class.

For example, if your database stores the name of a user as a single NAME column, but your User class has firstname and lastname properties, you can add the following persistent name property to the class:

Later, you’ll see that a Hibernate custom type is a better way to handle many of these kinds of situations. However, it helps to have several options.
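A minimal sketch of such a name property follows. It assumes the NAME column holds the first and last name separated by a single space; that format, and the choice to reject values without a space, are assumptions of this sketch.

```java
class User {
    private String firstname;
    private String lastname;

    public String getFirstname() { return firstname; }
    public void setFirstname(String firstname) { this.firstname = firstname; }

    public String getLastname() { return lastname; }
    public void setLastname(String lastname) { this.lastname = lastname; }

    // Persistent "name" property backed by two instance variables.
    public String getName() {
        return firstname + ' ' + lastname;
    }

    // Splits on the first space; assumes the "first last" storage format.
    public void setName(String name) {
        int space = name.indexOf(' ');
        if (space < 0) {
            throw new IllegalArgumentException("Expected \"first last\": " + name);
        }
        firstname = name.substring(0, space);
        lastname = name.substring(space + 1);
    }
}
```

The database sees one column while the rest of the application works with two properties, and either side can change without touching the other.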

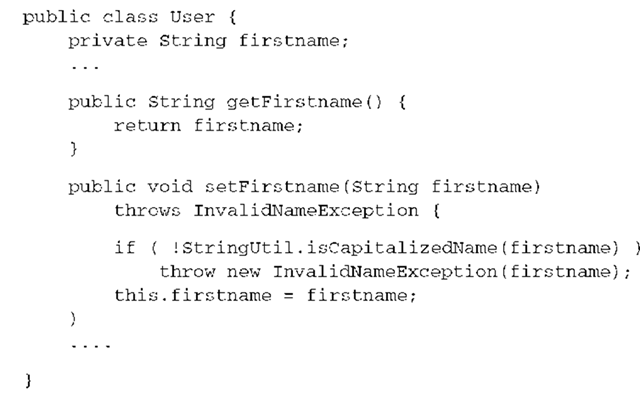

Accessor methods can also perform validation. For instance, in the following example, the setFirstName() method verifies that the name is capitalized:

Hibernate may use the accessor methods to populate the state of an instance when loading an object from a database, and sometimes you’ll prefer that this validation not occur when Hibernate is initializing a newly loaded object. In that case, it makes sense to tell Hibernate to directly access the instance variables.
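The sketch below pairs a validating setter with direct field access. FieldAccess.setField() is a hypothetical helper that writes the private field with plain Java reflection, which is roughly what field access means; it shows how a value that would fail validation, such as a lowercase name already stored in the database, can still be loaded.

```java
import java.lang.reflect.Field;

class User {
    private String firstname;

    public String getFirstname() { return firstname; }

    // Validating setter: rejects names that don't start with an uppercase letter.
    public void setFirstname(String firstname) {
        if (firstname == null || firstname.isEmpty()
                || !Character.isUpperCase(firstname.charAt(0))) {
            throw new IllegalArgumentException("Name must be capitalized: " + firstname);
        }
        this.firstname = firstname;
    }
}

// Hypothetical helper: writes a field directly, bypassing setter logic.
// An illustration of field access, not Hibernate's own code.
class FieldAccess {
    static void setField(Object target, String fieldName, Object value) {
        try {
            Field field = target.getClass().getDeclaredField(fieldName);
            field.setAccessible(true);
            field.set(target, value);
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException(e);
        }
    }
}
```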

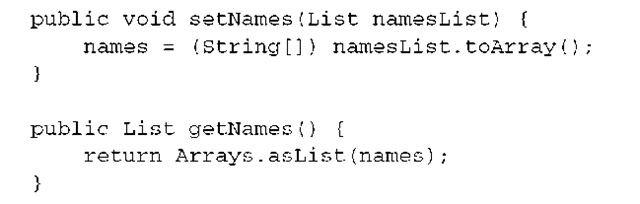

Another issue to consider is dirty checking. Hibernate automatically detects object state changes in order to synchronize the updated state with the database. It’s usually safe to return a different object from the getter method than the object passed by Hibernate to the setter. Hibernate compares the objects by value—not by object identity—to determine whether the property’s persistent state needs to be updated. For example, the following getter method doesn’t result in unnecessary SQL UPDATEs:
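The following sketch illustrates the difference. The getter returns a new String on every call, so two calls yield different objects that are nevertheless equal. SnapshotCheck is a hypothetical, greatly simplified model of a snapshot comparison, not Hibernate's implementation.

```java
import java.util.Objects;

class User {
    private String firstname;

    User(String firstname) {
        this.firstname = firstname;
    }

    // Returns a new String each time: a different object with an equal value.
    public String getFirstname() {
        return new String(firstname);
    }
}

class SnapshotCheck {
    // Compares the value captured at load time with the current value
    // using equals(), not ==, so a fresh but equal object isn't "dirty".
    static boolean isDirty(Object snapshot, Object current) {
        return !Objects.equals(snapshot, current);
    }
}
```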

There is one important exception to this: Collections are compared by identity! For a property mapped as a persistent collection, you should return exactly the same collection instance from the getter method that Hibernate passed to the setter method. If you don’t, Hibernate will update the database, even if no update is necessary, every time the state held in memory is synchronized with the database. This kind of code should almost always be avoided in accessor methods:
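Unlike the String property in the previous case, a collection is checked by identity. The sketch below models that rule: getBidsCopy() is the kind of getter to avoid, because its result equals the original set yet is a different instance. CollectionCheck and the String-typed bids are simplifications for illustration only.

```java
import java.util.HashSet;
import java.util.Set;

class Item {
    private Set<String> bids = new HashSet<>();

    // Anti-pattern: a fresh copy on every call, so identity never matches.
    public Set<String> getBidsCopy() {
        return new HashSet<>(bids);
    }

    // Preferred: hand back exactly the instance that was set.
    public Set<String> getBids() { return bids; }
    public void setBids(Set<String> bids) { this.bids = bids; }
}

class CollectionCheck {
    // Simplified model: a collection counts as replaced (and would trigger
    // an update) whenever it isn't the same instance, regardless of contents.
    static boolean isReplaced(Set<?> original, Set<?> current) {
        return original != current;
    }
}
```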

Finally, you have to know how exceptions in accessor methods are handled if you configure Hibernate to use these methods when loading and storing instances. If a RuntimeException is thrown, the current transaction is rolled back, and the exception is yours to handle. If a checked application exception is thrown, Hibernate wraps the exception into a RuntimeException.
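The sketch below mimics that behavior with plain reflection. SetterInvoker is hypothetical: it rethrows a RuntimeException thrown by the setter unchanged and wraps a checked exception, here a hypothetical InvalidNameException, in a RuntimeException. Hibernate's own wrapper exception type and the transaction rollback aren't modeled.

```java
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;

// Hypothetical checked application exception.
class InvalidNameException extends Exception {
    InvalidNameException(String message) {
        super(message);
    }
}

class User {
    private String firstname;

    public void setFirstname(String firstname) throws InvalidNameException {
        if (firstname.isEmpty()) { // a null argument causes a NullPointerException here
            throw new InvalidNameException("Empty first name");
        }
        this.firstname = firstname;
    }
}

class SetterInvoker {
    // Calls a one-argument setter reflectively: runtime exceptions and errors
    // propagate unchanged, checked exceptions are wrapped.
    static void invokeSetter(Object target, String setterName, Class<?> paramType, Object value) {
        try {
            Method setter = target.getClass().getMethod(setterName, paramType);
            setter.invoke(target, value);
        } catch (InvocationTargetException e) {
            Throwable cause = e.getCause();
            if (cause instanceof RuntimeException) {
                throw (RuntimeException) cause;
            }
            if (cause instanceof Error) {
                throw (Error) cause;
            }
            throw new RuntimeException(cause);
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException(e);
        }
    }
}
```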

You can see that Hibernate doesn’t unnecessarily restrict you with a POJO programming model. You’re free to implement whatever logic you need in accessor methods (as long as you keep the same collection instance in both getter and setter). How Hibernate accesses the properties is completely configurable. This kind of transparency guarantees an independent and reusable domain model implementation. Everything we’ve explained so far is equally true for both Hibernate persistent classes and JPA entities.

Let’s now define the object/relational mapping for the persistent classes.

Object/relational mapping metadata

ORM tools require metadata to specify the mapping between classes and tables, properties and columns, associations and foreign keys, Java types and SQL types, and so on. This information is called the object/relational mapping metadata. Metadata is data about data, and mapping metadata defines and governs the transformation between the different type systems and relationship representations in object-oriented and SQL systems.

It’s your job as a developer to write and maintain this metadata. We discuss various approaches in this section, including metadata in XML files and JDK 5.0 source code annotations. Usually you decide to use one strategy in a particular project, and after reading these sections you’ll have the background information to make an educated decision.

Metadata in XML

Any ORM solution should provide a human-readable, easily hand-editable mapping format, not just a GUI mapping tool. Currently, the most popular object/relational metadata format is XML. Mapping documents written in XML are lightweight, human readable, easily manipulated by version-control systems and text editors, and they can be customized at deployment time (or even at runtime, with programmatic XML generation).

But is XML-based metadata really the best approach? A certain backlash against the overuse of XML can be seen in the Java community. Every framework and application server seems to require its own XML descriptors.

In our view, there are three main reasons for this backlash:

■ Metadata-based solutions have often been used inappropriately. Metadata is not, by nature, more flexible or maintainable than plain Java code.

■ Many existing metadata formats weren’t designed to be readable and easy to edit by hand. In particular, a major cause of pain is the lack of sensible defaults for attribute and element values, requiring significantly more typing than should be necessary. Even worse, some metadata schemas use only XML elements and text values, without any attributes. Another problem is schemas that are too generic, where every declaration is wrapped in a generic extension attribute of a meta element.

■ Good XML editors, especially in IDEs, aren’t as common as good Java coding environments. Worst, and most easily fixable, a document type declaration (DTD) often isn’t provided, preventing autocompletion and validation.

There is no getting around the need for metadata in ORM. However, Hibernate was designed with full awareness of the typical metadata problems. The XML metadata format of Hibernate is extremely readable and defines useful default values. If attribute values are missing, reflection is used on the mapped class to determine defaults. Hibernate also comes with a documented and complete DTD. Finally, IDE support for XML has improved lately, and modern IDEs provide dynamic XML validation and even an autocomplete feature.
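Here is a minimal sketch of what “reflection is used on the mapped class to determine defaults” can look like. It looks up a property’s getter and maps the getter’s Java return type to a Hibernate type name. The Category class and the lookup table are assumptions for illustration. The table is a tiny subset; Hibernate’s real type resolution handles far more types and cases.

```java
import java.util.Date;
import java.util.Map;

// Hypothetical mapped class; only the getters matter here.
class Category {
    private Long id;
    private String name;
    private Date created;

    public Long getId() { return id; }
    public String getName() { return name; }
    public Date getCreated() { return created; }
}

class DefaultTypeResolver {
    // Hypothetical, deliberately tiny subset of Java type -> Hibernate type name.
    private static final Map<Class<?>, String> DEFAULTS = Map.of(
        String.class, "string",
        Long.class, "long",
        long.class, "long",
        Integer.class, "integer",
        int.class, "integer",
        Boolean.class, "boolean",
        boolean.class, "boolean",
        Date.class, "timestamp");

    // Finds getXxx() for the property and maps its return type to a type name.
    static String defaultTypeFor(Class<?> mappedClass, String property) {
        String getter = "get" + Character.toUpperCase(property.charAt(0)) + property.substring(1);
        Class<?> javaType;
        try {
            javaType = mappedClass.getMethod(getter).getReturnType();
        } catch (NoSuchMethodException e) {
            throw new IllegalArgumentException("No getter " + getter + " on " + mappedClass.getName(), e);
        }
        String type = DEFAULTS.get(javaType);
        if (type == null) {
            throw new IllegalArgumentException("No default type for " + javaType.getName());
        }
        return type;
    }

    public static void main(String[] args) {
        System.out.println(defaultTypeFor(Category.class, "name")); // string
    }
}
```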

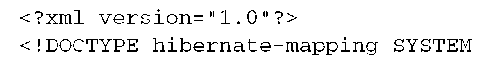

Let’s look at the way you can use XML metadata in Hibernate. You created the Category class in the previous section; now you need to map it to the CATEGORY table in the database. To do that, you write the XML mapping document in listing 3.4.

Listing 3.4 Hibernate XML mapping of the Category class

(1) The Hibernate mapping DTD should be declared in every mapping file; it’s required for syntactic validation of the XML.

(2) Mappings are declared inside a <hibernate-mapping> element. You may include as many class mappings as you like, along with certain other special declarations that we’ll mention later in the topic.

(3) The class Category (in the auction.model package) is mapped to the CATEGORY table. Every row in this table represents one instance of type Category.

(4) We haven’t discussed the concept of object identity yet, so you may be surprised by this mapping element. We cover this complex subject in the next topic. To understand this mapping, it’s sufficient to know that every row in the CATEGORY table has a primary key value that matches the object identity of the instance in memory. The <id> mapping element is used to define the details of object identity.

(5) The property name of type java.lang.String is mapped to a database NAME column. Note that the type declared in the mapping is a built-in Hibernate type (string), not the type of the Java property or the SQL column type. Think of it as a converter that bridges the other two type systems.

We’ve intentionally left the collection and association mappings out of this example. Association and especially collection mappings are more complex, so we’ll return to them in the second part of the topic.

Although it’s possible to declare mappings for multiple classes in one mapping file by using multiple <class> elements, the recommended practice (and the practice expected by some Hibernate tools) is to use one mapping file per persistent class. The convention is to give the file the same name as the mapped class plus the suffix .hbm.xml (for example, Category.hbm.xml) and to put it in the same package as the Category class.
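The naming convention is simple enough to express in a few lines. The hypothetical helper below derives the classpath resource name of a mapping file from a fully qualified class name. Hibernate’s Configuration.addClass(Class) relies on the same convention to find the mapping file that sits next to a class.

```java
class MappingResources {
    // "auction.model.Category" -> "auction/model/Category.hbm.xml"
    static String mappingResourceFor(String className) {
        return className.replace('.', '/') + ".hbm.xml";
    }

    static String mappingResourceFor(Class<?> mappedClass) {
        return mappingResourceFor(mappedClass.getName());
    }

    public static void main(String[] args) {
        System.out.println(mappingResourceFor("auction.model.Category"));
    }
}
```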

As already mentioned, XML mapping files aren’t the only way to define mapping metadata in a Hibernate application. If you use JDK 5.0, your best choice is Hibernate Annotations, based on the EJB 3.0 and Java Persistence standard.

Annotation-based metadata

The basic idea is to put metadata next to the information it describes, instead of separating it physically into a different file. Java didn’t have this functionality before JDK 5.0, so an alternative was developed. The XDoclet project introduced annotation of Java source code with meta-information, using special Javadoc tags with support for key/value pairs. Through nesting of tags, quite complex structures are supported, but only some IDEs allow customization of Javadoc templates for autocompletion and validation.

Java Specification Request (JSR) 175 introduced the annotation concept in the Java language, with type-safe and declared interfaces for the definition of annotations. Autocompletion and compile-time checking are no longer an issue. We found that annotation metadata is less verbose than XDoclet and has better defaults. However, JDK 5.0 annotations are sometimes more difficult to read than XDoclet annotations, because they aren’t inside regular comment blocks; you should use an IDE that supports configurable syntax highlighting of annotations. Other than that, we found no serious disadvantage in working with annotations in our daily work over the past years, and we consider annotation-metadata support to be one of the most important features of JDK 5.0.

We’ll now introduce mapping annotations and use JDK 5.0. If you have to work with JDK 1.4 but would like to use annotation-based metadata, consider XDoclet, which we’ll show afterward.

Defining and using annotations

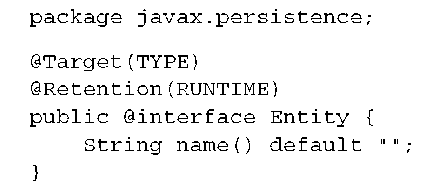

Before you annotate the first persistent class, let’s see how annotations are created. Naturally, you’ll usually use predefined annotations. However, knowing how to extend the existing metadata format or how to write your own annotations is a useful skill. The following code example shows the definition of an Entity annotation:

The first line defines the package, as always. This annotation is in the package javax.persistence, the Java Persistence API as defined by EJB 3.0. It’s one of the most important annotations of the specification: you can apply it on a POJO to make it a persistent entity class. The next line is an annotation that adds meta-information to the @Entity annotation (metadata about metadata). It specifies that the @Entity annotation can only be put on type declarations; in other words, you can only mark up classes with the @Entity annotation, not fields or methods. The retention policy chosen for this annotation is RUNTIME; other options (for other use cases) include removing the annotation metadata during compilation, or keeping it in the bytecode without making it available through runtime reflection. You want to preserve all entity meta-information even at runtime, so Hibernate can read it on startup through Java Reflection. What follows in the example is the actual declaration of the annotation, including its interface name and its attributes (just one in this case, name, with an empty string default).
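The definition described above can be sketched as follows. To keep the example self-contained, the annotation is declared in the default package instead of javax.persistence, and the annotated Auction class is hypothetical. The reflection check at the end shows why RUNTIME retention matters. The metadata, including the default value of name, is still available while the program runs.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

// Same shape as the annotation described above, minus the javax.persistence package.
@Target(ElementType.TYPE)           // only allowed on type declarations
@Retention(RetentionPolicy.RUNTIME) // kept in the class file and readable via reflection
@interface Entity {
    String name() default "";
}

@Entity
class Auction {
}

class EntityAnnotationCheck {
    public static void main(String[] args) {
        Entity entity = Auction.class.getAnnotation(Entity.class);
        System.out.println(entity != null);          // true
        System.out.println(entity.name().isEmpty()); // true: the default value
    }
}
```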

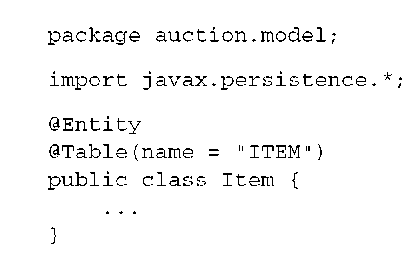

Let’s use this annotation to make a POJO persistent class a Java Persistence entity:

This public class, Item, has been declared as a persistent entity. All of its properties are now automatically persistent with a default strategy. Also shown is a second annotation that declares the name of the table in the database schema this persistent class is mapped to. If you omit this information, the JPA provider defaults to the unqualified class name (just as Hibernate will if you omit the table name in an XML mapping file).
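The defaulting rule can be sketched with two stand-in annotations that mirror @Entity and @Table. These are hypothetical copies, not the javax.persistence types, so the example runs without a JPA library. tableNameFor() returns the explicit table name if one is declared. Otherwise it falls back to the unqualified class name, as described above.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

// Stand-ins that mirror javax.persistence.Entity and javax.persistence.Table.
@Target(ElementType.TYPE)
@Retention(RetentionPolicy.RUNTIME)
@interface Entity {
    String name() default "";
}

@Target(ElementType.TYPE)
@Retention(RetentionPolicy.RUNTIME)
@interface Table {
    String name() default "";
}

@Entity
@Table(name = "ITEM")
class Item {
}

@Entity
class Bid { // no @Table: the default applies
}

class TableNames {
    static String tableNameFor(Class<?> type) {
        if (!type.isAnnotationPresent(Entity.class)) {
            throw new IllegalArgumentException(type.getName() + " is not an entity");
        }
        Table table = type.getAnnotation(Table.class);
        if (table != null && !table.name().isEmpty()) {
            return table.name();
        }
        return type.getSimpleName(); // default: the unqualified class name
    }

    public static void main(String[] args) {
        System.out.println(tableNameFor(Item.class)); // ITEM
        System.out.println(tableNameFor(Bid.class));  // Bid
    }
}
```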

All of this is type-safe, and declared annotations are read with Java Reflection when Hibernate starts up. You don’t need to write any XML mapping files, Hibernate doesn’t need to parse any XML, and startup is faster. Your IDE can also easily validate and highlight annotations—they are regular Java types, after all.

One of the clear benefits of annotations is their flexibility for agile development. If you refactor your code, you rename, delete, or move classes and properties all the time. Most development tools and editors can’t refactor XML element and attribute values, but annotations are part of the Java language and are included in all refactoring operations.

Which annotations should you apply? You have the choice among several standardized and vendor-specific packages.

Considering standards

Annotation-based metadata has a significant impact on how you write Java applications. Other programming environments, like C# and .NET, have had this kind of support for quite a while, and developers adopted the metadata attributes quickly. In the Java world, the big rollout of annotations is happening with Java EE 5.0. All specifications that are considered part of Java EE, like EJB, JMS, JMX, and even the servlet specification, will be updated and use JDK 5.0 annotations for metadata needs. For example, web services in J2EE 1.4 usually require significant metadata in XML files, so we expect to see real productivity improvements with annotations. Similarly, you can let the web container inject an EJB handle into your servlet by adding an annotation on a field. Sun initiated a specification effort (JSR 250) to take care of the annotations across specifications, defining common annotations for the whole Java platform. For you, however, working on a persistence layer, the most important specifications are EJB 3.0 and JPA.

Annotations from the Java Persistence package are available in javax.persistence once you have included the JPA interfaces in your classpath. You can use these annotations to declare persistent entity classes, embeddable classes (we’ll discuss these in the next topic), properties, fields, keys, and so on. The JPA specification covers the basics and most relevant advanced mappings—everything you need to write a portable application, with a pluggable, standardized persistence layer that works inside and outside of any runtime container.

What annotations and mapping features aren’t specified in Java Persistence? A particular JPA engine and product may naturally offer advantages—the so-called vendor extensions.

Utilizing vendor extensions

Even if you map most of your application’s model with JPA-compatible annotations from the javax.persistence package, you’ll have to use vendor extensions at some point. For example, almost all performance-tuning options you’d expect to be available in high-quality persistence software, such as fetching and caching settings, are only available as Hibernate-specific annotations.

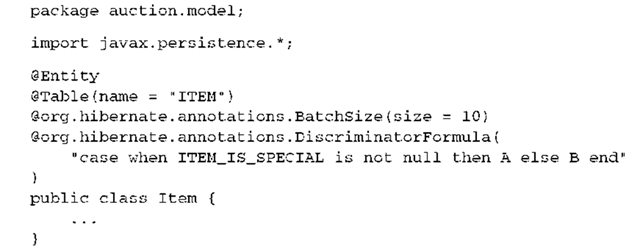

Let’s see what that looks like in an example. Annotate the Item entity source code again:

This example contains two Hibernate annotations. The first, @BatchSize, is a fetching option that can increase performance in situations we’ll examine later in this topic. The second, @DiscriminatorFormula, is a Hibernate mapping annotation that is especially useful for legacy schemas when class inheritance can’t be determined with simple literal values (here it maps a legacy column ITEM_IS_SPECIAL—probably some kind of flag—to a literal value). Both annotations are prefixed with the org.hibernate.annotations package name.

Consider this a good practice, because you can now easily see what metadata of this entity class is from the JPA specification and which tags are vendor-specific. You can also easily search your source code for “org.hibernate.annotations” and get a complete overview of all nonstandard annotations in your application in a single search result.

If you switch your Java Persistence provider, you only have to replace the vendor-specific extensions, and you can expect a similar feature set to be available with most sophisticated solutions. Of course, we hope you’ll never have to do this, and it doesn’t happen often in practice—just be prepared.

Annotations on classes only cover metadata that is applicable for that particular class. However, you often need metadata at a higher level, for a whole package or even the whole application. Before we discuss these options, we’d like to introduce another mapping metadata format.

XML descriptors in JPA and EJB 3.0

The EJB 3.0 and Java Persistence standard embraces annotations aggressively. However, the expert group has been aware of the advantages of XML deployment descriptors in certain situations, especially for configuration metadata that changes with each deployment. As a consequence, every annotation in EJB 3.0 and JPA can be replaced with an XML descriptor element. In other words, you don’t have to use annotations if you don’t want to (although we strongly encourage you to reconsider and give annotations a try, if this is your first reaction to annotations).

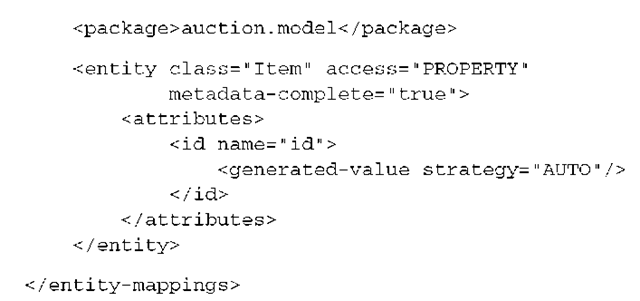

Let’s look at an example of a JPA XML descriptor for a particular persistence unit:

This XML is automatically picked up by the JPA provider if you place it in a file called orm.xml in your classpath, in the META-INF directory of the persistence unit. You can see that you only have to name an identifier property for a class; as in annotations, all other properties of the entity class are automatically considered persistent with a sensible default mapping.

You can also set default mappings for the whole persistence unit, such as the schema name and default cascading options. If you include the <xml-mapping-metadata-complete> element, the JPA provider completely ignores all annotations on your entity classes in this persistence unit and relies only on the mappings as defined in the orm.xml file. You can (redundantly in this case) enable this on an entity level, with metadata-complete="true". If enabled, the JPA provider assumes that all properties of the entity are mapped in XML, and that all annotations for this entity should be ignored.

If you don’t want to ignore but instead want to override the annotation metadata, first remove the global <xml-mapping-metadata-complete> element from the orm.xml file. Also remove the metadata-complete="true" attribute from any entity mapping that should override, not replace, annotations:

Here you map the initialPrice property to the INIT_PRICE column and specify it isn’t nullable. Any annotation on the initialPrice property of the Item class is ignored, but all other annotations on the Item class are still applied. Also note that you didn’t specify an access strategy in this mapping, so field or accessor method access is used depending on the position of the @Id annotation in Item. (We’ll get back to this detail in the next topic.)

An obvious problem with XML deployment descriptors in Java Persistence is their compatibility with native Hibernate XML mapping files. The two formats aren’t compatible at all, and you should make a decision to use one or the other. The syntax of the JPA XML descriptor is much closer to the actual JPA annotations than to the native Hibernate XML mapping files.

You also need to consider vendor extensions when you make a decision for an XML metadata format. The Hibernate XML format supports all possible Hibernate mappings, so if something can’t be mapped in JPA/Hibernate annotations, it can be mapped with native Hibernate XML files. The same isn’t true with JPA XML descriptors—they only provide convenient externalized metadata that covers the specification. Sun does not allow vendor extensions with an additional namespace.

On the other hand, you can’t override annotations with Hibernate XML mapping files; you have to define a complete entity class mapping in XML.

For these reasons, we don’t show all possible mappings in all three formats; we focus on native Hibernate XML metadata and JPA/Hibernate annotations. However, you’ll learn enough about the JPA XML descriptor to use it if you want to.

Consider JPA/Hibernate annotations the primary choice if you’re using JDK 5.0. Fall back to native Hibernate XML mapping files if you want to externalize a particular class mapping or utilize a Hibernate extension that isn’t available as an annotation. Consider JPA XML descriptors only if you aren’t planning to use any vendor extension (which is, in practice, unlikely), or if you want to only override a few annotations, or if you require complete portability that even includes deployment descriptors.

But what if you’re stuck with JDK 1.4 (or even 1.3) and still want to benefit from the better refactoring capabilities and reduced lines of code of inline metadata?

Using XDoclet

The XDoclet project has brought the notion of attribute-oriented programming to Java. XDoclet leverages the Javadoc tag format (@attribute) to specify class-, field-, or method-level metadata attributes. There is even a book about XDoclet, XDoclet in Action (Walls and Richards, 2004).

XDoclet is implemented as an Ant task that generates Hibernate XML metadata (or something else, depending on the plug-in) as part of the build process.

Creating the Hibernate XML mapping document with XDoclet is straightforward; instead of writing it by hand, you mark up the Java source code of your persistent class with custom Javadoc tags, as shown in listing 3.5.

Listing 3.5 Using XDoclet tags to mark up Java classes with mapping metadata

With the annotated class in place and an Ant task ready, you can automatically generate the same XML document shown in the previous section (listing 3.4).

The downside to XDoclet is that it requires another build step. Most large Java projects already use Ant, so this is usually a nonissue. Arguably, XDoclet mappings are less configurable at deployment time; but there is nothing stopping you from hand-editing the generated XML before deployment, so this is probably not a significant objection. Finally, support for XDoclet tag validation may not be available in your development environment. However, the latest IDEs support at least autocompletion of tag names. We won’t cover XDoclet in this topic, but you can find examples on the Hibernate website.

Whether you use XML files, JDK 5.0 annotations, or XDoclet, you’ll often notice that you have to duplicate metadata in several places. In other words, you need to add global information that is applicable to more than one property, more than one persistent class, or even the whole application.

Handling global metadata

Consider the following situation: All of your domain model persistent classes are in the same package. However, you have to specify class names fully qualified, including the package, in every XML mapping file. It would be a lot easier to declare the package name once and then use only the short persistent class name. Or, instead of enabling direct field access for every single property through the access="field" mapping attribute, you’d rather use a single switch to enable field access for all properties. Class- or package-scoped metadata would be much more convenient.
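Hibernate’s XML format covers the first case with a package attribute on the <hibernate-mapping> element. The resolution rule can be sketched roughly as follows. A name that already contains a dot is taken as fully qualified, and a short name is prefixed with the default package. ClassNameResolver is a hypothetical, simplified helper, not Hibernate’s implementation.

```java
class ClassNameResolver {
    private final String defaultPackage; // may be null when no package is declared

    ClassNameResolver(String defaultPackage) {
        this.defaultPackage = defaultPackage;
    }

    // Short names get the default package; names containing a dot are left alone.
    String qualify(String className) {
        if (className.indexOf('.') >= 0 || defaultPackage == null || defaultPackage.isEmpty()) {
            return className;
        }
        return defaultPackage + "." + className;
    }

    public static void main(String[] args) {
        ClassNameResolver resolver = new ClassNameResolver("auction.model");
        System.out.println(resolver.qualify("Category"));                // auction.model.Category
        System.out.println(resolver.qualify("auction.billing.Payment")); // auction.billing.Payment
    }
}
```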

Some metadata is valid for the whole application. For example, query strings can be externalized to metadata and called by a globally unique name in the application code. Similarly, a query usually isn’t related to a particular class, and sometimes not even to a particular package. Other application-scoped metadata includes user-defined mapping types (converters) and data filter (dynamic view) definitions.
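The idea behind named queries can be sketched with a hypothetical NamedQueryRegistry. Each query string is registered once under a globally unique name, duplicates are rejected, and application code refers to the query only by name. In Hibernate, you’d declare the query in mapping metadata instead and obtain it with Session.getNamedQuery(String). The registry below only illustrates the principle, and the query name and HQL string are assumptions.

```java
import java.util.HashMap;
import java.util.Map;

class NamedQueryRegistry {
    private final Map<String, String> queries = new HashMap<>();

    // Registers a query string under a name that must be unique application-wide.
    void register(String name, String queryString) {
        if (queries.putIfAbsent(name, queryString) != null) {
            throw new IllegalArgumentException("Duplicate query name: " + name);
        }
    }

    // Application code refers to the query only by its name.
    String get(String name) {
        String queryString = queries.get(name);
        if (queryString == null) {
            throw new IllegalArgumentException("Unknown query name: " + name);
        }
        return queryString;
    }

    public static void main(String[] args) {
        NamedQueryRegistry registry = new NamedQueryRegistry();
        registry.register("findItemsByDescription",
            "from Item i where i.description like :desc");
        System.out.println(registry.get("findItemsByDescription"));
    }
}
```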

Let’s walk through some examples of global metadata in Hibernate XML mappings and JDK 5.0 annotations.

Global XML mapping metadata

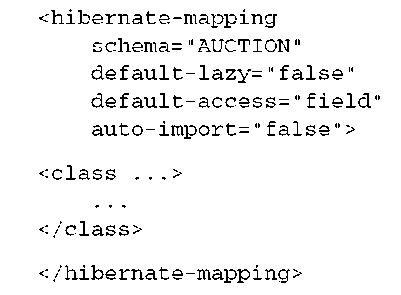

If you check the XML mapping DTD, you’ll see that the <hibernate-mapping> root element has global options that are applied to the class mapping(s) inside it—some of these options are shown in the following example:

The schema attribute enables a database schema prefix, AUCTION, used by Hibernate for all SQL statements generated for the mapped classes. By setting default-lazy to false, you enable default outer-join fetching for some class associations. (This default-lazy="false" switch has an interesting side effect: it switches to the Hibernate 2.x default fetching behavior, which is useful if you migrate to Hibernate 3.x but don’t want to update all fetching settings.) With default-access, you enable direct field access by Hibernate for all persistent properties of all classes mapped in this file. Finally, the auto-import setting is turned off for all classes in this file.

TIP Mapping files with no class declarations—Global metadata is required and present in any sophisticated application. For example, you may easily import a dozen interfaces, or externalize a hundred query strings. In large-scale applications, you often create mapping files without actual class mappings, and only imports, external queries, or global filter and type definitions. If you look at the DTD, you can see that <class> mappings are optional inside the <hibernate-mapping> root element. Split up and organize your global metadata into separate files, such as AuctionTypes.hbm.xml, AuctionQueries.hbm.xml, and so on, and load them in Hibernate’s configuration just like regular mapping files. However, make sure that all custom types and filters are loaded before any other mapping metadata that applies these types and filters to class mappings.

Let’s look at global metadata with JDK 5.0 annotations.

Global annotation metadata

Annotations are by nature woven into the Java source code for a particular class. Although it’s possible to place global annotations in the source file of a class (at the top), we’d rather keep global metadata in a separate file. This is called package metadata, and it’s enabled with a file named package-info.java in a particular package directory:

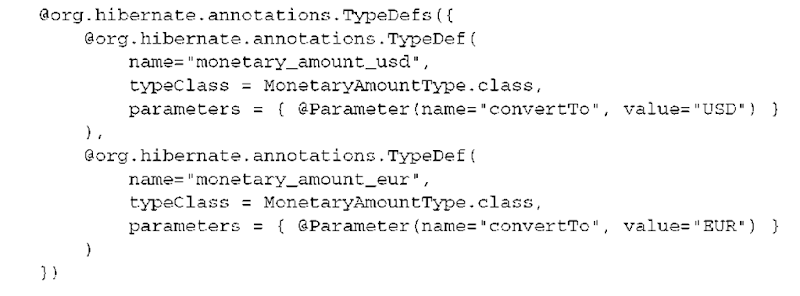

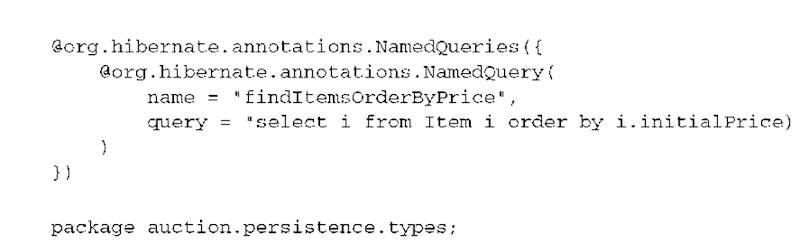

This example of a package metadata file, in the package auction.persistence.types, declares two Hibernate type converters, which we revisit when we discuss the Hibernate type system. You can now refer to the user-defined types in class mappings by their names. The same mechanism can be used to externalize queries and to define global identifier generators (not shown in the last example).

There is a reason the previous code example only includes annotations from the Hibernate package and no Java Persistence annotations. One of the (last-minute) changes made to the JPA specification was the removal of package visibility of JPA annotations. As a result, no Java Persistence annotations can be placed in a package-info.java file. If you need portable global Java Persistence metadata, put it in an orm.xml file.

Global annotations (Hibernate and JPA) can also be placed in the source code of a particular class, right after the import section. The syntax for the annotations is the same as in the package-info.java file, so we won’t repeat it here.

You now know how to write local and global mapping metadata. Another issue in large-scale applications is the portability of metadata.

Using placeholders

In any larger Hibernate application, you’ll face the problem of native code in your mapping metadata—code that effectively binds your mapping to a particular database product. For example, SQL statements, such as in formula, constraint, or filter mappings, aren’t parsed by Hibernate but are passed directly through to the database management system. The advantage is flexibility—you can call any native SQL function or keyword your database system supports. The disadvantage of putting native SQL in your mapping metadata is lost database portability, because your mappings, and hence your application, will work only for a particular DBMS (or even DBMS version).

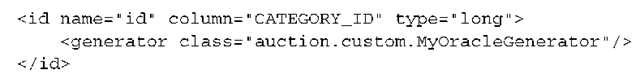

Even simple things, such as primary key generation strategies, usually aren’t portable across all database systems. In the next topic, we discuss a special identifier generator called native, which is a built-in smart primary key generator. On Oracle, it uses a database sequence to generate primary key values for rows in a table; on IBM DB2, it uses a special identity primary key column by default. This is how you map it in XML:

We’ll discuss the details of this mapping later. The interesting part is the declaration class="native" as the identifier generator. Let’s assume that the portability this generator provides isn’t what you need, perhaps because you use a custom identifier generator, a class you wrote that implements the Hibernate IdentifierGenerator interface:

The XML mapping file is now bound to a particular database product, and you lose the database portability of the Hibernate application. One way to deal with this issue is to use a placeholder in your XML file that is replaced during build when the mapping files are copied to the target directory (Ant supports this). This mechanism is recommended only if you have experience with Ant or already need build-time substitution for other parts of your application.
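Build-time substitution boils down to replacing tokens while files are copied. Ant’s copy task can do this with a filter set, which by default recognizes tokens written as @name@. The sketch below performs the same kind of replacement in plain Java, so you can see what ends up in the deployed mapping file. The token name and the generator value are hypothetical.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class TokenFilter {
    // Matches @name@ tokens, e.g. @idgenerator@.
    private static final Pattern TOKEN = Pattern.compile("@([A-Za-z0-9_.]+)@");

    // Replaces each known @name@ token with its value; unknown tokens stay as they are.
    static String apply(String text, Map<String, String> values) {
        Matcher matcher = TOKEN.matcher(text);
        StringBuilder out = new StringBuilder();
        while (matcher.find()) {
            String replacement = values.getOrDefault(matcher.group(1), matcher.group());
            matcher.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        matcher.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        String template = "<generator class=\"@idgenerator@\"/>";
        // Hypothetical per-database value supplied by the build.
        System.out.println(apply(template, Map.of("idgenerator", "sequence")));
    }
}
```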

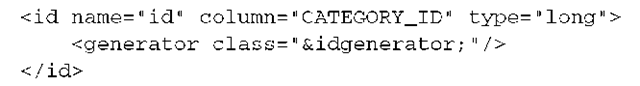

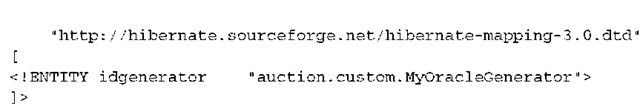

A much more elegant variation is to use custom XML entities (not related to our application’s business entities). Let’s assume you need to externalize an element or attribute value in your XML files to keep it portable:

The &idgenerator; value is called an entity placeholder. You can define its value at the top of the XML file as an entity declaration, as part of the document type definition:

The XML parser will now substitute the placeholder on Hibernate startup, when mapping files are read.
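You can watch this substitution happen with the JDK’s built-in DOM parser. In the sketch below, the mapping is trimmed down, and the DOCTYPE contains only the internal entity declaration. The external Hibernate DTD reference is left out so that nothing is fetched. The generator class name auction.custom.MyOracleGenerator is a hypothetical placeholder value.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;

class EntityPlaceholderDemo {
    static final String MAPPING =
        "<?xml version=\"1.0\"?>\n"
        + "<!DOCTYPE hibernate-mapping [\n"
        + "  <!ENTITY idgenerator \"auction.custom.MyOracleGenerator\">\n"
        + "]>\n"
        + "<hibernate-mapping>\n"
        + "  <class name=\"Category\" table=\"CATEGORY\">\n"
        + "    <id name=\"id\" column=\"CATEGORY_ID\" type=\"long\">\n"
        + "      <generator class=\"&idgenerator;\"/>\n"
        + "    </id>\n"
        + "  </class>\n"
        + "</hibernate-mapping>\n";

    // Parses the mapping and returns the generator class after entity expansion.
    static String generatorClass(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                .newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            Element generator = (Element) doc.getElementsByTagName("generator").item(0);
            return generator.getAttribute("class");
        } catch (Exception e) {
            throw new IllegalStateException("Cannot parse mapping", e);
        }
    }

    public static void main(String[] args) {
        System.out.println(generatorClass(MAPPING)); // auction.custom.MyOracleGenerator
    }
}
```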

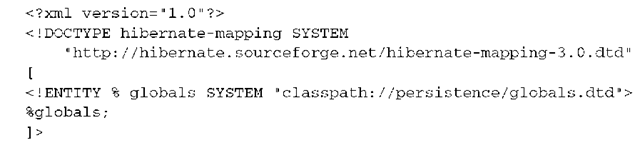

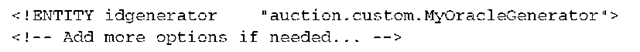

You can take this one step further and externalize this addition to the DTD in a separate file and include the global options in all other mapping files:

This example shows the inclusion of an external file as part of the DTD. The syntax, as often in XML, is rather crude, but the purpose of each line should be clear. All global settings are added to the globals.dtd file in the persistence package on the classpath:

To switch from Oracle to a different database system, just deploy a different globals.dtd file.
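The following sketch shows the mechanism with the JDK parser. The mapping declares a parameter entity that points at classpath://persistence/globals.dtd. A hypothetical EntityResolver, standing in for Hibernate’s own resolver, answers that request from an in-memory string. Passing different globals.dtd contents changes the generator without touching the mapping. The resource path and the generator names are assumptions for illustration.

```java
import java.io.ByteArrayInputStream;
import java.io.StringReader;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilder;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.xml.sax.InputSource;

class GlobalsDtdDemo {
    // The mapping only references the external globals file; it never names a generator itself.
    static final String MAPPING =
        "<?xml version=\"1.0\"?>\n"
        + "<!DOCTYPE hibernate-mapping [\n"
        + "  <!ENTITY % globals SYSTEM \"classpath://persistence/globals.dtd\">\n"
        + "  %globals;\n"
        + "]>\n"
        + "<hibernate-mapping>\n"
        + "  <class name=\"Category\" table=\"CATEGORY\">\n"
        + "    <id name=\"id\" column=\"CATEGORY_ID\" type=\"long\">\n"
        + "      <generator class=\"&idgenerator;\"/>\n"
        + "    </id>\n"
        + "  </class>\n"
        + "</hibernate-mapping>\n";

    // Parses MAPPING while serving any classpath:// system ID from globalsDtd,
    // and returns the generator class after entity expansion.
    static String generatorClass(String globalsDtd) {
        try {
            DocumentBuilder builder = DocumentBuilderFactory.newInstance().newDocumentBuilder();
            builder.setEntityResolver((publicId, systemId) -> {
                if (systemId == null || !systemId.startsWith("classpath://")) {
                    return null; // let the parser handle everything else
                }
                InputSource source = new InputSource(new StringReader(globalsDtd));
                source.setSystemId(systemId);
                return source;
            });
            Document doc = builder.parse(
                new ByteArrayInputStream(MAPPING.getBytes(StandardCharsets.UTF_8)));
            Element generator = (Element) doc.getElementsByTagName("generator").item(0);
            return generator.getAttribute("class");
        } catch (Exception e) {
            throw new IllegalStateException("Cannot parse mapping", e);
        }
    }

    public static void main(String[] args) {
        // Two hypothetical globals.dtd variants, one per database.
        System.out.println(generatorClass("<!ENTITY idgenerator \"sequence\">"));
        System.out.println(generatorClass("<!ENTITY idgenerator \"identity\">"));
    }
}
```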

Often you need not only to substitute an XML element or attribute value but also to include whole blocks of mapping metadata in all files, such as when many of your classes share some common properties and you can’t use inheritance to capture them in a single location. With XML entity replacement, you can externalize an XML snippet to a separate file and include it in other XML files.

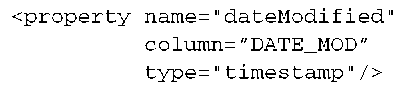

Let’s assume all the persistent classes have a dateModified property. The first step is to put this mapping in its own file, say, DateModified.hbm.xml:

This file needs no XML header or any other tags. Now you include it in the mapping file for a persistent class:

The content of DateModified.hbm.xml is included in place of the &datemodified; placeholder. This, of course, also works with larger XML snippets.
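The same technique works for whole snippets. In this sketch, a hypothetical resolver serves the content of DateModified.hbm.xml from a string. The parsed Item mapping then contains the dateModified property as if it had been written inline. The column and type names are assumptions.

```java
import java.io.ByteArrayInputStream;
import java.io.StringReader;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilder;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

class IncludeSnippetDemo {
    // Hypothetical content of DateModified.hbm.xml: no XML header, just the snippet.
    static final String DATE_MODIFIED_SNIPPET =
        "<property name=\"dateModified\" column=\"DATE_MODIFIED\" type=\"timestamp\"/>";

    static final String ITEM_MAPPING =
        "<?xml version=\"1.0\"?>\n"
        + "<!DOCTYPE hibernate-mapping [\n"
        + "  <!ENTITY datemodified SYSTEM \"classpath://model/DateModified.hbm.xml\">\n"
        + "]>\n"
        + "<hibernate-mapping>\n"
        + "  <class name=\"Item\" table=\"ITEM\">\n"
        + "    <id name=\"id\" column=\"ITEM_ID\" type=\"long\">\n"
        + "      <generator class=\"native\"/>\n"
        + "    </id>\n"
        + "    &datemodified;\n"
        + "    <property name=\"description\" column=\"DESCRIPTION\" type=\"string\"/>\n"
        + "  </class>\n"
        + "</hibernate-mapping>\n";

    // Parses ITEM_MAPPING and returns the names of all <property> elements
    // in document order, after the external entity has been expanded.
    static List<String> propertyNames() {
        try {
            DocumentBuilder builder = DocumentBuilderFactory.newInstance().newDocumentBuilder();
            builder.setEntityResolver((publicId, systemId) -> {
                if (systemId == null || !systemId.endsWith("/DateModified.hbm.xml")) {
                    return null; // let the parser handle everything else
                }
                InputSource source = new InputSource(new StringReader(DATE_MODIFIED_SNIPPET));
                source.setSystemId(systemId);
                return source;
            });
            Document doc = builder.parse(
                new ByteArrayInputStream(ITEM_MAPPING.getBytes(StandardCharsets.UTF_8)));
            NodeList properties = doc.getElementsByTagName("property");
            List<String> names = new ArrayList<>();
            for (int i = 0; i < properties.getLength(); i++) {
                names.add(((Element) properties.item(i)).getAttribute("name"));
            }
            return names;
        } catch (Exception e) {
            throw new IllegalStateException("Cannot parse mapping", e);
        }
    }

    public static void main(String[] args) {
        System.out.println(propertyNames());
    }
}
```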

When Hibernate starts up and reads mapping files, XML DTDs have to be resolved by the XML parser. The built-in Hibernate entity resolver looks for the hibernate-mapping-3.0.dtd on the classpath; it should find the DTD in the hibernate3.jar file before it tries to look it up on the Internet, which happens automatically whenever an entity URL is prefixed with http://hibernate.sourceforge.net/. The Hibernate entity resolver can also detect the classpath:// prefix, and the resource is then searched for in the classpath, where you can copy it on deployment. We have to repeat this FAQ: Hibernate never looks up the DTD on the Internet if you have a correct DTD reference in your mapping and the right JAR on the classpath.

The approaches we have described so far—XML, JDK 5.0 annotations, and XDoclet attributes—assume that all mapping information is known at development (or deployment) time. Suppose, however, that some information isn’t known before the application starts. Can you programmatically manipulate the mapping metadata at runtime?

Manipulating metadata at runtime

It’s sometimes useful for an application to browse, manipulate, or build new mappings at runtime. XML APIs like DOM, dom4j, and JDOM allow direct runtime manipulation of XML documents, so you could create or manipulate an XML document at runtime, before feeding it to the Configuration object.
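Here is a sketch of the XML-API route with the JDK’s DOM classes. It parses a mapping, appends a <property> element to its <class> element, and serializes the result. The mapping string and the motto property are illustrative. In Hibernate 3, you could hand the modified org.w3c.dom.Document to the Configuration instead of serializing it, for example via its addDocument() method; check the API of the version you use.

```java
import java.io.ByteArrayInputStream;
import java.io.StringWriter;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.transform.OutputKeys;
import javax.xml.transform.Transformer;
import javax.xml.transform.TransformerFactory;
import javax.xml.transform.dom.DOMSource;
import javax.xml.transform.stream.StreamResult;
import org.w3c.dom.Document;
import org.w3c.dom.Element;

class MappingEditor {
    // Trimmed-down, illustrative mapping for a User class.
    static final String USER_MAPPING =
        "<hibernate-mapping>"
        + "<class name=\"User\" table=\"USERS\">"
        + "<id name=\"id\" column=\"USER_ID\" type=\"long\"><generator class=\"native\"/></id>"
        + "</class>"
        + "</hibernate-mapping>";

    static Document parse(String xml) {
        try {
            return DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        } catch (Exception e) {
            throw new IllegalStateException("Cannot parse mapping", e);
        }
    }

    // Appends <property name=".." column=".." type=".."/> to the first <class> element.
    static void addProperty(Document doc, String name, String column, String type) {
        Element mappedClass = (Element) doc.getElementsByTagName("class").item(0);
        Element property = doc.createElement("property");
        property.setAttribute("name", name);
        property.setAttribute("column", column);
        property.setAttribute("type", type);
        mappedClass.appendChild(property);
    }

    static String serialize(Document doc) {
        try {
            Transformer transformer = TransformerFactory.newInstance().newTransformer();
            transformer.setOutputProperty(OutputKeys.OMIT_XML_DECLARATION, "yes");
            StringWriter out = new StringWriter();
            transformer.transform(new DOMSource(doc), new StreamResult(out));
            return out.toString();
        } catch (Exception e) {
            throw new IllegalStateException("Cannot serialize mapping", e);
        }
    }

    public static void main(String[] args) {
        Document doc = parse(USER_MAPPING);
        addProperty(doc, "motto", "MOTTO", "string");
        System.out.println(serialize(doc));
    }
}
```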

On the other hand, Hibernate also exposes a configuration-time metamodel that contains all the information declared in your static mapping metadata. Direct programmatic manipulation of this metamodel is sometimes useful, especially for applications that allow for extension by user-written code. A more drastic approach would be complete programmatic and dynamic definition of the mapping metadata, without any static mapping. However, this is exotic and should be reserved for a particular class of fully dynamic applications, or application building kits.

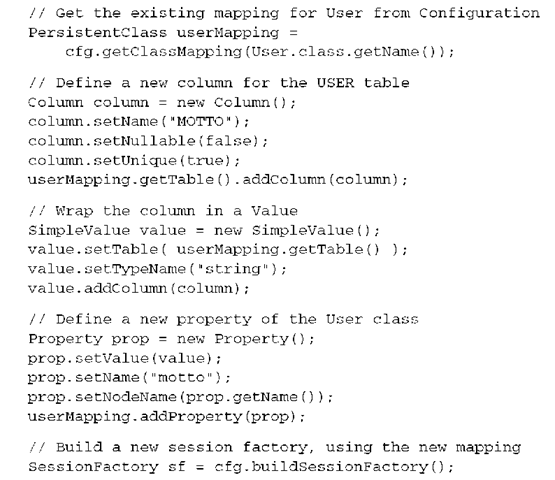

The following code adds a new property, motto, to the User class:

A PersistentClass object represents the metamodel for a single persistent class, and you retrieve it from the Configuration object. Column, SimpleValue, and Property are all classes of the Hibernate metamodel and are available in the org.hibernate.mapping package.

TIP Keep in mind that adding a property to an existing persistent class mapping, as shown here, is quite easy, but programmatically creating a new mapping for a previously unmapped class is more involved.

Once a SessionFactory is created, its mappings are immutable. The SessionFactory uses a different metamodel internally than the one used at configuration time. There is no way to get back to the original Configuration from the SessionFactory or Session. (Note that you can get the SessionFactory from a Session if you wish to access a global setting.) However, the application can read the SessionFactory’s metamodel by calling getClassMetadata() or getCollectionMetadata(). Here’s an example:

This code snippet retrieves the names of persistent properties of the Item class and the values of those properties for a particular instance. This helps you write generic code. For example, you may use this feature to label UI components or improve log output.

Although you’ve seen some mapping constructs in the previous sections, we haven’t introduced any more sophisticated class and property mappings so far. You should now decide which mapping metadata option you’d like to use in your project and then read more about class and property mappings in the next topic.

Or, if you’re already an experienced Hibernate user, you can read on and find out how the latest Hibernate version allows you to represent a domain model without Java classes.

Alternative entity representation

In this topic, so far, we’ve always talked about a domain model implementation based on Java classes—we called them POJOs, persistent classes, JavaBeans, or entities. An implementation of a domain model that is based on Java classes with regular properties, collections, and so on, is type-safe. If you access a property of a class, your IDE offers autocompletion based on the strong types of your model, and the compiler checks whether your source is correct. However, you pay for this safety with more time spent on the domain model implementation—and time is money.

In the following sections, we introduce Hibernate’s ability to work with domain models that aren’t implemented with Java classes. We’re basically trading type safety for other benefits and, because nothing is free, accepting more errors at runtime whenever we make a mistake. In Hibernate, you can select an entity mode for your application, or even mix entity modes for a single model. You can even switch between entity modes in a single Session.

These are the three built-in entity modes in Hibernate:

■ POJO—A domain model implementation based on POJOs (persistent classes). This is what you have seen so far, and it’s the default entity mode.

■ MAP—No Java classes are required; entities are represented in the Java application with HashMaps. This mode allows quick prototyping of fully dynamic applications.

■ DOM4J—No Java classes are required; entities are represented as XML elements, based on the dom4j API. This mode is especially useful for exporting or importing data, or for rendering and transforming data through XSLT processing.

There are two reasons why you may want to skip the next section and come back later. First, a static domain model implementation with POJOs is the common case, and dynamic or XML representations are features you may not need right now. Second, we’re going to present some mappings, queries, and other operations that you may not have seen so far, not even with the default POJO entity mode. However, if you feel confident enough with Hibernate, read on.

Let’s start with the MAP mode and explore how a Hibernate application can be fully dynamically typed.

Creating dynamic applications

A dynamic domain model is a model that is dynamically typed. For example, instead of a Java class that represents an auction item, you work with a bunch of values in a Java Map. Each attribute of an auction item is represented by a key (the name of the attribute) and its value.
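A minimal sketch in plain Java, with no Hibernate involved: an auction item held as a Map, keyed by the hypothetical attribute names description and initialPrice. It also shows the cost mentioned earlier: values come back as Object and need a cast, and a misspelled key is only noticed at runtime.

```java
import java.math.BigDecimal;
import java.util.HashMap;
import java.util.Map;

class DynamicItemDemo {

    // Builds an auction item as a plain map: attribute name -> value
    static Map<String, Object> newItem(String description, BigDecimal initialPrice) {
        Map<String, Object> item = new HashMap<>();
        item.put("description", description);
        item.put("initialPrice", initialPrice);
        return item;
    }

    public static void main(String[] args) {
        Map<String, Object> item = newItem("An item for auction", new BigDecimal("99.00"));

        // Reading an attribute needs a cast; the compiler can't check the type
        BigDecimal price = (BigDecimal) item.get("initialPrice");
        System.out.println("Price: " + price);

        // A misspelled key compiles fine and simply returns null at runtime
        Object typo = item.get("intialPrice");
        System.out.println("Misspelled key: " + typo);
    }
}
```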

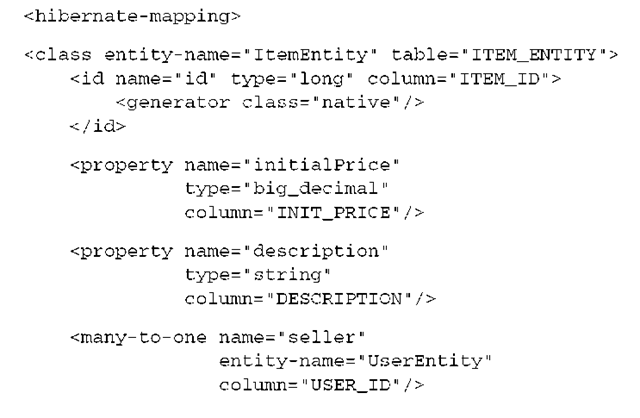

Mapping entity names

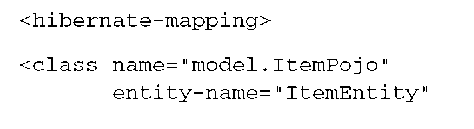

First, you need to enable this strategy by naming your business entities. In a Hibernate XML mapping file, you use the entity-name attribute:

There are three interesting things to observe in this mapping file.

First, you mix several class mappings in one mapping file, something we didn’t recommend earlier. This time you aren’t really mapping Java classes, but logical names of entities. You don’t have a Java source file and an XML mapping file with the same name next to each other, so you’re free to organize your metadata in any way you like.

Second, the name attribute of the class mapping has been replaced: <class name="..."> becomes <class entity-name="...">. You also append the suffix Entity to these logical names (UserEntity, ItemEntity) for clarity and to distinguish them from other nondynamic mappings that you made earlier with regular POJOs.

Finally, all entity associations, such as <many-to-one> and <one-to-many>, now also refer to logical entity names. The class attribute in the association mappings is now entity-name. This isn’t strictly necessary—Hibernate can recognize that you’re referring to a logical entity name even if you use the class attribute. However, it avoids confusion when you later mix several representations. Let’s see what working with dynamic entities looks like.

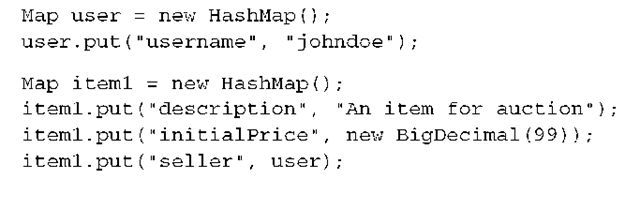

Working with dynamic maps

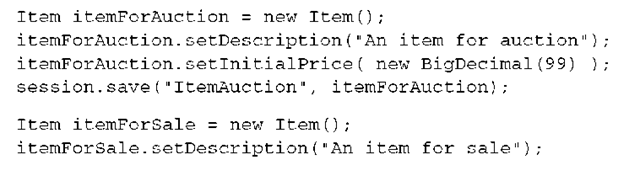

To create an instance of one of your entities, you set all attribute values in a Java Map:

The first map is a UserEntity, and you set the username attribute as a key/value pair. The next two maps are ItemEntitys, and here you set the link to the seller of each item by putting the user map into the item1 and item2 maps. You’re effectively linking maps—that’s why this representation strategy is sometimes also called “representation with maps of maps.”

The collection on the inverse side of the one-to-many association is initialized with an ArrayList, because you mapped it with bag semantics (Java doesn’t have a bag implementation, but the Collection interface has bag semantics). Finally, the save() method on the Session is given a logical entity name and the user map as input parameters.

Hibernate knows that UserEntity refers to the dynamically mapped entity, and that it should treat the input as a map that has to be saved accordingly. Hibernate also cascades to all elements in the itemsForSale collection; hence, all item maps are also made persistent. One UserEntity and two ItemEntitys are inserted into their respective tables.
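The maps-of-maps graph described above can be built with plain JDK collections. In this sketch the attribute names (username, description, seller, itemsForSale) follow the text, while the values are made up; the Hibernate call appears only as a comment, because save() with an entity name needs a configured Session.

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.HashMap;
import java.util.Map;

class MapsOfMapsDemo {

    // Links one UserEntity map to two ItemEntity maps in both directions
    static Map<String, Object> buildSellerWithItems() {
        Map<String, Object> user = new HashMap<>();
        user.put("username", "johndoe");

        Map<String, Object> item1 = new HashMap<>();
        item1.put("description", "An item for auction");
        item1.put("seller", user);            // many-to-one: item -> user map

        Map<String, Object> item2 = new HashMap<>();
        item2.put("description", "Another item for auction");
        item2.put("seller", user);

        // Inverse one-to-many side, mapped as a bag, so an ArrayList is used
        Collection<Map<String, Object>> itemsForSale = new ArrayList<>();
        itemsForSale.add(item1);
        itemsForSale.add(item2);
        user.put("itemsForSale", itemsForSale);

        // Avoid toString() or hashCode() on these maps: the user -> item -> user
        // cycle makes both recurse without end.
        // With an open Hibernate Session, cascading would persist all three maps:
        // session.save("UserEntity", user);
        return user;
    }
}
```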

FAQ Can I map a Set in dynamic mode? Collections based on sets don’t work with dynamic entity mode. In the previous code example, imagine that itemsForSale was a Set. A Set checks its elements for duplicates, so when you call add(item1) and add(item2), the equals() and hashCode() methods of these objects are called. However, item1 and item2 are Java Map instances, and a map’s equals() and hashCode() are based on its entries (its keys and values), not on any notion of entity identity. Two item maps with the same content are therefore not distinct when added to a Set, and because a map’s hash code changes whenever one of its entries changes (for example, when Hibernate assigns an identifier), a Set can lose track of a map it already contains. If you need collections in dynamic entity mode, use only bags or lists.
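A quick JDK-only check of the Map semantics behind this FAQ (no Hibernate involved; the attribute names and the id value are made up): equal-content maps collapse into one element of a HashSet while an ArrayList keeps both, and a map that changes after insertion can no longer be found in the set.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

class MapsInSetsDemo {

    static Map<String, Object> item(String description) {
        Map<String, Object> m = new HashMap<>();
        m.put("description", description);
        return m;
    }

    // Two distinct item maps with equal content: how many elements survive?
    static int setSizeForEqualContent() {
        Set<Map<String, Object>> set = new HashSet<>();
        set.add(item("Same description"));
        set.add(item("Same description"));
        return set.size();               // maps compare by entries, so only one remains
    }

    static int listSizeForEqualContent() {
        List<Map<String, Object>> list = new ArrayList<>();
        list.add(item("Same description"));
        list.add(item("Same description"));
        return list.size();              // a list (or bag) keeps both
    }

    // Changing a map after adding it changes its hash code, so the set no longer finds it
    static boolean containsAfterMutation() {
        Map<String, Object> item = item("An item for auction");
        Set<Map<String, Object>> set = new HashSet<>();
        set.add(item);
        item.put("id", 1L);              // e.g., an identifier assigned later
        return set.contains(item);       // false: looked up under the new hash code
    }

    public static void main(String[] args) {
        System.out.println("Set size:  " + setSizeForEqualContent());   // 1
        System.out.println("List size: " + listSizeForEqualContent());  // 2
        System.out.println("Found after mutation: " + containsAfterMutation()); // false
    }
}
```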

Hibernate handles maps just like POJO instances. For example, making a map persistent triggers identifier assignment; each map in persistent state has an identifier attribute set with the generated value. Furthermore, persistent maps are automatically checked for any modifications inside a unit of work. To set a new price on an item, for example, you can load it and then let Hibernate do all the work:

All Session methods that have class parameters such as load() also come in an overloaded variation that accepts entity names. After loading an item map, you set a new price and make the modification persistent by committing the transaction, which, by default, triggers dirty checking and flushing of the Session.
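Conceptually, automatic dirty checking compares the current state of a loaded object with a snapshot taken at load time. The sketch below illustrates that idea for a dynamic map in plain Java; it is a simplified model, not Hibernate’s implementation, and the class and method names are hypothetical. In the real code you would call the entity-name variant of load(), change the price, and commit.

```java
import java.math.BigDecimal;
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;
import java.util.Set;
import java.util.TreeSet;

class SnapshotDirtyCheck {

    // Returns the names of attributes whose current value differs from the snapshot
    static Set<String> dirtyAttributes(Map<String, Object> snapshot, Map<String, Object> current) {
        Set<String> names = new TreeSet<>(snapshot.keySet());
        names.addAll(current.keySet());
        Set<String> dirty = new TreeSet<>();
        for (String name : names) {
            if (!Objects.equals(snapshot.get(name), current.get(name))) {
                dirty.add(name);
            }
        }
        return dirty;
    }

    public static void main(String[] args) {
        // State as "loaded" from the database
        Map<String, Object> item = new HashMap<>();
        item.put("id", 1L);
        item.put("initialPrice", new BigDecimal("99.00"));

        // Snapshot copied at load time (a shallow copy suffices for immutable values)
        Map<String, Object> snapshot = new HashMap<>(item);

        // The application only sets a new price
        item.put("initialPrice", new BigDecimal("149.00"));

        // At flush time, only the changed attribute needs an UPDATE
        System.out.println("Dirty: " + dirtyAttributes(snapshot, item)); // Dirty: [initialPrice]
    }
}
```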

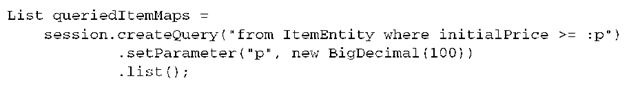

You can also refer to entity names in HQL queries:

This query returns a collection of ItemEntity maps. They are in persistent state.

Let’s take this one step further and mix a POJO model with dynamic maps. There are two reasons why you would want to mix a static implementation of your domain model with a dynamic map representation:

■ You want to work with a static model based on POJO classes by default, but sometimes you want to represent data easily as maps of maps. This can be particularly useful in reporting, or whenever you have to implement a generic user interface that can represent various entities dynamically.

■ You want to map a single POJO class of your model to several tables and then select the table at runtime by specifying a logical entity name.

You may find other use cases for mixed entity modes, but they’re so rare that we want to focus on the most obvious.

First, therefore, you’ll mix a static POJO model and enable dynamic map representation for some of the entities, some of the time.

Mixing dynamic and static entity modes

To enable a mixed model representation, edit your XML mapping metadata and declare a POJO class name and a logical entity name:

Obviously, you also need the two classes, model.ItemPojo and model.UserPojo, that implement the properties of these entities. You still base the many-to-one and one-to-many associations between the two entities on logical names.

Hibernate will primarily use the logical names from now on. For example, the following code does not work:

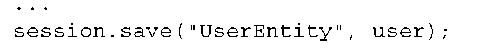

The preceding example creates a few objects, sets their properties, and links them, and then tries to save the objects through cascading by passing the user instance to save(). Hibernate inspects the type of this object and tries to figure out what entity it is, and because Hibernate now exclusively relies on logical entity names, it can’t find a mapping for model.UserPojo. You need to tell Hibernate the logical name when working with a mixed representation mapping:

Once you change this line, the previous code example works. Next, consider loading, and what is returned by queries. By default, a particular SessionFactory is in POJO entity mode, so the following operations return instances of model.ItemPojo:

You can switch to a dynamic map representation either globally or temporarily, but a global switch of the entity mode has serious consequences. To switch globally, add the following to your Hibernate configuration; e.g., in hibernate.cfg.xml:

All Session operations now either expect or return dynamically typed maps! The previous code examples that stored, loaded, and queried POJO instances no longer work; you need to store and load maps.

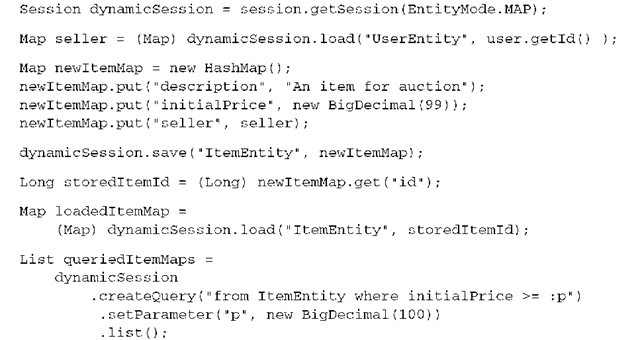

It’s more likely that you want to switch to another entity mode temporarily, so let’s assume that you leave the SessionFactory in the default POJO mode. To switch to dynamic maps in a particular Session, you can open up a new temporary Session on top of the existing one. The following code uses such a temporary Session to store a new auction item for an existing seller:

The temporary dynamicSession that is opened with getSession() doesn’t need to be flushed or closed; it inherits the context of the original Session. You use it only to load, query, or save data in the chosen representation, which is the EntityMode.MAP in the previous example. Note that you can’t link a map with a POJO instance; the seller reference has to be a HashMap, not an instance of UserPojo.

We mentioned that another good use case for logical entity names is the mapping of one POJO to several tables, so let’s look at that.

Mapping a class several times

Imagine that you have several tables with some columns in common. For example, you could have ITEM_AUCTION and ITEM_SALE tables. Usually you map each table to its own persistent class, ItemAuction and ItemSale respectively. With the help of entity names, you can save work and implement a single persistent class.

To map both tables to a single persistent class, use different entity names (and usually different property mappings):

The model.Item persistent class has all the properties you mapped: id, description, initialPrice, and salesPrice. Depending on the entity name you use at runtime, some properties are considered persistent and others transient:

Thanks to the logical entity name, Hibernate knows into which table it should insert the data. Depending on the entity name you use for loading and querying entities, Hibernate selects from the appropriate table.
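The routing idea can be sketched without Hibernate: a lookup from entity name to table and mapped properties decides which table receives the row and which properties of the shared class count as persistent. The entity names ItemAuction and ItemSale, the use of property names as column names, and the split (initialPrice for auctions, salesPrice for sales) are assumptions for illustration; in Hibernate this information comes from the two mappings.

```java
import java.math.BigDecimal;
import java.util.Arrays;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class EntityNameRouting {

    // One shared persistent class carrying the union of all mapped properties
    static class Item {
        final Long id;
        final String description;
        final BigDecimal initialPrice;
        final BigDecimal salesPrice;

        Item(Long id, String description, BigDecimal initialPrice, BigDecimal salesPrice) {
            this.id = id;
            this.description = description;
            this.initialPrice = initialPrice;
            this.salesPrice = salesPrice;
        }

        // Reads a property by name, standing in for Hibernate's property accessors
        Object get(String property) {
            switch (property) {
                case "id": return id;
                case "description": return description;
                case "initialPrice": return initialPrice;
                case "salesPrice": return salesPrice;
                default: throw new IllegalArgumentException("Unknown property " + property);
            }
        }
    }

    // Hypothetical mapping for one entity name: target table plus persistent properties
    static final class Mapping {
        final String table;
        final List<String> properties;

        Mapping(String table, String... properties) {
            this.table = table;
            this.properties = Arrays.asList(properties);
        }
    }

    static final Map<String, Mapping> MAPPINGS = new HashMap<>();
    static {
        MAPPINGS.put("ItemAuction", new Mapping("ITEM_AUCTION", "id", "description", "initialPrice"));
        MAPPINGS.put("ItemSale", new Mapping("ITEM_SALE", "id", "description", "salesPrice"));
    }

    static Mapping mappingFor(String entityName) {
        Mapping mapping = MAPPINGS.get(entityName);
        if (mapping == null) {
            throw new IllegalArgumentException("No mapping for entity name " + entityName);
        }
        return mapping;
    }

    static String tableFor(String entityName) {
        return mappingFor(entityName).table;
    }

    // Only the properties mapped for this entity name are persistent; the rest are ignored
    static Map<String, Object> persistentState(String entityName, Item item) {
        Map<String, Object> state = new LinkedHashMap<>();
        for (String property : mappingFor(entityName).properties) {
            state.put(property, item.get(property));
        }
        return state;
    }

    public static void main(String[] args) {
        Item item = new Item(1L, "Desk lamp", new BigDecimal("10.00"), new BigDecimal("12.50"));
        for (String entityName : Arrays.asList("ItemAuction", "ItemSale")) {
            System.out.println(tableFor(entityName) + " " + persistentState(entityName, item));
        }
    }
}
```

Running main prints ITEM_AUCTION {id=1, description=Desk lamp, initialPrice=10.00} followed by ITEM_SALE {id=1, description=Desk lamp, salesPrice=12.50}: the same object yields a different row depending on the entity name.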

Scenarios in which you need this functionality are rare, and you’ll probably agree with us that the previous use case isn’t good or common.

In the next section, we introduce the third built-in Hibernate entity mode, the representation of domain entities as XML documents.

Representing data in XML

XML is nothing but a text file format; it has no inherent capabilities that qualify it as a medium for data storage or data management. The XML data model is weak, its type system is complex and underpowered, its data integrity is almost completely procedural, and it introduces hierarchical data structures that were outdated decades ago. However, data in XML format is attractive to work with in Java; we have nice tools. For example, we can transform XML data with XSLT, which we consider one of the best use cases.

Hibernate has no built-in functionality to store data in an XML format; it relies on a relational representation and SQL, and the benefits of this strategy should be clear. On the other hand, Hibernate can load and present data to the application developer in an XML format. This allows you to use a sophisticated set of tools without any additional transformation steps.

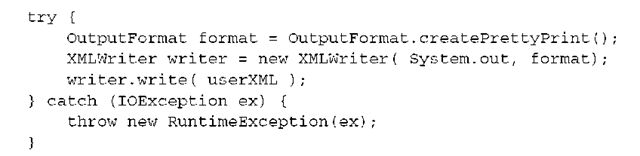

Let’s assume that you work in default POJO mode and that you quickly want to obtain some data represented in XML. Open a temporary Session with the EntityMode.DOM4J:

What is returned here is a dom4j Element, and you can use the dom4j API to read and manipulate it. For example, you can pretty-print it to your console with the following snippet:

If we assume that you reuse the POJO classes and data from the previous examples, you see one User instance and two Item instances (for clarity, we no longer name them UserPojo and ItemPojo):

Hibernate assumes default XML element names—the entity and property names. You can also see that collection elements are embedded, and that circular references are resolved through identifiers (the <seller> element).
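How identifiers cut a circular reference can be shown with a hand-written renderer over the dynamic maps used earlier. This is a hypothetical, JDK-only sketch whose element names only loosely follow the default representation described above; it is not how Hibernate’s dom4j mode is implemented. Items are embedded in the user, but each item’s seller is written as an identifier, so the cycle ends after one level.

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class IdReferenceXmlSketch {

    // Embeds the user's items, but writes each item's seller as its identifier only;
    // embedding the seller again would restart the user -> item -> user cycle
    @SuppressWarnings("unchecked")
    static String userToXml(Map<String, Object> user) {
        StringBuilder xml = new StringBuilder();
        xml.append("<User><id>").append(user.get("id")).append("</id>")
           .append("<username>").append(user.get("username")).append("</username>")
           .append("<itemsForSale>");
        for (Map<String, Object> item : (Collection<Map<String, Object>>) user.get("itemsForSale")) {
            Map<String, Object> seller = (Map<String, Object>) item.get("seller");
            xml.append("<Item><id>").append(item.get("id")).append("</id>")
               .append("<description>").append(item.get("description")).append("</description>")
               .append("<seller>").append(seller.get("id")).append("</seller></Item>");
        }
        return xml.append("</itemsForSale></User>").toString();
    }

    // Made-up sample data: one user selling two items
    static Map<String, Object> sampleUser() {
        Map<String, Object> user = new HashMap<>();
        user.put("id", 1L);
        user.put("username", "johndoe");
        List<Map<String, Object>> items = new ArrayList<>();
        for (long id = 1; id <= 2; id++) {
            Map<String, Object> item = new HashMap<>();
            item.put("id", id);
            item.put("description", "Item " + id);
            item.put("seller", user);
            items.add(item);
        }
        user.put("itemsForSale", items);
        return user;
    }

    public static void main(String[] args) {
        System.out.println(userToXml(sampleUser()));
    }
}
```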

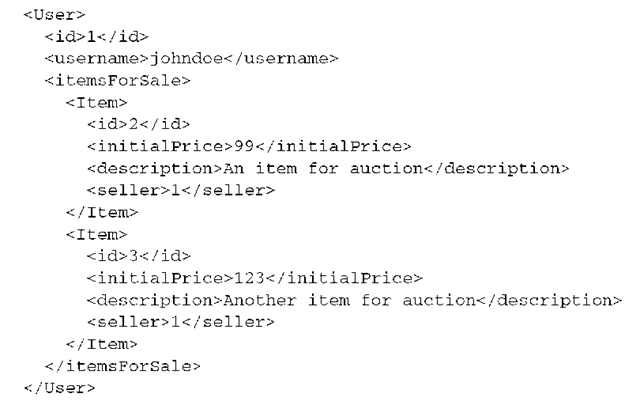

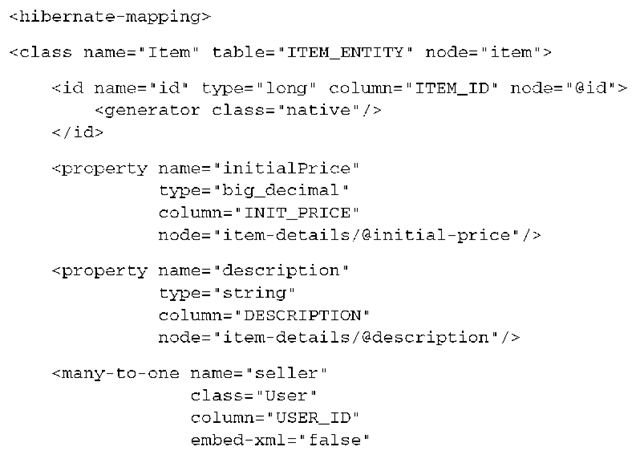

You can change this default XML representation by adding node attributes to your Hibernate mapping metadata:

Each node attribute defines the XML representation:

■ A node="name" attribute on a <class> mapping defines the name of the XML element for that entity.

■ A node="name" attribute on any property mapping specifies that the property content should be represented as the text of an XML element of the given name.

■ A node="@name" attribute on any property mapping specifies that the property content should be represented as an XML attribute value of the given name.

■ A node="name/@attname" attribute on any property mapping specifies that the property content should be represented as the value of the XML attribute attname on a child element called name.
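To make the three property forms concrete, here is a small JDK-only renderer that applies them to a flat map of values. It is a hypothetical helper written for this illustration, not Hibernate’s dom4j serializer; the element name, node values, and data are made up, and it ignores escaping, collections, and associations.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class NodeAttributeSketch {

    // Renders a flat entity as XML, following the three property node forms:
    //   "name"          -> <name>value</name>
    //   "@name"         -> name="value" on the entity element
    //   "name/@attname" -> <name attname="value"/>
    static String toXml(String elementName, Map<String, String> nodes, Map<String, Object> values) {
        StringBuilder attributes = new StringBuilder();
        StringBuilder children = new StringBuilder();
        for (Map.Entry<String, String> e : nodes.entrySet()) {
            String node = e.getValue();
            Object value = values.get(e.getKey());
            if (node.startsWith("@")) {
                attributes.append(' ').append(node.substring(1)).append("=\"").append(value).append('"');
            } else if (node.contains("/@")) {
                int split = node.indexOf("/@");
                children.append('<').append(node, 0, split)
                        .append(' ').append(node.substring(split + 2))
                        .append("=\"").append(value).append("\"/>");
            } else {
                children.append('<').append(node).append('>').append(value)
                        .append("</").append(node).append('>');
            }
        }
        return "<" + elementName + attributes + ">" + children + "</" + elementName + ">";
    }

    public static void main(String[] args) {
        Map<String, String> nodes = new LinkedHashMap<>();  // property name -> node value
        nodes.put("id", "@id");
        nodes.put("description", "description");
        nodes.put("initialPrice", "price/@amount");

        Map<String, Object> item = new LinkedHashMap<>();
        item.put("id", 1);
        item.put("description", "An item for auction");
        item.put("initialPrice", "99.00");

        // <item id="1"><description>An item for auction</description><price amount="99.00"/></item>
        System.out.println(toXml("item", nodes, item));
    }
}
```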

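The embed-xml option is used to trigger embedding or referencing of associated entity data. With embed-xml="true", the XML of the associated entity is embedded; with embed-xml="false", a single-valued association such as seller is represented only by the identifier of the referenced entity. The updated mapping results in the following XML representation of the same data you’ve seen before: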

Be careful with the embed-xml option—you can easily create circular references that result in an endless loop!

Finally, data in an XML representation is transactional and persistent, so you can modify queried XML elements and let Hibernate take care of updating the underlying tables:

There is no limit to what you can do with the XML that is returned by Hibernate. You can display, export, and transform it in any way you like. See the dom4j documentation for more information.

Finally, note that you can use all three built-in entity modes simultaneously, if you like. You can map a static POJO implementation of your domain model, switch to dynamic maps for your generic user interface, and export data into XML. Or, you can write an application that doesn’t have any domain classes, only dynamic maps and XML. We have to warn you, though, that prototyping in the software industry often means that customers end up with the prototype that nobody wanted to throw away—would you buy a prototype car? We highly recommend that you rely on static domain models if you want to create a maintainable system.