Spatiotemporal Interpolation

Although perception in the laboratory is often studied with well-controlled, static, 2D images, ordinary perception usually involves diverse, moving, 3D objects. When an object is partially occluded and moves relative to the occluding surface, it is dynamically occluded. In such circumstances, shape information from the dynamically occluded object is discontinuous in both space and time. Regions of the object may become visible at different times and places in the visual field, and some regions may never project to the observer’s eyes at all. Such cases are somewhat analogous to static, 2D occluded images, except that the partner edges on either side of an occluding boundary may appear at different times and be spatially misaligned.

For instance, imagine standing in a park and looking past a grove of trees toward a street in the distance. A car drives down the street from left to right and is visible beyond the grove. The car goes into and out of view as it passes behind the tree trunks, and tiny bits and pieces of it twinkle through the gaps in the branches and leaves. You might see a bit of the fender at time 1 in the left visual field, a bit of the passenger door at time 2 in the middle of the visual field, and a bit of the trunk at time 3 in the right visual field. Yet what you perceive is not a collection of car bits flickering into and out of view; it is a car, whole and unified. In other words, your visual system naturally takes into account the constantly changing stimulation from a dynamically occluded object, collects it over time, compensates for its lateral displacement, and delivers a coherent percept of a whole object.

This feat of perception is remarkable. Boundary interpolation for static images declines as a function of spatial misalignment, and the pieces of the car became visible at widely different places in the visual field; yet we perceive a single coherent object. The key is that the spatially misaligned pieces did not appear at the same time, but in an orderly temporal progression as the car moved. What unifies the spatial and temporal aspects of the problem is motion: the motion vector of the car allows the visual system to correct for, and anticipate, the introduction of new shape information.

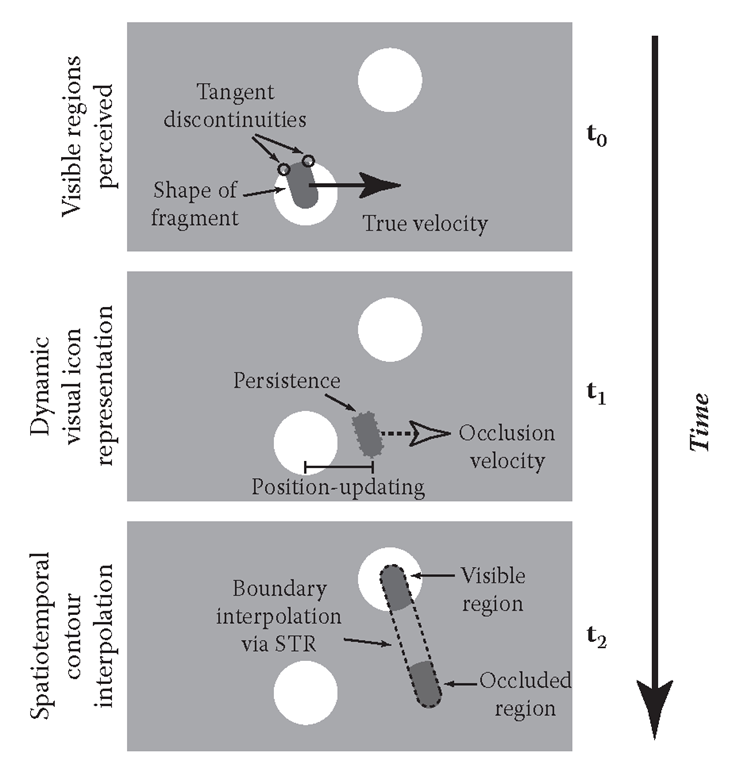

Palmer, Kellman, and Shipley (2006) considered the requirements for spatiotemporal object formation. One important requirement is persistence: In order to be connected with fragments not yet visible, a momentarily viewed fragment must be represented after it becomes occluded. (See Figure 10.11 at time t0.) A second requirement is position updating. Not only must a previously viewed, moving fragment continue to be represented, but its spatial position must also be updated over time in accordance with its previously observed velocity (Figure 10.11 at time t1). Finally, previously viewed and currently viewed fragments are both used by processes of contour and surface interpolation, which connect regions across spatial gaps (Figure 10.11 at t2). When new fragments of the object come into view, they are integrated with the persisting, position-updated fragments via the contour and surface interpolation processes previously identified for static objects. Because of persistence and position updating, whether contours are interpolated across time is constrained by the same geometric relations of contour relatability (Kellman and Shipley, 1991; Kellman, Garrigan, and Shipley, 2005) that determine unit formation in static arrays.
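A minimal sketch can make these requirements concrete. It assumes a simplified form of 2D contour relatability (the linear extensions of two edges must meet in front of both edges, or the edges must be collinear and face each other, and the bend between them must be no more than 90 degrees) and represents each fragment as an endpoint plus a unit direction pointing into the occluded gap. The names `Edge`, `update_position`, and `relatable`, the coordinates, and the velocity are hypothetical illustrations, not part of the published STR model.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Edge:
    """A visible edge fragment: the point (x, y) where it meets the occluder
    and the unit direction (dx, dy) in which it would continue into the gap."""
    x: float
    y: float
    dx: float
    dy: float

    @staticmethod
    def make(x, y, dx, dy):
        n = math.hypot(dx, dy)  # normalize the direction to unit length
        return Edge(x, y, dx / n, dy / n)


def update_position(edge, vx, vy, dt):
    """Position updating: translate a persisting, now-occluded fragment by
    its previously observed velocity (vx, vy) over the elapsed time dt."""
    return Edge(edge.x + vx * dt, edge.y + vy * dt, edge.dx, edge.dy)


def relatable(e1, e2, eps=1e-9):
    """Simplified 2D relatability test (illustrative, not the full criterion)."""
    rx, ry = e2.x - e1.x, e2.y - e1.y
    # The contour leaves e1 along e1's direction and joins e2 along -e2's
    # direction; the bend is at most 90 degrees iff their dot product >= 0.
    if -(e1.dx * e2.dx + e1.dy * e2.dy) < -eps:
        return False
    det = e2.dx * e1.dy - e1.dx * e2.dy
    if abs(det) < eps:
        # Parallel edges: relatable only if collinear with e2 ahead of e1.
        collinear = abs(rx * e1.dy - ry * e1.dx) < eps
        return collinear and (rx * e1.dx + ry * e1.dy) > 0
    # Solve e1.p + t * e1.d == e2.p + s * e2.d by Cramer's rule.
    t = (e2.dx * ry - e2.dy * rx) / det
    s = (e1.dx * ry - e1.dy * rx) / det
    return t >= -eps and s >= -eps  # extensions meet in front of both edges


# Fragment A is seen at t0, then occluded; the object moves right at 10 units/s.
a_t0 = Edge.make(0.0, 0.0, 1.0, 1.0)
b_t2 = Edge.make(7.0, 2.0, -1.0, -1.0)  # fragment B comes into view 0.5 s later
a_t2 = update_position(a_t0, vx=10.0, vy=0.0, dt=0.5)
print(relatable(a_t0, b_t2))  # stale position: parallel but offset
print(relatable(a_t2, b_t2))  # updated position: collinear with B
```

The stale copy of fragment A is parallel to fragment B but offset from it, so the test fails; once A's position is updated by the observed velocity, the two edges are collinear and relatable. The strict `eps` tolerance is a simplification: it does not model the graded tolerance for misalignment that perception shows.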

FIGURE 10.11 Spatiotemporal interpolation processes. An object moves from left to right behind an occluder with two circular windows. At t0, shape and motion information about visible regions of the rod are perceived. At t1, the shape and current position of the occluded region of the rod are represented in the dynamic visual icon representation. At t2, another portion of the rod becomes visible, and contour interpolation occurs between the occluded and visible regions.

Because the spatial relationships that support interpolation are highly constrained, accurate representations of previously seen fragments are needed both for spatiotemporal object formation to occur and for it to operate accurately. Palmer, Kellman, and Shipley (2006) combined the requirements for object formation with proposals about visual mechanisms that represent, update, and connect object fragments over time in a model of spatiotemporal relatability (STR). The model provides an account of the perception of dynamically occluded objects in situations such as that presented in Figure 10.1.

In a series of experiments, Palmer, Kellman, and Shipley (2006) found support for the persistence, position updating, and relatability notions of STR. The notion of persistence was supported because observers performed as if they had seen dynamically occluded objects for longer than their physical exposure durations. The notion of position updating was supported because observers were highly accurate at discriminating between two shape configurations that differed only in the horizontal alignment of the pieces. Because the dynamically occluded objects traveled horizontally and two partner edges on either side of an occluded region were not seen simultaneously, observers’ accurate performance demonstrated that they had information about the locations of both edges despite the fact that, at all times, at least one was occluded. Finally, the notion that contour and surface interpolation processes operated in these displays was supported by a strong advantage in discrimination performance under conditions predicted to support object formation. Specifically, configurations that conformed to the geometric constraints of STR produced markedly better sensitivity than those that did not. The dependence of this effect on object formation was also shown in another condition, in which the removal of a mere 6% of pixels at the points of occlusion (rounding contour junctions) produced reliably poorer performance, even though the global configuration of the pieces was preserved. This last result was predicted from prior findings that rounding of contour junctions weakens contour completion processes (Albert, 2001; Shipley and Kellman, 1990; Rubin, 2001). When the contour interpolation process was compromised, the three projected fragments of each object were less likely to be perceived as a single visual unit, and discrimination performance suffered.

Palmer, Kellman, and Shipley (2006) proposed the notion of a dynamic visual icon, a representation in which the persistence and position updating (and perhaps interpolation) functions of STR are carried out. The idea extends the notion of a visual icon representation, first discovered by Sperling (1960) and labeled by Neisser (1967), which accepts information over time and allows the perceiver to integrate visual information that is no longer physically visible. Perception is not an instantaneous process but is extended over time, and the visual icon is a representation that faithfully maintains visual information in a spatially accurate format for 100 ms or more after it disappears. The proposal of a dynamic visual icon adds the idea that represented information may be positionally updated based on previously acquired velocity information. It is not clear whether this feature is a previously unexplored aspect of known iconic visual representations or whether it implicates a special representation. What is clear is not only that visual information is accumulated over time and space, but also that the underlying representation is geared toward the processing of ongoing events.
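The persistence and updating functions attributed to the dynamic visual icon can be pictured as a short-lived buffer that stores each fragment together with the velocity observed while it was visible and, when queried, reports where each still-persisting fragment should now be. This is a hypothetical sketch: the `DynamicIcon` and `Fragment` names, the 200 ms persistence window, and the constant-velocity extrapolation are assumptions chosen for illustration (the text states only that iconic representations last 100 ms or more).

```python
from dataclasses import dataclass, field


@dataclass
class Fragment:
    """A once-visible fragment, with the velocity observed while visible."""
    label: str
    x: float
    y: float
    vx: float
    vy: float
    t_seen: float  # time (s) at which the fragment was last visible


@dataclass
class DynamicIcon:
    """Hypothetical buffer combining persistence with position updating."""
    persistence_s: float = 0.2  # assumed persistence window, in seconds
    fragments: list = field(default_factory=list)

    def store(self, frag: Fragment) -> None:
        self.fragments.append(frag)

    def query(self, t: float) -> list:
        """Drop fragments older than the persistence window, then return
        (label, x, y) for the rest, extrapolated along their velocity."""
        self.fragments = [f for f in self.fragments
                          if t - f.t_seen <= self.persistence_s]
        return [(f.label,
                 f.x + f.vx * (t - f.t_seen),
                 f.y + f.vy * (t - f.t_seen))
                for f in self.fragments]


# The car's fender is glimpsed at t = 0 s, moving rightward at 5 units/s.
icon = DynamicIcon()
icon.store(Fragment("fender", x=0.0, y=0.0, vx=5.0, vy=0.0, t_seen=0.0))
print(icon.query(0.1))  # [('fender', 0.5, 0.0)]
print(icon.query(0.3))  # []: the fragment has outlived the window
```

Whether real iconic representations decay abruptly, as here, or gradually is not addressed by this sketch; the hard cutoff simply keeps the example short.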

A still unexplored aspect of the dynamic visual icon is whether it incorporates position change information in all three spatial dimensions. If so, this sort of representation might handle computations in a truly 4D spatiotemporal object formation process. Most studies to date, as well as the theory of STR proposed by Palmer, Kellman, and Shipley (2006), have focused on 2D contour completion processes and motion information. A more comprehensive account of 3D interpolation that incorporates position change and integration over time has yet to be tested experimentally. Future work will address this issue and attempt to unify the 3D object formation work of Kellman, Garrigan, and Shipley (2005) with the STR theory and findings of Palmer, Kellman, and Shipley (2006).

From Subsymbolic to Symbolic Representations

One way to further our understanding of contour interpolation processes is to build models of contour interpolation mechanisms and compare their performance to human perception. One such model takes grayscale images as input and, using simulated simple and complex cells, detects contour junctions (Heitger et al., 1992) and interpolates between them using geometric constraints much like contour relatability (Heitger et al., 1998).

The output of this model is an image of activations at pixel locations along interpolation paths. These activations appear at locations in images where people report perceiving illusory contours.

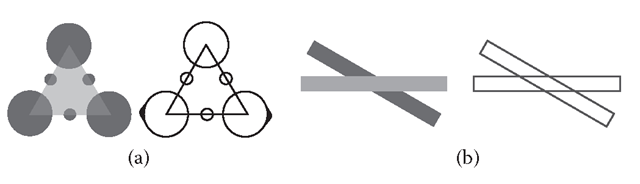

A more recent model (Kalar et al., 2010) generalizes the framework proposed in the model of Heitger et al. (1998) to interpolate both illusory and occluded contours. This model, which is a neural implementation of contour relatability and the identity hypothesis (that illusory and occluded objects share a common underlying interpolation mechanism), generates images of illusory and occluded contours consistent with human perception in a wide variety of contexts (e.g., Figure 10.12).

There is, however, an important shortcoming of models of this type. The inputs to these models are images where pixel values represent luminance. The outputs of the models are images of illusory and occluded (and real) contours. That is, these models take images that represent luminance at each pixel location and return images that represent illusory and occluded interpolation activity at each pixel location. There is nothing in the outputs to indicate that different pixels are connected to each other, that they form part of a contour, and so on. Nothing describes the areas that form a complete object, much less provides a description of its shape. In this sense, such models can be easily misinterpreted, as the observer viewing the outputs provides all of these additional descriptions.

FIGURE 10.12 Displays and outputs from a filtering model that uses a unified operator to handle illusory and occluded interpolations. The model (Kalar et al., 2010) draws heavily on Heitger et al. (1998) but replaces their “ortho” and “para” grouping processes by a single operator sensitive to either L or T junction inputs. (a) Kanizsa-style transparency display on the left produces output on the right. The illusory contours would not be interpolated by a model sensitive to L junctions only (e.g., Heitger et al., 1998). Except for triangle vertices, all junctions in this display are anomalous T junctions. (b) Occlusion display on the left produces output on the right. This output differs from that of the Heitger et al. model, which is intended to interpolate only illusory contours.

To account for human perception, the important filtering information provided by these models must feed into mechanisms that produce higher-level, symbolic descriptions. Beyond representing contours (both physically defined and interpolated) as sets of unbound pixel values, the visual system must arrive at a more holistic description, with properties such as shape, extent, and geometric relationships to other contours in the scene.
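The gap between a pixel map and a symbolic description can be illustrated with a deliberately crude binding step: grouping supra-threshold pixels of an interpolation-activation map into 8-connected components and summarizing each as a token with a size and a bounding box. This is not a mechanism proposed in the chapter or by Kalar et al. (2010); the function name `contour_tokens`, the 0.5 threshold, and the token fields are assumptions for illustration.

```python
from collections import deque


def contour_tokens(activation, threshold=0.5):
    """Group supra-threshold pixels into 8-connected components and describe
    each component as a discrete token (hypothetical helper)."""
    rows, cols = len(activation), len(activation[0])
    seen = [[False] * cols for _ in range(rows)]
    tokens = []
    for r0 in range(rows):
        for c0 in range(cols):
            if seen[r0][c0] or activation[r0][c0] < threshold:
                continue
            # Breadth-first flood fill from an unvisited active pixel.
            queue, pixels = deque([(r0, c0)]), []
            seen[r0][c0] = True
            while queue:
                r, c = queue.popleft()
                pixels.append((r, c))
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        nr, nc = r + dr, c + dc
                        if (0 <= nr < rows and 0 <= nc < cols
                                and not seen[nr][nc]
                                and activation[nr][nc] >= threshold):
                            seen[nr][nc] = True
                            queue.append((nr, nc))
            rs = [p[0] for p in pixels]
            cs = [p[1] for p in pixels]
            tokens.append({
                "n_pixels": len(pixels),
                # bounding box as (first row, first col, last row, last col)
                "bbox": (min(rs), min(cs), max(rs), max(cs)),
            })
    return tokens


act = [
    [0.9, 0.8, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.7, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.6],
    [0.0, 0.0, 0.0, 0.0, 0.9],
]
print(contour_tokens(act))
```

The tokens record which pixels belong together and how far each group extends, but nothing about shape: two contours built from entirely different pixels would yield unrelated tokens even if an observer saw them as the same shape.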

This is a very general point about research into object and surface perception. Vision models using relatively local filters describe important basic aspects of processing. At a higher level, some object recognition models assume tokens such as contour shapes, aspect ratios, or volumetric primitives as descriptions. The difficult problem is in the middle: How do we get from local filter responses to higher-level symbolic descriptions? This question is an especially high priority for understanding 3D shape and surface perception. Higher-level shape descriptions are needed to account for our use of shape in object recognition and our perceptions of similarity. As the Gestalt psychologists observed almost a century ago, two shapes may be seen as similar despite being composed of very different elements. Shape cannot be the sum of local filter activations. Understanding the processes that bind local responses into unitary objects, and that achieve more abstract descriptions of these objects, is a crucial challenge for future research.

3D Perception in the Brain

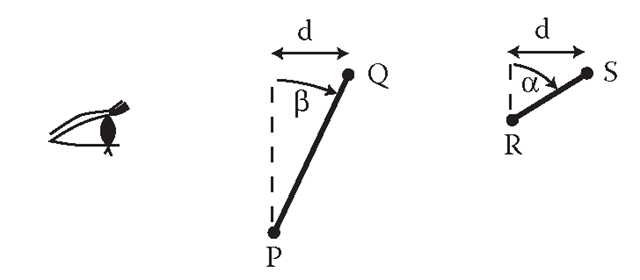

Research in 3D and spatiotemporal perception also raises important issues for understanding the cortical processes of vision. A great deal of research and modeling has focused on early cortical areas, V1 and V2, as likely sites of interpolation processes (Mendola et al., 1999; Sugita, 1999; Bakin, Nakayama, and Gilbert, 2000; Seghier et al., 2000) for both illusory and occluded contours. On the basis of their results on 3D interpolation, Kellman, Garrigan, and Shipley (2005) suggested that interpolation processes involve all three spatial dimensions and are unlikely to be accomplished in these early areas. There are several reasons for this suggestion. First, orientation-sensitive units in V1 and V2 encode 2D orientation characteristics, which are not sufficient to account for 3D interpolation. Second, one could hypothesize that 2D orientations combined with outputs of disparity-sensitive cells might somehow provide a basis for 3D interpolation. Evidence indicates, however, that the type of disparity information available in these early areas is insufficient: whereas relative disparities are needed for depth computations, V1 neurons with disparity sensitivity appear to be sensitive to absolute disparities, which vary with fixation (Cumming and Parker, 1999). Third, even relative disparities do not directly produce perception of depth intervals in the world. There are two problems. One is that obtaining a depth interval from disparity involves a constancy problem; a given depth interval produces different disparity differences depending on viewing distance (Wallach and Zuckerman, 1963). To obtain a depth interval, disparity information must be combined with information about the egocentric distance of at least one point, obtained from some other source. Figure 10.13 illustrates this problem, along with a second one. The experimental data of Kellman et al. (2005) suggest that edge segments at particular slants provide the inputs to 3D interpolation processes. Slant, however, depends not only on the depth interval between points, but also on their separation. Moreover, 3D slant may be specified from a variety of sources. The likely substrate of 3D interpolation is some cortical area in which actual slant information, computed from a variety of contributing cues, is available. These requirements go well beyond computations that are suspected to occur in V1 or V2.
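The constancy and slant problems can be worked through numerically. The sketch below is illustrative only and rests on stated assumptions: both points lie straight ahead on the midline, the interocular distance is a commonly assumed 6.5 cm, and disparity is computed from exact vergence-angle geometry; the function names are hypothetical. For a fixed 5 cm depth interval, relative disparity falls roughly with the square of viewing distance, so recovering the interval requires an estimate of egocentric distance; and the same depth interval produces different slants depending on how far apart the points are.

```python
import math

IOD_M = 0.065  # assumed interocular distance in metres (typical adult value)


def relative_disparity(distance_m, depth_m, iod_m=IOD_M):
    """Relative disparity (radians) between a point straight ahead at
    distance_m and a second point depth_m farther away, computed as the
    difference between their vergence angles."""
    near = 2 * math.atan(iod_m / (2 * distance_m))
    far = 2 * math.atan(iod_m / (2 * (distance_m + depth_m)))
    return near - far


def depth_from_disparity(disparity_rad, distance_m, iod_m=IOD_M):
    """Invert the geometry: the depth interval implied by a disparity
    depends on the egocentric distance assumed for the nearer point."""
    near = 2 * math.atan(iod_m / (2 * distance_m))
    far = near - disparity_rad
    return iod_m / (2 * math.tan(far / 2)) - distance_m


def slant_deg(depth_m, separation_m):
    """Slant away from the frontoparallel plane of a segment whose endpoints
    differ by depth_m in depth and by separation_m within that plane."""
    return math.degrees(math.atan2(depth_m, separation_m))


# Constancy problem: the same 5 cm interval at increasing viewing distances.
for d_view in (0.5, 1.0, 2.0):
    arcmin = math.degrees(relative_disparity(d_view, 0.05)) * 60
    print(f"{d_view} m: {arcmin:.1f} arcmin")

# The same disparity implies different depth intervals at different distances.
eta = relative_disparity(1.0, 0.05)
print(depth_from_disparity(eta, 1.0), depth_from_disparity(eta, 2.0))

# Slant problem: one depth interval, two separations, two slants.
print(slant_deg(0.05, 0.05), slant_deg(0.05, 0.10))
```

Doubling the viewing distance cuts the disparity of the 5 cm interval to roughly a quarter, and reading that same disparity at the wrong distance misjudges the depth interval by a similar factor.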

Where might such computations take place? Although no definite neural locus has been identified, single-cell recordings in the caudal intraparietal sulcus (cIPS) indicate the presence of units tuned to 3D slants (Sakata et al., 1997). Notably, these units appear to respond to a particular 3D orientation regardless of whether that orientation is specified by stereoscopic or texture information. These findings suggest where the kinds of input units required for 3D relatability (namely, units signaling 3D orientation and position) may exist in the nervous system.

FIGURE 10.13 Relations between disparity, edge length, and slant. Depth and disparity: A given depth interval d in the world gives rise to decreasing disparity as viewing distance increases. The interval d between points R and S produces a smaller disparity difference than the same interval between P and Q if R and S are farther away. Slant: Obtaining slant from disparity depends not only on recovering the depth interval but also on the vertical separation of the points defining that depth interval (α > β).

In addition to the location of 3D processing, much remains to be learned about the nature of its mechanisms. Are there areas of cortex in which units sensitive to 3D positions and orientations of contour or surface fragments interact in a network that represents 3D relations? At present, we do not know of such a network, but the psychophysical results suggest that it is worth looking for.

The same sorts of questions apply to spatiotemporal object formation. We have impressive capabilities to construct coherent objects and scenes from fragments accumulated across gaps in space and time. Where in the cortex are these capabilities realized? And what mechanisms carry out the storage of previously visible fragments and their positional updating, based on velocity information, after they have gone out of sight? A striking possibility is that the same cortical areas are involved as in 3D interpolation. At the least, such an outcome would be consistent with a grand unification of processes that create objects from fragments. Although individual experiments have usually addressed 2D, 3D, and spatiotemporal interpolation separately, these may be parts of a more comprehensive 4D process. Understanding both the computations involved and their neural substrates is a fundamental and exciting challenge for future research.