Introduction

The inference of depth information from single images is typically performed by devising models of image formation based on the physics of light interaction and then inverting these models to solve for depth. The resulting inverse problems are highly underconstrained and require many assumptions, such as Lambertian surface reflectance, smoothness of surfaces, uniform albedo, or lack of cast shadows. Little is known about the relative merits of these assumptions in real scenes. A statistical understanding of the joint distribution of real images and their underlying three-dimensional (3D) structure would allow us to replace these assumptions and simplifications with probabilistic priors based on real scenes. Furthermore, statistical studies may uncover entirely new sources of information that are not obvious from physical models. Real scenes are affected by many regularities in the environment, such as the natural geometry of objects, the arrangements of objects in space, natural distributions of light, and regularities in the position of the observer. Few current computer vision algorithms for 3D shape inference make use of these trends. Despite the potential usefulness of statistical models and the growing success of statistical methods in vision, few studies have examined the statistical relationship between images and range (depth) images. The studies that have examined this relationship in natural scenes have uncovered meaningful and exploitable statistical trends, which may be useful both for designing new algorithms for surface inference and for understanding how humans perceive depth in real scenes (Howe and Purves, 2002; Potetz and Lee, 2003; Torralba and Oliva, 2002). In this chapter, we highlight some results from our study of the statistical relationships between 3D scene structure and two-dimensional (2D) images, and discuss their implications for understanding human 3D surface perception and its underlying computational principles.

Correlation Between Brightness and Depth

To understand the statistical regularities in natural scenes that allow us to infer 3D structure from 2D images, we investigated the correlational structure between depth and light in natural scenes. We collected a database of coregistered intensity and high-resolution range images (corresponding pixels in the two images correspond to the same point in space) of over 100 urban and rural scenes. Scans were collected using the Riegl LMS-Z360 laser range scanner, which acquires coregistered range and color data using an integrated charge-coupled device (CCD) sensor and a time-of-flight laser scanner with a rotating mirror. The scanner has a maximum range of 200 m and a depth accuracy of 12 mm; for each scene in our database, however, multiple scans were averaged to achieve an accuracy better than 6 mm. Raw range measurements are given in meters, and all scanning is performed in spherical coordinates. All images were taken outdoors under sunny conditions, with the scanner level with the ground. Typical spatial resolution was roughly 20 pixels per degree.

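To begin to understand the statistical trends relating 3D shape and 2D appearance, we start by studying simple linear correlations within 3D scenes. We analyzed corresponding intensity and range patches, computing the correlation between a specific pixel (in either the image patch or the range patch) and the other pixels of the image patch or the range patch, using the standard correlation coefficient:

$$\rho(p, q) = \frac{\operatorname{cov}[x_p, y_q]}{\sqrt{\operatorname{var}[x_p]\,\operatorname{var}[y_q]}} \qquad (1.1)$$

where $x_p$ is the value at pixel $p$ of one patch (intensity or range), $y_q$ is the value at pixel $q$ of the same or the corresponding patch, and the covariance and variances are computed across all patches in the database.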

The patch size is 25 × 25 pixels, slightly more than 1 degree of visual angle in each dimension. In calculating the covariance for both the image patches and the range patches, we subtracted the corresponding means across all patches.
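A minimal NumPy sketch of this computation follows. It assumes the coregistered patches have already been cut out and stacked into arrays of shape (n_patches, 25, 25), and it reads "subtracted the corresponding means across all patches" as subtracting a per-pixel mean; the function name `correlation_map` is hypothetical, and patch extraction is not shown.

```python
import numpy as np


def correlation_map(src_patches, dst_patches, center=(12, 12)):
    """Correlation between the value at `center` in each source patch and
    every pixel of the corresponding destination patch.

    src_patches, dst_patches: arrays of shape (n_patches, 25, 25) cut from the
    same locations (e.g. intensity and range). `center` is 0-based, so
    (12, 12) is pixel (13, 13) in the 1-based convention of Figure 1.1.
    """
    src = np.asarray(src_patches, dtype=float)
    dst = np.asarray(dst_patches, dtype=float)
    # Assumption: subtract the per-pixel mean taken across all patches.
    src = src - src.mean(axis=0)
    dst = dst - dst.mean(axis=0)
    c = src[:, center[0], center[1]]                # center value of each patch
    cov = np.einsum("n,nij->ij", c, dst) / len(c)   # covariance with every pixel
    std_c = np.sqrt(np.mean(c ** 2))
    std_d = np.sqrt(np.mean(dst ** 2, axis=0))
    return cov / (std_c * std_d)
```

Passing intensity patches as both arguments gives a map of the kind shown in Figure 1.1a, range/range corresponds to Figure 1.1b, and intensity/range to Figure 1.1c.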

One significant source of variance between images is the intensity of the light source illuminating the scene. Differences in lighting intensity change the contrast of each image patch, which is equivalent to multiplying the patch by a constant. In order to compute statistics that are invariant to lighting intensity, previous studies of the statistics of natural images (without range data) focus on the logarithm of the light intensity values rather than on the intensity itself (Field, 1994; van Hateren and van der Schaaf, 1998). Zero-sum linear filters are then insensitive to changes in image contrast. Likewise, we take the logarithm of the range data. As explained by Huang et al. (2000), a large object and a small object of the same shape will appear identical to the eye when the large object is positioned appropriately far away and the small object is close. However, the raw range measurements of the large, distant object will differ from those of the small object by a constant multiplicative factor. In the log range data, the two objects differ only by an additive constant, so a zero-sum linear filter will respond identically to the two objects.
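The invariance argument can be checked numerically. The sketch below uses a made-up 1D profile of range values and the same profile scaled by 5 (a larger object placed farther away); the helper name and the kernel are illustrative choices, not details of the original study. A kernel whose taps sum to zero gives identical responses on the log data, whereas on the raw data the responses differ by the scale factor.

```python
import numpy as np


def zero_sum_response(values, kernel):
    """Filter log(values) with a kernel whose taps sum to zero."""
    kernel = np.asarray(kernel, dtype=float)
    if abs(kernel.sum()) > 1e-12:
        raise ValueError("kernel must be zero-sum")
    return np.convolve(np.log(values), kernel, mode="valid")


near = np.array([2.0, 2.1, 2.3, 2.2, 2.0, 1.9])  # range profile in meters (made up)
far = 5.0 * near                                 # same shape, 5x larger and 5x farther
kernel = [1.0, -2.0, 1.0]                        # second-difference filter, sums to 0

log_same = np.allclose(zero_sum_response(near, kernel), zero_sum_response(far, kernel))
raw_same = np.allclose(np.convolve(near, kernel, "valid"), np.convolve(far, kernel, "valid"))
print(log_same, raw_same)  # True False
```

The same reasoning covers intensity: a brighter light source multiplies all intensities by a constant, which the logarithm turns into an additive offset that zero-sum filters ignore.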

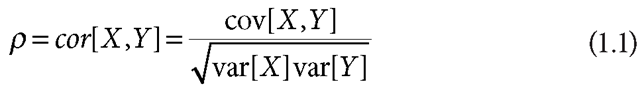

Figure 1.1 shows three illustrative correlation plots. Figure 1.1a shows the correlation between the intensity at the center pixel (13,13) and all of the pixels of the intensity patch. Figure 1.1b shows the correlation between the range at pixel (13,13) and the pixels of the range patch. We observe that neighboring range pixels are much more highly correlated with one another than neighboring luminance pixels. This suggests that range images concentrate proportionally more of their power in low spatial frequencies than luminance images do, and that the spatial Fourier spectra of range images drop off more quickly than those of luminance images, which are known to have roughly 1/f spatial Fourier amplitude spectra (Ruderman and Bialek, 1994). This finding is reasonable: factors that cause high-frequency variation in range images, such as occlusion contours or surface texture, tend also to cause variation in the luminance image, but much of the high-frequency variation found in luminance images, such as shadows and surface markings, does not appear in range images.

FIGURE 1.1 (a) Correlation between intensity at central pixel (13,13) and all of the pixels of the intensity patch. Note that pixel (1,1) is regarded as the upper-left corner of the patch. (b) Correlation between range at pixel (13,13) and the pixels of the range patch. (c) Correlation between intensity at pixel (13,13) and the pixels of the range patch. For example, correlation between intensity at central pixel (13,13) and lower-right pixel (25,25) was -0.210.

These correlations are related to the relative degree of smoothness characteristic of natural images versus natural range images. Specifically, natural range images are in a sense smoother than natural images. Accurate modeling of these statistical properties of natural images and range images is essential for robust computer vision algorithms and for perceptual inference in general. Smoothness properties in particular are ubiquitous in modern computer vision techniques for applications such as image denoising and inpainting (Roth and Black, 2005), image-based rendering (Woodford et al., 2006), shape from stereo (Scharstein and Szeliski, 2002), shape from shading (Potetz, 2007), and others.

Figure 1.1c shows correlation between intensity at pixel (13,13) and the pixels of the range patch. There are two important effects here. The first is a general vertical tilt in the correlation plot, showing that luminance values are more negatively correlated with depth at pixels lower within the patch. This result is due to the fact that the scenes in our database were lit from above. Because of this, surfaces facing upward were generally brighter than surfaces facing downward; conversely, brighter surfaces were more likely to be facing upward than darker surfaces. Thus, when a given pixel is bright, the distance to that pixel is generally less than the distance to pixels slightly lower within the image. This explains the increasingly negative correlations between the intensity at pixel (13,13) and the depth at pixels lower within the range image patch.

What is more surprising in Figure 1.1c is that the correlation between depth and intensity is significantly negative. Specifically, the correlation between the intensity and the depth at a given pixel is roughly -0.20. In other words, brighter pixels tend to be closer to the observer. Historically, physics-based approaches to shape from shading have generally concluded that shading cues offer only relative depth information. Our findings show there is also an absolute depth cue available from image intensity data that could help to more accurately infer depth from 2D images.
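For concreteness, here is one way the same-pixel correlation could be measured, pooling all pixels of one or more coregistered image/range pairs. Taking logarithms follows the convention described earlier for log intensity and log range; treating nonpositive values as missing data is an assumption, as is the helper name `da_vinci_correlation`. A negative result means brighter pixels tend to be nearer.

```python
import numpy as np


def da_vinci_correlation(intensities, ranges, use_log=True):
    """Pearson correlation between intensity and range at the same pixel,
    pooled over every valid pixel of a list of coregistered image pairs."""
    i = np.concatenate([np.ravel(a) for a in intensities]).astype(float)
    z = np.concatenate([np.ravel(a) for a in ranges]).astype(float)
    valid = (i > 0) & (z > 0)  # assumption: nonpositive values are missing data
    i, z = i[valid], z[valid]
    if use_log:
        i, z = np.log(i), np.log(z)
    return np.corrcoef(i, z)[0, 1]
```

Calling the function separately on subsets of a database (for example, rural and urban scenes) gives one pooled correlation per subset.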

This empirical finding regarding natural 3D scenes may be related to an analogous psychophysical observation that, all other things being equal, brighter stimuli are perceived as being closer to the observer. This psychophysical phenomenon was noted as far back as Leonardo da Vinci, who stated, “among bodies equal in size and distance, that which shines the more brightly seems to the eye nearer” (MacCurdy, 1938). Hence, we refer to our empirical correlation as the da Vinci correlation. Artists sometimes make use of this cue to help create compelling illusions of depth (Sheffield et al., 2000; Wallschlaeger and Busic-Snyder, 1992).

In the psychology literature, this effect is known as relative brightness (Myers, 1995). Numerous explanations have been offered for why such a perceptual bias exists. One common explanation is that light coming from distant objects is more likely to be absorbed by the atmosphere (Cutting and Vishton, 1995). However, in most conditions, as in outdoor sunlit scenes, the atmosphere tends to scatter sunlight directly toward our eyes, making more distant objects appear brighter under hazy conditions (Nayar and Narasimhan, 1999). Furthermore, our database was acquired under sunny, clear conditions, at distances too short to produce significant atmospheric effects (maximum distances were roughly 200 m). Other, purely psychological explanations have also been advanced (Taylor and Sumner, 1945). Although these might contribute to our perceptual bias, they cannot account for the correlation observed in real scenes.

By examining which images exhibited the da Vinci correlation most strongly, we concluded that the correlation is caused primarily by shadows within the environment (Potetz and Lee, 2003). For example, one category of images in which the correlation between nearness and brightness was very strong was images of trees and leafy foliage. Because the illumination comes from above and from outside the tree, the outermost leaves of a tree or bush are typically the most illuminated. Deeper into the tree, the foliage is more likely to be shadowed by neighboring leaves, so nearer pixels tend to be brighter.

Figure 1.2 An example color image (top) and range image (bottom) from our database. For purposes of illustration, the range image is shown by displaying depth as shades of gray. Notice that dark regions in the color image tend to lie in shadow, and that shadowed regions are more likely to lie slightly farther from the observer than the brightly lit outer surfaces of the rock pile. This example image from our database had an especially strong correlation between closeness and brightness.

This same effect can cause a correlation between nearness and brightness in any scene with complex surface concavities and interiors. Because the light source is typically positioned outside of these concavities, the interiors of these concavities tend to be in shadow and more dimly lit than the object’s exterior. At the same time, these concavities will be farther away from the viewer than the object’s exterior. Piles of objects (such as Figure 1.2) and folds in clothing and fabric are other good examples of this phenomenon.

To test our hypothesis, we divided the database into urban scenes (such as building facades and statues) and rural scenes (trees and rocky terrain).

The urban scenes contained primarily smooth, man-made surfaces with fewer concavities or crevices, so we predicted that these images would show a reduced correlation between nearness and brightness. On the other hand, if the correlation found in the original dataset were due to atmospheric effects, we would expect it to appear equally in the rural and urban scenes. The average depth in the urban database (32 m) was similar to that of the rural database (40 m), so atmospheric effects should be similar in both datasets. We found that the correlation calculated for the rural dataset increased to -0.32, while that for the urban dataset was considerably weaker, in the neighborhood of -0.06.

In Langer and Zucker (1994), it was observed that for continuous Lambertian surfaces of constant albedo, lit by a hemisphere of diffuse lighting and viewed from above, a tendency for brighter pixels to be closer to the observer can be predicted from the equations for rendering the scene. Intuitively, the reason is that under diffuse lighting conditions, the brightest areas of a surface are those most exposed to the sky. When viewed from above, the peaks of the surface will be closer to the observer. Although these theoretical results have not been extended to more general environments, our results show that in natural scenes these tendencies remain, even when scenes are viewed from the side, under bright light from a single direction, and even when that lighting direction is oblique to the viewer. In spite of these differences, both phenomena seem related to the observation that concave areas are more likely to be in shadow. The fact that all of our images were taken under cloudless, sunny conditions and with oblique lighting from above suggests that this cue may be more important than first realized.

It is interesting to note that the correlation between nearness and brightness in natural scenes depends on several complex properties of image formation. Complex 3D surfaces with crevices and concavities must be present, and cast shadows must be present to fill these concavities. Additionally, we expect that without diffuse lighting and lighting interreflections (light reflecting off several surfaces before reaching the eye), the stark lighting of a single point light source would greatly diminish the effect (Langer and Zucker, 1994). Cast shadows, complex 3D surfaces, diffuse lighting, and lighting interreflections are image formation phenomena that are traditionally ignored by methods of depth inference that attempt to invert physical models of image formation, because the mathematical models of these phenomena are too cumbersome to invert. However, taken together, these image formation behaviors result in the simplest possible relationship between shape and appearance: an absolute correlation between nearness and brightness. This finding illustrates the necessity of continued exploration of the statistics of natural 3D scenes.

Characterizing the Linear Statistics of Natural 3D Scenes

In the previous section, we explained the correlation between the intensity of a pixel and its nearness. We now expand this analysis to include the correlation between the intensity of a pixel and the nearness of other pixels in the image. The set of all such correlations forms the cross-correlation between depth and intensity. The cross-correlation is an important statistical tool: as we explain later, if the cross-correlation between a particular image and its range image were known completely, then given the image, we could use simple linear regression techniques to infer 3D shape perfectly. Even though perfect estimation of the cross-correlation from a single image is impossible, we demonstrate that the correlational structure of a single scene follows several robust statistical trends. These trends allow us to approximate the full cross-correlation of a scene using only three parameters, and these parameters can be measured even from very sparse shape and intensity information. Approximating the cross-correlation in this way allows us to achieve a novel form of statistically driven depth inference that can be used in conjunction with other depth cues, such as stereo.

Given an image i(x, y) with range image z(x, y), the cross-correlation for that particular scene is given by

$$(i \star z)(x, y) = \sum_{x', y'} i(x', y')\, z(x' + x,\, y' + y) \qquad (1.2)$$

It is helpful to consider the cross-correlation between intensity and depth in the Fourier domain. If we use I(u, v) and Z(u, v) to denote the Fourier transforms of i(x, y) and z(x, y), respectively, then the Fourier transform of the cross-correlation i ⋆ z is Z(u, v)I*(u, v), where * denotes complex conjugation. ZI* is known as the cross-spectrum of i and z. Note that ZI* has both real and imaginary parts. Also note that in this section, no logarithm or other transformation was applied to the intensity or range data (measured in meters). This allows us to evaluate ZI* in the context of the Lambertian model assumptions, as we demonstrate later.
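The relationship between the cross-correlation and the cross-spectrum can be verified with a discrete, periodic (circular) version of the cross-correlation. Circular boundaries are an assumption made so that the identity holds exactly for the FFT; real image patches would normally be windowed or padded. Both function names are illustrative, and arrays are indexed (row, column), that is, (y, x).

```python
import numpy as np


def cross_correlation(i, z):
    """Circular cross-correlation c(dy, dx) = sum_{y,x} i(y, x) z(y + dy, x + dx)."""
    c = np.empty(i.shape)
    for dy in range(i.shape[0]):
        for dx in range(i.shape[1]):
            # np.roll with negative shifts places z(y + dy, x + dx) at position (y, x).
            c[dy, dx] = np.sum(i * np.roll(z, shift=(-dy, -dx), axis=(0, 1)))
    return c


def cross_spectrum(i, z):
    """Z I*: the 2D DFT of the circular cross-correlation above (i and z real)."""
    return np.fft.fft2(z) * np.conj(np.fft.fft2(i))
```

The double loop is only there to make the definition explicit; in practice `cross_spectrum` (two FFTs and a product) is the efficient route.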

If the cross-spectrum is known for a given image, and the image power spectrum II* is sufficiently bounded away from zero, then the 3D shape could be estimated from a single image using linear regression: Z = I(ZI*/II*). In this section, we demonstrate that given only three parameters, a close approximation to ZI* can be constructed. Roughly speaking, those three parameters are the strength of the nearness/brightness correlation in the scene, the prevalence of flat shaded surfaces in the scene, and the dominant direction of illumination in the scene. This model can be used to improve depth inference in a variety of situations.
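A minimal sketch of this regression, again using circular FFTs: the kernel ZI*/II* is formed frequency by frequency, and multiplying I by it returns Z. Setting the kernel to zero wherever II* is numerically zero is an assumption added for safety; the function names are hypothetical.

```python
import numpy as np


def regression_kernel(i, z, eps=1e-12):
    """K = Z I* / (I I*) per frequency; bins where I I* is ~0 get K = 0."""
    I = np.fft.fft2(i)
    Z = np.fft.fft2(z)
    power = (I * np.conj(I)).real
    K = np.zeros_like(I)
    nz = power > eps
    K[nz] = (Z * np.conj(I))[nz] / power[nz]
    return K


def apply_kernel(i, K):
    """Linear depth estimate Z_hat = I K, returned in the spatial domain."""
    return np.fft.ifft2(np.fft.fft2(i) * K).real
```

Because the kernel here is measured from the very depth map being reconstructed, the reconstruction is trivially exact; the practical question this section addresses is how to approximate the kernel without already knowing Z.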

FIGURE 1.3 (a) The log-log polar plot of |real[ZI*(r, θ)]| for a scene from our database. (b) B_K(θ) for the same scene. real[B_K(θ)] is drawn in black and imag[B_K(θ)] in gray. This plot is typical of most scenes in our database. As predicted by Equation 1.5, imag[B_K(θ)] reaches its minimum at the illumination direction (in this case, to the extreme left, almost 180°). Also typical is that real[B_K(θ)] is uniformly negative, most likely because of cast shadows in object concavities.

Figure 1.3a shows a log-log polar plot of |real[ZI*(r, θ)]| from one image in our database. The general shape of this cross-spectrum appears to closely follow a power law. Specifically, we found that ZI* can be reasonably modeled by B(θ)/r^α, where r is spatial frequency in polar coordinates and B(θ) is a function that depends only on the polar angle θ, with one curve for the real part and one for the imaginary part. We tested this claim by dividing the Fourier plane into four 45° octants (vertical, forward diagonal, horizontal, and backward diagonal) and measuring the drop-off rate in each octant separately. For each octant, we averaged over the octant's included orientations and fit the result to a power law. The resulting values of α (averaged over all 28 images) are listed in Table 1.1.

For each octant, the correlation coefficient between the power-law fit and the actual spectrum ranged from 0.91 to 0.99, demonstrating that each octant is well fit by a power law. (Note that averaging over orientation smooths out some fine structure in each spectrum.) Furthermore, α varies little across orientations, showing that our model fits ZI* closely.
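The octant analysis can be sketched as follows. The function takes any spectrum laid out like `np.fft.fft2` output (for example |real[ZI*]|), keeps the frequencies within ±22.5° of a chosen orientation, averages the log magnitude within bins of log radius, and fits a line in log-log coordinates whose slope is −α. Averaging log values rather than raw values, the bin count, and the function name are our assumptions, not details reported for the study.

```python
import numpy as np


def octant_exponent(spectrum, center_deg, half_width_deg=22.5, n_bins=20):
    """Fit |spectrum| ~ C / r**alpha inside one 45-degree orientation wedge.

    spectrum: 2D array laid out like np.fft.fft2 output.
    center_deg: wedge orientation in degrees (0 = horizontal frequency axis u).
    Returns the drop-off rate alpha.
    """
    ny, nx = spectrum.shape
    v, u = np.meshgrid(np.fft.fftfreq(ny), np.fft.fftfreq(nx), indexing="ij")
    r = np.hypot(u, v)
    # Magnitudes of spectra of real data are symmetric under (u, v) -> (-u, -v),
    # so orientations can be folded into [0, 180).
    theta = np.degrees(np.arctan2(v, u)) % 180.0
    dtheta = np.abs((theta - center_deg + 90.0) % 180.0 - 90.0)
    mask = (dtheta <= half_width_deg) & (r > 0)

    log_r = np.log(r[mask])
    log_val = np.log(np.maximum(np.abs(spectrum[mask]), np.finfo(float).tiny))
    # Average over the orientations in the wedge by binning in log radius.
    edges = np.linspace(log_r.min(), log_r.max(), n_bins + 1)
    idx = np.clip(np.digitize(log_r, edges) - 1, 0, n_bins - 1)
    xs = [log_r[idx == b].mean() for b in range(n_bins) if np.any(idx == b)]
    ys = [log_val[idx == b].mean() for b in range(n_bins) if np.any(idx == b)]
    slope, _intercept = np.polyfit(xs, ys, 1)
    return -slope
```

Calling it with orientations 0°, 45°, 90°, and 135° yields one α per octant, which is the kind of per-orientation value reported in the rows of Table 1.1.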

TABLE 1.1 Power-Law Drop-Off Rates α for Each Power Spectrum Component

| Orientation | II* | real[ZI*] | imag[ZI*] | ZZ* |
|---|---|---|---|---|
| Horizontal | 2.47 ± 0.10 | 3.61 ± 0.18 | 3.84 ± 0.19 | 2.84 ± 0.11 |
| Forward diagonal | 2.61 ± 0.11 | 3.67 ± 0.17 | 3.95 ± 0.17 | 2.92 ± 0.11 |
| Vertical | 2.76 ± 0.11 | 3.62 ± 0.15 | 3.61 ± 0.24 | 2.89 ± 0.11 |
| Backward diagonal | 2.56 ± 0.09 | 3.69 ± 0.17 | 3.84 ± 0.23 | 2.86 ± 0.10 |
| Mean | 2.60 ± 0.10 | 3.65 ± 0.14 | 3.87 ± 0.16 | 2.88 ± 0.10 |

Note from the table that the image power spectrum I(u, v)I*(u, v) also obeys a power law. The observation that the power spectrum of natural images obeys a power law is one of the most robust and important statistical trends of natural images (Ruderman and Bialek, 1994), and it stems from the scale invariance of natural images. Specifically, an image that has been scaled up, such as i(ax, ay), has statistical properties similar to those of the unscaled image. This statistical property predicts that II*(r, θ) ∝ 1/r². The power-law structure of the power spectrum II* has proven highly useful in image processing and computer vision and has led to advances in image compression, image denoising, and several other applications. Similarly, the discovery that ZI* also obeys a power law may prove highly useful for the inference of 3D shape.

As mentioned earlier, knowing the full cross-covariance structure of an image/range-image pair would allow us to reconstruct the range image using linear regression via the equation Z = I(ZI*/II*). Thus, we are especially interested in estimating the regression kernel K = ZI*/II*. IK is a perfect reconstruction of the original range image (as long as II*(u, v) ≠ 0). The findings shown in Table 1.1 predict that K also obeys a power law. Subtracting α_II* from α_real[ZI*] and α_imag[ZI*], we find that real[K] drops off as 1/r^1.1 and imag[K] drops off as 1/r^1.2. Thus, to a rough approximation, K(r, θ) = B_K(θ)/r.

Now that we know that K can be fit (roughly) by a 1/r power law, we can offer some insight into why K tends to approximate this general form. Note that the 1/r drop-off of K cannot be predicted by scale invariance. If images and range images were jointly scale invariant, then II* and ZI* would both obey 1/r² power laws, and K would have a roughly uniform magnitude. Thus, even though natural images appear to be statistically scale invariant, the finding that K ≈ B_K(θ)/r disproves scale invariance for natural scenes (meaning images and range images taken together).

The 1/r drop-off in the imaginary part of K can be explained by the linear Lambertian model of shading under oblique lighting conditions. Recall that Lambertian shading predicts that pixel intensity is given by

$$i(x, y) = \mathbf{n}(x, y) \cdot \mathbf{L} \qquad (1.3)$$

where n(x, y) is the unit surface normal at point (x, y), and L is the unit lighting direction. The linear Lambertian model is obtained by keeping only the linear terms of the Taylor series of the Lambertian reflectance equation. Under this model, if constant albedo and illumination conditions are assumed and lighting is from above, then i(x, y) = a dz/dy, where a is some constant. In the Fourier domain, I(u, v) = a·2πjv·Z(u, v), where j = √−1. Thus, for v ≠ 0, we have that

$$ZI^*(u, v) = \frac{I(u, v)\,I^*(u, v)}{2\pi j a v} = \frac{-j\,II^*(u, v)}{2\pi a v} \qquad (1.4)$$

$$K(u, v) = \frac{ZI^*(u, v)}{II^*(u, v)} = \frac{1}{2\pi j a v} = \frac{-j}{2\pi a v} \qquad (1.5)$$

Thus, Lambertian shading predicts that imag[ZI*] should obey a power law, with α_imag[ZI*] one greater than α_II*, which is consistent with the findings in Table 1.1.
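These predictions can be checked numerically under the same idealized assumptions. The sketch renders a linear Lambertian image i = a dz/dy from an arbitrary periodic depth map using a spectral derivative (an odd image size avoids the Nyquist bin, so the rendered image is exactly real), then confirms that ZI* has no real part and that, because I = a·2πjv·Z, the kernel K = ZI*/II* equals 1/(2πjav) wherever v ≠ 0. The value of a, the random depth map, and the helper name are illustrative assumptions.

```python
import numpy as np


def lambertian_image(z, a):
    """Linear Lambertian rendering i = a * dz/dy via a spectral derivative
    (periodic boundaries; rows are the y axis)."""
    v = np.fft.fftfreq(z.shape[0])[:, None]   # vertical frequency, cycles per pixel
    return np.fft.ifft2(a * 2j * np.pi * v * np.fft.fft2(z)).real


n, a = 33, 0.7
z = np.random.default_rng(1).normal(size=(n, n))  # arbitrary periodic depth map
i = lambertian_image(z, a)

Z, I = np.fft.fft2(z), np.fft.fft2(i)
v = np.broadcast_to(np.fft.fftfreq(n)[:, None], (n, n))
ZI = Z * np.conj(I)                                # cross-spectrum Z I*
ok = v != 0                                        # K is undefined where I vanishes (v = 0)
K = ZI[ok] / (I * np.conj(I))[ok]

real_part_vanishes = np.abs(ZI.real).max() < 1e-8 * np.abs(ZI).max()
kernel_matches = np.allclose(K, 1.0 / (2j * np.pi * a * v[ok]))
print(real_part_vanishes, kernel_matches)  # True True
```

Under this idealized model the real part of ZI* is zero by construction, which is exactly what real scenes contradict, as the next paragraph discusses.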

Equation (1.4) predicts that only the imaginary part of ZI* should obey a power law and that the real part of ZI* should be zero. Yet, in our database, the real part of ZI* was typically stronger than the imaginary part. The real part of ZI* is the Fourier transform of the even-symmetric part of the cross-correlation function, and it includes the direct correlation cov[i, z], corresponding to the da Vinci correlation between intensity and range at the same pixel discussed earlier. The form of real[ZI*] is related to the rate at which the da Vinci correlation drops off over space. One explanation for the 1/r^3 drop-off rate of real[ZI*] is the observation that deeper crevices and concavities should be more shadowed, and therefore darker, than shallow concavities. Specifically, the 1/r^3 drop-off rate of real[ZI*] predicts that the correlation between nearness and brightness should be inversely proportional to the aperture width over which it is measured. This matches our intuition that, given two surface concavities of equal depth, the one with the narrower aperture should be darker.

Figure 1.4 shows examples of B_K in urban and rural scenes. These plots illustrate that the real part of B_K is strongest (most negative) for rural scenes with abundant concavities and shadows. They also illustrate how the imaginary part of K follows Equation (1.5), and how imag[B_K(θ)] closely follows a sinusoid with phase determined by the dominant illumination angle. Thus, B_K (and therefore also K and ZI*) can be approximated using only three parameters: the strength of the da Vinci correlation (which is related to the extent of complex 3D surfaces and shadowing present in the scene), the angle of the dominant lighting direction, and the strength of the Lambertian relationship in the scene (i.e., the coefficient 1/a in Equation (1.5), which is related to the prominence of smooth Lambertian surfaces in the scene). We can use this approximation to improve depth inference.
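One way to turn the three parameters into a depth estimate is sketched below. The functional form is our assumption, chosen only to match the qualitative description above: real[B_K] is a negative constant set by the da Vinci strength, and imag[B_K] is a cosine that reaches its minimum at the lighting angle, scaled by the Lambertian strength. The kernel K = B_K(θ)/r is then applied to the image spectrum, with K set to zero at r = 0 because the mean depth is not recoverable this way. The parameter names, the sign and angle conventions, and the functions are hypothetical, and estimating the three parameters for a scene is not shown.

```python
import numpy as np


def three_parameter_kernel(shape, davinci, lambertian, light_angle):
    """Hypothetical parametric kernel K(r, theta) = B_K(theta) / r.

    Assumed form (illustrative, not a fitted model):
        real[B_K(theta)] = -davinci                               (uniformly negative)
        imag[B_K(theta)] = -lambertian * cos(theta - light_angle) (minimum at light_angle)
    Angles are in radians, measured from the horizontal frequency axis.
    """
    ny, nx = shape
    v, u = np.meshgrid(np.fft.fftfreq(ny), np.fft.fftfreq(nx), indexing="ij")
    r = np.hypot(u, v)
    theta = np.arctan2(v, u)
    b_k = -davinci - 1j * lambertian * np.cos(theta - light_angle)
    K = np.zeros(shape, dtype=complex)
    nonzero = r > 0
    K[nonzero] = b_k[nonzero] / r[nonzero]  # K = 0 at r = 0: mean depth left undetermined
    return K


def infer_depth(image, K):
    """Linear estimate Z_hat = I K (periodic boundaries), up to an additive constant."""
    # b_k(theta + pi) = conj(b_k(theta)), so K is Hermitian and the result is real
    # up to rounding on odd-sized grids; .real discards that residue.
    return np.fft.ifft2(np.fft.fft2(image) * K).real
```

For example, `infer_depth(image, three_parameter_kernel(image.shape, 0.5, 1.0, np.pi / 2))` returns a zero-mean relative depth map; as the text suggests, such an estimate would be most useful in combination with other depth cues such as stereo.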

FIGURE 1.4 Natural and urban scenes and their B_K(θ). Images with surface concavities and cast shadows have significantly negative real[B_K(θ)] (black line), and images with prominent flat shaded surfaces have strong imag[B_K(θ)] (gray line).

![Figure 1.3](http://what-when-how.com/wp-content/uploads/2012/07/tmpf10322_thumb_thumb.png)

![Figure 1.4](http://what-when-how.com/wp-content/uploads/2012/07/tmpf10325_thumb.png)