Biomedical Engineering Reference

two last calculated criterion values $E$. As a fallback strategy, the previous step size is divided by $\mu_f$, as above.

3. Conjugate gradient. This algorithm [59] chooses its descent directions to be mutually conjugate so that moving along one does not spoil the result of previous optimizations. To work well, the step size $\mu$ has to be chosen optimally. Therefore, at each step, we need to run another internal one-dimensional minimization routine which finds the optimal $\mu$; this makes it the slowest algorithm in our setting.

4. Marquardt-Levenberg. The most effective algorithm in the sense of the number of iterations was a regularized Newton method inspired by the Marquardt-Levenberg algorithm (ML), as in [98]. We shall examine various approximations of the Hessian matrix $\nabla_c^2 E$, see Section 9.4.8.1.
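The conjugate gradient scheme described in item 3 can be illustrated with a minimal sketch. It assumes the Fletcher-Reeves update for the direction and a golden-section search as the internal one-dimensional routine that finds $\mu$; the text does not say which variants were actually used. The names `golden_section`, `conjugate_gradient`, `E_demo`, and `grad_demo` are hypothetical, and the toy quadratic criterion stands in for the registration criterion $E$.

```python
import math


def golden_section(phi, lo, hi, n_steps=80):
    """Approximate the minimizer of a unimodal 1-D function phi on [lo, hi]."""
    r = (math.sqrt(5.0) - 1.0) / 2.0  # inverse golden ratio, about 0.618
    a, b = lo, hi
    for _ in range(n_steps):
        x1 = b - r * (b - a)
        x2 = a + r * (b - a)
        if phi(x1) < phi(x2):
            b = x2  # the minimum lies in [a, x2]
        else:
            a = x1  # the minimum lies in [x1, b]
    return 0.5 * (a + b)


def conjugate_gradient(E, grad, c0, n_iter=50, gtol=1e-6):
    """Minimize E from c0 using Fletcher-Reeves conjugate directions (assumed variant)."""
    c = list(c0)
    g = grad(c)
    d = [-gi for gi in g]  # the first direction is plain steepest descent
    for _ in range(n_iter):
        gg = sum(gi * gi for gi in g)
        if math.sqrt(gg) < gtol:
            break

        def phi(mu):
            # Criterion restricted to the current search line c + mu * d.
            return E([ci + mu * di for ci, di in zip(c, d)])

        # Grow the bracket until the criterion stops decreasing
        # (assumes E is bounded below along d).
        hi = 1.0
        while phi(2.0 * hi) < phi(hi):
            hi *= 2.0
        mu = golden_section(phi, 0.0, 2.0 * hi)  # inner 1-D minimization

        c = [ci + mu * di for ci, di in zip(c, d)]
        g_new = grad(c)
        beta = sum(gi * gi for gi in g_new) / gg  # Fletcher-Reeves coefficient
        d = [-gn + beta * di for gn, di in zip(g_new, d)]
        g = g_new
    return c


# Toy criterion (an assumption for illustration): minimum at c = (1, -2).
def E_demo(c):
    return (c[0] - 1.0) ** 2 + 10.0 * (c[1] + 2.0) ** 2


def grad_demo(c):
    return [2.0 * (c[0] - 1.0), 20.0 * (c[1] + 2.0)]


c_min = conjugate_gradient(E_demo, grad_demo, [0.0, 0.0])
```

Each outer iteration calls `E` many times inside the line search, which is consistent with the remark that the inner minimization makes this the slowest option when evaluating the criterion is expensive.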

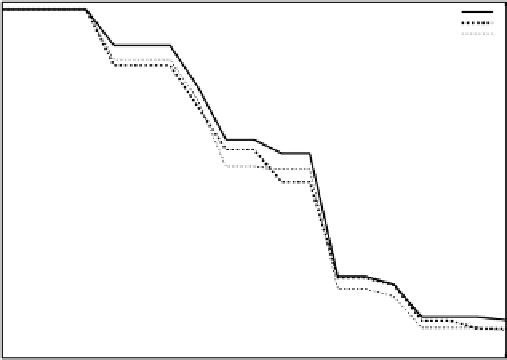
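The regularized Newton step of item 4 can be sketched as follows, for both the full Hessian (MLH) and its diagonal only (MLdH). This is an illustration under stated assumptions, not the authors' implementation: the regularization is taken to be an additive $\lambda I$ term, and $\lambda$ is divided or multiplied by 10 after a successful or failed step. All names (`solve`, `ml_minimize`, `E_demo`, `grad_demo`, `hess_demo`) and the toy quadratic criterion are hypothetical.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]  # augmented matrix
    for i in range(n):
        p = max(range(i, n), key=lambda r: abs(M[r][i]))
        M[i], M[p] = M[p], M[i]
        for r in range(i + 1, n):
            f = M[r][i] / M[i][i]
            for k in range(i, n + 1):
                M[r][k] -= f * M[i][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def ml_minimize(E, grad, hess, c0, diagonal_only=False,
                lam=1.0, factor=10.0, n_iter=100):
    """Regularized Newton iteration: solve (H + lam * I) step = -gradient."""
    c = list(c0)
    e = E(c)
    n = len(c)
    for _ in range(n_iter):
        g = grad(c)
        H = hess(c)
        if diagonal_only:
            # MLdH: keep only the diagonal of the Hessian.
            H = [[H[i][j] if i == j else 0.0 for j in range(n)]
                 for i in range(n)]
        A = [[H[i][j] + (lam if i == j else 0.0) for j in range(n)]
             for i in range(n)]
        step = solve(A, [-gi for gi in g])
        trial = [ci + si for ci, si in zip(c, step)]
        e_trial = E(trial)
        if e_trial < e:
            c, e = trial, e_trial
            lam /= factor  # success: move toward a pure Newton step
        else:
            lam *= factor  # failure: move toward a short gradient step
    return c


# Toy criterion (an assumption for illustration): minimum at c = (1, -2).
def E_demo(c):
    u, v = c[0] - 1.0, c[1] + 2.0
    return u * u + 10.0 * v * v + u * v


def grad_demo(c):
    u, v = c[0] - 1.0, c[1] + 2.0
    return [2.0 * u + v, 20.0 * v + u]


def hess_demo(c):
    return [[2.0, 1.0], [1.0, 20.0]]


c_full = ml_minimize(E_demo, grad_demo, hess_demo, [0.0, 0.0])
c_diag = ml_minimize(E_demo, grad_demo, hess_demo, [0.0, 0.0],
                     diagonal_only=True)
```

With the diagonal approximation the linear system becomes trivial to solve, at the cost of ignoring the coupling between parameters; on this toy problem both variants reach the same minimum.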

The choice of the best optimizer is always application-dependent. We observe that the behavior of all optimizers is almost identical at the beginning of the optimization process (see Fig. 9.7). The main factor determining the speed

[Figure 9.7 plot: curves labeled MLdH, MLH, and GD; vertical axis ticks from 170 to 280, horizontal axis ticks from 0 to 18.]

Figure 9.7: The evolution of the SSD criterion during the first 18 iterations when registering the Lena image, artificially deformed with 2 × 4 × 4 cubic B-spline coefficients and a maximum displacement of about 30 pixels, without multiresolution. The optimizers used were: Marquardt-Levenberg with full Hessian (MLH), Marquardt-Levenberg with only the diagonal of the Hessian taken into account (MLdH), and gradient descent (GD). The deformation was recovered in all cases with an accuracy between 0.1 and 0.01 pixels (see also section 9.4.10).