Biomedical Engineering Reference

In-Depth Information

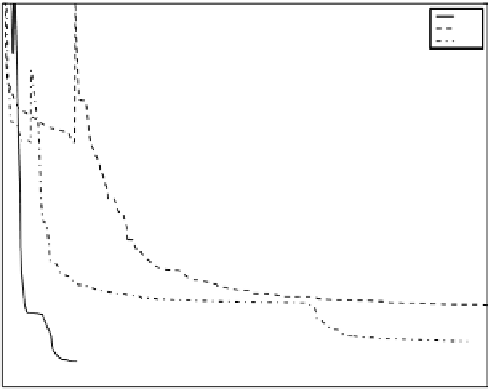

is therefore the cost of a single iteration, which was smallest for the gradient de-

scent (GD) algorithm. Among the GD variants we recommend the quadratic step

size estimation that outperforms the classical feedback adjustment. One addi-

tional pleasant property of the GD algorithm is its tendency to leave uninfluential

coefficients intact, unlike the ML algorithm. Consequently, less regularization is

needed for the GD algorithm. We choose the GD optimizer for most of our image

registration tasks.

When, on the other hand, we work with a small number of parameters, the

criterion is smooth, and high precision is needed, the ML algorithm [36] performs

the best, as its higher cost per iteration is compensated for by a smaller number

of iterations due to the quadratic convergence (Fig. 9.8).

9.4.6.2

Multiresolution

The robustness and efficiency of the algorithm can be significantly improved by

a

multiresolution

approach: The task at hand is first solved at a coarse scale.

5

x 10

5

ML

GD

CG

4.5

4

3.5

3

2.5

2

1.5

1

0.5

0

0

50

100

150

200

250

300

350

400

450

500

time [s]

Figure 9.8:

Comparison of gradient descent (GD), conjugated gradient (CG),

and Marquardt-Levenberg (ML) optimization algorithm performances when reg-

istering SPECT images with control grid of 6

×

6

×

6 knots. The graphs give the

value of the finest-level SSD criterion of all successful (i.e., criterion-decreasing)

iterations as a function of the execution time. The abrupt changes are caused

by transitions between resolution levels.