
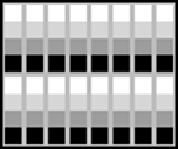

2I × 2J ×K/2 I ×J ×K I/2 ×J/2 × 2K

layer (l− 1) layer l layer (l + 1)

Fig. 4.2.

As the spatial resolution decreases, when going up the hierarchy, the number of

feature arrays increases. Different grayscales represent different features. In the lower layers

few grayscales are available at many locations while in the higher layers many variations of

shading can be accessed at few positions.

to all feature arrays of a layer is required to compute a feature cell, the total number of connections rises by a factor of four when increasing the number of features to 4K, instead of 2K, in layer (l + 1). The choice of 2K features keeps the total number of connections constant from layer to layer and halves the representation size when going up one layer. Hence, in this case the size of the entire pyramid is less than double the size of its lowest layer, since the layer sizes form a geometric series with ratio 1/2, and the total number of connections is linear in the number of layers and in the number of bottom-layer cells.
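
The size and connection arithmetic above can be checked with a small sketch. It assumes, hypothetically, that every cell reads a fixed window × window patch from all feature arrays of the layer below, so a cell's fan-in is proportional to the number of features there; the function name `pyramid_stats`, the window size, and the example dimensions are illustrative choices, not taken from the text.

```python
def pyramid_stats(width, height, features, layers, growth, window=4):
    """Return a list of (cells, connections) pairs, one per layer.

    Each layer halves the spatial resolution and multiplies the number of
    feature arrays by `growth`. Assumption (hypothetical): every cell reads a
    fixed window x window patch from *all* feature arrays of the layer below,
    so its fan-in is window * window * (features in the layer below).
    """
    stats = []
    w, h, k = width, height, features
    k_below = None  # the bottom layer has no layer below it
    for _ in range(layers):
        cells = w * h * k
        conns = 0 if k_below is None else cells * window * window * k_below
        stats.append((cells, conns))
        k_below = k
        w, h, k = w // 2, h // 2, k * growth
    return stats


for growth in (2, 4):
    print(f"growth = {growth}")
    for layer, (cells, conns) in enumerate(pyramid_stats(64, 64, 4, 4, growth)):
        print(f"  layer {layer}: {cells:6d} cells, {conns:9d} connections")
```

With growth = 2, every layer above the bottom receives 524,288 connections and the four layers hold 30,720 cells in total, below twice the 16,384 bottom-layer cells. With growth = 4, the cell count stays at 16,384 per layer while the connection count quadruples from one layer to the next.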

Since the size of the representation decreases with height, not all details of an image can be represented in the higher layers. Thus, there is some incentive to discover image structure that can be used to encode the abstract representations efficiently.

4.1.2 Distributed Representations

The feature cells in the Neural Abstraction Pyramid contain simple processing elements that make a single value, the activity, available to other cells. The activity of a cell represents the strength of the associated feature at a certain position. This resembles the computation by neurons in the brain. Such a massively parallel approach to image processing requires millions of processing elements for high-resolution images. Thus, it is necessary to keep the individual processors simple, so that the costs of simulating them on a serial machine or implementing them in VLSI hardware stay within reasonable bounds. No complex data structures are used in the architecture. Communication between processing elements requires only access to cell activities via weighted links.
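
A single processing element of this kind can be sketched in a few lines: it reads the activities of other cells over weighted links and produces one activity value. The weighted sum follows the text; the logistic transfer function, the bias term, and the name `cell_activity` are assumptions made only for illustration.

```python
import math


def cell_activity(inputs, weights, bias=0.0):
    """Activity of one feature cell: a weighted sum of the activities it
    reads over its links, passed through a transfer function.

    Assumption (hypothetical): the logistic function is used as the transfer
    function; the text only states that cells access activities via weighted
    links.
    """
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input link")
    net = bias + sum(w * a for w, a in zip(weights, inputs))
    return 1.0 / (1.0 + math.exp(-net))


# Three input activities read over three weighted links.
print(cell_activity([0.2, 0.9, 0.0], [1.5, -0.5, 2.0], bias=0.1))
```

The element keeps no state beyond its weights and its output value, which matches the requirement that no complex data structures are needed.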

Figure 4.3 magnifies one layer l of the pyramid. All feature cells that share the same location (i, j) within a layer form a hypercolumn. A hypercolumn describes all aspects of the corresponding image window in a distributed sparse representation. Neighboring hypercolumns define a hyper-neighborhood.
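
A hypercolumn and a hyper-neighborhood can be read out of a layer stored as K feature arrays. The sketch assumes, as a hypothetical convention, that (i, j) means (row, column) and that the hyper-neighborhood is the square of locations at most `radius` steps away, clipped at the border; the function names and the toy layer are illustrative only.

```python
def hypercolumn(layer, i, j):
    """All feature activities at location (i, j) of one layer.

    `layer` is a list of K feature arrays, each a list of rows; (i, j) is
    taken as (row, column), an assumption about the index order.
    """
    return [feature_array[i][j] for feature_array in layer]


def hyper_neighborhood(layer, i, j, radius=1):
    """Hypercolumns at (i, j) and at its neighbors within `radius`.

    Assumption (hypothetical): the neighborhood is the square of locations
    at most `radius` steps away in each direction, clipped at the border.
    """
    height, width = len(layer[0]), len(layer[0][0])
    return {
        (y, x): hypercolumn(layer, y, x)
        for y in range(max(0, i - radius), min(height, i + radius + 1))
        for x in range(max(0, j - radius), min(width, j + radius + 1))
    }


# A toy layer with K = 2 feature arrays on a 3 x 3 grid.
layer = [
    [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6], [0.7, 0.8, 0.9]],  # feature 0
    [[1.0, 0.0, 1.0], [0.0, 1.0, 0.0], [1.0, 0.0, 1.0]],  # feature 1
]
print(hypercolumn(layer, 1, 1))                # [0.5, 1.0]
print(len(hyper_neighborhood(layer, 1, 1)))    # 9
print(len(hyper_neighborhood(layer, 0, 0)))    # 4
```

An interior location has a full 3 × 3 hyper-neighborhood, while a corner location has only four hypercolumns because the square is clipped at the layer border.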

This definition is motivated by the interwoven feature maps present in cortical areas, such as the primary visual cortex (compare Section 2.2). All feature cells that describe the same image location at the same level of abstraction are accessible within close proximity. This facilitates interaction between these cells. Such inter-
