
[Figure 4.3 diagram: layers (l−1), l, and (l+1); feature arrays; a feature cell (i,j) in array k; its hypercolumn and hyper-neighborhoods; lateral, forward, and backward projections.]

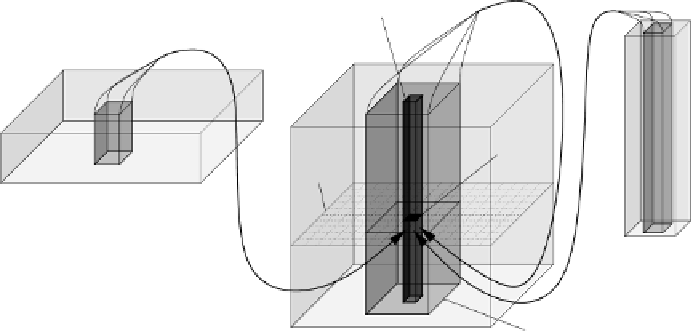

Fig. 4.3. A feature cell with its projections. Such a cell is addressed by its layer l, its feature array number k, and its array position (i,j). Lateral projections originate from the hyper-neighborhood in the same layer. Forward projections come from the hyper-neighborhood of the corresponding position (2i,2j) in the next lower layer (l−1). Backward projections start from the hyper-neighborhood at position (i/2,j/2) in layer (l+1).
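The addressing scheme in the caption can be made concrete with a small coordinate helper. This is a minimal sketch under several assumptions. Layer 0 is taken as the bottom of the pyramid. Each layer has half the resolution of the one below, consistent with the (2i,2j) and (i/2,j/2) mappings, with i/2 read as integer division. A hyper-neighborhood is modeled as a square window of hypothetical radius `radius`, clipped at the array borders. The names `window` and `projection_sources` are illustrative only.

```python
from typing import Dict, List, Tuple

Position = Tuple[int, int]


def window(center: Position, radius: int, height: int, width: int) -> List[Position]:
    """Positions of a square window around `center`, clipped to the array bounds."""
    ci, cj = center
    return [
        (i, j)
        for i in range(max(0, ci - radius), min(height, ci + radius + 1))
        for j in range(max(0, cj - radius), min(width, cj + radius + 1))
    ]


def projection_sources(
    i: int, j: int, layer: int, shapes: List[Tuple[int, int]], radius: int = 1
) -> Dict[str, List[Position]]:
    """Source positions of the lateral, forward, and backward projections of cell (i, j).

    `shapes[l]` is the (height, width) of the feature arrays in layer l; layer 0 is
    the bottom. The same positions apply to every feature array of the source layer.
    """
    h, w = shapes[layer]
    sources: Dict[str, List[Position]] = {
        # Lateral: hyper-neighborhood around (i, j) in the same layer.
        "lateral": window((i, j), radius, h, w),
        "forward": [],
        "backward": [],
    }
    if layer > 0:
        # Forward: hyper-neighborhood around (2i, 2j) in the next lower layer.
        lh, lw = shapes[layer - 1]
        sources["forward"] = window((2 * i, 2 * j), radius, lh, lw)
    if layer + 1 < len(shapes):
        # Backward: hyper-neighborhood around (i/2, j/2) in the next higher layer.
        uh, uw = shapes[layer + 1]
        sources["backward"] = window((i // 2, j // 2), radius, uh, uw)
    return sources


if __name__ == "__main__":
    shapes = [(8, 8), (4, 4), (2, 2)]  # hypothetical three-layer pyramid
    for kind, positions in projection_sources(1, 1, 1, shapes).items():
        print(kind, positions)
```

Take cell (1,1) in layer 1 of this toy pyramid. Its forward window is centered on (2,2) in the 8×8 layer below. Its backward window is centered on (0,0) in the 2×2 layer above, where border clipping leaves only four positions.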

action is not only necessary between neighboring cells within a feature array k, but also across arrays, since the code used is a distributed one.
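Because the code is distributed, a cell's input cannot be gathered from its own feature array alone. The following is a minimal sketch. It assumes a layer stored as nested lists indexed `layer[k][row][col]` and a square window of hypothetical radius `radius`. The hypothetical function `hyper_neighborhood` collects the activities of all K feature arrays around (i,j). The hypothetical `weighted_input` then forms a linear combination of them, so every array contributes to the cell's net input. The linear weighting is an illustrative choice, not the architecture's specified transfer function.

```python
from typing import List

Layer = List[List[List[float]]]  # layer[k][row][col]


def hyper_neighborhood(layer: Layer, i: int, j: int, radius: int = 1) -> List[float]:
    """Activities of all feature arrays in a square window around (i, j).

    Positions outside the array are skipped, so border cells get fewer values.
    """
    height, width = len(layer[0]), len(layer[0][0])
    values = []
    for feature_array in layer:  # iterate over all K arrays, not only one
        for r in range(max(0, i - radius), min(height, i + radius + 1)):
            for c in range(max(0, j - radius), min(width, j + radius + 1)):
                values.append(feature_array[r][c])
    return values


def weighted_input(values: List[float], weights: List[float]) -> float:
    """Net input of a cell as a weighted sum over its hyper-neighborhood."""
    if len(values) != len(weights):
        raise ValueError("one weight per hyper-neighborhood value is required")
    return sum(v * w for v, w in zip(values, weights))


if __name__ == "__main__":
    # Two 3x3 feature arrays (K = 2): array 0 is all ones, array 1 is all twos.
    layer = [[[1.0] * 3 for _ in range(3)], [[2.0] * 3 for _ in range(3)]]
    values = hyper_neighborhood(layer, 1, 1)
    print(len(values), weighted_input(values, [0.5] * len(values)))
```

For the center cell, the window holds 9 positions in each of the 2 arrays, so the cell sees 18 values. A corner cell sees only 8.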

Distributed codes are much more efficient than localized encodings in terms of representational capacity. A binary local 1-out-of-N code can provide at most log₂ N bits of information, whereas a dense binary codeword of the same length can store N bits. The use of sparse codes lowers the storage capacity of a code, but it facilitates decoding and associative completion of patterns [172]. Sparse codes are also energetically efficient, since most spikes are devoted to the most active feature-detecting cells.
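These capacities follow from counting codewords: a code with M equally likely codewords carries at most log₂ M bits. The short Python sketch below compares three codes of length N: the local 1-out-of-N code, a sparse k-out-of-N code with exactly k active units, and a dense binary word. The function names are hypothetical. The k-out-of-N family is an assumed model of sparseness, used only for illustration.

```python
from math import comb, log2


def local_code_bits(n: int) -> float:
    """Information in a 1-out-of-n code: n distinct codewords."""
    return log2(n)


def dense_code_bits(n: int) -> float:
    """Information in an unrestricted binary word of length n: 2**n codewords."""
    return float(n)


def sparse_code_bits(n: int, k: int) -> float:
    """Information in a k-out-of-n code: comb(n, k) codewords."""
    return log2(comb(n, k))


if __name__ == "__main__":
    n = 16
    print(f"local 1-out-of-{n}: {local_code_bits(n):.2f} bits")
    for k in (2, 4, 8):
        print(f"sparse {k}-out-of-{n}: {sparse_code_bits(n, k):.2f} bits")
    print(f"dense, length {n}: {dense_code_bits(n):.2f} bits")
```

For N = 16, the local code carries 4 bits and the dense word 16 bits. Sparse codes lie in between: their capacity grows as k moves toward N/2 but stays below N bits, which is the capacity cost the text refers to.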

One important idea of the Neural Abstraction Pyramid architecture is that each layer maintains a complete image representation in an array of hypercolumns. The degree of abstraction of these representations increases as one ascends the hierarchy. At the bottom of the pyramid, features correspond to local measurements of a signal, the image intensity. Subsymbolic representations, like the responses of edge detectors or the activities of complex feature cells, are present in the middle layers of the network. Moving upward, the feature cells respond to image windows of increasing size, represent features of increasing complexity, and are increasingly invariant to image deformations. At the top of the pyramid, the images are described in terms of very complex features that respond invariantly to large image parts. These representations are almost symbolic, but they are still encoded in a distributed manner.
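The growth of window size toward the top of the pyramid can be estimated with a little bookkeeping. This sketch makes three assumptions. Resolution halves from layer to layer, as the (2i,2j) mapping of Fig. 4.3 implies. Forward projections read a square window of hypothetical radius `radius` in the layer below. Purely for illustration, the number of feature arrays doubles per layer. The sketch follows only the forward path and ignores border effects as well as lateral and backward projections.

```python
from typing import Dict, List


def pyramid_layout(
    height: int, width: int, num_layers: int, base_features: int = 4, radius: int = 1
) -> List[Dict[str, object]]:
    """Per-layer array size, feature count, and image window seen by one cell.

    Assumptions: layer 0 has the input resolution and each cell there sees one pixel;
    every higher layer halves the resolution and (hypothetically) doubles the features.
    """
    layout = []
    window = 1  # image window width (pixels) seen by a cell in the current layer
    stride = 1  # pixel distance between neighboring cells in the current layer
    for l in range(num_layers):
        if l > 0:
            # A cell reads 2*radius + 1 cells of layer l-1, spaced `stride` pixels apart.
            window += 2 * radius * stride
            stride *= 2
        layout.append(
            {
                "layer": l,
                "shape": (height >> l, width >> l),
                "features": base_features << l,
                "window": window,
            }
        )
    return layout


if __name__ == "__main__":
    for entry in pyramid_layout(64, 64, 4):
        print(entry)
```

With radius 1, the window seen by a cell grows as 2^(l+1) − 1 pixels (1, 3, 7, 15, ...), so each step up roughly doubles it. Over the same four layers, the array shrinks from 64×64 to 8×8.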

This sequence of more and more abstract representations resembles the abstraction hierarchy found along the ventral visual pathway. Every step changes the nature of the representation only slightly, but all steps follow the same direction. They
