
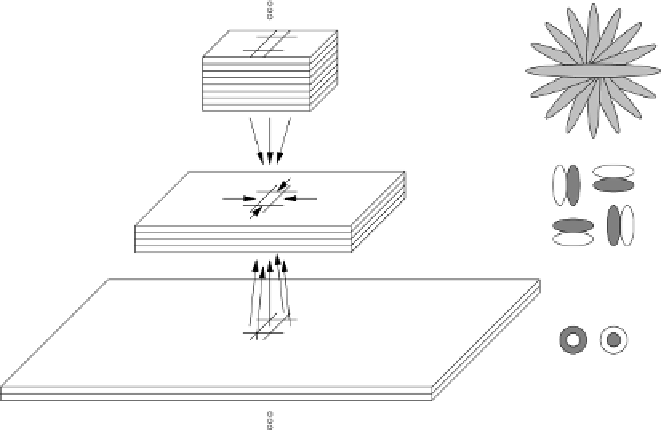

more abstract

Backward projections

Lateral projections

Forward projections

less abstract

Fig. 4.1. Neural Abstraction Pyramid architecture. The network consists of several layers. Each layer is composed of multiple feature arrays that contain discrete cells. When going up the hierarchy, spatial resolution decreases while the number of features increases. The network has a local recurrent connection structure. It produces a sequence of increasingly abstract image representations. At the bottom of the pyramid, signal-like representations are present, while the representations at the top are almost symbolic.

decreasing resolution has been used before, e.g., in image pyramids and wavelet representations (see Section 3.1.1). In these architectures, the number of feature arrays is constant across all layers. Because every array shrinks as resolution decreases, the total number of cells, and hence the representational power of the higher layers, is very limited. In the Neural Abstraction Pyramid, this effect is avoided by increasing the number of feature arrays when going up the hierarchy.
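To make the limitation of a constant number of feature arrays concrete, here is a minimal sketch of a classic image pyramid. It assumes a simple 2×2 mean (box-filter) reduction in place of the Gaussian smoothing usually used, and the function name `box_pyramid` is hypothetical. Each level keeps exactly one array, so the number of stored values falls by a factor of four per level.

```python
def box_pyramid(image, levels):
    """Build a simple mean pyramid: each level averages 2x2 blocks of the level below.

    `image` is a list of equal-length rows whose dimensions stay even at every level.
    Every level holds exactly one array, i.e. the number of feature arrays is constant.
    """
    pyramid = [image]
    for _ in range(levels - 1):
        prev = pyramid[-1]
        rows, cols = len(prev), len(prev[0])
        # Average each non-overlapping 2x2 block into one value.
        nxt = [
            [
                (prev[2 * i][2 * j] + prev[2 * i][2 * j + 1]
                 + prev[2 * i + 1][2 * j] + prev[2 * i + 1][2 * j + 1]) / 4.0
                for j in range(cols // 2)
            ]
            for i in range(rows // 2)
        ]
        pyramid.append(nxt)
    return pyramid


if __name__ == "__main__":
    img = [[float(r * 8 + c) for c in range(8)] for r in range(8)]
    for level, arr in enumerate(box_pyramid(img, 4)):
        print(level, f"{len(arr)} x {len(arr[0])}", len(arr) * len(arr[0]), "values")
```

With an 8×8 input and four levels, the levels hold 64, 16, 4, and 1 values. The top of such a pyramid is a single number, which illustrates why a constant number of feature arrays leaves the higher layers with so little capacity.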

In most example networks discussed in the remainder of the thesis, the number of cells per feature decreases from $I \times J$ in layer $l$ to $I/2 \times J/2$ in layer $(l+1)$, while the number of features increases from $K$ to $2K$. Figure 4.2 illustrates this.
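The arithmetic behind this rule can be checked with a short sketch. The function `pyramid_shapes` and the example sizes (a 32×32 bottom layer with 4 features) are hypothetical. Only the halving of $I$ and $J$ and the doubling of $K$ from one layer to the next are taken from the text.

```python
def pyramid_shapes(I, J, K, num_layers):
    """Return (rows, cols, features, total_cells) for each layer of a dyadic pyramid.

    Assumes the rule described in the text: from layer l to layer l + 1, each
    feature array shrinks from I x J to I/2 x J/2 cells, while the number of
    feature arrays grows from K to 2K.
    """
    step = 2 ** (num_layers - 1)
    if I % step or J % step:
        raise ValueError("I and J must be divisible by 2**(num_layers - 1)")
    shapes = []
    rows, cols, feats = I, J, K
    for _ in range(num_layers):
        shapes.append((rows, cols, feats, rows * cols * feats))
        rows, cols, feats = rows // 2, cols // 2, feats * 2  # halve resolution, double features
    return shapes


if __name__ == "__main__":
    layers = pyramid_shapes(32, 32, 4, 4)
    for l, (rows, cols, feats, cells) in enumerate(layers):
        print(f"layer {l}: {feats} arrays of {rows} x {cols} = {cells} cells")
    print("total:", sum(cells for *_, cells in layers))
```

Because $(I/2)\cdot(J/2)\cdot 2K = IJK/2$, each layer holds half as many cells as the layer below it. In the example, the four layers hold 4096, 2048, 1024, and 512 cells, so the whole pyramid (7680 cells) stays below twice the size of its bottom layer.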

The successive combination of simple features into a larger number of more complex ones would lead to an explosion of the representation size if it were not counteracted by an implosion of spatial resolution. The visual system also applies this principle, as is evident from the increasing size of receptive fields along the ventral pathway. The dyadic reduction of resolution is natural for an implementation on binary computers: it allows one to map addresses between adjacent layers by simple shift operations. Of course, the concept can be generalized to any pyramidal structure.
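The shift-based address mapping can be sketched in a few lines. This assumes zero-based integer coordinates and that each cell in layer $l+1$ sits above a non-overlapping 2×2 block of cells in layer $l$. The helper names `parent_address` and `child_addresses` are hypothetical, and real networks may use larger, overlapping receptive fields. The sketch only shows the address arithmetic.

```python
def parent_address(i, j):
    """Coordinates in layer l + 1 of the cell above (i, j) in layer l: halve via a right shift."""
    return i >> 1, j >> 1


def child_addresses(i, j):
    """Coordinates in layer l - 1 of the 2x2 block below (i, j) in layer l: double via a left shift."""
    base_i, base_j = i << 1, j << 1
    return [(base_i + di, base_j + dj) for di in (0, 1) for dj in (0, 1)]


if __name__ == "__main__":
    # Every child maps back to the cell it was derived from.
    for i in range(4):
        for j in range(4):
            assert all(parent_address(ci, cj) == (i, j) for ci, cj in child_addresses(i, j))
    print(parent_address(13, 6), child_addresses(6, 3))
```

For example, cell (13, 6) maps up to (6, 3), whose children are (12, 6), (12, 7), (13, 6), and (13, 7). No multiplication or division is needed, only bit shifts and small offsets.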

It may be desirable for the number of feature cells per layer to stay constant, as in the fast Fourier transformation described in Section 3.1.1. This is only possible at reasonable computational cost if the number of accesses to the activities of other feature cells needed to compute one feature cell is kept below a constant. If access
