Introduction

We’ve seen how global metadata such as title and genre are represented, stored, and delivered in a wide range of applications such as podcasting and broadcast television. Formats such as MXF and MPEG-7 allow much deeper metadata specification. Representation of the dialog of a program is of particular interest for video search applications, so we will focus on this aspect.

Several metadata standards include support for storing the dialog of a video program along with corresponding temporal information. For many applications such as streaming captions, DVD subtitles, and song lyrics, other specific dialog storage formats have been developed which are easier for application developers to use, even if they lack support for other basic metadata and are not extensible. This type of information is of obvious value for video search engines, and the temporal information is important for retrieving segments of interest in long-form video. In this section, we will introduce several widely used dialog or annotation storage formats and provide examples of each. Note that, as is the case with global metadata, this information can be embedded in the media container format or stored in separate files. The text may be synchronized or unsynchronized, and may be represented as a single unit in the header of the media file or as a separate stream multiplexed with other media streams for transport.

Synchronization Precision and Resolution

Time units are specified in a variety of ways, such as milliseconds relative to the start of the media, frame numbers referencing the media time base, or SMPTE time codes. RTSP defines Normal Play Time (NPT) to represent these values. As Table 2.8 shows, applications may employ different resolutions for time stamping text segments. Note that although the timing of roll-up mode captions can be specified precisely, it is inaccurate relative to the spoken dialog because of the variable transcription delay inherent in real-time captioning.

Table 2.8. Text segmentation resolution for various applications.

| Application | Text segmentation resolution |
|---|---|
| Speech recognition / synthesis | Phoneme (or sub-phoneme) |
| Roll-up captions | Two characters |
| Karaoke | Syllable |
| 1-best ASR transcription | Word level |
| Music lyrics, streaming media captions | Phrase |
| Pop-up captions, subtitles | Two “lines” where each line is ~30 characters |
| Aligned transcripts | Sentence |
| Distance learning, slide presentations | Paragraph |
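The time representations mentioned above (milliseconds from the start of the media, frame numbers, SMPTE time codes, and NPT) can be related to one another with simple arithmetic. The following is a minimal sketch, not taken from any standard's reference code. It assumes an integer frame rate and non-drop-frame SMPTE time codes, and the function names are hypothetical, chosen for illustration only.

```python
def ms_to_frame(ms: int, fps: int) -> int:
    """Index of the frame on screen at `ms` milliseconds (integer fps assumed)."""
    return ms * fps // 1000


def frame_to_smpte(frame: int, fps: int) -> str:
    """Non-drop-frame SMPTE-style time code HH:MM:SS:FF (assumption: integer fps)."""
    ff = frame % fps
    total_seconds = frame // fps
    hh, rem = divmod(total_seconds, 3600)
    mm, ss = divmod(rem, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


def smpte_to_ms(timecode: str, fps: int) -> int:
    """Start time, in milliseconds, of the frame named by HH:MM:SS:FF."""
    hh, mm, ss, ff = (int(part) for part in timecode.split(":"))
    frame = (hh * 3600 + mm * 60 + ss) * fps + ff
    return frame * 1000 // fps


def ms_to_npt(ms: int) -> str:
    """NPT written in an hours:minutes:seconds.fraction form."""
    seconds, millis = divmod(ms, 1000)
    hh, rem = divmod(seconds, 3600)
    mm, ss = divmod(rem, 60)
    return f"{hh}:{mm:02d}:{ss:02d}.{millis:03d}"


if __name__ == "__main__":
    frame = ms_to_frame(83456, fps=25)
    print(frame, frame_to_smpte(frame, fps=25), ms_to_npt(83456))
```

Note that converting a millisecond offset to a frame number and back loses the sub-frame remainder, which is one reason formats with different time bases do not round-trip exactly.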

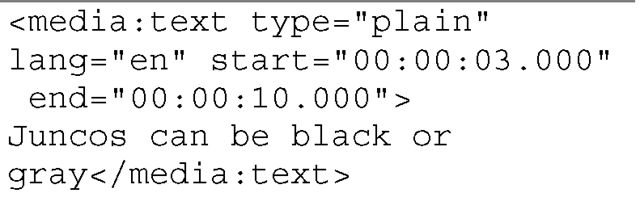

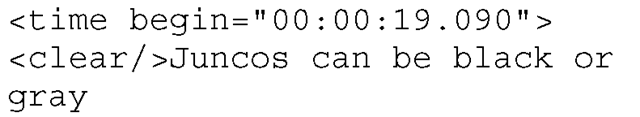

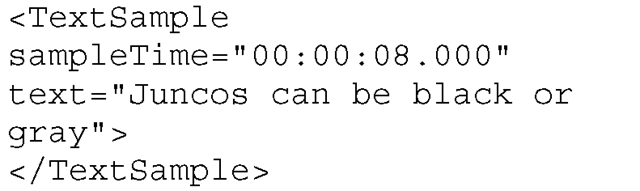

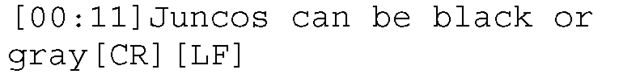

Unfortunately, as is the case with global metadata, there is no overarching agreement on the file formats or syntax for marking up timed text. The W3C is developing Timed Text to help address this problem, and the recommendation includes a broad feature set intended to support almost any application. However, it is likely that the simple, application-specific text formats will persist for the foreseeable future. In fact, new formats are still being created (e.g., the MediaRSS specification is relatively new), so these formats show no sign of dying out. Table 2.9 gives a sampling of some of the formats in use today to give an idea of the current state of timed text on the Web. Note that DSM-CC NPT is the Digital Storage Media Command and Control Normal Play Time, which is also used by RTSP (RFC 2326, Section 3.6).

Table 2.9. Representative timed text formats.

Transcripts

In addition to the metadata sources described above, media content in the form of text or text streams is sometimes available from the Web or other sources. Transcripts of TV program dialog are freely available on the Web for some programs (e.g., cnn.com/transcripts). Others are offered through fee-based services such as Burrelles® or LexisNexis®. Transcripts usually include some level of speaker ID and may include descriptions of visuals or audio events not in the dialog (e.g., “car horn honks”).

Closed Captions

In the US, closed captioning is used to make TV accessible to hearing-impaired viewers. Captions are textual representations of the program dialog as well as any significant audio events that a hearing-impaired viewer would need to understand the program. The term “closed” connotes that the captions can be switched on or off by the viewer. Captions are similar to subtitles used for translating films into other languages (see Table 2.10). Captions benefit all users: in certain consumption scenarios such as noisy environments, multichannel viewing or in public venues, captions are invaluable. Captions also improve comprehension for non-native listeners and can improve reading skills.

Table 2.10. Comparison of EIA-608 captioning and subtitling.

| EIA-608 Captions | Subtitles |
|---|---|
| Primarily intended for hearing-impaired viewers (rarely includes “second language”) | For alternative language viewers (very rarely includes cues for hearing-impaired viewers) |
| Character codes (modified ASCII) | Bitmapped |
| At most two languages | Many languages |
| Latin characters (approximate) | Any characters or fonts |

The FCC has mandated that nearly all broadcast content must be captioned, with some exceptions for cases where it is not practical. Video services and equipment such as DVRs and DVD players are required to preserve or “pass through” the caption information, and television sets with screens 13 inches or larger in diagonal are required to include the ability to display captions.

Live TV is captioned in real time, often by highly skilled stenographers, and displayed in “roll-up” mode, while other productions including commercials and movies are captioned offline. In some cases, the program script for the teleprompter is fed into the closed caption encoder. Offline captioning is displayed in a “pop-up” or “pop-on” mode where the timing can be precisely controlled (down to the frame level) and screen positioning is often used to indicate speaker changes. Real-time captioners can’t afford to take the time to position text underneath the speaker, so a convention of using two chevron (greater-than) characters has been adopted to indicate a speaker change. Similarly, three such characters indicate a topic change. The EIA-708 closed caption standard used in the ATSC DTV standard greatly expands on EIA-608 and includes carriage of 608 data for compatibility.
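For a search engine, the chevron convention described above offers a cheap way to segment roll-up caption text. The following is a minimal sketch that assumes the caption text has already been decoded into a plain string. The function name and the sample text are hypothetical, and real caption streams will contain noise that this does not handle.

```python
import re


def split_rollup_captions(text: str) -> list[list[str]]:
    """Return a list of topics, each a list of speaker turns.

    Assumes '>>>' marks a topic change and '>>' marks a speaker change,
    following the real-time captioning convention described in the text.
    """
    topics = []
    # Split on three (or more) chevrons first so they are not
    # mistaken for a two-chevron speaker change.
    for topic in re.split(r">{3,}", text):
        turns = [turn.strip() for turn in topic.split(">>")]
        turns = [turn for turn in turns if turn]
        if turns:
            topics.append(turns)
    return topics


if __name__ == "__main__":
    sample = (">>> GOOD EVENING. >> THANKS, TOM. "
              ">> WELCOME BACK. >>> IN SPORTS TONIGHT")
    for number, turns in enumerate(split_rollup_captions(sample), start=1):
        print(number, turns)
```

Splitting on the longer marker first keeps the logic simple. A production system would also need to carry the caption time stamps along with each turn so that segments can be mapped back to the media.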

DVD subtitles are bitmaps, unlike EIA-608 captions which use a modified ASCII encoding. This provides more flexibility, but requires optical character recognition for extraction and use by search engine systems. Many tools are available for this (subtitle rippers). Note that many DVDs include closed captions as well as subtitles.

Synchronized Accessible Media Interchange

Microsoft developed the Synchronized Accessible Media Interchange (SAMI) format to provide the ability to add closed captions to streaming Windows Media® format audio and video. The captions are stored in an XML-like file separate from the media, with the same root name and the extension “.smi”. This often leads to confusion with SMIL format files, which may use the same extension. Although the SAMI file is often stored in the same directory as the media, the media player can read it from an entirely different URL and display the captions under the control of the user.
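To illustrate how a retrieval system might pull timed text out of such a file, the following is a minimal sketch. It assumes the commonly seen SAMI layout in which each caption is introduced by a SYNC element whose Start attribute gives the time in milliseconds. The sample document, the class name ENUSCC, and the function names are illustrative assumptions rather than content from any particular file, and a regular expression is used in place of a full parser because SAMI files are frequently not well-formed XML.

```python
import html
import re
from pathlib import Path

# Hypothetical sample; real files usually carry a STYLE block in HEAD as well.
SAMPLE_SAMI = """<SAMI>
<HEAD><TITLE>Example</TITLE></HEAD>
<BODY>
<SYNC Start=1000>
<P Class=ENUSCC>Hello and welcome.
<SYNC Start=4500>
<P Class=ENUSCC>Today we look at timed text.
<SYNC Start=8000>
<P Class=ENUSCC>&nbsp;
</BODY>
</SAMI>"""

# A SYNC tag, then everything up to the next SYNC tag or the end of BODY.
SYNC_PATTERN = re.compile(
    r'<SYNC\s+Start\s*=\s*"?(\d+)"?[^>]*>(.*?)(?=<SYNC|</BODY>|\Z)',
    re.IGNORECASE | re.DOTALL,
)


def parse_sami(document: str) -> list[tuple[int, str]]:
    """Return (start_ms, text) pairs; empty text means the caption is cleared."""
    cues = []
    for match in SYNC_PATTERN.finditer(document):
        start_ms = int(match.group(1))
        text = re.sub(r"<[^>]+>", "", match.group(2))  # drop markup such as <P>
        cues.append((start_ms, html.unescape(text).strip()))
    return cues


def sami_path_for(media_path: str) -> Path:
    """Same root name as the media file, with the .smi extension."""
    return Path(media_path).with_suffix(".smi")


if __name__ == "__main__":
    print(sami_path_for("clip.wmv"))
    for start_ms, text in parse_sami(SAMPLE_SAMI):
        print(start_ms, repr(text))
```

Each cue has only a start time, so an indexer would typically treat the start of the next cue as the end of the current one.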

Metadata from Social Sources

Web users extract movie subtitles, translate them, and post them on the Web (this is known as fan translation, or fansubs). A popular movie may be translated into more than 30 languages. This practice typically violates copyright, and the quality cannot be assured. However, a site called DotSub is available for social multilingual captioning of Web media in Flash format, and this is welcomed by many content creators rather than viewed as a copyright infringement. Extended character sets and encodings are needed to represent multilingual texts. Beyond dialog representation, social tagging (a.k.a. folksonomy) may be applied to video, wherein any user may enter metadata which is later indexed for retrieval.

Metadata Issues

Practical considerations limit the reliability of authored metadata such as descriptions or keywords, particularly for non-professionally authored media. It is up to the content author to maintain this information, which is tedious and expensive, so in practice it may not be reliable. Applications such as the Windows Media® Encoder retain the most recently used values across runs. Although this is intended to save repeated metadata entry, it may in fact cause problems: a user who is not aware of the feature may end up with metadata that describes their previous work rather than the current one. Also, editing applications may extract metadata from the constituent clips and apply it to the edited work, where it may no longer be accurate.

Conclusion

We’ve seen that there is a wide range of content sources available to video search engines, each with its own associated metadata descriptions. Metadata systems have historically arisen from distinct communities including video production, US and international television broadcasters, as well as the computing and Internet standards bodies. In each of these domains, legacy and single-vendor systems continue to play a major role, representing significant sources of described content.

For a representative sampling of systems from these domains, we’ve looked into metadata which describes content at a range of levels. While MPEG-7 and MXF can go much further, most systems at a minimum support specifying textual metadata with temporal attributes. Other systems such as the DCES provide only high-level attributes about the media assets, but offer broad applicability. The related topic of text stream formats for capturing the dialog of video and audio media was also introduced. Although text streams are technically content as opposed to metadata, for search applications they are interpreted by retrieval systems as descriptions of the media content. Finally, metadata systems for describing collections of content, such as Electronic Program Guides and RSS feeds, were shown to provide valuable metadata about their constituent media items.