Transport

Video search engines deliver their product to clients over IP connections in several ways:

• Download - This simple delivery method has been available since the early days of HTTP: the browser downloads the media to a local file and then uses its MIME type to launch the appropriate media player.

• Progressive Download - Again, a basic HTTP server delivers the media file, but in this case the media player begins play-out before the entire file has been downloaded.

• HTTP with byte offsets – The byte range feature of HTTP/1.1 is used to support random access to media files. Clients map user play position (time seek) requests to media stream byte offsets and issue requests to the server to fetch required segments of the media file.

• Managed Download - A specially designed client application provides additional features such as DRM management, expiration, and reliable download; HTTP or P2P is typically used for transport. There are many types of these applications, from those that operate in the background with little or no UI, to iTunes, which includes download management capabilities for podcasts and purchased media.

• HTTP Streaming – These systems require a dedicated media server that parses the media file to determine its bit rate and delivers the content at that rate. Random access and other features such as fast start and fast forward may also be supported.

• RTSP / RTP - A media streaming server delivers the content via UDP to avoid the overhead of TCP retransmissions. Some form of error concealment or forward error correction can be used. Some IPTV systems use a “reliable UDP” scheme where selective retransmission based on certain conditions is employed.
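The client-side mapping described above for HTTP with byte offsets can be sketched as follows. This minimal sketch assumes a constant-bitrate (CBR) file with a fixed-size header, so the byte offset is a linear function of play time; real containers such as MP4 would require a lookup in the file's sample index instead. The function names and example bitrate are hypothetical.

```python
from typing import Dict, Optional


def seek_offset(seek_seconds: float, bitrate_bps: int, header_bytes: int = 0) -> int:
    """Map a time seek to a byte offset, assuming a constant-bitrate (CBR) file."""
    if seek_seconds < 0:
        raise ValueError("seek position must be non-negative")
    # bitrate_bps / 8 gives bytes of media per second of play time
    return header_bytes + int(seek_seconds * bitrate_bps // 8)


def range_header(start: int, length: Optional[int] = None) -> Dict[str, str]:
    """Build an HTTP/1.1 Range header requesting bytes from `start` onward."""
    if length is None:
        return {"Range": f"bytes={start}-"}  # open-ended: to the end of the file
    return {"Range": f"bytes={start}-{start + length - 1}"}  # end offset is inclusive


if __name__ == "__main__":
    offset = seek_offset(120, 1_000_000)  # 2 minutes into a 1 Mb/s stream
    print(range_header(offset, 1_000_000))  # {'Range': 'bytes=15000000-15999999'}
```

A server that honors the request replies with status 206 (Partial Content) and only the requested segment, so the client can begin decoding at the seek point without fetching the preceding bytes.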

Searching VoD vs. Live

Most video search applications inherently provide personalized access to stored media; this is essentially a “video on demand” (VoD) scenario, although the term VoD is commonly used to refer to movie rental on a set-top box delivered via cable TV or IPTV. For VoD, the connection is point-to-point and unicast IP transmission is appropriate. IPTV and Internet TV, however, are channel based: many users view the same content at the same time, so multicast IP is employed. As the number of these feeds grows, users will need search systems to locate channels of interest. In this scenario, EPG/ESG data, including program descriptions, will provide the most readily accessible metadata for search. Live streams can also be processed in real time to extract up-to-the-minute metadata for more detailed content-based retrieval. Of course, prepared programming and rebroadcasts of live events can be indexed a priori and used to provide users with more accurate content selection capabilities.

IPTV

IPTV is often heralded as the future of television, promising a revolution on the same scale as the Web. With all this potential, many groups are co-opting the term IPTV to their own advantage. Does IPTV imply any television content delivered over an IP network? We have been able to watch video streamed over the Internet for years, so it makes sense to restrict the term IPTV to a narrower connotation. As more bandwidth has become available and desktop computers have become more powerful, we can experience full-screen video delivery that begins to approach broadcast TV quality (although HD delivery to large audiences over unmanaged networks is much more demanding and may be slow to evolve). The term “Internet TV” has been used to describe this type of system, while IPTV is generally accepted to mean delivery of a television-like experience over a managed IP network. To avoid confusion for the purposes of standardization, the IPTV Interoperability Forum (IIF), formed by the Alliance for Telecommunications Industry Solutions (ATIS) [ATIS06], has defined IPTV as “the secure and reliable delivery to subscribers of entertainment video and related services. These services may include, for example, Live TV, Video On Demand (VOD) and Interactive TV (iTV). These services are delivered across an access agnostic, packet switched network that employs the IP protocol to transport the audio, video and control signals. In contrast to video over the public Internet, with IPTV deployments, network security and performance are tightly managed to ensure a superior entertainment experience, resulting in a compelling business environment for content providers, advertisers and customers alike.”

In the context of video search, IPTV is a significant step toward an evolved state of video programming in which the entire end-to-end process is manageable using generic IT methods. While there is clearly a long way to go in terms of interoperability and standardization for the exchange of media and metadata, the IP-based, accessible nature of the new delivery paradigm paves the way toward making this a reality. This offers engineers competent in networking and data management technologies the opportunity to bring their experience to bear on the problem of managing video distribution, and the potential for metadata loss through format conversions along the delivery chain is greatly reduced. Of course, today’s IPTV systems use IP for distribution to consumers, but IP is not necessarily used for contribution of broadcast content. Traditional and reliable methods used for cable delivery, such as satellite and pitcher/catcher VoD systems, will persist for the foreseeable future. In addition to ATIS, several other bodies, including ETSI (DVB-IPTV), OMA (BCAST) and the Open IPTV Forum, are participating in drafting IPTV recommendations for a range of applications.

Although not specified in the ATIS/IIF definition, IPTV is usually delivered via DSL links that do not have enough bandwidth to support the cable model of bringing all channels to the customer premises and tuning at the set-top box. With VDSL2, downstream bandwidth is typically 25 Mb/s, which can accommodate two HD and two SD channels simultaneously. With IPTV over DSL, only a single channel for each receiver is delivered to the customer; effectively, the “tuning” takes place at the central office. This is sometimes referred to as a “switched video” service (although the term is used in cable TV delivery as well). A receiver joining a channel must wait for the next I-frame before it can begin decoding, so to support rapid channel changing, IPTV systems keep the group of pictures (GoP) short and employ various other techniques to speed up channel change. Of course, short GoPs and channel-change bursts consume bandwidth, and systems must balance these factors. Given this optimization, and the forward error correction (FEC) that DSL delivery requires, IPTV streams must be transcoded for efficient archival applications where there is less need for error correction.
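The arithmetic behind these trade-offs can be made concrete. The sketch below checks whether a mix of channels fits a DSL link and estimates the mean wait for the next I-frame when a viewer joins a stream at a random moment: since the join point is uniformly distributed within the GoP, the mean wait is half the GoP duration. The per-channel bitrates (8 Mb/s HD, 2 Mb/s SD) and GoP lengths are assumed illustrative values, not figures from the text.

```python
# Assumed per-channel bitrates in Mb/s (illustrative only).
HD_MBPS = 8.0
SD_MBPS = 2.0


def fits(link_mbps: float, n_hd: int, n_sd: int, overhead: float = 0.0) -> bool:
    """True if n_hd HD plus n_sd SD streams fit after reserving `overhead` Mb/s
    (e.g. for data, voice, or FEC)."""
    demand = n_hd * HD_MBPS + n_sd * SD_MBPS
    return demand + overhead <= link_mbps


def mean_iframe_wait_s(gop_frames: int, fps: float) -> float:
    """Mean wait for the next I-frame after a random join: half a GoP duration."""
    return gop_frames / fps / 2.0


if __name__ == "__main__":
    print(fits(25, 2, 2))              # 20 Mb/s of video on a 25 Mb/s link
    print(mean_iframe_wait_s(60, 30))  # 2 s GoP at 30 fps
    print(mean_iframe_wait_s(15, 30))  # 0.5 s GoP at 30 fps
```

Under these assumptions, shortening the GoP from 60 to 15 frames cuts the mean join delay from 1.0 s to 0.25 s, but each extra I-frame raises the bitrate needed for the same quality, which eats into the headroom the first check measures.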

As we have seen, a wide range of video coding systems is in use, each optimized for its intended set of applications. As video content is acquired and ingested into a video search engine, it is very likely that the encoding of the source video is not appropriate for delivery from the search engine. In some cases the bit rate is simply too high to scale to the number of concurrent users, or the format may be unsuitable for the intended delivery mechanism. Although some services attempt to redirect users to origin servers, the experience of switching among multiple players (some of which may not be installed) to view the search results is less than seamless. Therefore many systems have opted to transcode video to a common format and host it themselves. Flash Video is often the format of choice here due to its platform independence and the wide installed base of players. The term transcoding is loosely used to refer to changing container formats, encoding systems, or bitrates. Transrating refers to changing only the bitrate (typically via re-encoding, not using scalable coding or multirate streaming). In some cases, such as when changing only the container format, it is not necessary to fully decode the media streams and re-encode them. The re-encoding process can also be made more efficient by only partially decoding the source (perhaps reusing motion estimation results), but in many general-purpose transcoding systems the source is fully decoded and the result fed to a standard encoder. This approach is taken because the required decoders and encoders are readily available and have been highly optimized. Search engines may also transcode to a small set of formats in order to target different markets such as mobile devices (e.g. YouTube’s use of Flash Video required large-scale transcoding in order to support AppleTV® and iPod Touch®, which did not include support for Flash Video).
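The distinctions among changing the container, the codec, and the bitrate determine how much work an ingest pipeline must do. This hypothetical planner (the `Rendition` type and the operation labels are illustrative) picks the cheapest operation for each source/target pair. It treats any codec or bitrate change as a full decode and re-encode, matching the general-purpose approach described above rather than partial-decoding optimizations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    container: str     # e.g. "mp4", "flv", "mpegts"
    codec: str         # e.g. "h264", "mpeg2"
    bitrate_kbps: int


def plan(source: Rendition, target: Rendition) -> str:
    """Classify the cheapest operation that turns `source` into `target`."""
    if source == target:
        return "none"
    if source.codec == target.codec and source.bitrate_kbps == target.bitrate_kbps:
        return "remux"      # container change only: copy streams, no decoding
    if source.codec == target.codec:
        return "transrate"  # same codec, new bitrate (any container change rides along)
    return "transcode"      # different codec: full decode and re-encode


if __name__ == "__main__":
    src = Rendition("mpegts", "mpeg2", 4000)
    print(plan(src, Rendition("flv", "h264", 500)))
```

In a tool such as ffmpeg, the "remux" case corresponds to stream copy (`-c copy`), which is why it is so much cheaper than the other two.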

Rights Management

In addition to incompatible media formats, digital rights management (DRM) systems are not interchangeable, and systems that hope to process a cornucopia of content must navigate these systems as well. Various DRM systems, such as Apple’s FairPlay and Real’s Helix, can be applied to MPEG-4 AAC media, but this does not imply interoperability. While it would be in keeping with the spirit of DRM to allow the purchaser of a song (or of a license to a song) to enjoy the media while justly compensating the provider, in practice this notion has been restricted so that the user must enjoy the song on a single vendor’s device or player. MPEG-21 attempts to standardize the intent, if not the particular implementation, of rights through the definition of a rights expression language (REL). Examples of limited rights to use content include: play once; play for a limited time; and hold for up to 30 days, then play many times for up to 24 hours after the first play. The hope is that at least these desired use cases can be codified, even though a particular media player device may support only a limited number of DRM systems, or only a single system. In reality, choosing a DRM system is tantamount to choosing a media player. Purchased music and media (iTunes, Windows Media), video download services, DVDs and broadcast television all use some form of encryption or copy control to prevent unauthorized copying of content (CSS for DVDs, AACS for HD DVD and Blu-ray Disc, conditional access for DVB and cable, and the broadcast flag for ATSC). Finally, media watermarking and embedding user information in metadata, which allow a copied asset to be forensically traced to its source, are additional techniques used to preserve the copyright owner’s rights.
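To illustrate the kind of usage rules a rights expression language must capture, the sketch below models the three examples from the text as plain Python checks. It is not MPEG-21 REL syntax or any vendor's DRM API; the `License` class and its fields are hypothetical, and a real system would also have to protect this state from tampering.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class License:
    """Hypothetical usage rules; not MPEG-21 REL syntax or any vendor DRM API."""
    acquired: datetime
    max_plays: Optional[int] = None          # e.g. 1 for "play once"
    valid_for: Optional[timedelta] = None    # window measured from acquisition
    play_window: Optional[timedelta] = None  # window measured from first play (rental)
    plays: int = 0
    first_play: Optional[datetime] = None

    def can_play(self, now: datetime) -> bool:
        if self.max_plays is not None and self.plays >= self.max_plays:
            return False
        if self.first_play is not None and self.play_window is not None:
            # Rental model: once started, only the post-first-play window applies.
            return now <= self.first_play + self.play_window
        if self.valid_for is not None and now > self.acquired + self.valid_for:
            return False  # the acquisition window has lapsed
        return True

    def play(self, now: datetime) -> bool:
        """Record a play if the rules allow it; return whether playback may start."""
        if not self.can_play(now):
            return False
        if self.first_play is None:
            self.first_play = now
        self.plays += 1
        return True


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1)
    play_once = License(t0, max_plays=1)
    limited_time = License(t0, valid_for=timedelta(days=7))
    rental = License(t0, valid_for=timedelta(days=30), play_window=timedelta(hours=24))
    print(rental.play(t0 + timedelta(days=10)))
```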

Redirector Files

Video search engine systems can make use of redirector files (or “metafiles”) to provide increased functionality when initiating video playback. Instead of the user interface containing links directly to the media files, the links point to media metafiles: small text markup files served by the HTTP server with a particular MIME type that the browser maps to the client media player. At this point the browser has done its job, and control of the streaming session passes to the media player, which connects to a media server. This arrangement provides several advantages:

• Response time: the small files download instantly and the media player application can launch quickly and begin video playback using progressive download or streaming.

• Failover / Load balancing: The redirector files can include alternative URLs for retrieving the media, and media players support a failover mechanism in which connections to the servers in a list of URLs are attempted in sequence. If the desired media is available on multiple media servers, applications can also generate metafiles dynamically with URLs pointing to lightly loaded streaming servers.

• Playtime offsets / clipping: the media play start time and duration can be encoded in the metafile. The ability to seek into the media is critical for directing users to relevant segments in long-form content.

• Playlists / Ad insertion: sets of media files matching user queries can be represented as a playlist, and the navigation interfaces supported by the media player can be used to move among them. Preroll or interstitial advertising can be supported using this mechanism, where essentially one or more clips in the playlist are ads. Much to users’ chagrin, these clips can be marked so that skipping and fast-forwarding are disabled during ad playback.

• Additional features: Optionally, directives for including media captions (similar to closed captions) are supported. Metadata specific to the session can also be included, e.g. the title can be set to “Results for your query for the term: NASA.” This mechanism can be used to effectively override any metadata embedded in the media itself.
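The features listed above can be combined in a metafile generated on the fly for each query. The sketch below builds an ASX document with failover `Ref` alternatives ordered by server load, a `StartTime` offset, a session-specific `Title`, and a preroll ad entry carrying the `ClientSkip` attribute, which asks the player not to let the user skip it. The element and attribute names follow the Windows Media metafile reference as we understand it and should be checked against the player version in use; the server names, ad URL, and load figures are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List, Sequence


def hms(seconds: int) -> str:
    """Format whole seconds as hh:mm:ss for StartTime/Duration values."""
    h, rem = divmod(seconds, 3600)
    m, s = divmod(rem, 60)
    return f"{h:02d}:{m:02d}:{s:02d}"


def order_by_load(urls: Sequence[str], load: Dict[str, float]) -> List[str]:
    """Put lightly loaded servers first; URLs with unknown load sort last."""
    return sorted(urls, key=lambda u: load.get(u, float("inf")))


def build_asx(title: str, entries: Sequence[dict]) -> str:
    """Build an ASX metafile. Each entry dict may contain:
    urls     - alternative URLs, tried by the player in order (failover)
    start    - start offset in seconds (deep link into long-form content)
    duration - clip duration in seconds
    ad       - True to mark the clip as non-skippable
    """
    root = ET.Element("ASX", version="3.0")
    ET.SubElement(root, "Title").text = title  # session-specific title
    for e in entries:
        entry = ET.SubElement(root, "Entry")
        if e.get("ad"):
            entry.set("ClientSkip", "no")  # ask the player not to allow skipping
        for url in e["urls"]:
            ET.SubElement(entry, "Ref", href=url)
        if e.get("start") is not None:
            ET.SubElement(entry, "StartTime", value=hms(e["start"]))
        if e.get("duration") is not None:
            ET.SubElement(entry, "Duration", value=hms(e["duration"]))
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    load = {"mms://mserver1/nasa.wmv": 0.9, "mms://mserver2/nasa.wmv": 0.2}
    print(build_asx("Results for your query for the term: NASA", [
        {"urls": ["http://ads.example.com/preroll.wmv"], "ad": True},
        {"urls": order_by_load(list(load), load), "start": 120},
    ]))
```

Serving this string with the MIME type the browser associates with the media player is what hands the session over to the player.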

Table 3.2. Media metafile systems.

| Format | Extension | Comments |
|---|---|---|
| Real Audio Metafile | .ram | One of the early streaming Web media formats |
| Windows Media Metafile | .asx, .wmx, .wvx, .wax | Extensions connote video (v) and audio (a), but the format is the same; ‘asx’ is deprecated |
| Synchronized Multimedia Integration Language (SMIL) | .smil, .smi | Supports many additional features such as layout |

Table 3.2 lists some common file formats for achieving this effect; playlist formats such as M3U and PLS provide a similar function, but with a limited subset of these capabilities.
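For comparison, a basic extended M3U playlist carries little more than an ordered list of URLs with optional durations and titles; it has no equivalent of the ASX failover alternatives, start offsets, or non-skippable entries. A minimal writer, with hypothetical titles and URLs:

```python
from typing import Iterable, Tuple


def build_m3u(items: Iterable[Tuple[str, int, str]]) -> str:
    """items: (title, duration_seconds, url); use -1 for an unknown duration."""
    lines = ["#EXTM3U"]
    for title, duration, url in items:
        lines.append(f"#EXTINF:{duration},{title}")  # per-entry display info
        lines.append(url)                            # the media location itself
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_m3u([
        ("NASA briefing, part 1", 600, "http://media.example.com/nasa1.mp4"),
        ("NASA briefing, part 2", -1, "http://media.example.com/nasa2.mp4"),
    ]), end="")
```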

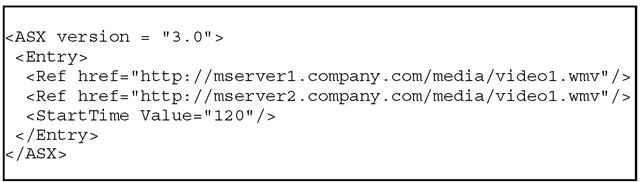

Fig. 3.1 shows a Windows Media Format metafile that includes failover (if the media is not available from mserver1, then mserver2 will be contacted). Also the media play position is set to 120 seconds. For Quicktime, a “reference movie” can be created to point to different bitrate versions of the content. A Reference Movie Atom (rmra) can contain multiple Reference movie descriptor atoms (rmda).

Fig. 3.1. ASX Metafile with failover and start offset.
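On the player side, the failover behavior amounts to trying the listed sources in sequence. In this sketch, `connect` is a hypothetical callable standing in for the player's transport layer and is assumed to raise `OSError` (which covers refused connections, DNS failures, and socket timeouts) when a server is unavailable; the server names follow the figure.

```python
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")


def connect_with_failover(urls: Iterable[str], connect: Callable[[str], T]) -> T:
    """Try each URL in order and return the first successful connection."""
    errors: List[str] = []
    for url in urls:
        try:
            return connect(url)
        except OSError as exc:  # this source failed; fall through to the next one
            errors.append(f"{url}: {exc}")
    raise ConnectionError("all sources failed: " + "; ".join(errors))


if __name__ == "__main__":
    def fake_connect(url: str) -> str:
        if "mserver1" in url:
            raise ConnectionRefusedError("server down")
        return "session on " + url

    print(connect_with_failover(["mms://mserver1/a.wmv", "mms://mserver2/a.wmv"], fake_connect))
```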

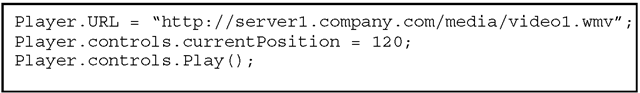

Embedded players: While UIs that launch the media player using metafiles can be extremely lightweight (no client side JavaScript is required) and therefore easily supported by a wide range of browser clients, a more integrated user experience is achieved by embedding the media player in the browser. With this approach, the player plug-in loads once and user navigation of search results can change the media and change the play position. For example Fig. 3.2 shows a client side script fragment for loading a media stream and seeking to a given point using the Windows Media Player object model, assuming that the player has been embedded and named “Player”. More recently, immersive interfaces that provide a user experience more similar to TV have been created leveraging emerging technologies including AJAX, XAML, and using the graphics capabilities of clients to their full potential to provide full screen interfaces with overlaid navigational elements.

Fig. 3.2. Controlling media playback using client side scripting.

Layered Encoding

Some encoding systems include features to efficiently support scalability. Scalability comes in several varieties, including spatial, temporal, and even object scalability. The idea is to encode media once and serve multiple applications, where alternative views may be rendered for services with less bandwidth than the encoded bitrate of the media. The concept also supports the notion of a base layer and an enhancement layer, where the base layer may represent a lower-resolution or lower-frame-rate version of the media and the enhancement layer adds more detail. In a best-effort network delivery scenario with variable congestion, the base layer can be delivered with a guaranteed quality of service (QoS), while the enhancement layer uses a lower priority, so that the overall user experience is improved. (Rather than one user, or worse all users, experiencing video dropouts, all users may see a slight degradation in quality.)
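A simplified model of the base/enhancement idea: the base layer is always sent first, and enhancement layers are added only while bandwidth remains, each being useful only if every layer beneath it is also delivered. The equal per-user share of a bottleneck and the layer bitrates are assumptions for illustration; real scalable codecs (such as the SVC extension of H.264) and QoS schedulers are considerably more involved.

```python
from typing import List


def layers_to_send(layer_kbps: List[int], available_kbps: float) -> int:
    """Return how many layers (base first) fit in the available bandwidth.

    layer_kbps[0] is the base layer; later entries are enhancement layers.
    A result of 0 means even the base layer does not fit (a dropout).
    """
    total = 0.0
    count = 0
    for rate in layer_kbps:
        if total + rate > available_kbps:
            break  # higher layers depend on this one, so stop here
        total += rate
        count += 1
    return count


def per_user_layers(layer_kbps: List[int], capacity_kbps: float, users: int) -> int:
    """Layers each user receives when a bottleneck is shared equally."""
    return layers_to_send(layer_kbps, capacity_kbps / users)


if __name__ == "__main__":
    layers = [300, 200, 500]  # base + two enhancement layers (1000 kb/s total)
    print(per_user_layers(layers, 3000, 4))
```

With four users on a 3000 kb/s bottleneck, a single-layer 1000 kb/s stream would need 4000 kb/s and someone would stall; with layers, each user instead receives the base plus the first enhancement layer.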

Some media streaming systems use a less efficient scheme to provide a similar effect. In what is called “multirate encoding,” multiple versions of a video encoded at different bitrates are merged into a single file. Some implementations can be very inefficient, in that each stream is self-contained and shares no information with the other representations of the media. Streaming media players can detect connection bandwidth dynamically and switch among the streams as appropriate. While crude, this is an improvement over requiring the user to select from separate files based on their connection bandwidth. Most users don’t have a good understanding of their connection bandwidth in the first place, and requiring a selection is poor system design that can lead to errors if the wrong setting is chosen.
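The dynamic switching performed by streaming players can be sketched as a throughput estimator plus a selection rule. The exponentially weighted moving average, the 0.8 safety margin, and the `RateSelector` name are illustrative assumptions; commercial players use their own, often proprietary, heuristics.

```python
from typing import Optional, Sequence


class RateSelector:
    """Pick a stream from a multirate file based on measured throughput."""

    def __init__(self, rates_kbps: Sequence[int], alpha: float = 0.3, margin: float = 0.8):
        self.rates = sorted(rates_kbps)
        self.alpha = alpha    # weight given to the newest throughput sample
        self.margin = margin  # use only this fraction of the estimate
        self.estimate: Optional[float] = None  # smoothed throughput in kb/s

    def observe(self, measured_kbps: float) -> None:
        """Fold a new throughput measurement into the moving average."""
        if self.estimate is None:
            self.estimate = measured_kbps
        else:
            self.estimate = self.alpha * measured_kbps + (1 - self.alpha) * self.estimate

    def choose(self) -> int:
        """Highest encoded rate within the safety margin (lowest if none fits)."""
        if self.estimate is None:
            return self.rates[0]  # no measurements yet: start conservatively
        budget = self.margin * self.estimate
        fitting = [r for r in self.rates if r <= budget]
        return fitting[-1] if fitting else self.rates[0]


if __name__ == "__main__":
    sel = RateSelector([300, 700, 1500])
    for sample in (1000, 3000, 3000):
        sel.observe(sample)
        print(sample, sel.choose())
```

Smoothing keeps a single fast or slow measurement from causing the player to flip between streams, at the cost of reacting a little later to genuine bandwidth changes.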

Illustrated Audio

Illustrated audio is a class of content that fills the gap between full-motion video and a bare audio stream. There are two main classes. The first is frame flipping, where a single still image is displayed from a given point in time until the next event, when a different frame is displayed. This can be thought of as non-uniform sampling: instead of each frame being displayed for the same amount of time (e.g. 33 ms), a frame may be displayed for 20 seconds, followed by a frame displayed for 65 seconds, and so on. An example of this is a recording of a lecture containing slides. The second class involves some form of gradual transition between slides and may include synthesized camera operations such as panning and zooming. Some replay systems for digital photographs employ this technique using automatically selected operations. Readers may also be familiar with the historical documentary style of Ken Burns, where old photographs are seemingly brought to life through appropriate narration and synthesized camera operations [Burns07].
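Because frame flipping is non-uniform sampling, a player needs only the list of change events and a binary search to find the still for any play position, which also makes seeking cheap. A minimal sketch; the `SlideTrack` class and the file names are hypothetical, and the event times mirror the 20 s / 65 s example above.

```python
import bisect
from typing import List, Optional, Tuple


class SlideTrack:
    """Frame flipping: each still stays on screen until the next change event."""

    def __init__(self, events: List[Tuple[float, str]]):
        events = sorted(events)  # (start_time_seconds, image_url)
        self.times = [t for t, _ in events]
        self.images = [img for _, img in events]

    def image_at(self, t: float) -> Optional[str]:
        """Return the still to show at play position t (None before the first)."""
        i = bisect.bisect_right(self.times, t) - 1
        return self.images[i] if i >= 0 else None

    def durations(self, total: float) -> List[float]:
        """Display time of each still, given the total play time."""
        ends = self.times[1:] + [total]
        return [end - start for start, end in zip(self.times, ends)]


if __name__ == "__main__":
    track = SlideTrack([(0, "slide1.jpg"), (20, "slide2.jpg"), (85, "slide3.jpg")])
    print(track.image_at(42.0), track.durations(100))
```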

The value of this form of content has justified the creation of systems designed to efficiently compress and represent this unique material. Microsoft’s Photo Story application allows manual creation of slide shows from still frames and encodes the result in Windows Media format with a special codec. Alternatively, Windows Media Format allows synchronous events to be included in the stream; these may include links to images, or may encode generic events that client JavaScript can access at replay time to take action (which may include fetching an image from a URL and displaying it).

Apple’s Enhanced Podcasts are MPEG-4 files with streams containing specific information that allows for the inclusion and synchronized replay of embedded still images (as well as other information such as links) on iPods. These files typically have the extension .m4a or .m4b. Other formats also support topic metadata, such as ID3v2, which specifies CHAP (chapter) and CTOC (table of contents) frames, and the DVD specification. In Flash Video, the “Cue Point” mechanism is used for synchronizing the loading of graphics and for navigating the media.

For video search engines, textual topic metadata can augment the global metadata and improve relevance ranking and navigation in systems that support navigating within long-form content. Additionally, where archiving systems manage wide varieties of content and adapt it for consumption scenarios in which the primary media track is audio (e.g. mobile listening), the ability to automatically insert topic markings to aid user navigation is extremely valuable.
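As a simple illustration of topic markers aiding navigation, chapter titles (whether from ID3v2 CHAP frames, enhanced podcast chapters, or Flash cue points) can be searched directly, with each hit returned along with its start time so the player can seek straight to the relevant segment. The `Chapter` type and the naive substring matching are illustrative assumptions; a production engine would index this text alongside the global metadata and rank the results.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Chapter:
    asset: str      # identifier of the long-form media item
    start_s: float  # chapter start time in seconds (the seek target)
    title: str      # topic text from the chapter marker


def find_segments(chapters: List[Chapter], query: str) -> List[Chapter]:
    """Return chapters whose title contains every query term (case-insensitive)."""
    terms = query.lower().split()
    hits = [c for c in chapters if all(t in c.title.lower() for t in terms)]
    return sorted(hits, key=lambda c: (c.asset, c.start_s))


if __name__ == "__main__":
    chapters = [
        Chapter("lecture1", 0, "Introduction"),
        Chapter("lecture1", 600, "Motion estimation in MPEG-2"),
        Chapter("lecture2", 300, "MPEG-4 scene description"),
    ]
    for c in find_segments(chapters, "mpeg"):
        print(f"{c.asset} @ {c.start_s:.0f}s: {c.title}")
```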