Metadata Normalization

The acquisition module typically performs some degree of data normalization, although this can be deferred to the indexing module. The goal of metadata normalization is to simplify the rest of the system by mapping tags with similar semantics to a single tag. Unfortunately, for many systems this is a lossy process, since nuances in the source metadata may not be preserved accurately. For example, the search engine may list all results with their title, but for some content a subtitle or episode title may be included in addition to the main title. This subtitle information is either dropped, mapped into another supported field such as the content description field (perhaps using a tag thesaurus), or concatenated onto the main title by some convention. All of these alternatives have undesirable consequences: either the field in question is not available for search or, in the concatenation case, the original tag may not be recoverable from the database. The ideal situation is to preserve all source metadata while extracting the reliable common tags, such as the DCMI fields, and to use a searching and browsing architecture that can seamlessly manage the complexity of assets having varying numbers and types of metadata fields, which is a daunting task to achieve at scale. Many systems in use on the Internet today fall short of the ideal case, but still provide an adequate user experience.
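To make the "preserve everything, extract the common tags" idea concrete, the following is a minimal sketch in Python. The tag names in `TAG_MAP`, the `normalize` function and the record layout are illustrative assumptions, not taken from any particular system; the point is only that the common tags are derived while the source metadata is kept intact, so nothing is lost by the mapping.

```python
# Hypothetical mapping from source-specific tags to a small common tag set.
# The tag names on the left are illustrative examples only.
TAG_MAP = {
    "title": "title",
    "dc:title": "title",
    "itunes:subtitle": "subtitle",
    "episode_title": "subtitle",
    "description": "description",
    "itunes:summary": "description",
}


def normalize(source):
    """Return a record holding the common tags plus the untouched source metadata."""
    common = {}
    for tag, value in source.items():
        target = TAG_MAP.get(tag.lower())
        # Keep the first value seen for each common tag; any later value
        # is still available in the preserved source copy.
        if target and target not in common:
            common[target] = value
    return {"common": common, "source": dict(source)}


record = normalize({
    "dc:title": "Evening News",
    "episode_title": "Election Special",
    "station": "KXYZ",  # no common equivalent, but still preserved
})
print(record["common"])
print(record["source"]["station"])
```

Because the subtitle is stored under its own common tag rather than concatenated onto the title, it stays searchable, and unmapped tags such as `station` remain recoverable from the `source` copy.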

User Contributed

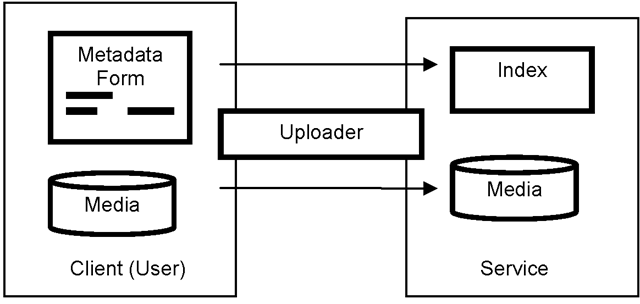

Content acquisition for the user contributed case is shown in Fig. 4.2. Here, a Web form is used to capture metadata from the user, and the media is transferred up to the server. The upload file transfer may use simple HTTP, or a special purpose client application may be utilized which provides additional benefits such as parallel uploading and the ability to restart aborted partial transfers. Given limited upload bandwidth, it would seem logical to transcode source media down to a smaller size prior to uploading. However, most systems designed for Web users do not employ client-side transcoding, for a number of reasons including:

1. the desire to keep the client as thin as possible to ease maintenance;

2. the desire to support clients with limited compute power;

3. possible license issues with codecs;

4. the assumption that video clips will be short, limiting overall file upload size.

Fig. 4.2. Data flows for uploading user contributed content.

The metadata entry may be decoupled such that the content is uploaded, and metadata tags added later either by the original author, or by other users (social tagging). Consider here the example of mobile video capture and share. The service can be such that the capture format is supported for upload (avoiding the necessity for transcoding on the mobile device), and users could access their clips from a laptop where annotating, forwarding to friends, etc. is much easier given the powerful user interface capabilities of the laptop as compared to the cellular handset. Contributors control the publication of, and rights to use (view or download), the content using categories such as:

1. Public: available to all users;

2. Groups: viewable by selected users;

3. Unlisted: unpublished; a URL is returned to access the content.
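The three publication categories above can be sketched as a simple access check. This is a hedged illustration only: the `Clip` class, the `can_view` and `is_searchable` functions, and the idea that the unlisted URL carries a secret token are all assumptions made for the example, not details given in the text.

```python
from dataclasses import dataclass, field
from enum import Enum


class Visibility(Enum):
    PUBLIC = "public"
    GROUPS = "groups"
    UNLISTED = "unlisted"


@dataclass
class Clip:
    owner: str
    visibility: Visibility
    allowed_users: set = field(default_factory=set)  # used for GROUPS
    secret_token: str = ""  # assumed to be embedded in the unlisted URL


def can_view(clip, user, token=None):
    """Decide whether `user` may view `clip` under its publication category."""
    if user == clip.owner:
        return True
    if clip.visibility is Visibility.PUBLIC:
        return True
    if clip.visibility is Visibility.GROUPS:
        return user in clip.allowed_users
    # UNLISTED: anyone presenting the token from the returned URL may view.
    return bool(clip.secret_token) and token == clip.secret_token


def is_searchable(clip):
    """Only public clips are exposed through the search index in this sketch."""
    return clip.visibility is Visibility.PUBLIC


group_clip = Clip("alice", Visibility.GROUPS, allowed_users={"bob"})
unlisted_clip = Clip("alice", Visibility.UNLISTED, secret_token="abc123")
print(can_view(group_clip, "bob"), can_view(group_clip, "carol"))
print(can_view(unlisted_clip, "carol", token="abc123"))
```

Keeping the search decision (`is_searchable`) separate from the viewing decision (`can_view`) reflects the fact that unlisted content is reachable by URL but is not published.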

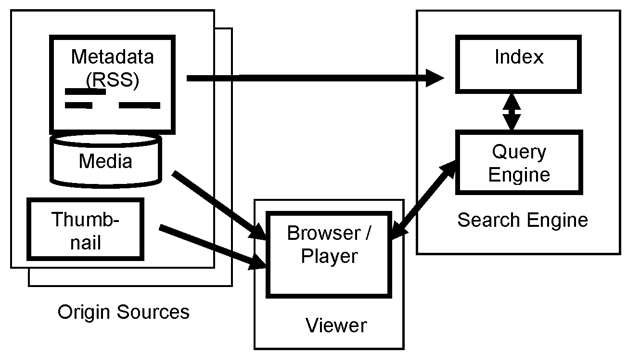

Fig. 4.3. Metadata and media flows for Podcast aggregation search.

Syndicated Contribution

Although we mentioned embedded metadata and introduced the concept of asset packages, which represent media and metadata as a logical unit, it is usually the case that the metadata and the media take separate paths through the acquisition, processing and retrieval flow. Perhaps the most striking example of this is the case of Podcast aggregation search sites. As shown in Fig. 4.3, the server is not even required to ingest a copy of the media; the system can operate using only metadata provided by the source. In this case, acquisition really consists of creating a new record in the database with an identifier of some kind, perhaps a URI, referring back to the content source. It is most common to use RSS with media enclosures for the metadata formatting, with the iTunes® extensions for Podcasts or MediaRSS extensions for increased flexibility. Of course, our focus is on media processing to augment the existing metadata to enable content-based search, in which case access to the media is required.
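As a rough illustration of metadata-only acquisition, the sketch below parses a small RSS 2.0 feed and creates one record per item that carries a media enclosure, storing only the URI that points back to the source. The feed content, the `records_from_feed` function and the record fields are invented for the example; a real aggregator would also handle the iTunes or MediaRSS namespaces, which are omitted here.

```python
import xml.etree.ElementTree as ET

# A made-up feed for illustration; example.com is a placeholder host.
FEED = """<rss version="2.0">
  <channel>
    <title>Example Podcast</title>
    <item>
      <title>Episode 1</title>
      <guid>ep-1</guid>
      <enclosure url="http://example.com/ep1.mp3" length="12345" type="audio/mpeg"/>
    </item>
    <item>
      <title>Text-only post</title>
    </item>
  </channel>
</rss>"""


def records_from_feed(xml_text):
    """Build index records from feed items that have a media enclosure."""
    root = ET.fromstring(xml_text)
    records = []
    for item in root.iter("item"):
        enclosure = item.find("enclosure")
        if enclosure is None:
            continue  # no media attached, nothing to index as a media asset
        records.append({
            "id": item.findtext("guid", default=""),
            "title": item.findtext("title", default=""),
            "media_uri": enclosure.get("url"),  # reference back to the source
            "mime_type": enclosure.get("type"),
        })
    return records


records = records_from_feed(FEED)
print(records)
```

No media bytes are transferred at any point; the record holds only the metadata and the enclosure URI, which is what allows such a site to operate without ingesting the content.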

With distributed (meta) search, there is even less centralization and data movement. Rather than periodically querying content sources for lists of new content and its associated metadata and building a centralized index, metasearch systems can distribute the queries to several search systems and aggregate the results. Two problems arise in these scenarios: merging ranked results from disparate sources, and removing duplicates.
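One simple way to approach both problems is sketched below. It uses reciprocal rank fusion, a general rank-merging technique chosen here for illustration rather than one the text prescribes, and it treats two results as duplicates only when their URIs match exactly, which is a simplifying assumption. The function name `merge_results` and the constant `k` are likewise part of the sketch.

```python
from collections import defaultdict


def merge_results(result_lists, k=60):
    """Merge ranked URI lists with reciprocal rank fusion.

    Each engine contributes 1 / (k + rank) for every URI it returns, so a
    URI returned by several engines accumulates a higher score and appears
    only once in the merged list.
    """
    scores = defaultdict(float)
    for results in result_lists:
        seen = set()
        for rank, uri in enumerate(results, start=1):
            if uri in seen:
                continue  # ignore repeats within a single engine's list
            seen.add(uri)
            scores[uri] += 1.0 / (k + rank)
    # Highest score first; ties broken by URI for a deterministic order.
    return sorted(scores, key=lambda uri: (-scores[uri], uri))


engine_a = ["a", "b", "c"]
engine_b = ["b", "d", "a"]
merged = merge_results([engine_a, engine_b])
print(merged)  # ['b', 'a', 'd', 'c']
```

Rank-based fusion sidesteps the fact that relevance scores from different engines are usually not comparable, since only the positions in each list are used.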

Broadcast Acquisition

In addition to crawling, syndication, and user contribution, other forms of acquisition include broadcast capture and event-based capture. Broadcast capture may involve analog-to-digital conversion and encoding, but it is becoming more common to simply capture digital streams directly to disk. For consumers, broadcast may be received over the air, via cable, via direct broadcast satellite, or through IPTV or Internet TV multicast. Event-based acquisition is used in security applications, where real-time processing may detect potential points of interest using video motion detection, and this detection is used to control the recording for later forensic analysis. It is in the context of these streaming (or live, linear) sources that real-time processing considerations and highly available systems are paramount. In the previous examples of acquisition, there is effectively a source buffer, so that if the acquisition system were to go offline the result would only be a slightly delayed appearance of new content. For streaming acquisition, an outage would result in irretrievable content loss. Of course, for user contributed situations high reliability is also desired to preserve a satisfying user experience.
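The event-based case can be illustrated with a toy motion detector based on frame differencing. This is a deliberately simplified sketch: frames are flat lists of grayscale pixel values, and the `motion_score` and `frames_to_record` functions and the threshold value are assumptions for the example, not a description of any deployed security system.

```python
def motion_score(prev, curr):
    """Mean absolute pixel difference between two equal-sized grayscale frames."""
    return sum(abs(a - b) for a, b in zip(prev, curr)) / len(curr)


def frames_to_record(frames, threshold=10.0):
    """Return the indices of frames whose change from the previous frame
    exceeds the threshold, i.e. the frames that would trigger recording."""
    recorded = []
    for i in range(1, len(frames)):
        if motion_score(frames[i - 1], frames[i]) > threshold:
            recorded.append(i)
    return recorded


# Five tiny 4-pixel frames: an object appears at frame 2 and leaves at frame 4.
frames = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 200, 200, 0],
    [0, 200, 200, 0],
    [0, 0, 0, 0],
]
print(frames_to_record(frames))  # [2, 4]
```

Because the decision must be made as each frame arrives, this kind of processing has to keep up with the stream in real time; a frame that is not examined or stored when it arrives cannot be recovered later.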

A particular set of concerns arises with user contributed content, and appropriate mitigation steps should be taken prior to inserting user generated content (UGC) into the search engine for distribution. Users may post copyrighted material or inappropriate or offensive content, and may intentionally misrepresent the content with inaccurate metadata. Sites may employ a review process where the content is not posted until approved, or may rely on other viewers to flag content for review. Fingerprinting technology is used to identify a particular segment of media based on its features, and this operation can take place during the media processing phase to reject posting of copyrighted content.