Classical statistics assumes simple random sampling in which each sample of size n yields different values. Thus, the value of any statistic calculated with sample data (for example, the sample mean) varies from sample to sample.

A histogram (graph) of these values, taken over many repeated samples, approximates the sampling distribution of the statistic.

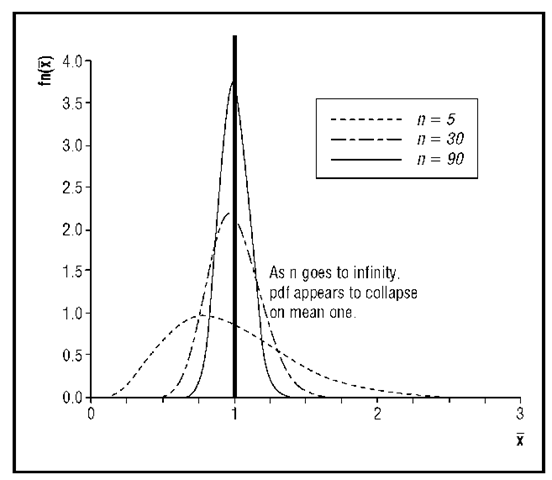

The law of large numbers holds that as n increases, a statistic such as the sample mean ($\bar{X}$) converges to its true mean ($\mu$); that is, the sampling distribution of the mean collapses on the population mean. The central limit theorem, on the other hand, states that for many samples of like and sufficiently large n, the histogram of sample means will appear to have a normal bell-shaped distribution. As the sample size n is increased, the distribution of the sample mean becomes normal (central limit theorem), but then degenerates to the population mean (law of large numbers). This process is shown in Figure 1. For an infinite number of samples, each of size n = 5, drawn from an exponential distribution with a mean of one, the probability density function (pdf) of $\bar{X}$ is right skewed, but for n = 30 the pdf appears more bell-shaped, and for n = 90 it is approximately normal. As n gets larger and larger (approaching infinity) the pdf of $\bar{X}$ appears to be a thick line at a mean of one, which illustrates the law of large numbers. Remember, however, that even as n goes to infinity, approximately 95 percent of the distribution of $\bar{X}$ continues to lie within two standard deviations of its mean. It is that standard deviation, the standard error of the mean ($\sigma / \sqrt{n}$), that keeps shrinking.
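The behaviour described for n = 5, 30, and 90 can be checked by simulation. The following is a minimal sketch rather than a reproduction of Figure 1: it approximates the "infinite number of samples" with 10,000 samples per sample size, and the function names (`sample_means`, `skewness`, `summarize`) and the fixed seed are illustrative assumptions. It reports the mean, standard deviation, and skewness of the simulated sample means, alongside the theoretical standard error $\sigma / \sqrt{n}$ with $\sigma = 1$ for the exponential(1) population.

```python
import math
import random
import statistics


def sample_means(n, reps, rng):
    """Draw `reps` samples of size n from an exponential(1) population; return their means."""
    return [sum(rng.expovariate(1.0) for _ in range(n)) / n for _ in range(reps)]


def skewness(values):
    """Moment-based skewness: near 0 for a symmetric shape, positive for a right skew."""
    m = statistics.fmean(values)
    s = statistics.pstdev(values)
    return sum((v - m) ** 3 for v in values) / (len(values) * s ** 3)


def summarize(n, reps=10_000, rng=None):
    """Return (mean, standard deviation, skewness) of the simulated sample means."""
    rng = rng or random.Random(0)  # fixed seed is an assumption, for repeatability only
    means = sample_means(n, reps, rng)
    return statistics.fmean(means), statistics.pstdev(means), skewness(means)


if __name__ == "__main__":
    rng = random.Random(0)
    for n in (5, 30, 90):
        mean, sd, skew = summarize(n, rng=rng)
        print(
            f"n={n:3d}  mean={mean:.3f}  sd={sd:.3f}  "
            f"sigma/sqrt(n)={1 / math.sqrt(n):.3f}  skew={skew:.3f}"
        )
```

In such a run the skewness should fall toward zero as n grows (the central limit theorem at work), while the standard deviation of the means should track $1 / \sqrt{n}$ and shrink (the law of large numbers at work).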

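More technically, a distinction is made between the weak law of large numbers and the strong law of large numbers. The weak law of large numbers states that if $X_1, X_2, X_3, \ldots$ is an infinite independent and identically distributed sequence of random variables, then the sample mean

$$\bar{X}_n = \frac{X_1 + X_2 + \cdots + X_n}{n}$$

converges in probability to the population mean $\mu$. That is, for any positive small number $\varepsilon$,

$$\lim_{n \to \infty} P\left( \left| \bar{X}_n - \mu \right| < \varepsilon \right) = 1.$$

Figure 1: Sample mean pdf, exponential(1) population.

The strong law of large numbers states that, for the same sequence,

$$P\left( \lim_{n \to \infty} \bar{X}_n = \mu \right) = 1.$$

That is, the sample average converges almost surely to the population mean $\mu$.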
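Convergence in probability can be illustrated numerically by estimating how often the sample mean misses the population mean by at least a chosen tolerance. The sketch below assumes an exponential(1) population (mean and variance both equal to one) and a tolerance of 0.1; the helper names `miss_probability` and `chebyshev_bound` are hypothetical. For comparison it also computes the Chebyshev bound $\sigma^2 / (n \varepsilon^2)$, which applies when the variance is finite and gives one standard route to the weak law.

```python
import random


def miss_probability(n, eps, reps, rng):
    """Estimate P(|sample mean - 1| >= eps) for samples of size n from exponential(1)."""
    misses = 0
    for _ in range(reps):
        mean = sum(rng.expovariate(1.0) for _ in range(n)) / n
        if abs(mean - 1.0) >= eps:
            misses += 1
    return misses / reps


def chebyshev_bound(n, eps, variance=1.0):
    """Chebyshev upper bound variance / (n * eps^2), capped at 1."""
    return min(1.0, variance / (n * eps ** 2))


if __name__ == "__main__":
    rng = random.Random(1)  # fixed seed is an assumption, for repeatability only
    eps = 0.1
    for n in (10, 100, 1000):
        estimate = miss_probability(n, eps, reps=1000, rng=rng)
        print(f"n={n:5d}  estimated miss probability={estimate:.3f}  "
              f"Chebyshev bound={chebyshev_bound(n, eps):.3f}")
```

The estimated miss probability should fall toward zero as n grows, which is the complement of the limit stated in the weak law. A simulation of this kind cannot distinguish the weak law from the strong law, since the strong law concerns the behaviour of a whole infinite sequence rather than the probability at each fixed n.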

Students, scientists, and social commentators have been and continue to be befuddled by the law of large numbers versus the central limit theorem. Although the law of large numbers was articulated by Jacob Bernoulli (1654-1705) in the seventeenth century, in the nineteenth century curve fitting and the finding of normality in nature were popular. It was believed that if the sample was big enough then it would be normal (not understanding that the central limit theorem applies to $\bar{X}$ and not to a single sample of size n). For instance, Adolphe Quetelet (1797-1874), originator of the "average man," made his early reputation by arguing that bigger and bigger samples would yield a bell-shaped curve for the attribute being sampled. He did not make the connection between the mean of one random sample and the distribution of means from all such samples (see Stigler [1986] for this history). By the law of large numbers, the larger the sample size the more the sample will look like the population (the sampling distribution of $\bar{X}$ appears to collapse on $\mu$).
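The difference between one large sample and the distribution of many sample means can be made concrete with a short simulation. This is a rough sketch under stated assumptions: the population is taken to be exponential(1), whose skewness is 2 and whose median is ln 2 (about 0.693), and the function names are illustrative. A single sample of 100,000 observations should reproduce those population features rather than a bell shape, whereas the means of many samples of size 90 should be close to symmetric.

```python
import math
import random
import statistics


def skewness(values):
    """Moment-based skewness: near 0 for a symmetric shape, positive for a right skew."""
    m = statistics.fmean(values)
    s = statistics.pstdev(values)
    return sum((v - m) ** 3 for v in values) / (len(values) * s ** 3)


def one_sample(n, rng):
    """A single sample of n observations from an exponential(1) population."""
    return [rng.expovariate(1.0) for _ in range(n)]


def means_of_samples(n, reps, rng):
    """Means of `reps` separate samples, each of size n, from the same population."""
    return [statistics.fmean(one_sample(n, rng)) for _ in range(reps)]


if __name__ == "__main__":
    rng = random.Random(2)  # fixed seed is an assumption, for repeatability only
    big = one_sample(100_000, rng)
    means = means_of_samples(90, 2_000, rng)
    print(f"single sample: skewness={skewness(big):.2f}, "
          f"median={statistics.median(big):.3f} (ln 2 = {math.log(2):.3f})")
    print(f"sample means:  skewness={skewness(means):.2f}")
```

However large the single sample is made, its skewness should stay near that of the population; only the distribution of the means moves toward the bell shape.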

To this day, confusion exists among those who should know the difference between the law of large numbers and the central limit theorem. For example, in his otherwise excellent book, Against the Gods, Peter Bernstein wrote:

Do 70 observations provide enough evidence for us to reach a judgement on whether the behavior of the stock market is a random walk? Probably not. We know that tosses of a die are independent of one another, but our trials of only six throws typically produced results that bore little resemblance to a normal distribution. Only after we increased the number of throws and trials substantially did theory and practice begin to come together. The 280 quarterly observations resemble a normal curve much more closely than do the (70) year-to-year observations. (Bernstein 1996, p. 148)