The statistical analysis of "time series" data, which are common in the social sciences, relies fundamentally on the concept of a stochastic process: a process that, in theory, generates a sequence of random variables over time. An autoregressive model (or autoregression) is a statistical model that characterizes or represents such a process. This article provides a brief overview of the nature of autoregressive models.

BACKGROUND

In the discussion to follow, I will use the symbol $y_t$ to represent a variable of interest that varies over time, and that can therefore be imagined as having been generated by a stochastic process. For example, $y_t$ might stand for the value of the gross domestic product (GDP) in the United States during a particular year t. The sequence of the observed values of GDP over a range for t (say, 1946 to 2006) is the realization, or sample, generated from the process $y_t$. One can imagine that, before they are known or observed, these values are drawn from a probability distribution that determines the likelihood that y takes on particular numerical values at any point in time. Time-series analysis is the set of techniques used to make inferences about this probability distribution, given a realization from the process. A key aspect of time series, especially when compared to cross-sectional data, is that for most time series of interest, probable values at a particular point in time depend on past values. For example, observing a larger-than-average value of GDP in one year typically means observing a larger-than-average value of GDP in the following year. This time, or serial, dependence implies that the sequential order of a stochastic process matters. Such an ordering, however, is usually not important for observations on, say, individual households at a point in time, because this ordering is arbitrary and uninformative.

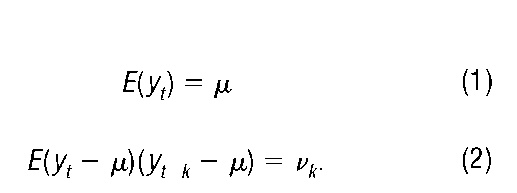

A general way to characterize a stochastic process is to compute its moments—its means, variances, covariances, and so on. For the scalar process above, these moments might be described as:
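In standard notation, equations 1 and 2 can be sketched as follows. The symbol $\mu_t$ for the mean is an assumption, and $v_{t,k}$ is assumed to correspond to the text's $v_k$ with the time index suppressed.

$$
E(y_t) = \mu_t \qquad (1)
$$

$$
E\big[(y_t - \mu_t)(y_{t-k} - \mu_{t-k})\big] = v_{t,k} \qquad (2)
$$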

Equation 1 defines the (unconditional) mean of the process, while equation 2 defines the (unconditional) covariance structure: the variance for k = 0, and the autocovariances for k > 0. (Some distributions, such as the well-known normal distribution that takes on the shape of a "bell curve," are fully characterized by means and covariances; others require specification of higher-order moments.) Autocovariances measure the serial dependence of the process. If $v_k = 0$ for all $k \neq 0$, then the process is serially uncorrelated, which implies that past values of the process provide no information that will help predict future values. Such a process is called white noise.
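As a rough illustration of these moments, the sketch below computes sample autocovariances for a simulated white-noise series. The function name `sample_autocovariance`, the divisor n, the seed, and the sample size are illustrative assumptions, not part of the original discussion.

```python
import numpy as np

def sample_autocovariance(y, k):
    """Sample autocovariance of y at lag k >= 0 (divides by n, not n - k)."""
    y = np.asarray(y, dtype=float)
    n = y.size
    dev = y - y.mean()                      # deviations from the sample mean
    return float(np.dot(dev[k:], dev[:n - k]) / n)

# Simulated white noise: variance near 1, autocovariances near 0 for k > 0.
rng = np.random.default_rng(0)              # fixed seed, for reproducibility only
white_noise = rng.normal(0.0, 1.0, size=5000)
acov = [sample_autocovariance(white_noise, k) for k in range(4)]
```

For white noise, `acov[0]` estimates the variance and the remaining entries should be close to zero, apart from sampling error.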

The moments in equations 1 and 2 are quite general, but they are often difficult to estimate and use in practice. Time-series models, on the other hand, impose restrictions on this general structure in order to facilitate estimation, inference, and forecasting. Typically, these models focus on conditional probability distributions and their moments, as opposed to the unconditional moments in equations 1 and 2 above.

AUTOREGRESSIVE MODELS

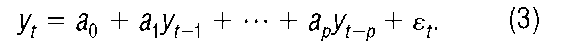

An autoregression (AR) is a model that breaks down the stochastic process $y_t$ into two parts: the conditional mean as a linear function of past values (to account for serial dependence), and a mean-zero random error that allows for unpredictable deviations from what is expected (given the past). Such a model can be expressed as:
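Using the parameter names defined in the paragraph that follows, equation 3 takes the usual form of a pth-order autoregression (a reconstruction from the surrounding definitions):

$$
y_t = a_0 + a_1 y_{t-1} + a_2 y_{t-2} + \cdots + a_p y_{t-p} + \varepsilon_t \qquad (3)
$$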

Here, $a_0$ is a constant, $a_1$ through $a_p$ are parameters that measure the specific dependence of $y_t$ on its past (or lagged) values, p is the number of past values of y needed to account for the serial dependence in the conditional mean (the lag order of the model), and $\varepsilon_t$ is a white-noise process with variance $E\varepsilon_t^2 = \sigma^2$. The value $\varepsilon_t$ is interpreted as the error in forecasting the current value of y based solely on a linear combination of its past realizations. The lag order p can take on any positive integer value, and in principle it can approach infinity. Because $y_{t-1}$ depends only on random errors dated $t-1$ and earlier, and because $\varepsilon_t$ is serially uncorrelated, $\varepsilon_t$ and $y_{t-k}$ must be uncorrelated for all t and whenever k exceeds zero.
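To make the recursion concrete, the following minimal sketch generates an AR(p) series from a given sequence of shocks. The function name `simulate_ar`, its argument layout, and the default of zero starting values are assumptions chosen for illustration.

```python
import numpy as np

def simulate_ar(a0, a, eps, y_init=None):
    """Generate y_t = a0 + a[0]*y_{t-1} + ... + a[p-1]*y_{t-p} + eps_t.

    eps    : sequence of shocks, one per generated observation
    y_init : p starting values, oldest first (defaults to zeros)
    """
    a = np.asarray(a, dtype=float)
    eps = np.asarray(eps, dtype=float)
    p = a.size
    start = np.zeros(p) if y_init is None else np.asarray(y_init, dtype=float)
    y = np.concatenate([start, np.empty(eps.size)])
    for t in range(p, p + eps.size):
        lags = y[t - p:t][::-1]             # y_{t-1}, y_{t-2}, ..., y_{t-p}
        y[t] = a0 + np.dot(a, lags) + eps[t - p]
    return y[p:]                            # drop the starting values

# Example: AR(2) driven by normally distributed white noise.
rng = np.random.default_rng(42)             # illustrative seed
series = simulate_ar(0.5, [0.6, 0.2], rng.normal(size=200))
```

Passing the shocks in explicitly, rather than drawing them inside the function, keeps the recursion deterministic and easy to check by hand.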

Equation 3 is a pth-order autoregressive model, or AR(p), of the stochastic process $y_t$. It is a complete representation of the joint probability distribution assumed to generate the random variable y at each time t. These models originated in the 1920s in the work of Udny Yule, Eugen Slutsky, and others. The first known application of autoregressions was that of Yule in his 1927 analysis of the time-series behavior of sunspots (Klein 1997, p. 261). An autoregression explicitly models the conditional mean of the process. Because the mean of the error term $\varepsilon_t$ is zero, the expected value of $y_t$, conditional on its past, is determined from equation 3 as:

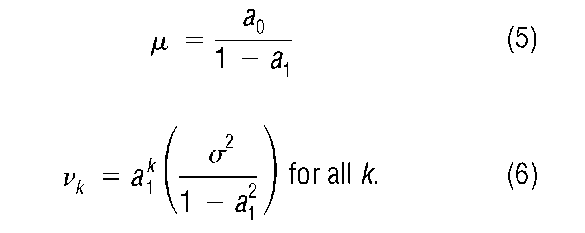

There is, however, a unique correspondence from the unconditional moments in equations 1 and 2 to the parameters in the AR model. This correspondence is most easily seen for the AR(1) process (i.e. p = 1, so that only the value of the process last period is needed to capture its serial dependence). For the AR(1) model, the unconditional mean and covariance structure are, respectively,
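For a stationary AR(1) process, the standard results for these two moments are sketched below. The symbol $\mu$ for the unconditional mean is an assumption; $v_k$ and $\sigma^2$ follow the text.

$$
E(y_t) = \mu = \frac{a_0}{1 - a_1} \qquad (5)
$$

$$
v_k = E\big[(y_t - \mu)(y_{t-k} - \mu)\big] = \frac{a_1^{k}\,\sigma^2}{1 - a_1^2}, \quad k \ge 0 \qquad (6)
$$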

This correspondence clarifies how the AR model restricts the general probability distribution of the process. (Note that a large number of unconditional moments can be concisely represented by the three parameters in the model: $a_0$, $a_1$, and $\sigma^2$.)
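The mapping from the three AR(1) parameters to the unconditional moments can be sketched in a few lines. The function name `ar1_moments` is hypothetical, and the formulas used are the standard stationary AR(1) results (mean $a_0/(1-a_1)$ and autocovariance $a_1^k\sigma^2/(1-a_1^2)$), assumed here to match equations 5 and 6.

```python
def ar1_moments(a0, a1, sigma2, max_lag):
    """Unconditional mean and autocovariances (lags 0..max_lag) of a stationary AR(1)."""
    if abs(a1) >= 1:
        raise ValueError("moments are finite only when |a1| < 1")
    mean = a0 / (1 - a1)
    variance = sigma2 / (1 - a1 ** 2)
    autocov = [variance * a1 ** k for k in range(max_lag + 1)]
    return mean, autocov

# Three parameters determine the mean and every autocovariance.
mean, autocov = ar1_moments(a0=1.0, a1=0.5, sigma2=3.0, max_lag=2)
```

With these illustrative values the mean is 2 and the autocovariances at lags 0, 1, and 2 are 4, 2, and 1, each lag shrinking the previous value by the factor $a_1$.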

STATIONARITY AND MOVING AVERAGES

A stationary stochastic process is one for which the probability distribution that generates the observations does not depend on time. More precisely, the unconditional means and covariances in equations 1 and 2 are finite and independent of time. (Technically, these conditions describe covariance stationarity; a more general form of stationarity requires all moments, not just the first two, to be independent of t.) For example, if GDP is a stationary process, its unconditional mean in year 2006 is the same as its mean in, say, 1996, or any other year. Likewise, the autocovariance between GDP in 2006 and 2005 (one year apart) is the same as the autocovariance between GDP in 1996 and 1995 (one year apart), or any other pair of years one year apart. Stationarity is an important condition that allows many of the standard tools of statistical analysis to be applied to time series, and that motivates the existence and estimation of a broad class of linear time-series models, as will be seen below.

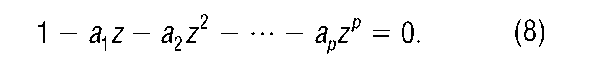

Stationarity imposes restrictions on the parameters of autoregressions. For convenience, consider once again the AR(1) model. A necessary condition for the AR(1) process $y_t$ to be stationary is that the root (z) of the following equation be greater than one in absolute value:
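Given that the text identifies the root as $1/a_1$, equation 7 can be reconstructed as:

$$
1 - a_1 z = 0 \qquad (7)
$$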

Since the root of equation 7 is $1/a_1$, this condition is identical to the condition $|a_1| < 1$. The proof of such an assertion lies beyond the scope of this article, but equation 6 shows, heuristically, why the condition makes sense. From this equation, we can see that if $a_1 = 1$, then the variance of the process approaches infinity; if $a_1$ exceeds 1 in absolute value, then the variance is negative. Neither of these conditions is compatible with a stationary process. The general condition for the stationarity of the AR(p) model is that the p roots of the following equation be greater than one in absolute value (Hamilton 1994, p. 58):

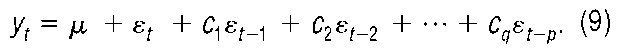
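The root condition can be checked numerically. The sketch below assumes equation 8 has the usual form $1 - a_1 z - a_2 z^2 - \cdots - a_p z^p = 0$ and uses `numpy.roots`; the function name `is_stationary` is hypothetical.

```python
import numpy as np

def is_stationary(a):
    """Check whether all roots of 1 - a1*z - ... - ap*z^p = 0 exceed one in modulus.

    a : AR coefficients [a1, ..., ap]
    Returns (flag, roots).
    """
    a = np.asarray(a, dtype=float)
    # numpy.roots expects coefficients ordered from the highest power down
    # to the constant term: [-ap, ..., -a1, 1].
    poly = np.concatenate([-a[::-1], [1.0]])
    roots = np.roots(poly)
    return bool(np.all(np.abs(roots) > 1.0)), roots

ok_ar1, _ = is_stationary([0.5])        # root is 1/0.5 = 2
unit_root, _ = is_stationary([1.0])     # root is exactly 1
ok_ar2, ar2_roots = is_stationary([0.5, 0.3])
```

In applied work a root very close to one is hard to distinguish from a unit root in finite samples, so a numerical check like this is only a first look.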

For an autoregressive process of any order that satisfies this condition, the qth-order moving average (MA) representation of the process can be written as:
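A sketch of this representation in the notation of the following paragraph, where $\mu$ (an assumed symbol) denotes the unconditional mean of the process:

$$
y_t = \mu + \varepsilon_t + c_1 \varepsilon_{t-1} + c_2 \varepsilon_{t-2} + \cdots + c_q \varepsilon_{t-q}
$$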

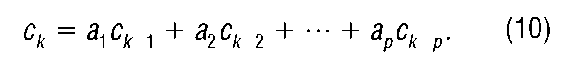

Here, $\varepsilon_t$ is the white-noise forecast error, and the parameters $c_1, c_2, \ldots, c_k$ approach 0 as k gets large. For example, using the tools of difference equation analysis, we can show that, for the AR(1) model, $c_1 = a_1$, $c_2 = a_1^2$, …, $c_k = a_1^k$, and so on. Clearly, the limit of $a_1^k$ as k gets large is zero given the stationarity condition above. The correspondence between the MA coefficients and the autoregressive coefficients in the more general AR(p) case is more complex than for the first-order case, but it can be obtained from the following iteration, for values of k that exceed 1 (Hamilton 1994, p. 260):
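The standard recursion linking the two sets of coefficients, under the added convention (an assumption here) that $c_j = 0$ for $j < 0$, is:

$$
c_k = a_1 c_{k-1} + a_2 c_{k-2} + \cdots + a_p c_{k-p}
$$

For p = 1 this reduces to $c_k = a_1 c_{k-1}$, which reproduces $c_k = a_1^k$.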

(When applying this iteration, recall that $c_0 = 1$.) As with the autoregressive form, the order of the MA process may approach infinity.
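The iteration is easy to carry out by machine. This minimal sketch assumes the recursion $c_k = a_1 c_{k-1} + \cdots + a_p c_{k-p}$ with $c_0 = 1$ and $c_j = 0$ for negative j; the function name `ma_coefficients` is hypothetical.

```python
def ma_coefficients(a, n_terms):
    """Return [c_0, ..., c_{n_terms}] implied by AR coefficients a = [a1, ..., ap]."""
    c = [1.0]                                   # c_0 = 1
    for k in range(1, n_terms + 1):
        # Sum a_{j+1} * c_{k-(j+1)} over the lags for which c has a non-negative index.
        c.append(sum(a[j] * c[k - 1 - j] for j in range(min(len(a), k))))
    return c

ar1_weights = ma_coefficients([0.5], 3)         # powers of a1
ar2_weights = ma_coefficients([0.5, 0.25], 3)
```

For the AR(1) case the weights are 1, 0.5, 0.25, 0.125, the successive powers of $a_1$ described in the text.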

The MA model is an alternative way to characterize a stochastic process. It shows that a stationary process can be built up linearly from white-noise random errors, where the influence of these errors declines the farther removed they are in the past. Indeed, Herman Wold (1938) proved that any stationary process has such a moving average representation.

Wold's theorem has an interesting implication. Suppose we start with the assumption that the stochastic process $y_t$ is stationary. We know from Wold that a moving average exists that can fully characterize this process. While such a moving average is not unique (there are many MA models and forecast errors that are consistent with any given stationary stochastic process), only one such MA model is invertible. Invertibility means that the MA process can be inverted to form an autoregressive model with lag coefficients $a_1, a_2, \ldots, a_k$ that approach zero as k gets large. Thus, the use of moving average and autoregressive models can be justified for all stationary processes, not just those limited to a specific class.

RANDOM WALKS AND UNIT ROOTS

Although economists and other social scientists mostly rely on stationary models, an interesting class of nonstationary autoregressive models often arises in time-series data relevant to these disciplines. Suppose that for the AR(1) model $y_t = a_0 + a_1 y_{t-1} + \varepsilon_t$, the parameter $a_1$ is equal to one. As seen in equation 7, such a process is nonstationary. However, the first difference of this process, $y_t - y_{t-1}$, is serially uncorrelated (the first difference of a stochastic process is simply the difference between its value in one period and its value in the previous period). If the constant term $a_0$ is zero, this process is called a "random walk." If $a_0$ is not zero, it is called a "random walk with drift." (Actually, for a process to be a random walk, its first difference must be independent white noise, which is a stronger condition than the absence of serial correlation. A process for which the first difference is serially uncorrelated, but not necessarily serially independent, is called a martingale difference.)

The change in a random walk is unpredictable. A time-series plot of such a process will appear to "wander" over time, without a tendency to return to its mean, reflecting the fact that its variance approaches infinity. If the process has, say, a positive drift term, it will tend to grow over time, but it will deviate unpredictably around this growth trend.
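A short simulation shows the mechanics: the level of the process is the running sum of drift plus shocks, while its first difference recovers those increments. The function name `random_walk`, the normal shocks, the seed, and the parameter values are illustrative assumptions.

```python
import numpy as np

def random_walk(n, drift=0.0, sigma=1.0, y0=0.0, seed=0):
    """Simulate y_t = drift + y_{t-1} + eps_t for t = 1..n, starting from y0."""
    rng = np.random.default_rng(seed)
    eps = rng.normal(0.0, sigma, size=n)
    y = y0 + np.cumsum(drift + eps)     # accumulate drift and shocks
    return y, eps

y, eps = random_walk(500, drift=0.2)
first_diff = np.diff(y)                 # y_t - y_{t-1} = drift + eps_t
```

Plotting `y` against time would show the wandering behavior around a growth trend of 0.2 per period, while `first_diff` looks like white noise shifted up by the drift.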

Although the idea of a random walk dates back at least to games of chance during the sixteenth century, the British mathematician Karl Pearson first coined the term in 1905 in thinking about evolution and species diffusion (Klein 1997, p. 271). The French mathematician Louis Bachelier (1900) led the way in using the notion of the random walk (although he did not use the term) to model the behavior of speculative asset prices. This idea was picked up by Holbrook Working in the 1930s, and it was formally developed in the 1960s by Paul Samuelson, who showed that when markets are efficient—when prices quickly incorporate all relevant information about underlying values—stock prices should follow a random walk.

The random walk is actually a special case of a more general nonstationary stochastic process called a unit root (or integrated) process. As seen above, for the AR(p) to be stationary, the roots of equation 8 must exceed one in absolute value. If at least one of these roots equals one (a unit root) while the others satisfy the stationarity condition, the process has infinite variance and is thus nonstationary. The first difference of a unit-root process is stationary, but it is not necessarily white noise. In general, if there are n < p unit roots, differencing the process n times will transform the nonstationary process into a stationary one. David Dickey and Wayne Fuller (1981) developed appropriate statistical tests for unit roots (see also Hamilton 1994, chapter 17), while Charles Nelson and Charles Plosser (1982) first applied these tools to economic issues.

ESTIMATION AND FORECASTING

The AR(p) model in equation 3 can be seen as representing all possible probability distributions that might generate the random sequence $y_t$. Estimation refers to the use of an observed sample to infer which of these models is the most likely to have actually generated the data. That is, it refers to the assignment of numerical values to the unknown parameters of the model that are, in some precise sense, most consistent with the data.

Ordinary least squares (OLS) is an estimation procedure that selects parameter estimates to minimize the squared deviations of the observed values of the process from the values of the process predicted by the model. Under certain conditions, OLS has statistical properties that are deemed appropriate and useful for inference and forecasting, such as unbiasedness and consistency.

If an estimator of unknown parameters is unbiased, its expected value is identical to the true value no matter how large the sample is. Clearly, this is a desirable property. Unfortunately, the OLS estimator of the autoregressive parameters in equation 3 is not unbiased, because the random error process $\varepsilon_t$ is not independent of the lagged values of the dependent variable. When the error and lagged dependent variables are not independent, the OLS estimator of the AR parameters will systematically underestimate or overestimate their true values.

Nonetheless, OLS remains the common method for estimating AR models, because the OLS estimator, though biased, is a consistent estimator of the actual values. With a consistent estimator of a parameter, the probability that it will deviate from the true value approaches zero as the sample size becomes large. For a stationary process, all that is needed for consistency of the OLS estimator of the autoregression is that $\varepsilon_t$ be uncorrelated with $y_{t-1}, y_{t-2}, \ldots, y_{t-p}$ for all t in the sample. As noted above, in theory this condition will hold because of the assumption that $\varepsilon_t$ is serially uncorrelated. In practice, the condition is likely to hold if a sufficient number of lags are included in the estimated model. The upshot is that OLS will provide good estimates of the AR parameters, provided the sample is sufficiently large and the model is properly specified.
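OLS estimation of an autoregression amounts to regressing the series on a constant and its own lags. The sketch below uses `numpy.linalg.lstsq`; the function name `fit_ar_ols`, the simulated AR(1) data, the seed, and the sample size are assumptions made for illustration.

```python
import numpy as np

def fit_ar_ols(y, p):
    """OLS estimates [a0, a1, ..., ap] and the residual variance for an AR(p)."""
    y = np.asarray(y, dtype=float)
    n = y.size
    # Regressor row for time t: [1, y_{t-1}, ..., y_{t-p}], for t = p..n-1.
    X = np.column_stack([np.ones(n - p)] + [y[p - j:n - j] for j in range(1, p + 1)])
    target = y[p:]
    coef, *_ = np.linalg.lstsq(X, target, rcond=None)
    resid = target - X @ coef
    sigma2 = float(resid @ resid / (n - p))
    return coef, sigma2

# Simulate a long AR(1) sample with known parameters, then estimate them.
rng = np.random.default_rng(1)                  # illustrative seed
true_a0, true_a1 = 1.0, 0.6
shocks = rng.normal(size=20000)
y = np.empty(shocks.size)
y[0] = true_a0 / (1 - true_a1)                  # start at the unconditional mean
for t in range(1, y.size):
    y[t] = true_a0 + true_a1 * y[t - 1] + shocks[t]
coef, sigma2 = fit_ar_ols(y, p=1)
```

With a sample this large the estimates should sit close to the true values, in line with consistency; in short samples the bias discussed above can be noticeable.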

In the social sciences, the primary use of estimating AR models is to help forecast future values of a random variable by extrapolating from past behavior (using the conditional mean in equation 4). The foundations for exploiting the structure of AR and MA models for forecasting are based on the work of George Box and Gwilym Jenkins (1976). Although newer techniques have supplanted the exact methods of Box and Jenkins, their work has been extremely influential.
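Forecasting with an estimated autoregression means iterating the conditional mean forward, feeding each forecast back in as if it were data. A minimal sketch follows; the function name `forecast_ar` and the example values are hypothetical.

```python
def forecast_ar(a0, a, history, steps):
    """Multi-step forecasts from an AR(p) conditional mean.

    a       : coefficients [a1, ..., ap]
    history : observed values, most recent last (needs at least p entries)
    """
    p = len(a)
    if len(history) < p:
        raise ValueError("need at least p past observations")
    path = list(history[-p:])
    forecasts = []
    for _ in range(steps):
        # Expected value given the past: a0 + a1*y_{t-1} + ... + ap*y_{t-p}
        nxt = a0 + sum(a[j] * path[-1 - j] for j in range(p))
        forecasts.append(nxt)
        path.append(nxt)            # future shocks are replaced by their mean, zero
    return forecasts

preds = forecast_ar(1.0, [0.5], [4.0], steps=3)
```

Here the forecasts are 3.0, 2.5, and 2.25, decaying toward the unconditional mean of 2 as the horizon lengthens, which is the typical pattern for a stationary process.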

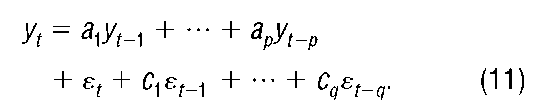

Because the true model of a particular stochastic process is unknown, forecasters must first determine the model they will estimate. The overriding rule of model determination suggested by Box and Jenkins is parsimony, which involves finding a model with the fewest parameters possible to fully describe the probability distribution generating the data. For example, while the data may, in concept, be generated by a complicated autoregressive process, a slimmed-down mixture of autoregressive and moving average components—an ARMA model—may provide the most efficiently parameterized model, and thus yield the most successful forecasts:
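In the notation used so far, an ARMA model can be sketched as follows, where the moving average coefficients $b_1, \ldots, b_q$ are symbols assumed here for illustration:

$$
y_t = a_0 + a_1 y_{t-1} + \cdots + a_p y_{t-p} + \varepsilon_t + b_1 \varepsilon_{t-1} + \cdots + b_q \varepsilon_{t-q}
$$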

Here, the autoregressive component contains p past values of the process, while the moving average component contains q past values of the white noise error; hence this model is expressed as an ARMA(p,q).

With the methods of Box and Jenkins, it is important that the data be stationary, since unit-root nonstationarity can overwhelm and mask more transitory dynamics. The autoregressive-integrated-moving average process, or an ARIMA(p,d,q) model, is an ARMA(p,q) model applied to a process that is integrated of order d; that is, the process contains d unit roots. For example, the simple random walk model above can be described as an ARIMA(0,1,0) process, because it is white noise after being transformed by taking its first difference.

EXTENSIONS TO THE BASIC AUTOREGRESSIVE MODEL

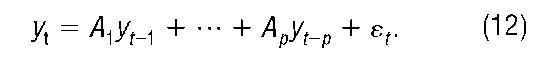

Thus far, the focus here has been on univariate autoregressive models (those having only one random variable). It is often advantageous to consider dynamic interactions across different variables in a multivariate autoregressive framework. The AR model is easily generalized to a vector stochastic process:
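A sketch of the vector form, assuming $A_0$ denotes an $n \times 1$ vector of constants and $A_1, \ldots, A_p$ the coefficient matrices described in the next paragraph:

$$
y_t = A_0 + A_1 y_{t-1} + A_2 y_{t-2} + \cdots + A_p y_{t-p} + \varepsilon_t
$$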

In this case, $y_t$ is now taken to be an $n \times 1$ vector of random variables, each of which varies over time, and each of the A matrices is an $n \times n$ matrix of coefficients. The random behavior of the process is captured by the vector white-noise process $\varepsilon_t$, which is characterized by the $n \times n$ covariance matrix $E\varepsilon_t\varepsilon_t' = \Sigma$. This model is commonly denoted as a vector autoregressive (VAR) model.

To get a clear idea of the nature of this multivariate generalization of the scalar AR model, suppose that each of the coefficient matrices ($A_k$, for k = 1, …, p) is diagonal, with zero values in the off-diagonal cells. The VAR is then simply n separate univariate AR models, with no cross-variable interactions. The important gain in using VAR models for forecasting and statistical inference comes from the type of interaction accounted for by nonzero off-diagonal elements in all the $A_k$ matrices. VAR models can also capture cointegrating relationships among random variables, which are linear combinations of unit-root processes that are stationary (Engle and Granger 1987).
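The role of the off-diagonal elements can be seen in a small first-order example. The sketch below assumes a VAR(1) of the form $y_t = A_0 + A_1 y_{t-1} + \varepsilon_t$; the function name `simulate_var1` and the numerical values are hypothetical.

```python
import numpy as np

def simulate_var1(A0, A1, eps, y0=None):
    """Generate y_t = A0 + A1 @ y_{t-1} + eps_t for shocks eps of shape (T, n)."""
    A0 = np.asarray(A0, dtype=float)
    A1 = np.asarray(A1, dtype=float)
    eps = np.asarray(eps, dtype=float)
    T, n = eps.shape
    prev = np.zeros(n) if y0 is None else np.asarray(y0, dtype=float)
    out = np.empty((T, n))
    for t in range(T):
        prev = A0 + A1 @ prev + eps[t]
        out[t] = prev
    return out

# A one-time unit shock to both variables, followed by no further shocks.
shocks = np.array([[1.0, 1.0], [0.0, 0.0], [0.0, 0.0]])
separate = simulate_var1([0, 0], [[0.5, 0.0], [0.0, 0.2]], shocks)   # diagonal A1
linked = simulate_var1([0, 0], [[0.5, 0.1], [0.0, 0.2]], shocks)     # cross effect
```

With the diagonal matrix each column evolves as its own AR(1); with the nonzero off-diagonal entry, the second variable's past feeds into the first variable's path.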

In economics, VAR models have been effective in providing a framework for analyzing dynamic systems of variables. For example, a typical model of the macro economy, potentially useful for implementing monetary policy or forecasting business cycles, might be set up as a VAR with the four variables (n = 4) being gross domestic product, the price level, an interest rate, and the stock of money. The VAR would capture not only the serial dependence of GDP on its own past, but also on the past behavior of prices, interest rates, and money. Christopher Sims (1980) produced the seminal work on the use of VAR models for economic inference, while James Stock and Mark Watson (2001) have reviewed VAR models and their effectiveness as a modern tool of macroeconometrics.

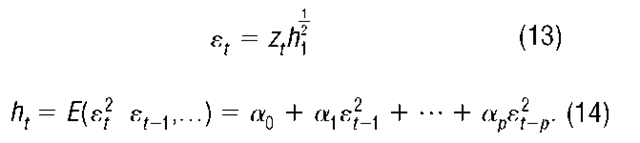

Finally, many random variables exhibit serial dependence that cannot be captured by simple, linear autoregressive models. For example, returns on speculative asset prices observed at high-frequency intervals (e.g., daily) often have volatilities that cluster over time (i.e., there is serial dependence in the magnitude of the process, regardless of the direction of change). The autoregressive conditional heteroskedasticity (ARCH) model of Robert Engle (1982) can account for such properties and has been profoundly influential. If the scalar $\varepsilon_t$ follows an ARCH(p) process, then the conditional variance of $\varepsilon_t$ varies with past realizations of the process:
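A common way to write this conditional variance, with $h_t$ and the coefficients $\alpha_0, \alpha_1, \ldots, \alpha_p$ as symbols assumed here for illustration, is:

$$
h_t = E\big(\varepsilon_t^2 \mid \varepsilon_{t-1}, \varepsilon_{t-2}, \ldots\big) = \alpha_0 + \alpha_1 \varepsilon_{t-1}^2 + \cdots + \alpha_p \varepsilon_{t-p}^2
$$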

Indeed, it is straightforward to show that an ARCH process is tantamount to an autoregressive model applied to the square of the process, in this case s2. Tim

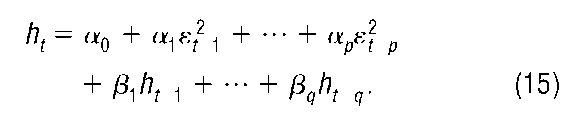

Bollerslev’s (1986) generalized ARCH (GARCH) model extends Engle’s ARCH formulation to an ARMA concept, allowing for parsimonious representations of rich dynamics in conditional variance:
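In commonly used notation, where the conditional variance $h_t$, the coefficients $\alpha_i$ and $\beta_j$, and the lag lengths are symbols assumed here for illustration, a GARCH model can be sketched as:

$$
h_t = \alpha_0 + \sum_{i=1}^{q} \alpha_i \varepsilon_{t-i}^2 + \sum_{j=1}^{p} \beta_j h_{t-j}
$$

Setting every $\beta_j$ to zero recovers the ARCH case.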

Even this model has been extended and generalized in many ways. Numerous applications of the GARCH family of models in economics, finance, and other social sciences have substantially broadened the reach of autoregressive-type models in the statistical analysis of time series.
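The simplest member of this family can be simulated directly. The sketch below assumes a GARCH(1,1) with conditional variance $h_t = \alpha_0 + \alpha_1 \varepsilon_{t-1}^2 + \beta_1 h_{t-1}$ and $\varepsilon_t = \sqrt{h_t}\, z_t$ for standardized shocks $z_t$; the function name `simulate_garch11` and the parameter values are hypothetical.

```python
import numpy as np

def simulate_garch11(alpha0, alpha1, beta1, z, h0=None):
    """Simulate eps_t = sqrt(h_t) * z_t with h_t = alpha0 + alpha1*eps_{t-1}^2 + beta1*h_{t-1}.

    z  : standardized shocks (mean zero, unit variance)
    h0 : initial conditional variance; defaults to the unconditional variance
    """
    z = np.asarray(z, dtype=float)
    if h0 is None:
        if alpha1 + beta1 >= 1:
            raise ValueError("unconditional variance requires alpha1 + beta1 < 1")
        h0 = alpha0 / (1 - alpha1 - beta1)
    h = np.empty(z.size)
    eps = np.empty(z.size)
    h[0] = h0
    eps[0] = np.sqrt(h[0]) * z[0]
    for t in range(1, z.size):
        h[t] = alpha0 + alpha1 * eps[t - 1] ** 2 + beta1 * h[t - 1]
        eps[t] = np.sqrt(h[t]) * z[t]
    return eps, h

rng = np.random.default_rng(7)                  # illustrative seed
returns, cond_var = simulate_garch11(0.2, 0.3, 0.5, rng.normal(size=1000))
```

Setting `beta1` to zero gives an ARCH(1) process. Large shocks raise the next period's conditional variance, which is what produces the volatility clustering described above.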