This topic discusses more advanced scenarios involving over-provisioning and guaranteed rates that are used in large-scale queues to differentiate shaping rates. This topic touches on the debate about the optimal size of the queues and different memory allocation techniques. Finally, the topic details the famous (or infamous) RED (Random Early Discard ) concept.

Hierarchical Scheduling

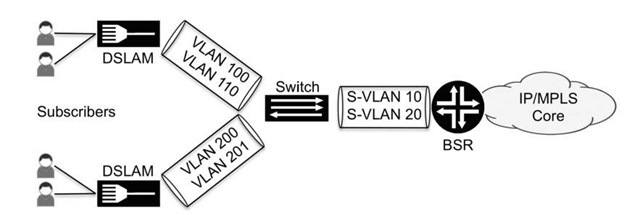

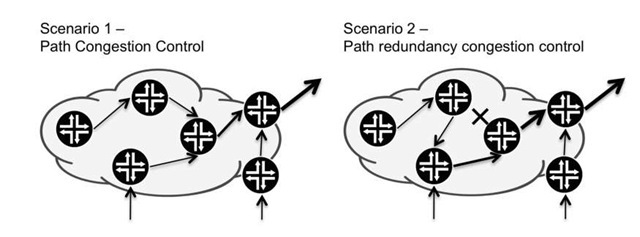

But modern QOS scenarios and implementations need to support multiple levels of scheduling and shaping. A situation when this is common is a subscriber scenario that provisions end users. Consider the topology in Figure 8.1.

The Broadband Service Router (BSR) in Figure 8.1 has a 10-Gbps Gigabit Ethernet link to the switch. The two connections between this switch and the two DSLAM switches are both Gigabit Ethernet links. The subscribers have traffic contracts with different services and rates. To be able to have a functional traffic provisioning model, rates to and from the subscribers need to accommodate service and contracts. Traffic from the subscribers must be shaped to align with the service contract, and traffic to the subscribers must be shaped according to the supported model. To meet these conditions, a hierarchical shaping model must be created on the BSR router link to and from the aggregation switch. At the port level, traffic to the switch is first shaped to 1 Gbps on the two S-VLANs, S-VLAN 10 and S- VLAN 20 in Figure 8.1. The next layer of shaping occurs on the VLANs that exist within each S-VLAN. For example, some users might have 12-Mbps contracts, while others have 24Mbps. In Figure 8.1. these VLANs are 100 and 110 on the upper DSLAM switch and 200 and 201 on the lower DSLAM.

Figure 8.1 Aggregated hierarchical rate provisioning model

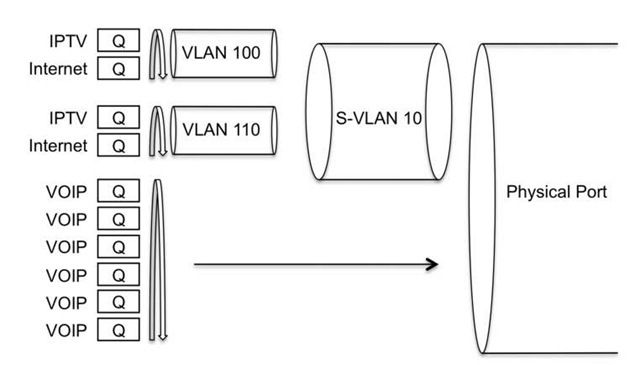

Figure 8.2 Hierarchical scheduling model

The next level of scheduling is the ability to provide a differentiated model to classify different types of traffic, for example, VOIP, IPTV, and best- effort Internet traffic, by scheduling each into a different queue. Figure 8.2 shows the hierarchical scheduling and shaping model for this scenario.

Figure 8.2 show two levels of aggregated shaping and scheduling. Routers that support hierarchical scheduling and shaping face issues not only with back-pressure in levels from the port that carries SVLAN and VLAN towards the subscribers, but also, by the nature of the service, they need to support a large amount of traffic and they need to be able to scale.

QOS scheduling on a large scale, with a large number of units and schedulers, has to be able to propagate important traffic. Consider a case in which you have 1000 subscribers on an interface, with one logical interface (that is, one unit) per subscriber. In the case of congestion, the scheduling algorithm establishes that each unit is visited in turn in case they have packets in their queues.

Figure 8.3 Propagation of priority queues

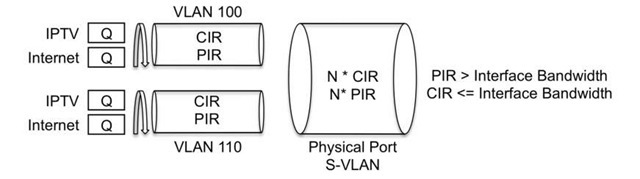

Figure 8.4 The PIR/CIR model

This mechanism can result in variable delay, depending on how many users have packets in their queues. Variable delay is not desirable for users who have triple-play services with, for example, VOIP. The scheduling must be able to propagate information about the priority of the queues before all the subscriber queues are visited in order. Figure 8.3 shows how all users queues with high- priority VOIP traffic are scheduled before each individual user is visited.

A second scenario for aggregated hierarchical rate and scheduling models involves over-provisioning. A common scenario is to balance oversubscription against guaranteed bandwidth for the users. This concept is most often referred to as Peak Information Rate (PIR) versus Committed Information Rate (CIR). The sum of all the CIRs on an interface cannot exceed the interface rate or the shaped rate, but the PIR is allowed to exceed these rates. If all the bandwidth is not used, subscribers and shaped VLANs are allowed to reuse that bandwidth, as illustrated by Figure 8.4.

Consider a service provider who sells a real-time service such as IPTV Video On Demand (VoD- and who also sells best- effort Internet. The IPTV bandwidth is about 6 Mbps per user. The Internet service is committed to be about 4 Mbps. The result is a CIR of 10 Mbps per subscriber. When no other users are transmitting, the PIR is allowed to be 20 Mbps. This bandwidth can be achieved if no other users are using their CIR portion of the bandwidth. So this over-provisioning model has a PIR of 2 : 1. Traditionally, the CIR is always lower than the PIR. Using hierarchical scheduling and rates, one possible service can be to shape Internet service for users to a certain value. The charge for Internet access is a fixed price each month. For subscribers who also have IPTV service, the allowed VOD rate could be much higher than the contract because sending a movie over the VOD service results in direct payment to the operator. Therefore, the VOD rate is scheduled and shaped in a hierarchical fashion, separate from the subscriber Internet rate contract.

Queues Lengths and Buffer Size

One debate that comes and goes concerns the optimal size of the queue. It is sometimes called the small vs. big size buffer debate. This debate can be viewed from a macro level or from a micro level.

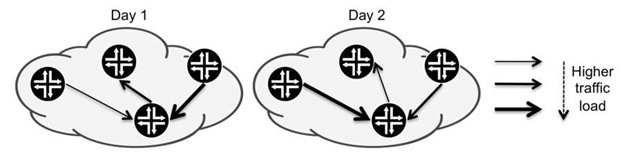

First, let us consider the macro debate, which centers on the question of how to design congestion avoidance in the big picture. On one side of this debate are the supporters of big buffers. The designers who favor this approach argue that in packet-based networks on which QOS is implemented using per-hop behavior (PHB), routers and switches make all the decisions on their own, with no awareness of the end-to-end situation. Hence, here an end-to-end QOS policy is simply not doable. Traffic destinations change as the network grows or shrinks on daily basis. Thus, the rerouting that occurs as the network continually changes size invalidates any attempt to predesign paths, with the result that any traffic modeling easily breaks down. In this design, end-to-end RTT estimates are hard to predict, so it is a good idea for all resources to be available. The solution is to go ahead and add memory to expand the capability of storing traffic inside a router. One benefit of this is that big buffers can temporarily absorb traffic when traffic patterns or traffic paths change. The idea is that a combination of well-designed QOS policy and tuned bandwidth and queue length works only if the traffic patterns and paths are somewhat static and easy to predict. In the macro situation, rather than having an end-to-end QOS policy, the QOS policy can be adapted to define specific traffic classes and to align with the capabilities of the routers. If one router can support a queue length of hundreds of milliseconds, while another router in the traffic path can support up to seconds of traffic buffering, the QOS policy can be tailored to each router’s capabilities. In this way, the policies can be based on each router’s functions and capabilities, because traffic and other conditions can vary widely from router to router and day by day, making end-to-end policies ineffective or impossible. Figure 8.5 illustrates the main argument for defining QOS policies on each individual router.

The opposing view is that end-to-end QOS policy is the only way for QOS to be effective, and hence buffers must be tuned to be a part of an estimated end-to-end delay budget. This means that for the possible congestion paths in the network, prioritizing the expected traffic is not enough. It is also necessary to tune the buffers to be able to carry the traffic and at the same time be within the end-to-end delay figures.

Figure 8.5 Every router stands on its own regarding QOS

Figure 8.6 End-to-end QOS policy for certain traffic classes

The estimation of what a fair share of the path and bandwidth is needs to take into account both the steady-state situation and predicted failure scenarios along the traffic paths, and must also anticipate possible congestion points. The rule to be followed with this approach is to control the rate of traffic entering the network. The amount of traffic that needs to be protected cannot be greater than what the network can carry. To achieve this service, the convergence and backup paths need to be calculated. A common way to control the paths is to use RSVP LSP signaling, implementing object constraints regarding bandwidth and ERO objects to control the end-to-end delay and bandwidth, because LSPs allow complete control over the path that the traffic takes (see Figure 8.6).

When considering these two approaches, the reality is probably that good queue-length tuning needs to use a little of both alternatives. Real-time applications obviously need to have reliable control of traffic paths, which means that the number of congestion points need to be minimal and the end–o-end delay, in milliseconds, needs to be predictable. For these applications, designers generally think that buffers should be small and paths should be well designed. Also, in case of a network path outage, equivalent convergence backup paths should be included in the design.

Here is a concrete example of balancing the queue length and buffer size. A transit router has four 10-Gigabit Ethernet (10GE) links. (A 10GE link is 10 000 Mbps.) Transforming this to bytes gives 1000/8, or 1250 MBps. Transforming this to milliseconds gives 1250 x 1000, or 1250KB. This means that 1 millisecond of traffic is 1250KB. If the router supports 100 milliseconds of traffic buffering, the buffer allocated to the 10GE interface is 100 x 1250 KB, or 125 000 000 bytes of memory.

Under steady conditions, the estimate is that a router has one duplex path for transit voice traffic, and the volume for one direction is 500 Mbps. If a reroute event occurs, one more duplex path of voice traffic transits the router. In case of congestion, the core link may suddenly find itself carrying 1000 Mbps of voice traffic, or 125KB/ms. This traffic volume can be handled well by a router capable of buffering 100 milliseconds of traffic on the 10GE interface.

In the first design approach, in which each router handles QOS on its own, the calculation for QOS ends here. The second design approach demands that buffers be adapted to the end–o-end RTT and that backup paths be well controlled, for example, by link-protection schemes. If a reroute scenario demands bandwidth, and ERO cannot be achieved, the routing and forwarding tables cannot deliver the traffic. The result is that RSVP would re-signal the ingress and egress points of the LSP to achieve the ERO requirements.

One possible design compromise is to use RSVP LSP paths for loss-sensitive traffic to control the buffer length and paths, effectively establishing a predictable delay and handling the bulk of the best-effort traffic with the available buffer space on each router in the network.

Now, let us consider the micro view, which considers the best behavior for the majority of applications and protocols regarding buffer tuning. Most designers agree that delay-sensitive and loss – sensitive traffic favors a design with smaller buffer lengths. The big difference between the micro and macro views is probably the dimensioning of the queue size for the TCP best-effort traffic. That is, should we use short queues so that bad news can be relayed quickly? The result would be earlier retransmissions or even the avoidance of too much congestion from transmitting hosts. In theory, retransmissions within a session are limited to a few packets because the queue should not be very full. The whole purpose of using smaller queue sizes is to avoid a "bigger mess" caused by delays in delivering bad news. With small queues, the delay is relatively low and jitter is not much of an issue, but the volume of packet retransmissions is higher. Web applications that are short-lived are more sensitive to delay because response time must be quicker since there is not much cwnd window build-up and not many bytes of traffic are transferred. On the other hand, should the queue be big to be able to smooth out bursts, but at the cost of increased delay or even a huge jitter? Having large queues would avoid retransmissions and allow smoother traffic delivery, and they ignore the delay factor because no delay occurs if the sessions exist just to transfer a file.

You can never have enough buffers to smooth the bursty traffic of all possible best-effort users in today’s Internet or large enterprise networks. Consider TCP-based traffic that is elastic with regards to its congestion and receiving windows. The memory necessary to be able to buffer all user streams is equal to all possible active TCP sessions, and the sessions of traffic must be multiplied by the maximum size of each session’s TCP window. In most situations, providing this amount of memory is unrealistic.

Dynamically Sized versus Fixed-Size Queue Buffers

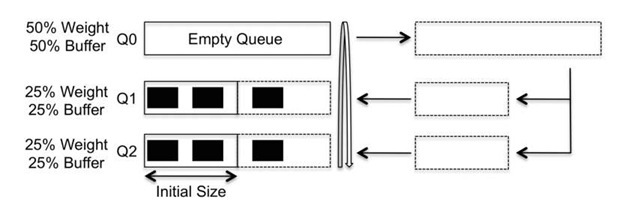

As we have discussed earlier, others queues can be configured to use bandwidth that is not being used by higher-priority or active queues. This means, e.g., that when saturation and packet drops are expected with a best- effort queue, the queue can receive better service if no assured-forwarding or expedited-forwarding packets needs the allocated bandwidth and scheduling time. Best-effort service is normally not rate-limit or policed to a conform to a specific rate, so if a best-effort queue gets more scheduling time because other queues have nothing to remove, this is simply the nature of best- effort traffic and oversubscription.

But the question arises about whether you should share buffers also. That is, should you allow the queue size memory quota to be reused if it is not needed by other queues? The advantages with this, of course, are being able to absorb a greater burst of packets and to reuse the memory space. Designer who favor this approach are supporters of having routers with large queuing buffers establish their own QOS policy.

The term most commonly used to describe this method is memory allocation dynamic (MAD). While the logic of implementing MAD seems fairly simple, it is actually relatively complex because the queue length status must be taken into consideration when scheduling turns allocate bandwidth based on the deficit counter and credit state. An additional algorithm is required to reallocate buffers for the queues currently not transmitting, to add entries to those queues.

Figure 8.7 illustrates how MAD operates. Queue 0 (Q0) contains no entries, while Queues 1(Q1) and 2 (Q2) are approaching a trail-drop situation because these buffers are close to overflowing. The extra scheduling (bandwidth) cycles and buffer space helps the queues when they are highly saturated.

It is worth noting that some designers do not like being able to share both buffer space and bandwidth, because the delay obviously cannot be predicted. The most common design is to allocate a queue for a best-effort traffic type class, thereby offering the ability to get more buffering space in case of need, but to have a more traditional static buffer length for expedited-forwarding traffic class as a way to keep the delay values below a strict margin.

Figure 8.7 Dynamic buffer allocation example

RED – Random Early Discard

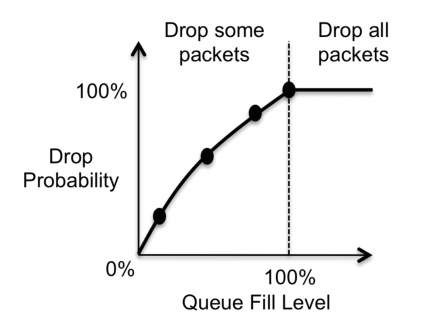

Throughout this topic, we have highlighted the applicability of RED and WRED for TCP flows and as a way to differentiate between different traffic types inside a queue. Let us now dive into the concepts behind RED and its variations. Queuing and scheduling handle bandwidth and buffering once congestion exists. Once packets are in the queue, they are either transmitted after spending some time in the queue or are aged out and dropped because the queue has no scheduling cycles. But what about dropping a packet before it enters a queue or dropping a packet in the queue before it gets aged out? What congestion avoidance tools are available? There are two main traffic congestion avoidance tools, policers.

As described earlier, policing can be "hard" (that is, packets are dropped if traffic exceeds a certain rate) or "soft" (packets are re-marked if traffic exceeds a certain rate). Hard policing can ensure that delay-sensitive traffic entering at the edge of the network does not exceed the rate supported across the core. It can also be used to rate- limit best -effort traffic that is being transmitted into the network maliciously, for example, during an ICMP attack. And policing can be used with RED. So what is this RED?

RED is a tool that provides the ability to discard packets before a queue becomes full. That is, RED can drop packets in the queue before the congestion becomes excessive, before packets are aged because the queue is overflowing, and hence before traffic stalls. Floyd and Jacobson first proposed RED in 1993 in their paper "Random Early Detection Gateways for Congestion Avoidance". The idea behind RED is to provide feedback as quickly as possible to responsive flows and sessions, which in reality means TCP-based sessions. The thinking behind RED is to flirt with a TCP congestion avoidance mechanism that kicks in when duplicate ACKs are received, acting by decreasing the congestion window (cwnd). The packet drops are not session related; rather, they are distributed more fairly across all sessions. Of course, when a session has more packets in the buffer, it has a greater chance of being exposed to drops than lower-volume sessions.

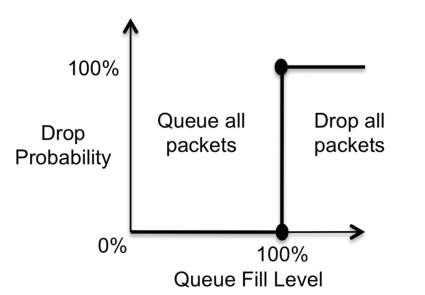

Let’s first examine the default queue behavior, tail drop. A queue has a specific length, which can be translated into how many packets it can accommodate. When a packet arrives to be put into the queue, it is either dropped if the queue is full or stored in the queue. This behavior is somewhat analogous to a prefix-limiting policy that can be applied to any routing protocol, in which new routes are accepted until a limit is reached, and then no new routes are accepted.

In Figure 8.8, the vertical axis represents a drop probability and the horizontal axis represents the queue fill level, and both parameters can range from 0 to 100%. As illustrated here, the drop probability is always 0% unless the queue is full, in which case the probability jumps straight to 100%, meaning that once the queue is full, all packets are dropped. This standard tail-drop behavior makes sense: why drop a packet if the queue has room to store it?

Figure 8.8 Tail-drop behavior

Figure 8.9 RED drops

RED allows the standard tail- drop behavior to be changed so that a packet can be dropped even if the queue is not full (see Figure 8.9). It sounds somewhat bizarre that the queue can store the packet but that the incoming packet can still be dropped.

Two scenarios can justify this behavior. The first, present with TCP sessions, is a phenomenon called the TCP synchronization issue, in which massive traffic loss triggers the TCP back-off mechanism. As a result, sessions enter the initial state of TCP slow start and they all start to ramp up their congestion windows simultaneously. The second scenario occurs when different types of traffic share the same queue and differentiating between the traffic types is necessary.