INTRODUCTION

Evaluating the quality and effectiveness of user interaction in networked collaborative systems is difficult. There is more than one user, and often the users are not physically proximal. The “session” to be evaluated cannot be comprehensively observed or monitored at any single display, keyboard, or processor. It is typical that none of the human participants has an overall view of the interaction (a common source of problems for such interactions). The users are not easily accessible either to evaluators or to one another.

In this article we describe an evaluation method that recruits the already-pervasive medium of Web forums to support collection and discussion of user critical incidents. We describe a Web forum tool created to support this discussion, the Collaborative Critical Incident Tool (CCIT). The notion of “critical incident” is adapted from Flanagan (1956), who debriefed test pilots in order to gather and analyze episodes in which something went surprisingly well or badly. Flanagan’s method has become a mainstay of human factors evaluation (Meister, 1985). In our method, users can post a critical incident report to the forum at any time. Subsequently, other users, as well as evaluators and system developers, can post threaded replies. This improves the critical incident method by permitting follow-up questions and other conversational elaboration and refinement of original reports.

In the balance of this article, we first describe the project context for our study, the development of a virtual school infrastructure in southwestern Virginia. We next describe the challenge of evaluating usability and effectiveness in this context. These problems sparked the idea for the Collaborative Critical Incident Tool (CCIT). We discuss the design of the tool as used during two academic years. We illustrate the use of the tool with sample data. Finally, we discuss the design rationale for the CCIT, including the design tradeoffs and our further plans.

BACKGROUND: THE LINC PROJECT

Our study was carried out in the context of the “Learning in Networked Communities” or LiNC Project. This project was a partnership between Virginia Tech and the public schools of Montgomery County, Virginia, U.S. The objective of the project was to provide a high-quality communications infrastructure to support collaborative classroom learning. Montgomery County is located in the rural Appalachian region of southwestern Virginia; in some schools, physics is only offered every other year, and to classes of only three to five students. Our initial vision was to give these students better access to peers through networked collaboration (Carroll, Chin, Rosson, & Neale, 2000).

Over six years, we developed and investigated the virtual school, a Java-based networked learning environment, emphasizing support for the coordination of synchronous and asynchronous collaboration including planning, note taking, experimentation, data analysis, and report writing. The central tools were a collaborative notebook and a workbench. The notebook allowed students to organize projects into shared and personal pages; it could be accessed collaboratively or individually by remote or proximal students. The software employed component architecture that allowed notebook “pages” of varying types (e.g., formatted text, images, shared whiteboard). The workbench allowed groups including remote members to jointly control simulation experiments and analyze data. The virtual school also incorporated e-mail, real-time chat, and videoconferencing communication channels. (See Isenhour, Carroll, Neale, Rosson, & Dunlap, 2000; Koenemann, Carroll, Shaffer, Rosson, & Abrams, 1999).

MULTIFACETED EVALUATION

Some of the greatest challenges in the LiNC Project pertained to evaluation. In the situations of greatest interest, students were working together while located at different school sites, some more than 15 miles apart. Usability engineering and human factors engineering provide many techniques for evaluating traditional single-user sessions: observing and classifying portions of user activity, non-directively prompting think-aloud protocols, logging session events, interviewing, and surveying. The problem in the case of the virtual school is that the “session” is distributed over the whole county.

Our approach to this challenge was to gather many sorts of traditional evaluation data and to try to synthesize and synchronize this data into a coherent multifaceted record. We transcribed video records from each school site, directly incorporating field notes made by observers during the activity recorded. We incorporated server logs into this data record, based on matching time stamps. Finally, we integrated the data records for groups that collaborated over the network during the activity (see Neale & Carroll, 1999).
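The time-stamp matching described above can be sketched in a few lines of Python. This is only an illustration, not the project's actual tooling: the `Event` fields, the source labels, and the sample events are all made up for the example, and it assumes that every source already shares a common clock and that each stream is sorted by time.

```python
import heapq
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Event:
    time: datetime  # when the event occurred (assumes a shared clock)
    source: str     # hypothetical label: "video", "field_note", "server_log"
    site: str       # school site where the event was recorded
    text: str       # transcribed speech, observer note, or log line


def merge_records(*streams):
    """Merge per-source event lists, each already sorted by time, into one record."""
    return list(heapq.merge(*streams, key=lambda e: e.time))


# Made-up sample events, for illustration only.
video = [
    Event(datetime(1999, 5, 13, 10, 0, 5), "video", "site_a", "Student opens the notebook"),
    Event(datetime(1999, 5, 13, 10, 2, 0), "video", "site_a", "Student types in chat"),
]
notes = [
    Event(datetime(1999, 5, 13, 10, 1, 0), "field_note", "site_a", "Group seems unsure what to do next"),
]
logs = [
    Event(datetime(1999, 5, 13, 10, 0, 7), "server_log", "site_b", "notebook page opened"),
]

record = merge_records(video, notes, logs)
for event in record:
    print(event.time.time(), event.source, event.site, event.text)
```

Records from collaborating sites can be passed in as additional streams, so a single merged list covers a whole distributed session.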

The multifaceted event record, however, focuses on making sense of moment-by-moment user-interaction scripts. This is critical to identifying usability problems. However, it does not address larger questions about differences in values and world views among participants, including project members.

DESIGN OF THE CCIT

The CCIT is a threaded discussion forum. The root of each thread is a critical incident report, consisting of a description of a critical incident and an author comment. Users can post comments on a critical incident report, and comments on comments. Authors of reports and comments supply shorthand descriptions, which serve as names in an indented-list map of the critical incident database; in the map, each shorthand name is a link anchor providing single-click access to the corresponding report or comment.
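One way to picture this structure is as a small tree, with the report at the root and comments nested beneath it. The Python sketch below is an assumption-laden illustration, not the CCIT's implementation: the class names, field names, and sample entries are hypothetical, and the indented map is rendered as plain text rather than as hyperlinks.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Comment:
    name: str    # author-supplied shorthand description
    author: str
    body: str
    replies: List["Comment"] = field(default_factory=list)  # comments on this comment


@dataclass
class IncidentReport:
    name: str            # author-supplied shorthand description
    author: str
    description: str     # what happened
    author_comment: str  # the author's reflection on the incident
    comments: List[Comment] = field(default_factory=list)


def indented_map(report):
    """Return the thread as a list of names, indented by reply depth."""
    lines = [report.name]

    def walk(items, depth):
        for item in items:
            lines.append("  " * depth + item.name)
            walk(item.replies, depth + 1)

    walk(report.comments, 1)
    return lines


# Hypothetical thread used only to show the shape of the map.
report = IncidentReport("Sample incident", "teacher_1", "description text", "comment text")
first = Comment("Re: Sample incident", "evaluator_1", "A follow-up question")
first.replies.append(Comment("Re: Re: Sample incident", "teacher_1", "An answer"))
report.comments.extend([first, Comment("Another view", "developer_1", "A second reply")])

print("\n".join(indented_map(report)))
```

In a Web forum, each line of the map would be rendered as a link to the page for that report or comment.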

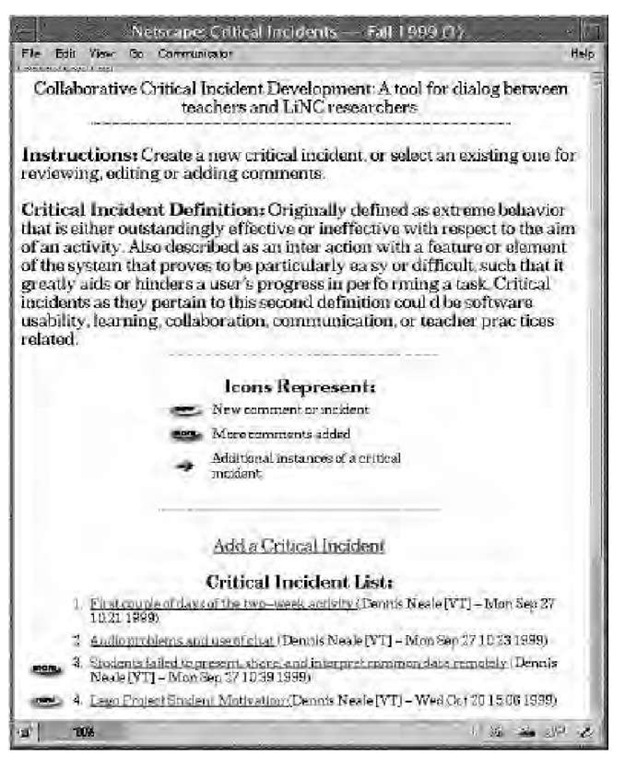

The first screen displays a statement of purpose and a definition of critical incident, a list of the critical incidents currently posted (listed by their author-supplied names), and a link to add a new critical incident (Figure 1). When a given user selects a posted critical incident for the first time, the critical incident description and author comment are displayed, and the user is asked to rate the critical incident on a 7-point scale, anchored by “not critical” and “critical” with respect to usability, learning, collaboration, communication, and/or teacher practices.

The 7-point criticality rating is indicated by selecting a radio button and then saving the rating, which also triggers a hyperlink to the discussion forum for that critical incident. The discussion forum displays the critical incident name, description, and author comment at the top of the page, followed by a table of the obtained criticality ratings from all users who have viewed that report. Below this is a link to “Add comment,” followed by an indented list mapping of the discussion to that point. Users can access each posted discussion item from a critical incident’s discussion forum. They can also move around the discussion from the pages displaying individual discussion items.
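The rating step and the ratings table can be pictured with a short Python sketch. It is an illustration only, not the CCIT's code: the class name `RatingTable`, its methods, and the user labels are hypothetical, and it assumes one stored rating per user because the rating form is shown only on a user's first viewing of a report.

```python
from collections import Counter
from statistics import mean

SCALE = range(1, 8)  # 1 = "not critical" ... 7 = "critical"


class RatingTable:
    """Criticality ratings for one critical incident report (hypothetical helper)."""

    def __init__(self):
        self._by_user = {}

    def save(self, user, rating):
        """Store a user's rating; return False if that user has already rated."""
        if rating not in SCALE:
            raise ValueError("rating must be an integer from 1 to 7")
        if user in self._by_user:
            return False
        self._by_user[user] = rating
        return True

    def counts(self):
        """Number of users who chose each point on the scale."""
        tally = Counter(self._by_user.values())
        return {point: tally.get(point, 0) for point in SCALE}

    def mean(self):
        """Mean rating over all users who have rated (requires at least one)."""
        return mean(self._by_user.values())


# Made-up ratings, for illustration only.
table = RatingTable()
table.save("user_a", 5)
table.save("user_b", 4)
table.save("user_c", 6)
print(table.counts())
print(table.mean())
```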

Figure 1. Main page for Collaborative Critical Incident Tool. A statement of purpose and definition of critical incident are displayed permanently with a key to special symbols (new comment or incident, more comments added to an incident report already posted, additional instances of a critical incident). Below this orientational information is the list of critical incidents currently posted — listed by their author-supplied names (only the top of the list is visible in the figure). At the bottom of the list is a link to add a new critical incident.

Users can reply to a critical incident or to a comment using a form that prompts for a name and a text pane that accepts ASCII or HTML. We used the “Re:” convention, common in e-mail clients, for suggesting a default comment name, and assumed ASCII text as the default. We wanted to encourage users to bring additional evaluation data into these discussions, so we provided a check box to indicate that the comment describes another occurrence of the critical incident. Comments that include further critical incident data are subsequently marked in the indented list display of the discussion by being prefixed with a special icon. Adding a critical incident report also triggers a form, in this case consisting of a prompt for a name and two text panes, for a critical incident description and author comment.
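The two defaults in the reply form (a suggested “Re:” name and ASCII text) might look like the following Python sketch. The function names are hypothetical, and two details are assumptions rather than documented CCIT behavior: the sketch does not stack a second “Re:” onto a name that already has one, and it escapes ASCII input and wraps it in a `<pre>` element for display.

```python
import html


def default_reply_name(parent_name):
    """Suggest a comment name using the e-mail style "Re:" convention."""
    if parent_name.lower().startswith("re:"):
        return parent_name  # assumption: avoid "Re: Re: ..." chains
    return "Re: " + parent_name


def render_body(text, is_html=False):
    """Prepare a comment body for display; ASCII is the default format."""
    if is_html:
        return text  # the author marked the body as HTML, so pass it through
    # Plain text: escape markup characters and preserve line breaks.
    return "<pre>" + html.escape(text) + "</pre>"


print(default_reply_name("Bridge collaboration"))
print(render_body("results: 36 > 20"))
```

A real forum that accepts HTML from users would also need to sanitize it before display; that step is left out of this sketch.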

The rating display appears only on a user’s first viewing of a critical incident. Subsequently, selecting a critical incident name in the list of critical incidents on the initial screen causes a direct jump to that critical incident’s discussion forum. The list of critical incidents is annotated with a “new” icon when a critical incident has not yet been viewed by the user, with a “more” icon when the critical incident has been viewed before but items have since been added to its discussion, and with an “additional instance” icon when a comment has been author-classified as including additional critical incident data.
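The annotation rules for the incident list reduce to a small per-user decision, sketched here in Python. The data classes, the integer time stamps, and the function name `icons_for` are hypothetical, and the sketch assumes that the “additional instance” icon may appear alongside “new” or “more”, which the description above does not settle.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Item:
    posted_at: int                        # hypothetical logical time stamp
    is_additional_instance: bool = False  # author ticked the check box


@dataclass
class Incident:
    name: str
    posted_at: int
    items: List[Item] = field(default_factory=list)  # discussion items


def icons_for(incident, last_viewed: Optional[int]):
    """Icons shown next to an incident for one user.

    last_viewed is the time of the user's most recent visit to the
    incident, or None if the user has never opened it.
    """
    icons = []
    if last_viewed is None:
        icons.append("new")
    elif any(item.posted_at > last_viewed for item in incident.items):
        icons.append("more")
    if any(item.is_additional_instance for item in incident.items):
        icons.append("additional instance")
    return icons


incident = Incident("Sample incident", 1, [Item(2), Item(5, is_additional_instance=True)])
print(icons_for(incident, None))
print(icons_for(incident, 3))
```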

The initial screen also contains notification and configuration functions. The notification function provides a form to create an e-mail notification that a critical incident report has been posted. The configuration function allows users to customize a variety of display parameters and privileges. Groups of contributors, administrators, and viewers can be defined, and restrictions on re-editing contributed incidents and comments can be defined.
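The group and re-editing settings could be modeled roughly as follows. This Python sketch is a guess at one reasonable policy, not a description of the CCIT's configuration options: the class `ForumConfig`, its fields, and the specific rules (administrators may always edit, contributors may edit only their own items and only when re-editing is allowed, viewers may only read) are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class ForumConfig:
    administrators: Set[str] = field(default_factory=set)
    contributors: Set[str] = field(default_factory=set)
    viewers: Set[str] = field(default_factory=set)
    allow_reedit: bool = True  # may authors re-edit their own postings?

    def can_view(self, user):
        return user in (self.administrators | self.contributors | self.viewers)

    def can_post(self, user):
        return user in (self.administrators | self.contributors)

    def can_edit(self, user, item_author):
        if user in self.administrators:
            return True
        # Contributors may change only their own items, if re-editing is enabled.
        return self.allow_reedit and user == item_author and self.can_post(user)


config = ForumConfig(
    administrators={"evaluator_1"},
    contributors={"teacher_1", "teacher_2"},
    viewers={"guest"},
    allow_reedit=False,
)
print(config.can_post("guest"))
print(config.can_edit("teacher_1", "teacher_1"))
```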

EXAMPLE OF A CRITICAL INCIDENT REPORT

Figure 2 presents a typical example of a critical incident report. In this case, a middle school teacher posted a comment about a group of her students working on a bridge design project with a group of high school students at a different school site. (In our study, all of the critical incidents pertained to aspects of new collaborative learning activities.) She described an episode in which she was surprised at the engagement and initiative her student displayed in collaborating with a group of high school students. She reflected on sources of her student’s motivation, specifically on competitiveness, in trying to explain this episode, and concluded that the middle and high school students were functioning more effectively as a group than she had previously realized.

The context for this critical incident report included several other reports and entrained discussions addressed to perceived problems in the networked collaboration among students in a middle school and a high school. Students and teachers in both schools felt that their remote counterparts were not contributing enough. Interestingly, the underlying issue was more a matter of collaborative awareness than of cooperation: Session logs and in-class observations showed that quite a lot of relevant work and interaction was taking place. The teachers had concluded that the collaboration had broken down because their students were not videoconferencing, but in fact, students were using chat to discuss hypotheses and bridge construction materials. The researchers used the CCIT to share this information.

This example demonstrates the utility of critical incident discussions in developing shared understandings of usage experiences in networked collaborative systems. The incident described would have been difficult for the other teachers or for the researchers to fully understand without this type of extended and enriched discussion. Although other methods, such as interviewing, can elicit rich information, the other teachers or the researchers would have had to ask just the right questions. This is particularly difficult for teachers as users, since teachers work in relative isolation from professional peers; not even colleagues within a school know one another’s classroom context in detail (Tyack & Cuban, 1995). A total of nine people (including teachers and researchers, the latter comprising both evaluators and software developers) rated and participated in the discussion evoked by this critical incident report. On the criticality scale of 1 to 7, participants’ mean rating was 4.8. Following the initial posting, evaluators made five comments, teachers contributed four, and developers two.

Figure 2. Example critical incident drawn from the Spring 1999 database

Critical Incident: Bridge collaboration – the continuing saga

Author: Mrs. Earles [Blacksburg Middle School]

Created: Thu May 13 10:18 1999

Description:

Yesterday, the group of three boys in my classroom who are working with two of Mark’s kids actually tested their bridge in class. Shanan recorded it digitally for the benefit of the partners at Blacksburg High School (BHS). With a little prompting from me, one of the group members got on the VS early this morning to post their results. I was expecting a cursory sentence or two but he took his job far more seriously and actually drew a picture of their bridge to go along with the explanation. He then wrote out the procedure they had used so the partners at BHS could compare their methods. He was disappointed that the digital pictures weren’t there because he wanted the BHS guys to see what happened.

Comments:

Several things surprised me about this scenario (dare I use the word)? The guys here and one in particular were really surprised their bridge held up 36 pounds of weight – he expected much less – there was real excitement in their results. I think this is why they were fairly anxious to share this with the other guys at BHS. I feel certain they are hoping the high school bridge does much worse – interestingly enough, each group predicted the other group would do better. At any rate, this group has been very interesting because their level of interaction has fluctuated significantly and the early problems that involved personalities seem to have been forgiven if not forgotten. These groups actually have had more give and take than I realized and in the end they may actually be a group that really relied on each other for shared data necessary to write up their experiment.

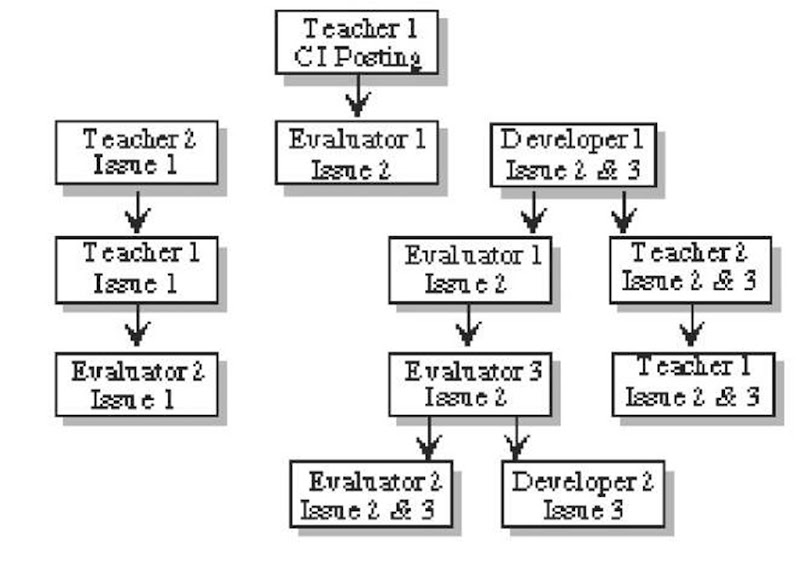

The structure of the comment threads is diagrammed in Figure 3. The original report elicited three direct responses, one from another teacher (the teacher whose students were collaborating with those of the teacher who posted the report), one from an evaluator, and one from a developer. The teacher’s response elicited a response from the teacher who had originally posted the critical incident report, which in turn elicited a response from an evaluator. The developer’s response to the original critical incident report elicited two direct responses, one from the evaluator who responded to the original report, and one from the teacher who responded to the original report.

The critical incident discussion involved three main issues: one was problems of managing remote collaborations among students (Issue 1), a second was competition among students (Issue 2), and a third was complications of gender (Issue 3). As indicated in Figure 3, Issues 2 and 3, competition and gender, tended to be discussed together.

Figure 3. Discussion structure of comments posted to a critical incident report

Competition (Issue 2) became a moderately controversial discussion thread. One of the evaluators suggested that a cross-school competition might increase student involvement in the bridge design project. Both teachers strongly objected to this idea. The high school teacher (Teacher 2) declared, “Regardless of how nicely anybody asks, I’ll never have competition as a part of my classroom…on any level; no matter how subtle.” Through the discussion, however, the idea of comparing results became generally accepted. This, in turn, led to a general conclusion about the design of activities for the virtual school: Networked collaborations should integrate physical subactivities at each of the collaborating sites, and each group should have a result or conclusion that is its own, and which the group can share and compare with its remote partners.

EXPERIENCE AND DESIGN RATIONALE

Like any forum, the CCIT requires deliberate administration. We made a concerted effort in the spring semester of 1999 to identify and post critical incidents for which we expected some difference of opinion.

For our type of technology intervention to succeed in the school context, it is extremely important to identify and address collisions with teacher goals and practices. The teachers we worked with are sometimes caught between their LiNC-oriented goals (innovative technology practices and research) and their immediate responsibilities to their students and the school administration. The teachers wanted to explore new teaching practices and classroom activities enabled by the virtual school, but they are, at the same time, expert practitioners operating within the context of current schools. They have job responsibilities and well-established and proven-effective skills to meet those responsibilities. When there is a conflict between project goals and standard practice, they must often choose standard practice. We found that they were sometimes reluctant to discuss these conflicts with us.

Posting classroom critical incidents, however, attracted lively discussion from the teachers as well as other project members. In some specific ways, it seemed superior to trying to confront these important but awkward issues face-to-face; asynchronous forums allow contributors to refine their comments until they have expressed their views precisely as intended, and thereby reduce the chance of misspeaking and unintentionally polarizing the discussion or embarrassing a colleague. In general, we have found that the best critical incidents are those that address moderately controversial issues; the most and the least controversial critical incident reports are those most likely to be neglected by colleagues.

We have not observed the sharp role differentiation in the use of the CCIT that we expected. Our original vision was that teachers would be the main providers of critical incident reports, and that developers and evaluators would be involved more as discussants. It has turned out that these roles are shared. Evaluators often post reports of things they have observed but not fully understood in order to elicit clarifications and explanations from the teachers. This is an extremely useful channel for the evaluators to have. It is like bringing all the classroom personnel together each week for a data interpretation workshop—something we could not do in this project.

CONCLUSION AND FUTURE WORK

Critical incidents are, by definition, salient usage events. Thus, it is more or less a given that people will notice critical incidents, and quite likely that they will discuss critical incidents. Members of our LiNC project (teachers, evaluators, and developers) always talked about critical incidents. For example, one or two particularly significant episodes would often be raised for discussion at project meetings. However, all-hands, face-to-face project meetings were rare (about one per month over the past two years). And, in any case, these face-to-face discussions tend to be brief, incompletely documented, and often dominated by the person who first reported the incident.

The role of critical incident reports as a kind of evaluation data in this project was enhanced tremendously by the CCIT. Reporting no longer was delayed until people could meet face to face. Discussion was no longer restricted to what could be articulated during a meeting. Participation was no longer limited to who knew the most, who thought or talked the fastest, or who happened to attend a given face-to-face meeting.

Representing relationships among critical incidents is important. Empirical usability studies have shown that the traditional concept of discrete critical incidents with singular, proximal causes can be elaborated. The causes of observable critical incidents are not always discrete, nor are they always proximal to the observed effect. Sets of causally related episodes of user interaction, each of which is itself less than a critical incident, can comprise a “distributed” critical incident (Carroll, Koenemann-Belliveau, Rosson, & Singley, 1993). Such patterns indeed can have powerful and wide-ranging effects on the usability and usefulness of systems. One approach to representing such relationships would be to designate a special type of comment in the CCIT to indicate possible relationships to other critical incident reports and to provide links to those reports and their discussion threads.
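As a rough illustration of how such relationship comments could be used, the Python sketch below groups reports that are connected by links, so that each group is a candidate “distributed” critical incident. This is a proposal-level sketch, not an existing CCIT feature: the function name, the use of integer report identifiers, and the decision to treat links as undirected are all assumptions.

```python
from collections import defaultdict


def related_groups(report_ids, links):
    """Group reports connected by relationship links.

    links is an iterable of (from_id, to_id) pairs, one per relationship
    comment; links are treated as undirected (an assumption).
    """
    neighbours = defaultdict(set)
    for a, b in links:
        neighbours[a].add(b)
        neighbours[b].add(a)

    seen, groups = set(), []
    for rid in report_ids:
        if rid in seen:
            continue
        # Depth-first walk over everything reachable from this report.
        stack, group = [rid], []
        seen.add(rid)
        while stack:
            current = stack.pop()
            group.append(current)
            for nxt in sorted(neighbours[current]):
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append(nxt)
        groups.append(sorted(group))
    return groups


# Hypothetical report identifiers and links.
print(related_groups([1, 2, 3, 4], [(1, 3), (3, 4)]))
```

Reports that end up in the same group could then be listed together in the incident map, with each relationship comment serving as the link between threads.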

We are encouraged by the utility of the CCIT in the LiNC Project and will continue to develop and investigate this class of evaluation tools. Other educational researchers and practitioners can easily adapt our method with any threaded discussion tool, augmented with social conventions about anchoring each thread with a critical incident report. We feel it definitely provided an effective channel for evoking, sharing, and discussing evaluation data in our project. Moreover, and of critical importance to our project, it achieved this while also providing a more active and equal role for the users in the interpretation of early evaluation data within the system development process.

KEY TERMS

Asynchronous Collaboration: Collaborative interactions, for example, over the Internet, that are not synchronized in real time, such as e-mail exchanges, browser-based shared editing (wikis), and postings to newsgroups.

Critical Incident: An observed or experienced episode in which things go surprisingly well or badly.

Multifaceted Evaluation Record: Evaluation data record collating and synchronizing multiple types of data, for example, video recordings, user session logs, and field observations.

Participatory Evaluation: Evaluation methods in which the subjects of the evaluation actively participate in planning and carrying out observations.

Synchronous Collaboration: Collaborative interactions, for example, over the Internet, carried out in real time, such as chat, video/audio conferencing, and shared applications.

Think-Aloud Protocol: A research protocol in which participants verbalize their task-relevant thoughts as they perform.

Threaded Discussion: An asynchronous collaboration medium in which participants contribute to topics and to contributions under topics, creating a nested discourse structure.