The transport layer links the application software in the application layer with the network and is responsible for segmenting large messages into smaller ones for transmission and for managing the session (the end-to-end delivery of the message). One of the first issues facing the application layer is to find the numeric network address of the destination computer. Different protocols use different methods to find this address. Depending on the protocol (and which expert you ask), finding the destination address can be classified as a transport layer function, a network layer function, a data link layer function, or an application layer function with help from the operating system. In all honesty, understanding how the process works is more important than memorizing how it is classified. The next section discusses addressing at the network layer and transport layer. In this section, we focus on the three unique functions performed by the transport layer: linking the application layer to the network, segmenting, and session management.

Linking to the Application Layer

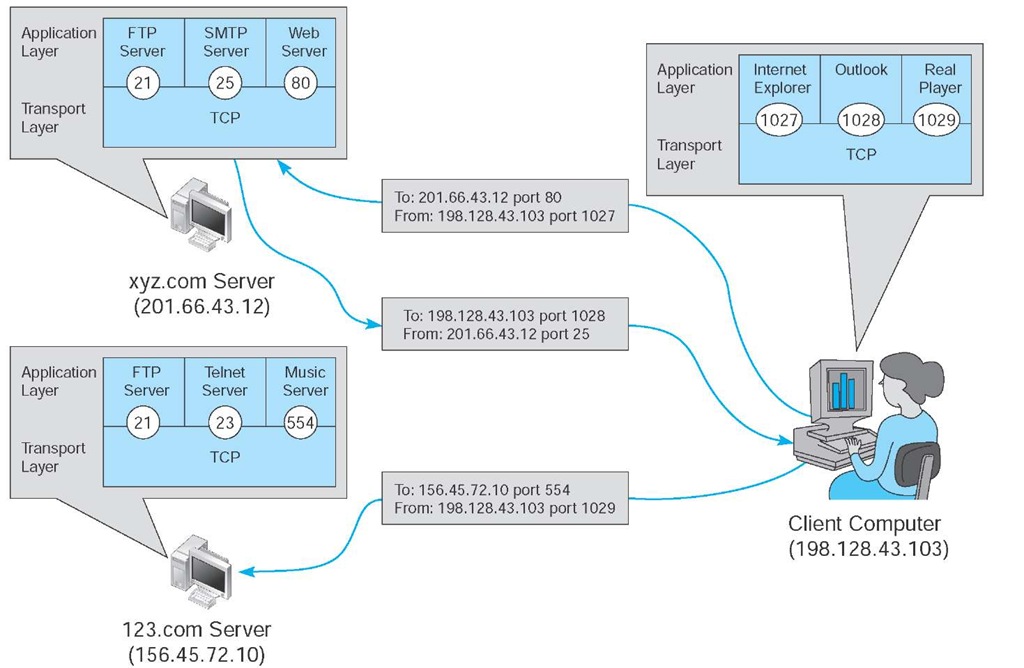

Most computers have many application layer software packages running at the same time. Users often have Web browsers, e-mail programs, and word processors in use at the same time on their client computers. Likewise, many servers act as Web servers, mail servers, FTP servers, and so on. When the transport layer receives an incoming message, the transport layer must decide to which application program it should be delivered. It makes no sense to send a Web page request to e-mail server software.

With TCP/IP, each application layer software package has a unique port address. Any message sent to a computer must tell TCP (the transport layer software) the application layer port address that is to receive the message. Therefore, when an application layer program generates an outgoing message, it tells the TCP software its own port address (i.e., the source port address) and the port address at the destination computer (i.e., the destination port address). These two port addresses are placed in the first two fields in the TCP segment (see Figure 5.2).
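To make the idea of the two port fields concrete, the following minimal sketch packs a source and a destination port into the first four bytes of a segment and reads them back. The function names and the port values are illustrative assumptions, and the rest of the TCP header is deliberately left out.

```python
import struct

def pack_ports(source_port, destination_port):
    """Encode two 16-bit port numbers in network (big-endian) byte order."""
    return struct.pack("!HH", source_port, destination_port)

def unpack_ports(segment):
    """Read the source and destination ports from the first four bytes."""
    return struct.unpack("!HH", segment[:4])

# Illustrative values: a client port and the standard Web server port.
header_start = pack_ports(1027, 80)
print(unpack_ports(header_start))
```

Because each field is exactly 2 bytes, the pair always occupies 4 bytes regardless of the port values chosen.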

Port addresses can be any 16-bit (2-byte) number, that is, any value from 0 to 65,535. So how does a client computer sending a Web request to a Web server know what port address to use for the Web server? Simple. On the Internet, all port addresses for popular services such as the Web, e-mail, and FTP have been standardized. Anyone using a Web server should set up the Web server with a port address of 80. Web browsers, therefore, automatically generate a port address of 80 for any Web page you click on. FTP servers use port 21, Telnet 23, SMTP 25, and so on. Network managers are free to use whatever port addresses they want, but if they use a nonstandard port number, then the application layer software on the client must specify the correct port number.

Figure 5.5 shows a user running three applications on the client (Internet Explorer, Outlook, and RealPlayer), each of which has been assigned a different port number (1027, 1028, and 7070, respectively). Each of these can simultaneously send and receive data to and from different servers and different applications on the same server. In this case, we see a message sent by Internet Explorer on the client (port 1027) to the Web server software on the xyz.com server (port 80). We also see a message sent by the mail server software on port 25 to the e-mail client on port 1028. At the same time, the RealPlayer software on the client is sending a request to the music server software (port 554) at 123.com.
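The delivery decision described above can be sketched as a simple table lookup. This is only an illustration: the table reuses the client port numbers from the example, while the names `CLIENT_PORTS` and `deliver` are assumptions made for the sketch and not part of any real TCP implementation.

```python
# Port table for the client in the example: port number -> application.
CLIENT_PORTS = {
    1027: "Internet Explorer",
    1028: "Outlook",
    7070: "RealPlayer",
}

def deliver(destination_port, port_table):
    """Return the application bound to destination_port, or None if no
    application is using that port."""
    return port_table.get(destination_port)

# The mail server's message is addressed to port 1028 on the client.
print(deliver(1028, CLIENT_PORTS))
```

A message that arrives for a port with no application attached has nowhere to go, which the sketch signals by returning `None`.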

Segmenting

Some messages or blocks of application data are small enough that they can be transmitted in one frame at the data link layer. However, in other cases, the application data in one "message" is too large and must be broken into several frames (e.g., Web pages, graphic images). As far as the application layer is concerned, the message should be transmitted and received as one large block of data. However, the data link layer can transmit only messages of certain lengths. It is therefore up to the sender’s transport layer to break the data into several smaller segments that can be sent by the data link layer across the circuit. At the other end, the receiver’s transport layer must receive all these separate segments and recombine them into one large message.

Segmenting means to take one outgoing message from the application layer and break it into a set of smaller segments for transmission through the network. It also means to take the incoming set of smaller segments from the network layer and reassemble them into one message for the application layer. Depending on what the application layer software chooses, the incoming packets can either be delivered one at a time or held until all packets have arrived and the message is complete.
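Both halves of segmenting can be sketched in a few lines. The sketch assumes each segment is labeled with the byte offset at which it starts, so the receiver can restore the order even if segments arrive shuffled. The function names and the 1,300-byte message are illustrative assumptions.

```python
import random

def segment(message, max_size):
    """Split message into (sequence_number, chunk) pairs of at most
    max_size bytes. The sequence number is the chunk's byte offset."""
    if max_size <= 0:
        raise ValueError("max_size must be positive")
    return [(offset, message[offset:offset + max_size])
            for offset in range(0, len(message), max_size)]

def reassemble(segments):
    """Rebuild the message by ordering the chunks on their sequence number."""
    return b"".join(chunk for _, chunk in sorted(segments))

message = b"x" * 1300
parts = segment(message, 536)      # chunks of 536, 536, and 228 bytes
random.shuffle(parts)              # simulate out-of-order arrival
print(reassemble(parts) == message)
```

Holding all segments until `reassemble` is called corresponds to the "deliver when complete" choice. An application that prefers delivery as segments arrive would consume the chunks one at a time instead.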

FIGURE 5.5 Linking to application layer services

Web browsers, for example, usually request delivery of packets as they arrive, which is why your screen gradually builds a piece at a time. Most e-mail software, on the other hand, usually requests that messages be delivered only after all packets have arrived and TCP has organized them into one intact message, which is why you usually don’t see e-mail messages building screen by screen.

TCP is also responsible for ensuring that the receiver has actually received all segments that have been sent. TCP therefore uses continuous ARQ.

One of the challenges at the transport layer is deciding how big to make the segments. Packet sizes are discussed in the next topic. When transport layer software is set up, it is told what size segments it should use to make best use of its own data link layer protocols (or it chooses the default size of 536 bytes). However, it has no idea what size is best for the destination. Therefore, the transport layer at the sender negotiates with the transport layer at the receiver to settle on the best segment sizes to use. This negotiation is done by establishing a TCP connection between the sender and receiver.
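One simple way to picture the outcome of this negotiation is that the two ends settle on the smaller of their preferred sizes, falling back to the 536-byte default when a side states no preference. The sketch below is a simplification under that assumption, and the function name is hypothetical. It is not a description of the exact exchange a real TCP implementation performs.

```python
DEFAULT_SEGMENT_SIZE = 536  # default segment size from the text, in bytes

def agreed_segment_size(local_size, remote_size=None):
    """Return the segment size both ends can handle: the smaller of the
    two preferences, using the default for any side that gave none."""
    local = DEFAULT_SEGMENT_SIZE if local_size is None else local_size
    remote = DEFAULT_SEGMENT_SIZE if remote_size is None else remote_size
    return min(local, remote)

print(agreed_segment_size(1460, 1200))
print(agreed_segment_size(1460))
```

Choosing the smaller value means neither end is asked to handle a segment larger than it said it could accept.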

Session Management

A session can be thought of as a conversation between two computers. When the sending computer wants to send a message to the receiver, it usually starts by establishing a session with that computer. The sender transmits the segments in sequence until the conversation is done, and then the sender ends the session. This approach to session management is called connection-oriented messaging.

Sometimes, the sender only wants to send one short information message or a request. In this case, the sender may choose not to start a session, but just send the one quick message and move on. This approach is called connectionless messaging.

Connection-Oriented Messaging Connection-oriented messaging sets up a TCP connection (also called a session) between the sender and receiver. The transport layer software sends a special segment (called a SYN) to the receiver, requesting that a session be established. The receiver either accepts or rejects the session, and together they settle on the segment sizes the session will use.

Once the connection is established, the segments flow between the sender and receiver. TCP uses the continuous ARQ (sliding window) technique described in the next topic to make sure that all segments arrive and to provide flow control.
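The sliding window idea can be illustrated with a small simulation. The sketch below uses go-back-N, one simple variant of continuous ARQ, chosen here only for illustration. It is not a claim about how any particular TCP implementation behaves. The function name and the `lost_once` parameter (segments that are dropped the first time they are sent) are assumptions of the sketch.

```python
def send_with_sliding_window(segments, window_size, lost_once=()):
    """Simulate a go-back-N sender.

    Returns (delivered, log): the segments the receiver accepted in order,
    and every sequence number that was put on the wire.
    """
    if window_size <= 0:
        raise ValueError("window_size must be positive")
    lost = set(lost_once)   # sequence numbers dropped on first transmission
    delivered = []          # accepted by the receiver, in order
    log = []                # all transmissions, including retransmissions
    base = 0                # oldest unacknowledged sequence number
    while base < len(segments):
        window_end = min(base + window_size, len(segments))
        for seq in range(base, window_end):
            log.append(seq)
            if seq in lost:
                lost.discard(seq)        # dropped now; the resend will succeed
                continue
            if seq == len(delivered):    # receiver takes only the next expected
                delivered.append(segments[seq])
        base = len(delivered)            # cumulative ACK slides the window
    return delivered, log

delivered, log = send_with_sliding_window(list("abcde"), 3, lost_once=(1,))
print(delivered)
print(log)
```

In the run above, segment 1 is lost once, so segment 2 arrives out of order and is discarded. The sender then resends from segment 1 onward, which is why sequence numbers 1 and 2 appear twice in the log.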

When the transmission is complete, the sender sends a special segment (called a FIN) to close the session. Once the sender and receiver agree, the session is closed and all record of it is deleted.
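The life cycle of a session (open with a SYN, exchange data, close with a FIN) can be pictured as a small state machine. The sketch below is a toy model with only two states. The class name, state names, and `handle` method are illustrative assumptions, and the real TCP state machine has many more states.

```python
class Session:
    """Toy model of one end of a connection-oriented session."""

    def __init__(self):
        self.state = "CLOSED"
        self.received = []

    def handle(self, segment_type, payload=None):
        """Process one incoming segment and return the resulting state."""
        if self.state == "CLOSED" and segment_type == "SYN":
            self.state = "ESTABLISHED"          # session accepted
        elif self.state == "ESTABLISHED" and segment_type == "DATA":
            self.received.append(payload)       # data flows only while open
        elif self.state == "ESTABLISHED" and segment_type == "FIN":
            self.state = "CLOSED"               # session ended
        else:
            raise ValueError(
                f"{segment_type} is not valid while {self.state}")
        return self.state

session = Session()
session.handle("SYN")
session.handle("DATA", "segment 1")
session.handle("DATA", "segment 2")
print(session.handle("FIN"), session.received)
```

A data segment that arrives before any SYN is rejected in this model, which reflects the rule that a session must be established before segments flow.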

Connectionless Messaging Connectionless messaging means each packet is treated separately and makes its own way through the network. Unlike connection-oriented messaging, no connection is established. The sender simply sends the packets as separate, unrelated entities, and it is possible that different packets will take different routes through the network, depending on the type of routing used and the amount of traffic. Because packets following different routes may travel at different speeds, they may arrive out of sequence at their destination. The sender’s network layer, therefore, puts a sequence number on each packet, in addition to information about the message stream to which the packet belongs. The network layer must reassemble them in the correct order before passing the message to the application layer.

TCP/IP can operate either as connection-oriented or connectionless. When connection-oriented is desired, TCP is used. When connectionless is desired, the TCP segment is replaced with a User Datagram Protocol (UDP) packet. The UDP header is much smaller than the TCP header (only eight bytes, compared with at least 20 bytes for TCP).
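The eight bytes of UDP overhead consist of four 16-bit fields: source port, destination port, length, and checksum. The sketch below builds such a datagram. The function name and the port numbers are arbitrary illustrations, and the checksum is left at zero (meaning "not computed" for UDP over IPv4) to keep the example short.

```python
import struct

# Source port, destination port, length, checksum: four 16-bit fields.
UDP_HEADER = struct.Struct("!HHHH")

def build_udp_datagram(source_port, destination_port, payload):
    """Prefix payload with an 8-byte UDP header (checksum left at 0)."""
    length = UDP_HEADER.size + len(payload)   # header plus data, in bytes
    checksum = 0                              # 0 = no checksum computed
    header = UDP_HEADER.pack(source_port, destination_port, length, checksum)
    return header + payload

datagram = build_udp_datagram(5000, 5001, b"status?")
print(UDP_HEADER.size, len(datagram))
```

There are no sequence numbers, acknowledgments, or session fields in this header, which is what makes UDP suitable for a single short message.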

Connectionless messaging is most commonly used when the application data or message can fit into one single packet. One might expect, for example, that because HTTP requests are often very short, they might use UDP connectionless rather than TCP connection-oriented messaging. However, HTTP always uses TCP. All of the application layer software we have discussed so far uses TCP (HTTP, SMTP, FTP, Telnet). UDP is most commonly used for control messages such as addressing (DHCP [Dynamic Host Configuration Protocol], discussed later in this topic), routing control messages (RIP [Routing Information Protocol], discussed later in this topic), and network management.

Quality of Service Quality of Service (QoS) routing is a special type of connection-oriented messaging in which different connections are assigned different priorities. For example, videoconferencing requires fast delivery of packets to ensure that the images and voices appear smooth and continuous; they are very time dependent because delays in routing seriously affect the quality of the service provided. E-mail packets, on the other hand, have no such requirements. Although everyone would like to receive e-mail as fast as possible, a 10-second delay in transmitting an e-mail message does not have the same consequences as a 10-second delay in a videoconferencing packet.

With QoS routing, different classes of service are defined, each with different priorities. For example, a packet of videoconferencing images would likely get higher priority than would an SMTP packet with an e-mail message and thus be routed first. When the transport layer software attempts to establish a connection (i.e., a session), it specifies the class of service that connection requires. Each path through the network is designed to support a different number and mix of service classes. When a connection is requested, the network ensures that accepting it would not exceed the maximum number of connections of that class on a given circuit.
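The two mechanisms in this paragraph, routing higher-priority packets first and limiting the number of connections per class on a circuit, can be sketched as follows. The class names, priority values, and limits are hypothetical, and the sketch models a single queue and a single circuit rather than a whole network.

```python
import heapq
from itertools import count

# Hypothetical service classes; a lower number is routed first.
PRIORITY = {"video": 0, "email": 1}

class QosQueue:
    """Packets leave in priority order, first-in first-out within a class."""

    def __init__(self):
        self._heap = []
        self._arrival = count()   # tie-breaker that preserves arrival order

    def enqueue(self, service_class, packet):
        entry = (PRIORITY[service_class], next(self._arrival), packet)
        heapq.heappush(self._heap, entry)

    def dequeue(self):
        return heapq.heappop(self._heap)[2]

class Circuit:
    """Admits a connection only while its class is below its limit."""

    def __init__(self, limits):
        self.limits = dict(limits)
        self.active = {service_class: 0 for service_class in limits}

    def admit(self, service_class):
        if self.active[service_class] >= self.limits[service_class]:
            return False          # class is full on this circuit
        self.active[service_class] += 1
        return True

queue = QosQueue()
queue.enqueue("email", "mail-1")
queue.enqueue("video", "frame-1")
queue.enqueue("video", "frame-2")
print([queue.dequeue() for _ in range(3)])

circuit = Circuit({"video": 1, "email": 2})
print(circuit.admit("video"), circuit.admit("video"))
```

The e-mail packet arrived first but leaves last, and the second video connection is refused because the circuit's single video slot is already taken.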

QoS routing is common in certain types of networks. The Internet provides several QoS protocols that can work in a TCP/IP environment. Resource Reservation Protocol (RSVP) and Real-Time Streaming Protocol (RTSP) both permit application layer software to request connections that have certain minimum data transfer capabilities. As one might expect, RTSP is geared toward audio/video streaming applications while RSVP is more general purpose.

RSVP and RTSP are used to create a connection (or session) and request a certain minimum guaranteed data rate. Once the connection has been established, they use Real-Time Transport Protocol (RTP) to send packets across the connection. RTP contains information about the sending application, a packet sequence number, and a time stamp so that the data in the RTP packet can be synchronized with other RTP packets by the application layer software if needed.

With a name like Real-Time Transport Protocol, one would expect RTP to replace TCP and UDP at the transport layer. It does not. Instead, RTP is combined with UDP. (If you read the previous paragraph carefully, you noticed that RTP does not provide source and destination port addresses.) This means that each real-time packet is first created using RTP and then surrounded by a UDP datagram, before being handed to the IP software at the network layer.
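The nesting of RTP inside UDP can be shown by building both headers by hand. The sketch assumes the fixed 12-byte RTP header (version 2, with no padding, extension, or contributing-source list) and an 8-byte UDP header with the checksum left at zero. The function names, port numbers, payload type, and source identifier are illustrative values only.

```python
import struct

def build_rtp_packet(payload_type, sequence_number, timestamp, source_id,
                     payload):
    """Fixed 12-byte RTP header followed by the media payload."""
    first_byte = 2 << 6                  # version 2 in the top two bits
    second_byte = payload_type & 0x7F    # marker bit left at 0
    header = struct.pack("!BBHII", first_byte, second_byte,
                         sequence_number, timestamp, source_id)
    return header + payload

def wrap_in_udp(source_port, destination_port, rtp_packet):
    """Surround the RTP packet with a UDP header, which supplies the ports."""
    length = 8 + len(rtp_packet)
    header = struct.pack("!HHHH", source_port, destination_port, length, 0)
    return header + rtp_packet

rtp = build_rtp_packet(payload_type=96, sequence_number=1, timestamp=160,
                       source_id=0x1234, payload=b"audio")
datagram = wrap_in_udp(5004, 5004, rtp)
print(len(rtp), len(datagram))
```

The RTP header carries the sequence number and time stamp but no port fields. Those appear only in the surrounding UDP header, which is why RTP cannot stand alone at the transport layer.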