Ground support of spacecraft is often referred to as “TT&C,” for tracking, telemetry, and command. Tracking generally refers to measuring the position of a spacecraft, telemetry to information carried on the radio-frequency (RF) downlink signal, and command to information transmitted from the ground to a spacecraft. Increasingly, these terms are too narrow to describe the activity fully; transmission of voice and video on the uplink, for example, fits none of them. Though these functions were initially stand-alone elements of space operations, technological advances and economic pressures have blurred the distinctions, both among them and with related operational activities. For example, computing power and reliability have advanced sufficiently that many functions can be economically accomplished at the point of ground receipt or of application, rather than at a centralized facility. For Apollo, the separate functions of command, telemetry, tracking, and voice communications were integrated into a single RF system, the Unified S-Band (USB) system. Readers should be aware that the boundaries of data receiving and handling functions are dynamic.

The genesis of data receiving and handling facilities was primarily in the military launch ranges. Differences in requirements, and the limitations of those range facilities, necessitated changes as space activities developed. Geographically, coverage expanded globally. Operationally, missions became longer and more continuous, and ground activities became increasingly interactive.

Early space flights were brief, and operations were simple, few, and far between. With no existing infrastructure, supporting systems, procedures, and operators had to be put in place for each mission, usually unique to each flight. Preplanning an entire mission has gradually been replaced by continual interaction by both flight controllers and mission scientists, resulting in far more productive missions. Led by interplanetary designs, spacecraft have become much more autonomous and robust, and they require less, and less critical, ground control. Some ground operations systems evolved from “specialized mission unique” to “general purpose multimission.” Even when multimission applications shared hardware, they often retained unique software. Generally, RF systems have become more standardized (strongly prompted by international spectrum allocations), as have terrestrial communications, which are increasingly provided commercially. However, many data systems and operating procedures remained quite unique. Sharing multipurpose assets is itself quite expensive, and technology has reduced costs sufficiently that in many cases it now makes economic sense to consider dedicated systems again, though constructed of standardized components.

Although much of this discussion focuses on NASA, it is in a larger sense the history of most space operations and thus has broader significance. In the Cold War environment the Soviet systems evolved independently, but most other agencies and countries followed NASA’s lead, primarily to take advantage of existing designs, systems, and infrastructure. NASA originally had three separate networks of ground stations for controlling and tracking spacecraft. The Space Tracking and Data Acquisition Network (STADAN) initially spanned the globe north and south across the Americas. It was used primarily for near-Earth scientific satellites. Its telemetry and command systems were independent of each other, and tracking relied primarily on radio interferometry. The Deep Space Network (DSN) was used for interplanetary spacecraft; its notable characteristics were large antennae and very sensitive receivers. Its stations were spaced around the world so that all directions on the celestial sphere were continuously in view of one of the stations. The Manned Space Flight Network (MSFN) was established just for “manned” missions. Its stations were located primarily along the ground track of early orbits launched from Florida.

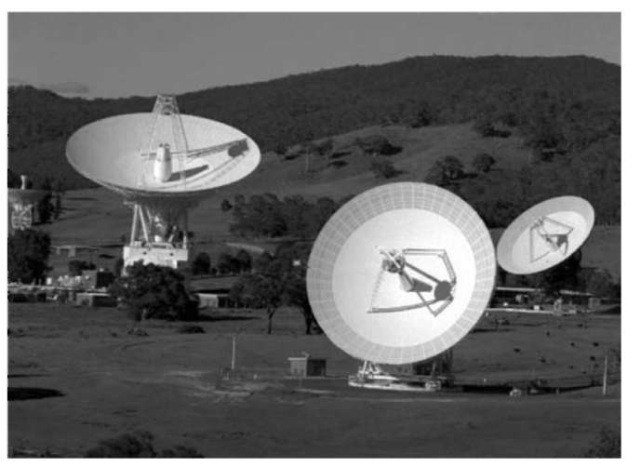

By the late 1960s, NASA had about 30 ground stations around the world. In Spain, Australia, and California, one station of each of the three networks was located within a few kilometers of the other two—each independently operated and often having incompatible systems. Responding to budget reductions after the Apollo program, the agency consolidated the STADAN and MSFN facilities into the Space Tracking and Data Network (STDN). This consolidation was primarily in name, and years passed before it evolved into an integrated network. Systems and RF spectrum differences accounted for much of this delay. The ground stations slowly became more standardized, but the control centers frequently preserved their individuality. With the advent of relay satellites (see TDRSS Relay Satellites section following), NASA closed most ground stations and colocated remaining STDN facilities with the three DSN stations. The Tidbinbilla station (Fig. 1) near Canberra, Australia, is typical; the other two stations are located in California’s Mojave Desert and near Madrid, Spain.

These tracking stations were originally the focal points for executing space operations. Prepass planning was accomplished by the flight projects and the plan communicated—often via teletype messages on HF radio—to the stations. For the Mercury and Gemini missions, flight controllers were dispatched to the MSFN stations and interacted with the astronauts during the brief contact periods. As ground communications became sufficiently reliable, control was centralized in Houston for Apollo; the ground stations became remote communications and tracking terminals. The STADAN and DSN underwent similar evolution; control became real time and centralized at mission control centers as reliable communications became available.

Figure 1. NASA’s Tidbinbilla, Australia, Tracking Station is one of three that provide communication with most NASA spacecraft beyond geosynchronous orbit. Antennae are (from left) a 26-meter STDN dish built for Apollo at Honeysuckle Creek and relocated to Tidbinbilla; the 70-meter diameter DSN antenna (expanded from 64 meters); the newest, a 34-meter beam waveguide DSN dish; and the original DSN antenna, a 26-meter dish expanded to 34 meters.

The subsequent evolution of data receiving and handling methods was synergistically enabled by advances in technology, although it was arguably more influenced by competitive factors than by either technical performance or economics. As often happens early in the evolution of a technology, there was little reason to standardize, and various designers developed somewhat different approaches to the same basic tasks. In space operations, the spacecraft manufacturer usually determined the design of the telemetric system. Industrial participants, of course, sought competitive advantages by perpetuating proprietary concepts. Internecine battles between and even within space agencies also inhibited standardization. Ground facilities were usually viewed as infrastructure and were funded separately from the spacecraft programs, so space-ground cost trades were seldom a major consideration. As a result, ground facilities must often accommodate a number of different approaches to command and telemetry. One NASA Center, for example, now uses four different ways to transmit telemetric data to the ground: time division multiplex (TDM) and three different packet data formats, each requiring its own ground data-handling system.

The economic benefits of standardization are persistently apparent, and progress is slowly being made. The Consultative Committee for Space Data Systems (CCSDS) was established by the major space agencies in 1982 to promote international standardization of space data systems. In 1990, CCSDS entered into a cooperative arrangement with the International Organization for Standardization (ISO), and many CCSDS recommendations have now been adopted as ISO standards. The reader is referred to the CCSDS for the latest information (http://ccsds.org).

Tracking

Initially, “tracking” was accomplished primarily by measuring the angles to the spacecraft as a function of time from ground observing stations. Both optical and RF observations were used. Optical observations were made with tracking telescopes and, of course, depended on favorable lighting conditions. RF tracking was passive: a spacecraft beacon signal was received at fixed antennae on the ground, and angles were determined by interferometry. NASA’s STADAN interferometer system was called Minitrack. Radars were also used, transmitting signals from ground-based antennae and observing the reflections from the satellite. Radars provided another important parameter: range. Doppler measurements could also provide range-rate data. Primarily because of weight and power limitations, few satellites carried transponders, and radars had to rely on skin tracking. The tracking parameters measured by most of these methods were then transferred for processing and reduction to central facilities, staffed by highly skilled specialists and usually equipped with the latest mainframe computers. Except for a very few critical activities such as Apollo maneuvers, orbit determination was far from a real-time process. The tracking tasks included determining where the spacecraft had been when observations were made and also predicting its future orbital path. This propagation requires quite sophisticated modeling of such effects as Earth’s shape and mass distribution, solar radiation pressure, and outgassing and venting from the spacecraft. The Apollo USB system measured range and range rate using a pseudorandom code carried on the uplink to the spacecraft, which turned the signal around and retransmitted it to the ground on the downlink. Some programs chose simple tones rather than a pseudorandom code. Angles were also measured but were increasingly of secondary importance relative to the range and range-rate data.
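The three basic RF tracking observables reduce to simple geometry. The sketch below is a minimal illustration, not any network's actual processing: it assumes a coherent transponder with a turnaround ratio of 1 (real systems such as the Apollo USB used a non-unity ratio), speeds much less than the speed of light, and an interferometer phase difference that has already been resolved of its 2π ambiguity.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def range_from_round_trip(delay_s, transponder_delay_s=0.0):
    """One-way range (m) from the round-trip delay of a ranging code or tone.

    The signal travels up and back, so the one-way range is half the path
    length after removing the fixed delay inside the spacecraft transponder.
    """
    return C * (delay_s - transponder_delay_s) / 2.0


def range_rate_from_two_way_doppler(f_uplink_hz, f_received_hz):
    """Range rate (m/s, positive when receding) from a two-way Doppler shift.

    Assumes a turnaround ratio of 1 and speeds much less than c, so the
    received shift is approximately -2 * v * f / c.
    """
    doppler_hz = f_received_hz - f_uplink_hz
    return -doppler_hz * C / (2.0 * f_uplink_hz)


def interferometer_angle(phase_diff_rad, baseline_m, wavelength_m):
    """Angle (rad) between the antenna baseline and the line of sight.

    A path difference of d*cos(theta) gives a phase difference of
    2*pi*d*cos(theta)/lambda; the phase is assumed to be already unwrapped.
    """
    cos_theta = phase_diff_rad * wavelength_m / (2.0 * math.pi * baseline_m)
    return math.acos(cos_theta)


# A 20 ms round trip corresponds to roughly 3,000 km of one-way range.
print(range_from_round_trip(0.020))
```

The factor of 2 in both the range and range-rate expressions reflects the two-way path: the signal covers the distance, and experiences the Doppler shift, once on the uplink and again on the downlink.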

Spacecraft designers are beginning to incorporate GPS receivers onboard, permitting the spacecraft to determine its own orbit and transmit the data to the ground via telemetry. One of the more precise “tracking” examples to date has been the TOPEX/Poseidon spacecraft, where GPS was used in differential mode to determine the spacecraft orbit to about 2 centimeters. This precision was necessary because the spacecraft orbit was the reference from which changes in sea level were determined. Some spacecraft carry corner-cube retroreflectors, permitting range to be measured quite accurately with ground-based lasers. Many applications of this technique were intended not to determine the spacecraft orbit but rather to measure tectonic drift on Earth, that is, movement of the ground station.

Tracking concepts also differed in their protocols for time-tagging tracking parameters. Even within the same agency, some programs chose to read all parameters at the same instant, whereas others chose to read each parameter sequentially, each with its own time tag. Such differences were necessarily reflected in different ground processing systems. Today much of the activity in orbit computation has been transformed from art to science and is mostly executed in software. At the same time, the computers that run it have become cheap and reliable. Commercial vendors now offer software packages that perform orbit determination on low-cost computer platforms. This, along with the use of GPS, has greatly simplified the tracking function.
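When parameters are read sequentially, ground processing must bring them to a common epoch before they can be combined. A minimal sketch, assuming linear interpolation is adequate over the sampling interval; the parameter names and values are hypothetical:

```python
from bisect import bisect_left


def interpolate_to_epoch(samples, epoch):
    """Linearly interpolate time-sorted (time_tag, value) pairs to a given epoch."""
    times = [t for t, _ in samples]
    i = bisect_left(times, epoch)
    if i < len(times) and times[i] == epoch:
        return samples[i][1]          # a sample was read exactly at the epoch
    if i == 0 or i == len(times):
        raise ValueError("epoch lies outside the span of the samples")
    (t0, v0), (t1, v1) = samples[i - 1], samples[i]
    return v0 + (v1 - v0) * (epoch - t0) / (t1 - t0)


def align_to_common_epoch(parameters, epoch):
    """Bring sequentially read, individually time-tagged parameters to one epoch."""
    return {name: interpolate_to_epoch(s, epoch) for name, s in parameters.items()}


# Hypothetical data: azimuth and elevation read alternately, half a second apart.
readings = {
    "azimuth_deg": [(0.0, 10.0), (1.0, 11.0), (2.0, 12.0)],
    "elevation_deg": [(0.5, 20.0), (1.5, 22.0), (2.5, 24.0)],
}
print(align_to_common_epoch(readings, 1.0))  # {'azimuth_deg': 11.0, 'elevation_deg': 21.0}
```

A program that reads all parameters at the same instant needs none of this. That difference is one reason the two tagging conventions required different ground systems.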

Telemetry

Telemetry—literally, measuring at a distance—is the transmission of data from a spacecraft to ground facilities. This often consists of measured parameters (either analog or digital) and could relate to either the status of the spacecraft or to observations made by the spacecraft scientific instruments (often referred to as “science data”). Occasionally this telemetry is simply relayed from another source via the spacecraft, for example, emergency locator beacons from downed aircraft and meteorological measurements collected from buoys. Voice communications from people onboard a spacecraft are often handled as “telemetry.”

Telemetric systems have been used in aeronautical and space flight testing for more than 50 years. Early telemetric systems were based on analog signal transmission using frequency-division multiplexing/frequency modulation (FM/FM). In this technique, each sensor output frequency-modulates its own subcarrier oscillator. The subcarrier outputs are then summed, and the composite signal is used as the input to a frequency modulator for transmission. Each subcarrier oscillator must operate at a different frequency, separated sufficiently from the others to keep the channel signals from overlapping in the frequency domain.

The FM/FM techniques were used in the Mercury manned spacecraft program as well as in early unmanned programs such as the Ranger and Lunar Orbiter spacecraft. The need for a large number of oscillators and the lack of flexibility led to a rapid shift to digital techniques. For example, the Gemini program adopted digital techniques after Mercury, and they continued through Apollo and the Shuttle (the major exception being real-time video, which has remained in its TV-compatible analog format). Deep space missions to the Moon and planets have also used digital techniques for sensor data since the mid-1960s. These systems are based on pulse code modulation (PCM) for data acquisition. The modulation can be either analog, using frequency modulation (FM) or phase modulation (PM), or digital, using phase shift keying (PSK) or frequency shift keying (FSK).

In the following sections, we examine the basics of the data acquisition system and data encoding, then the digital modulation techniques, and finally the error-correcting codes used in current space systems. The International Foundation for Telemetering is a nonprofit organization formed in 1964 to promote telemetry as a profession. Interested readers are referred to http://www.telemetry.org for further information.

Data Acquisition. Sensor data are collected within the spacecraft, and the sensor output is typically in an analog form such as a voltage or a current. The analog value is converted to a digital code word by an analog-to-digital converter in a process called pulse code modulation. The code word takes on a value from 0 through $2^N - 1$, where $N$ is the code word size in bits. Typical values for $N$ range from 8 to 16, depending upon the resolution required. The sample rate is determined by the bandwidth of the underlying analog signal. By the Nyquist sampling theorem, the sensor must be sampled at a rate of at least twice the signal bandwidth, although sample rates of five or more times the signal bandwidth are often used to allow higher quality signal reconstruction and additional signal processing. In PCM systems, one must also specify the data encoding method and the time multiplexing format. Data encoding determines the channel transmission characteristics, and the multiplexing format determines the signal sampling discipline.
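The quantization and sampling arithmetic can be sketched as follows. This assumes a unipolar sensor range and a uniform (linear) converter; real converters may be bipolar or use different rounding conventions.

```python
def pcm_encode(voltage, v_min, v_max, n_bits):
    """Quantize one analog sample to an N-bit code word in 0 .. 2**N - 1."""
    levels = 2 ** n_bits
    v = min(max(voltage, v_min), v_max)             # clip to the converter range
    code = int((v - v_min) / (v_max - v_min) * levels)
    return min(code, levels - 1)                    # full scale maps to the top code


def pcm_decode(code, v_min, v_max, n_bits):
    """Reconstruct the voltage at the center of the code word's quantization step."""
    step = (v_max - v_min) / 2 ** n_bits
    return v_min + (code + 0.5) * step


def sample_rate_hz(bandwidth_hz, oversampling=5.0):
    """Sample rate as a multiple of the signal bandwidth (Nyquist minimum is 2)."""
    if oversampling < 2.0:
        raise ValueError("sampling below the Nyquist rate would alias the signal")
    return oversampling * bandwidth_hz


# A 0-5 V sensor digitized to 8 bits, with 100 Hz of bandwidth sampled at 5x.
code = pcm_encode(2.5, 0.0, 5.0, 8)
print(code, pcm_decode(code, 0.0, 5.0, 8), sample_rate_hz(100.0))  # 128 2.509765625 500.0
```

Each added bit halves the quantization step: with 8 bits the 5 V range is resolved to about 20 mV, and with 16 bits to under 0.1 mV.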

Data Encoding. The data format for transmission represents a trade-off among occupied bandwidth, data clock recovery, and immunity to phase reversals. As part of the overall telemetric system specification, the data encoding methodology needs to be specified. Typical data formats used in space telemetry systems include

• NRZ-L: Different voltage levels are assigned for logic 0 and logic 1 values, and the voltage level does not change during the bit period.

• NRZ-M: The voltage level remains constant during the bit period, and a level change from the previous level occurs if the current data value is a logic 1; no change is made if the current data value is a logic 0.

• NRZ-S: The voltage level remains constant during the bit period, and a level change from the previous level occurs if the current data value is a logic 0; no change is made if the current data value is a logic 1.

• Bi-φ-L: The NRZ-L signal is modulo-2 added to the data clock, so that the waveform shows the logic level during the first half of the bit period and its complement during the second half.

• Bi-φ-M: A level change is made at the start of every bit period, and a second change is made at the middle of the bit period if the logic level is a 1.

• Bi-φ-S: A level change is made at the start of every bit period, and a second change is made at the middle of the bit period if the logic level is a 0.
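The six formats listed above can be sketched as simple bit-to-level mappings. This is a minimal illustration, assuming levels are represented as 0 (low) and 1 (high), that the differential encoders start from a low level, and that NRZ produces one level per bit while the biphase codes produce two half-bit levels per bit:

```python
def nrz_l(bits):
    """NRZ-L: the level simply follows the data for the whole bit period."""
    return list(bits)


def _nrz_differential(bits, toggle_on, start):
    level, out = start, []
    for b in bits:
        if b == toggle_on:
            level ^= 1                 # change level relative to the previous bit
        out.append(level)
    return out


def nrz_m(bits, start=0):
    """NRZ-M: change level for a 1, hold it for a 0."""
    return _nrz_differential(bits, toggle_on=1, start=start)


def nrz_s(bits, start=0):
    """NRZ-S: change level for a 0, hold it for a 1."""
    return _nrz_differential(bits, toggle_on=0, start=start)


def biphase_l(bits):
    """Bi-phi-L: NRZ-L XOR a clock that is 0 in the first half, 1 in the second."""
    out = []
    for b in bits:
        out += [b, b ^ 1]              # logic level, then its complement
    return out


def _biphase_differential(bits, mid_on, start):
    level, out = start, []
    for b in bits:
        level ^= 1                     # transition at the start of every bit
        out.append(level)
        if b == mid_on:
            level ^= 1                 # extra transition at mid-bit
        out.append(level)
    return out


def biphase_m(bits, start=0):
    return _biphase_differential(bits, mid_on=1, start=start)


def biphase_s(bits, start=0):
    return _biphase_differential(bits, mid_on=0, start=start)


def decode_nrz_m(levels):
    """Recover NRZ-M data from level changes only (the first bit needs a reference)."""
    return [int(levels[i] != levels[i - 1]) for i in range(1, len(levels))]


data = [1, 0, 1, 1, 0, 0, 1]
wave = nrz_m(data)
inverted = [lv ^ 1 for lv in wave]     # a 180-degree phase reversal in the channel
print(decode_nrz_m(wave) == decode_nrz_m(inverted) == data[1:])   # True
```

Because the NRZ-M decoder looks only at level changes, the inverted waveform decodes to the same data. Flipping a single level in `wave`, however, corrupts the two transitions on either side of it and so produces two decoded bit errors.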

The M and S representations are called differential waveforms and are generally considered immune to phase reversals for correct decoding. However, NRZ-M and NRZ-S suffer from a higher error rate, because a single bit error during transmission produces two bit errors upon detection. Despite this, the phase-reversal immunity is considered the greater advantage, and differential NRZ encoding is frequently used. The L representations require correct phase determination to recover the data. The Bi-φ representations generally require twice the transmission bandwidth of the NRZ representations, but they support better clock recovery because they guarantee at least one transition in every bit period. Because NRZ waveforms lack this guarantee, typical space telemetry transponder specifications limit the maximum number of consecutive 0 or 1 values and require a minimum number of transitions per block when NRZ coding is used.

Data Packaging. For transmission from the spacecraft to the ground station, the data must be packaged to allow for synchronization, error detection, and accounting of data sets. Additionally, data may need to be tagged for routing to specific analysis centers. To accomplish this, data are typically packaged into one of two formats: telemetric frames or telemetric packets.

Telemetric frames are based on time-division multiplexing of the spacecraft sensor data for transmission across the space channel. Each sensor is transmitted at least once per frame cycle; more critical data is transmitted at a higher rate. The telemetric frame is based on a highly structured format that has embedded synchronization codes and accounting information to allow quick synchronization and verification of the transmission of all data. Normally, the frames are sent continuously to keep the channel filled to maintain synchronization and detect link dropouts. If link dropouts occur, the repeating, structured pattern of the frames allows rapid receiver resynchronization once the signal returns. The IRIG-106 standard developed for missile and aeronautical telemetry is often used in the space channel as well. The frames are processed in software upon reception using a database that shows the location of each sensor value in the overall frame structure and applies any necessary calibration to convert the raw PCM code value back to a meaningful datum.
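Frame synchronization and database-driven decommutation, as described above, can be sketched as follows. The sync pattern, frame length, byte offsets, and calibration coefficients are all hypothetical, and linear calibration is an assumption; real formats such as IRIG-106 PCM frames define their own layouts.

```python
SYNC = bytes([0xEB, 0x90])   # hypothetical 16-bit frame synchronization pattern
FRAME_LEN = 8                # hypothetical frame length in bytes (sync included)

# Hypothetical decommutation database:
# name -> (byte offset within the frame, gain, bias); value = gain * raw + bias.
DATABASE = {
    "bus_voltage_V": (3, 0.125, 0.0),
    "tank_temp_C": (4, 0.5, -40.0),
    "gyro_rate_dps": (5, 0.1, -12.8),
}


def find_frames(stream):
    """Yield complete frames, re-locking on the sync pattern after any dropout.

    A real frame synchronizer would confirm lock over several frames before
    trusting it; this sketch accepts the first match it finds.
    """
    i = 0
    while True:
        i = stream.find(SYNC, i)
        if i < 0 or i + FRAME_LEN > len(stream):
            return
        yield stream[i:i + FRAME_LEN]
        i += FRAME_LEN


def decommutate(frame):
    """Return the frame counter (byte 2) and calibrated engineering values."""
    counter = frame[2]
    values = {name: gain * frame[off] + bias
              for name, (off, gain, bias) in DATABASE.items()}
    return counter, values


frame = SYNC + bytes([7, 224, 150, 128, 0, 0])
stream = b"\x00\x13" + frame + b"\x55" + frame     # noise and a short dropout
for f in find_frames(stream):
    print(decommutate(f))
```

Keeping the layout in a table rather than in code mirrors the database approach described in the text. A new spacecraft format then requires a new table, not a new program.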

As link reliability increases, a different philosophy for data packaging can be considered. Computer networking technology developed for ground telecommunications networks has been used as the basis for developing packet telemetric systems. The advantage of the packet system over the frame-based system is that the transmission can be more easily tailored to the needs of the data sources, rather than sending data merely to maintain a fixed sampling discipline. Packets also allow distributed data processing.

The Consultative Committee for Space Data Systems (CCSDS) packet format is based on a structure consisting of a header that contains data source and destination information and other related system information. The body of the packet contains the data. Following the data, error detection, correction codes, and other information can be added. This packet system has been successfully used on many spacecraft, and commercial vendors supply standard ground station hardware for processing CCSDS packet telemetry.
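For concreteness, the sketch below packs and unpacks the 6-octet primary header of a CCSDS Space Packet. The fields are a 3-bit version, 1-bit type, 1-bit secondary-header flag, 11-bit Application Process Identifier (APID), 2-bit sequence flags, 14-bit sequence count, and a 16-bit length field holding the data-field length minus one. In this header the source is identified by the APID rather than by explicit source and destination addresses. The sketch omits secondary headers and error control, and the current CCSDS Blue Book should be consulted before relying on it.

```python
import struct


def build_space_packet(apid, seq_count, data, packet_type=0, sec_hdr=0, seq_flags=0b11):
    """Prefix data with a 6-octet CCSDS Space Packet primary header."""
    if not 1 <= len(data) <= 65536:
        raise ValueError("packet data field must hold 1 to 65536 octets")
    word1 = (0 << 13) | (packet_type << 12) | (sec_hdr << 11) | (apid & 0x7FF)  # version 0
    word2 = (seq_flags << 14) | (seq_count & 0x3FFF)   # 0b11 = unsegmented data
    word3 = len(data) - 1            # length field counts data octets minus one
    return struct.pack(">HHH", word1, word2, word3) + data


def parse_space_packet(packet):
    """Split a packet into its primary header fields and data field."""
    word1, word2, word3 = struct.unpack(">HHH", packet[:6])
    return {
        "version": word1 >> 13,
        "type": (word1 >> 12) & 1,            # 0 = telemetry, 1 = telecommand
        "sec_hdr_flag": (word1 >> 11) & 1,
        "apid": word1 & 0x7FF,                # identifies the source application
        "seq_flags": word2 >> 14,
        "seq_count": word2 & 0x3FFF,
        "data": packet[6:6 + word3 + 1],
    }


pkt = build_space_packet(apid=0x123, seq_count=42, data=b"\x01\x02\x03")
print(pkt.hex(), parse_space_packet(pkt)["apid"] == 0x123)
```

The per-packet sequence count lets the ground detect missing packets for each APID independently. This is part of what makes distributed processing of packets practical.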

Modulation. Once the telemetric data are packaged in a frame or packet format, they are transmitted across the radio link using some form of modulation. There are a large number of permutations for radio modulation depending upon mission needs, power requirements, and data rates. In this section, we examine the basic technologies used in data transmission.

PCM/FM and PCM/PM techniques are still often used in missiles and sounding rockets. The data are first sampled using PCM, encoded using one of the data formats described earlier, and placed in telemetric frames. Finally, the baseband data are routed to the input of either a frequency modulator (FM) or a phase modulator (PM) for transmission. The advantage of FM and PM is the constant-envelope output signal.

The PCM/PSK/PM technique is an extension of the PCM/PM technique. The PCM output is used as the input to a phase shift keying (PSK) modulator. The PSK process used is binary PSK (BPSK) modulation, which is a double-sideband suppressed-carrier (DSBSC) amplitude modulation (AM) process described by

$$s(t) = \sqrt{E}\, d(t) \sin(\omega t)$$

where $E$ is the normalized signal strength, $d(t)$ is the PCM output data (taking the values ±1) as a function of time, and $\omega$ is the PSK subcarrier frequency. This technique has been used, for example, in the Voyager telemetric system. The additional PM step provides two advantages. First, it allows other subcarriers to be mixed with the PSK data stream to form a composite signal. Second, it maintains a constant transmission envelope, which can be important when transmitting through nonlinear amplifiers.
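The constant-envelope advantage can be checked numerically. This minimal sketch assumes the BPSK subcarrier has the form √E d(t) sin(ωt) with d(t) = ±1 and a hypothetical modulation index of 1.2 rad. It represents the PM carrier by its complex envelope A·exp(jβm(t)), whose magnitude is the RF envelope.

```python
import cmath
import math


def bpsk_subcarrier(bits, bit_rate, f_sc, fs, energy=1.0):
    """Samples of sqrt(E) * d(t) * sin(w t), with d(t) = +1 for a 1 and -1 for a 0."""
    samples_per_bit = int(fs // bit_rate)
    out = []
    for n in range(len(bits) * samples_per_bit):
        t = n / fs
        d = 1.0 if bits[n // samples_per_bit] else -1.0
        out.append(math.sqrt(energy) * d * math.sin(2 * math.pi * f_sc * t))
    return out


def phase_modulate(baseband, mod_index, amplitude=1.0):
    """Complex envelope A * exp(j * beta * m(t)) of a carrier phase-modulated by m(t)."""
    return [amplitude * cmath.exp(1j * mod_index * m) for m in baseband]


sub = bpsk_subcarrier([1, 0, 1, 1], bit_rate=1_000, f_sc=4_000, fs=64_000)
pm = phase_modulate(sub, mod_index=1.2)
# The BPSK subcarrier's envelope passes through zero; the PM carrier's does not.
print(min(abs(x) for x in sub), min(abs(z) for z in pm), max(abs(z) for z in pm))
```

Whatever the composite baseband looks like, including additional subcarriers summed into `sub`, the PM envelope stays at `amplitude`. For that reason a nonlinear power amplifier does not distort it.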

The PM modulator does add some system complexity, so the PSK modulator output is frequently transmitted directly. Among PSK systems, many different combinations give different efficiencies in bandwidth and performance. These PSK techniques are supported in the standard TDRSS transponder hardware.

Binary phase shift keying (BPSK) was described earlier. BPSK transmits one PCM bit per channel symbol and is the simplest of the PSK techniques. It also has the lowest bandwidth efficiency.

Quadrature phase shift keying (QPSK) can be considered as two orthogonal BPSK channels transmitted at the same time. The transmitted signal takes two of the PCM bits at a time and forms a single channel symbol, where the channel symbol changes state every two bit periods. The two channels are called the “in-phase” or I channel and the “quadrature-phase” or Q channel. There is a total of four possible carrier phase states in QPSK, where the carrier is described by

$$s(t) = \sqrt{E_I}\, d_I(t) \cos(\omega t) + \sqrt{E_Q}\, d_Q(t) \sin(\omega t)$$

where $E_I$ and $E_Q$ are the normalized signal strengths of the I and Q channels, $d_I(t)$ and $d_Q(t)$ are the PCM output data as functions of time, and $\omega$ is the PSK carrier frequency. In the TDRSS system, the relative I and Q channel signal strengths can be varied; typical values are 1:1 for equal strength and 1:4 for unequal strength. These are defined by the user profile when using the TDRSS system.
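A minimal sketch of the QPSK symbol mapping, assuming the common form s(t) = √E_I d_I(t) cos(ωt) + √E_Q d_Q(t) sin(ωt). It also assumes that even-indexed bits drive I, odd-indexed bits drive Q, and logic 1 maps to +1. Writing s(t) as R cos(ωt − φ) gives the carrier phase φ = atan2(√E_Q d_Q, √E_I d_I).

```python
import math


def qpsk_symbols(bits, e_i=1.0, e_q=1.0):
    """Pair up bits and return the (I, Q) baseband amplitudes of each symbol."""
    if len(bits) % 2:
        raise ValueError("QPSK needs an even number of bits")
    return [
        (math.sqrt(e_i) * (1 if bits[k] else -1),
         math.sqrt(e_q) * (1 if bits[k + 1] else -1))
        for k in range(0, len(bits), 2)
    ]


def carrier_phase_deg(i_amp, q_amp):
    """Phase phi when I*cos(wt) + Q*sin(wt) is written as R*cos(wt - phi)."""
    return math.degrees(math.atan2(q_amp, i_amp))


symbols = qpsk_symbols([1, 1, 0, 1, 0, 0, 1, 0])
print([round(carrier_phase_deg(i, q)) for i, q in symbols])   # [45, 135, -135, -45]
```

With equal channel strengths the four states sit 90° apart. A 1:4 power ratio, passed as `e_q=4.0`, doubles the Q amplitude and moves the states off the diagonals.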

In QPSK, a change of channel symbol can produce a 180° phase shift, which can cause undesired harmonic generation in nonlinear amplifiers. To mitigate this, one of the two bits forming the channel symbol is delayed by one bit period. As a result, only one channel changes at a time, and phase changes are limited to a maximum of 90°. This technique is called offset QPSK (OQPSK), also known as staggered QPSK (SQPSK). The transmitted signal is then described by

$$s(t) = \sqrt{E_I}\, d_I(t - T) \cos(\omega t) + \sqrt{E_Q}\, d_Q(t) \sin(\omega t)$$

and the variables are as described for QPSK. The delay of one bit period T is seen added to the in-phase data channel. Upon reception, a similar delay is added to the quadrature-phase channel to bring the two bits back into alignment.
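The effect of the one-bit delay on the in-phase channel can be seen by tracking the carrier phase from one bit period to the next. A minimal sketch, assuming equal channel strengths, even-indexed bits on I, odd-indexed bits on Q, and the first I value held during the initial delayed period:

```python
import math


def phase_jumps_deg(bits, offset):
    """Carrier phase changes (degrees) between consecutive bit periods.

    Each channel symbol is held for two bit periods. With offset=True the I
    channel is delayed by one bit period, as in OQPSK.
    """
    if len(bits) % 2:
        raise ValueError("need an even number of bits")
    d_i = [1 if b else -1 for b in bits[0::2]]
    d_q = [1 if b else -1 for b in bits[1::2]]
    phases = []
    for slot in range(len(bits)):                 # one slot per bit period
        q = d_q[slot // 2]
        i = d_i[max(slot - 1, 0) // 2] if offset else d_i[slot // 2]
        phases.append(math.degrees(math.atan2(q, i)))
    jumps = []
    for a, b in zip(phases, phases[1:]):
        diff = abs(b - a) % 360
        jumps.append(min(diff, 360 - diff))       # smallest angular difference
    return jumps


bits = [1, 1, 0, 0, 1, 0, 0, 1]
print(max(phase_jumps_deg(bits, offset=False)), max(phase_jumps_deg(bits, offset=True)))
```

For this bit sequence plain QPSK produces 180° jumps, while the offset version never exceeds 90°, because I and Q transitions fall on alternate bit boundaries.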

In many payloads, there are two independent PCM data streams: one for health and welfare data and one for high-rate scientific data. The TDRSS system allows the I and Q channels to be treated as two independent data channels with different data rates. This form of transmission is called unbalanced QPSK (UQPSK). Its advantage is that the low-rate stream need not be transmitted at the higher data rate, which would degrade its performance. Like OQPSK, it also avoids frequent 180° phase shifts, because transitions on the two independent channels seldom coincide. The mathematical description of the carrier is the same as that given earlier for QPSK.

By applying sinusoidal waveform shaping to the I and Q data signals of offset QPSK, a form of frequency shift keying is produced. The Advanced Communications Technology Satellite used this minimum shift keying (MSK) technique. The carrier can be described by

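$$s(t) = \sqrt{E}\, d_I(t - T_b) \cos\!\left(\frac{\pi t}{2T_b}\right) \cos(\omega t) + \sqrt{E}\, d_Q(t) \sin\!\left(\frac{\pi t}{2T_b}\right) \sin(\omega t)$$

where $T_b$ is the bit period, $E$ is the normalized signal strength of each channel, and the other variables are as described for QPSK. The data filtering functions are half-period sinusoids. MSK can be demodulated by using a coherent QPSK demodulator.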
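A short numerical check of why the half-sinusoid shaping matters. The sketch below assumes MSK is built as OQPSK with equal channel strengths, shaping I by cos(πt/2T_b) and Q by sin(πt/2T_b), with even-indexed bits on the delayed I channel. It confirms that the envelope √(I² + Q²) stays constant and that each channel's shaping passes through zero exactly where its data may change.

```python
import math


def msk_baseband(bits, samples_per_bit=16, energy=1.0):
    """Baseband I and Q samples of MSK built as OQPSK with half-sinusoid shaping.

    Time is measured in units of the bit period T_b.
    """
    if len(bits) % 2:
        raise ValueError("need an even number of bits")
    d_i = [1.0 if b else -1.0 for b in bits[0::2]]
    d_q = [1.0 if b else -1.0 for b in bits[1::2]]
    amp = math.sqrt(energy)
    i_out, q_out = [], []
    for n in range(len(bits) * samples_per_bit):
        t = n / samples_per_bit                  # t / T_b
        slot = n // samples_per_bit              # current bit period
        i = d_i[max(slot - 1, 0) // 2]           # d_I(t - T_b)
        q = d_q[slot // 2]                       # d_Q(t)
        i_out.append(amp * i * math.cos(math.pi * t / 2))
        q_out.append(amp * q * math.sin(math.pi * t / 2))
    return i_out, q_out


i_s, q_s = msk_baseband([1, 0, 0, 1, 1, 1, 0, 0])
envelope = [math.hypot(i, q) for i, q in zip(i_s, q_s)]
print(min(envelope), max(envelope))   # both equal sqrt(E) to rounding error
```

Because cos² + sin² = 1, the envelope is √E at every sample. The smooth zero crossings at each data transition are what give MSK its continuous phase and compact spectrum.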

The wireless communications industry is using another form of filtered QPSK called Gaussian minimum shift keying (GMSK). Instead of sinusoidal filter functions, Gaussian-shaped filters are used to prefilter the data before mixing with the carrier. The MSK techniques are being examined because of their transmission bandwidth efficiency compared with QPSK. A related, patented form of filtered QPSK developed by Feher is currently being evaluated for telemetric systems. This FQPSK modulation system uses proprietary filter functions in a manner similar to GMSK. Preliminary results indicate that it has an improved spectral efficiency for data transmission. Tests are currently being conducted within NASA and the U.S. DoD to evaluate this technique for ground and space telemetric systems.
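The Gaussian prefilter used in GMSK can be sketched from its standard impulse response, h(t) = √(2π/ln 2) · B · exp(−2π²B²t²/ln 2), where B is the filter's 3-dB bandwidth. The sketch assumes a bandwidth-time product BT = 0.3 (the value used in GSM cellular systems), 8 samples per bit, and a 4-bit span; time is measured in bit periods, so B is expressed directly as BT.

```python
import math


def gaussian_taps(bt=0.3, samples_per_bit=8, span_bits=4):
    """Sampled impulse response of the GMSK Gaussian premodulation filter.

    Taps are normalized to sum to 1 (unity DC gain), so a long run of
    identical bits passes through unchanged.
    """
    half = span_bits * samples_per_bit // 2
    taps = []
    for n in range(-half, half + 1):
        t = n / samples_per_bit                   # time in bit periods
        taps.append(math.sqrt(2 * math.pi / math.log(2)) * bt
                    * math.exp(-2 * math.pi ** 2 * bt ** 2 * t ** 2 / math.log(2)))
    total = sum(taps)
    return [h / total for h in taps]


taps = gaussian_taps()
print(len(taps), max(taps))
```

Smaller BT values spread each bit's pulse over more neighboring bits. The spectrum becomes more compact at the cost of more intersymbol interference, which is the trade these filtered-QPSK variants make against plain QPSK.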

Data Transmission. Several techniques are used for transmitting this modulated RF signal from a spacecraft to the ground. The original, and still most common, method is direct transmission to receiving antennae on the ground. These transmissions may be initiated by ground command or instructions stored onboard or may be broadcast continuously. Signals are usually directed to specific TT&C stations, although transmission of some types of meteorological data, for example, is simply broadcast to all interested users. For weak signals, ground antennae are occasionally arrayed to provide increased gain.

Another technique increasingly used is relay through another satellite, usually one in geosynchronous orbit (see TDRSS section following). This technique involves a space-to-space cross-link in addition to the relay-sat-to-ground link, but it offers several advantages. Communications with Earth-orbiting satellites are possible almost any time, any place. (Depending on specific geometry, some limitations may exist in polar regions.) This avoids the need for a large network of ground stations. Such ground networks are usually expensive to build and operate and are subject to disruption due to local political and environmental conditions. It also centralizes the point of data reception on the ground, avoiding the need for subsequent terrestrial relay. Although cross-links can present some technological challenges, they also offer freedom from interference and interception.

Like the evolution of tracking data handling described before—and for similar reasons—telemetric data is usually transferred from the ground receiving station to a central facility for processing. Even though the telemetric data rates of today are greatly increased, economical hardware exists to perform much of this processing in a “black box” in real time at the ground station. However, as will be discussed under Future Trends, other factors limit this evolution.

The characteristics of the transmission medium and path differ between the space-to-ground link and the terrestrial link from the ground station back to the control and central data handling centers. Although the latter was sometimes HF radio in very early days, it is normally satellite or coax/fiber today. As a direct consequence, the “best” protocols for the terrestrial link differ from those for the space-to-ground link. The ground receiving station must therefore either reformat the data or, more commonly, encapsulate the space-link protocol within the terrestrial protocol, increasing overhead.

As the Internet has grown, considerable interest has developed in using standard Internet data protocols (Transmission Control Protocol/Internet Protocol, TCP/IP) in spacecraft. This is still a research area, but scientific investigators and system designers are keenly interested in extending Internet support to space. The ground-based TCP/IP protocols have some recognized difficulties in the space environment. For example, long link delays and relatively high channel error rates lower their throughput. To mitigate these effects, research is ongoing into modifications of TCP/IP for space. Progress is also being made within CCSDS to develop the Space Communications Protocol System (SCPS), which is based on the TCP/IP protocol stack but modified for use in the space channel environment.
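One reason long delays hurt TCP is that a sender may have at most one window of unacknowledged data outstanding per round trip. The sketch below assumes a 65,535-byte window (the largest possible without the TCP window-scaling option) and a round trip of roughly half a second, typical of a path through a geosynchronous relay. It ignores losses, which reduce throughput further.

```python
def window_limited_throughput_bps(window_bytes, rtt_s):
    """Upper bound on throughput when at most one window is sent per round trip."""
    return 8 * window_bytes / rtt_s


def window_needed_bytes(link_rate_bps, rtt_s):
    """Bandwidth-delay product: bytes that must be in flight to keep the link full."""
    return link_rate_bps * rtt_s / 8


print(window_limited_throughput_bps(65_535, 0.5))   # 1048560.0 bit/s, about 1 Mbit/s
print(window_needed_bytes(100e6, 0.5))              # 6250000.0 bytes for 100 Mbit/s
```

However fast the radio link is, an unmodified sender with this window cannot exceed about 1 Mbit/s. Filling a 100-Mbit/s link would require roughly 6 MB in flight.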

As part of the long-term trend for space communications, system designers are investigating the application of commercial telecommunications technology to space communications. This emphasis comes from two drivers: the need to reduce system cost and the need to use system bandwidth better. By using commercial telecommunications satellites and associated ground networks, spacecraft operating costs can be reduced because infrastructure costs may be shared with commercial users. The need to use more efficient modulation techniques has led system designers to consider techniques used in the wireless industry. Modulation techniques such as GMSK and FQPSK were designed for the commercial wireless communications industry. The FQPSK technique is currently being evaluated within the U.S. Department of Defense for use in missile and aeronautical telemetry. Their relatively higher bandwidth efficiency over PSK methods makes them good candidates for use in constrained-bandwidth environments for space as well.

Data Processing. Telemetry was originally processed in decommutators that were essentially giant patch panels, reconfigured for each spacecraft. As computing power grew, processing was accomplished in software on mainframe computers. (Like IBM, many space pioneers found it difficult to advance beyond this stage.) The next step was the use of application-specific integrated circuit (ASIC) technology. As ASICs became practical, they proved much more efficient than software-controlled general purpose machines for repetitive processes such as telemetric processing. Also, processing in chips rather than mainframes permitted substantial increases in telemetry throughput rates, avoiding the need for expensive buffering to reduce processing rates. (They also proved considerably more reliable, because most operating failures were in the connections.) The current state of the art is to use field-programmable gate arrays (FPGAs), which combine the speed of a hardware approach with the flexibility of a software solution. Numerous vendors now offer such products.

Processing of data is often broken down into four levels, Level 0 through Level 3. Though the Level 0 step is still common, it is primarily an artifact of reel-type onboard data recorders. Data were recorded serially onboard for playback (dump) to a ground station when one was in view. The data were then usually dumped by reverse playback, avoiding the need to rewind and cue the recorder. The Level 0 function is to remove the space-to-ground transmission protocols, restore the reversed data stream to its original direction, and separate the various multiplexed parameters into their original components. Level 1 processing involves appending ancillary data from other sources, such as orbit and attitude data. Levels 2 and 3 require the active involvement and judgment of scientists in analyzing and presenting the data. Levels 1 through 3 are normally considered part of scientific data processing rather than the ground data-handling function.
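Two of the Level 0 steps described above, undoing a reverse tape dump and demultiplexing the serial stream, can be sketched as follows. The frame length, word size, and channel layout are hypothetical, and the removal of space-to-ground protocols is not shown.

```python
def restore_reverse_playback(dumped_bits):
    """Undo a reverse tape dump: the last bit recorded is the first bit received."""
    return dumped_bits[::-1]


def demultiplex(bits, frame_len, word_len, channels):
    """Split a serial stream of fixed-length frames into per-channel word lists.

    channels maps a channel name to its word position within each frame
    (a hypothetical layout).
    """
    out = {name: [] for name in channels}
    for start in range(0, len(bits) - frame_len + 1, frame_len):
        frame = bits[start:start + frame_len]
        for name, pos in channels.items():
            word = frame[pos * word_len:(pos + 1) * word_len]
            out[name].append(int("".join(map(str, word)), 2))
    return out


# Two recorded frames of two 4-bit words each: (3, 10) then (5, 1).
recorded = [0, 0, 1, 1, 1, 0, 1, 0, 0, 1, 0, 1, 0, 0, 0, 1]
dumped = recorded[::-1]                      # what the ground station receives
restored = restore_reverse_playback(dumped)
print(demultiplex(restored, 8, 4, {"ch_a": 0, "ch_b": 1}))   # {'ch_a': [3, 5], 'ch_b': [10, 1]}
```

Reversal must come first. Applied to the dumped stream directly, the demultiplexer would read every word with its bits backward and the frames in the wrong order.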

The solid-state recorders increasingly used on spacecraft today, of course, do not need to be dumped in reverse. Data can also be read out of such recorders in any desired sequence, obviating the need to demultiplex a serial data stream on the ground. Finally, using economical hardware and relatively simple software, space-to-ground protocols can be stripped and data reformatted for terrestrial transmission in real time, even at 100-Mbps rates. Together, these capabilities effectively obviate the need for Level 0 processing. Similarly, using GPS for onboard orbit determination, as described earlier, obviates the Level 1 step of appending orbit data on the ground.

Error-Correction Coding

Error-correction coding has been studied by theorists since Shannon’s work in the late 1940s. It became widely used in space communications in the 1970s, following the development of practical techniques by Fano and Viterbi for decoding convolutional codes. Current data services on NASA’s Deep Space Network and the TDRSS network permit the user to select from standard error-correction coding techniques.

Forward Error-Correcting Codes. Transmission over the space channel introduces errors into the data stream. The goal for space-to-ground transmission accuracy for most telemetric data has historically been a bit error rate (BER) of $10^{-5}$, or no more than one error in 100,000 bits. (For comparison, coaxial cable and fiber generally deliver data at BERs better than $10^{-10}$.) Two basic techniques are used for recovery: request a retransmission of the data, or add an error-correcting code to the data. On missions that have long propagation delays, requesting retransmissions often reduces data throughput to unacceptably low levels. Error correction is therefore usually preferred; it also requires less memory and processing capability on the spacecraft, which would otherwise have to hold transmitted data until their receipt is acknowledged.
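Why long propagation delays hurt retransmission can be shown with the simplest retransmission scheme, stop-and-wait, in which the sender waits one round trip for an acknowledgment after every frame. The sketch below is an illustration, not a model of any particular mission: the 8,920-bit frame, 1-Mbps rate, and round-trip times are hypothetical round numbers, and bit errors are assumed independent. Pipelined schemes recover much of the lost efficiency, but only by buffering more unacknowledged frames onboard, which is the memory cost mentioned above.

```python
def frame_success_probability(frame_bits: int, ber: float) -> float:
    """Probability that a frame arrives with no bit errors (independent errors assumed)."""
    return (1.0 - ber) ** frame_bits


def stop_and_wait_efficiency(frame_bits: int, bit_rate_bps: float,
                             round_trip_s: float, ber: float) -> float:
    """Fraction of channel time spent on frames that are delivered correctly.

    Each attempt costs one frame time plus one round trip waiting for the
    acknowledgment; on average 1/p attempts are needed per delivered frame.
    """
    t_frame = frame_bits / bit_rate_bps
    p_ok = frame_success_probability(frame_bits, ber)
    return p_ok * t_frame / (t_frame + round_trip_s)


# Hypothetical 8,920-bit frame at 1 Mbps with BER = 1e-5; illustrative round trips.
for label, rtt in [("LEO ground pass", 0.01), ("geosynchronous relay", 0.5), ("Mars", 1200.0)]:
    eff = stop_and_wait_efficiency(8920, 1e6, rtt, 1e-5)
    print(f"{label:>22}: efficiency {eff:.2e}")
```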

Here, we discuss the two error-correcting coding techniques commonly used in space systems. Because they permit correction of the data upon reception, without retransmission, they are called forward error-correcting (FEC) techniques. They accomplish this by adding redundant information to the transmitted data. Consequently, a higher transmission rate, and therefore more transmission bandwidth, is needed to achieve the same raw data throughput. This is considered an acceptable price for much higher data quality. FEC can permit a system to operate as if it had a 3- to 6-dB higher received signal-to-noise ratio than it actually has, a significant benefit.
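The bandwidth cost and the power benefit of FEC reduce to simple arithmetic. In this sketch the 1-Mbps information rate is a hypothetical example; a rate one-half code sends two channel symbols per data bit, and a coding gain in decibels converts to an equivalent multiplier on transmitter power.

```python
def db_to_power_ratio(db: float) -> float:
    return 10 ** (db / 10)


def fec_cost_and_benefit(info_rate_bps: float, code_rate: float, coding_gain_db: float):
    """Return (channel symbol rate, equivalent transmitter power multiplier)."""
    symbol_rate = info_rate_bps / code_rate  # redundancy raises the transmitted rate
    return symbol_rate, db_to_power_ratio(coding_gain_db)


for gain in (3.0, 6.0):
    rate, power = fec_cost_and_benefit(1e6, 0.5, gain)
    print(f"{gain} dB gain: {rate / 1e6:.0f} Msymbols/s, like {power:.1f}x transmitter power")
```

A 3-dB gain is thus roughly equivalent to doubling transmitter power, and 6 dB to roughly quadrupling it, bought at the price of twice the symbol rate for a rate one-half code.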

The FEC technique with the longest history in space communications is convolutional coding. It has been used on missions since the 1970s, including deep space missions such as Voyager. The convolutional encoder is built around a fixed-length shift register whose length is known as the constraint length K. Once each bit time, a new data bit enters the shift register, and multiple outputs are computed as functions of the current shift register contents. Typically two or three outputs are generated for each bit time, giving rise to rate one-half or rate one-third coding. A Viterbi decoder is used to decode the data and correct transmission errors. NASA requires that all S-band services on TDRSS use a rate one-half convolutional encoder, typically with a constraint length of K = 7. TDRSS also supports rate one-third coding, but it is not part of the CCSDS recommendations.
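The shift-register encoder and Viterbi decoder described above can be sketched as follows. Assumptions: the generator polynomials 171 and 133 (octal), widely used for the K = 7, rate one-half code; hard-decision decoding (practical decoders usually use soft decisions); and the data block terminated with K − 1 zero bits so the decoder ends in the all-zero state. Bit ordering and output symbol conventions of the CCSDS standard are not modelled, and keeping a full path per state is memory-hungry but keeps the sketch short.

```python
import random

K = 7                     # constraint length
G1, G2 = 0o171, 0o133     # generator polynomials (octal), assumed
N_STATES = 1 << (K - 1)   # 64 encoder states: the K-1 most recent input bits


def parity(x: int) -> int:
    return bin(x).count("1") & 1


def branch_output(state: int, bit: int):
    """Shift `bit` into the register; return (two output symbols, next state)."""
    reg = (bit << (K - 1)) | state          # newest bit in the most significant position
    return (parity(reg & G1), parity(reg & G2)), reg >> 1


def conv_encode(bits):
    state, out = 0, []
    for b in list(bits) + [0] * (K - 1):    # tail bits flush the register back to state 0
        symbols, state = branch_output(state, b)
        out.extend(symbols)
    return out


def viterbi_decode(symbols, n_data_bits):
    inf = float("inf")
    metrics = [0.0] + [inf] * (N_STATES - 1)  # encoder starts in state 0
    paths = [[] for _ in range(N_STATES)]
    for t in range(len(symbols) // 2):
        received = symbols[2 * t], symbols[2 * t + 1]
        new_metrics = [inf] * N_STATES
        new_paths = [[] for _ in range(N_STATES)]
        for state in range(N_STATES):
            if metrics[state] == inf:
                continue
            for bit in (0, 1):
                expected, nxt = branch_output(state, bit)
                # Path metric: accumulated Hamming distance to the received symbols
                m = metrics[state] + (expected[0] != received[0]) + (expected[1] != received[1])
                if m < new_metrics[nxt]:      # keep only the best path into each state
                    new_metrics[nxt] = m
                    new_paths[nxt] = paths[state] + [bit]
        metrics, paths = new_metrics, new_paths
    return paths[0][:n_data_bits]             # terminated trellis ends in state 0


random.seed(7)
data = [random.randint(0, 1) for _ in range(40)]
received = conv_encode(data)
for i in (3, 20, 41, 70):                     # four scattered channel errors
    received[i] ^= 1
print(viterbi_decode(received, len(data)) == data)  # True
```

Because this code's free distance is 10, maximum-likelihood decoding of a terminated block corrects any pattern of four or fewer symbol errors, which is why the four flipped symbols above are all repaired.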

The Reed-Solomon coding technique is a form of block coding in which the data stream is partitioned into fixed-length blocks and redundant information is added to the end of each block to form the transmitted signal. A Reed-Solomon block code is characterized by the total number of symbols in a transmitted block and the number of those symbols that carry information. The CCSDS recommends Reed-Solomon encoding using transmitted blocks of 255 total symbols, 223 of which are information symbols, with each symbol an 8-bit byte. This is written as a (255,223) R-S code.
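The sketch below shows systematic (255,223) Reed-Solomon encoding over GF(2^8): the 223 information bytes are sent unchanged, followed by 32 check bytes, enough to correct up to 16 byte errors per block. Assumptions: the field polynomial x^8 + x^4 + x^3 + x^2 + 1 (0x11D) and generator roots alpha^0 through alpha^31 are common textbook choices, not the CCSDS ones, which specify their own field, generator, and symbol representation. Decoding is omitted; the sketch only computes syndromes to show that a clean block checks out and a corrupted one does not.

```python
PRIM = 0x11D       # x^8 + x^4 + x^3 + x^2 + 1, a primitive polynomial (assumed; not the CCSDS field)
N, K_INFO = 255, 223
NSYM = N - K_INFO  # 32 check symbols, enough to correct up to 16 symbol errors

# Exponential and logarithm tables for GF(2^8); EXP is doubled to avoid a modulo in gf_mul.
EXP = [0] * 512
LOG = [0] * 256
value = 1
for power in range(255):
    EXP[power] = value
    LOG[value] = power
    value <<= 1
    if value & 0x100:
        value ^= PRIM
for power in range(255, 512):
    EXP[power] = EXP[power - 255]


def gf_mul(a: int, b: int) -> int:
    if a == 0 or b == 0:
        return 0
    return EXP[LOG[a] + LOG[b]]


def poly_mul(p, q):
    """Multiply polynomials with GF(2^8) coefficients (highest degree first)."""
    r = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            r[i + j] ^= gf_mul(a, b)   # addition in GF(2^m) is XOR
    return r


def poly_eval(p, x):
    y = 0
    for c in p:                        # Horner's rule
        y = gf_mul(y, x) ^ c
    return y


def generator_poly(nsym: int):
    g = [1]
    for i in range(nsym):
        g = poly_mul(g, [1, EXP[i]])   # (x - alpha^i); minus equals plus in GF(2^m)
    return g


GEN = generator_poly(NSYM)


def rs_encode(msg):
    """Systematic encoding: message symbols followed by the division remainder."""
    assert len(msg) == K_INFO
    buf = list(msg) + [0] * NSYM
    for i in range(K_INFO):            # polynomial long division by the monic GEN
        coef = buf[i]
        if coef:
            for j in range(1, len(GEN)):
                buf[i + j] ^= gf_mul(GEN[j], coef)
    return list(msg) + buf[K_INFO:]


def syndromes(codeword):
    return [poly_eval(codeword, EXP[i]) for i in range(NSYM)]


message = [(7 * i) % 256 for i in range(K_INFO)]
block = rs_encode(message)
print(len(block), all(s == 0 for s in syndromes(block)))  # 255 True
block[10] ^= 0x5A                                          # corrupt one byte
print(any(syndromes(block)))                               # True
```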

The CCSDS standards recommend using Reed-Solomon codes either by themselves or in conjunction with convolutional codes, as discussed in the following section. The TDRSS does not support Reed-Solomon decoding as a basic service but will pass encoded data to the user for decoding. Reed-Solomon codes can be used without convolutional coding through TDRSS if Ku-band services are being used, but not in the S-band services.

Concatenated Coding. Errors at the output of a Viterbi decoder tend to occur in bursts. To compensate, missions can use a concatenated coding technique. First, a Reed-Solomon code is applied to the data stream to form what is known as the “outer code.” These coded data are then encoded with a convolutional, or “inner,” code. The convolutional code removes the bulk of the channel transmission errors, and the Reed-Solomon code removes the burst errors left by the convolutional decoder. The CCSDS recommendation for concatenated coding is to use a (255,223) R-S outer code with a rate one-half, K = 7 convolutional inner code.
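The reason a Reed-Solomon outer code handles bursts well is that its decoder counts symbol errors, not bit errors, so a burst of many bad bits costs only a few symbols. This sketch computes the longest single bit burst that a (255,223) code with 8-bit symbols (correcting t = 16 symbols) is guaranteed to correct wherever the burst starts. It considers one burst per block and ignores any additional interleaving, so it illustrates the principle rather than giving a system performance figure.

```python
def symbols_touched(start_bit: int, burst_len: int, bits_per_symbol: int = 8) -> int:
    """Number of symbols overlapped by a burst of consecutive bit errors."""
    first = start_bit // bits_per_symbol
    last = (start_bit + burst_len - 1) // bits_per_symbol
    return last - first + 1


def worst_case_symbols(burst_len: int, bits_per_symbol: int = 8) -> int:
    """Worst alignment of the burst relative to symbol boundaries."""
    return max(symbols_touched(s, burst_len, bits_per_symbol) for s in range(bits_per_symbol))


def longest_guaranteed_burst(t: int = 16, bits_per_symbol: int = 8) -> int:
    b = 1
    while worst_case_symbols(b + 1, bits_per_symbol) <= t:
        b += 1
    return b


print(longest_guaranteed_burst())  # 121
```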

A recently discovered class of codes, turbo codes, has begun to be seriously investigated for application in space communications. These codes were first published in 1993. Their advantage is performance near the Shannon limit on channel capacity, even at low signal-to-noise ratios. Recent experiments with TDRSS have shown cases in which the ground station receivers could not lock onto the signal in low-SNR conditions even though turbo decoding of the symbols was still functioning. Turbo codes are built from convolutional codes on the encoding side and are decoded in the receiver using iterative techniques. Current research involves determining optimal code structures and decoding techniques for use in the space channel.
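The Shannon limit mentioned above can be made concrete. Channel capacity is $C = B \log_2(1 + S/N)$; writing $S = E_b R$ and $N = N_0 B$ and requiring $R \le C$ gives $E_b/N_0 \ge (2^{\eta} - 1)/\eta$, where $\eta = R/B$ is the spectral efficiency in bits per second per hertz. The short sketch below evaluates this bound for an ideal additive white Gaussian noise channel; as $\eta$ shrinks, the bound approaches $\ln 2$, about −1.59 dB, the value that codes such as turbo codes approach.

```python
import math


def min_ebn0_db(spectral_efficiency: float) -> float:
    """Shannon bound on Eb/N0 (dB) for reliable transmission over an AWGN channel
    at the given spectral efficiency (information bits per second per hertz)."""
    eta = spectral_efficiency
    return 10 * math.log10((2 ** eta - 1) / eta)


for eta in (2.0, 1.0, 0.5, 0.1, 1e-6):
    print(f"{eta:>8} bit/s/Hz -> Eb/N0 >= {min_ebn0_db(eta):6.2f} dB")
```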

Command

Command is essentially the reverse of telemetry: digital data are modulated in one of several ways onto the uplink carrier. The basic differences are that data rates are much lower and transmission integrity is usually more critical, because these data normally control the spacecraft. The command system can generally be thought of as providing instructions for onboard spacecraft systems to initiate some action. Two classes of command strategies are commonly used in modern spacecraft: single command words and command files. Many early spacecraft command systems were basically tone response systems; that is, when a tone of a specific frequency was received by the spacecraft, a specific action was undertaken. Tone commands are still often found in rocket command destruct systems.

CCSDS has published standards used by many programs. Differences among programs lie primarily in the intermediate transmission path between the originating control facility and the actual RF transmitter. In some concepts the command message is transmitted directly from the control center in “real time,” whereas in others it is staged at intermediate points such as the remote ground station. In the latter case, the message is error checked and stored at the intermediate location, awaiting a transmit instruction triggered either by time or by an electronic or verbal instruction from the control center. A “command echo” is frequently used for verification: a receiver at the ground antenna detects the command on the RF link, and the detected command is returned to the control center for comparison with the intended command. The term “command” is often used to describe all signals modulated on the uplink; however, uplinks can also include analog and digital voice communications with astronauts, and video uses are planned for the future.

The command word typically looks like a binary computer instruction containing a system address, a command instruction number, and a command data value. Sometimes the command uses a simple checksum for error detection. Another error-check strategy used in spacecraft is to send the command bits twice and perform a bit-wise check for agreement before executing. In all cases, the received command word is echoed in the telemetric data stream as a specific telemetry word, so that the operator can check command validity. The spacecraft is configured for two possible modes of command acceptance: execute the command upon passing the error check, or wait until a ground operator sends an execute command after verifying that the operational command was correctly received. In operations, routine commands are executed immediately upon passing error validation. Commands that could be dangerous to the spacecraft, for example, firing a thruster or detonating pyrotechnic bolts, keep the operator in the loop: the operator waits for the configuration command to be correctly echoed in telemetry and then sends the execute command to initiate the actual operation.
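The command word and acceptance logic described above can be sketched as follows. Everything specific here is hypothetical: the 4/6/16-bit field widths, the one-byte additive checksum, the opcode treated as hazardous, and the class and method names. The sketch packs a command word, checks it onboard, echoes it for telemetry, and either executes it at once or holds it until a separate execute command arrives.

```python
# Hypothetical command format: 4-bit system address, 6-bit instruction number, 16-bit data value.
ADDR_BITS, OPCODE_BITS, DATA_BITS = 4, 6, 16
HAZARDOUS_OPCODES = {0x21}  # hypothetical, e.g. "fire thruster"


def checksum(word: int) -> int:
    """Simple additive checksum over the word's four bytes (an assumption)."""
    return sum(word.to_bytes(4, "big")) & 0xFF


def pack_command(addr: int, opcode: int, data: int):
    assert 0 <= addr < 1 << ADDR_BITS and 0 <= opcode < 1 << OPCODE_BITS and 0 <= data < 1 << DATA_BITS
    word = (addr << (OPCODE_BITS + DATA_BITS)) | (opcode << DATA_BITS) | data
    return word, checksum(word)


def copies_agree(first: int, second: int) -> bool:
    """Alternative check: the word is sent twice and compared bit by bit."""
    return (first ^ second) == 0


class CommandDecoder:
    def __init__(self):
        self.pending = None          # hazardous command awaiting an execute command
        self.telemetry_echo = None   # last accepted word, echoed in the telemetry stream
        self.executed = []

    def receive(self, word: int, check: int) -> str:
        if checksum(word) != check:
            return "rejected"
        self.telemetry_echo = word
        opcode = (word >> DATA_BITS) & ((1 << OPCODE_BITS) - 1)
        if opcode in HAZARDOUS_OPCODES:
            self.pending = word      # operator must verify the echo first
            return "awaiting execute"
        self.executed.append(word)   # routine command: execute on passing the check
        return "executed"

    def execute(self) -> str:
        if self.pending is None:
            return "nothing pending"
        self.executed.append(self.pending)
        self.pending = None
        return "executed"


decoder = CommandDecoder()
routine = pack_command(addr=3, opcode=0x05, data=1200)
hazard = pack_command(addr=9, opcode=0x21, data=2)
print(decoder.receive(*routine))         # executed
print(decoder.receive(*hazard))          # awaiting execute
if decoder.telemetry_echo == hazard[0]:  # ground operator compares the echo
    print(decoder.execute())             # executed
```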

Command files are more appropriate for sequences of operations, especially for spacecraft beyond Earth orbit. For example, for the Voyager planetary encounters, where signal travel times could run to hours, a file of command sequences for camera settings and spacecraft attitude was loaded before the encounter. The operators verified that the file had been loaded into spacecraft memory by playing back the memory through the telemetric stream. Any transmission errors could be corrected before the encounter, and the spacecraft then operated in automatic mode, without immediate operator interaction, during the encounter. The commands within a file can be structured like those used in the individual command mode; the file simply organizes them for bulk transmission.
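The load-and-verify cycle for a command file can be sketched as a comparison between what was uplinked and what the memory playback shows, so that only damaged portions are sent again. The 64-byte block size, the function names, and the idea of re-sending whole blocks are assumptions for illustration; they do not describe Voyager's actual procedures.

```python
def blocks_to_resend(uplinked: bytes, readback: bytes, block_size: int = 64):
    """Compare the uplinked command file with the memory readback from telemetry
    and return the indices of blocks that must be uplinked again."""
    assert len(uplinked) == len(readback)
    bad = []
    for k in range((len(uplinked) + block_size - 1) // block_size):
        lo, hi = k * block_size, (k + 1) * block_size
        if uplinked[lo:hi] != readback[lo:hi]:
            bad.append(k)
    return bad


def patch(readback: bytes, uplinked: bytes, bad_blocks, block_size: int = 64) -> bytes:
    """Simulate re-uplinking only the bad blocks into spacecraft memory."""
    memory = bytearray(readback)
    for k in bad_blocks:
        lo, hi = k * block_size, (k + 1) * block_size
        memory[lo:hi] = uplinked[lo:hi]
    return bytes(memory)


command_file = bytes(range(256))  # hypothetical 256-byte command file
memory = bytearray(command_file)
memory[130] ^= 0xFF               # a transmission error lands in block 2
bad = blocks_to_resend(command_file, bytes(memory))
print(bad)                                                       # [2]
print(patch(bytes(memory), command_file, bad) == command_file)   # True
```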

All of the modulation and coding techniques used for telemetric transmission apply to command transmission as well. In general, however, very sophisticated FEC is not used on the uplink because of onboard processing limitations. Instead, parity checks or checksums are often used to identify command errors. For low Earth orbit (LEO) operations, simply repeating commands that are found in error is often sufficient.

TDRSS Relay Satellites

In the mid-1970s, NASA began developing a communications and tracking network in space, the Tracking and Data Relay Satellite System (TDRSS). The basic concept was demonstrated during the Apollo-Soyuz Test Project mission using the Applications Technology Satellite ATS-6. With relay satellites, communications with LEO satellites can be increased from 5 to 10 minutes per contact at a fixed ground station to more than 85% of the total orbit time. (The TDRSS concept used a single ground station with two active relay satellites located above its horizon. This left a “zone of exclusion,” or ZOE, on the opposite side of Earth that had no coverage. The ZOE was later covered by another relay satellite, using a second ground station, to meet the needs of the Compton Gamma Ray Observatory (CGRO), which increased TDRSS coverage to 100%.)
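The short contacts available from a single ground station follow from geometry. This sketch computes the longest possible pass, one directly overhead, for a circular orbit; it assumes a spherical, non-rotating Earth, and the altitudes and elevation masks are illustrative choices rather than values from any particular mission.

```python
import math

MU_EARTH_KM3_S2 = 398600.4418  # Earth's gravitational parameter
R_EARTH_KM = 6378.137          # equatorial radius; Earth treated as a sphere


def max_pass_minutes(altitude_km: float, min_elevation_deg: float = 0.0) -> float:
    """Longest possible contact (an overhead pass) for a circular orbit,
    ignoring Earth's rotation."""
    r = R_EARTH_KM + altitude_km
    eps = math.radians(min_elevation_deg)
    # Nadir angle at the satellite when the station sees it at the minimum elevation
    eta = math.asin(R_EARTH_KM / r * math.cos(eps))
    # Earth central angle from the station to the edge of its visibility circle
    lam = math.pi / 2 - eps - eta
    period_s = 2 * math.pi * math.sqrt(r ** 3 / MU_EARTH_KM3_S2)
    # An overhead pass sweeps 2 * lam of the orbit's 2 * pi radians
    return (2 * lam) / (2 * math.pi) * period_s / 60


for h in (300, 500, 800):
    print(f"{h} km: {max_pass_minutes(h, 0):.1f} min (0 deg mask), "
          f"{max_pass_minutes(h, 10):.1f} min (10 deg mask)")
```

At 500 km the best case is roughly 11.5 minutes with no elevation mask and under 7.5 minutes with a 10° mask; most passes are not overhead and are shorter, consistent with the 5 to 10 minutes per contact cited above.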

TDRSS was first seen as just another station of the STDN, except that it was located in the sky. However, technical differences such as signal levels and Doppler rates made it clearly a very different kind of station. The primary motivation for building TDRSS was economic, more for cost avoidance than cost reduction: the cost of upgrading the STDN to meet Space Shuttle needs was estimated to exceed the cost of developing and deploying a relay satellite system. A second consideration was political; operations at ground facilities on foreign territory had been disrupted several times by political disputes. The first TDRSS satellite was launched in April 1983. It was stranded halfway to geosynchronous altitude by a malfunction of its upper stage rocket, but by using its tiny maneuvering thrusters, it eventually reached its operational location over the Atlantic that summer. Launching the second satellite was the objective of the ill-fated Challenger mission in January 1986. Completing the TDRSS constellation was a high priority because so many new programs depended on it. Thus, the third satellite was aboard Discovery in September 1988 on the first Shuttle mission after Challenger. That mission was completely successful, and four more spacecraft have since been successfully launched.

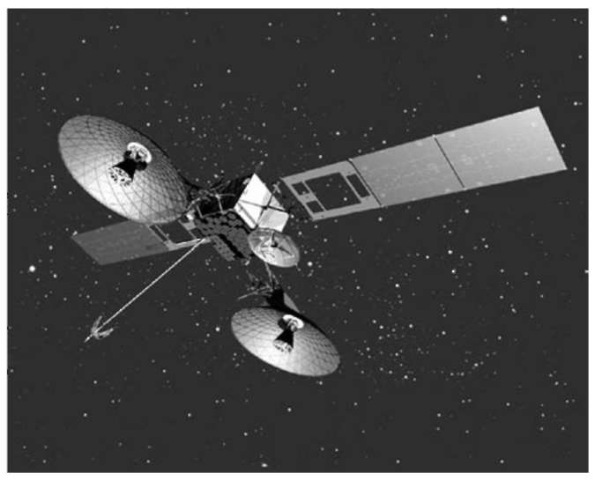

From technical and performance standpoints, TDRSS has proven outstanding. In addition to supporting users at standard S-band frequencies, TDRSS provides a higher-frequency service in the Ku-band region and commercial service at C-band. (The commercial service was added by the spacecraft contractor to spread the costs of the satellites over more users and thus reduce costs.) This complex system has demonstrated its ability to relay data at more than 400 megabits per second from user spacecraft virtually anywhere over Earth. Each relay satellite can communicate with two high-rate users simultaneously, and the system can handle an additional 20 low-rate users. This low-rate system, termed multiple access, was one of the first to successfully use code division multiple access (CDMA) techniques, which permit multiple users to share the same radio spectrum and are now employed in some cellular phone systems. TDRSS provides more than six times the global coverage of the ground network it replaced, at data rates six times as high. It enables real-time contact virtually anytime and anyplace, an especially valuable feature of the relay satellite approach. It has also proven unexpectedly applicable to small systems and has been used successfully with high-altitude balloons, with aircraft to relay real-time infrared images for forest fire fighting, and with handheld transmitters for voice and data.
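The CDMA principle, several users sharing one band at the same time, can be illustrated with a toy example. This is a simplified sketch, not TDRSS signal design: it uses short orthogonal Walsh codes in place of the long pseudo-noise codes used on real links, and assumes a noise-free channel and perfectly synchronized users. Each user multiplies its data by its own code; the receiver recovers one user by correlating the combined signal with that user's code, which cancels the other user exactly.

```python
def walsh_codes(n: int):
    """Sylvester construction of n mutually orthogonal +/-1 codes (n a power of two)."""
    codes = [[1]]
    while len(codes) < n:
        codes = [c + c for c in codes] + [c + [-v for v in c] for c in codes]
    return codes


def spread(bits, code):
    """Map each bit to +/-1 and multiply it by the user's chip sequence."""
    chips = []
    for b in bits:
        symbol = 1 if b else -1
        chips.extend(symbol * c for c in code)
    return chips


def despread(chips, code):
    """Correlate each chip group with the user's code; the sign gives the bit."""
    n = len(code)
    bits = []
    for i in range(0, len(chips), n):
        corr = sum(x * c for x, c in zip(chips[i:i + n], code))
        bits.append(1 if corr > 0 else 0)
    return bits


codes = walsh_codes(4)
user_a, user_b = [1, 0, 1, 1], [0, 0, 1, 0]
# Both users transmit at once in the same band: their chip streams simply add.
channel = [a + b for a, b in zip(spread(user_a, codes[1]), spread(user_b, codes[2]))]
print(despread(channel, codes[1]), despread(channel, codes[2]))  # [1, 0, 1, 1] [0, 0, 1, 0]
```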

A fixed-price procurement approach was selected even though extensive development was needed, and NASA became directly responsible for launching the satellites. Thus, substantial government-industry cooperation was required in the face of a somewhat adversarial contractual relationship. Another significant challenge was dealing with two distinctly different cultures: a communications industry accustomed to a highly regulated environment and the engineering-focused aerospace industry. The difficulties were more complex than can be summarized here; interested readers are referred to Aller, R.O. Issues in NASA Program and Project Management. From a program perspective, TDRSS was deployed late and cost more than originally estimated. Even so, the cost of augmenting the STDN for the Shuttle was avoided, and closing most of the STDN in the 1980s and shifting to TDRSS actually reduced costs: amortized development and launch costs plus operating expenses of TDRSS are less than the operating costs alone would have been for the ground network it replaced.

Figure 2. Second generation of NASA’s TDRSS spacecraft, used for tracking and communications with most near-Earth-orbiting spacecraft. From geosynchronous orbit, the two large movable antennae each provide communication at high data rates with spacecraft far below. A phased array antenna on the Earth-facing panel permits simultaneous communications with 20 users at lower data rates. The small dish antenna relays signals to/from the ground control station in New Mexico.

NASA procured three follow-on TDRSS spacecraft in the mid-1990s. Hughes (now Boeing) won the competition with a design based on its HS-601 bus. An artist’s rendition of this design is shown in Fig. 2. The first of this series was successfully launched in 2000.

Radio-Frequency Spectrum Issues

By the nature of space operations, “wireless” communications links are necessary, so ground data-receiving facilities are strongly influenced by radio spectrum issues. The International Telecommunication Union (ITU) was established in 1865 (as the International Telegraph Union). The ITU is now an agency of the United Nations and endeavors to coordinate radio spectrum use internationally. Radio waves have no respect for political boundaries, so this coordination has become critical. As transmitters and receivers moved from terrestrial locations into the air and then into space, their signals radiated over far more of Earth. As the private sector sought to capitalize on the economic potential of the freedom from “wires” that technology subsequently enabled, extreme pressures developed for spectrum allocations. This situation, together with the extremely rapid development of associated technologies, presented an especially vital but difficult challenge to the somewhat cumbersome ITU processes. Not surprisingly, these pressures have focused on the lower frequencies. Technology is more proven in this region, and signal characteristics such as power, directionality, and interference favor it for widespread commercial use. Because wavelengths are longer, hardware manufacturing tolerances are also less critical, and costs are reduced.

For largely these same reasons, space activities initially operated in the lower frequency regions, primarily the VHF region. However, factors such as interference from other RF users in this region and the need for greater bandwidth slowly drove space programs to vacate it in favor of higher frequencies. NASA chose the S-band region, with uplinks near 2100 MHz and downlinks above 2200 MHz. Some other U.S. agencies elected to downlink at these same frequencies but to transmit uplinks at lower frequencies (L-band, near 1700 MHz). This S-band spectrum was originally allocated internationally for space use in a footnote to the allocations table, which specifically noted that such use was permitted only on a secondary basis. A secondary allocation meant that use must not interfere with the use of this spectrum by holders of primary allocations and, conversely, that holders of secondary allocations had no recourse if primary users interfered with them. Interestingly, this applied to programs such as NASA’s Apollo and Space Shuttle: their primary operating frequencies were authorized only as a secondary allocation and were legally subject to these constraints. (Deployment of the TDRSS relay satellites had an interesting effect on this situation, because TDRSS transmits downward to low Earth-orbiting satellites at the normal uplink frequency and, conversely, receives signals transmitted upward at the downlink frequency.)

This intense competition for spectrum, together with the need for additional bandwidth and the desire for primary allocations, is driving space programs to adopt higher frequencies, mostly in the Ku- and Ka-band regions. Initial use of optical frequencies is also being considered. Technologies for these higher frequencies are not as mature as those for lower frequencies, but they offer advantages in bandwidth and in the power and weight of communications terminals in space. Disadvantages, in addition to less mature technologies, include the need for high antenna pointing precision and susceptibility to atmospheric interference, especially from rain and clouds. Certainly, the costs of necessary modifications to the ground infrastructure inhibit these moves to higher frequencies.
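Both the advantage and the pointing penalty of higher frequencies follow from the shorter wavelength. For a dish of fixed size, gain grows with the square of frequency while the beam narrows in proportion. This sketch assumes a 1-m dish, an aperture efficiency of 0.55, the common rule of thumb that half-power beamwidth is about 70 λ/D degrees, and 2.2 GHz and 26 GHz as representative S-band and Ka-band frequencies; all are illustrative choices.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def wavelength_m(freq_hz: float) -> float:
    return SPEED_OF_LIGHT / freq_hz


def dish_gain_dbi(diameter_m: float, freq_hz: float, efficiency: float = 0.55) -> float:
    """Gain of a parabolic dish: efficiency * (pi * D / wavelength)^2, in dBi."""
    return 10 * math.log10(efficiency * (math.pi * diameter_m / wavelength_m(freq_hz)) ** 2)


def beamwidth_deg(diameter_m: float, freq_hz: float) -> float:
    """Half-power beamwidth from the common ~70 * wavelength / D rule of thumb."""
    return 70 * wavelength_m(freq_hz) / diameter_m


for band, f in (("S-band", 2.2e9), ("Ka-band", 26e9)):
    print(f"{band}: gain {dish_gain_dbi(1.0, f):5.1f} dBi, beamwidth {beamwidth_deg(1.0, f):5.2f} deg")
```

The same 1-m dish gains roughly 21 dB at 26 GHz compared with 2.2 GHz, but its beam shrinks from about 9.5 degrees to about 0.8 degrees, which is why pointing precision becomes a design driver.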

Future Trends

As has been said, predictions are difficult, especially about the future! Tempting as it is to focus on the evolution of relevant technologies as the indicator of future trends, history suggests that sociological factors soon dominate technology and economics. Recall that for years after technology replaced the steam locomotive with diesel power, railroads retained firemen even though they no longer had boilers to stoke! Early TT&C facilities had the characteristics of “infrastructure,” and infrastructures are especially adept at self-preservation, aided by the detachment from their customers. We will attempt to place data-receiving and data-handling facilities in the context of the historical evolution of technology and from this perspective identify factors determining trends. Finally, we will hazard some guesses about near-term trends.

Typically a technology evolves from an era of a few specialists proficient in using it, through one where the process becomes increasingly understood, standardized, and automated, so that necessary expertise and skill levels drop. Eventually tools become available that those unskilled in this particular technology can readily use to meet their needs. Entrepreneurs see market potential and derive new, more efficient products and processes by employing the technology. However, progress is frequently intermittent as other factors come into play. Those finding careers, organizations, and profits threatened summon political and industrial support to resist change, and efforts at standardization are soon caught up in competitive battlegrounds. Note that much of the early effort necessarily involved “inventing” the needed data-receiving and data-handling facilities. The entities formed for this purpose specialized in designing, rather than using, solutions. Once created, these entities usually strive to reinvent custom solutions for each application, rather than using or adapting existing alternatives.

Technologies that existed early in the space age determined the technical approaches for meeting satellite communications and data-handling requirements; more importantly, they also determined the structure of the organizations and processes for meeting these requirements. Yet technology seldom conveniently evolves within the boundaries of established management and fiscal structures. Matters that should be simple engineering issues, whose solutions are embedded in silicon, for example, instead become contentious inter-organizational issues. Few comprehend the technical implications as technology expands across organizational borders, and interpersonal relationships, influenced by individual, provincial, and fiscal interests, dominate resolution.

The result of this confluence of technological, sociological, and political realities is that future trends are dominated by the heritage of the particular organization. New players usually adopt the latest economical technology and employ commercially available solutions when they enter the arena; thereafter they become increasingly bound by their own heritage. The pace and direction of technology limit advancement, but they are only two factors determining future directions. Implicit in this hypothesis is the view that few of the concepts and facilities commonly used today for receiving and handling space data from satellites are state of the art. Prudent conservatism is certainly warranted. Challenges of adopting state-of-the-art technologies are that performance risks are often increased due to immature development, costs are usually relatively high in the early stages, and amortization of changeover costs is difficult to justify. However, if a truly competitive marketplace develops using a given technology, this usually stimulates the private sector to evaluate objectively the economics of advances in that technology.

Although the expanding technologies of communications and computing are often said to be converging, the term needs careful interpretation. Many of the recent advances in communications result from computing advances: the ability to digitize, package, route, and unpack the material being moved effectively and economically. Transmitter and receiver advances, especially at higher RF frequencies and at optical frequencies, contribute directly to space operations. (Other communications technology advances such as fiber are very significant, of course, but are not directly applicable to space-to-ground communications.) On the computing side, approaches that combine the speed of a hardware approach with the flexibility of a software solution, as now offered by programmable gate arrays, will become dominant. Chip technology offers tremendous increases in processing speed over the old mainframe approach, due in part to its small dimensions.

Other prognostications: space programs will rely increasingly on navigation satellites (GPS, Glonass, Galileosat) to determine orbits onboard. A TCP/IP-like transmission protocol adapted to the space channel environment, such as SCPS, will probably become popular. Use of embedded chips at the points of application will greatly streamline data processing, allowing the elimination of some previously required steps that add no value, such as transport to centralized facilities. Techniques such as turbo codes that promise to perform near the Shannon limit, even under low signal-to-noise ratio conditions, will be pursued. Constraints on spectrum and bandwidth will focus attention on more efficient modulation techniques, such as FQPSK, and will continue to push space programs toward the Ku- and Ka-band regions. Optical transmission will become a serious contender for the space-to-ground channel: it circumvents RF spectrum restrictions, avoids RF interference, and permits increased security. Technology is easing the power limitations of the diodes used as optical transmitters, and spatial diversity (multiple receiving sites) will avoid cloud cover. Adoption of customized commercial hardware and software solutions in preference to unique solutions will greatly increase, especially among new users. Even noncommercial users will gravitate in this direction. Numerous, mostly small, vendors now offer space operations products commercially and competitively.