In a linear regression model, sample selection bias occurs when data on the dependent variable are missing nonrandomly, conditional on the independent variables. For example, if a researcher uses ordinary least squares (OLS) to estimate a regression model in which large values of the dependent variable are underrepresented in a sample, estimates of slope coefficients typically will be biased.

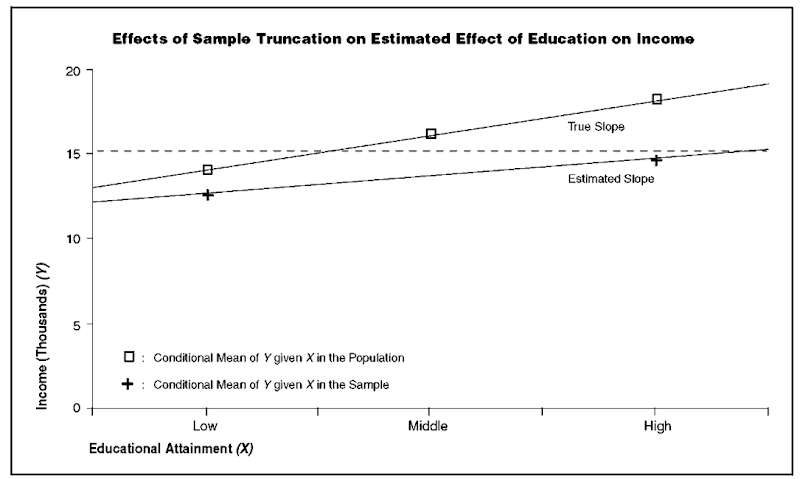

Hausman and Wise (1977) studied the problem of estimating the effect of education on income in a sample of persons with incomes below $15,000. This is known as a truncated sample and is an example of explicit selection on the dependent variable. This is shown in Figure 1, where individuals are sampled at three education levels: low (L), middle (M), and high (H). In the figure, sample truncation leads to an estimate of the effect of schooling that is biased downward from the true regression line as a result of the $15,000 ceiling on the dependent variable. In a variety of special conditions (Winship and Mare 1992), selection biases coefficients downward. In general, however, selection may bias estimated effects in either direction.

A sample that is restricted on the dependent variable is effectively selected on the error of the regression equation; at any value of X, observations with sufficiently large positive errors are eliminated from the sample. As is shown in Figure 1, as the independent variable increases, the expected value of the error becomes increasingly negative, making these two elements negatively correlated. Because this contradicts the standard assumption of OLS that the error and the independent variables are not correlated, OLS estimates become biased.
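The mechanism described above can be illustrated with a small simulation. This is a minimal sketch under assumed values, not the Hausman and Wise analysis: the income equation (intercept 2, slope 1, error standard deviation 3, all in thousands of dollars), the three schooling levels, and the function names are hypothetical choices made for illustration.

```python
import random

def ols_slope(xs, ys):
    """Slope from a simple OLS regression of ys on xs."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

def simulate_truncation(n=20000, seed=0, ceiling=15.0):
    """Compare OLS slopes in a full sample and in one truncated from above on y."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.choice([8, 12, 16])          # low, middle, high schooling (assumed values)
        e = rng.gauss(0.0, 3.0)              # regression error
        data.append((x, 2.0 + 1.0 * x + e, e))
    # Truncation: cases at or above the income ceiling never enter the sample.
    kept = [row for row in data if row[1] < ceiling]
    full_slope = ols_slope([r[0] for r in data], [r[1] for r in data])
    trunc_slope = ols_slope([r[0] for r in kept], [r[1] for r in kept])
    # Mean error among retained cases at each schooling level.
    mean_err = {}
    for level in (8, 12, 16):
        errs = [r[2] for r in kept if r[0] == level]
        mean_err[level] = sum(errs) / len(errs)
    return full_slope, trunc_slope, mean_err

full_slope, trunc_slope, mean_err = simulate_truncation()
print("full-sample slope:", round(full_slope, 3))
print("truncated-sample slope:", round(trunc_slope, 3))
print("mean retained error by schooling:", mean_err)
```

In this setup the mean retained error becomes more negative as schooling rises, which is the negative correlation between the error and the independent variable that pulls the truncated-sample slope below the full-sample slope.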

A different type of explicit selection occurs when the sample includes persons with incomes of $15,000 or more but all that is known about those persons is their educational attainment and that their incomes are $15,000 or more. When the dependent variable is outside a known bound but the exact value of the variable is unknown, the sample is censored. If these persons’ incomes are coded as $15,000, OLS estimates are biased and inconsistent for the same reasons that obtain in the truncated sample.
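The effect of coding censored incomes at the bound can be sketched the same way. The population values below (intercept 2, slope 1, error standard deviation 3, in thousands of dollars) and the function names are assumptions made for illustration only.

```python
import random

def ols_slope(xs, ys):
    """Slope from a simple OLS regression of ys on xs."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

def simulate_censoring(n=20000, seed=0, ceiling=15.0):
    """Compare OLS slopes using true incomes and incomes top-coded at the ceiling."""
    rng = random.Random(seed)
    xs, ys_true, ys_coded = [], [], []
    for _ in range(n):
        x = rng.choice([8, 12, 16])                  # assumed schooling levels
        y = 2.0 + 1.0 * x + rng.gauss(0.0, 3.0)      # assumed income equation
        xs.append(x)
        ys_true.append(y)
        ys_coded.append(min(y, ceiling))             # censored cases recorded at the bound
    return ols_slope(xs, ys_true), ols_slope(xs, ys_coded)

true_slope, coded_slope = simulate_censoring()
print("slope with true incomes:", round(true_slope, 3))
print("slope with top-coded incomes:", round(coded_slope, 3))
```

Every case is retained here, unlike the truncated sample, yet the top-coded regression still understates the slope because the recorded incomes of highly educated persons are compressed against the bound.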

A third type of selection that leads to bias occurs when censoring or truncation is a stochastic function of the dependent variable. This is termed implicit selection. In the income example, individuals with high incomes may be less likely to provide information on their incomes than are individuals with low incomes. As is shown below, OLS estimates also are biased when there is implicit selection.
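Implicit selection can be sketched with a reporting probability that declines smoothly with income instead of a hard cutoff. The logistic form of that probability, its parameters, and the income equation are hypothetical choices for illustration; the source does not specify them.

```python
import math
import random

def ols_slope(xs, ys):
    """Slope from a simple OLS regression of ys on xs."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

def simulate_nonresponse(n=30000, seed=0):
    """OLS slope among respondents when reporting is a stochastic function of income."""
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        x = rng.choice([8, 12, 16])                  # assumed schooling levels
        y = 2.0 + 1.0 * x + rng.gauss(0.0, 3.0)      # assumed income equation, true slope 1
        # Assumed reporting rule: probability of reporting falls as income rises.
        p_report = 1.0 / (1.0 + math.exp((y - 15.0) / 2.0))
        if rng.random() < p_report:
            xs.append(x)
            ys.append(y)
    return ols_slope(xs, ys)

respondent_slope = simulate_nonresponse()
print("slope among respondents:", round(respondent_slope, 3))
```

No income is excluded with certainty in this sketch, but high incomes are underrepresented at every schooling level, and the respondent-only slope falls below the true value of 1.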

Yet another type of selection occurs when there is selection on the measured independent variable(s). For example, the sample may be selected on educational attainment alone. If persons with high levels of schooling are omitted from the sample, an OLS estimate of the effect of schooling on income for persons with lower levels of education is unbiased if schooling has a constant linear effect throughout its range. Because the conditional expectation of the dependent variable (or, equivalently, the error term) at each level of the independent variable is not affected by a sample restriction on the independent variable, when a model is specified properly OLS estimates are unbiased and consistent (DuMouchel and Duncan 1983).
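A contrasting sketch shows that restricting the sample on the independent variable alone leaves the slope intact when the effect is linear. The population values and function names are assumptions for illustration.

```python
import random

def ols_slope(xs, ys):
    """Slope from a simple OLS regression of ys on xs."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

def simulate_selection_on_x(n=30000, seed=0):
    """OLS slope when the highest schooling level is excluded from the sample."""
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        x = rng.choice([8, 12, 16])                  # assumed schooling levels
        y = 2.0 + 1.0 * x + rng.gauss(0.0, 3.0)      # assumed linear income equation
        if x < 16:                                   # selection depends on schooling only
            xs.append(x)
            ys.append(y)
    return ols_slope(xs, ys)

restricted_slope = simulate_selection_on_x()
print("slope with high-schooling cases omitted:", round(restricted_slope, 3))
```

Because exclusion depends only on schooling, the error distribution at each retained schooling level is unchanged, and the estimate stays close to the assumed true slope of 1.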

Figure 1

EXAMPLES

Sample selection can occur because of the way data have been collected or, as the examples below illustrate, may be a fundamental aspect of particular social processes. In econometrics, where most of the basic research on selection bias has been done, many of the applications have been to labor economics. Many studies by sociologists that deal with selection problems have been done in the cognate area of social stratification. Problems of selection bias, however, pervade sociology, and attempts to grapple with them appear in the sociology of education, family sociology, criminology, the sociology of law, social networks, and other areas.

Trends in Employment of Out-of-School Youths. Mare and Winship (1984) investigate employment trends from the 1960s to the 1980s for young black and white men who are out of school. Many factors affect these trends, but a key problem in interpreting the trends is that they are influenced by the selectivity of the out-of-school population. Over time, that selectivity changes because the proportion of the population that is out of school decreases, especially among blacks. Because persons who stay in school longer have better average employment prospects than do persons who drop out, the employment rates of nonstudents are lower than they would be if employment and school enrollment were independent. Observed employment patterns are biased because the probabilities of employment and leaving school are dependent. Other things being equal, as enrollment increases, employment rates for out-of-school young persons decrease as a result of the compositional change in this pool of individuals. To understand the employment trends of out-of-school persons, therefore, one must analyze jointly the trends in employment and school enrollment. The increasing propensity of young blacks to remain in school explains some of the growing gap in the employment rates between blacks and whites.

Selection Bias and the Disposition of Criminal Cases. A central focus in the analysis of crime and punishment involves the determinants of differences in the treatment of persons in contact with the criminal justice system, for example, the differential severity of punishment of blacks and whites (Peterson and Hagan 1984). There is a high degree of selectivity in regard to persons who are convicted of crimes. Among those who commit crimes, only a portion are arrested; of those arrested, only a portion are prosecuted; of those prosecuted, only a portion are convicted; and among those convicted, only a portion are sent to prison. Common unobserved factors may affect the continuation from one stage of this process to the next. Indeed, the stages may be jointly determined inasmuch as legal officials may be mindful of the likely outcomes later in the process when they dispose of cases. The chances that a person will be punished if arrested, for example, may affect the eagerness of police to arrest suspects. Analyses of the severity of sentencing that focus on persons already convicted of crimes may be subject to selection bias and should take account of the process through which persons are convicted (Hagan and Parker 1985; Peterson and Hagan 1984; Zatz and Hagan 1985).

Scholastic Aptitude Tests and Success in College. Manski and Wise (1983) investigate the determinants of graduation from college, including the capacity of the Scholastic Aptitude Test (SAT) to predict individuals’ probabilities of graduation. Studies based on samples of students in colleges find that the SAT has little predictive power, yet those studies may be biased because of the selective stages between taking the SAT and attending college. Some students who take the SAT do not apply to college, some apply but are not admitted, some are admitted but do not attend, and those who attend are sorted among the colleges to which they have been admitted. Each stage of selection is nonrandom and is affected by characteristics of students and schools that are unknown to the analyst. When one jointly considers the stages of selection in the college attendance decision, along with the probability that a student will graduate from college, one finds that the SAT is a strong predictor of college graduation.

Women’s Socioeconomic Achievement. Analyses of the earnings and other socioeconomic achievements of women are potentially affected by nonrandom selection of women into the labor market. The rewards that women expect from working affect their propensity to enter the labor force. Outcomes such as earnings and occupational status therefore are jointly determined with labor force participation, and analyses that ignore the process of labor force participation are potentially subject to selection bias. Many studies in economics (Gronau 1974; Heckman 1974) and sociology (Fligstein and Wolf 1978; Hagan 1990; England et al. 1988) use models that simultaneously represent women’s labor force participation and the market rewards that women receive.

Analysis of Occupational Mobility from Nineteenth-Century Censuses. Nineteenth-century decennial census data for cities provide a means of comparing nineteenth- and twentieth-century regimes of occupational mobility in the United States. Although one can analyze mobility by linking the records of successive censuses, such linkage is possible only for persons who remain in the same city and keep the same name over the decade. Persons who die, emigrate, or change their names are excluded. Because mortality and migration covary with socioeconomic success, the process of mobility and the way in which observations are selected for the analysis are jointly determined. Analyses that model mobility and sample selection jointly offer the possibility of avoiding selection bias (Hardy 1989).

MODELS OF SELECTION

Berk (1983) provides an introduction to selection models; Winship and Mare (1992) provide a review of the literature before 1992. We start by discussing the censored regression, or tobit, model. We forgo discussion of the very closely related truncated regression model (Hausman and Wise 1977).

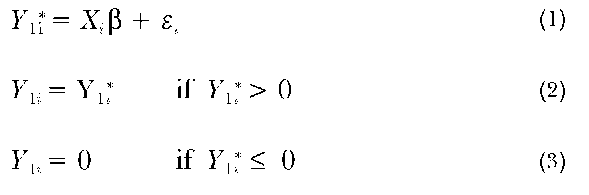

Tobit Model. The censored regression, or tobit, model is appropriate when the dependent variable is censored at an upper or lower bound as an artifact of how the data are collected (Tobin 1958; Maddala 1983). For censoring at a lower bound, the model is

where, for the $i$th observation, $Y^*_{1i}$ is an unobserved continuous latent variable, $Y_{1i}$ is the observed variable, $X_i$ is a vector of values on the independent variables, $\varepsilon_i$ is the error, and $\beta$ is a vector of coefficients. We assume that $\varepsilon_i$ is not correlated with $X_i$ and is independently and identically distributed. The model can be generalized by replacing the threshold zero in equations (2) and (3) with a known nonzero constant. The censoring point also may vary across observations, leading to a model that is formally equivalent to models for survival analysis (Kalbfleisch and Prentice 1980).
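For reference, the censored regression model described in this paragraph is conventionally written as follows. This is a sketch that assumes the standard tobit form with censoring from below at zero; the equation numbers are assigned to match the references in the text.

$$
\begin{aligned}
Y^*_{1i} &= X_i\beta + \varepsilon_i && \text{(1)}\\
Y_{1i} &= Y^*_{1i} \quad \text{if } Y^*_{1i} > 0 && \text{(2)}\\
Y_{1i} &= 0 \quad \text{if } Y^*_{1i} \le 0 && \text{(3)}
\end{aligned}
$$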

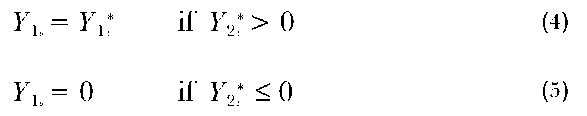

Standard Sample Selection Model. A generalization of the tobit model involves specifying that a second variable $Y_{2i}$ affects whether $Y_{1i}$ is observed. That is, retain the basic model in equation (1) but replace equations (2) and (3) with

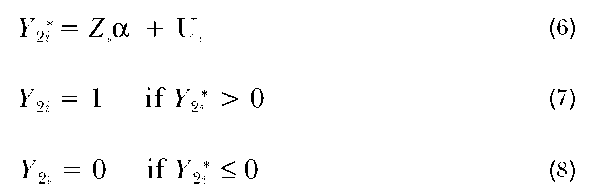

Variants of this model depend on how $Y_{2i}$ is specified. Commonly, $Y^*_{2i}$ is determined by a binary regression model:

where $Y^*_{2i}$ is a latent continuous variable. The classic example is a model for the wages and employment of women where $Y_{1i}$ is the observed wage, $Y_{2i}$ is a dummy variable indicating whether a woman works, and $Y^*_{2i}$ indexes a woman’s propensity to work (Gronau 1974). In a variant of this model, $Y_{2i}$ is hours of work and equations (6) through (8) are a tobit model (Heckman 1974). In both variants, $Y_{1i}$ is observed only for women with positive hours of work. One can modify the model by assuming, for example, that $Y_{1i}$ is dichotomous. If $\varepsilon_i$ and $u_i$ follow a bivariate normal distribution, this leads to a bivariate probit selection model.
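Written out in the notation of this section, the selection model with a binary selection equation takes the following form. This is a sketch that assumes the standard specification, with $Z_i$ the vector of variables in the selection equation, $\alpha$ its coefficient vector, and $u_i$ its error; the numbering follows the equation references in the text.

$$
\begin{aligned}
Y_{1i} &= Y^*_{1i} \quad \text{if } Y_{2i} > 0 && \text{(4)}\\
Y_{1i} &= 0 \quad \text{if } Y_{2i} \le 0 && \text{(5)}\\
Y^*_{2i} &= Z_i\alpha + u_i && \text{(6)}\\
Y_{2i} &= 1 \quad \text{if } Y^*_{2i} > 0 && \text{(7)}\\
Y_{2i} &= 0 \quad \text{if } Y^*_{2i} \le 0 && \text{(8)}
\end{aligned}
$$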

The OLS regression of $Y_{1i}$ on $X_i$ is biased and inconsistent if $\varepsilon_i$ is correlated with $u_i - Z_i\alpha$, which occurs if $\varepsilon_i$ is correlated with $u_i$ or $Z_i$ or both. If the variables in $Z_i$ are included in $X_i$, $\varepsilon_i$ and $Z_i$ are not correlated by assumption. If, however, $Z_i$ contains additional variables, $\varepsilon_i$ and $Z_i$ may be correlated. When $\sigma_{\varepsilon u} = 0$ (that is, when the errors $\varepsilon_i$ and $u_i$ are uncorrelated), selection depends only on the observed variables in $Z_i$, not those in $X_i$. In this case, selection can be dealt with either by conditioning on the additional $Z$’s or by using propensity score methods (Rosenbaum and Rubin 1983).

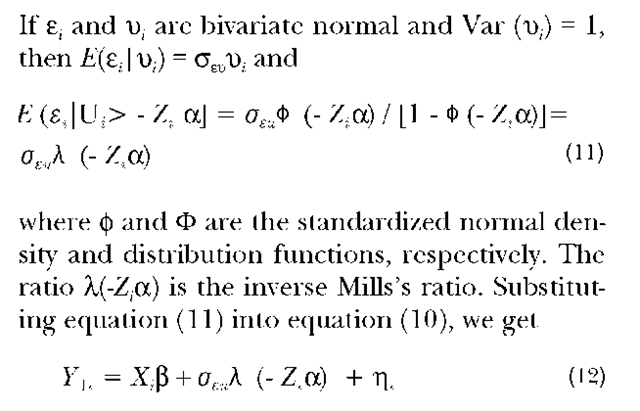

Equation (9) shows how selectivity bias may be interpreted as an omitted variable bias (Heckman 1979). The term $E[\varepsilon_i \mid Y^*_{2i} > 0]$ can be thought of as an omitted variable that is correlated with $X_i$ and affects $Y_{1i}$. Its omission leads to biased and inconsistent OLS estimates of $\beta$.
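The role of the error correlation can be illustrated by simulating the selection model twice, once with uncorrelated errors and once with correlated errors. All numerical values here (outcome intercept 1 and slope 2, selection index 0.5 plus x, unit error variances) and the function names are assumptions chosen for illustration.

```python
import random

def ols_slope(xs, ys):
    """Slope from a simple OLS regression of ys on xs."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

def selected_sample_slope(rho, n=20000, seed=0):
    """OLS slope of y on x among selected cases when corr(eps, u) equals rho."""
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        x = rng.gauss(0.0, 1.0)
        u = rng.gauss(0.0, 1.0)                      # selection-equation error
        # Outcome error with unit variance and correlation rho with u.
        eps = rho * u + (1.0 - rho ** 2) ** 0.5 * rng.gauss(0.0, 1.0)
        if 0.5 + x + u > 0:                          # latent selection variable above zero
            xs.append(x)
            ys.append(1.0 + 2.0 * x + eps)           # assumed outcome equation, true slope 2
    return ols_slope(xs, ys)

slope_uncorrelated = selected_sample_slope(rho=0.0)
slope_correlated = selected_sample_slope(rho=0.7)
print("selected-sample slope, uncorrelated errors:", round(slope_uncorrelated, 3))
print("selected-sample slope, correlated errors:", round(slope_correlated, 3))
```

With uncorrelated errors the selected-sample slope stays near 2 even though selection depends on x. With correlated errors the expected outcome error among selected cases varies with x, acting as the omitted variable described above.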

Nonrandom Treatment Assignment. A model intimately related to the standard selection model that is not formally presented here is used when individuals are assigned nonrandomly to some treatment in an experiment. In this case, there are essentially two selection problems. For individuals not receiving the treatment, information on what their outcomes would have been if they had received treatment is “missing.” Similarly, for individuals receiving the treatment, we do not know what their outcomes would have been if they had not received the treatment. Heckman (1978) explicitly analyzes the relationship between the nonrandom assignment problem and selection. Winship and Morgan (1999) review the vast literature that has appeared on this question in the last two decades.

ESTIMATORS

A large number of estimators have been proposed for selection models. Until recently, all these estimators made strong assumptions about the distribution of errors. Two general classes of methods, maximum likelihood and nonlinear least squares, typically assume bivariate normality of $\varepsilon_i$ and $u_i$. The most popular method is that of Heckman (1979), known as the lambda method, which assumes only that $u_i$ in equation (6) is normally distributed and $E[\varepsilon_i \mid u_i]$ is linear.

For a number of years, there has been concern about the sensitivity of the Heckman estimator to these normality and linearity assumptions. Because maximum likelihood and nonlinear least squares make even stronger assumptions, they are typically more efficient but even less robust to violations of distributional assumptions. The main concern of the literature since the early 1980s has been the search for alternatives to the Heckman estimator that do not depend on normality and linearity assumptions.

Heckman’s Estimator. The Heckman estimator involves (1) estimating the selection model (equations [6] through [8]), (2) calculating the expected error, $\hat{u}_i = E[u_i \mid u_i > -Z_i\alpha]$, for each observation using the estimated $\alpha$, and (3) using the estimated error as a regressor in equation (1). We can rewrite equation (9) as

where $\eta_i$ is uncorrelated with both $X_i$ and the selection term $\lambda(-Z_i\alpha)$. Equation (12) can be estimated by OLS but is preferably estimated by weighted least squares since its error term is heteroskedastic (Heckman 1979).
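The equations referred to in this passage can be sketched as follows. This is the standard derivation under the added assumptions that the two errors are bivariate normal and that $u_i$ has unit variance, with $\phi$ and $\Phi$ denoting the standard normal density and distribution functions; the numbering is assigned to match the references in the text. Since $Y^*_{2i} > 0$ exactly when $u_i > -Z_i\alpha$, the conditional expectation of the error in the selected sample can be written in terms of $u_i$:

$$
\begin{aligned}
E[Y_{1i} \mid X_i, Y^*_{2i} > 0] &= X_i\beta + E[\varepsilon_i \mid u_i > -Z_i\alpha] && \text{(9)}\\
Y_{1i} &= X_i\beta + E[\varepsilon_i \mid u_i > -Z_i\alpha] + \eta_i && \text{(10)}\\
E[\varepsilon_i \mid u_i > -Z_i\alpha] &= \sigma_{\varepsilon u}\,\frac{\phi(-Z_i\alpha)}{1 - \Phi(-Z_i\alpha)} = \sigma_{\varepsilon u}\,\lambda(-Z_i\alpha) && \text{(11)}\\
Y_{1i} &= X_i\beta + \sigma_{\varepsilon u}\,\lambda(-Z_i\alpha) + \eta_i && \text{(12)}
\end{aligned}
$$

Under these assumptions $\lambda(-Z_i\alpha)$ is the inverse Mills ratio, and its coefficient in equation (12) is the covariance between the two errors.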

The precision of the estimates in equation (12) is sensitive to the variance of $\lambda$ and collinearity between $X$ and $\lambda$. The variance of $\lambda$ is determined by how effectively the probit equation at the first stage predicts who is selected into the sample. The better the equation predicts, the greater the variance of $\lambda$ is and the more precise the estimates will be. Collinearity will be determined in part by the overlap in variables between $X$ and $Z$. If $X$ and $Z$ are identical, the model is identified only because $\lambda$ is nonlinear. Since it is seldom possible to justify the form of $\lambda$ on substantive grounds, successful use of the method usually requires that at least one variable in $Z$ not be included in $X$. Even in this case, $X$ and $\lambda(-Z_i\alpha)$ may be highly collinear, leading to imprecise estimates.
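The three steps of the two-step procedure can be sketched in plain Python. This is an illustrative sketch under stated assumptions, not a production estimator: the data are simulated with bivariate normal errors, the selection equation includes one hypothetical variable `w` that is excluded from the outcome equation, the probit is fitted by Fisher scoring, the second stage uses OLS instead of weighted least squares, and no corrected standard errors are computed. All function names and parameter values are hypothetical.

```python
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()

def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x

def ols(rows, y):
    """OLS coefficients; each row of regressors includes a leading constant."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)

def probit(rows, d, iterations=15):
    """Probit coefficients by Fisher scoring; d holds the 0/1 selection indicator."""
    k = len(rows[0])
    a = [0.0] * k
    for _ in range(iterations):
        score = [0.0] * k
        info = [[0.0] * k for _ in range(k)]
        for r, di in zip(rows, d):
            idx = sum(ri * ai for ri, ai in zip(r, a))
            p = min(max(STD_NORMAL.cdf(idx), 1e-10), 1.0 - 1e-10)
            dens = STD_NORMAL.pdf(idx)
            w = dens / (p * (1.0 - p))
            for i in range(k):
                score[i] += r[i] * w * (di - p)
                for j in range(k):
                    info[i][j] += r[i] * r[j] * w * dens
        a = [ai + si for ai, si in zip(a, solve(info, score))]
    return a

def heckman_two_step(n=6000, seed=0, rho=0.8):
    """Return (naive OLS, two-step) coefficient lists; index 1 holds the slope on x."""
    rng = random.Random(seed)
    z_rows, selected, x_all, y_all = [], [], [], []
    for _ in range(n):
        x, w = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)   # w enters selection only
        u = rng.gauss(0.0, 1.0)
        eps = rho * u + (1.0 - rho ** 2) ** 0.5 * rng.gauss(0.0, 1.0)
        z_rows.append([1.0, x, w])
        selected.append(1 if x + w + u > 0 else 0)
        x_all.append(x)
        y_all.append(1.0 + 2.0 * x + eps)                 # assumed true slope of 2
    alpha = probit(z_rows, selected)                      # step 1: selection model
    naive_rows, heck_rows, y_sel = [], [], []
    for z, s, x, y in zip(z_rows, selected, x_all, y_all):
        if s:
            idx = sum(zi * ai for zi, ai in zip(z, alpha))
            # Step 2: lambda(-Z alpha) = phi(Z alpha) / Phi(Z alpha), the inverse Mills ratio.
            lam = STD_NORMAL.pdf(idx) / max(STD_NORMAL.cdf(idx), 1e-12)
            naive_rows.append([1.0, x])
            heck_rows.append([1.0, x, lam])
            y_sel.append(y)
    # Step 3: add lambda as a regressor in the outcome equation for selected cases.
    return ols(naive_rows, y_sel), ols(heck_rows, y_sel)

naive, corrected = heckman_two_step()
print("naive slope:", round(naive[1], 3))
print("two-step slope:", round(corrected[1], 3), "lambda coefficient:", round(corrected[2], 3))
```

Including `w` in the selection equation but not in the outcome equation reflects the point made above that at least one variable in $Z$ should be excluded from $X$; without it the sketch would rely on the nonlinearity of $\lambda$ alone.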

Robustness of Heckman’s Estimator. Because of the sensitivity of Heckman’s estimator to model specification, researchers have focused on the robustness of the estimator to violations of its several assumptions. Estimation of equations (6) through (8) as a probit model assumes that the errors $u_i$ are homoskedastic. When this assumption is violated, the Heckman procedure yields inconsistent estimates, though procedures are available to correct for heteroskedasticity (Hurd 1979). The assumed bivariate normality of $u_i$ and $\varepsilon_i$ in the selection model is needed in two places. First, normality of $u_i$ is needed for consistent estimation of $\alpha$ in the probit model. Second, the normality assumption implies a particular nonlinear relationship for the effect of $Z_i\alpha$ on $Y_{2i}$ through $\lambda$. If the expectation of $\varepsilon_i$ conditional on $u_i$ is not linear and/or $u_i$ is not normal, $\lambda$ misspecifies the relationship between $Z_i\alpha$ and $Y_{2i}$ and the model may yield biased results.

Several studies have analytically investigated the bias in the single-equation (tobit) model when the error is not normally distributed. In a model with only an intercept—that is, a model for the mean of a censored distribution—when errors are not normally distributed, the normality assumption leads to substantial bias. This result holds even when the true distribution is close to normal (for example, the logistic) (Goldberger 1983). When the normality assumption is wrong, moreover, maximum likelihood estimates may be worse than estimates that simply use the observed sample mean. For samples that are 75 percent complete, bias from the normality assumption is minimal; in samples that are 50 percent complete, bias is substantial in the truncated case but not in the censored case; and in samples that are less than 50 percent complete, bias is substantial in almost all cases (Arabmazar and Schmidt 1982).

The fact that estimation of the mean is sensitive to distributional misspecification suggests that the Heckman estimator may not be robust and raises the question of how often such problems arise in practice. In addition, even when normality holds, the Heckman estimator may not improve the mean square error of OLS estimates of slope coefficients in small samples (50 or fewer) (Stolzenberg and Relles 1990). This appears to parallel the standard result that when the effect of a variable is measured imprecisely, inclusion of the variable may enlarge the mean square error of the other parameters in the model (Leamer 1983).

No empirical work that the authors know of directly examines the sensitivity of Heckman’s method for a standard selection model. Work by LaLonde (1986) using the nonrandom assignment treatment model suggests that in specific circumstances the Heckman method can inadequately adjust for unobserved differences between the treatment and control groups.

Extensions of the Heckman Estimator. There are two main issues in estimating equation (12). The first is correctly estimating the probability for each individual that he or she will be selected. As it has been formulated above, this means, first, correctly specifying the linear function $Z\alpha$ and, second, specifying the correct, typically nonlinear, relationship between the probability of selection and $Z\alpha$. The second issue is the problem of what nonlinear function should be chosen for $\lambda$. When bivariate normality of errors holds, $\lambda$ is the inverse Mills ratio. When this assumption does not hold, inconsistent estimates may result. Moreover, since $X_i$ and $Z_i$ are often highly collinear, estimates of $\beta$ in equation (12) may be quite sensitive to misspecification of $\lambda$.

The first problem is handled rather easily. In the situation where one has a very large sample and there are multiple individuals with the same $Z$, the simplest approach is to estimate the probability of selection nonparametrically by directly estimating the probability of being selected for individuals with each vector of $Z$’s from the observed frequencies. With smaller samples, kernel estimation methods are available. These methods also consist of estimating probabilities directly by grouping individuals with “similar” $Z$’s and directly calculating the probability of selection from weighted frequencies. Variants of this approach involve different definitions of similarity and/or weight (Hardle 1990). In both methods, the problem of estimating how the probability of selection depends on $Z$ is bypassed.
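Both approaches can be sketched for a single selection variable. The cell-frequency function follows the description above directly; the kernel function uses a Gaussian-weighted average of the selection indicator (the Nadaraya-Watson form), which is one common variant and is an assumption here, as are the bandwidth, the toy data, and the function names.

```python
import math
from collections import defaultdict

def cell_frequencies(z_values, selected):
    """Share of cases selected within each distinct value of Z."""
    counts = defaultdict(lambda: [0, 0])     # value of Z -> [number selected, number of cases]
    for z, s in zip(z_values, selected):
        counts[z][0] += s
        counts[z][1] += 1
    return {z: k / n for z, (k, n) in counts.items()}

def kernel_probability(z0, z_values, selected, bandwidth=0.5):
    """Kernel-weighted share selected among cases with Z near z0 (Gaussian weights)."""
    weights = [math.exp(-0.5 * ((z - z0) / bandwidth) ** 2) for z in z_values]
    return sum(w * s for w, s in zip(weights, selected)) / sum(weights)

# Toy data: Z takes three values; selected is the 0/1 indicator of being observed.
z_values = [1, 1, 1, 2, 2, 2, 2, 3]
selected = [0, 0, 1, 1, 1, 0, 1, 1]
print(cell_frequencies(z_values, selected))
print(kernel_probability(2, z_values, selected, bandwidth=0.5))
```

With a very small bandwidth the kernel estimate at a given value of Z reduces to the cell frequency, while larger bandwidths borrow information from neighboring values.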

Semiparametric methods are also available. These methods are useful if their underlying assumptions are correct, since they generally produce more efficient estimates than does a fully nonparametric approach. These methods include Manski’s maximum score method (1975), nonparametric maximum likelihood estimation (Cosslett 1983), weighted average derivatives (Stoker 1986; Powell et al. 1989), spline methods, and series approximations (Hardle 1990).

The problem in the second stage is to deal with the fact that one generally has no a priori knowledge of the correct functional form for $\lambda$. Since $\lambda$ is simply a monotonic function of the probability of being selected, this is equivalent to asking what nonlinear transformation of the selection probability should be entered into equation (12). A variety of approaches are available here. One approach is to approximate $\lambda$ through a series expansion (Newey 1990; Lee 1982) or by means of step functions (Cosslett 1991). An alternative is to control for $\lambda$ by using differencing or fixed effect methods (Heckman et al. 1998). The essential idea is to control for the probability of selection by implicitly including a series of dummy variables in equation (1), with each dummy variable being used to indicate a set of individuals with the same probability of selection or, equivalently, the same $\lambda$. Generally, this will produce significantly larger standard errors of the slope estimates. This is appropriate, however, since selection increases uncertainty. With small samples, these methods can be generalized through kernel estimation (Powell 1987; Ahn and Powell 1990). Newey et al. (1990) apply a variety of methods to an empirical problem.
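The dummy-variable idea can be sketched with simulated data in which the selection index takes only three values, so that individuals fall into three groups with equal selection probability. Subtracting group means from the outcome and the regressor is equivalent to including one dummy per group. The design (three index values, a regressor correlated with the index, error correlation 0.8, true slope 2) and the function names are assumptions for illustration.

```python
import random

def ols_slope(xs, ys):
    """Slope from a simple OLS regression of ys on xs."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

def within_group_slope(groups, xs, ys):
    """Slope after removing group means, equivalent to one dummy variable per group."""
    sums = {}
    for g, x, y in zip(groups, xs, ys):
        s = sums.setdefault(g, [0.0, 0.0, 0])
        s[0] += x
        s[1] += y
        s[2] += 1
    dx = [x - sums[g][0] / sums[g][2] for g, x in zip(groups, xs)]
    dy = [y - sums[g][1] / sums[g][2] for g, y in zip(groups, ys)]
    return sum(a * b for a, b in zip(dx, dy)) / sum(a * a for a in dx)

def simulate_fixed_effects(n=30000, seed=0, rho=0.8):
    """Return (pooled OLS slope, within-group slope) in a selected sample."""
    rng = random.Random(seed)
    groups, xs, ys = [], [], []
    for _ in range(n):
        index = rng.choice([-1.0, 0.0, 1.0])          # selection index: three selection probabilities
        x = 0.8 * index + rng.gauss(0.0, 1.0)         # regressor correlated with the index
        u = rng.gauss(0.0, 1.0)
        eps = rho * u + (1.0 - rho ** 2) ** 0.5 * rng.gauss(0.0, 1.0)
        if index + u > 0:                             # observed only if selected
            groups.append(index)
            xs.append(x)
            ys.append(1.0 + 2.0 * x + eps)
    return ols_slope(xs, ys), within_group_slope(groups, xs, ys)

pooled_slope, within_slope = simulate_fixed_effects()
print("pooled slope:", round(pooled_slope, 3))
print("within-group slope:", round(within_slope, 3))
```

No functional form for the selection term is imposed here: the expected error is constant within each group, so it is absorbed by the group means whatever its shape.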

CONCLUSION

Selection problems bedevil much social science research. First and foremost, it is important for investigators to recognize that there is a selection problem and that it is likely to affect their estimates. Unfortunately, there is no panacea for selection bias. Various estimators have been proposed, and it is important for researchers to investigate the range of estimates produced by different methods. In most cases this range will be considerably broader than the confidence intervals for the OLS estimates. Selection bias introduces greater uncertainty into estimates. New methods for correcting for selection bias have been proposed that may provide a more powerful means for adjusting for selection. This needs to be determined by future research.