Abstract

This paper presents a new model for the description and recognition of distorted shapes, where the image is represented by a pixel density distribution based on the Blurred Shape Model combined with a non-linear image deformation model. This leads to an adaptive structure able to capture elastic deformations in shapes. This method has been evaluated using thee different datasets where deformations are present, showing the robustness and good performance of the new model. Moreover, we show that incorporating deformation and flexibility, the new model outperforms the BSM approach when classifying shapes with high variability of appearance.

Keywords: Shape Recognition, Deformation Model, Statistical Representation.

Introduction

Object recognition is one of the classic problems in Computer Vision. Different visual cues can be used to describe and identify objects. Color, texture or shape are some of them, being the last one probably the most widely considered. However, shape descriptors also have to face important challenges, for instance, elastic deformations. Therefore, they should be robust enough in order to guarantee intra-class compactness and inter-class separability in the presence of deformation.

In our case, we are interested in shape descriptors that could be applied to handwriting recognition. This is one of the applications where a large variability poses a big challenge to shape descriptors. Several descriptors have been applied to this field [11]. Two well-known examples are Shape context [1] or SIFT descriptor [8]. In the particular context of hand-drawn symbol recognition, the Blurred Shape Model (BSM) [4] has been introduced as a robust descriptor to classify deformed symbols. It is based on computing the spatial distribution of shape pixels in a set of pre-defined image sub-regions taking into account the influence of neighboring regions. The use of neighborhood information permits to handle a certain degree of deformation. However, due to the rigidity of the model, it has an open problem when large deformations may cause high differences in the spatial information encoded by the BSM. For this reason, a deformable model able to capture the deformations of the shape arises as an appealing alternative.

Several deformable models can be found in the literature [5] with different characteristics to manage deformations. Some models [2,3] are based on a tradeoff between forces, which leads to an energy-minimization problem. It consists in a compromise between adjusting the model to the given image, and restoring the model to the original position. Another group of models approaches to deformations as a non-linear process. An example is the Image Distortion Model (IDM) by Keysers et al. [6] which is based on a local pixel matching. This is the model that we have selected to be combined with the BSM because their integration into a unified framework seems a straightforward process. BSM computes a local description based on the grid representation. Therefore, the local pixel matching of the IDM can be adapted to work with this local grid-based representation.

Considering this, the main contribution of this work is the combination of the BSM descriptor with the IDM in order to build a new descriptor capable to deal with deformations. For this purpose, first, we modify the BSM grid-based representation to provide more flexibility and an easily deformation, and then, we adapt the IDM matching process to this new representation. Finally, we evaluate the proposed method using three datasets where deformations are present, comparing the new model with the original BSM.

The rest of the paper is organized as follows: Section 2 is devoted to explain the background on which the work has been based, while Section 3 explains the proposed model. Experimental results, which include comparisons that demonstrate the improved performance of the proposed method over the original approach, are conducted in Section 4. Finally, Section 5 concludes the paper.

Background

The background of this work has two main components. First, we are going to introduce the main characteristics of the Blurred Shape Model. And then, we are going to describe the deformation model selected to be combined with the BSM: the Image Distortion Model.

Blurred Shape Model

The main idea of the BSM descriptor [4] is to describe a given shape by a probability density function encoding the probability of pixel densities of a certain number of image sub-regions. Given a set of points forming the shape of a particular symbol, each point contributes to compute the BSM descriptor. This is done by dividing the given image in a n x n grid with equal-sized sub-regions (cells). Then, each cell receives votes from the shape pixels located inside its corresponding cell, but also from those located in the adjacent cells. Thereby, every pixel contributes to the density measure of its sub-region cell, and its neighboring ones. This contribution is weighted according to the distance between the point and the centroid of the cell receiving the vote. In Fig. 1 an example of the contribution for a given pixel is shown. The output is a vector histogram, where each position contains the accumulated value of each sub-region, and contains the spatial distribution in the context of the sub-region and its neighbors.

Fig. 1. BSM density estimation example. (a) Distances of a given shape pixel to the neighboring centroids. (b) Vector descriptor update using distances of (a).

Image Distortion Model

The Image Distortion Model (IDM) is introduced by Keysers et al. in [6]. It is a non-linear image deformation model for the task of image recognition. It consists in, given a test and a reference images, determining for each pixel in the test image the best matching pixel within a region of size w x w defined around the corresponding position in the reference image. Matching is based on the difference between a feature vector computed from the context (neighborhood) of both pixels. A final distance between two given images is simply computed summing the context differences between the mapped pixels.

Due to its simplicity and efficiency, this model has been described independently in the literature several times with different names. However, the novelty introduced by Keysers is the incorporation of pixel contexts to determine the best matching pixel. Among other possibilities, context that reported the lowest error rate in the data sets tested by Keysers is to compute the vertical and horizontal gradients in a sub-image of 3 x 3 around the concerned pixel. This leads to a vector of dimension U = 18 computed for each pixel. An example of the matching process applied to USPS digit images is shown in Fig. 2.

Fig. 2. Example of matching applied to the USPS digit images using the IDM. Image extracted from [6].

Deformable Blurred Shape Model (DBSM)

We now turn to the central problem addressed by this paper: the integration of the BSM descriptor and the IDM in a new deformable shape model. This new model will be based on deforming the grid structure of the BSM in order to adapt it to the given shape. Therefore, the first step is to modify the original grid-based representation in order to make it flexible and easily deformable (Sec. 3.1). Then, we will adapt the matching process of the IDM model using this new representation (Sec. 3.2).

DBSM Focus Representation

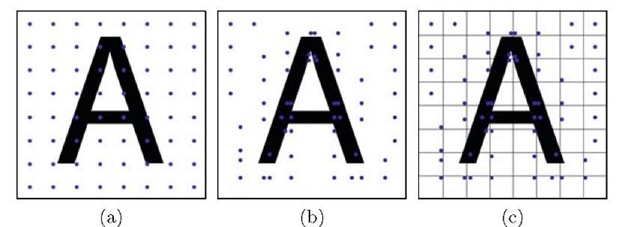

As it has been explained, BSM is based on placing a fixed regular grid over the image and accumulating votes of neighboring pixels on the centroid of each cell. In order to allow deformations of the grid we must adopt a slightly different representation. Instead of a regular grid of size k x k we will place over the image a set of k x k points, equidistantly distributed. These points, denoted as focuses, will correspond to the centroids of the original regular grid and, as in the original approach, will accumulate votes of the neighboring pixels weighted by their distance. Instead of defining the neighborhood as a set of fixed cells of the grid, it will be defined as an arbitrary influence area centered on the focus, in order to provide flexibility. The deformation of the grid will be obtained by moving independently each of the focuses along with their respective influence area. In order to limit the amount of deformation, each focus will be allowed to move only inside a pre-defined deformation area. In Fig. 3 we show an example of the focus representation and their influence and deformation areas. This resulting representation provides more flexibility and allows the focus deformation tracking.

Fig. 3. (a) Focuses representation. (b) Influence area. (c) Deformation area.

Deformation Process

Using this new representation the adaptation of the original IDM is relatively straightforward. In the IDM, every pixel in the test image is moved inside a certain region to find the best matching pixel in the reference image. In an analog way, we will move every focus in the test image inside the deformation area to find the best matching focus in the reference image according to the BSM value obtained by accumulating votes from pixels in their respective influence areas.

The deformation model can be applied not only in the classification step to match two images, but also in the training step to adapt the initial representation of the focuses to the actual shape of the symbol. Thus, for every reference image in the training set, every focus will be moved independently inside the deformation area to maximize the accumulated BSM value. Therefore, the final position of each focus will be the local maximum of the density measure within its deformation area. Figure 4 shows an example of this process. As a result every image in the training set will be represented with two output descriptors:

— A vector histogram![]() which contains the density measure of nearby pixels of each focus.

which contains the density measure of nearby pixels of each focus.

— A vector![]() , which contains x and y coordinates of each focus.

, which contains x and y coordinates of each focus.

Fig. 4. Example of the focuses deformation. (a) Initial position of the focuses. (b) Final position of the focuses after the maximization of their values. (c) Deformation area used.

Concerning the matching step, given a sample image I from the training set, and a test image J, we move the focuses in the test image inside the deformation area to optimize a certain matching criterion with the corresponding focuses in the reference image. For this purpose, we have defined two different criteria with different characteristics, whose performance will be compared experimentally.

— DBSMmin : Every focus in J will be deformed in order to minimize the difference of its BSM with that of its correspondent focus of I.

— DBSMmax : Analog to training, every focus in J will be moved to maximize its BSM value, independently of the value of its correspondent focus in I.

Both criterion result in two vectors (vi and vj) containing the BSM value of the focuses of I and J, and two vectors (pI and pJ) containing the position coordinates of the focuses. Thus, after normalizing the vectors, the distance between two images is computed using the following equation:

where d is the euclidean distance between two vectors, and 0 is a factor for weighting the contribution.

Experiments

We now show experimentally the benefits of the model proposed in section 3. First, in section 4.1, we will describe the datasets that will be used in our experiments and then, in section 4.2, we will report and discuss the results obtained.

Datasets

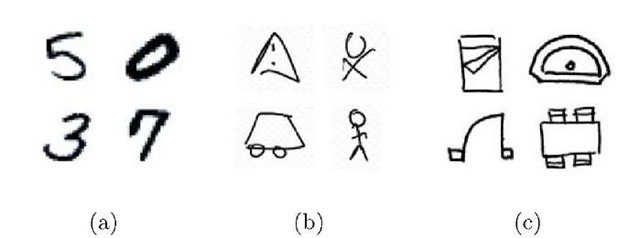

We run our experiments in three different datasets (Fig. 5): MNIST, NicIcon and a database composed of handwritten symbols from floor plans. Following, we describe these datasets as well as the experimental protocol used in each one.

Fig. 5. Image samples of the three datasets. (a) MNIST. (b) NicIcon. (c) Floor plan symbol sketches.

MNIST. The MNIST [7] is a database of handwritten digits from different writers and it is divided in a training set of 60.000 examples, and a test set of 10.000 examples. The digit size is normalized and centered in a fixed-size image of 28 x 28 pixels. We have re-centered the digits by their bounding box, as it is reported in [7] to improve error rates.

NicIcon. NicIcon [10] is composed of 26163 handwritten symbols of 14 classes from 34 different writers. On-line and off-line data is available. The dataset is divided in three subsets (training, validation and test) for both writer dependent and independent settings. We have selected the off-line writer dependent configuration as benchmark to test our method. Every symbol in the off-line data has been cropped in an image of 240 x 240 pixels.

Floor plan symbols. This dataset [9] contains 7414 sketched symbols from architectural floor plans, divided in 50 classes, with an average of 150 symbols per class. Symbols have been centered in 128 x 128 fixed-size images. The experimental protocol selected is a 10-fold cross-validation.

Results and Analysis

Experiments have been done using a Nearest Neighbor classifier over the three datasets. We compare the three methods (the original BSM and the proposed DBSM using the two different criteria for matching) in terms of accuracy in classification. Before running the final experiments on the test set, we have optimized all the parameters of the different methods, which include the number of regions/focuses, the size of the influence and deformation areas, and the weight 0 to compute the final distance, using a training set for each dataset. The final results of the experiments on the test set for each dataset are shown in Table 1.

Table 1. Accuracy rate of the compared methods in classification over the datasets selected

|

DBSMmin |

DBSMmax |

BSM |

|

|

MNIST |

94’3 |

94’4 |

92’6 |

|

Niclcon |

81’7 |

82’3 |

80’4 |

|

Floorplans |

99’2 |

99’2 |

98’8 |

As we can see, DBSM outperforms the original approach when classifying in the three tested datasets. Although the results for the MNIST dataset are below the current state-of-the-art, this is due to the low accuracy of the BSM descriptor with this dataset. We can see that DBSM clearly improves the BSM approach. Furthermore, it is noteworthy that approaches with highest accuracy rate in MNIST use some pre-processing or classification methods which could considerably improve the performance of our approach. Thus, we can conclude that the integration of deformations to the fixed grid-based representation leads to a higher accuracy rate in all the databases.

Regarding both DBSM criteria, we notice that DBSMmax has a slightly higher accuracy rate, although the difference is not enough significant. However, the main advantage of the DBSMmax over DBSMmin is that the testing process is computationally faster because, given a test image, DBSMmin has to perform the matching process for every reference image in the training set. On the contrary, DBSMmax only has to run the deformation process once for every test image, obtaining a vector descriptor that is used to compare with all reference images. Moreover, this description could be used to train any classifier, and it is not only limited to the k-NN classifier as in the case of DBSMmin.

Conclusions

We have designed and developed a new model for shape recognition, integrating a deformable model with the Blurred Shape Model. We have shown, using three different datasets with deformations, that the resulting model is able to capture and deal with elastic distortions. Furthermore, the new model outperforms the original BSM approach in terms of accuracy rate in classification tasks.

As future work, we are going to work on improving one of the weaknesses of our model, its lack of rotation invariance. We have considered to use a different representation, instead of the rectangular grid based on the BSM. For example, a circular grid, will provide the model a way to deal with rotations. Furthermore, we have considered applying other deformation models with different characteristics or extending this work to other shape descriptors.

![Example of matching applied to the USPS digit images using the IDM. Image extracted from [6]. Example of matching applied to the USPS digit images using the IDM. Image extracted from [6].](http://what-when-how.com/wp-content/uploads/2011/07/tmp3682_thumb_thumb.jpg)