Introduction

Digitizing objects and environments from the real world has become an important part of creating realistic computer graphics. Capturing geometric models has become a common process through the use of structured lighting, laser triangulation, and laser time-of-flight measurements. Recent projects such as [1-3] have shown that accurate and detailed geometric models can be acquired of real-world objects using these techniques.

To produce renderings of an object under changing lighting as well as viewpoint, it is necessary to digitize not only the object’s geometry but also its reflectance properties: how each point of the object reflects light. Digitizing reflectance properties has proven to be a complex problem, since these properties can vary across the surface of an object, and since the reflectance properties of even a single surface point can be complicated to express and measure. Some of the best results that have been obtained [2,4,5] capture digital photographs of objects from a variety of viewing and illumination directions, and from these measurements estimate reflectance model parameters for each surface point.

Digitizing the reflectance properties of outdoor scenes can be more complicated than for objects since it is more difficult to control the illumination and viewpoints of the surfaces. Surfaces are most easily photographed from ground level rather than from a full range of angles. During the daytime the illumination conditions in an environment change continuously. Finally, outdoor scenes generally exhibit significant mutual illumination between their surfaces, which must be accounted for in the reflectance estimation process. Two recent pieces of work have made important inroads into this problem. Yu et al. [6] estimated spatially varying reflectance properties of an outdoor building by fitting observations of the incident illumination to a sky model, and [7] estimated reflectance properties of a room interior based on known light source positions, using a finite element radiosity technique to take surface interreflections into account.

In this paper, we describe a process that synthesizes previous results for digitizing geometry and reflectance and extends them to the context of digitizing a complex real-world scene observed under arbitrary natural illumination. The data we acquire includes a geometric model of the scene obtained through laser scanning, a set of photographs of the scene under various natural illumination conditions, a corresponding set of measurements of the incident illumination for each photograph, and finally, a small set of Bi-directional Reflectance Distribution Function (BRDF) measurements of representative surfaces within the scene. To estimate the scene’s reflectance properties, we use a global illumination algorithm to render the model from each of the photographed viewpoints as illuminated by the corresponding incident illumination measurements. We compare these renderings to the photographs, and then iteratively update the surface reflectance properties to best correspond to the scene’s appearance in the photographs. Full BRDFs for the scene’s surfaces are inferred from the measured BRDF samples. The result is a set of estimated reflectance properties for each point in the scene that most closely generates the scene’s appearance under all input illumination conditions.

While the process we describe leverages existing techniques, our work includes several novel contributions. These include our incident illumination measurement process, which can measure the full dynamic range of both sunlit and cloudy natural illumination conditions, a hand-held BRDF measurement process suitable for use in the field, and an iterative multiresolution inverse global illumination process capable of estimating surface reflectance properties from multiple images for scenes with complex geometry seen under complex incident illumination.

The scene we digitize is the Parthenon in Athens, Greece, digitally laser scanned and photographed in April 2003 in collaboration with the ongoing Acropolis Restoration project. Scaffolding and equipment around the structure prevented the application of the process to the middle section of the temple, but we were able to derive models and reflectance parameters for both the East and West facades. We validated the accuracy of our results by comparing our reflectance measurements to ground truth measurements of specific surfaces around the site, and we generate renderings of the model under novel lighting that are consistent with real photographs of the site. At the end of the paper we discuss avenues for future work to increase the generality of these techniques. The work in this topic was first described as a Technical Report in [8].

Background and Related Work

The process we present leverages previous results in 3D scanning, reflectance modeling, lighting recovery, and reflectometry of objects and environments. Techniques for building 3D models from multiple range scans generally involve first aligning the scans to each other [9,10], and then combining the scans into a consistent geometric model by either “zippering” the overlapping meshes [11] or using volumetric merging [12] to create a new geometric mesh that optimizes its proximity to all of the available scans.

In its simplest form, a point’s reflectance properties can be expressed in terms of its Lambertian surface color, usually an RGB triplet expressing the point’s red, green, and blue reflectance properties. More complex reflectance models can include parametric models of specular and retroreflective components; some commonly used models are [13-15]. More generally, a point’s reflectance can be characterized in terms of its Bi-directional Reflectance Distribution Function (BRDF) [16], which is a 4D function that characterizes for each incident illumination direction the complete distribution of reflected illumination. Marschner et al. [17] proposed an efficient method for measuring a material’s BRDF if a convex homogeneous sample is available. Recent work has proposed models which also consider scattering of illumination within translucent materials [18].

To estimate a scene’s reflectance properties, we use an incident illumination measurement process. Marschner et al. [19] recovered low-resolution incident illumination conditions by observing an object with known geometry and reflectance properties. Sato et al. [20] estimated incident illumination conditions by observing the shadows cast from objects with known geometry. Debevec [21] acquired high-resolution lighting environments by taking high dynamic range images [22] of a mirrored sphere, but did not recover natural illumination environments where the sun was directly visible.
We combine ideas from [19,21] to record high-resolution incident illumination conditions in cloudy, partly cloudy, and sunlit environments.

Considerable recent work has presented techniques to measure spatially-varying reflectance properties of objects. Marschner [4] uses photographs of a 3D scanned object taken under point-light source illumination to estimate its spatially varying diffuse albedo. This work used a texture atlas system to store the surface colors of arbitrarily complex geometry, an approach we also adopt in our work. The work assumed that the object was Lambertian, and only considered local reflections of the illumination. Sato et al. [23] use a similar sort of dataset to compute a spatially-varying diffuse component and a sparsely sampled specular component of an object. Rushmeier et al. [2] use a photometric stereo technique [24,25] to estimate spatially varying Lambertian color as well as improved surface normals for the geometry. Rocchini et al. [26] use this technique to compute diffuse texture maps for 3D scanned objects from multiple images. Debevec et al. [27] use a dense set of illumination directions to estimate spatially-varying diffuse and specular parameters and surface normals. Lensch et al. [5] present an advanced technique for recovering spatially-varying BRDFs of real-world objects, performing principal component analysis of relatively sparse lighting and viewing directions to cluster the object’s surfaces into patches of similar reflectance. In this way, many reflectance observations of the object as a whole are used to estimate spatially-varying BRDF models for surfaces seen from limited viewing and lighting directions. Our reflectance modeling technique is less general, but adapts ideas from this work to estimate spatially-varying non-Lambertian reflectance properties of outdoor scenes observed under natural illumination conditions, and we also account for mutual illumination.
Capturing the reflectance properties of surfaces in large-scale environments can be more complex, since it can be harder to control the lighting conditions on the surfaces and the viewpoints from which they are photographed. Yu et al. [6] solve for the reflectance properties of a polygonal model of an outdoor scene modeled with photogrammetry. The technique used photographs taken under clear sky conditions, fitting a small number of radiance measurements to a parameterized sky model. The process estimated spatially varying diffuse and piecewise constant specular parameters, but did not consider retroreflective components. The process derived two pseudo-BRDFs for each surface, one according to its reflectance of light from the sun and one according to its reflectance of light from the sky and environment. This allowed more general spectral modeling but required every surface to be observed under direct sunlight in at least one photograph, which we do not require. For room interiors, [7, 28, 29] estimate spatially varying diffuse and piecewise constant specular parameters using inverse global illumination. The techniques used knowledge of the position and intensity of the scene’s light sources, using global illumination to account for the mutual illumination between the scene’s surfaces.

Our work combines and extends aspects of each of these techniques: we use pictures of our scene under natural illumination conditions, but we image the illumination directly in order to use photographs taken in sunny, partly sunny, or cloudy conditions. We infer non-Lambertian reflectance from sampled surface BRDFs. We do not consider full-spectral reflectance, but have found RGB imaging to be sufficiently accurate for the natural illumination and reflectance properties recorded in this work. We provide comparisons to ground truth reflectance for several surfaces within the scene. Finally, we use a more general Monte-Carlo global illumination algorithm to perform our inverse rendering, and we employ a multiresolution geometry technique to efficiently process a complex laser-scanned model.

Data Acquisition and Calibration

Camera Calibration

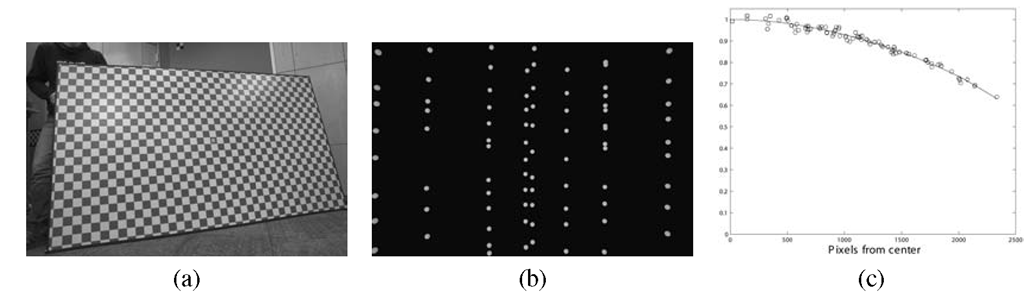

In this work we used a Canon EOS D30 and a Canon EOS 1Ds digital camera, which were calibrated geometrically and radiometrically. For geometric calibration, we used the Camera Calibration Toolbox for Matlab [30] which uses techniques from [31]. Since changing the focus of a lens usually changes its focal length, we calibrated our lenses at chosen fixed focal lengths. The main lens used for photographing the environment was a 24mm lens focused at infinity. Since a small calibration object held near this lens would be out of focus, we built a larger calibration object 1.2m x 2.1m from an aluminum honeycomb panel with a 5cm square checkerboard pattern applied (Figure 6.1(a)). Though nearly all images were acquired at f/8 aperture, we verified that the camera intrinsic parameters varied insignificantly (less than 0.05%) with changes of f/stop from f/2.8 to f/22.

In this work we wished to obtain radiometrically linear pixel values that would be consistent for images taken with different cameras, lenses, shutter speeds, and f/stop. We verified that the “RAW” 12-bit data from the cameras was linear using three methods: we photographed a gray scale calibration chart, we used the radiometric self-calibration technique of [22], and we verified that pixel values were proportional to exposure times for a static scene. From this we found that the RAW pixel values exhibited linear response to within 0.1% for values up to 3,000 out of 4,095, after which saturation appeared to reduce pixel sensitivity. We ignored values outside of this linear range, and we used multiple exposures to increase the effective dynamic range of the camera when necessary.
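The way multiple exposures can extend dynamic range while respecting the linear range can be sketched as follows. This is only an illustration, not the utility used in this work: the function name `merge_exposures`, the lower cutoff `NOISE_FLOOR`, and the choice to weight each exposure by its exposure time are assumptions. Only the upper limit of 3,000 comes from the text.

```python
# Illustrative sketch: merge bracketed RAW samples of one pixel into a single
# relative radiance value, using only samples inside the linear range.

LINEAR_MAX = 3000   # from the text: linear response up to 3,000 of 4,095
NOISE_FLOOR = 16    # assumed lower cutoff; not specified in the text


def merge_exposures(raw_values, exposure_times):
    """Return RAW counts per second, or None if no sample is usable.

    Summing counts and dividing by summed exposure time is a weighted mean
    of value / time in which longer (less noisy) exposures count for more.
    """
    total_counts = 0.0
    total_time = 0.0
    for value, seconds in zip(raw_values, exposure_times):
        if NOISE_FLOOR <= value <= LINEAR_MAX:
            total_counts += value
            total_time += seconds
    if total_time == 0.0:
        return None
    return total_counts / total_time


if __name__ == "__main__":
    # Three exposures of the same pixel; the longest one is saturated
    # and is therefore ignored.
    print(merge_exposures([250, 1000, 4095], [0.25, 1.0, 4.0]))
```

A pixel for which every exposure falls outside the usable range returns `None`, so the caller can treat it as missing data rather than as a measurement.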

Most lenses exhibit a radial intensity falloff, producing dimmer pixel values at the periphery of the image. We mounted each camera on a Kaidan nodal rotation head and photographed a diffuse disk light source at an array of positions for each lens at each f/stop used for data capture (Figure 6.1(b)). From these intensities recorded at different image points, we fit a radially symmetric 6th order even polynomial to model the falloff curve and produce a flat-field response function, normalized to unit response at the image center.
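As a minimal sketch of this fitting step, the code below fits the even polynomial c0 + c2 r^2 + c4 r^4 + c6 r^6 to measured intensities by linear least squares and then divides by c0 so that the response at the image center is one. The function names, the use of normal equations, and the assumption that r is a radius normalized to roughly [0, 1] are illustrative choices, not details taken from this work.

```python
# Illustrative sketch: least-squares fit of a radially symmetric 6th order
# even polynomial to lens falloff measurements.

POWERS = (0, 2, 4, 6)


def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def fit_falloff(radii, intensities):
    """Return coefficients (c0, c2, c4, c6), normalized so that c0 == 1."""
    basis = [[r ** p for p in POWERS] for r in radii]
    ata = [[sum(row[i] * row[j] for row in basis) for j in range(4)]
           for i in range(4)]
    atb = [sum(row[i] * y for row, y in zip(basis, intensities))
           for i in range(4)]
    coeffs = solve_linear(ata, atb)
    return [c / coeffs[0] for c in coeffs]


def falloff(coeffs, r):
    """Relative lens response at normalized radius r."""
    return sum(c * r ** p for c, p in zip(coeffs, POWERS))


def flat_field(pixel_value, r, coeffs):
    """Undo the falloff for a pixel at normalized radius r."""
    return pixel_value / falloff(coeffs, r)
```

Restricting the model to even powers keeps it symmetric about the image center, which is why only four coefficients are needed for a 6th order curve.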

Each digital camera used had minor variations in sensitivity and color response. We calibrated these variations by photographing a Macbeth ColorChecker chart under natural illumination with each camera, lens, and f/stop combination, and solved for the best 3 x 3 color matrix to convert each image into the same color space. Finally we used a utility for converting RAW images to floating-point images using the EXIF metadata for camera model, lens, ISO, f/stop, and shutter speed to apply the appropriate radiometric scaling factors and matrices. These images were organized in a PostgreSQL database for convenient access.
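One common way to solve for such a 3 x 3 matrix is linear least squares over the chart patches, sketched below. The text does not state which solver was used, so the normal-equation approach and the names `fit_color_matrix` and `apply_matrix` are assumptions made for illustration.

```python
# Illustrative sketch: least-squares 3x3 color matrix M such that
# M * measured_rgb approximates reference_rgb for every chart patch.


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a, b):
    """Solve the 3x3 system a x = b with Cramer's rule."""
    d = det3(a)
    solution = []
    for k in range(3):
        replaced = [[b[i] if j == k else a[i][j] for j in range(3)]
                    for i in range(3)]
        solution.append(det3(replaced) / d)
    return solution


def fit_color_matrix(measured, reference):
    """measured, reference: lists of (r, g, b) tuples for the same patches."""
    ata = [[sum(p[i] * p[j] for p in measured) for j in range(3)]
           for i in range(3)]
    matrix = []
    for channel in range(3):
        # Each output channel is an independent least-squares problem.
        atb = [sum(p[i] * q[channel] for p, q in zip(measured, reference))
               for i in range(3)]
        matrix.append(solve3(ata, atb))
    return matrix


def apply_matrix(matrix, rgb):
    """Convert one linear RGB value into the common color space."""
    return tuple(sum(matrix[r][c] * rgb[c] for c in range(3))
                 for r in range(3))
```

The fit is only meaningful on radiometrically linear pixel values, so in a pipeline like the one described it would run after the linearity and falloff corrections.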

FIGURE 6.1

(a) 1.2m x 2.1m geometric calibration object; (b) Lens falloff measurements for 24mm lens at f/8; (c) Lens falloff curve for (b).

BRDF Measurement and Modeling

In this work we measure BRDFs of a set of representative surface samples, which we use to form the most plausible BRDFs for the rest of the scene. Our relatively simple technique is motivated by the principal component analyses of reflectance properties used in [5, 32], except that we choose our basis BRDFs manually. Choosing the principal BRDFs in this way meant that BRDF data collected under controlled illumination could be taken for a small area of the site, while the large-scale scene could be photographed under a limited set of natural illumination conditions.

Data Collection and Registration

The site used in this work is composed entirely of marble, but its surfaces have been subject to different discoloration processes yielding significant reflectance variations. We located an accessible 30cm x 30cm surface that exhibited a range of coloration properties representative of the site. Since measuring the reflectance properties of this surface required controlled illumination conditions, we performed these measurements during our limited nighttime access to the site and used a BRDF measurement technique that could be executed quickly.

The BRDF measurement setup (Figure 6.2) includes a hand-held light source and camera, and uses a frame placed around the sample area that allows the lighting and viewing directions to be estimated from the images taken with the camera. The frame contains fiducial markers at each corner of the frame’s aperture from which the camera’s position can be estimated, and two glossy black plastic spheres used to determine the 3D position of the light source. Finally, the device includes a diffuse white reflectance standard parallel to the sample area for determining the intensity of the light source.

The light source chosen was a 1,000W halogen source mounted in a small diffuser box, held approximately 3m from the surface. Our capture assumed that the surfaces exhibited isotropic reflection, requiring the light source to be moved only within a single plane of incidence. We placed the light source in four consecutive positions of 0°, 30°, 50°, 75°, and for each took hand-held photographs at a distance of approximately 2m from twenty directions distributed on the incident hemisphere, taking care to sample the specular and retroreflective directions with a greater number of observations. Dark clothing was worn to reduce stray illumination on the sample. The full capture process involving 83 photographs required forty minutes.

Data Analysis and Reflectance Model Fitting

To calculate the viewing and lighting directions, we first determined the position of the camera from the known 3D positions of the four fiducial markers using photogrammetry. With the camera positions known, we computed the positions of the two spheres by tracing rays from the camera centers through the sphere centers for several photographs, and calculated the intersection points of these rays. With the sphere positions known, we determined each light position by shooting rays toward the center of the light’s reflection in the spheres. Reflecting the rays off the spheres, we find the center of the light source position where the two rays most nearly intersect. Similar techniques to derive light source positions have been used in [5, 33].
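The geometric core of the light position estimate can be sketched as two small routines: reflecting a camera ray off a sphere, and finding the point where two reflected rays most nearly intersect. This is a sketch under assumptions rather than the code used in this work; the function names are hypothetical, and "most nearly intersect" is taken here to mean the midpoint of the shortest segment between the two rays.

```python
# Illustrative sketch: recover a light position from its reflections in two
# mirror-like spheres of known position and radius.
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def scale(a, k):
    return tuple(x * k for x in a)


def normalize(a):
    return scale(a, 1.0 / math.sqrt(dot(a, a)))


def reflect_off_sphere(origin, direction, center, radius):
    """Return (hit point, reflected unit direction), or None on a miss."""
    d = normalize(direction)
    oc = sub(origin, center)
    b = dot(d, oc)
    disc = b * b - (dot(oc, oc) - radius * radius)
    if disc < 0.0:
        return None
    t = -b - math.sqrt(disc)            # nearer of the two intersections
    hit = add(origin, scale(d, t))
    n = scale(sub(hit, center), 1.0 / radius)
    return hit, sub(d, scale(n, 2.0 * dot(d, n)))


def nearest_point_to_rays(p1, d1, p2, d2):
    """Midpoint of the shortest segment between lines p1+s*d1 and p2+t*d2."""
    w = sub(p1, p2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; no unique nearest point")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    q1 = add(p1, scale(d1, s))
    q2 = add(p2, scale(d2, t))
    return scale(add(q1, q2), 0.5)
```

In use, each camera ray would start at the camera center and pass through the image location of the light's highlight on one sphere; the two reflected rays returned by `reflect_off_sphere` are then passed to `nearest_point_to_rays`.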

FIGURE 6.2

BRDF samples are measured from a 30cm square region exhibiting a representative set of surface reflectance properties. The technique used a hand-held light source and camera and a calibration frame to acquire the BRDF data quickly.

From the diffuse white reflectance standard, the incoming light source intensity for each image could be determined. By dividing the overall image intensity by the color of the reflectance standard, all images were normalized by the incoming light source intensity. We then chose three different areas within the sampling region best corresponding to the different reflectance properties of the large-scale scene. These properties included a light tan area that is the dominant color of the site’s surfaces, a brown color corresponding to encrusted biological material, and a black color representative of soot deposits. To track each of these sampling areas across the dataset, we applied a homography to each image to map them to a consistent orthographic viewpoint. For each sampling area, we then obtained a BRDF sample by selecting a 30 x 30 pixel region and computing the average RGB value. Had there been a greater variety of reflectance properties in the sample, a PCA analysis of the entire sample area as in [5] could have been used.

As Figure 6.3 shows, the data exhibit largely diffuse reflectance but with noticeable retroreflective and broad specular components. To extrapolate the BRDF samples to a complete BRDF, we fit the BRDF to the Lafortune cosine lobe model (Eq. 6.1) in its isotropic form with three lobes for the Lambertian, specular, and retroreflective components:

$$f(\vec{u}, \vec{v}) = \rho_d + \sum_i \left[ C_{xy,i}\,(u_x v_x + u_y v_y) + C_{z,i}\,u_z v_z \right]^{N_i} \qquad (6.1)$$
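For readers who want to experiment with the model, the following sketch evaluates one color channel of an isotropic Lafortune BRDF: a Lambertian term plus a sum of cosine lobes, each parameterized by Cxy, Cz, and an exponent N. It assumes that the diffuse term enters as a plain additive constant and that lobes with a negative base are clamped to zero, which is the usual convention; the function name and argument layout are hypothetical.

```python
# Illustrative sketch: evaluate one color channel of an isotropic
# Lafortune cosine lobe BRDF.


def lafortune_isotropic(u, v, rho_d, lobes):
    """Evaluate the BRDF for unit directions u (incident) and v (exitant).

    Directions are expressed in the local surface frame with z along the
    normal. `lobes` is a list of (c_xy, c_z, n) tuples.
    """
    value = rho_d
    for c_xy, c_z, n in lobes:
        base = c_xy * (u[0] * v[0] + u[1] * v[1]) + c_z * u[2] * v[2]
        if base > 0.0:          # a lobe contributes only where it is positive
            value += base ** n
    return value
```

With this parameterization a specular lobe has a negative Cxy, so that it peaks near the mirror direction, while a retroreflective lobe has a positive Cxy, so that it peaks when v is close to u.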

As suggested in [15], we then use a non-linear Levenberg-Marquardt optimization algorithm to determine the parameters of the model from our measured data. We first estimate the Lambertian component ρd, and then fit a retroreflective and a specular lobe separately before optimizing all the parameters in a single system. The resulting BRDFs (Figure 6.4(b), back row) show mostly Lambertian reflectance with noticeable retroreflection and rough specular components at glancing angles. The brown area exhibited the greatest specular reflection, while the black area was the most retroreflective.

FIGURE 6.3

BRDF data and fitted reflectance lobes are shown for the RGB colors of the tan material sample for the four incident illumination directions. Only measurements within 15° of in-plane are plotted.

BRDF Inference

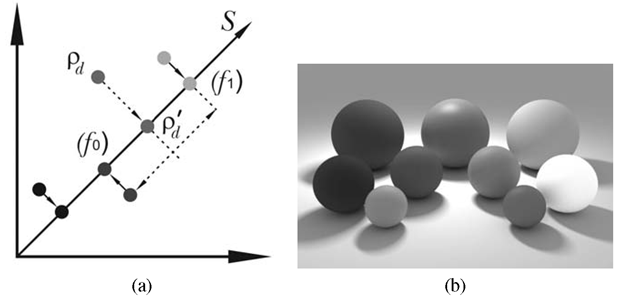

We wish to make maximal use of the BRDF information obtained from our material samples in estimating the reflectance properties of the rest of the scene. The approach we take is informed by the BRDF basis construction technique from [5], the data-driven reflectance model presented in [32], and the spatially-varying BRDF construction technique used in [34]. Because the surfaces of the rest of the scene will often be seen in relatively few photographs under relatively diffuse illumination, the most reliable observation of a surface’s reflectance is its Lambertian color. Thus, we form our problem as one of inferring the most plausible BRDF for a surface point given its Lambertian color and the BRDF samples available.

We first perform a principal component analysis of the Lambertian colors of the BRDF samples available. For RGB images, the number of significant eigenvalues will be at most three, and for our samples the first eigenvalue dominates, corresponding to a color vector of (0.688, 0.573, 0.445). We project the Lambertian color of each of our sample BRDFs onto the 1D subspace S (Figure 6.4(a)) formed by this eigenvector. To construct a plausible BRDF f for a surface having a Lambertian color ρd, we project ρd onto S to obtain the projected color ρ′d. We then determine the two BRDF samples whose Lambertian components project most closely to ρ′d. We form a new BRDF f′ by linearly interpolating the Lafortune parameters (Cxy, Cz, N) of the specular and retroreflective lobes of these two nearest BRDFs f0 and f1 based on distance. Finally, since the retroreflective color of a surface usually corresponds closely to its Lambertian color, we adjust the color of the retroreflective lobe to correspond to the actual Lambertian color ρd rather than the projected color ρ′d. We do this by dividing the retroreflective parameters Cxy and Cz by (ρ′d)^(1/N) and then multiplying by (ρd)^(1/N) for each color channel, which effectively scales the retroreflective lobe to best correspond to the Lambertian color ρd. Figure 6.4(b) shows a rendering with several BRDFs inferred from new Lambertian colors with this process.
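The inference procedure described above can be sketched in a few lines. Everything about the data layout here is an assumption made for illustration: each sample is a dictionary holding a Lambertian color and per-channel lists `c_xy`, `c_z`, and `n` for its specular and retroreflective lobes, the subspace S is taken to pass through the origin, and the interpolation weight is clamped to [0, 1]. Only the eigenvector and the sequence of steps come from the text.

```python
# Illustrative sketch: infer a plausible BRDF from a Lambertian color and a
# small set of measured BRDF samples.

AXIS = (0.688, 0.573, 0.445)    # dominant eigenvector reported in the text
KEYS = ("c_xy", "c_z", "n")


def project(rho_d):
    """Return (coordinate along S, projected color) for an RGB color."""
    norm2 = sum(a * a for a in AXIS)
    t = sum(c * a for c, a in zip(rho_d, AXIS)) / norm2
    return t, tuple(t * a for a in AXIS)


def lerp_lobe(lobe0, lobe1, w):
    """Linearly interpolate per-channel Lafortune lobe parameters."""
    return {k: [(1.0 - w) * a + w * b for a, b in zip(lobe0[k], lobe1[k])]
            for k in KEYS}


def infer_brdf(rho_d, samples):
    """Build a BRDF for Lambertian color rho_d from measured samples."""
    t, rho_proj = project(rho_d)
    # The two samples whose projected Lambertian colors lie closest to t.
    s0, s1 = sorted(samples,
                    key=lambda s: abs(project(s["rho_d"])[0] - t))[:2]
    t0 = project(s0["rho_d"])[0]
    t1 = project(s1["rho_d"])[0]
    w = 0.0 if t1 == t0 else min(1.0, max(0.0, (t - t0) / (t1 - t0)))
    spec = lerp_lobe(s0["spec"], s1["spec"], w)
    retro = lerp_lobe(s0["retro"], s1["retro"], w)
    # Scaling C by k ** (1 / N) scales the lobe itself by k, which moves the
    # retroreflective color from the projected color to the actual one.
    for ch in range(3):
        k = (rho_d[ch] / rho_proj[ch]) ** (1.0 / retro["n"][ch])
        retro["c_xy"][ch] *= k
        retro["c_z"][ch] *= k
    return {"rho_d": tuple(rho_d), "spec": spec, "retro": retro}
```

The sketch assumes every channel of the projected color is positive; a color with a zero or negative projection would need separate handling.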

FIGURE 6.4

(a) Inferring a BRDF based on its Lambertian component ρd; (b) Rendered spheres with measured and inferred BRDFs. Back row: the measured black, brown, and tan surfaces. Middle row: intermediate BRDFs along the subspace S. Front row: inferred BRDFs for materials with Lambertian colors not on S.