TOP-DOWN PARSING WITH NO BACKUP

In this section top-down parsing with no backup is discussed. The information required during a no-backup parse is first discussed informally and then formally characterized in terms of LL(1) grammars. This class of grammars can be parsed in a deterministic manner by looking at the next input symbol in the input string to be parsed. The discussion will proceed as follows: First the class of simple LL(1) grammars is introduced. This class of grammars is very easily parsed and e-rules are not allowed. The next step is to permit a more general form of production but still not allow e-rules to be used. Finally the general class of LL(1) grammars are introduced with e-rules allowed.

Parsing algorithms are given for each subclass of grammars just discussed. The parsing tables required in these algorithms can be obtained directly from the rules of the grammars. The use of bit matrices (refer to Sec. 2-1.2) can facilitate the generation of such parsing tables.

LL(1) grammars have been used in defining programming languages for some 15 years (Lewis and Stearns, 1968). The parsing algorithm for this class of grammars is efficient. Actually, the performance of this algorithm is a linear function of the input string length. Furthermore, error-detection and -recovery •techniques can be easily appended to the parsing algorithm for this class of grammars. Details of such diagnostic techniques will be described in this section.

Notions of Parsing with No Backup

In the situation where no backup is allowed, our problem becomes one of determining which production is to be applied given that we know which nonterminal we are attempting to expand and which head (see Sec. 2-1.3) of the input string has been successfully parsed to this point. We are looking for a left canonical parse (see Sec. 2-4.2), that is, a parse in which the leftmost nonterminal in the current sentential form is always chosen for expansion. (A similar analysis can be made for right canonical parses.)

Another way of stating this requirement is that we must always make the correct choice among alternative productions for a particular nonterminal so that we never undo a particular expansion. Either the parse moves directly from left to right across the input string and concludes successfully or it reaches a point where no production can correctly be applied, in which case the input string is rejected as being invalid.

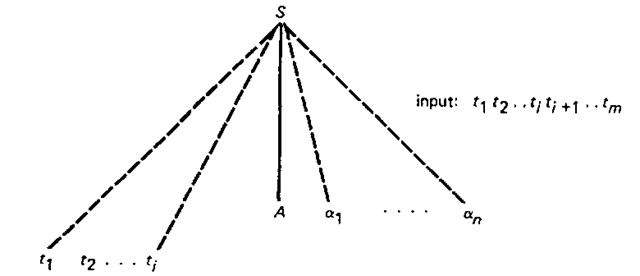

Figure 6-9

Figure 6-9 illustrates this situation in terms of a generalized syntax diagram. At the point illustrated, the head![]() of the input string

of the input string![]() has been matched and the nonterminal A is to be expanded. We must now correctly choose which production to use to expand A, the leftmost nonterminal in the current sentential form

has been matched and the nonterminal A is to be expanded. We must now correctly choose which production to use to expand A, the leftmost nonterminal in the current sentential form![]()

A large class of left-parsible grammars, the LL(k) grammars, allow this determination of the correct production to apply. Given the input head (prefix) parsed to this point and the leftmost nonterminal, the correct production can be applied provided that we also know the next few symbols of the unparsed input substring—say, the k symbols![]() As a simple instance of the nature of the help provided by this lookahead of k symbols, we next turn to a discussion of simple LL(1) grammars.

As a simple instance of the nature of the help provided by this lookahead of k symbols, we next turn to a discussion of simple LL(1) grammars.

Simple LL(1) Grammars

In this section we examine a class of grammars which can be parsed by simply looking at the next symbol in the unparsed input string in order to decide which production is to be applied. The parsing method described here is deterministic in the sense that no backup is required. We first describe in an informal manner a parser for this class of grammars. The discussion is followed by an algorithm which will construct a parser from a given grammar in this class.

Definition 6-1 A simple LL( 1) grammar is a context-free grammar without e-rules such that for every![]() the alternates for A each begin with a different terminal symbol. More formally, a context-free grammar is called a simple LL( 1) grammar or an s-grammar if all of its rules are of the form

the alternates for A each begin with a different terminal symbol. More formally, a context-free grammar is called a simple LL( 1) grammar or an s-grammar if all of its rules are of the form

The language generated by an .s-grammar is called an s-language.

These simple LL(1) grammars were first investigated by Korenjak and Hopcroft (1966).

As an example, consider the grammar whose productions are:

Clearly this grammar is a simple LL(1) grammar. It is convenient (and necessary, when e-rules are introduced in Sec. 6-2.4) to have some end-marker symbol at the end of all input strings to be parsed. We use the symbol # to denote the end of the input string. Because # is not in the terminal vocabulary VT of the grammar, it is impossible for some malicious person to insert the # in the middle of the input string. Conventionally the grammar is augmented with an extra production

which facilitates the construction of certain relations and tables later in this topic.

Let us construct the parse of the string aabccd# according to the preceding grammar. The leftmost canonical derivation for the sample input string is as follows:

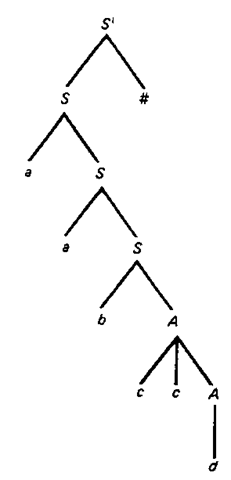

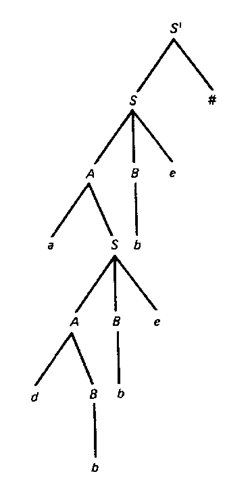

The parse tree for this derivation is given in Fig. 6-10.

We now describe an informal method for constructing, in a top-down manner without backup, the parse tree of Fig. 6-10. This construction process will then be formalized.

Figure 6-10 Parse tree for the string aabccd#.

Recall in the brute-force method with full backup (Sec. 6-1.1) that two stacks were used, one for the current sentential form and the other for the history of the parse. In parsing a simple LL(1) grammar, only one stack is required. Its purpose is to record a portion of the current sentential form. If the current sentential form is![]() where the substring

where the substring![]() denotes the prefix of the given input string which is matched so far, then the portion of the current sentential form which is retained in the stack is the string

denotes the prefix of the given input string which is matched so far, then the portion of the current sentential form which is retained in the stack is the string ![]() This string, in the case of a valid input string, will produce the remaining unmatched input symbols. That is, for the input string

This string, in the case of a valid input string, will produce the remaining unmatched input symbols. That is, for the input string

![]() In addition to the stack, an output tape is used to record the production numbers in the order in which they were applied. The symbol # is used as the stack bottom symbol. Initially, the stack is set to the contents

In addition to the stack, an output tape is used to record the production numbers in the order in which they were applied. The symbol # is used as the stack bottom symbol. Initially, the stack is set to the contents

S#

with the leftmost symbol denoting the top symbol in the stack. From this initial configuration, a rule must be selected on the basis of the top stack symbol and the current input character being examined. With a stack symbol of 5 and the current input symbol a, rule 1 must be chosen if we are to avoid backup. So S is replaced by the right part of rule 1, thus giving a stack contents of

aS#

Now, since the stack symbol is a terminal which matches the current input symbol, the stack is popped and the input cursor is advanced. The current stack contents are

S#

The second symbol a in the original input string then becomes the new current symbol. Rule 1 is again chosen. Note that in each of these two cases the choice is made in a deterministic manner. With this rule application the stack contents change to

aS#

The stack symbol is a, which matches the second input symbol. This condition causes the stack to be popped. The input cursor is then advanced, with b becoming the new current input symbol. The stack contents are

S#

Based on the fact that the stack symbol is 5 and the current input symbol is b, rule 2 can be chosen in a deterministic manner as the production to apply at this point. The stack symbol is replaced by the right part of this rule, thus yielding a stack contents of

bA#

The stack and the current input symbols now match and the stack is popped and the input cursor is advanced to its next position. The current stack contents are

A#

The stack symbol A and the current input symbol c uniquely specify that rule 4 and not rule 3 must be applied at this point. Therefore, the A on the stack is replaced by ccA, thus giving a new stack contents of

ccA#

This process is continued until the remaining input symbols are processed. A trace of this parse is given in Table 6-1.

We can now, in a more formal manner, formulate a parsing algorithm for the process just described. The heart of this algorithm is a parsing function which is represented in a table. Given the symbol at the top of the stack and the next input symbol, the table yields as a value either the production to apply or else an indication of how to continue or terminate. This table is defined in a manner similar to that given in Sec. 6-1.1 as follows:

where # marks the bottom of the stack and the end of the input string, and where (/?, /’) is an ordered pair such that is the right part of production number /.

In short, if A is the symbol on top of the stack and a is the current input symbol, then M(A,a) is defined as follows:

Table 6-1 Trace of a top-down parse for the string aabccd#

|

Unused input string |

Stack contents |

Output tape |

|

£ |

||

|

1 |

||

|

1 |

||

|

11 |

||

|

11 |

||

|

112 |

||

|

112 |

||

|

1124 |

||

|

1124 |

||

|

1124 |

||

|

11243 |

||

|

11243 |

The parsing function M for the current example grammar is given in Table 6-2. Note that the column headings of the table are the # end marker and the terminal alphabet of the grammar. The row headings consist of the stack bottom marker # and the vocabulary symbols.

We use the parsing function M to alter configurations as we did in the full backup algorithm of Sec. 6-1.1. Given the general form of a parse configuration, (az, Aa, P) where az is the unused input string, Aa is the stack with A being the stack top, and P is the output tape contents, we use M(A, a), where a is the current input symbol, to determine the next configuration according to the "yields" relation (I-) given by

The initial configuration is (z, S#,e), where z is the entire input string and 5 is the goal symbol.

As an example, consider the parse of the string aabccd# according to our current sample grammar. The sequence of configurations in the parse of this string is

Table 6-2 Parsing function for a simple LL(1) grammar

|

Stack symbol |

|

Current input symbol |

|

|

|

a |

b c |

d |

# |

|

|

S |

(aS, 1) |

(bA,2) |

||

|

A |

(ccA, 4) |

(d, 3) |

||

|

a |

pop |

|||

|

b |

pop |

|||

|

c |

pop |

|||

|

d |

pop |

|||

|

# |

accept |

Blank entries are all error entries.

Table 6-3

|

Unused input string |

Stack contents |

Output tape |

|

« |

||

|

1 |

||

|

11 |

||

|

112 |

||

|

1124 |

||

|

1124 |

||

|

11243 |

An algorithm similar to Algorithm TD_FULL of Sec. 6-1.1 can be formulated to parse a simple LL(1) language. This task is left as an exercise.

Before the end of this section, it should be noted that the parsing process, as exemplified by Table 6-1 for the case of simple LL(1) grammars, can be simplified. In particular, since the nonterminal on the stack and the current input symbol uniquely determine which rule is to be used and, furthermore, the leftmost symbol in that rule always matches the current input symbol, only the right part of the rule excluding its leftmost symbol need be stacked. If this is done, a more efficient algorithm results. A trace of the parsing process which uses this approach is given in Table 6-3. It is also left as an exercise to formulate an algorithm which takes advantage of this observation.

In summary, we have introduced a class of grammars which are very easy and efficient to parse. The restrictions placed on the rules of a simple LL(1) grammar, however, are severe. It is highly desirable, for practical reasons, to expand the class of grammars which can be handled by such a straightforward approach. This problem is examined in the next subsection.

LL(1) Grammars without e-Rules

In this section we pursue the discussion of top-down deterministic parsers which require only one lookahead symbol. Our aim is to generalize the simple LL(1) grammars discussed in the previous subsection. This generalization will eliminate some of the restrictions which were imposed on these simple grammars. Specifically, the condition that the leftmost symbol in the right part of a production must be a terminal symbol is removed. One restriction which still remains, however, is that e-rules are not allowed. This restriction will be removed in the next subsection. Furthermore, it should be noted that LL(1) grammars without e-rules have the same generative power as s-grammars (see Kurki-Suonio, 1969). That is, given an LL(1) grammar without e-rules we can obtain an equivalent j-grammar which generates the same language.

Throughout the following discussion, it is assumed that all useless symbols and left-recursive rules have been eliminated from the grammar. We return to the problem of left-recursive grammars later.

Let us attempt to parse, in a deterministic manner which is based on a single look ahead character, the input string adbbebe# generated by the following grammar:

The leftmost derivation for the given string is

and the associated syntax tree is given in Fig. 6-11. Note that this grammar is not in its present form a simple LL(1) grammar. Productions 0, 1, and 5 violate the simple LL(1) condition.

Using the approach taken in the previous subsection, let us now attempt to parse the given string based on a one-character lookahead. As before, the stack is initialized to the contents with the leftmost symbol denoting the top symbol in the stack. From this initial configuration, a rule must be selected on the basis of the top stack symbol (S) and the current input character being examined (a).

Figure 6-11 Parse tree for the string adbbebe#.

For the simple LL(1) grammar case, we merely had to select the rule whose left part and the leftmost symbol in its’ right part were the stack symbol and the current input symbol, respectively. In this instance, however, such an approach is inadequate, since the only rule which has S as its left part contains a nonterminal (A) as the leftmost symbol in its right part. If A can eventually leftmost produce the symbol a, then we are assured that by selecting rule 1 we are on the right path and backup beyond this point will never be necessary. More formally, A a… where the notation… denotes a string (possibly empty) of characters selected from the vocabulary. The replacement of the stack symbol S by the string A Be yields a new stack contents of

ABe#

We now must select a second production based on the knowledge that the stack symbol is A and the current input symbol is a. Three rules are possible: rules 2, 3, and 4. The decision in this case, however, is clear—rule 3 must be selected. This choice results in the following stack contents:

aSBe#

Now that the stack symbol matches the current input symbol, the stack is popped and the input cursor is advanced. As a result of these operations, the new stack symbol and current input symbol become the symbols S and d, respectively, thus

yielding a stack contents of SBe#

At this point in the parse, we observe that the symbol d is leftmost derivable from S; that is, S =» A ■ ■ • => d… . Consequently, we select rule 1 as the next rule to apply in the parsing process. This choice results in the stack being changed to

ABeBe#

Clearly, A can leftmost produce d. Therefore, we select production 2 as the next rule to apply. Such a selection yields a stack contents of

dBBeBe#

As a result, the stack and current input symbols match. We can now pop the stack and advance the input cursor, thus obtaining the stack contents

BBeBe#

and the new input symbol b.

Two rules for B are possible—rules 5 and 6. Because of the current input symbol, we cannot choose rule 5, since this choice will leftmost produce symbols a, c, or d. Consequently, we must choose rule 6. The new stack contents now become

bBeBe#

The parsing process can be continued until the complete parse is obtained. A trace of this parse is given in Table 6-4.

Table 6-4

|

Unused input string |

Stack |

Output tape |

|

£ |

||

|

1 |

||

|

13 |

||

|

13 |

||

|

131 |

||

|

1312 |

||

|

1312 |

||

|

13126 |

||

|

13126 |

||

|

131266 |

||

|

131266 |

||

|

131266 |

||

|

1312666 |

||

|

1312666 |

||

|

1312666 |

The sample grammar under consideration seems to be LL(1). Let us now introduce certain notions which will be used to formalize the definition of an LL(1) grammar without e-rules. In the previous discussion, we introduced the notion of "leftmost derivable." Given some string a e V+, the set of terminal symbols which are leftmost derivable from a is given by the equation

For example, in the current example grammar the set

Again referring to our example grammar, we note that there are three rules with a left part of A : the rules A -* dB, A —> aS, and A -* c. The FIRST set of each right part is given by

These FIRST sets are disjoint. There are also two rules with a left part of B whose FIRST sets are also disjoint, namely,

We are now in a position to generalize these observations.

Definition 6-2 A grammar without e-rules is an LL( 1) grammar if for all rules of the form![]()

and FIRST(a„) are pairwise disjoint, that is,

By applying this definition to the sample grammar, we obtain the following:

Therefore, this grammar is LL(1).

Using the approach of Sec. 6-2.2 we can formulate in a formal manner a parsing algorithm for the process just described. The parsing function associated with this parsing algorithm is defined as follows:

where # marks the bottom of the stack and the end of the input string, and where (/?,/) is an ordered pair such that P is the right part of production number i.

If A is the top symbol on the stack and a is the current input symbol, then M is defined as follows:

The parsing function of our current grammar is given in Table 6-5.

The parsing function M is used to alter configurations as we did in the simple LL(1) algorithm of Sec. 6-2.1. Given the general form of a parse configuration (z, Aa, P), where z is the unused input string, Aa is the stack, with A being the stack top, P is the output tape contents, we use M(A, u), where u = FIRST(z), to determine the next configuration according to the " yields" relation (f-) given by

The initial configuration is![]() , where z is the entire input string and S is the goal symbol.

, where z is the entire input string and S is the goal symbol.

As an example, consider the parse of the string adbbebe# according to the sample LL(1) grammar given earlier. The sequence of configurations in the parse of this string is as follows:

Table 6-5 Parsing function (M) for an LL(1) grammar

|

Stack symbol |

|

|

Current input symbol |

|

|

|

a |

b |

c |

d |

e # |

|

|

S |

(ABe, 1) |

(ABe, 1) |

(ABe, 1) |

||

|

A |

(c,4) |

(dB, 2) |

|||

|

B |

(AS. 5) |

(6,6) |

(AS,5) |

||

|

a |

pop |

||||

|

b |

pop |

||||

|

c |

pop |

||||

|

d |

pop |

||||

|

e |

pop |

||||

|

# |

accept |

||||

Blank entries are all error entries.

terminate with a successful parse.

We have defined the FIRST relation, and it is clear that this relation is important in computing the parsing function associated with a particular grammar. Since the mechanical evaluation of FIRST may be cumbersome, we redefine it in terms of another relation over a finite set in the following manner. Let F be a relation over the vocabulary such that

UFX if there exists a production![]()

The transitive closure (see Sec. 2-1.2) of this relation holds between U and X(UF+X) iff there exists some sequence of rules (at least one) such that

It is clear that![]() This relation is easily computed by using Warshall’s algorithm (see Sec. 2-1.2).

This relation is easily computed by using Warshall’s algorithm (see Sec. 2-1.2).

Our aim is to compute the FIRST(a) set for![]() . Now FIRST(a) = FIRST^), since we have assumed no e-rules except the case where S’ -» e and S’ does not occur in the right part of any rule in the grammar. The FIRST sets for terminals are trivial to form. Therefore, in order to compute the FIRST sets, we need only compute those associated with nonterminals. These FIRST sets are given by

. Now FIRST(a) = FIRST^), since we have assumed no e-rules except the case where S’ -» e and S’ does not occur in the right part of any rule in the grammar. The FIRST sets for terminals are trivial to form. Therefore, in order to compute the FIRST sets, we need only compute those associated with nonterminals. These FIRST sets are given by

For each

The F, F+, and FIRST relations for the sample grammar are given in Table 6-6.

In this subsection we have given a definition for LL(1) grammars without e-rules. Kurki-Suonio (1969) has shown that a grammar belonging to this class of grammars generates an s-language. Alternatively, an LL(1) grammar without e-rules can always be replaced by an equivalent simple LL(1) grammar. The absence of e-rules results in a rather simple parsing algorithm for this class of F entries are marked by F. entries are marked by F or F*.

Table 6-6 F and F + matrices, and FIRST sets for the sample grammar

|

|

S’ S A B a |

b c |

d e |

|

S’ |

|||

|

s |

|||

|

A |

|||

|

B |

|

|

|

|

b |

|||

|

c d e |

Contributors to a FIRST set are circled. They are the F+ entries in VN x VT and diagonal elements of grammars. A disadvantage of not allowing e-rules is that the class of grammars introduced in this section is a proper subset of the class of grammars to be introduced in the following subsection.

![tmp9-154_thumb[2][2] tmp9-154_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2011/08/tmp9154_thumb22_thumb.png)

![tmp9-156_thumb[2][2] tmp9-156_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2011/08/tmp9156_thumb22_thumb.png)

![tmp9-167_thumb[2][2] tmp9-167_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2011/08/tmp9167_thumb22_thumb.png)

![tmp9-192_thumb[2][2] tmp9-192_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2011/08/tmp9192_thumb22_thumb.png)

![tmp9-193_thumb[2][2] tmp9-193_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2011/08/tmp9193_thumb22_thumb.png)

![tmp9-209_thumb[2][2] tmp9-209_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2011/08/tmp9209_thumb22_thumb.png)

![tmp9-210_thumb[2][2] tmp9-210_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2011/08/tmp9210_thumb22_thumb.png)

![tmp9-243_thumb[2][2] tmp9-243_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2011/08/tmp9243_thumb22_thumb.png)

![tmp9-249_thumb[2][2] tmp9-249_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2011/08/tmp9249_thumb22_thumb.png)

![tmp9-251_thumb[2][2] tmp9-251_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2011/08/tmp9251_thumb22_thumb.png)

![tmp9-252_thumb[2][2] tmp9-252_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2011/08/tmp9252_thumb22_thumb.png)

![tmp9-255_thumb[2][2] tmp9-255_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2011/08/tmp9255_thumb22_thumb.png)