Because of the nature of the programming process, a programming language translator is more often confronted with syntactically erroneous programs than with correct ones. Thus the translator must have some strategy for dealing with source programs which are invalid with respect to the rules of the programming language. The response to errors can lie anywhere in the continuum between total collapse of the translator and complete correction of the erroneous program.

This topic presents a classification of responses to errors with emphasis on what is desirable, what is undesirable, and what is possible with current techniques. Section 5-1 presents an overview of compile-time error handling. Section 5-2 discusses the problems of syntactic error detection in a compiler. Section 5-3 proposes guidelines for the reporting of compiler-detected errors. Sections 5-4 and 5-5 survey error recovery and error repair, respectively.

Sections in Chaps. 6 and 7 present the details of error-handling techniques which are applicable to particular methods of syntax analysis.

OVERVIEW OF ERROR HANDLING

This section of the topic classifies the levels of error responses which could conceivably be found in a compiler. We indicate which of these responses are desirable, which are practical, and why. The classification, which is based on Horning (1976), is summarized in Table 5-1.

Table 5-1 Classification of error responses

I. Unacceptable responses

1. Incorrect responses (error not reported)

- a. Compiler crashes

- b. Compiler loops indefinitely

- c. Compiler continues, producing incorrect object program

2. Correct (but nearly useless)

- a. Compiler reports first error and then halts

II. Acceptable responses

1. Possible responses

- a. Compiler reports error and recovers, continuing to find later errors if they exist

- b. Compiler reports the error and repairs it, continuing the translation and producing a valid object program

2. Impossible with current techniques

- a. Compiler corrects error and produces an object program which is the translation of what the programmer intended to write

The lowest level of response to errors is no planned response at all. This type of response might occur in a compiler produced by designers and programmers who never considered the possibility of invalid programs being submitted to the compiler. The compiler might go totally out of control, crashing or going into an unbounded loop. Or it might ignore the error and generate an invalid object program which the user might reasonably believe to be correct. The latter is especially dangerous, because the incorrect translation will show up only when the object program is actually run, perhaps obliterating a portion of a vital data file. Because such a compiler is useless and possibly dangerous, no self-respecting compiler writer allows the release of a compiler which responds to errors in this way. Such behavior can be prevented by careful and consistent design. The same discipline should be applied to compiler writing as to every other software project. Ensuring a correct, bounded, and stable reaction to all possible inputs is a key responsibility of the software engineer.

The next level of error handling is found in compilers constructed by programmers who consider errors in programs to be a rare occurrence. These compilers are capable of detecting and reporting errors—but only at the rate of one per compilation. When an error is detected in the source program, such a compiler reports the error and then halts. The authors of such a compiler may feel morally justified in that the compiler responds correctly to errors. However, the user of a compiler requires a more useful response to errors. The ability to detect one error per compilation is a step upward, but only a small one. The repeated compilations necessary to catch the compile-time detectable errors are a waste of computer time and of programmer time. This time would be better spent in thorough testing of the program to detect its run-time deficiencies.

As indicated in Table 5-1, we classify the aforementioned levels of error handling as unacceptable responses. Our criterion in making this judgment is the safety and convenience of the user, who is, after all, paying for the use of the compiler. We now describe three levels of acceptable error handling, only two of which are currently attainable.

The least ambitious acceptable approach to error handling is error recovery. The recovering compiler adjusts its internal data structures and its input stream so that it may continue parsing and checking the program as if nothing has gone wrong. A parser normally checks for the total syntactic validity of a program. The recovering parser localizes the effects of syntactic errors so that it may check the local syntactic validity of other parts of the program. The goal of error recovery is to allow the compiler to continue checking the input for further compile-time detectable errors and to report and recover from these errors as well.

Ideally, a compiler would recover so thoroughly that it would generate exactly one fully descriptive message reporting an error and would not generate further messages due to that error. Such recovery is not a trivial task. Poor error-recovery methods, including the nonmethod of simply ignoring the error, lead to an avalanche of messages produced by one programmer error. Such an avalanche can and must be avoided, since the user begins to ignore messages if they are profuse and inaccurate. The avalanche is avoided by taking care to adjust the state of the parser so that it is ready to accept the remainder of the program if it is valid.

Far more difficult to avoid is the occurrence of multiple messages later in the compilation due to information lost in the original error. For example, if a declaration is erroneous and the compiler fails to locate the attributes of a variable X, then all later occurrences of X may be flagged as erroneous because the attributes of X are incorrect. The adjustm’ent of the parser state just mentioned must include the adjustment of the contents of the various tables maintained by the compiler during compilation. It can become excessively difficult and time-consuming to derive lost information from other parts of the program and fix up these tables. As long as the reason for the extra messages is clear to the user, and as long as these messages are few in number, it is probably not worthwhile to attempt to eliminate all of them.

The next acceptable level of error handling is error repair. Error repair is £ form of error recovery which modifies the source or internal form of the erroneous program to make it syntactically valid. Other parts of the compiler may assume syntactically Valid inputs, and translation may be performed. This requirement on the error recovery makes the compiler larger and more complex but has the advantage that the resulting object program can be executed to discover errors in programming which are undetectable at compile time. The repaired program text may be provided to the user as an error diagnostic— the repair may suggest hew the error should be corrected and will certainly make subsequent messages easier to understand. In some environments these capabilities may be highly desirable, while in others they may be quite undesirable. They are most common in student compilers, notably PL/C (Conway and Wilcox, 1973) and SP/k (Barnard 1975). The ability to run programs with repaired errors is most useful on batch-processing systems where the turnaround time may be large and where every run must be as productive as possible. Since even a program with errors is executed, the user derives more information from each submission of the program. In a modern interactive environment, it may be preferable to speed up compilation by not generating an object program if a serious error is found at compile time. Users can correct their syntactic errors, recompile, and get a run of the corrected program. The whole process may take only slightly longer than the time taken by an error-repairing compiler to get a run of the repaired program. Finally, it must be observed that there are some situations in which an erroneous program must not run, i.e., when it might damage irreplaceable programs or data already on the system. One might argue that these vital resources should not be exposed even to a program which compiled successfully but is untested— expendable test versions of the necessary files and programs should be used to check out the new program.

The zenith of error-handling methods is true error correction. The term error correction is sometimes used to designate the error-handling method we have called error repair. We believe that this usage is misleading, especially to novice users, and we reserve the term error correction for a type of error repair which, through extrasensory perception or some other means, finds out what the programmer intended to write and substitutes this for the error. In fact, it may be necessary for the error-correcting compiler to know more about the programming language and the problem specifications than the programmer knows, since the programmer may be rather mediocre, and the only good definition of correctness lies in conformity to the program specifications. Clearly this points in the direction of an automatic programmer rather than a compiler. There is little reason for the existence of a high-level language if we have a program which can take problem specifications and generate a correct object language program to solve the problem. The true error-correcting compiler is a dream which will not come true, at least not in the near future.

As we have seen, error recovery and error repair are the only methods of error handling which are both acceptable and possible with present techniques. In the remainder of this topic we examine the prerequisites of good error handling, namely, effective error detection and reporting, and study the general strategies of error recovery and of error repair.

ERROR DETECTION

Before we can make any attempt to handle errors, we must be sure that we can detect them. This section discusses how and why we can detect errors, and what kinds of errors can be detected. Some attention is also given to the question of where in the input string an error can be detected can be determined about its cause at the time it is detected.

The Nature of Syntax Errors

Recall from Sec. 2-4 the distinction between the syntax and the semantics of a program. The syntax of the program corresponds to its form and determines whether or not it is a legal program according to the rules of some programming language. The semantics of the program is its meaning (the operation it performs) and is invariant during the translation process. The object program has the same semantics as the source program but does not usually have the same syntax. By definition, the compiler can only detect syntactic errors. Even the detection of such errors as the use of a variable before it is initialized may be considered syntactic. They are not errors in the meaning of the program; they are errors which make the program meaningless, simply because any syntactically invalid program is meaningless. Semantic errors manifest themselves only at run time, in terms of deviations from the program specifications. Such errors may someday be detectable by means of program verifiers, relying on the specifications of the program to verify that the program written by the programmer actually does what is required. However, this cannot be done given only the source program and a knowledge of the language in which it is written.

A compiler performs a syntactic analysis of the source program in order to discern its structure and in order to check that the program is valid in a given programming language L. Any deviation from the rules of L is termed a syntactic error. In most modern compilers, part of the syntactic analysis is performed by a relatively formalized context-free parser (Chaps. 6 and 7), and the remainder (primarily the checking of the attributes of programmer-defined objects in the program) is performed by a somewhat ad hoc mechanism, involving various compile-time tables (discussion in Chaps. 8 and 11). The two stages are necessary because most programming languages cannot be defined by a context-free grammar and because more powerful grammars are rather inconvenient as a basis for a compiler. Therefore, part of the language is expressed as a context-free grammar and the remainder is expressed as a set of context-sensitive restrictions on the context-free grammar. Because of this dichotomy, we speak of context-free errors and context-sensitive errors which are violations of the syntax specified by the context-free grammar and the context-sensitive restrictions, respectively.

Consider a programming language defined on an alphabet of terminal symbols VT. If the language L contains all strings in V*, then we can never detect a syntactic error in a program written in the alphabet VT, since if any symbol of the program is removed or replaced by any other symbol in VT the result is a valid program in L. We can detect errors only if L is a proper subset of Moreover, error detection becomes more effective as L becomes a smaller subset of V*. The attitude behind some PL/I compilers that any program which could conceivably mean something should be considered valid leads to poor error detection—errors usually do not cause error messages; instead they cause the invocation of special language features not intended by the programmer (see Sec. 3-4.2). It is important that the restrictions on members of V* which define the language L be made in a reasonable and consistent way, both for the sake of the user and so that the compiler writer may easily construct a parser which accepts precisely the language L and not some subtle variation on L.

We may usefully treat the restriction of the language from V* to L as the introduction of redundancy into programs in L. The restriction of the set of valid strings implies an unnecessary increase in the average length of a program. This increase is due to the requirement that the programmer explicitly state information which can be derived from the rest of the program. Clearly the explicit restatement of this information is redundant, but it does provide a check against errors in the program. If two redundant pieces of information are inconsistent, a syntactic error has been found. Thus error detection is most effective in languages in which the programmer is required to provide the same information in two or more ways.

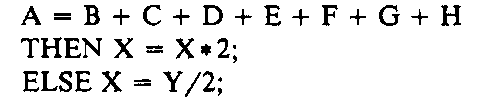

The clearest example of the accuracy of the preceding comments lies in the type of checking performed in languages with so-called strong-type structures. The requirement to declare the attributes of all variables is often unnecessary, but the requirement is made to ease compilation and to increase the effectiveness of error detection. Given an assignment statement like

it is possible for the compiler to determine that i should be a variable of integer type. Thus the declaration

would be redundant. However, with the redundant declaration the compiler can determine that the assignment

is an error, since the assignment indicates that i is a string variable rather than an integer variable. Possibly the programmer made a typing error or meant to use another variable name instead of i. In either case the detection of the error by the compiler frees the programmer from a possibly lengthy attempt to find this error once the program is being tested. The introduction of typeless variables may have advantages in very small interactive programming languages intended for writing short, simple programs. Such departures from ALGOL-like strong typing are liabilities in languages designed for the implementation of software or large applications programs (see Sec. 3-5.3). The same comments apply to most other attempts to reduce redundancy in the hope of lightening the programmer’s burden.

How Errors Are Detected

As mentioned in Sec. 5-2.1, the grammars which define the syntax of programming languages are usually not context-free grammars. They are often specified in two parts: a rigorous context-free grammar defining a superset of the language, and a set of context-sensitive restrictions on the context-free syntax. As a consequence of this, errors are detected in two ways. Errors in the context-free syntax of the source program are usually easy to detect because of the precise specification of the context-free syntax. Errors may also be detected by checks on the context-sensitive syntax of the source program. Errors of this class, including errors in the types of variables and expressions, are often harder to detect effectively, because of the vagueness and imprecision of the prose description which defines the context-sensitive syntax.

Context-free errors are most effectively detected by a syntax-directed parsing algorithm. This type of parser consists of a general table-driven algorithm and a set of tables which are generated from the context-free grammar of the programming language. Since the parsing algorithm and the table-constructing algorithm are both precisely specified, it can be proven that the parser accepts precisely the language specified by a particular grammar. A context-free error in the source program is detected when the parsing algorithm finds an error entry in the tables. When this happens, the parser calls some recovery or repair routine to handle the error.

It is unfortunate, in terms of compiler reliability, that some of the heavily used compilers in existence today use ad hoc methods of syntactic analysis. (By ad hoc we mean any method which is not formalized.) Ad hoc methods are popular because they allow an efficient, direct, and conceptually simple interface between the syntactic analysis and the code generation. The algorithms which generate code for a particular construct may be incorporated into the syntactic analysis for that construct. The ad hoc approach to syntactic analysis consists of writing a special-case program which accepts only strings of the language being analyzed and at the same time generates either object code or some intermediate form which is processed by another pass of the compiler. This statement is simply a loose definition of a syntactic analyzer.

Formalized methods can be proven correct; the correctness of an ad hoc parser depends entirely on the care taken by its designers and programmers to ensure its correctness. The designers of an ad hoc parser must have an extraordinary understanding of the details of the language being implemented and must be extremely thorough in cross-checking their code with the language specifications.

The ad hoc compiler typically consists of a large number of checks of the next input symbol to see that it is legal in the context of the symbols already seen. Thus an error is detected when one of these tests fails unexpectedly. If the compiler has been properly constructed, this failure causes some-error-handling mechanism to be invoked, perhaps by means of a procedure call.

The aforementioned shortcomings of ad hoc syntactic-analysis methods should be taken to heart by all prospective compiler writers. No matter how formal their context-free syntactic-analysis methods may be, almost all compilers use ad hoc methods for checking context-sensitive syntax. Such checking includes making sure that variables, constants, labels, and procedure names are defined and have the correct attributes for the context in which they occur. In designing programming languages we attempt to keep the syntax of operations similar for all data types. Because of this, errors in attributes of operands usually have more localized effects than do context-free errors, which often invalidate the structure of a large portion of the source program. Thus it is often easier to recover from context-sensitive errors than from context-free errors.

Where Errors Are Detected

We now briefly turn our attention to the question of where in the input string we can detect an error, and what we can determine about the cause of an error at the time it is detected. Peterson (1972) presents the following incorrect PL/I statement as a demonstration of the fact that the detection of an error may not occur until the parser has proceeded an arbitrary distance beyond the point where the error was actually made:

In the example, it is apparent to the eye of a PL/I programmer that the error is a missing IF token. The location of the THEN is the earliest possible point at which the error can be detected in a left-to-right parse. When one’s eye reaches the THEN, it is capable of quickly backtracking to discover the actual point of the error.

A parser can be made to backtrack and find the actual error in the preceding example, but in general this process is too time-consuming to be practical. Lyon (1974) gives an algorithm which repairs an invalid program by transforming it into the valid program which is closest to it, in the sense that a minimum number of symbol insertions and deletions is required to do the transformation. The algorithm takes a number of steps proportional to n3, where n is the number of symbols in the source program. The algorithm is too slow to be suitable for programming-language translation. Barnard (1976) points out that this minimum-distance error repair may be preferable to less time-consuming methods but certainly does not ensure a correction of the syntactic error.

Although the THEN token in the preceding example is the earliest point at which a syntactic error can be detected, it is certainly not the latest. Some formal parsing methods, including the precedence parsers of Sec. 7-3, may not detect the syntactic error until several more symbols have been processed. The LL (Sec. 6-2) and LR (Sec. 7-4) parsing methods detect the error when the THEN token is processed. These parsing techniques have the so-called valid prefix property, which means that if the parser does not detect an error in the first portion X of a program XY, then there must exist some string of symbols W such that XW is a valid program.

It must be noted that the compiler is not usually able to determine the cause of an error, even if it is detected immediately. The error may be due to a typing mistake, a programmer oversight, or a serious misunderstanding of the programming language. The sooner the error is detected the more likely it is that the compiler can correctly guess the cause and take an appropriate action. This action must always include a precise and complete description of the error. Section 5-3 looks in greater detail at methods and standards for error reporting.